By Zhaofeng Zhou (Muluo)

In the previous article, we presented a basic overview of Lucene. In this article, we will delve deeper into IndexWriter, one of Lucene's core classes, to explore the whole data writing and indexing process in Lucene.

    // initialization
    Directory index = new NIOFSDirectory(Paths.get("/index"));
    IndexWriterConfig config = new IndexWriterConfig();
    IndexWriter writer = new IndexWriter(index, config);

    // create a document
    Document doc = new Document();
    doc.add(new TextField("title", "Lucene - IndexWriter", Field.Store.YES));
    doc.add(new StringField("author", "aliyun", Field.Store.YES));

    // index a document
    writer.addDocument(doc);
    writer.commit();

Let us first look at how to use IndexWriter to write data in Lucene. The code above is a simple sample call. The whole process involves three main steps: initializing the IndexWriter, creating a document, and indexing the document followed by a commit.

This example shows how concise writing data in Lucene is. IndexWriter sits at the core, and the whole procedure is abstracted cleanly. A well-designed library lets ordinary users learn and use it at very little cost, while giving advanced users configurable performance parameters and customizable functionality.

IndexWriterConfig provides some core parameters for advanced users to optimize performance and customize functionality. We will look at a few examples:

IndexWriter provides several simple operation interfaces. This chapter gives a simple explanation of their features and uses while the next chapter gives a detailed breakdown of their internals. The core APIs provided by IndexWriter are as follows:

The last few chapters presented the basic processes, configuration, and core interfaces of IndexWriter, which are simple and easy to understand. In this chapter we take a closer look at IndexWriter internals to explore its core implementation.

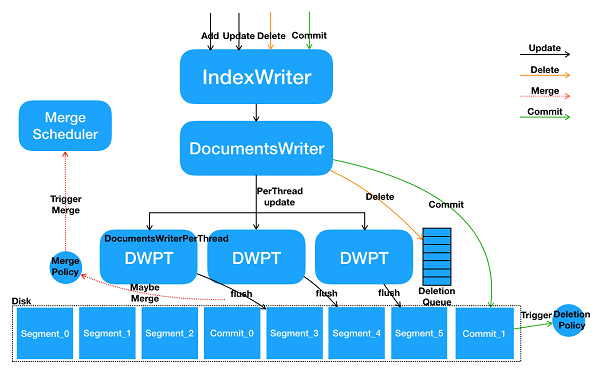

The above chart shows IndexWriter's internal core processes. Next we explain IndexWriter's internal data path in terms of its main operations, add/update/delete/commit.

The core interfaces provided by IndexWriter are thread-safe, and IndexWriter applies some internal concurrency tuning to optimize multi-threaded writes. It gives every writing thread an independent space to write into, and each space is managed by a DocumentsWriterPerThread. The whole multi-threaded data-processing flow is:

After introducing DocumentsWriterPerThread (DWPT), Lucene only needs to lock the first and third steps when processing data internally. The second step does not have to be locked as each thread processes data in its own independent space. Generally speaking, the first and third steps are lightweight while the second step needs the most computing power and memory. Doing it this way greatly reduces locking time, improving concurrency efficiency. Every DWPT includes its own in-memory buffer, which is eventually flushed into a different, independent segment file.
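The locking pattern described above can be sketched in plain Java. This is a simplified model, not Lucene's implementation: `PerThreadWriterPool`, `Writer`, and the use of `toLowerCase()` as a stand-in for analysis and indexing are all hypothetical. It only shows that checking a writer out and handing it back are short locked steps, while the expensive work runs unlocked on a private buffer.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Simplified model of the per-thread write path; every name here is hypothetical.
class PerThreadWriterPool {
    // Stand-in for a DWPT: a private buffer touched only by its current owner.
    static final class Writer {
        final List<String> buffer = new ArrayList<>();
    }

    private final Deque<Writer> free = new ArrayDeque<>();
    private final List<Writer> all = new ArrayList<>();

    // Step 1 (locked, cheap): check out a free writer or create a new one.
    private synchronized Writer acquire() {
        Writer w = free.poll();
        if (w == null) {
            w = new Writer();
            all.add(w);
        }
        return w;
    }

    // Step 3 (locked, cheap): hand the writer back to the pool.
    private synchronized void release(Writer w) {
        free.push(w);
    }

    // Step 2 (unlocked, expensive): process the document in the private buffer.
    void addDocument(String doc) {
        Writer w = acquire();
        try {
            w.buffer.add(doc.toLowerCase()); // placeholder for analysis and indexing
        } finally {
            release(w);
        }
    }

    // Total buffered documents; call only when no writes are in flight.
    synchronized int bufferedDocs() {
        int n = 0;
        for (Writer w : all) {
            n += w.buffer.size();
        }
        return n;
    }

    synchronized int writerCount() {
        return all.size();
    }
}
```

Calling `addDocument` from several threads at once creates at most one `Writer` per concurrently active thread, and in this model each buffer corresponds to what would later be flushed as its own segment.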

This solution greatly improves the performance of multi-thread, concurrent writes. When new documents are added, there is no conflict between any of the data writes, and this scenario is particularly suited to this type of space-separated writing. However, when documents are deleted, a single delete operation can delete data in different thread spaces. In this case, Lucene adopts a special method of interaction to reduce lock overhead. This is analyzed in detail when discussing delete.

In the search scenario, the full-build stage of an index consists almost entirely of new document writes, while the subsequent incremental indexing stage (especially when the data source is a database) involves a large number of update and delete operations. In principle, one best practice is to route documents that share the same unique primary key term to the same processing thread, so that their updates happen within a single thread space and avoid cross-thread interaction.
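A minimal sketch of that routing idea, assuming documents arrive with a string primary key. `PrimaryKeyRouter` and its methods are hypothetical names, and the single-thread executors stand in for whatever worker threads call IndexWriter. Hashing the key picks a lane, so every write for one key is handled by the same thread, in submission order.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical helper that pins each primary key to one worker thread.
class PrimaryKeyRouter implements AutoCloseable {
    private final ExecutorService[] lanes;

    PrimaryKeyRouter(int laneCount) {
        lanes = new ExecutorService[laneCount];
        for (int i = 0; i < laneCount; i++) {
            lanes[i] = Executors.newSingleThreadExecutor();
        }
    }

    // floorMod keeps the lane index non-negative even for negative hash codes.
    int laneFor(String primaryKey) {
        return Math.floorMod(primaryKey.hashCode(), lanes.length);
    }

    // The task would typically call addDocument or updateDocument on a shared IndexWriter.
    void submit(String primaryKey, Runnable indexingTask) {
        lanes[laneFor(primaryKey)].execute(indexingTask);
    }

    @Override
    public void close() {
        for (ExecutorService lane : lanes) {
            lane.shutdown();
        }
    }
}
```

A plain hash gives an even spread only when keys are well distributed; a few very hot keys would still concentrate work on one lane.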

The add interface is used to add a new document and the update interface is used to update a document. However, Lucene updates differently from a database. A database modifies the matching record after a query, while Lucene deletes the matching documents and adds the document again. Lucene does not modify an indexed document in place; the process is delete by term, then add document.

The add and update interfaces provided by IndexWriter are all mapped to the update interface of DocumentsWriter. See the following interface declarations:

    long updateDocument(final Iterable<? extends IndexableField> doc, final Analyzer analyzer,
        final Term delTerm) throws IOException, AbortingException

The internal process of this function is:

The delete operation is discussed in detail in the next section. The add operation writes the document directly to the in-memory buffer of the DWPT.
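The mapping of add and update onto a single entry point can be illustrated with a small plain-Java model. It is a sketch under assumptions, not Lucene code: `UpdatePathModel` and `BufferedDoc` are hypothetical, documents are reduced to a key term plus a body, and the delete is applied eagerly here, whereas Lucene records it and applies it later.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model: add is an update without a delete term.
class UpdatePathModel {
    static final class BufferedDoc {
        final String keyTerm;
        final String body;
        boolean deleted;

        BufferedDoc(String keyTerm, String body) {
            this.keyTerm = keyTerm;
            this.body = body;
        }
    }

    private final List<BufferedDoc> buffer = new ArrayList<>();

    // add: no delete term, so the document is simply appended to the buffer.
    void addDocument(String keyTerm, String body) {
        updateDocument(keyTerm, body, null);
    }

    // update: delete by term first, then add the new version.
    void updateDocument(String keyTerm, String body, String delTerm) {
        if (delTerm != null) {
            for (BufferedDoc d : buffer) {
                if (d.keyTerm.equals(delTerm)) {
                    d.deleted = true; // the old version stays in the buffer, only flagged
                }
            }
        }
        buffer.add(new BufferedDoc(keyTerm, body));
    }

    List<String> liveBodies() {
        List<String> live = new ArrayList<>();
        for (BufferedDoc d : buffer) {
            if (!d.deleted) {
                live.add(d.body);
            }
        }
        return live;
    }

    int bufferedCount() {
        return buffer.size();
    }
}
```

After an update, the buffer holds both versions of the document, but only the newer one counts as live.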

Compared with add and update, delete follows a completely different data path. Update and delete both remove data internally, but they do so through different paths. A delete has no direct effect on the documents in the in-memory buffer, so it has to be applied through a separate mechanism.

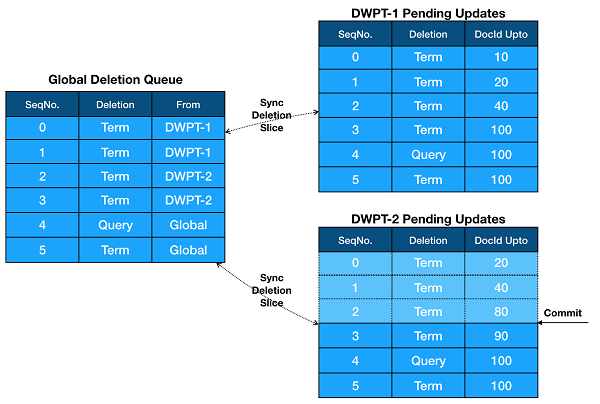

The key data structure in the delete path is the deletion queue. IndexWriter maintains one global deletion queue, known as the Global Deletion Queue, and each DWPT has its own independent deletion queue, known as Pending Updates. The DWPT Pending Updates and the Global Deletion Queue synchronize bi-directionally, because a document deletion is global in scope and is not restricted to a single DWPT.

Pending Updates records each delete in the order of occurrence and marks the range of documents affected by the delete. The range of documents is marked by recording the largest DocId so far written (DocId Upto), meaning that the delete only affects documents with a DocId smaller than or equal to the DocId in question.
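The DocId Upto rule can be modeled in a few lines of plain Java. This is only an illustration: `BufferedDeletesModel` is a hypothetical class, each document is reduced to a single key term, and doc IDs are list positions. A delete records the largest doc ID written so far, so a document added afterwards with the same term survives.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of pending deletes bounded by "DocId Upto".
class BufferedDeletesModel {
    // Doc ID = position in this list; the value is the document's key term.
    private final List<String> docs = new ArrayList<>();
    // Delete term -> largest doc ID that existed when the delete was issued.
    private final Map<String, Integer> pendingDeletes = new LinkedHashMap<>();

    int addDocument(String keyTerm) {
        docs.add(keyTerm);
        return docs.size() - 1;
    }

    void deleteByTerm(String term) {
        // Only documents with ID <= docIdUpto are affected by this delete.
        pendingDeletes.put(term, docs.size() - 1);
    }

    // Resolve the pending deletes, as would happen when the buffer is flushed.
    List<Integer> liveDocIds() {
        List<Integer> live = new ArrayList<>();
        for (int id = 0; id < docs.size(); id++) {
            Integer docIdUpto = pendingDeletes.get(docs.get(id));
            boolean deleted = docIdUpto != null && id <= docIdUpto;
            if (!deleted) {
                live.add(id);
            }
        }
        return live;
    }
}
```

Adding `a`, adding `b`, deleting `a`, and adding `a` again leaves documents 1 and 2 live: the delete covers only document 0.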

The update and delete interfaces can both be used to delete documents but there are some differences:

These differences in process also explain the differences in behavior. An update first acts inside the DWPT, together with the add, which ensures that the delete and the add are atomic within that DWPT. That is, all qualifying documents are guaranteed to be deleted before the add occurs.

When does a delete recorded in DWPT Pending Updates actually act on the data? Data in a Lucene segment is not actually deleted. Each segment has a special file called live docs, internally a bitmap that records which DocIds in the segment are live and which have been deleted. Deleting is therefore just the process of building the live docs bitmap: the data stays in place and only the document's bit in live docs is cleared. Term deletion and Query deletion build live docs at different stages. A Term deletion needs to enumerate all the docs that the Term refers to, so it happens while the inverted index is being built. A Query deletion needs to run a complete query to get the matching docIds, so it occurs after the segment has been flushed, once the index files exist and an IndexReader can execute the search.

Note that live docs only affects the inverted index. Documents flagged as deleted in live docs can no longer be retrieved through a search, but their stored fields can still be read by doc ID. The document data is eventually deleted physically, and this happens during a merge.
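The live docs idea maps naturally onto `java.util.BitSet`. The following is a rough model rather than Lucene's on-disk format: `LiveDocsModel` is a hypothetical class and each document is a single stored string. Deleting clears a bit, while the stored value stays readable by doc ID.

```java
import java.util.BitSet;

// Hypothetical model of a segment with a live docs bitmap.
class LiveDocsModel {
    private final String[] storedFields;
    private final BitSet liveDocs;

    LiveDocsModel(String... docs) {
        storedFields = docs.clone();
        liveDocs = new BitSet(docs.length);
        liveDocs.set(0, docs.length); // every document starts out live
    }

    // Deleting clears the bit only; the stored data is left untouched.
    void delete(int docId) {
        liveDocs.clear(docId);
    }

    // A search would skip documents whose bit is cleared.
    boolean isLive(int docId) {
        return liveDocs.get(docId);
    }

    int numLiveDocs() {
        return liveDocs.cardinality();
    }

    // Stored fields remain addressable by doc ID until a merge rewrites the segment.
    String storedField(int docId) {
        return storedFields[docId];
    }
}
```

The bitmap costs one bit per document, which is why flagging a delete is cheap compared with rewriting the segment.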

A flush persists the in-memory buffers of the DWPTs to files. A flush is triggered automatically after a new document is added, as decided by FlushPolicy, and can also be triggered manually through IndexWriter's flush interface.
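How an automatic flush check might run after each add can be sketched as follows. The article only says that FlushPolicy makes the decision, so the two thresholds used here (buffered bytes and buffered document count), the class `FlushTriggerModel`, and the two-bytes-per-character size estimate are all assumptions made for illustration.

```java
// Hypothetical model of a flush decision taken after every added document.
class FlushTriggerModel {
    private final long ramLimitBytes;
    private final int maxBufferedDocs;
    private long bufferedBytes;
    private int bufferedDocs;
    private int flushedSegments;

    FlushTriggerModel(long ramLimitBytes, int maxBufferedDocs) {
        this.ramLimitBytes = ramLimitBytes;
        this.maxBufferedDocs = maxBufferedDocs;
    }

    // Returns true when this add triggered an automatic flush.
    boolean addDocument(String doc) {
        bufferedBytes += doc.length() * 2L; // rough size estimate, 2 bytes per char
        bufferedDocs++;
        if (bufferedBytes >= ramLimitBytes || bufferedDocs >= maxBufferedDocs) {
            flush();
            return true;
        }
        return false;
    }

    // Manual flush: turns the current buffer into one new segment, if non-empty.
    void flush() {
        if (bufferedDocs == 0) {
            return;
        }
        flushedSegments++;
        bufferedBytes = 0;
        bufferedDocs = 0;
    }

    int flushedSegments() {
        return flushedSegments;
    }

    int bufferedDocs() {
        return bufferedDocs;
    }
}
```

Larger thresholds mean fewer, bigger segments at the cost of more memory held between flushes.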

Every DWPT is flushed into a segment file. A segment file is not searchable after a flush has completed, and is only searchable after a commit, when all flushed documents become searchable.

A commit triggers a forced data flush. Only after a commit does the data flushed before this become searchable. A commit triggers the generation of a file called a commit point. A commit point is managed by the IndexDeletionPolicy. Lucene's default policy only retains the last commit point. Naturally Lucene provides other policies to choose from.
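The relationship between flush, commit, and commit points can be modeled like this. It is a simplified sketch with hypothetical names (`CommitPointModel`, segment names such as `_0`), and it hard-codes the keep-only-the-last-commit behavior described above instead of a pluggable policy.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model: segments become searchable only through a commit point.
class CommitPointModel {
    private final List<String> flushedSegments = new ArrayList<>();
    private final List<List<String>> commitPoints = new ArrayList<>();

    // A flush creates a segment, but readers do not see it yet.
    void flush(String segmentName) {
        flushedSegments.add(segmentName);
    }

    // A commit snapshots all flushed segments into a new commit point.
    void commit() {
        commitPoints.add(new ArrayList<>(flushedSegments));
        // Keep-only-last policy: discard every older commit point.
        while (commitPoints.size() > 1) {
            commitPoints.remove(0);
        }
    }

    // Readers see the segments listed in the latest commit point.
    List<String> searchableSegments() {
        if (commitPoints.isEmpty()) {
            return new ArrayList<>();
        }
        return new ArrayList<>(commitPoints.get(commitPoints.size() - 1));
    }

    int retainedCommitPoints() {
        return commitPoints.size();
    }
}
```

A policy that retains more commit points would let a reader open an older snapshot, at the cost of keeping the older files on disk.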

A merge combines segment files. Merging improves query efficiency and enables some deleted documents to be reclaimed. Each time a flush produces a segment file, MergePolicy is consulted to decide whether to trigger a merge automatically, and a merge can also be forced through IndexWriter's force merge interface.
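Why a merge reclaims deleted documents can be shown with a small model. It assumes segments shaped like a document list plus a live docs bitmap; `SegmentMergeModel` and `Segment` are hypothetical names, and real merges also rewrite every index structure, which is omitted here.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

// Hypothetical model: merging copies only live documents into a new segment.
class SegmentMergeModel {
    static final class Segment {
        final List<String> docs;
        final BitSet liveDocs;

        Segment(List<String> docs) {
            this.docs = new ArrayList<>(docs);
            this.liveDocs = new BitSet(docs.size());
            this.liveDocs.set(0, docs.size()); // all documents start out live
        }
    }

    static Segment merge(List<Segment> segments) {
        List<String> survivors = new ArrayList<>();
        for (Segment s : segments) {
            // Walk the set bits only, so deleted documents are never copied.
            for (int id = s.liveDocs.nextSetBit(0); id >= 0; id = s.liveDocs.nextSetBit(id + 1)) {
                survivors.add(s.docs.get(id));
            }
        }
        // The merged segment renumbers doc IDs from zero with no deletions.
        return new Segment(survivors);
    }
}
```

Because doc IDs are renumbered in the merged segment, they should not be treated as stable identifiers across merges.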

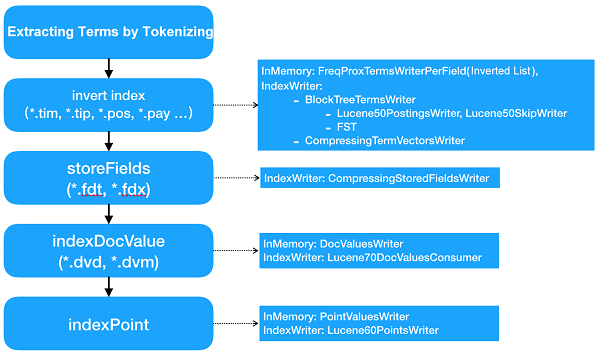

In the previous few chapters we looked at the processes of several key operations. This section looks at how DWPT, the core component of Lucene, implements indexing internally. The key concept in Lucene's internal indexing is the IndexingChain which, as its name suggests, indexes in a chain. Why a chain? The answer lies in the overall structure of Lucene's indexing system. Lucene provides different types of indexes, such as inverted indexes, forward indexes (column storage), StoreField, and DocValues. Each type of index has its own indexing algorithm, data structure, and file storage. Some work at the column level, some at the file level, and some at the document level. So after a document is written, it is processed by many different types of index, some of which share memory buffers while others are completely independent. In theory, this framework allows Lucene to be extended with other types of index, something advanced users can try.

Within IndexWriter, the indexing chain processes index types in this order: inverted index, stored fields, doc values, and point values. Some index types (mainly stored fields and term vectors) write directly to a file once a document has been processed, whereas others write the document contents into a memory buffer that is written to a file at the next flush. The index types that can write directly to files are usually document-level ones, which can be built incrementally one document at a time. Index types that cannot, such as the inverted index, have to wait until all documents in a segment have been written and then do a complete sort of the Terms before the index can be created, so they need a memory buffer to cache all the documents.
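The contrast between write-through and buffered index types can be sketched in plain Java. This is a toy model with hypothetical names (`IndexingChainModel`, `storedFieldsFile`): a list stands in for the stored fields file, whitespace splitting stands in for analysis, and only two of the index types are modeled.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of two links in an indexing chain.
class IndexingChainModel {
    // Document-level index: appended to its "file" as each document is processed.
    final List<String> storedFieldsFile = new ArrayList<>();
    // Inverted index: held in memory until flush because terms must be sorted.
    private final Map<String, List<Integer>> postingsBuffer = new HashMap<>();
    private int nextDocId;

    void processDocument(String text) {
        int docId = nextDocId++;
        // Link 1: buffer the postings (term -> doc IDs) in memory.
        for (String term : text.toLowerCase().split("\\s+")) {
            if (term.isEmpty()) {
                continue;
            }
            List<Integer> postings = postingsBuffer.computeIfAbsent(term, t -> new ArrayList<>());
            if (postings.isEmpty() || postings.get(postings.size() - 1) != docId) {
                postings.add(docId); // record each doc once per term
            }
        }
        // Link 2: stored fields are written through immediately.
        storedFieldsFile.add(text);
    }

    // Flush: sort all terms of the segment, then emit the term dictionary.
    TreeMap<String, List<Integer>> flush() {
        TreeMap<String, List<Integer>> sortedTerms = new TreeMap<>(postingsBuffer);
        postingsBuffer.clear();
        return sortedTerms;
    }
}
```

The sorted map returned by `flush` is the part that cannot be produced incrementally, since a later document may introduce a term that sorts before every term seen so far.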

Previously we mentioned that IndexWriterConfig supports the configuration of codecs. Codecs are the encoder and decoder for each type of index. As can be seen from the above figure, Lucene 7.2.1 has these main codecs:

This chapter mainly looked at the document indexing processes of the Indexing Chain internals. The core is chained-phase indexes, and different indexes support configuration of codecs.

This article mainly looks at IndexWriter from a global perspective, explaining its configuration, interfaces, and concurrency models, as well as data paths of core operations and index chains. The next article takes a deeper look at the indexing process of different types of indexes, exploring the implementation of memory buffers, indexing algorithms and data storage formats.
