This post features a basic introduction to machine learning (ML). You don’t need any prior knowledge about ML to get the best out of this article. Before getting started, let’s address this question: "Is ML so important that I really need to read this post?"

After introducing Machine Learning and discussing the various techniques used to deliver its capabilities, let’s move on to its applications in related fields: big data, artificial intelligence (AI), and deep learning.

Before 2010, Machine Learning applications played a significant role in specific fields, such as license plate recognition, cyber-attack prevention, and handwritten character recognition. After 2010, a significant number of Machine Learning applications were coupled with big data, which in turn provided an ideal environment for them.

The magic of big data mainly revolves around how big data can make highly accurate predictions. For example, Google used big data to predict the outbreak of H1N1 in specific U.S. cities. For the 2014 World Cup, Baidu accurately predicted match results, from the elimination round to the final game.

This is amazing, but what gives big data such power? Machine Learning technology. At the heart of big data is its ability to extract value from data, and Machine Learning is a key technology that makes it possible. For Machine Learning, more data enables more accurate models. At the same time, the computing time needed by complex algorithms calls for distributed computing, in-memory computing, and other technologies. Therefore, the rise of Machine Learning is inextricably intertwined with big data.

However, big data is not the same as Machine Learning. Big data includes distributed computing, in-memory databases, multi-dimensional analysis, and other technologies.

Although Machine Learning results are amazing and, in certain situations, the best demonstration of the value of big data, Machine Learning is not the only analysis method available to big data; it is one of several.

Having said that, the combination of Machine Learning and big data has produced great value. Thanks to advances in Machine Learning technology, data can be used to make predictions. People work the same way: the more extensive your experience, the better you can predict the future. It is said that people with "a wealth of experience" are better at their jobs than "beginners." This is because people with more experience can develop more accurate rules based on that experience.

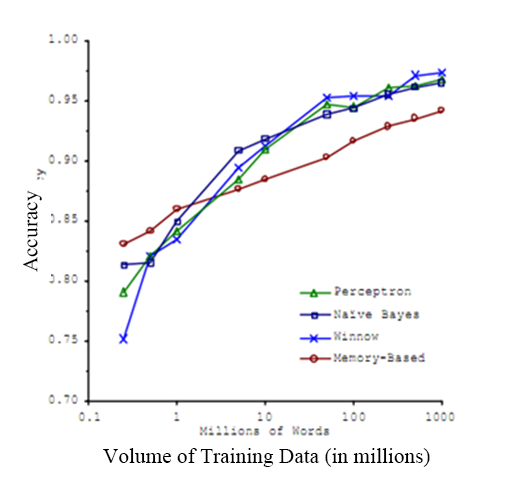

There is another theory about Machine Learning: the more data a model has, the better its prediction accuracy. The following graph depicts the relationship between Machine Learning accuracy and data.

The graph shows that once the input data volume reaches a certain level, the various algorithms reach nearly identical, high accuracy. This led to a famous saying in the Machine Learning sphere: "It's not who has the best algorithm that wins, it's who has the most data!"
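As a rough illustration of that claim, the sketch below trains two deliberately simple classifiers on synthetic data and compares their accuracy as the training set grows. Everything here is an assumption made for illustration: the data is two overlapping Gaussian classes, and the two "algorithms" (a threshold placed between class means versus class medians) are stand-ins, not the algorithms from the graph.

```python
import random
import statistics

def make_samples(n_per_class, rng):
    """Draw n_per_class points per class: class 0 ~ N(-1, 1), class 1 ~ N(+1, 1)."""
    samples = []
    for label, center in ((0, -1.0), (1, 1.0)):
        for _ in range(n_per_class):
            samples.append((rng.gauss(center, 1.0), label))
    return samples

def fit_threshold(train, summarize):
    """Place the decision threshold midway between the two class summaries."""
    class0 = [x for x, y in train if y == 0]
    class1 = [x for x, y in train if y == 1]
    return (summarize(class0) + summarize(class1)) / 2.0

def accuracy(threshold, test):
    """Fraction of test points on the correct side of the threshold."""
    correct = sum(1 for x, y in test if (x > threshold) == (y == 1))
    return correct / len(test)

def learning_curve(sizes, seed=0):
    """Return (training size per class, accuracy with means, accuracy with medians)."""
    rng = random.Random(seed)
    test = make_samples(10000, rng)  # fixed held-out set shared by all runs
    rows = []
    for n in sizes:
        train = make_samples(n, rng)
        acc_mean = accuracy(fit_threshold(train, statistics.mean), test)
        acc_median = accuracy(fit_threshold(train, statistics.median), test)
        rows.append((n, acc_mean, acc_median))
    return rows

for n, acc_mean, acc_median in learning_curve((5, 50, 500, 5000)):
    print(f"n={n:5d}  mean-based={acc_mean:.3f}  median-based={acc_median:.3f}")
```

With only a handful of training points the two methods can disagree noticeably; with thousands, both should settle close to the best accuracy this data allows, which is the effect the saying describes.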

The big data era has many advantages that promote wider application of Machine Learning. For example, with the development of IoT and mobile devices, we now have even more data, including images, text, videos, and other types of unstructured data. This gives Machine Learning models more data to learn from. At the same time, distributed computing technologies from the big data world, such as MapReduce, speed up Machine Learning and make it more convenient to use. The advantages of big data allow the strengths of Machine Learning to be leveraged to their full potential.
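To make the MapReduce idea concrete, here is a minimal in-process sketch: each data partition is summarized independently (the map step), and the partial results are merged (the reduce step). The function names are hypothetical, and this imitates the pattern in plain Python rather than using any real cluster framework's API.

```python
from functools import reduce

def map_partition(partition):
    """Map step: summarize one partition as (sum, count)."""
    return (sum(partition), len(partition))

def combine(left, right):
    """Reduce step: merge two partial summaries into one."""
    return (left[0] + right[0], left[1] + right[1])

def distributed_mean(partitions):
    """Compute a global mean from per-partition summaries."""
    # In a real cluster, each map_partition call could run on a different machine.
    partials = map(map_partition, partitions)
    total, count = reduce(combine, partials, (0.0, 0))
    return total / count

partitions = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0]]
print(distributed_mean(partitions))  # 3.5
```

Because each partition only has to emit a small summary, the same pattern extends to quantities that Machine Learning training needs, such as sums of gradients over very large datasets.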

Recently, the development of Machine Learning has taken a new turn: deep learning.

Although the term deep learning sounds pretentious, the concept is quite simple. It refers to the development of a traditional neural network into one with many hidden layers.
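The "many hidden layers" idea can be sketched in a few lines. The code below runs a forward pass through a small fully connected network with three hidden layers. The layer sizes and the constant weights are assumptions chosen only so that the arithmetic is easy to follow; a real network would learn its weights from data.

```python
def relu(values):
    """Rectified linear activation, applied element-wise."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: weights[j][i] connects input i to unit j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(inputs, hidden_layers, output_layer):
    """Pass the inputs through every hidden layer, then a linear output layer."""
    activations = inputs
    for weights, biases in hidden_layers:
        activations = relu(dense(activations, weights, biases))
    out_weights, out_biases = output_layer
    return dense(activations, out_weights, out_biases)

def constant_layer(n_in, n_out, value=1.0):
    """Illustrative layer whose weights are all `value` and whose biases are zero."""
    return ([[value] * n_in for _ in range(n_out)], [0.0] * n_out)

# Three hidden layers of width 2 make this a (tiny) "deep" network.
hidden = [constant_layer(2, 2) for _ in range(3)]
output = constant_layer(2, 1)
print(forward([1.0, 2.0], hidden, output))  # [24.0]
```

Adding depth is just appending more entries to `hidden`; the difficulty that held neural networks back for years was not expressing such a network but training it efficiently.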

In a previous blog post, we talked about the disappearance of neural networks after the 1990s. However, Geoffrey Hinton, one of the pioneers of the backpropagation (BP) algorithm, never gave up on his research on neural networks. Once a neural network has more than two hidden layers, training becomes extremely slow, so such networks had long been less practical than support vector machines (SVM). However, in 2006, Hinton published an article in Science that demonstrated two points:

fundamental characterization of the data, which is conducive to visualization and classification.

This discovery not only addressed the computing difficulties of neural networks, but also showed the excellent learning capabilities of deep neural networks. This led to the re-emergence of neural networks as a mainstream and powerful learning technology in the Machine Learning field. At the same time, neural networks with many hidden layers began to be called "deep neural networks," and the learning and research based on deep neural networks was called "deep learning."

Owing to its importance, deep learning gained a lot of attention. The following four milestones in the development of this field are worth mentioning:

a parallel computing platform with 16,000 CPU cores, the team trained a deep neural network Machine Learning model that has had great success in speech and image recognition.

while a machine simultaneously recognized the speech and translated it into Chinese with a Chinese voice. The system, based on deep learning, performed extremely well.

In January 2013, at Baidu's annual conference, Baidu founder and CEO Robin Li made a high-profile speech announcing the establishment of a research institute that would focus on deep learning, marking the creation of the Institute of Deep Learning.

In April 2013, the MIT Technology Review placed deep learning at the top of its list of 10 breakthrough technologies for 2013.

In the previous article, "An Introduction to Machine Learning," three titans in the ML field were identified. They are not only experts in Machine Learning, but pioneers in deep learning research. These men lead the technology divisions at major Internet companies because of their technical abilities as well as the unlimited potential of their field of research.

Currently, the progress of image and speech recognition technology in the Machine Learning industry is driven by the development of deep learning.

Deep learning is a sub-field of Machine Learning, and its development has substantially raised the status of Machine Learning as a whole. It has driven the industry to turn its attention, once again, to the idea that gave birth to Machine Learning: artificial intelligence (AI).

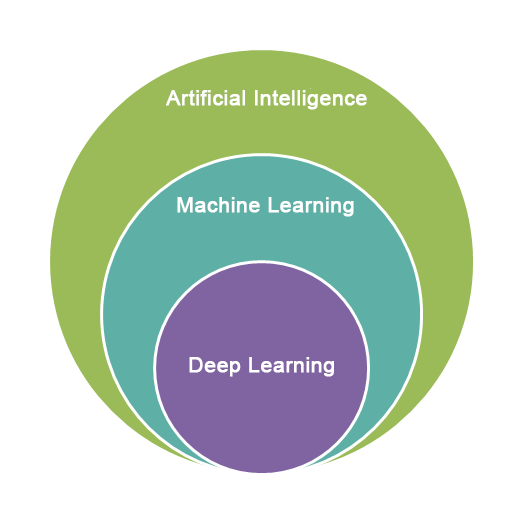

AI is the father of Machine Learning, and deep learning is the child of Machine Learning. The following figure shows the relationships between the three:

Without a doubt, AI is the most groundbreaking scientific innovation that humans can imagine. Like the name of the game Final Fantasy, AI is the ultimate scientific dream of mankind. Since the concept of AI was proposed in the 1950s, the scientific and industrial communities have explored its possibilities. During this time, various novels and movies have portrayed AI in different ways. Sometimes they feature humans inventing human-like machines, an amazing idea! However, since the 1950s, the development of AI has encountered many difficulties, with few decisive scientific breakthroughs.

Overall, the development of AI has passed through several phases. The early period was defined by logical reasoning and the middle period by expert systems. These scientific advances did take us closer to intelligent machines, but the ultimate goal is still far away. After the advent of Machine Learning, however, the AI community thought it had finally found the correct path. In some vertical fields, image and speech recognition applications based on ML have rivaled human capability. Machine Learning has, for the first time, brought us close to the dream of AI.

In fact, if you compare AI-related technology with the technology of other fields, you will discover that the centrality of Machine Learning to AI is well founded. The main thing that separates humans from objects, plants, and animals is "wisdom." But what best embodies our wisdom? Is it computing ability? Maybe not. We think of people with high mental computing capabilities as savants, but not necessarily wise. Is it our ability to respond to stimuli? Also, no. Is it memory? No. People with a photographic memory may have retentive minds, but that does not make them wise. What about logical reasoning? Although this might make someone highly intelligent, like Sherlock Holmes, it is still not

So, what kind of people do we describe as wise? Sages, like Lao Tzu or Socrates? Their wisdom lies in their perception of life as well as their accumulation of experience and deep thinking about life. But is this similar to the concept of Machine Learning? Indeed, it is. The use of experience to draw general rules to guide and predict the

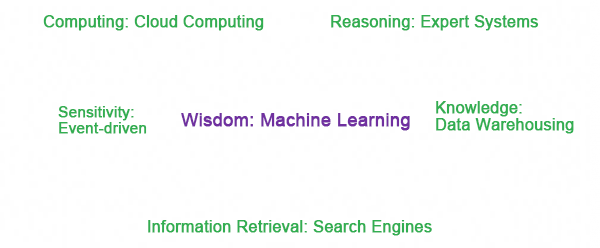

For a computer, the abilities listed can all be achieved using a variety of technologies. For computing capabilities, there is distributed computing; for responsiveness, there is event-driven architecture; for information retrieval, there are search engines; for knowledge storage, there is data warehousing; and for logical reasoning, there are expert

why Machine Learning can best characterize wisdom.

Let's think about creating a robot. The primary components would be powerful computing capabilities, massive storage, fast data retrieval, quick response, and excellent logical reasoning. Then, a wise brain is added. This would be the birth of AI in the true sense. With the rapid development of Machine Learning, AI may no longer be a

simulates the structure of the human mind and makes significant breakthroughs on the initial limitations of Machine Learning in visual and speech recognition. Therefore, it is highly likely that deep learning will prove to be a core technology in the development of true AI. Both Google Brain and Baidu Brain are built from a deep learning network with a massive number of layers. Perhaps, with the help of deep learning technology, a computer with human intelligence may become a reality in the near future.

The rapid development of AI with the assistance of deep learning technology has already caused concern among some. Tesla CEO Elon Musk, a real-world Iron Man, is one such person. Recently, while attending a seminar at MIT, Musk expressed his concerns about AI. He said that AI research was akin to "summoning the demon" and that we must be "very careful" about some areas.

Although Musk's warning may sound alarmist, his reasoning is sound. As he put it: "If its function is just something like getting rid of email spam and it determines the best way of getting rid of spam is getting rid of humans." Musk believes that government regulation is necessary to prevent such a phenomenon. If some rules are introduced to restrain AI at its birth, a scenario where AI overpowers humans can be avoided. AI would not function based only on Machine Learning, but on a combination of Machine Learning with a rule engine and other systems. If an AI system has no restrictions on what it learns, it is likely to misunderstand certain things. Therefore, additional guidance is required. Just as in human society, laws are the best practice. Rules differ from the patterns produced by Machine Learning. Patterns are guidelines derived from

and controllable AI with learning abilities can be created.
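One way to picture the combination of Machine Learning with a rule engine is a learned component whose decisions must pass a set of hand-written rules before they take effect. The sketch below is hypothetical throughout: `learned_policy` is a keyword-counting stand-in for a trained model, and the single rule is invented for illustration.

```python
def learned_policy(message):
    """Stand-in for a trained spam model: returns (action, confidence)."""
    spam_words = {"prize", "winner", "free"}
    hits = sum(1 for word in message.lower().split() if word in spam_words)
    return ("delete", 0.9) if hits >= 2 else ("keep", 0.6)

# Each rule is (description, predicate); the predicate returns True when
# the proposed action must be blocked, whatever the model thinks.
RULES = [
    (
        "never delete mail from known contacts",
        lambda action, context: action == "delete" and context.get("sender_known", False),
    ),
]

def guarded_decision(message, context):
    """Ask the model for an action, then let the rules veto it."""
    action, confidence = learned_policy(message)
    for description, blocks in RULES:
        if blocks(action, context):
            return "keep", f"overridden by rule: {description}"
    return action, f"model decision (confidence {confidence})"

print(guarded_decision("free prize winner", {"sender_known": True}))
print(guarded_decision("free prize winner", {"sender_known": False}))
```

The design choice is that rules are explicit and set from outside, while the model's patterns come from data; the rule layer bounds what the learned part is allowed to do.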

Lastly, let's look at a few other ideas related to Machine Learning. Let's go back to the story with John from the first blog in this three-part series, where we talked about methods to predict the future. In real life, few people use such an explicit method. Most people use a more direct method called intuition. So, what exactly is intuition? Intuition is composed of patterns drawn from past experiences in your subconscious. It is just as if you used a Machine Learning algorithm to create a pattern that can be reused to answer a similar question. But when do you come up with these patterns? It is possible that you develop them unconsciously, for example, when you are sleeping or walking down a street. At such times, your brain is doing imperceptible work.

To better illustrate intuition and the subconscious, let's contrast them with another type of experiential thinking. If a man is very diligent, he examines himself every day or often discusses his recent work with his colleagues. The man is using a direct training method. He consciously thinks about things and draws general patterns from

car, you drive to work every day. Each day you take the same route to work. The interesting thing is that, for the first few days, you were very nervous and paid constant attention to the road. Now, during the drive, your eyes stare ahead, but your brain does not think about it. Still, your hands automatically turn the steering wheel to adjust your direction. The more you drive, the more work is handed over to your subconscious. This is a very interesting situation. While driving, your brain records an image of the road ahead and remembers the correct actions for turning the steering wheel. Your subconscious directs the movements of your hands based on the image of the road.

Now, suppose you were to give a video recording of the road to a computer and have it record the movements of the driver that correspond to the images. After a period of learning, the computer could generate a Machine Learning pattern and automatically drive the car. That's amazing, right? In simplified form, this is the idea behind the self-driving car technology developed by companies like Google and Tesla.
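The "record the driver, then imitate" idea can be sketched with a much smaller stand-in problem. Assume, purely for illustration, that each video frame has already been reduced to one number (how far the car sits from the lane center) and that the recorded steering angles are made-up values. Real systems work from raw camera images with far richer models; this only shows the learning step.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical recordings: lane offset in meters (positive = too far right)
# paired with the steering angle the human driver applied at that moment.
lane_offsets = [-2.0, -1.0, 0.0, 1.0, 2.0]
steering_angles = [1.0, 0.5, 0.0, -0.5, -1.0]

slope, intercept = fit_line(lane_offsets, steering_angles)

def predict_steering(offset):
    """Imitate the driver: steer back toward the lane center."""
    return slope * offset + intercept

print(predict_steering(0.4))
```

After fitting, the program can propose a steering angle for an offset it never saw in the recordings, which is the sense in which the computer has "generated a pattern" from the driver's behavior.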

In addition to self-driving cars, subconscious thinking can be applied to social interactions. For example, the best way to persuade others is to give them some relevant information from which they can generalize and reach the conclusion we want. That's why, when we are presenting a viewpoint, it is much more effective to use facts or tell a story than to simply list reasons or moral principles. Throughout the ages, great advocates for all kinds of causes have adopted this approach. During the Spring and Autumn period of ancient China, ministers would speak with monarchs of different states. To persuade a monarch to take a certain course of action, they wouldn't simply tell him what to do (that was a good way to lose one's head). Rather, they told a story so that their preferred policy would suddenly dawn on the monarch as a lesson he drew from the story.

There are many examples of such great persuaders, including Mozi and Su Qin. But why are stories more effective? As a person grows, they form many patterns and subconscious attitudes through reflection. If you present a pattern that contradicts a pattern held by the other party, you will probably be rejected. However, if you tell a story with new information, they may change their mind upon reflection. This thinking process is very similar to Machine Learning. It is just like giving someone new data and asking them to retrain their mental models to incorporate this new input. If you give the other party enough data to force them to change their model, they will act in agreement with the new patterns suggested by the data. Sometimes, the other party may refuse to reflect on new information. However, once new data has been taken in, whether or not they intend to change their thinking, their mind will subconsciously incorporate it and lead them to change their opinions.

But what if a computer were to have a subconscious? For instance, if a computer gradually developed a subconscious during its operations, it might complete some tasks before being told to do so. This is a very interesting idea. Think about it!

Machine Learning is an amazing and exciting technology. You find Machine Learning applications everywhere, from Taobao's item recommendations to Tesla’s self-driving cars. At the same time, Machine Learning is the technology most likely to make the AI dream come true. Various AI applications that exist today, such as the Microsoft XiaoIce chatbot and computer vision technology, incorporate Machine Learning elements. Consider learning even more about Machine Learning, as it may help you better understand the principles behind the technology that brings so much convenience to our lives.


Raja_KT February 16, 2019 at 6:07 am

For training and inference where do we route, CPU or GPU or it depends?