By Jin Rong, VP of Research at Alibaba DAMO Academy

Artificial Intelligence (AI) has developed rapidly over recent years. From its infancy to its current prosperity, AI has given rise to many excellent practices. However, it also faces many challenges, which need to be overcome through technological innovation. Through this article, Jin Rong, VP of Research at the Alibaba DAMO Academy, will introduce the current applications and practices of AI, innovations in AI, and future avenues of exploration.

This article is divided into four parts:

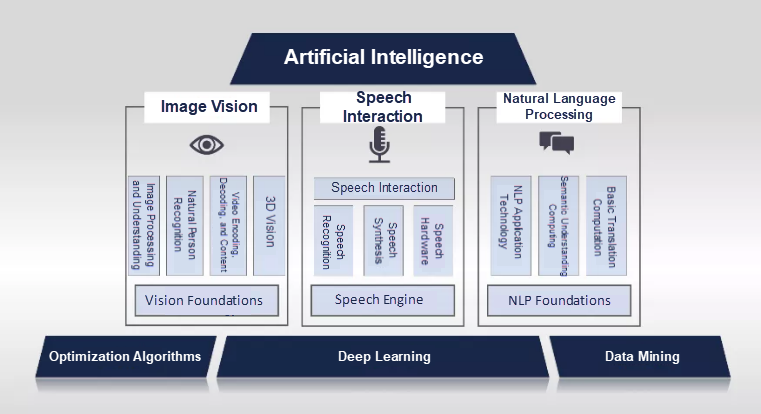

Current AI technology is based on deep learning. To perform complex learning processes, deep learning requires two elements. First, it requires a large amount of data. Deep learning relies heavily on data mining to generate a large amount of effective training data. Second, deep learning requires optimization algorithms because it must find the best model for data matching in a very complex network. Deep learning models are most commonly used in image vision, speech interaction, and natural language processing. Image vision includes image processing and understanding, natural person recognition, video encoding/decoding and content analysis, as well as three-dimensional (3D) vision. Speech interaction includes speech recognition, speech synthesis, and speech hardware technologies. Natural language processing (NLP) includes technologies such as natural language application, semantic understanding and computing, and basic translation computing. All these technologies are examples of AI technology. In short, AI consists of deep learning and machine learning.

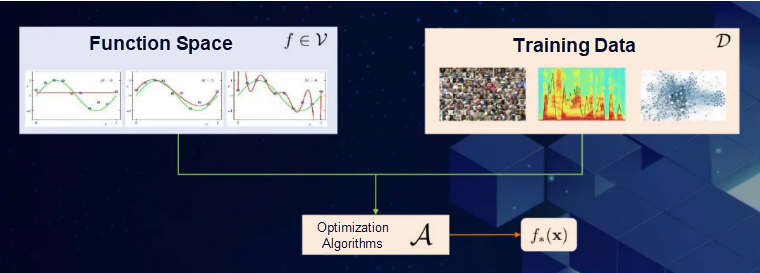

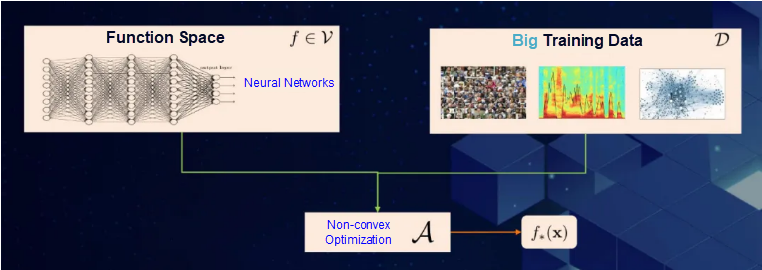

The goal of machine learning is to approximate an unknown objective function from a limited set of samples. A machine learning model has three components. The first determines the function space to be learned, the second determines the training data that will be used to fit the machine learning model, and the third identifies the optimization algorithm that allows the machine to learn the best model from the function space, that is, the model that best matches the data.
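As a minimal sketch of these three components, the example below uses a deliberately tiny setup. The function space (straight lines), the four samples, the learning rate, and the step count are all illustrative assumptions, not anything taken from a production system.

```python
# Component 2: a limited set of training samples. They come from a target
# function (y = 2x + 1) that the learner is not told about.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

# Component 1: the function space, here all lines f(x) = w * x + b.
def predict(w, b, x):
    return w * x + b

def mean_squared_error(w, b, data):
    """How badly the candidate function (w, b) matches the data."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

# Component 3: the optimization algorithm, here plain gradient descent
# on the mean squared error.
def fit(data, learning_rate=0.05, steps=2000):
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = fit(samples)
print(f"learned f(x) = {w:.3f} * x + {b:.3f}")
```

Because this loss is convex, gradient descent reliably finds the best line. With a neural network as the function space, the same loop faces a non-convex loss, so that guarantee no longer holds.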

Machine learning considers all possible functions, while deep learning only considers a special type of function, neural networks. Deep learning has higher data requirements than common machine learning models. Only with the support of big data can we truly tap the full potential of deep learning. Traditional optimization only includes convex optimization, whereas non-convex optimization is required in deep learning scenarios. Therefore, deep learning confronts major challenges in all three components of machine learning. First, function spaces composed of neural networks are ambiguous. Second, because of the complexity of big data, training is more difficult than in traditional machine learning. Finally, there are no well-formed templates for non-convex optimization in either theory or practice. Therefore, the industry has been conducting a great deal of experimental research to find best practices.

The development of AI has been driven by two key factors. First, a large amount of "living" data can be used. There are many applications that use "living" data, such as Google's AlphaGo, which defeated the Go world champion in 2016. Second, AI technology now has access to strong computing power. For example, Google's Waymo self-driving system can drive for long distances without human intervention. However, similar practices already existed around 20 years earlier. In 1995, the Backgammon program became the world champion in backgammon by playing 15,000 games with itself. In 1994, the self-driving car ALVINN traveled from the East Coast to the West Coast of the United States at a speed of 70 miles per hour. The development of AI over the past 20 years has been essentially driven by the exponential growth in data and computing power. Traditional AI technology relied on multiple GPUs to achieve better modeling results.

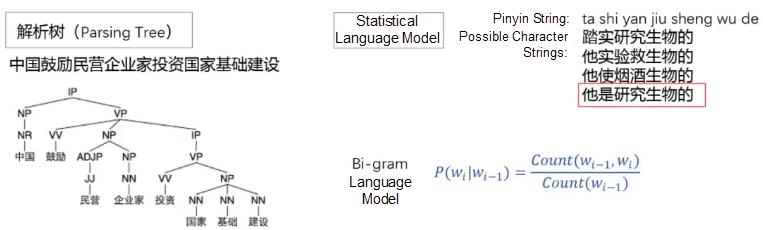

NLP also has a long history, starting back when it was called computational linguistics. Traditional computational linguistic methods used statistical language probability models to build natural language models. The following figure shows how the phrase "中国鼓励民营企业家投资国家基础建设" (China encourages private entrepreneurs to invest in national infrastructure) can be parsed into a parse tree and divided into subjects, predicates, objects, verbs, and nouns. In other words, the grammatical structure of this sentence can be expressed by the parse tree. In addition, the most common technology used in the traditional natural language field was the statistical language model. For example, the Pinyin string "ta shi yan jiu sheng wu de" in the following figure can be expressed in various Chinese character strings. A human who considers the phrase will likely conclude it means "he/she studies biology" (他是研究生物的). In fact, the human brain forms a concept chart through its massive reading experience and knows which expressions are possible. This is the process of forming a statistical language model. The most typical statistical language model is the Bi-gram model, which calculates the probabilities that different words follow a given word. However, traditional computational linguistic methods have disadvantages such as model inaccuracy and mediocre text processing performance.

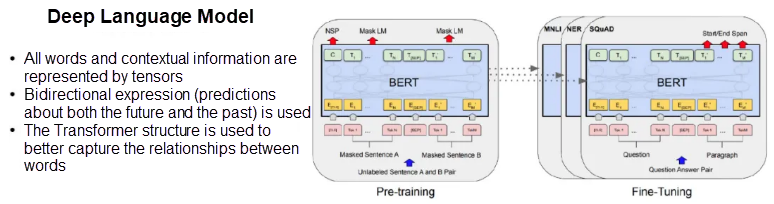
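The bigram model described above can be sketched in a few lines. The four-sentence English corpus is a made-up stand-in for real training text, and the probabilities are plain maximum-likelihood counts with no smoothing, so any unseen word pair gets probability zero.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            follow_counts[prev][nxt] += 1
    return follow_counts

def bigram_probability(follow_counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 if prev was never seen."""
    total = sum(follow_counts[prev].values())
    return follow_counts[prev][nxt] / total if total else 0.0

def sentence_probability(follow_counts, sentence):
    """Multiply the bigram probabilities along the sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= bigram_probability(follow_counts, prev, nxt)
    return prob

# Hypothetical toy corpus, for illustration only.
corpus = [
    "he studies biology",
    "he studies physics",
    "she studies biology",
    "he is a graduate student",
]
model = train_bigram(corpus)
p_biology = sentence_probability(model, "he studies biology")
p_physics = sentence_probability(model, "he studies physics")
print(p_biology, p_physics)
```

A Pinyin input method can use the same idea: among the candidate character strings for one Pinyin sequence, it prefers the one with the highest probability under the language model.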

Given the limitations of traditional methods, deep learning can be used for good effect in NLP. The most successful type of deep learning model in this field is the deep language model. It differs from traditional methods in that the context information of all words is represented by tensors. It also can use bidirectional expressions, which means it can predict both the future and the past. In addition, the deep language model uses the Transformer structure to better capture the relationships between words.

Traditional Q&A applications use frequently asked question (FAQ) pairs and knowledge-based question answering (KBQA). For example, in the following figure, the Q&A pairs are a database that contains the questions and answers. This approach is relatively conservative, and the people who compile the questions and answers must have a deep understanding of the relevant domain. In addition, it is difficult to expand the domain and cold start is slow. To solve these problems, researchers introduced machine reading comprehension technology, which can automatically find answers to questions from a document. It does this by converting questions and documents into semantic vectors through the deep language model, and then finding matched answers.

Currently, Q&A applications are widely used by major enterprises, such as AlimeBot and Xianyu. Every day, these applications help millions of buyers obtain information about products and campaigns through self-service.

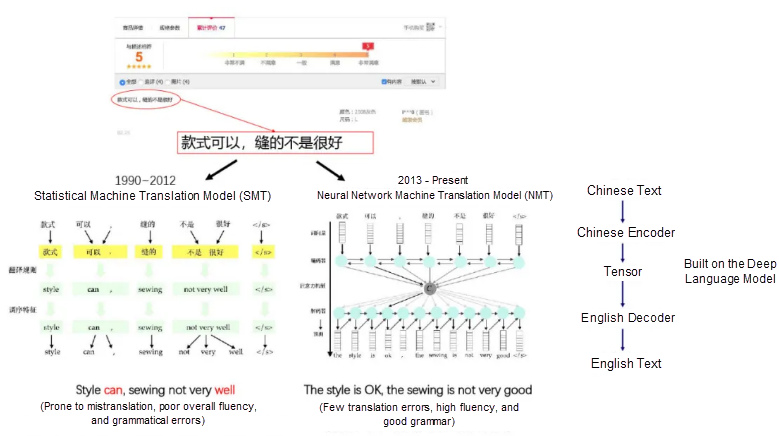
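The matching step in machine reading comprehension, where the question and the document are turned into vectors and compared, can be illustrated with a rough sketch. The `embed` function here is only a bag-of-words stand-in and is an assumption of this example. A real system would use a deep language model to produce the semantic vectors, and the sample document is invented.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in encoder: a bag-of-words count vector (NOT a deep language model)."""
    cleaned = text.lower().replace("?", "").replace(".", "")
    return Counter(cleaned.split())

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def find_answer(question, document_sentences):
    """Return the document sentence whose vector best matches the question."""
    q_vec = embed(question)
    return max(document_sentences, key=lambda s: cosine_similarity(q_vec, embed(s)))

# Hypothetical document, for illustration only.
document = [
    "Orders ship within two business days.",
    "Returns are accepted within thirty days of delivery.",
    "The campaign discount applies to all electronics.",
]
best = find_answer("When are returns accepted?", document)
print(best)
```

Swapping `embed` for a learned encoder would let the same search match paraphrases that share no words with the question, which word counts cannot do.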

Another mature application of AI technology is machine translation. The traditional translation model is called a statistical machine translation (SMT) model. The translation results on the left of the following figure show that SMT models are prone to mistranslations, with poor overall fluency and more grammatical errors. The translation results of a neural machine translation (NMT) model enabled by deep learning are better. The fluency is relatively high and the text conforms to the rules of English grammar.

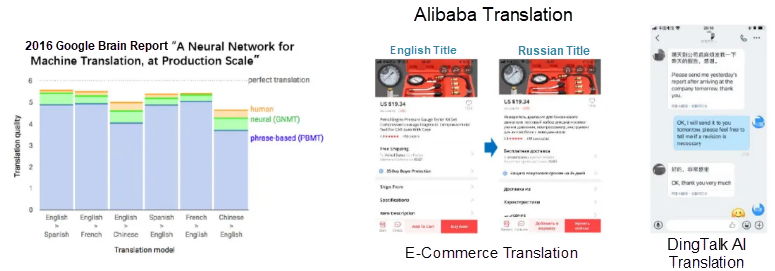

As shown in the following figure, Google Brain's evaluation report on neural networks shows that phrase-based translation models provide limited performance, whereas translation models based on neural networks have improved significantly. Machine translation is already widely used in Alibaba businesses, such as for the translation of product information in e-commerce businesses and AI translation in DingTalk. However, there is still room for DingTalk AI translation to improve in the future because DingTalk conversations are very casual and do not follow strict grammatical rules.

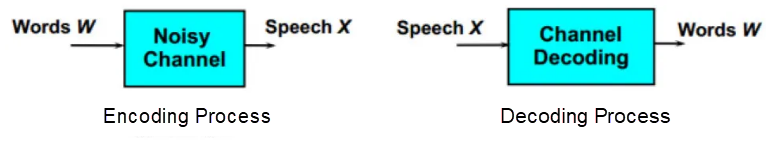

For a long time, speech synthesis technology has been viewed as an encoding technology that translates text into signals. Conversely, speech recognition is a decoding process.

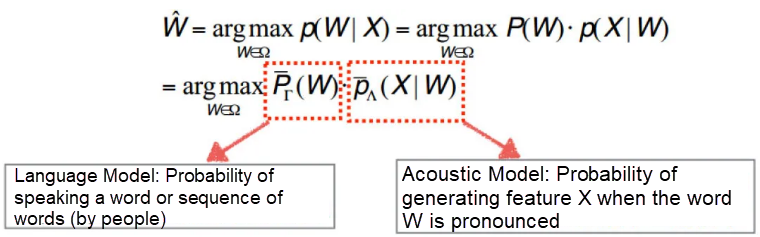

Generally, speech recognition involves two models, a language model and an acoustic model. The main function of a language model is to predict the probability of a word or word sequence. An acoustic model predicts the probability of generating feature X based on the pronunciation of the word W.
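How the two models combine can be written as one decoding rule. The article does not spell this out, so the formula below is the standard Bayesian formulation, stated here as an assumption. The recognizer looks for the word sequence $W^{*}$ that is most probable given the observed acoustic features $X$:

$$
W^{*} = \arg\max_{W} P(W \mid X) = \arg\max_{W} \frac{P(X \mid W)\,P(W)}{P(X)} = \arg\max_{W} P(X \mid W)\,P(W)
$$

Here $P(X \mid W)$ is supplied by the acoustic model and $P(W)$ by the language model. The denominator $P(X)$ does not depend on $W$, so it can be dropped from the maximization.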

The traditional hybrid speech recognition system is called GMM-HMM (Gaussian Mixture Model-Hidden Markov Model). The GMM models the distribution of acoustic features, and the HMM models the temporal sequence of speech states. Even though great efforts have been made in the field of speech recognition, machine speech recognition still cannot be compared to human speech recognition. After 2009, speech recognition systems based on deep learning began to develop. In 2017, Microsoft claimed that their speech recognition system showed significant improvements over traditional speech recognition systems and was even superior to human speech recognition.

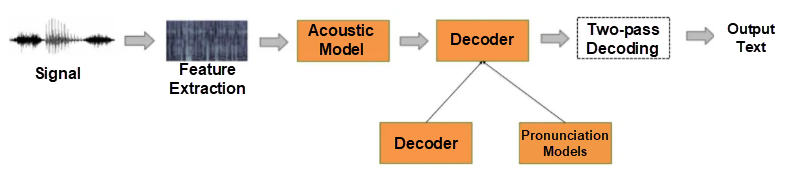

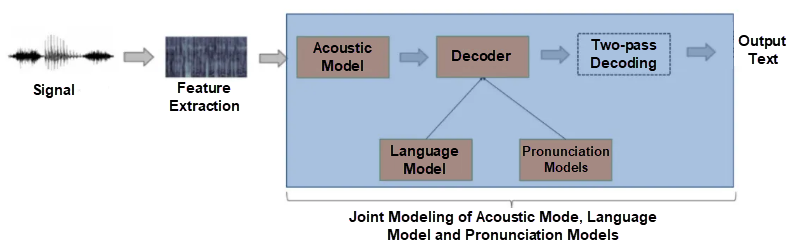

Traditional hybrid speech recognition systems contain independently optimized acoustic models, language models, and linguist-designed pronunciation models. As you can clearly see, the construction process of traditional speech recognition systems is very complicated. It requires the parallel development of multiple components, with each model independently optimized, resulting in unsatisfactory overall optimization results.

Learning from the problems of traditional speech recognition systems, end-to-end speech recognition systems combine acoustic models, decoders, language models, and pronunciation models for unified development and optimization. This allows such systems to achieve optimal performance. Experimental results show that end-to-end speech recognition systems can further reduce the error rate during recognition by over 20%. In addition, the model size is significantly reduced to only several tenths that of a traditional speech recognition model. Also, end-to-end speech recognition systems can work in the cloud.

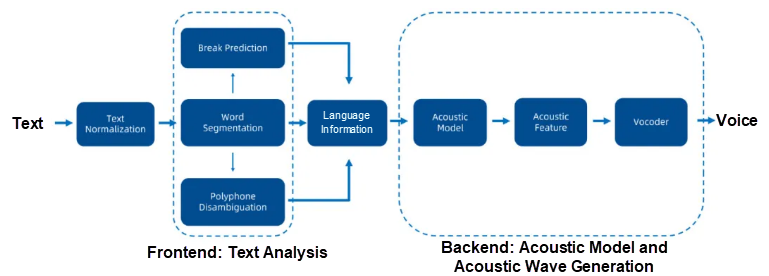

Speech synthesis consists of several components. First, the frontend text analysis conducts word splitting and break recognition to constitute language information. Then, this information is transmitted to the backend, where sound waves are generated through the acoustic model.

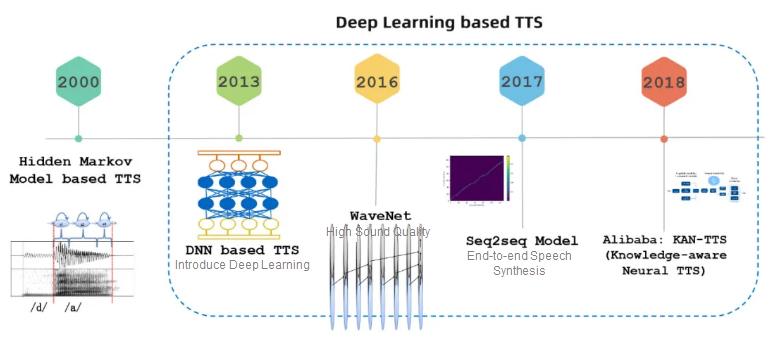

Speech synthesis technology originally started with GMMs, transitioned to HMMs in 2000, and then evolved to deep learning models in 2013. In 2016, WaveNet made a qualitative improvement in speech quality compared with previous models. End-to-end speech synthesis models emerged in 2017. In 2018, Alibaba's Knowledge-aware Neural model not only could produce speech with good acoustic quality, but also with significantly reduced model size and with improved computing efficiency, therefore enabling effective real-time speech synthesis.

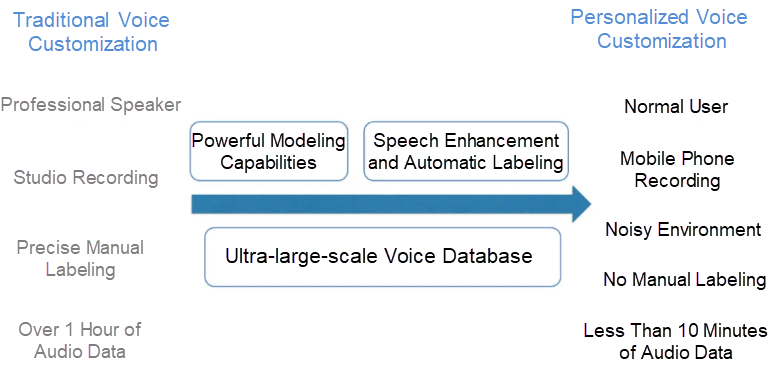

Speech synthesis has long faced a major obstacle: high customization cost. Generally, traditional speech customization requires professional speakers, a recording studio, precise manual labeling, and a large amount of audio data with a length typically over an hour. Nowadays, speech synthesis is attempting to provide personalized voice customization. With this feature, normal users can complete voice customization by recording on their mobile phones, even in a noisy environment. For example, you could change the voice of your in-car navigation system to the voice of a family member.

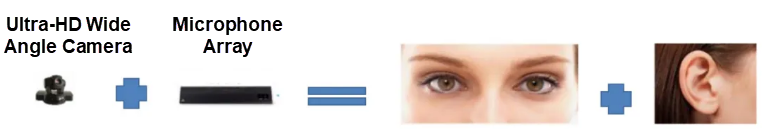

When we talk with each other, instead of only listening to sounds, we process both auditory and visual information to understand the meaning expressed by other people. Therefore, multimodal interaction solutions must be introduced into the speech interaction systems in the future. The performance of existing speech recognition systems in noisy environments remains unsatisfactory, and such systems encounter even greater difficulties in noisy public environments such as subways. The Alibaba DAMO Academy hopes to use multimodal human-machine interaction technology to combine speech recognition with computer vision so that robots can look at and listen to users. This will allow them to accurately identify the sound made by the user in noisy environments.

For example, if we want to design voice-interaction ticketing kiosks for subway stations, we must account for the fact that the user will be surrounded by many other people, who may also be talking. To solve this problem, we can give the kiosk vision capabilities, so it can identify the user who wishes to buy a ticket based on face size. The following figure shows the primary algorithm processing flow for target speaker speech separation based on facial feature monitoring information. The final step is to feed the extracted audiovisual features into a source mask estimation model based on audiovisual fusion.

Audiovisual fusion technology is already widely used in many aspects of our daily life. It is used in major transportation hubs in Shanghai, such as subway stations, Hongqiao Railway Station, Shanghai Railway Station, Shanghai South Railway Station, Hongqiao Airport, and Pudong Airport. Since March 2018, more than one million visitors have been served by audiovisual fusion applications. In addition, at the Hangzhou Computing Conference held in September 2018, the smart ordering machine based on multimodal technology, a collaboration of the DAMO Academy and KFC, took 4,500 orders in 3 days. In August 2019, DingTalk launched its new smart office hardware product M 25, which uses multimodal interaction technology for more effective interaction in noisy environments.

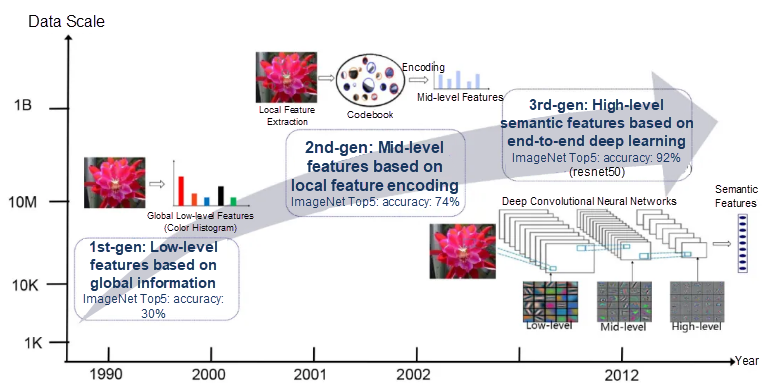

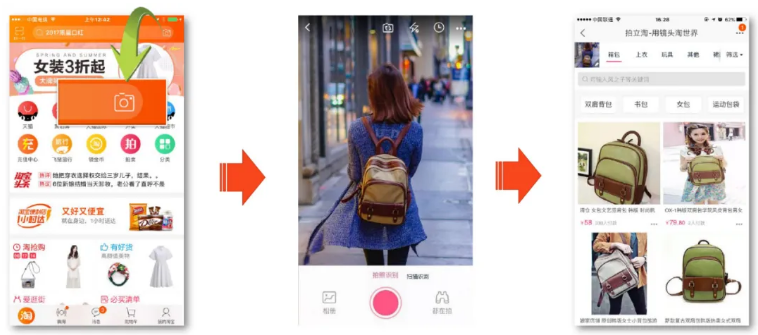

The most important part of vision technology is the recognition capabilities of image search. These capabilities have been developed over a long period of time. In the early 1990s, search was based on the low-level features of global information, such as the distribution of image color information. However, this method was not accurate. For example, the top five performers on the ImageNet database only achieved 30% accuracy. By the beginning of 2000, people began to search and recognize images based on local feature encoding, and the search accuracy reached 70%. However, the local information had to be manually determined, so features not seen by the user could not be extracted. By around 2010, developers started to use deep learning to automatically extract local features, achieving an accuracy of 92%. This meant that image search was ready for commercial use. The following image shows the history of the development of image search and recognition technology.

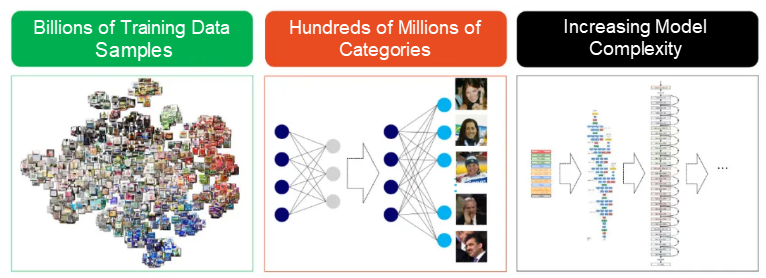
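The earliest approach mentioned above, search based on the global distribution of color, can be sketched as follows. The bin count, the histogram-intersection score, and the three tiny "images" (lists of RGB tuples) are assumptions chosen for illustration.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Normalized global color histogram of an image given as (r, g, b) tuples."""
    step = 256 // bins_per_channel
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        index = ((r // step) * bins_per_channel + (g // step)) * bins_per_channel + (b // step)
        hist[index] += 1.0
    total = len(pixels)
    return [count / total for count in hist]

def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1.0 means identical color distributions."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def search(query_pixels, database):
    """Rank database image names by color similarity to the query, best first."""
    q = color_histogram(query_pixels)
    scored = [(histogram_intersection(q, color_histogram(p)), name)
              for name, p in database.items()]
    return [name for _, name in sorted(scored, reverse=True)]

# Hypothetical ten-pixel "images", for illustration only.
database = {
    "sunset": [(250, 120, 30)] * 8 + [(200, 60, 20)] * 2,
    "forest": [(20, 150, 40)] * 10,
    "ocean": [(10, 60, 200)] * 10,
}
query = [(240, 110, 25)] * 9 + [(30, 140, 50)] * 1
ranking = search(query, database)
print(ranking)
```

The histogram ignores where colors appear in the image, so two very different scenes with similar palettes score as near matches. This is one reason purely global features proved inaccurate.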

Currently, image search applications face three major challenges. First, they must deal with more and more data, with training data sets containing billions of samples. Second, image search must handle hundreds of millions of classifications. Third, model complexity is continuously increasing.

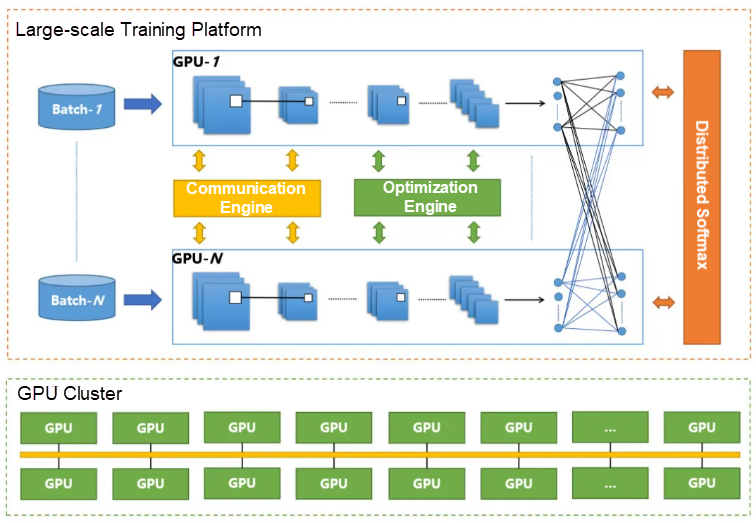

To solve these challenges, Alibaba launched Jiuding, a large-scale AI training engine. Jiuding is a large-scale training vehicle and an expert system, covering vision, NLP, and other fields. Jiuding consists of two parts. The first part is communication. As all large-scale training requires multi-machine and multi-GPU architectures, finding ways to effectively improve the model training under such architectures and reduce the cost of communication is a very important area of research. The other part is optimization algorithms. Implementing distributed optimization is also a major challenge. This large-scale training engine can classify large volumes of data and achieve ideal training results. For example, it can complete ImageNet ResNet50 training in 2.8 minutes. To process hundreds of millions of IDs, training for the classification of billions of images can be completed within seven days.

Image search is widely used in real-life scenarios. Currently, Pailitao can process ultra-large-scale image recognition and search tasks, including tasks that involve more than 400 million products, more than 3 billion images, and more than 20 million active users. It can identify more than 30 million entities, such as SKU commodities, animals, plants, vehicles, and more.

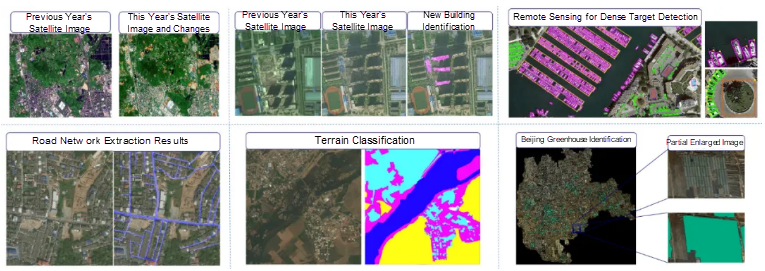

Tianxun is a recognition and analysis application for remote sensing images. It can carry out large-scale remote sensing image training, and process tasks such as road network extraction, terrain classification, new building identification, and illegal building identification.

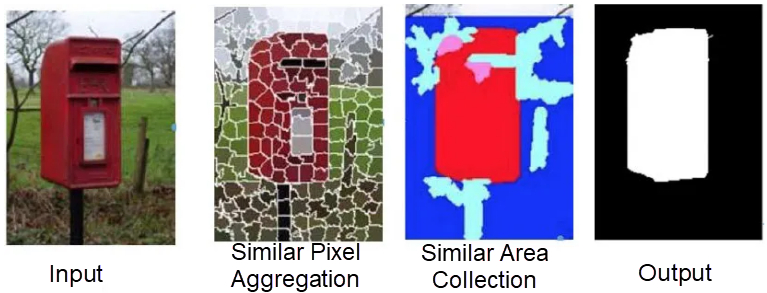

Image segmentation separates the objects in an image from one another and from the background. The traditional image segmentation method is shown in the figure below. It divides an image into multiple segments based on the similarity between pixels. Areas with similar pixels are aggregated for output. However, traditional image segmentation technology cannot learn semantic information. It knows there is an object in the image, but does not know what the object is. In addition, because an unsupervised learning method is used, it is not good at handling corners.

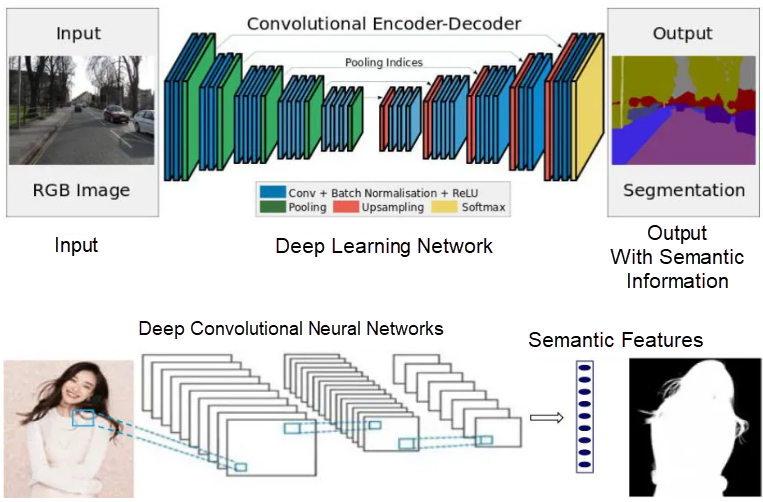
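Grouping pixels by similarity, as in the traditional method described above, can be sketched with a simple region-growing pass over a grayscale grid. The threshold, the 4-neighbor rule, and the toy image are assumptions of this sketch. Real systems use more elaborate similarity measures.

```python
from collections import deque

def segment_by_similarity(image, threshold=10):
    """Label 4-connected regions whose neighboring pixels differ by at most `threshold`."""
    rows, cols = len(image), len(image[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue  # pixel already belongs to a region
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1
                            and abs(image[ny][nx] - image[y][x]) <= threshold):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels, next_label

# Hypothetical 3x4 grayscale image: a dark area and a bright area.
image = [
    [10, 12, 11, 200],
    [11, 10, 13, 205],
    [12, 11, 198, 202],
]
labels, region_count = segment_by_similarity(image)
print(region_count, labels)
```

The output labels are only region numbers. Nothing in the procedure says what each region is, which is the missing semantic information noted above.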

In contrast, as shown in the figure below, segmentation technology based on deep learning uses supervised learning, which incorporates many training samples. Segmentation and classification results can be obtained at the same time, so the machine can understand the instance attributes of each pixel. When given a large volume of relevant data, the encoder and decoder model can finely partition the edges of the object.

Alibaba applies image segmentation technology to all categories of products in the Taobao ecosystem. With this technology, Alibaba can automatically generate product images on a white background to accelerate product publishing.

In addition, this technology can also be used to mix and match clothing and apparel. When merchants provide model images, segmentation technology can be used to dress the models in different clothing.

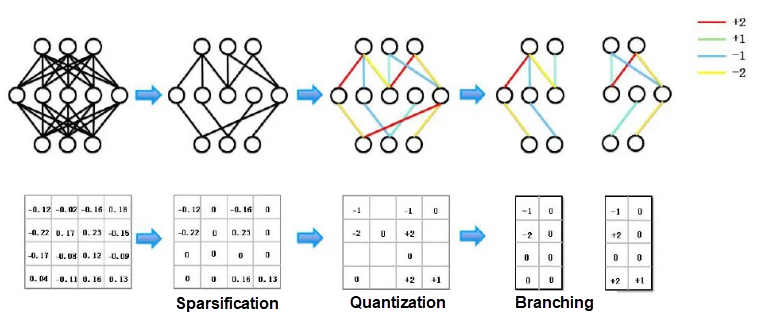

At present, deep learning technology is already widely used in many industries, but it also faces many challenges. First, deep learning models are becoming more and more complex. They require an increasing amount of computing power, already more than 20 GFLOPS, and increasing numbers of connections. When models become larger, they require more memory for data storage. Therefore, finding the right device can be a difficult task. Even with a powerful device, the model may take a long time to run. In this case, model compression technology becomes very important. It can compress a model of several dozen GB into several dozen MB, allowing it to be run quickly on any device.

Model compression methods have developed over a long period of time. For the model shown in the following figure, unimportant connections can be removed to sparsify the model. Then, the model's connections are quantized and given different weights. Finally, the model is pruned to change its structure. By using a field-programmable gate array (FPGA) acceleration solution, we can speed up the model inference by a factor of 170 compared to GPUs under the same QPS conditions (only 174 μs is required for ResNet-18).

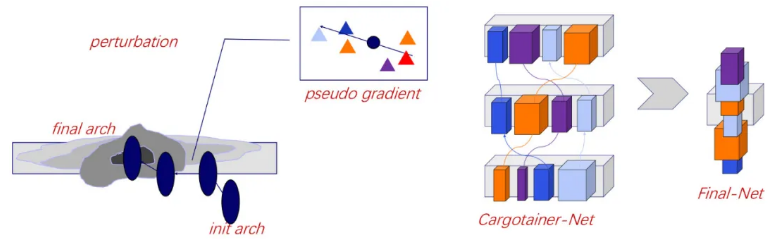
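Two of the compression ideas above can be sketched on a flat list of weights: sparsifying a model by dropping its least important connections, then storing the remaining values with fewer bits. Magnitude as the importance criterion, the 50% sparsity, the symmetric 8-bit scheme, and the weight values are all assumptions of this example, not the method used in the FPGA solution.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def quantize_uniform(weights, num_bits=8):
    """Map floats to signed integers that share one scale (symmetric uniform quantization)."""
    max_abs = max(abs(w) for w in weights)
    levels = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    scale = max_abs / levels if max_abs else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

# Hypothetical layer weights, for illustration only.
weights = [0.02, -0.75, 0.40, -0.01, 1.27, 0.05, -0.33, 0.003]
pruned = prune_by_magnitude(weights, sparsity=0.5)
codes, scale = quantize_uniform(pruned)
restored = dequantize(codes, scale)
print(pruned)
print(codes, scale)
```

Each surviving weight now needs one byte instead of a 32-bit float, and the zeros can be stored in a sparse format. In practice the model is usually fine-tuned after each step to recover accuracy.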

In essence, model compression changes the structure of a model. The selection of a model structure is a difficult problem. It is not a common optimization problem as different structures are in discrete spaces. The cargotainer method proposed by Alibaba can obtain accurate pseudo gradients more quickly. In fact, it won the Low-Power Image Recognition competition held at the 2019 International Conference on Computer Vision.

The FPGA-based solution is applied in Hema self-service POS terminals, where a computer vision method is used to identify whether a product has been missed during bar-code scanning. This cuts the GPU cost by half. In addition, our proprietary high-efficiency detection algorithm can complete various behavior analysis tasks within 1 second, and the classification accuracy of scanning actions exceeds 90%. The scenario classification accuracy is above 95%.

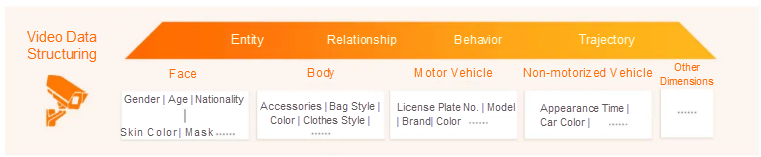

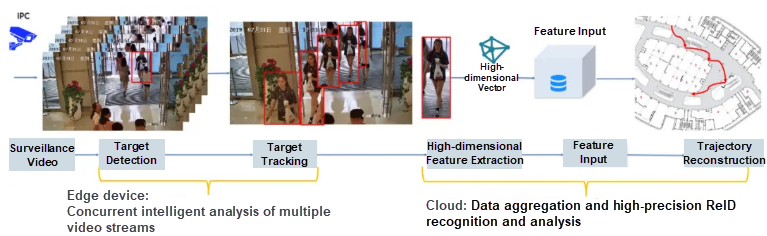

In addition, computer vision technology can be applied in video information structuring tasks, such as detecting, recognizing, and tracking target objects. The following figure shows the main procedure of a target detection, recognition, and tracking task. The task includes video decoding, target detection, target tracking, high-dimensional feature extraction, attribute extraction, and structured data storage.

Target detection technologies have also been around for a long time. Traditional detection methods, such as histogram of oriented gradients (HoG) and deformable part model (DPM), rely on handcrafted features, which means manually selected features. The problem with such methods is that they have poor robustness, cannot be generalized, and the computation workload is highly redundant. Currently, many new target detection methods based on deep learning have been proposed, such as Faster R-CNN, SSD, RetinaNet, and FCOS. Their advantages lie in that the machine can identify features itself, they can better cope with object size and appearance changes, and they provide good generalization performance. In fact, from 2008 to 2019, the accuracy of target detection increased from about 20% to about 83%.

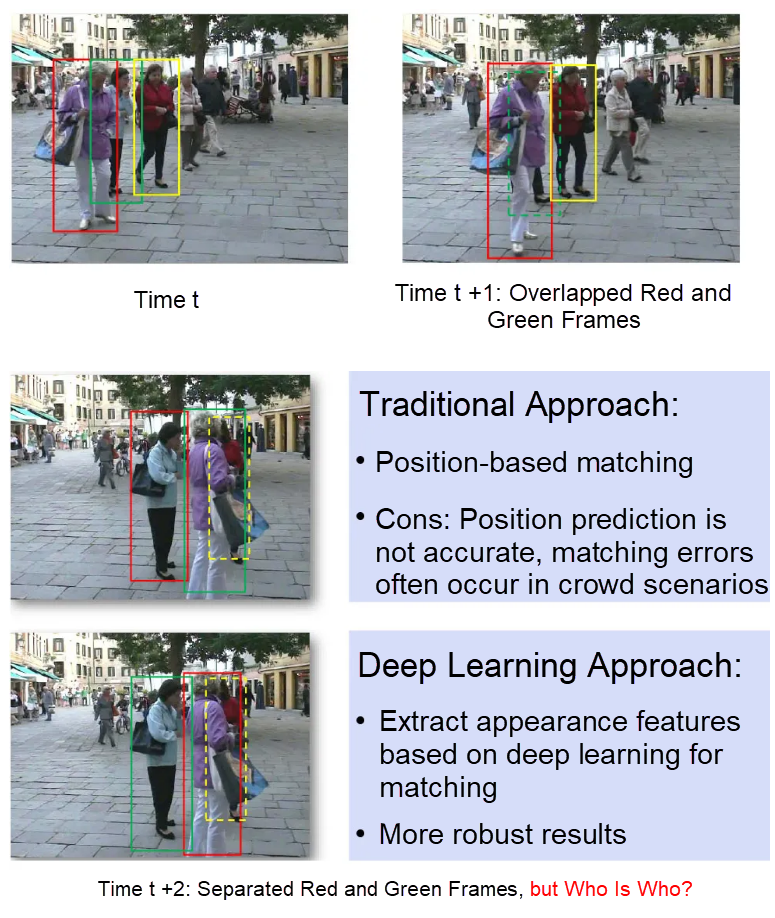

After the target is identified, we need to track it. The difficulty of target tracking is that people move around and can be blocked by other objects or people, so the target may be lost. In the following figure, the person dressed in red is covered by the person in purple. In the traditional method, matching is position-based. However, in crowd scenarios like the one above, it is difficult to predict positions accurately. This results in a high matching error rate. If we use methods based on deep learning to extract appearance features for matching, the prediction results are more robust.

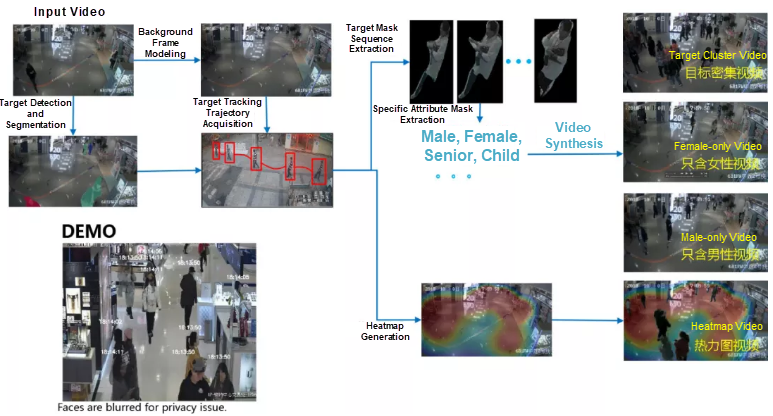
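The difference between position-based and appearance-based matching can be shown with a small sketch of two people swapping places between frames. The positions and the three-number appearance features are hand-made assumptions. In practice the features would come from a deep network, and a full tracker would solve a joint assignment rather than match each track independently.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two dense feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def match_by_position(tracks, detections):
    """Assign each track to the detection closest to its last known position."""
    return {
        name: min(detections, key=lambda d: math.dist(track["position"], d["position"]))["id"]
        for name, track in tracks.items()
    }

def match_by_appearance(tracks, detections):
    """Assign each track to the detection whose appearance feature is most similar."""
    return {
        name: max(detections, key=lambda d: cosine_similarity(track["feature"], d["feature"]))["id"]
        for name, track in tracks.items()
    }

# Hypothetical state before the two people cross paths.
tracks = {
    "red": {"position": (10.0, 5.0), "feature": (0.9, 0.1, 0.0)},
    "purple": {"position": (12.0, 5.0), "feature": (0.5, 0.0, 0.5)},
}
# Hypothetical detections after they cross: A is really "red", B is really "purple".
detections = [
    {"id": "A", "position": (12.5, 5.0), "feature": (0.85, 0.15, 0.05)},
    {"id": "B", "position": (9.5, 5.0), "feature": (0.45, 0.05, 0.55)},
]
by_position = match_by_position(tracks, detections)
by_appearance = match_by_appearance(tracks, detections)
print(by_position)
print(by_appearance)
```

Position-based matching swaps the two identities because each person ends up where the other used to be. Appearance-based matching keeps them apart.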

Target tracking is extensively applied in new retail scenarios. Shopping centers and brand stores require in-depth insights into customer flows and on-site behavior to build data associations between consumers, goods, and venues. This allows retail businesses to improve their offline operational management efficiency, enhance the consumer experience, and ultimately promote business growth.

In addition, target tracking technology is used in crime prevention and investigation. A crime investigation often involves reviewing a large amount of video, which is difficult to do manually. Target detection and tracking technology helps to condense the relevant information in 24 hours of video into a clip of just a few minutes. People and objects in the video can be identified, and their trajectories can be tracked. This technology also allows you to play back specific trajectories across different time periods. If you are interested in a certain trajectory or a certain type of trajectory, you can choose to play back video content of this type, greatly reducing the time spent watching videos.

From the preceding discussion, we can see that the development of AI technology cannot proceed without the support of a large amount of data. Therefore, existing AI technology is still data-driven. For example, unlike machine translation systems, human translators do not translate in a purely data-driven way. They do not need to read hundreds of millions of lines of text. Instead, humans can use knowledge-based approaches to efficiently process existing information. In the future, we need to continue to explore how machines can move from a data-driven approach to a knowledge-driven approach.
