By Huaike

This article explains the principles and use of thread pools and thread-local variables (ThreadLocal) and gives best practices, with examples, to help developers build stable and efficient Java application services.

As computing technology has developed, multicore processors have become the main way to increase server computing power.

Java is a leading choice for backend servers, so mastering Java concurrent programming and making full use of the CPU's concurrent processing power are basic skills for developers. Based on source code and practical experience, this article briefly introduces how to use thread pools and thread-local variables.

A thread pool manages threads as a pooled resource. It creates a number of threads in advance and keeps them ready, so incoming tasks can be handled quickly. When a thread finishes a task, it returns to the pool instead of being destroyed. This reuse of threads reduces the consumption of system resources.

In general, thread pools have the following advantages:

1) Reduce Resource Consumption: Thread pools can reduce the consumption caused by thread creation and destruction by reusing created threads.

2) Improve Response Speed: When a task arrives, the task can be executed immediately without waiting for the thread to be created.

3) Improve the Manageability of Threads: Threads are scarce resources, and unlimited thread creation consumes system resources and reduces system stability. Thread pools can be used for unified allocation, tuning, and monitoring.

In Java, the thread pool implementation class is ThreadPoolExecutor. Its most complete constructor is shown below:

```java
public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler)
```

You can create a thread pool by calling new ThreadPoolExecutor(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler).

In the constructor, corePoolSize specifies the number of core threads in the pool. By default, core threads stay alive even when idle. If allowCoreThreadTimeOut(true) is called, core threads that stay idle longer than keepAliveTime are also terminated.

In the constructor, maximumPoolSize specifies the maximum number of threads that the pool can hold.

In the constructor, keepAliveTime specifies how long a thread may stay idle before it is terminated. Non-core threads that stay idle longer than this are reclaimed. If allowCoreThreadTimeOut is set to true, core threads are reclaimed in the same way.

In the constructor, unit (a TimeUnit) specifies the time unit of keepAliveTime. Common values are TimeUnit.MILLISECONDS, TimeUnit.SECONDS, and TimeUnit.MINUTES.

In the constructor, workQueue (a BlockingQueue) is the task queue that holds tasks waiting to be executed. Common implementations are listed below:

· ArrayBlockingQueue: A bounded blocking queue backed by an array. It orders elements first-in-first-out (FIFO) and optionally supports a fairness policy for waiting threads.

· LinkedBlockingQueue: An optionally-bounded blocking queue based on linked nodes. The capacity, if unspecified, is equal to Integer.MAX_VALUE. This queue orders elements FIFO.

· PriorityBlockingQueue: An unbounded blocking queue. It orders elements by their natural ordering by default, or by a Comparator supplied at construction.

· DelayQueue: An unbounded blocking queue of Delayed elements, in which an element can be taken only when its delay has expired. It is often used in cache design and scheduled task execution.

· SynchronousQueue: A blocking queue that does not store elements. In SynchronousQueue, each insert operation must wait for a corresponding remove operation by another thread and vice versa.

· LinkedTransferQueue: An unbounded TransferQueue based on linked nodes. Compared with LinkedBlockingQueue, it adds the transfer() and tryTransfer() methods. transfer() waits until a consumer receives the element. tryTransfer() hands the element to a waiting consumer immediately and returns false if no consumer is waiting.

· LinkedBlockingDeque: An optionally-bounded blocking deque based on linked nodes. It allows elements to be inserted and removed from both the head and the tail of the queue.

In the constructor, threadFactory is the factory used to create new threads on demand. You can use it to set thread properties such as the name, thread group, and priority. For example, you can name the threads in a pool with ThreadFactoryBuilder from Google Guava:

```java
import com.google.common.util.concurrent.ThreadFactoryBuilder;
import java.util.concurrent.ThreadFactory;

ThreadFactory threadFactory = new ThreadFactoryBuilder().setNameFormat("general-detail-batch-%d").build();
```

In the constructor, handler (a RejectedExecutionHandler) is the rejection policy. It is invoked when a task cannot be accepted. This happens when the pool has reached maximumPoolSize and the task queue is full, or when the pool has been shut down. Common rejection policies are listed below:

· ThreadPoolExecutor.AbortPolicy: A handler for rejected tasks that throws a RejectedExecutionException. This is the default policy.

· ThreadPoolExecutor.DiscardPolicy: A handler for rejected tasks that silently discards the rejected task.

· ThreadPoolExecutor.CallerRunsPolicy: A handler for rejected tasks that runs the rejected task directly in the calling thread of the execute method.

· ThreadPoolExecutor.DiscardOldestPolicy: A handler for rejected tasks that discards the oldest unhandled request and then retries execute.

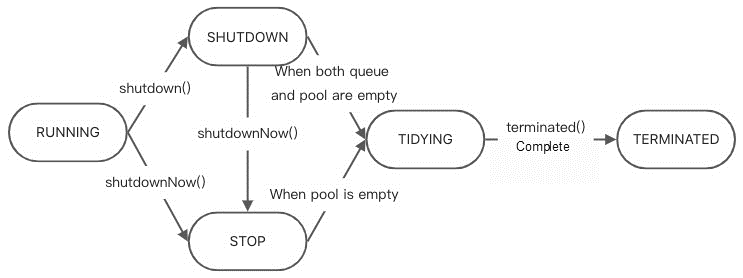

ThreadPoolExecutor has the following states:

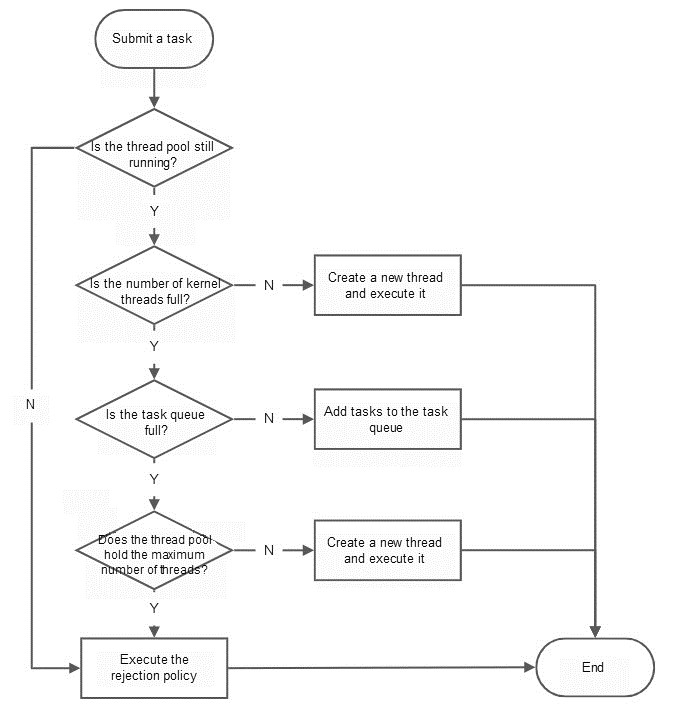
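The five run states of ThreadPoolExecutor are RUNNING, SHUTDOWN, STOP, TIDYING, and TERMINATED. These states are internal, but the public methods isShutdown(), isTerminating(), and isTerminated() expose them indirectly.

The minimal sketch below walks a pool from RUNNING through SHUTDOWN to TERMINATED. The class and method names are illustrative. TIDYING is a short transitional step that these methods cannot observe directly.

```java
import java.util.Arrays;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolStates {
    /** Returns {running, shuttingDown, terminated} as observed through the public API. */
    static boolean[] observe() throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        // Keep one task busy so that the pool cannot terminate right after shutdown().
        pool.execute(() -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        // RUNNING: the pool accepts new tasks.
        boolean running = !pool.isShutdown();

        // RUNNING -> SHUTDOWN: no new tasks, but the busy task keeps the pool alive.
        pool.shutdown();
        boolean shuttingDown = pool.isShutdown() && pool.isTerminating() && !pool.isTerminated();

        // After the last task finishes, the pool passes through TIDYING to TERMINATED.
        release.countDown();
        boolean terminated = pool.awaitTermination(5, TimeUnit.SECONDS) && pool.isTerminated();

        return new boolean[] {running, shuttingDown, terminated};
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(Arrays.toString(observe()));
    }
}
```

Calling shutdownNow() instead of shutdown() would move the pool to STOP. STOP also drains the queue and interrupts running tasks.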

When a task is submitted to a thread pool, it is scheduled in the following main steps:

1) If fewer than corePoolSize threads are running, the pool creates a new core thread to run the submitted task, even if other worker threads are idle.

2) If corePoolSize threads are already running, the newly submitted task is put into workQueue to wait for execution.

3) If workQueue is full, the pool checks whether the current number of threads has reached maximumPoolSize. If not, it creates a non-core thread to run the submitted task.

4) If the number of threads has reached maximumPoolSize and the queue is full, newly submitted tasks are handed to the rejection policy.
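The following minimal sketch makes the four steps observable. It assumes a deliberately tiny configuration: corePoolSize = 1, maximumPoolSize = 2, and an ArrayBlockingQueue that holds one task. Each task blocks on a latch, so no thread frees up during the demo. The class name is illustrative.

```java
import java.util.Arrays;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SchedulingDemo {
    /** Returns {poolSize, queueSize} after each of three submissions, then 1 if task 4 was rejected. */
    static int[] run() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        // Every task blocks until released, so no thread becomes free during the demo.
        Runnable blocking = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        // corePoolSize = 1, maximumPoolSize = 2, bounded queue holding a single task.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 2, 30, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1), new ThreadPoolExecutor.AbortPolicy());
        int[] result = new int[7];
        try {
            // Task 1 creates the core thread, task 2 is queued, task 3 creates a non-core thread.
            for (int i = 0; i < 3; i++) {
                pool.execute(blocking);
                result[2 * i] = pool.getPoolSize();
                result[2 * i + 1] = pool.getQueue().size();
            }
            try {
                pool.execute(blocking); // step 4: threads and queue are both full
            } catch (RejectedExecutionException e) {
                result[6] = 1;
            }
        } finally {
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(Arrays.toString(run()));
    }
}
```

run() returns [1, 0, 1, 1, 2, 1, 1]:

- The first task creates the core thread.
- The second task is queued.
- The third task creates a non-core thread because the queue is full.
- The fourth task is rejected by AbortPolicy.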

The core code is listed below:

```java
public void execute(Runnable command) {
    if (command == null)
        throw new NullPointerException();
    /*
     * Proceed in 3 steps:
     *
     * 1. If fewer than corePoolSize threads are running, try to
     * start a new thread with the given command as its first
     * task. The call to addWorker atomically checks runState and
     * workerCount, and so prevents false alarms that would add
     * threads when it shouldn't, by returning false.
     *
     * 2. If a task can be successfully queued, then we still need
     * to double-check whether we should have added a thread
     * (because existing ones died since last checking) or that
     * the pool shut down since entry into this method. So we
     * recheck state and if necessary roll back the enqueuing if
     * stopped, or start a new thread if there are none.
     *
     * 3. If we cannot queue task, then we try to add a new
     * thread. If it fails, we know we are shut down or saturated
     * and so reject the task.
     */
    int c = ctl.get();
    if (workerCountOf(c) < corePoolSize) {
        if (addWorker(command, true))
            return;
        c = ctl.get();
    }
    if (isRunning(c) && workQueue.offer(command)) {
        int recheck = ctl.get();
        if (! isRunning(recheck) && remove(command))
            reject(command);
        else if (workerCountOf(recheck) == 0)
            addWorker(null, false);
    }
    else if (!addWorker(command, false))
        reject(command);
}
```

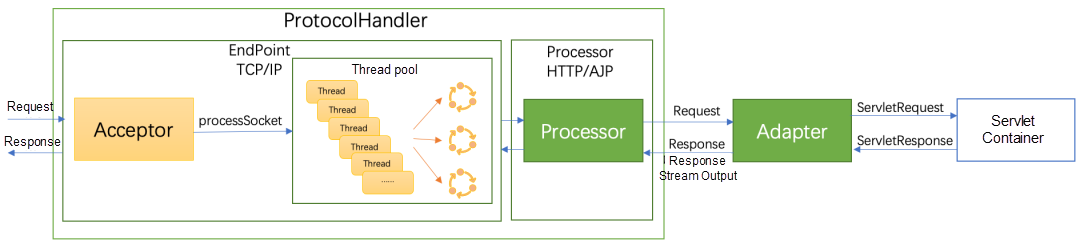

The overall architecture of Tomcat consists of two parts: connectors and containers. The connector is responsible for communicating with the outside world, and the container is responsible for internal logic processing. In the connector:

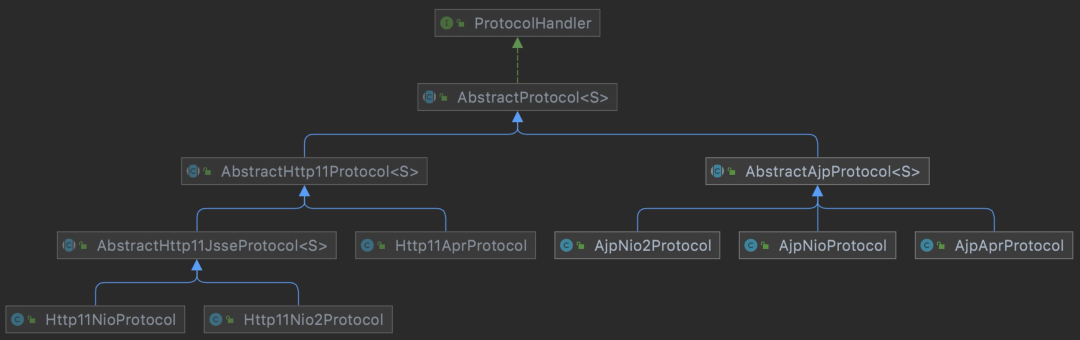

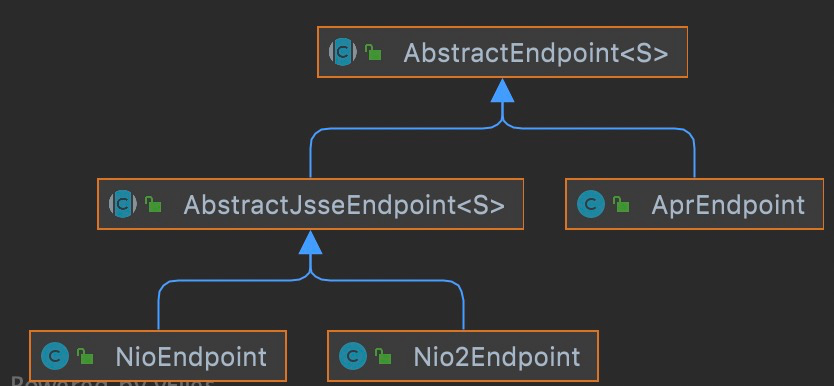

1) The ProtocolHandler interface encapsulates the differences between I/O models and application layer protocols. The I/O models supported by Tomcat include non-blocking I/O, asynchronous I/O, and APR. The application layer protocols include HTTP, HTTPS, and AJP. ProtocolHandler combines an I/O model with an application layer protocol. Within it, EndPoint sends and receives byte streams, and Processor parses byte streams into Tomcat Request/Response objects. This division keeps the functional modules highly cohesive and loosely coupled. The following figure shows the inheritance relationship of the ProtocolHandler interface.

2) The Adapter converts the Tomcat Request object into a standard ServletRequest object.

Tomcat uses a thread pool to increase its request-processing capacity and respond to requests quickly. Taking HTTP over non-blocking I/O as an example, the following briefly analyzes the Tomcat thread pool.

In Tomcat, the AbstractEndpoint class handles the underlying network I/O. If the user does not configure a shared Executor, AbstractEndpoint creates a default internal thread pool through the createExecutor() method.

The core code is listed below:

```java
public void createExecutor() {
    internalExecutor = true;
    TaskQueue taskqueue = new TaskQueue();
    TaskThreadFactory tf = new TaskThreadFactory(getName() + "-exec-", daemon, getThreadPriority());
    executor = new ThreadPoolExecutor(getMinSpareThreads(), getMaxThreads(), 60, TimeUnit.SECONDS, taskqueue, tf);
    taskqueue.setParent( (ThreadPoolExecutor) executor);
}
```

Here, TaskQueue is Tomcat's custom task queue. ThreadPoolExecutor is Tomcat's own thread pool implementation (org.apache.tomcat.util.threads.ThreadPoolExecutor), not the JDK class.

Tomcat's custom thread pool extends java.util.concurrent.ThreadPoolExecutor and adds some member variables. The main one is submittedCount, which efficiently counts tasks that have been submitted but not yet completed. These include tasks waiting in the queue and tasks that have been handed to a worker thread but have not started executing yet.

```java
/**
 * Same as a java.util.concurrent.ThreadPoolExecutor but implements a much more efficient
 * {@link #getSubmittedCount()} method, to be used to properly handle the work queue.
 * If a RejectedExecutionHandler is not specified a default one will be configured
 * and that one will always throw a RejectedExecutionException
 *
 */
public class ThreadPoolExecutor extends java.util.concurrent.ThreadPoolExecutor {
    /**
     * The number of tasks submitted but not yet finished. This includes tasks
     * in the queue and tasks that have been handed to a worker thread but the
     * latter did not start executing the task yet.
     * This number is always greater or equal to {@link #getActiveCount()}.
     */
    // The newly added member variable, submittedCount, counts tasks that have been submitted but not completed.
    private final AtomicInteger submittedCount = new AtomicInteger(0);
    private final AtomicLong lastContextStoppedTime = new AtomicLong(0L);

    // Constructor
    public ThreadPoolExecutor(int corePoolSize, int maximumPoolSize, long keepAliveTime, TimeUnit unit, BlockingQueue<Runnable> workQueue, ThreadFactory threadFactory,
            RejectedExecutionHandler handler) {
        super(corePoolSize, maximumPoolSize, keepAliveTime, unit, workQueue, threadFactory, handler);
        // Pre-start all core threads.
        prestartAllCoreThreads();
    }

    // ... other members (such as execute() and getSubmittedCount()) are omitted here.
}
```

Tomcat overrides the execute() method in its custom ThreadPoolExecutor and increments submittedCount for each submitted task. If the underlying pool throws RejectedExecutionException, Tomcat calls force() to put the task into the TaskQueue anyway. If that also fails, submittedCount is decremented and RejectedExecutionException is thrown.

```java
@Override
public void execute(Runnable command) {
    execute(command,0,TimeUnit.MILLISECONDS);
}

public void execute(Runnable command, long timeout, TimeUnit unit) {
    submittedCount.incrementAndGet();
    try {
        super.execute(command);
    } catch (RejectedExecutionException rx) {
        if (super.getQueue() instanceof TaskQueue) {
            final TaskQueue queue = (TaskQueue)super.getQueue();
            try {
                if (!queue.force(command, timeout, unit)) {
                    submittedCount.decrementAndGet();
                    throw new RejectedExecutionException("Queue capacity is full.");
                }
            } catch (InterruptedException x) {
                submittedCount.decrementAndGet();
                throw new RejectedExecutionException(x);
            }
        } else {
            submittedCount.decrementAndGet();
            throw rx;
        }
    }
}
```

Tomcat also defines its own blocking queue, TaskQueue, which extends LinkedBlockingQueue. By default, Tomcat uses 10 core threads (minSpareThreads) and at most 200 threads (maxThreads). Tasks should not wait in the queue merely because all core threads are busy. To prevent that, TaskQueue overrides offer() so that the pool keeps creating threads until it reaches the maximum.

This works because of the task scheduling logic in ThreadPoolExecutor.execute(): when workQueue.offer() returns false, the pool tries to create a new thread, so tasks get a quick response. The core implementation code of TaskQueue is listed below:

```java
/**
 * As task queue specifically designed to run with a thread pool executor. The
 * task queue is optimised to properly utilize threads within a thread pool
 * executor. If you use a normal queue, the executor will spawn threads when
 * there are idle threads and you wont be able to force items onto the queue
 * itself.
 */
public class TaskQueue extends LinkedBlockingQueue<Runnable> {
    // The parent field (the owning Tomcat ThreadPoolExecutor) and setParent() are omitted here.

    public boolean force(Runnable o, long timeout, TimeUnit unit) throws InterruptedException {
        if ( parent==null || parent.isShutdown() ) throw new RejectedExecutionException("Executor not running, can't force a command into the queue");
        return super.offer(o,timeout,unit); //forces the item onto the queue, to be used if the task is rejected
    }

    @Override
    public boolean offer(Runnable o) {
        // 1. parent is the owning thread pool, an instance of Tomcat's custom ThreadPoolExecutor.
        //    If it is not set, no checks are possible.
        //we can't do any checks
        if (parent==null) return super.offer(o);
        // 2. When the number of threads has reached the maximum, the new task is simply enqueued.
        //we are maxed out on threads, simply queue the object
        if (parent.getPoolSize() == parent.getMaximumPoolSize()) return super.offer(o);
        // 3. When the number of submitted tasks does not exceed the number of threads, there are idle threads, so the task is enqueued.
        //we have idle threads, just add it to the queue
        if (parent.getSubmittedCount()<=(parent.getPoolSize())) return super.offer(o);
        // 4. Key point: if the number of threads is below the maximum, return false so that the pool creates a new thread.
        //if we have less threads than maximum force creation of a new thread
        if (parent.getPoolSize()<parent.getMaximumPoolSize()) return false;
        // 5. Otherwise, enqueue the task.
        //if we reached here, we need to add it to the queue
        return super.offer(o);
    }
}
```

Tomcat records the creation time of each thread in TaskThread. Each Runnable is wrapped in the static nested class WrappingRunnable, which swallows StopPooledThreadException.

```java
/**
 * A Thread implementation that records the time at which it was created.
 *
 */
public class TaskThread extends Thread {
    private final long creationTime;

    public TaskThread(ThreadGroup group, Runnable target, String name) {
        super(group, new WrappingRunnable(target), name);
        this.creationTime = System.currentTimeMillis();
    }

    /**
     * Wraps a {@link Runnable} to swallow any {@link StopPooledThreadException}
     * instead of letting it go and potentially trigger a break in a debugger.
     */
    private static class WrappingRunnable implements Runnable {
        private Runnable wrappedRunnable;

        WrappingRunnable(Runnable wrappedRunnable) {
            this.wrappedRunnable = wrappedRunnable;
        }

        @Override
        public void run() {
            try {
                wrappedRunnable.run();
            } catch(StopPooledThreadException exc) {
                //expected : we just swallow the exception to avoid disturbing
                //debuggers like eclipse's
                log.debug("Thread exiting on purpose", exc);
            }
        }
    }

    // The log field and other members are omitted here.
}
```

Compare this with the native JUC (java.util.concurrent) thread pool. When a task is submitted and the number of worker threads has reached corePoolSize, the native pool tries to put the task into the blocking queue. It creates additional worker threads only when the queue is full and the number of threads is below the maximum.

Therefore, the native JUC thread pool cannot meet Tomcat's requirement for fast responses. With an unbounded queue like Tomcat's, the queue never fills up, so the pool would never grow beyond its core threads.
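The same idea can be reproduced on top of the JDK classes. The following is a simplified sketch, not Tomcat's code:

- EagerQueue, EagerThreadPool, and EagerDemo are hypothetical names.
- The submitted-task count is decremented in afterExecute().
- The force() fallback mirrors TaskQueue.force() but without a timeout.

```java
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical queue modeled on Tomcat's TaskQueue: prefers new threads over queuing. */
class EagerQueue extends LinkedBlockingQueue<Runnable> {
    private volatile EagerThreadPool parent;

    void setParent(EagerThreadPool parent) { this.parent = parent; }

    /** Enqueues unconditionally; used only after the pool has rejected a task. */
    boolean force(Runnable task) { return super.offer(task); }

    @Override
    public boolean offer(Runnable task) {
        EagerThreadPool p = parent;
        if (p == null) return super.offer(task);
        // Already at maximum size: queue the task.
        if (p.getPoolSize() >= p.getMaximumPoolSize()) return super.offer(task);
        // At least as many threads as unfinished tasks: an idle thread will take it.
        if (p.getSubmittedCount() <= p.getPoolSize()) return super.offer(task);
        // Returning false makes ThreadPoolExecutor.execute() try to add a non-core thread.
        return false;
    }
}

/** Hypothetical pool that counts submitted-but-unfinished tasks, like Tomcat's submittedCount. */
class EagerThreadPool extends ThreadPoolExecutor {
    private final AtomicInteger submitted = new AtomicInteger();

    EagerThreadPool(int core, int max, EagerQueue queue) {
        super(core, max, 60, TimeUnit.SECONDS, queue);
        queue.setParent(this);
    }

    int getSubmittedCount() { return submitted.get(); }

    @Override
    public void execute(Runnable command) {
        submitted.incrementAndGet();
        try {
            super.execute(command);
        } catch (RejectedExecutionException rx) {
            // Lost a race for the last thread slot: fall back to queuing unless shut down.
            if (isShutdown() || !((EagerQueue) getQueue()).force(command)) {
                submitted.decrementAndGet();
                throw rx;
            }
        }
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        super.afterExecute(r, t);
        submitted.decrementAndGet();
    }
}

class EagerDemo {
    /** core = 1, max = 3: returns {poolSize, queueSize} after 3 busy tasks and again after a 4th. */
    static int[] run() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocking = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        EagerThreadPool pool = new EagerThreadPool(1, 3, new EagerQueue());
        try {
            for (int i = 0; i < 3; i++) pool.execute(blocking);
            int[] r = {pool.getPoolSize(), pool.getQueue().size(), 0, 0};
            pool.execute(blocking); // the pool is at its maximum size, so this task is queued
            r[2] = pool.getPoolSize();
            r[3] = pool.getQueue().size();
            return r;
        } finally {
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }
}
```

EagerDemo.run() returns [3, 0, 3, 1]: the pool grows to three threads before anything is queued. A plain JDK ThreadPoolExecutor with the same sizes and an unbounded LinkedBlockingQueue behaves differently. It would keep one thread and queue the other tasks.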

Tomcat uses the acceptCount and maxConnections parameters of the EndPoint to avoid an excessive request backlog.

maxConnections specifies the maximum number of connections that Tomcat accepts and processes at any time. When this number is reached, the Acceptor stops accepting connections from the accept queue. New connections then wait in the accept queue until the number of connections Tomcat is handling drops below maxConnections. The default maxConnections is 10000; setting it to -1 removes the limit.

acceptCount specifies the length of the accept queue (the socket backlog). When the accept queue is full, new connection requests are refused or time out, depending on the operating system. The default is 100 (based on Tomcat 8.5.43).

Because of these two limits, the unbounded task queue that Tomcat uses by default does not cause an OOM error.

```java
/**
 * Allows the server developer to specify the acceptCount (backlog) that
 * should be used for server sockets. By default, this value
 * is 100.
 */
private int acceptCount = 100;

private int maxConnections = 10000;
```

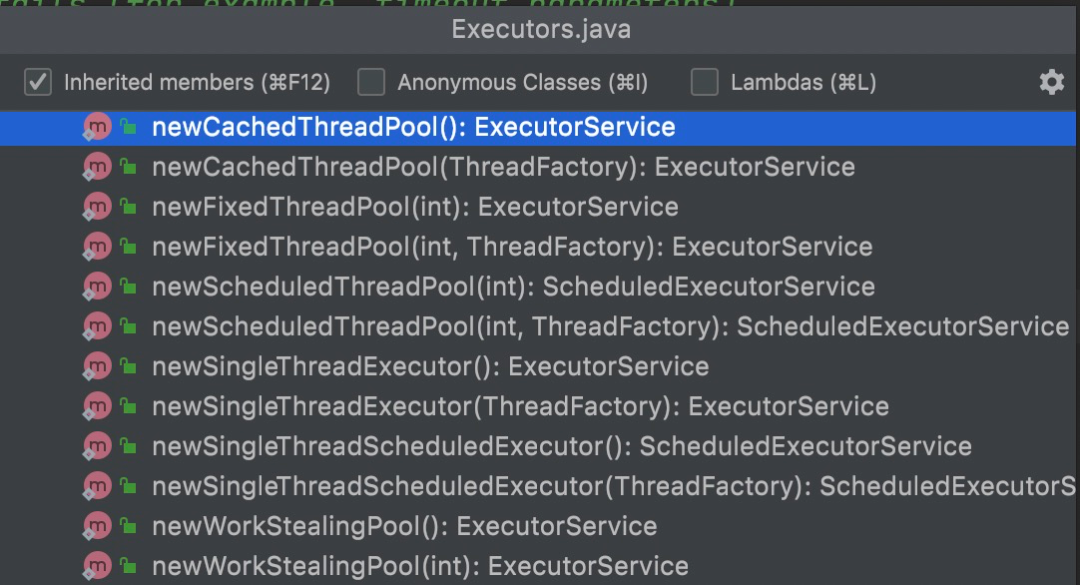

The Executors class provides the following commonly used factory methods:

1) newCachedThreadPool(): Creates a thread pool that creates new threads as needed but reuses previously created threads when they are available. Threads that have been idle for 60 seconds are terminated and removed from the cache. CachedThreadPool suits workloads with many short-lived tasks or lightly loaded servers.

2) newFixedThreadPool(int nThreads): Creates a thread pool with a fixed number of threads. While there are fewer than nThreads threads, a new thread is created for each newly submitted task. Once there are nThreads threads, new tasks are added to the blocking queue, and the threads take tasks from the queue for execution. FixedThreadPool suits scenarios with a fairly heavy load but not too many tasks, where the number of threads must be limited to use resources properly.

3) newSingleThreadExecutor(): Creates an Executor that uses a single worker thread. SingleThreadExecutor suits scenarios where tasks must run serially.

4) newScheduledThreadPool(int corePoolSize): Creates a thread pool that can run tasks after a delay or periodically. It can replace the Timer class in most cases. The method returns a ScheduledThreadPoolExecutor, which extends ThreadPoolExecutor and is configured as follows:

- corePoolSize is the passed-in value.
- maximumPoolSize is Integer.MAX_VALUE.
- keepAliveTime is 0 (in JDK 8).
- workQueue is a DelayedWorkQueue.

It provides three scheduling methods. schedule() supports delayed execution, and scheduleAtFixedRate() and scheduleWithFixedDelay() support periodic execution.

5) newSingleThreadScheduledExecutor(): Creates a single-threaded scheduled executor, backed by a ScheduledThreadPoolExecutor with a corePoolSize of 1.

6) newWorkStealingPool(int parallelism): Returns a ForkJoinPool instance. ForkJoinPool is mainly used for divide-and-conquer algorithms and suits compute-intensive tasks.

The Executors class looks powerful and easy to use, but it has the following drawbacks:

1) FixedThreadPool and SingleThreadExecutor use a task queue with a capacity of Integer.MAX_VALUE. The queue may accumulate a large number of requests and cause an OOM error.

2) CachedThreadPool and ScheduledThreadPool allow up to Integer.MAX_VALUE threads. They may create a large number of threads and cause an OOM error.
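These limits can be checked directly. In current JDKs, newFixedThreadPool() and newCachedThreadPool() return ThreadPoolExecutor instances, so the minimal sketch below casts them and reads their configuration. The cast relies on that implementation detail, because the declared return type is only ExecutorService. The class name is illustrative.

```java
import java.util.Arrays;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class ExecutorsDefaults {
    /** Returns {fixed pool queue capacity, cached pool max threads, cached pool keep-alive in seconds}. */
    static long[] inspect() {
        ThreadPoolExecutor fixed = (ThreadPoolExecutor) Executors.newFixedThreadPool(4);
        ThreadPoolExecutor cached = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        try {
            return new long[] {
                // Unbounded LinkedBlockingQueue: room for Integer.MAX_VALUE waiting tasks.
                fixed.getQueue().remainingCapacity(),
                // No practical cap on the number of threads.
                cached.getMaximumPoolSize(),
                // Idle threads are reclaimed after 60 seconds.
                cached.getKeepAliveTime(TimeUnit.SECONDS)
            };
        } finally {
            fixed.shutdown();
            cached.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(inspect()));
    }
}
```

On a standard JDK, main() prints [2147483647, 2147483647, 60].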

Instead, create thread pools by calling the ThreadPoolExecutor constructor directly. Set parameters such as corePoolSize, workQueue, and the RejectedExecutionHandler based on the actual business scenario.
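Below is a minimal sketch of such a pool, under these assumptions:

- A bounded ArrayBlockingQueue holds waiting tasks.
- Threads are named with a prefix through a plain JDK ThreadFactory, as an alternative to Guava's ThreadFactoryBuilder.
- CallerRunsPolicy makes an overloaded pool slow down the submitter instead of dropping tasks.

The class name, method names, and sizes are hypothetical and should be tuned to the workload.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class BoundedPools {
    /** Names threads "<prefix>-1", "<prefix>-2", ... so that they are easy to find in thread dumps. */
    static ThreadFactory namedThreads(String prefix) {
        AtomicInteger sequence = new AtomicInteger();
        return runnable -> new Thread(runnable, prefix + "-" + sequence.incrementAndGet());
    }

    /** Creates a pool whose queue length and thread count are both bounded. */
    static ThreadPoolExecutor newBoundedPool(String prefix, int core, int max, int queueCapacity) {
        return new ThreadPoolExecutor(
                core,
                max,
                60, TimeUnit.SECONDS,                       // idle non-core threads exit after 60 s
                new ArrayBlockingQueue<>(queueCapacity),    // bounded: no unlimited backlog
                namedThreads(prefix),
                new ThreadPoolExecutor.CallerRunsPolicy()); // when saturated, run in the caller
    }
}
```

For example, BoundedPools.newBoundedPool("order-query", 4, 8, 200) creates a pool whose threads are named order-query-1, order-query-2, and so on. With CallerRunsPolicy, a saturated pool runs tasks in the submitting thread. This also affects any ThreadLocal context that the tasks rely on.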

Suppose you create a thread pool locally, for example as a local variable inside a method. If you never call shutdown(), or other references keep the pool reachable after its tasks finish, its threads are never released, and system resources can easily be exhausted.

In engineering practice, the following formula is commonly used to calculate the number of core threads:

$$N_{\text{threads}} = \frac{w + c}{c} \times n \times u = \left(\frac{w}{c} + 1\right) \times n \times u$$

In this formula:

- w is the waiting time.
- c is the computing time.
- n is the number of CPU cores, usually obtained through Runtime.getRuntime().availableProcessors().
- u is the target CPU utilization, between 0 and 1.

For a compute-intensive task at maximum CPU utilization (u = 1), the waiting time w is 0, so the number of core threads equals the number of CPU cores.
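Below is a direct translation of the formula into a helper method. Rounding to the nearest integer and enforcing a minimum of one thread are choices made for this sketch, and the names are illustrative. As a worked example, take a task that waits 90 ms and computes for 10 ms, on an 8-core machine with a target utilization of 0.8. The formula gives (90/10 + 1) × 8 × 0.8 = 64 threads.

```java
class ThreadPoolSizing {
    /**
     * nThreads = (w / c + 1) * n * u, rounded to the nearest whole thread (at least 1).
     *
     * @param waitTime    average time a task spends waiting (I/O, locks, remote calls)
     * @param computeTime average time a task spends on the CPU, in the same unit as waitTime
     * @param cores       number of CPU cores, e.g. Runtime.getRuntime().availableProcessors()
     * @param utilization target CPU utilization u, in (0, 1]
     */
    static int coreThreads(double waitTime, double computeTime, int cores, double utilization) {
        if (computeTime <= 0) throw new IllegalArgumentException("computeTime must be positive");
        if (utilization <= 0 || utilization > 1) throw new IllegalArgumentException("utilization must be in (0, 1]");
        double threads = (waitTime / computeTime + 1) * cores * utilization;
        return Math.max(1, (int) Math.round(threads));
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        // I/O-heavy task: waits 90 ms and computes 10 ms per request, 80% target utilization.
        System.out.println("I/O-heavy: " + coreThreads(90, 10, cores, 0.8));
        // Compute-intensive task: no waiting and full utilization, so one thread per core.
        System.out.println("CPU-bound: " + coreThreads(0, 10, cores, 1.0));
    }
}
```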

This formula gives the recommended number of core threads in an ideal situation. In practice, different systems and applications behave differently when running different tasks. The optimal number of threads should therefore be fine-tuned based on how the tasks actually run and on load-test results.

We recommend handling exceptions thrown in thread pool tasks so that problems can be identified, analyzed, and solved more easily.
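One common approach is to override afterExecute() so that all task exceptions are recorded in one place. This is shown here as a sketch, not the only option.

Tasks passed to execute() report their exception to the hook directly. Tasks passed to submit() store the exception in the returned Future, so the hook unwraps the Future as well. This follows the pattern shown in the JDK's afterExecute() documentation.

ErrorRecordingPool and its errors list are hypothetical names; a real application would log the exception instead. Callers of submit() can also handle failures themselves by calling Future.get() and catching ExecutionException.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.*;

class ErrorRecordingPool extends ThreadPoolExecutor {
    final List<String> errors = new CopyOnWriteArrayList<>();

    ErrorRecordingPool(int threads) {
        super(threads, threads, 0, TimeUnit.SECONDS, new LinkedBlockingQueue<>());
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        super.afterExecute(r, t);
        // submit() wraps the task in a Future, which captures the exception instead of throwing it.
        if (t == null && r instanceof Future<?> && ((Future<?>) r).isDone()) {
            try {
                ((Future<?>) r).get();
            } catch (CancellationException e) {
                t = e;
            } catch (ExecutionException e) {
                t = e.getCause();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        if (t != null) {
            errors.add(t.getMessage()); // in production, log t together with its stack trace
        }
    }

    /** Runs one failing task through execute() and one through submit(); returns what was recorded. */
    static List<String> demo() {
        ErrorRecordingPool pool = new ErrorRecordingPool(1);
        pool.execute(() -> { throw new IllegalStateException("failed in execute()"); });
        pool.submit((Runnable) () -> { throw new IllegalArgumentException("failed in submit()"); });
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(pool.errors);
    }
}
```

The exception thrown by the execute() task also terminates its worker thread, and the pool replaces that thread. The default uncaught-exception handler prints this exception to standard error. The exception from the submit() task is visible only through the Future.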

When a thread pool is no longer needed, shut it down gracefully, for example in a destroy() method like the one below. In this code, poolExecutor is the pool being closed and AWAIT_TIMEOUT is an application-defined timeout in seconds:

```java
public void destroy() {
    try {
        // Stop accepting new tasks; tasks already submitted continue to run.
        poolExecutor.shutdown();
        if (!poolExecutor.awaitTermination(AWAIT_TIMEOUT, TimeUnit.SECONDS)) {
            // Timed out: discard queued tasks and interrupt running ones.
            poolExecutor.shutdownNow();
        }
    } catch (InterruptedException e) {
        // If the current thread is interrupted, cancel all tasks again.
        poolExecutor.shutdownNow();
        // Preserve the interrupt status.
        Thread.currentThread().interrupt();
    }
}
```

Call shutdown() first to start a graceful shutdown: the pool stops accepting new tasks, but tasks already submitted continue to run. Then call awaitTermination(). It blocks until one of three things happens: all tasks have completed, the timeout elapses, or the current thread is interrupted. It returns true if the pool terminated before the timeout and false otherwise. Estimate a reasonable timeout based on the business scenario.

If awaitTermination() returns false but you still need to release other resources after the pool is closed, consider calling shutdownNow(). It removes all tasks that have not started from the queue, returns them as a list, and interrupts every worker thread. However, shutdownNow() does not guarantee that running tasks stop. A running task stops only if it responds correctly to interruption.
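The following minimal sketch shows these semantics on a single-thread pool. It submits one long task that exits when interrupted, plus two queued tasks that never start. The class and field names are illustrative.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

class ShutdownDemo {
    boolean terminatedAfterShutdown;    // awaitTermination() result after shutdown()
    int neverStarted;                   // size of the list returned by shutdownNow()
    boolean taskSawInterrupt;           // whether the running task observed the interrupt
    boolean terminatedAfterShutdownNow; // awaitTermination() result after shutdownNow()

    static ShutdownDemo run() throws InterruptedException {
        ShutdownDemo d = new ShutdownDemo();
        ExecutorService pool = Executors.newFixedThreadPool(1);
        AtomicBoolean interrupted = new AtomicBoolean(false);
        // A long task that responds to interruption by exiting early.
        pool.execute(() -> {
            try {
                Thread.sleep(10_000);
            } catch (InterruptedException e) {
                interrupted.set(true);
            }
        });
        pool.execute(() -> { }); // queued behind the long task
        pool.execute(() -> { }); // queued behind the long task

        pool.shutdown(); // graceful: queued tasks would still run eventually
        d.terminatedAfterShutdown = pool.awaitTermination(100, TimeUnit.MILLISECONDS);
        List<Runnable> pending = pool.shutdownNow(); // drains the queue and interrupts workers
        d.neverStarted = pending.size();
        d.terminatedAfterShutdownNow = pool.awaitTermination(5, TimeUnit.SECONDS);
        d.taskSawInterrupt = interrupted.get();
        return d;
    }
}
```

The expected results are as follows:

- terminatedAfterShutdown is false, because the long task is still sleeping.
- neverStarted is 2.
- taskSawInterrupt and terminatedAfterShutdownNow are both true.

If the long task swallowed the interrupt and kept running, shutdownNow() could not stop it.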

When tasks run in a thread pool, the EagleEye context of the calling thread must be passed to the worker threads explicitly, as in the following code:

```java
/**
 * In the main thread, enable the EagleEye asynchronous mode and pass CTX to the multithreading tasks.
 **/
// To prevent the EagleEye trace from being lost, pass CTX to the tasks.
RpcContext_inner ctx = EagleEye.getRpcContext();
// Enable the asynchronous mode.
ctx.setAsyncMode(true);

/**
 * Set the EagleEye RPC context in the thread pool task thread.
 **/
private void runTask() {
    try {
        EagleEye.setRpcContext(ctx);
        // do something...
    } catch (Exception e) {
        log.error("requestError, params: {}", this.params, e);
    } finally {
        // With CallerRunsPolicy, a rejected task runs in the submitting (main) thread.
        // Clear the context only when the task runs in a pool thread.
        if (mainThread != Thread.currentThread()) {
            EagleEye.clearRpcContext();
        }
    }
}
```

The ThreadLocal class provides thread-local variables. Unlike ordinary variables, a thread-local variable gives each thread that accesses it (through get() or set()) its own independently initialized copy. Therefore, threads do not contend for ThreadLocal values, and no locking is needed.
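Because each thread has its own copy, a value set in the submitting thread is invisible to pool threads. This is why the EagleEye context above is captured in the caller and set inside the task.

The following is a generic sketch of that capture-and-restore pattern with a plain ThreadLocal. TRACE_ID and withContext() are hypothetical names, not EagleEye APIs. Instead of comparing against the main thread, the wrapper restores whatever value the running thread had before. This covers both pool threads and tasks run by CallerRunsPolicy.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ContextPropagation {
    // Hypothetical per-request context, standing in for something like a trace ID.
    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    /** Wraps a task so that it runs with the submitting thread's TRACE_ID value. */
    static Runnable withContext(Runnable task) {
        final String captured = TRACE_ID.get(); // read in the submitting thread
        return () -> {
            String previous = TRACE_ID.get();   // usually null on a pool thread
            TRACE_ID.set(captured);
            try {
                task.run();
            } finally {
                // Restore the previous state: pool threads end up with no value, while
                // the caller keeps its own value when CallerRunsPolicy runs the task there.
                if (previous == null) {
                    TRACE_ID.remove();
                } else {
                    TRACE_ID.set(previous);
                }
            }
        };
    }

    /** Returns what a wrapped task saw, then what a later unwrapped task on the same pool thread saw. */
    static String[] demo() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        String[] seen = new String[2];
        TRACE_ID.set("trace-42");
        try {
            pool.submit(withContext(() -> seen[0] = TRACE_ID.get())).get();
            pool.submit(() -> seen[1] = TRACE_ID.get()).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            TRACE_ID.remove();
            pool.shutdown();
        }
        return seen;
    }
}
```

In demo(), the wrapped task sees "trace-42", and the next task on the same pool thread sees null. No stale context is left behind.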

Three common methods for ThreadLocal are the get() method, the set() method, and the initialValue() method.

In Java, SimpleDateFormat is not thread-safe. There are three ways to use it safely: create a new SimpleDateFormat as a local variable each time, add a synchronization lock, or use ThreadLocal:

```java
/**
 * Use ThreadLocal to define a global SimpleDateFormat.
 */
private static final ThreadLocal<SimpleDateFormat> simpleDateFormatThreadLocal = new ThreadLocal<SimpleDateFormat>() {
    @Override
    protected SimpleDateFormat initialValue() {
        return new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
    }
};

// Usage
String dateString = simpleDateFormatThreadLocal.get().format(calendar.getTime());
```

Each Thread holds a ThreadLocal.ThreadLocalMap instance in its threadLocals field, and all ThreadLocal operations work on this map. The relevant JDK source code is listed below:

```java
/**
 * Returns the value in the current thread's copy of this
 * thread-local variable. If the variable has no value for the
 * current thread, it is first initialized to the value returned
 * by an invocation of the {@link #initialValue} method.
 *
 * @return the current thread's value of this thread-local
 */
public T get() {
    // 1. Obtain the current thread.
    Thread t = Thread.currentThread();
    // 2. Obtain the ThreadLocalMap of the current thread (t.threadLocals).
    ThreadLocalMap map = getMap(t);
    // 3. Check whether the map is null.
    if (map != null) {
        // 4. Look up the entry, using this ThreadLocal as the key.
        ThreadLocalMap.Entry e = map.getEntry(this);
        // 5. Check whether the entry is null.
        if (e != null) {
            // 6. Return Entry.value.
            @SuppressWarnings("unchecked")
            T result = (T)e.value;
            return result;
        }
    }
    // 7. Set the initial value if the map or the entry is null.
    return setInitialValue();
}

/**
 * Variant of set() to establish initialValue. Used instead
 * of set() in case user has overridden the set() method.
 *
 * @return the initial value
 */
private T setInitialValue() {
    // 1. Get the initial value: null by default, or the value returned by an overridden initialValue().
    T value = initialValue();
    // 2. Obtain the current thread.
    Thread t = Thread.currentThread();
    // 3. Obtain the ThreadLocalMap of the current thread.
    ThreadLocalMap map = getMap(t);
    if (map != null)
        // 4. If the map exists, store the value with this ThreadLocal as the key.
        map.set(this, value);
    else
        // 5. If the map is null, create a ThreadLocalMap object.
        createMap(t, value);
    return value;
}

/**
 * Create the map associated with a ThreadLocal. Overridden in
 * InheritableThreadLocal.
 *
 * @param t the current thread
 * @param firstValue value for the initial entry of the map
 */
void createMap(Thread t, T firstValue) {
    t.threadLocals = new ThreadLocalMap(this, firstValue);
}

/**
 * Construct a new map initially containing (firstKey, firstValue).
 * ThreadLocalMaps are constructed lazily, so we only create
 * one when we have at least one entry to put in it.
 */
ThreadLocalMap(ThreadLocal<?> firstKey, Object firstValue) {
    // 1. Initialize the entry array (INITIAL_CAPACITY is 16).
    table = new Entry[INITIAL_CAPACITY];
    // 2. Calculate the index of the entry.
    int i = firstKey.threadLocalHashCode & (INITIAL_CAPACITY - 1);
    // 3. Store the entry at that index.
    table[i] = new Entry(firstKey, firstValue);
    // 4. Set the number of entries.
    size = 1;
    // 5. Set the resize threshold: threshold = len * 2 / 3.
    setThreshold(INITIAL_CAPACITY);
}

/**
 * Set the resize threshold to maintain at worst a 2/3 load factor.
 */
private void setThreshold(int len) {
    threshold = len * 2 / 3;
}

/**
 * Sets the current thread's copy of this thread-local variable
 * to the specified value. Most subclasses will have no need to
 * override this method, relying solely on the {@link #initialValue}
 * method to set the values of thread-locals.
 *
 * @param value the value to be stored in the current thread's copy of
 * this thread-local.
 */
public void set(T value) {
    // 1. Obtain the current thread.
    Thread t = Thread.currentThread();
    // 2. Obtain the ThreadLocalMap of the current thread.
    ThreadLocalMap map = getMap(t);
    if (map != null)
        // 3. Set the value.
        map.set(this, value);
    else
        // 4. Create a ThreadLocalMap object if the map is null.
        createMap(t, value);
}
```

As the JDK source code shows, the Entry class in ThreadLocalMap extends WeakReference and uses the ThreadLocal as its key. This means that if a ThreadLocal object is referenced only by such an Entry and not strongly referenced by any other object, it will be reclaimed at the next GC.

```java
static class ThreadLocalMap {
    /**
     * The entries in this hash map extend WeakReference, using
     * its main ref field as the key (which is always a
     * ThreadLocal object). Note that null keys (i.e. entry.get()
     * == null) mean that the key is no longer referenced, so the
     * entry can be expunged from table. Such entries are referred to
     * as "stale entries" in the code that follows.
     */
    static class Entry extends WeakReference<ThreadLocal<?>> {
        /** The value associated with this ThreadLocal. */
        Object value;

        Entry(ThreadLocal<?> k, Object v) {
            super(k);
            value = v;
        }
    }

    // ...
}
```

EagleEye is an end-to-end tracing and monitoring system widely used within Alibaba Group. Information such as traceId, rpcId, and stress-test flags is stored in EagleEye's ThreadLocal variables and passed along HSF and Dubbo service calls. EagleEye initializes this ThreadLocal data in a Filter. The relevant code is listed below:

EagleEyeHttpRequest eagleEyeHttpRequest = this.convertHttpRequest(httpRequest);

// 1. Store the traceId and rpcId data in the ThreadLocal variable of EagleEye by initializing.

EagleEyeRequestTracer.startTrace(eagleEyeHttpRequest, false);

try {

chain.doFilter(httpRequest, httpResponse);

} finally {

// 2. Clear the ThreadLocal variable value.

EagleEyeRequestTracer.endTrace(this.convertHttpResponse(httpResponse));

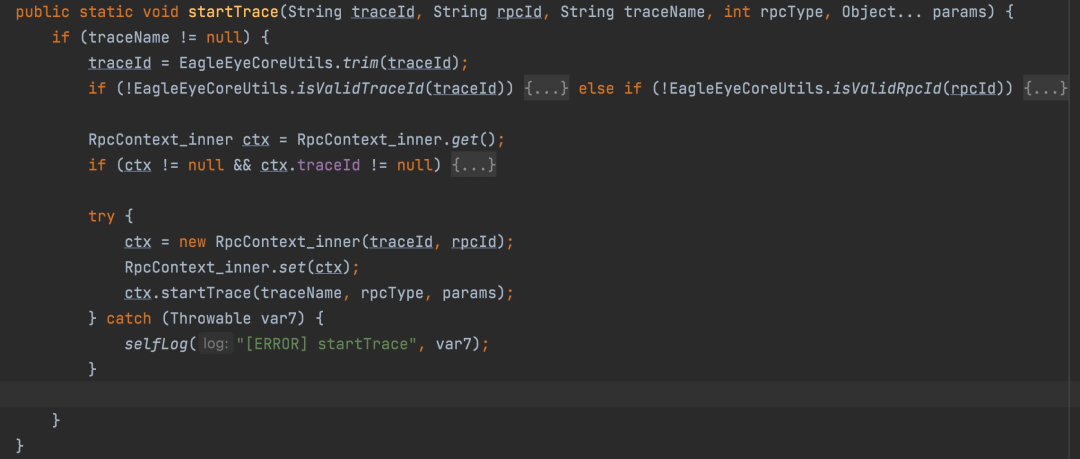

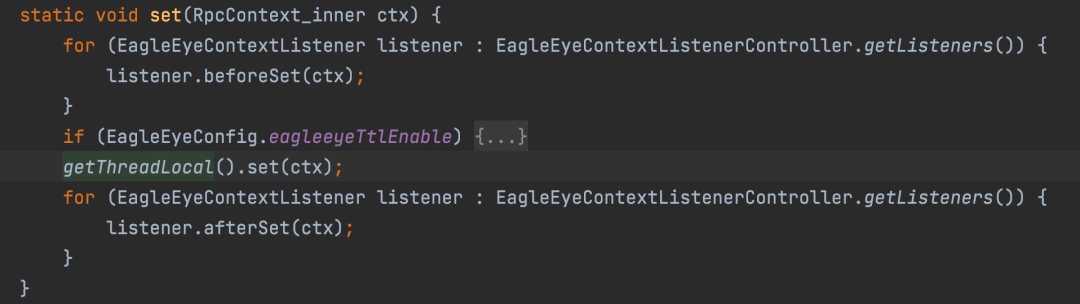

}In EagleEyeFilter, the EagleEyeRequestTracer.startTrace() method is used for initialization. After the pre-placed parameter conversion, the EagleEye context parameter is stored in ThreadLocal through the startTrace() overloading method. The relevant code is listed below:

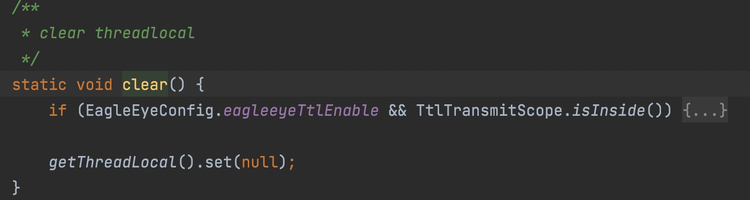

EagleEyeFilter uses the EagleEyeRequestTracer.endTrace() method to end the trace and clears the data in ThreadLocal through the clear() method. The relevant code is listed below:

In the original equity claim flow, the equity claim request could be initiated only after the user opened the first-level page in the app. The request passes through the Mobile Taobao Open Platform (MTOP) before reaching the server, and the server obtains the current session information through the MTOP SDK.

In the XX project, the equity claim flow was upgraded: the server now initiates the equity claim itself while it processes the first-level page request. Specifically, when handling that request, the server calls an HSF or Dubbo interface to claim the equity. The RPC call therefore has to carry the user's current session information. The service provider extracts this information and injects it into the MTOP context, so that information such as the session ID can be obtained through the MTOP SDK. Improper use of ThreadLocal in this implementation caused the following problems:

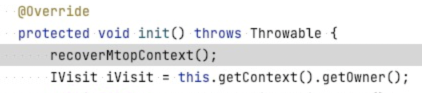

The equity claim service is built on an efficient, thread-safe dependency injection framework. Business logic modules based on the framework are usually abstracted as xxxModule classes, and the modules form a mesh of dependencies. The framework automatically calls each module's init() method in dependency order: the init() methods of a module's dependencies run before its own.

In the application, the main entry of the equity claim interface is the CommonXXApplyModule class, which depends on XXSessionModule. When a request arrives, the init() methods are called in dependency order, so the init() method of XXSessionModule runs first. However, the developer expected to restore the MTOP context by calling the recoverMtopContext() method in the init() method of CommonXXApplyModule. Because recoverMtopContext() is called too late, XXSessionModule cannot obtain the correct session ID and other information, and the equity claim fails.
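The ordering problem can be reproduced with a small, hypothetical module framework. AppModule, SessionModule, ApplyModule, initAll(), and SESSION_ID are illustrative stand-ins for the real framework, XXSessionModule, CommonXXApplyModule, and the MTOP context; the sketch only assumes that dependencies are initialized depth-first before the module that needs them:

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: every name here is an illustrative stand-in, not the
// application's real framework classes or the MTOP SDK.
final class LateRestoreDemo {
    // Stand-in for the MTOP context that the SDK reads from a ThreadLocal.
    static final ThreadLocal<String> SESSION_ID = new ThreadLocal<>();

    interface AppModule {
        List<AppModule> dependencies();

        void init();
    }

    // Reads the session during init(), like XXSessionModule reading it through the SDK.
    static final class SessionModule implements AppModule {
        String observedSessionId;

        public List<AppModule> dependencies() {
            return List.of();
        }

        public void init() {
            observedSessionId = SESSION_ID.get();
        }
    }

    // Entry module that depends on SessionModule, like CommonXXApplyModule.
    static final class ApplyModule implements AppModule {
        private final SessionModule session;
        private final String incomingSessionId;

        ApplyModule(SessionModule session, String incomingSessionId) {
            this.session = session;
            this.incomingSessionId = incomingSessionId;
        }

        public List<AppModule> dependencies() {
            return List.of(session);
        }

        public void init() {
            // Too late: SessionModule.init() has already run and read an empty slot.
            SESSION_ID.set(incomingSessionId);
        }
    }

    // Depth-first initialization: dependencies first, each module at most once.
    static void initAll(AppModule root) {
        visit(root, new HashSet<>());
    }

    private static void visit(AppModule module, Set<AppModule> initialized) {
        if (!initialized.add(module)) {
            return;
        }
        for (AppModule dep : module.dependencies()) {
            visit(dep, initialized);
        }
        module.init();
    }

    public static void main(String[] args) {
        SessionModule session = new SessionModule();
        initAll(new ApplyModule(session, "sid-42"));
        System.out.println("SessionModule saw: " + session.observedSessionId); // prints "SessionModule saw: null"
        SESSION_ID.remove(); // clean up the value ApplyModule left on this thread
    }
}
```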

When the equity claim service processes a request on a thread that has already handled an earlier claim request, and the ThreadLocal value from that request was not cleared, XXSessionModule obtains the previous request's session information through the MTOP SDK, which results in dirty data.
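The dirty-data case can be sketched with a single-thread pool, which guarantees that both requests run on the same worker thread. USER and handleWithoutCleanup() are hypothetical; the handler sets the value only when a request carries one and never calls remove():

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Illustrative sketch: USER is a hypothetical ThreadLocal standing in for the session
// information the SDK keeps per thread; it is deliberately never removed here.
final class DirtyReadDemo {
    static final ThreadLocal<String> USER = new ThreadLocal<>();

    // A handler that sets the value only when the request carries one and never calls remove().
    static String handleWithoutCleanup(String userOrNull) {
        if (userOrNull != null) {
            USER.set(userOrNull);
        }
        return USER.get();
    }

    // Runs two requests on a single-thread pool, so both reuse the same worker thread.
    static String secondRequestSees() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(() -> handleWithoutCleanup("user-1")).get();
            // The second request carries no session, yet it reads user-1's leftover value.
            return pool.submit(() -> handleWithoutCleanup(null)).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(secondRequestSees()); // prints "user-1"
    }
}
```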

The MTOP context information is injected through the recoverMtopContext() method at the entry of the dependency injection framework, AbstractGate#visit (or in XXSessionModule), and the ThreadLocal value of the current request is cleaned up in the finally block of the entry method.
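A minimal sketch of this fix, assuming an entry method shaped like AbstractGate#visit: the context is restored before any module runs and removed in finally. GateFixDemo, visit(), sessionModuleInit(), and SESSION_ID are illustrative names, not the real framework or the MTOP SDK API:

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Minimal sketch of the fix. All names are hypothetical stand-ins for the framework
// entry (AbstractGate#visit), XXSessionModule, and the MTOP context.
final class GateFixDemo {
    // Stand-in for the MTOP context that the SDK reads from a ThreadLocal.
    static final ThreadLocal<String> SESSION_ID = new ThreadLocal<>();

    // Stand-in for XXSessionModule.init(): it reads whatever the current thread holds.
    static String sessionModuleInit() {
        return SESSION_ID.get();
    }

    // Entry point: restore the context before any module initializes, then always clean up.
    static String visit(String incomingSessionId) {
        SESSION_ID.set(incomingSessionId); // plays the role of recoverMtopContext()
        try {
            return sessionModuleInit();    // dependency-ordered init now sees the session
        } finally {
            SESSION_ID.remove();           // the next request on this thread starts clean
        }
    }

    // Runs one request, then inspects the same pooled thread: returns {seen, leftover}.
    static String[] runOnOneThread(String sessionId) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            String seen = pool.submit(() -> visit(sessionId)).get();
            String leftover = pool.submit(() -> SESSION_ID.get()).get();
            return new String[] {seen, leftover};
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        String[] result = runOnOneThread("sid-1");
        System.out.println("seen=" + result[0] + ", leftover=" + result[1]); // prints "seen=sid-1, leftover=null"
    }
}
```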

If the stored value is a strongly referenced object, the reference chain of a ThreadLocal value is Thread → ThreadLocal.ThreadLocalMap → Entry[] → Entry → key (the ThreadLocal object) and value. In this scenario, as long as the thread keeps running (as pooled threads do), the value and every object it strongly references cannot be collected by the garbage collector unless the remove() method is called.

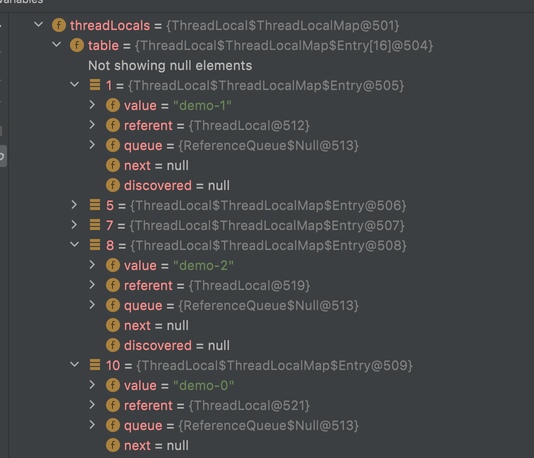
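The weak-key/strong-value layout can be illustrated with a simplified model. In the JDK, ThreadLocal.ThreadLocalMap.Entry extends WeakReference<ThreadLocal<?>> and keeps the value in a strong field; the Entry class below only mimics that shape (it is not the JDK source), and WeakReference.clear() stands in for garbage collection so the result is deterministic:

```java
import java.lang.ref.WeakReference;

// Simplified model of ThreadLocal.ThreadLocalMap.Entry for illustration only:
// like the real entry, the key is held weakly and the value strongly.
final class StaleEntryDemo {

    static final class Entry extends WeakReference<Object> {
        // Strong reference: stays reachable as long as the thread and its map are alive.
        Object value;

        Entry(Object key, Object value) {
            super(key);
            this.value = value;
        }
    }

    // Returns true when the entry is "stale": the key is gone but the value is still held.
    static boolean keyClearedButValueRetained() {
        Entry entry = new Entry(new Object(), new byte[1024 * 1024]);
        // clear() deterministically does what GC does to the weak key once the
        // ThreadLocal object is no longer strongly referenced anywhere else.
        entry.clear();
        return entry.get() == null && entry.value != null;
    }

    public static void main(String[] args) {
        System.out.println("stale entry retains value: " + keyClearedButValueRetained()); // prints "stale entry retains value: true"
    }
}
```

The real ThreadLocalMap does expunge some stale entries opportunistically during later get(), set(), and remove() calls, but nothing guarantees that this happens promptly, which is why an explicit remove() is still needed.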

If the ThreadLocal field is declared static, each thread holds only one value for it. Otherwise, the ThreadLocal semantics become perThread-perInstance, which makes memory leaks more likely, as shown in the following example:

```java
import java.util.LinkedList;
import java.util.List;

public class ThreadLocalTest {

    public static class ThreadLocalDemo {

        // Non-static field: every ThreadLocalDemo instance creates its own ThreadLocal.
        private ThreadLocal<String> threadLocalHolder = new ThreadLocal<>();

        public void setValue(String value) {
            threadLocalHolder.set(value);
        }

        public String getValue() {
            return threadLocalHolder.get();
        }
    }

    public static void main(String[] args) {
        int count = 3;
        List<ThreadLocalDemo> list = new LinkedList<>();
        for (int i = 0; i < count; i++) {
            ThreadLocalDemo demo = new ThreadLocalDemo();
            demo.setValue("demo-" + i);
            list.add(demo);
        }
        // Set a breakpoint here and inspect the current thread's threadLocals field.
        System.out.println();
    }
}
```

When you debug the example above with a breakpoint on the System.out.println() line of the main method, you can see three ThreadLocal entries in the threadLocals field of the current thread. In engineering practice, we usually expect each thread to hold only one value for a given ThreadLocal. Therefore, if the static keyword is not used, the semantics differ from what is expected, and memory leaks can occur.

If ThreadLocal values are not cleaned up, the consequences are as follows:

1) Memory Leakage: In ThreadLocalMap, the key is a weak reference while the value is a strong reference. When the ThreadLocal object is no longer strongly referenced from outside, the weakly referenced key is collected during GC but the value is not, so entries with a null key remain in the map. If the thread keeps running, the values in these entries may never be collected, which may lead to memory leakage.

2) Dirty Data: Because threads are reused, business data saved in ThreadLocal while handling user 1's request remains there if it is not cleaned up, and when user 2's request later runs on the same thread, it may read user 1's data.

It is recommended to call remove() in a try...finally block to clean up.
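Calling remove() in a finally block inside each task is the primary fix. As an optional safety net (an assumption of this sketch, not something the article prescribes), a ThreadPoolExecutor subclass can override the JDK's afterExecute(Runnable, Throwable) hook to remove a known list of ThreadLocals after every task. CleaningPoolDemo, CleaningExecutor, USER, and leftoverAfterForgetfulTask() are illustrative names:

```java
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Sketch of an optional pool-level safety net: after every task, the worker thread
// removes a known list of ThreadLocals. It complements, not replaces, try...finally.
final class CleaningPoolDemo {
    static final ThreadLocal<String> USER = new ThreadLocal<>();

    static final class CleaningExecutor extends ThreadPoolExecutor {
        private final List<ThreadLocal<?>> locals;

        CleaningExecutor(int threads, List<ThreadLocal<?>> locals) {
            // Fixed-size pool with a bounded queue.
            super(threads, threads, 0L, TimeUnit.MILLISECONDS, new ArrayBlockingQueue<>(100));
            this.locals = locals;
        }

        // Invoked on the worker thread after each task finishes, whether or not it threw.
        @Override
        protected void afterExecute(Runnable r, Throwable t) {
            super.afterExecute(r, t);
            for (ThreadLocal<?> local : locals) {
                local.remove();
            }
        }
    }

    // A task "forgets" to call remove(); the next task on the same worker still starts clean.
    static String leftoverAfterForgetfulTask() {
        CleaningExecutor pool = new CleaningExecutor(1, List.of(USER));
        try {
            pool.submit(() -> USER.set("user-1")).get();
            return pool.submit(() -> USER.get()).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(leftoverAfterForgetfulTask()); // prints "null"
    }
}
```

The hook only covers the ThreadLocals the pool is told about, so library-owned ThreadLocals still depend on their owners calling remove().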

When we use ThreadLocal, we usually expect perThread semantics. If the field is not declared static, the semantics become perThread-perInstance. In the thread pool scenario, the number of thread-related values can then reach M * N (where M is the number of threads and N is the number of instances of the corresponding class), which easily causes memory leakage: https://errorprone.info/bugpattern/ThreadLocalUsage
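A corrected variant of the earlier ThreadLocalDemo declares the field static final. In this sketch (StaticThreadLocalDemo and its helper methods are illustrative), all instances share one ThreadLocal, so each thread holds a single value while other threads stay isolated. Note that this also means instances no longer keep separate values on the same thread:

```java
import java.util.concurrent.atomic.AtomicReference;

// Sketch of the static-field variant; helper method names are illustrative.
final class StaticThreadLocalDemo {

    static final class ThreadLocalDemo {
        // static final: one ThreadLocal shared by all instances, so each thread holds one value.
        private static final ThreadLocal<String> THREAD_LOCAL_HOLDER = new ThreadLocal<>();

        void setValue(String value) {
            THREAD_LOCAL_HOLDER.set(value);
        }

        String getValue() {
            return THREAD_LOCAL_HOLDER.get();
        }

        void clear() {
            THREAD_LOCAL_HOLDER.remove();
        }
    }

    // Three instances write on the current thread; they share one slot, so the last write wins.
    static String lastValueSeen() {
        for (int i = 0; i < 3; i++) {
            new ThreadLocalDemo().setValue("demo-" + i);
        }
        ThreadLocalDemo reader = new ThreadLocalDemo();
        try {
            return reader.getValue();
        } finally {
            reader.clear();
        }
    }

    // Another thread has its own empty slot and never sees this thread's value.
    static String valueSeenByOtherThread() {
        ThreadLocalDemo demo = new ThreadLocalDemo();
        demo.setValue("main-value");
        AtomicReference<String> seen = new AtomicReference<>("unset");
        Thread other = new Thread(() -> seen.set(demo.getValue()));
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            demo.clear();
        }
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println(lastValueSeen());          // prints "demo-2"
        System.out.println(valueSeenByOtherThread()); // prints "null"
    }
}
```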

Use ThreadLocal.withInitial(Supplier<? extends S> supplier) carefully when creating ThreadLocal objects. If the Supplier returns the same shared object to every thread, the values are not isolated at all, for example:

```java
// Counterexample: every thread receives the same shared object obj, so nothing is isolated.
private static ThreadLocal<Obj> threadLocal = ThreadLocal.withInitial(() -> obj);
// Isolated alternative: create a new object per thread (assuming Obj has a no-arg constructor).
private static final ThreadLocal<Obj> isolatedThreadLocal = ThreadLocal.withInitial(Obj::new);
```

Thread pools and thread variables are widely used in Java engineering practice. Because improper use of thread pools and thread variables often causes production incidents, using them correctly is a basic skill that every developer must master. This article briefly introduced the principles and usage practices of thread pools and thread variables. Developers can combine these best practices with their actual application scenarios to use thread pools and thread variables correctly and build stable and efficient Java application services.
