By Wang Xianghu, from Apache Hudi Community

Apache Hudi is an open-source data lake framework developed by Uber. It entered the Apache Incubator in January 2019 and graduated as a top-level Apache project in May 2020. It is currently one of the most popular data lake frameworks.

Since its inception, Hudi has used Spark as its data processing engine. Users who wanted Hudi as their data lake framework had to introduce Spark into their platform's technology stack. A few years ago, Spark was the common choice of big data processing engine: it can perform batch processing, simulate streaming with micro-batches, and unify stream and batch processing. In recent years, however, Flink, another big data processing engine, has gradually gained attention and taken a share of the computing engine market, so Spark is no longer the only dominant engine. Across big data communities, forums, and other platforms, requests for Flink support in Hudi have emerged and spread. Enabling Hudi to support Flink is therefore valuable, and decoupling Hudi from Spark is the prerequisite for integrating Flink.

Across the big data field, mature, active, and vital frameworks are elegantly designed and integrate easily with other frameworks. They rely on each other's capabilities while keeping their own strengths. Decoupling Hudi from Spark turns Hudi into an engine-independent data lake framework, which opens up more possibilities for integrating Hudi with other components and helps Hudi fit better into the big data ecosystem.

The Spark API is used in Hudi as commonly as List is used in everyday development. Spark RDD is the main data structure everywhere, from reading data at the source to writing data to the table, and even common utility classes are implemented with the Spark API. In effect, Hudi is a general-purpose data lake framework implemented on Spark and deeply bound to it.

Flink is the first engine to be integrated after this decoupling, and its core abstraction is quite different from that of Spark. Spark treats data as bounded, and its core abstraction is a finite data set. Flink treats data as a stream in essence, and its core abstraction, DataStream, provides the operations on that data. In addition, Hudi operates on multiple Resilient Distributed Datasets (RDDs) at the same time in several places, and it combines the processing result of one RDD with another RDD for joint processing. These differences in abstraction, together with the reuse of intermediate results, make it difficult to define a single API in the abstraction layer that can operate on both RDD and DataStream.

In theory, Hudi uses Spark as its computing engine for Spark's distributed computing capability and the rich operators of RDD. Apart from distributed computing, RDD serves in Hudi mainly as a data structure abstraction, and it is essentially a bounded data set. It is therefore feasible to replace RDD with List, although performance may drop. To preserve the performance and stability of the Spark version of Hudi, we keep the bounded data set as the basic unit of operation and leave the API of the main operations unchanged. RDD is extracted into a generic type: the Spark engine implementation still uses RDD, while other engines use List or another bounded data set as appropriate.

Decoupling Principles:

1) Unify Generics: The input records JavaRDD<HoodieRecord>, the record keys JavaRDD<HoodieKey>, and the write results JavaRDD<WriteStatus> used in the Spark API are replaced by the unified generics I, K, and O.

2) Remove Spark: No API in the abstraction layer may depend on Spark. Operations that are difficult to implement in the abstraction layer are declared as abstract methods and implemented in Spark-specific subclasses.

For example, the JavaSparkContext#map() method is used in multiple places in Hudi. Removing Spark means hiding JavaSparkContext, so we introduce the HoodieEngineContext#map() method. It hides how the map operation is executed, which keeps Spark out of the abstraction layer.

3) Keep changes to the abstraction layer to a minimum to preserve the original functions and performance of Hudi.

4) Replace JavaSparkContext with the abstract class HoodieEngineContext to provide the runtime environment context.

In essence, Hudi write operations are batch processing, and the continuous mode of DeltaStreamer is implemented by running batches in a loop. To keep the API unified, the Flink integration accumulates a batch of data, processes it, and then commits it. Here, we use List to hold the accumulated data for Flink.

A time window is the obvious way to collect a batch. However, when a window receives no input, it produces no output, so the sink cannot tell whether every part of a batch has been processed. Instead, we use the Flink checkpoint mechanism to accumulate batches: the data between two consecutive barriers forms one batch. When a subtask has no data, it emits mock result data. Once every subtask has delivered its result data, the sink can consider the batch processed and execute the commit operation.
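The checkpoint-based batching can be sketched in plain Java without any Flink dependency. This is only an illustration under stated assumptions: the names `BatchBuffer`, `onRecord`, `onBarrier`, and `WriteResult` are hypothetical and are not part of Hudi or Flink. The sketch shows the two points made above: records that arrive between two barriers form one batch, and a subtask that saw no records still emits an empty placeholder result.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical result type: what one subtask reports for one batch. */
final class WriteResult {
    final int subtaskId;
    final int recordCount; // 0 marks the empty placeholder result

    WriteResult(int subtaskId, int recordCount) {
        this.subtaskId = subtaskId;
        this.recordCount = recordCount;
    }

    boolean isEmpty() {
        return recordCount == 0;
    }
}

/** Hypothetical per-subtask buffer: everything between two barriers is one batch. */
final class BatchBuffer {
    private final int subtaskId;
    private final List<String> buffer = new ArrayList<>();

    BatchBuffer(int subtaskId) {
        this.subtaskId = subtaskId;
    }

    /** Called for every incoming record; the record is only buffered here. */
    void onRecord(String record) {
        buffer.add(record);
    }

    /** Called when a barrier arrives: close the batch and always emit a result. */
    WriteResult onBarrier() {
        int written = buffer.size(); // a real writer would perform the write here
        buffer.clear();
        return new WriteResult(subtaskId, written);
    }
}

final class CheckpointBatchDemo {
    public static void main(String[] args) {
        BatchBuffer busy = new BatchBuffer(0);
        BatchBuffer idle = new BatchBuffer(1);
        busy.onRecord("a");
        busy.onRecord("b");
        System.out.println(busy.onBarrier().recordCount); // prints 2
        System.out.println(idle.onBarrier().isEmpty());   // prints true
    }
}
```

Because every subtask reports at every barrier, the sink can decide that a batch is complete by counting results instead of waiting for data that may never arrive.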

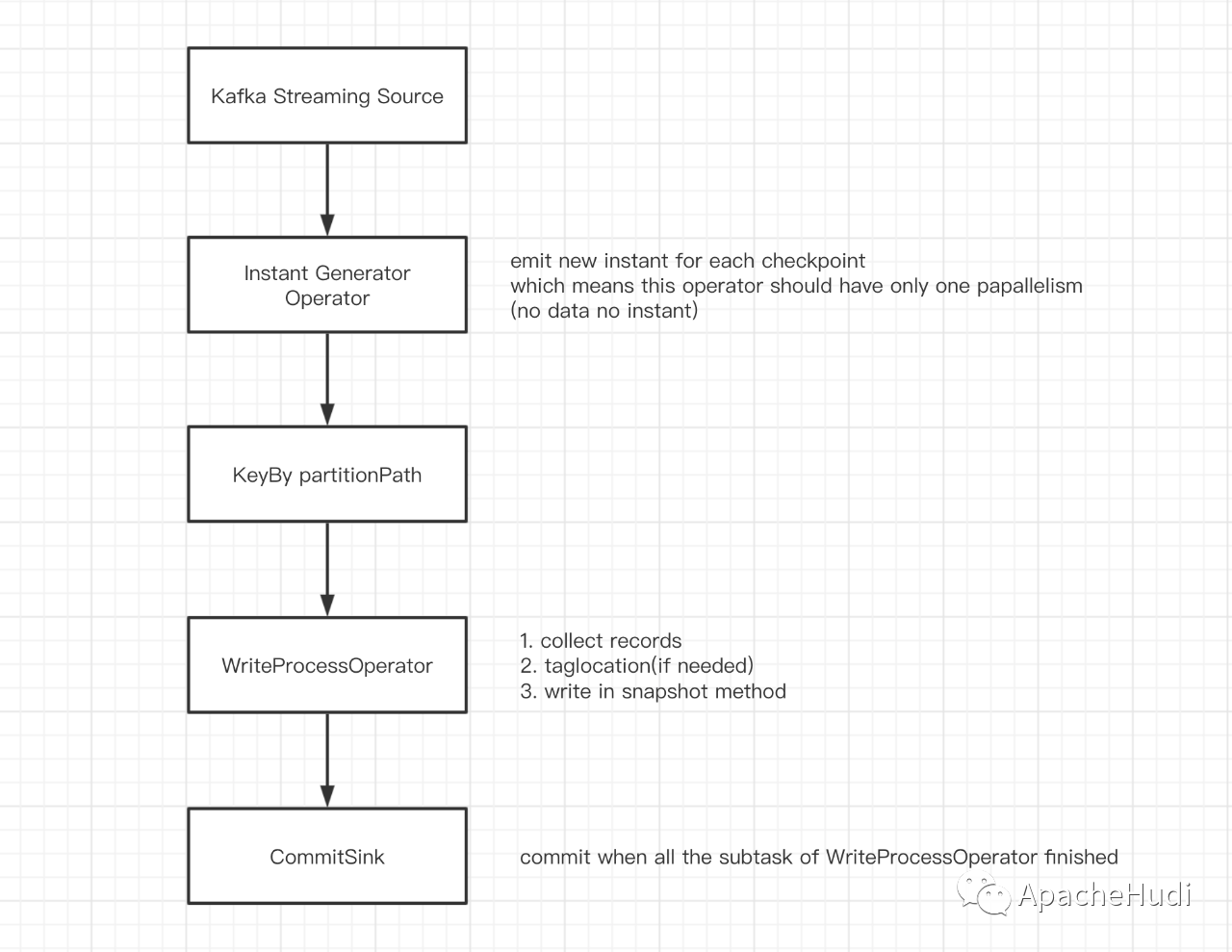

The operators in the Directed Acyclic Graph (DAG) are described below:

InstantGeneratorOperator generates a globally unique instant. When the last instant is not completed, or there is no data in the current batch, it does not create a new instant.

KeyBy partitionPath partitions the data by partitionPath so that multiple subtasks do not write to the same partition.

WriteProcessOperator performs the write operations. If the current partition has no data, it sends empty result data downstream.

CommitSink receives the computing results from the upstream tasks. When it has received as many results as the upstream parallelism, it considers all upstream subtasks complete and executes the commit operation.

Note: InstantGeneratorOperator and WriteProcessOperator are custom Flink operators. The former blocks internally while it checks the status of the last instant, so that only one instant is in the inflight or requested state at any time. WriteProcessOperator performs the write operations, which are triggered at the checkpoint.
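The counting rule used by the sink can be sketched as follows. This is a simplified illustration in plain Java, not the Hudi implementation: `CountingCommitSink`, `onResult`, and `committedTotals` are hypothetical names, and a result is reduced to the number of records a subtask wrote (0 for an empty result).

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sink: completes a batch once every upstream subtask has reported. */
final class CountingCommitSink {
    private final int upstreamParallelism;
    private final List<Integer> pending = new ArrayList<>();
    private final List<Integer> committedTotals = new ArrayList<>();

    CountingCommitSink(int upstreamParallelism) {
        this.upstreamParallelism = upstreamParallelism;
    }

    /**
     * Receives the record count reported by one subtask (0 stands for an empty result).
     * Returns true when this result completed the batch and a commit was recorded.
     */
    boolean onResult(int recordsWritten) {
        pending.add(recordsWritten);
        if (pending.size() < upstreamParallelism) {
            return false; // still waiting for the other subtasks
        }
        int total = 0;
        for (int count : pending) {
            total += count;
        }
        committedTotals.add(total); // a real sink would execute the commit here
        pending.clear();
        return true;
    }

    /** Returns a copy of the totals recorded for each completed batch. */
    List<Integer> committedTotals() {
        return new ArrayList<>(committedTotals);
    }
}

final class CommitSinkDemo {
    public static void main(String[] args) {
        CountingCommitSink sink = new CountingCommitSink(3);
        System.out.println(sink.onResult(5));       // prints false
        System.out.println(sink.onResult(0));       // prints false (an empty result still counts)
        System.out.println(sink.onResult(7));       // prints true
        System.out.println(sink.committedTotals()); // prints [12]
    }
}
```

The empty result from an idle subtask is what makes the count reach the parallelism, which is why the write operator sends one even when its partition has no data.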

HoodieTable

```java
/**
 * Abstract implementation of a HoodieTable.
 *
 * @param <T> Sub type of HoodieRecordPayload
 * @param <I> Type of inputs
 * @param <K> Type of keys
 * @param <O> Type of outputs
 */
public abstract class HoodieTable<T extends HoodieRecordPayload, I, K, O> implements Serializable {

  protected final HoodieWriteConfig config;
  protected final HoodieTableMetaClient metaClient;
  protected final HoodieIndex<T, I, K, O> index;

  public abstract HoodieWriteMetadata<O> upsert(HoodieEngineContext context, String instantTime,
      I records);

  public abstract HoodieWriteMetadata<O> insert(HoodieEngineContext context, String instantTime,
      I records);

  public abstract HoodieWriteMetadata<O> bulkInsert(HoodieEngineContext context, String instantTime,
      I records, Option<BulkInsertPartitioner<I>> bulkInsertPartitioner);

  ......
}
```

HoodieTable is one of the core abstractions of Hudi. It defines the insert, upsert, bulkInsert, and other operations that a table supports. Taking upsert as an example, the input data changes from JavaRDD inputRdds to I records, and the runtime context changes from JavaSparkContext jsc to HoodieEngineContext context.

From the class Javadoc, we can see that T, I, K, and O represent the payload type, input data type, key type, and output data type of Hudi operations, respectively. These generics run through the entire abstraction layer.
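To make the role of I, K, and O more concrete, here is a minimal sketch of how an engine could bind them to List types. It is an assumption-laden illustration, not Hudi code: `SimpleTable` and `ListBackedTable` are hypothetical, heavily simplified stand-ins, and the returned strings are placeholders for real write statuses.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified, hypothetical stand-in for an engine-independent table abstraction. */
abstract class SimpleTable<T, I, K, O> {
    /** Writes the input records and returns the engine-specific output. */
    abstract O upsert(String instantTime, I records);

    /** Deletes by key and returns the engine-specific output. */
    abstract O delete(String instantTime, K keys);
}

/** Hypothetical List-based binding: I = List<T>, K = List<String>, O = List<String>. */
final class ListBackedTable<T> extends SimpleTable<T, List<T>, List<String>, List<String>> {
    @Override
    List<String> upsert(String instantTime, List<T> records) {
        List<String> statuses = new ArrayList<>();
        for (T record : records) {
            statuses.add(instantTime + ":upsert:" + record); // placeholder for a write status
        }
        return statuses;
    }

    @Override
    List<String> delete(String instantTime, List<String> keys) {
        List<String> statuses = new ArrayList<>();
        for (String key : keys) {
            statuses.add(instantTime + ":delete:" + key); // placeholder for a write status
        }
        return statuses;
    }
}
```

A Spark binding would put RDD types in the same three positions, so the abstract class itself never has to mention either engine.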

HoodieEngineContext

```java
/**
 * Base class contains the context information needed by the engine at runtime. It will be extended by different
 * engine implementation if needed.
 */
public abstract class HoodieEngineContext {

  public abstract <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func, int parallelism);

  public abstract <I, O> List<O> flatMap(List<I> data, SerializableFunction<I, Stream<O>> func, int parallelism);

  public abstract <I> void foreach(List<I> data, SerializableConsumer<I> consumer, int parallelism);

  ......
}
```

HoodieEngineContext plays the role of JavaSparkContext. It provides all the information that JavaSparkContext can provide and also encapsulates map, flatMap, foreach, and many other methods, hiding the details of JavaSparkContext#map(), JavaSparkContext#flatMap(), JavaSparkContext#foreach(), and other implementations.

Let's take the map method as an example. The Spark implementation in HoodieSparkEngineContext is listed below:

```java
@Override
public <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func, int parallelism) {
  return javaSparkContext.parallelize(data, parallelism).map(func::apply).collect();
}
```

In an engine that operates on List, the implementation must pay attention to thread safety and use parallel() with caution. The implementation is listed below:

```java
@Override
public <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func, int parallelism) {
  return data.stream().parallel().map(func::apply).collect(Collectors.toList());
}
```

Note: func.apply declares a checked exception, which Stream#map does not accept, so in practice the SerializableFunction func is wrapped in a function that handles the exception.
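One way to do the wrapping mentioned in the note is sketched below. The helper name `wrap` and the class `ThrowingWrapperDemo` are hypothetical, and rethrowing as RuntimeException is an assumption made for the example. The SerializableFunction interface is repeated only so that the sketch compiles on its own.

```java
import java.io.Serializable;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

@FunctionalInterface
interface SerializableFunction<I, O> extends Serializable {
    O apply(I v1) throws Exception;
}

final class ThrowingWrapperDemo {
    /** Hypothetical helper: adapts a throwing function to java.util.function.Function. */
    static <I, O> Function<I, O> wrap(SerializableFunction<I, O> func) {
        return value -> {
            try {
                return func.apply(value);
            } catch (Exception e) {
                // Assumption for this sketch: surface checked exceptions as unchecked ones.
                throw new RuntimeException(e);
            }
        };
    }

    /** List-based map with the wrapper applied, so it compiles with Stream#map. */
    static <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func) {
        return data.stream().parallel().map(wrap(func)).collect(Collectors.toList());
    }

    /** Example function that declares a checked exception. */
    static Integer parsePositive(String s) throws Exception {
        int value = Integer.parseInt(s);
        if (value <= 0) {
            throw new Exception("not positive: " + s);
        }
        return value;
    }

    public static void main(String[] args) {
        // prints [1, 2, 3]
        System.out.println(map(Arrays.asList("1", "2", "3"), ThrowingWrapperDemo::parsePositive));
    }
}
```

With the wrapper in place, callers can pass functions that throw checked exceptions without writing try-catch blocks inside each lambda.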

SerializableFunction is defined as follows:

```java
@FunctionalInterface
public interface SerializableFunction<I, O> extends Serializable {
  O apply(I v1) throws Exception;
}
```

This interface is a variant of java.util.function.Function. Unlike java.util.function.Function, SerializableFunction is serializable and can throw exceptions. It is introduced because functions passed to JavaSparkContext#map() must be serializable, and because Hudi logic needs to throw exceptions in many places, where try-catch blocks inside lambda expressions would be bloated and inelegant.
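Both properties can be checked with standard Java serialization. The sketch below is illustrative only: `SerializableFunctionDemo`, `roundTrip`, and `lengthOf` are hypothetical names, and the interface is repeated so that the example is self-contained. It writes a lambda to bytes, reads it back, and then calls it, which is roughly what a distributed engine has to do before running a function on a worker.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

@FunctionalInterface
interface SerializableFunction<I, O> extends Serializable {
    O apply(I v1) throws Exception;
}

final class SerializableFunctionDemo {
    /** Serializes the function to bytes and reads it back. */
    @SuppressWarnings("unchecked")
    static <I, O> SerializableFunction<I, O> roundTrip(SerializableFunction<I, O> func)
            throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(func);
        }
        try (ObjectInputStream in =
                 new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (SerializableFunction<I, O>) in.readObject();
        }
    }

    /** A lambda that throws a checked exception without any try-catch in its body. */
    static SerializableFunction<String, Integer> lengthOf() {
        return s -> {
            if (s == null) {
                throw new IOException("null input");
            }
            return s.length();
        };
    }

    public static void main(String[] args) throws Exception {
        System.out.println(roundTrip(lengthOf()).apply("hudi")); // prints 4
    }
}
```

A plain java.util.function.Function lambda would fail at writeObject with NotSerializableException, and its body could not throw IOException without a try-catch.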

In April 2020, Yang Hua (@vinoyang) and Wang Xianghu (@wangxianghu) from T3 Travel designed and finalized the decoupling scheme together with Li Shaofeng (@leesf) from Alibaba and other partners.

In April 2020, Wang Xianghu completed the coding implementation internally and conducted preliminary verification, concluding that the scheme was feasible.

In July 2020, Wang Xianghu introduced the design and the Spark version built on the new abstraction to the community (HUDI-1089).

On September 26, 2020, SF Technology publicly released a PR at the Apache Flink Meetup in Shenzhen. The PR was based on an enhanced version of the T3 internal branch, and it made SF the first enterprise in the industry to use Flink to write data online to Hudi.

On October 2, 2020, HUDI-1089 was merged into the main Hudi branch, marking the completion of Hudi-Spark decoupling.

1) Promotion on the Integration of Hudi and Flink

We want to introduce the integration of Flink and Hudi to the community as soon as possible. Only Kafka data sources may be supported initially.

2) Performance Optimization

To ensure the stability and performance of Hudi-Spark, this decoupling did not consider the potential performance issues on the Flink side.

3) Third-Party Package Development of Flink-Connector-Hudi

Package the Hudi-Flink binding as a third-party connector. Users can then read from any data source in a Flink application through their own code and use this package to write the data to Hudi.
