By Geng Jiangtao, an Engineer in the Intelligent Customer Services Team at Alibaba Cloud

The following content is based on the video by Geng Jiangtao and the accompanying PowerPoint slides.

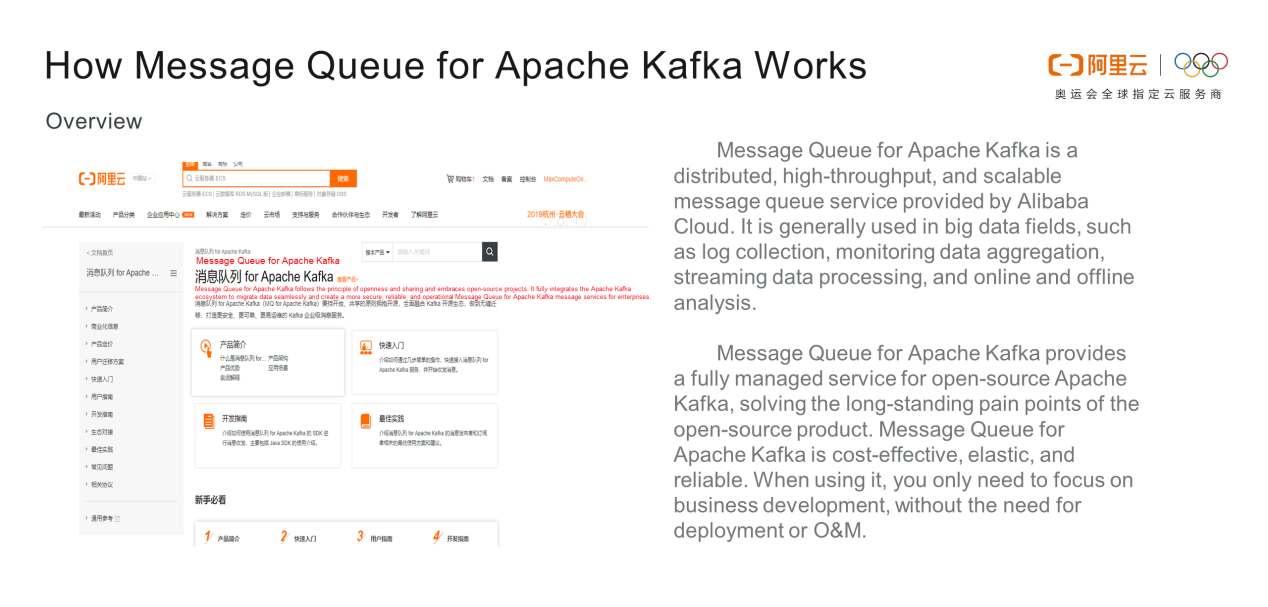

For daily operations, many enterprises use Message Queue for Apache Kafka to collect the behavior logs and business data generated by apps or websites and then process them offline or in real time. Generally, the logs and data are delivered to MaxCompute for modeling and business processing to obtain user features, sales rankings, and regional order distributions, and the results are displayed in data reports.

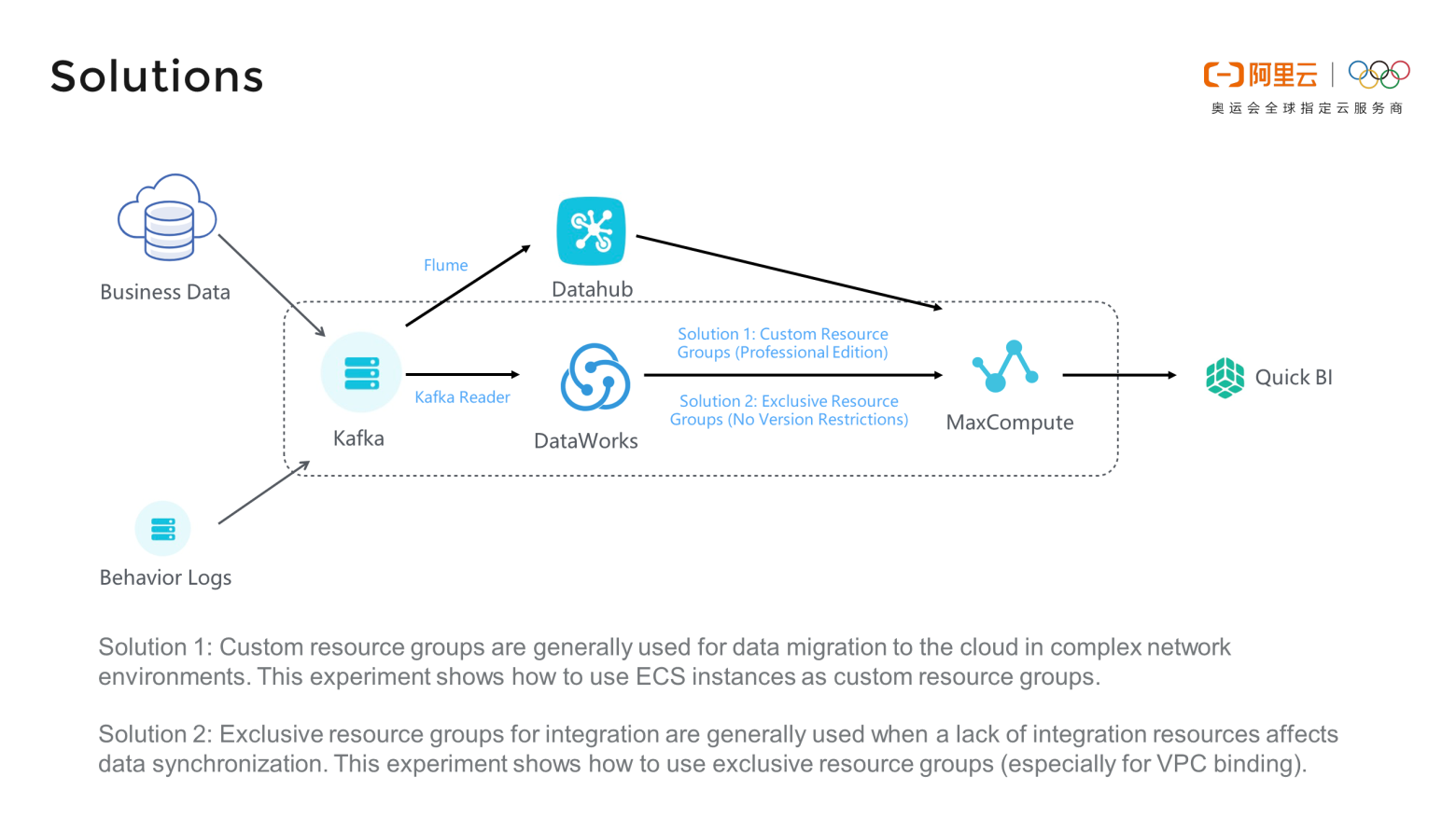

There are two ways to synchronize data from Message Queue for Apache Kafka to MaxCompute. In the first process, business data and behavior logs are uploaded to DataHub through Message Queue for Apache Kafka and Flume, then transferred to MaxCompute, and finally displayed in Quick BI. In the second process, business data and behavior logs are transferred through Message Queue for Apache Kafka and DataWorks to MaxCompute, and finally displayed in Quick BI.

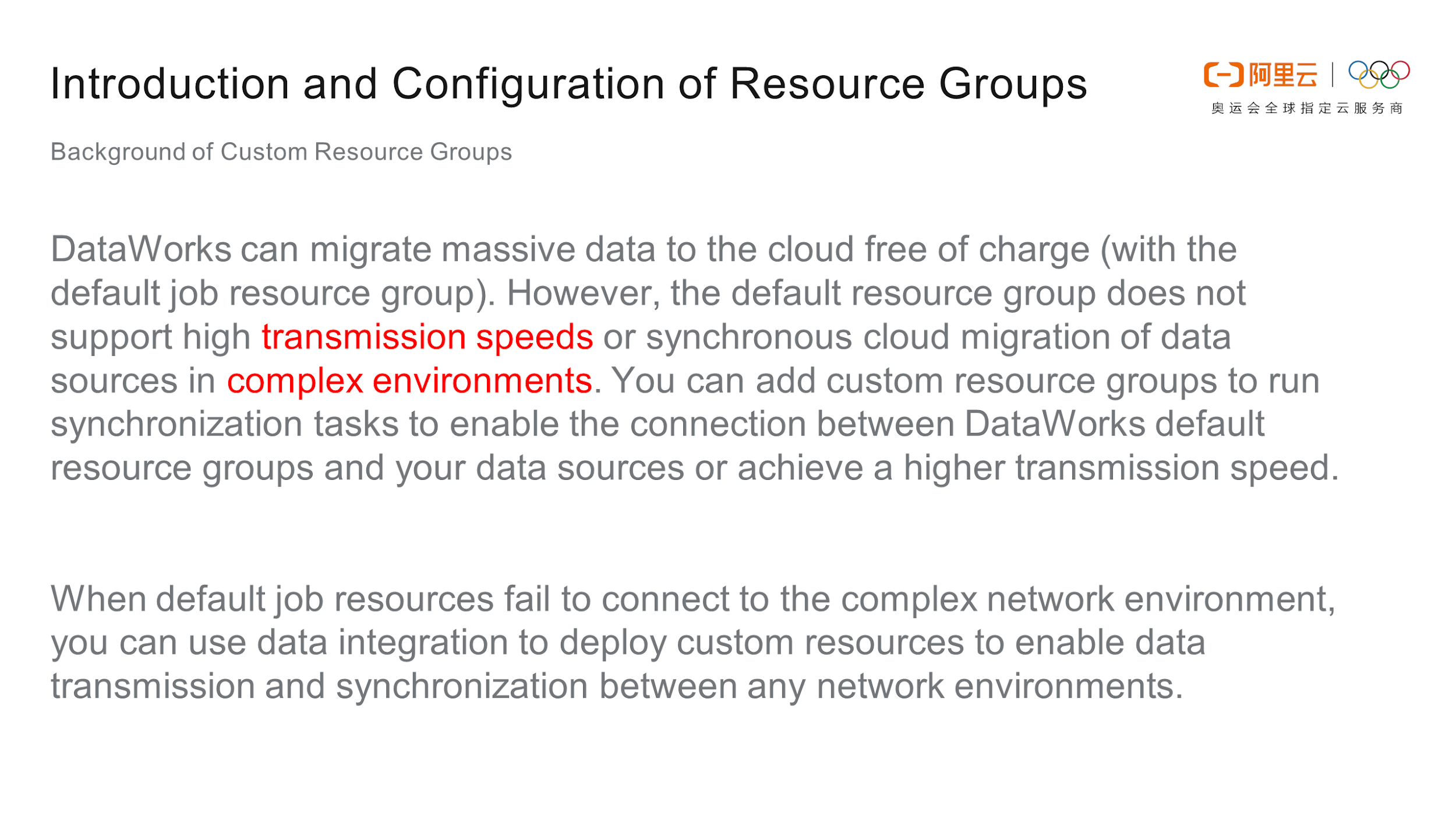

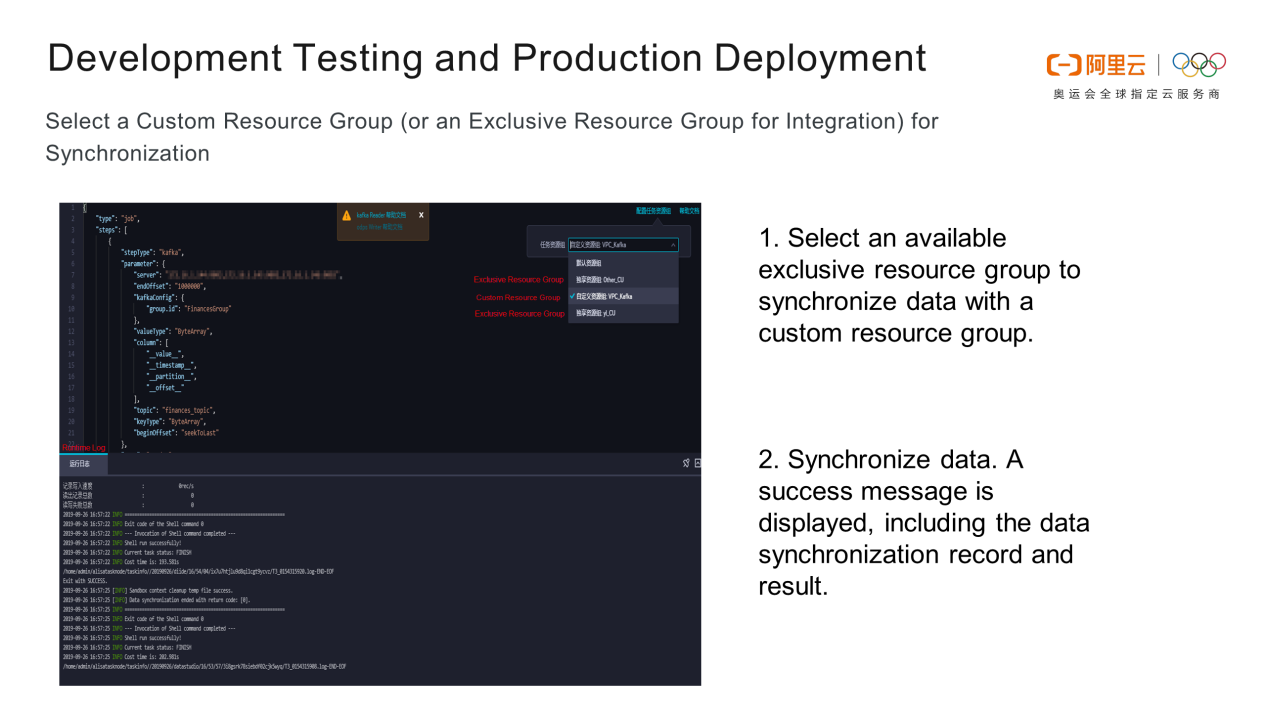

The rest of this article uses the second process. DataWorks can synchronize the data to MaxCompute through one of two solutions: custom resource groups or exclusive resource groups. Custom resource groups are suited to migrating data to the cloud across complex networks. Exclusive resource groups are suited to cases where integration resources are insufficient.

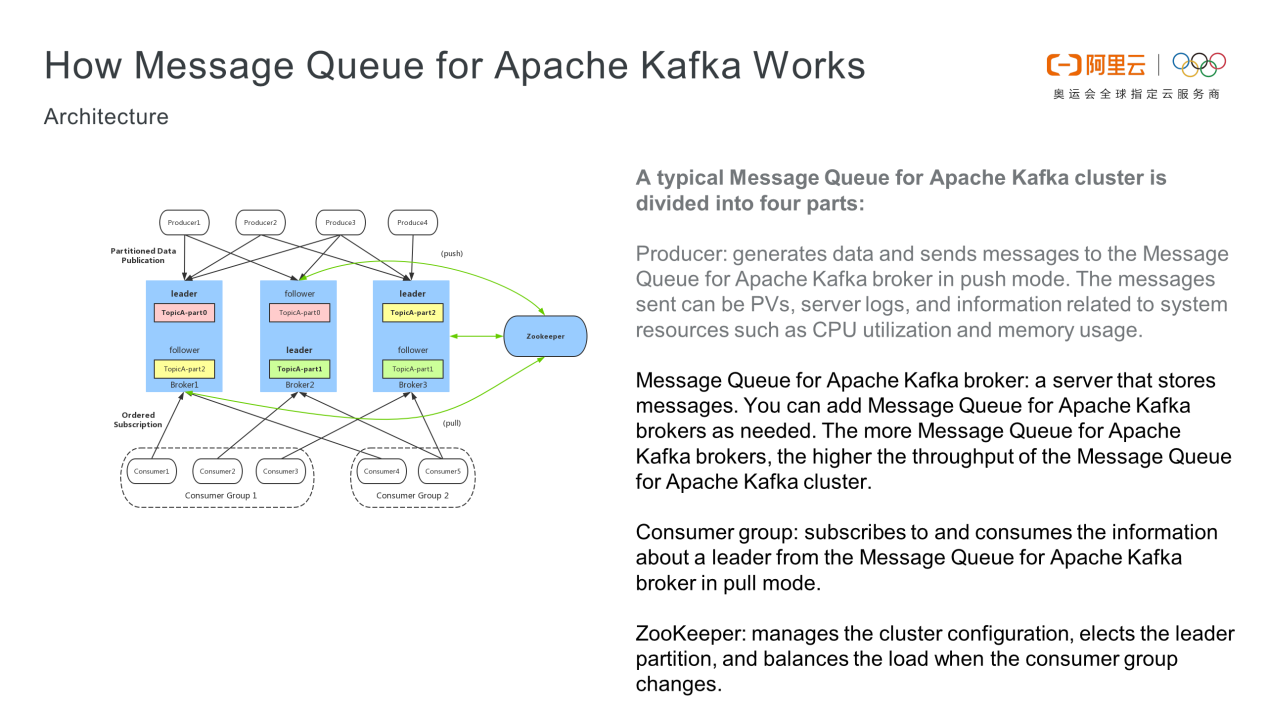

partition_leader when the consumer group changes.

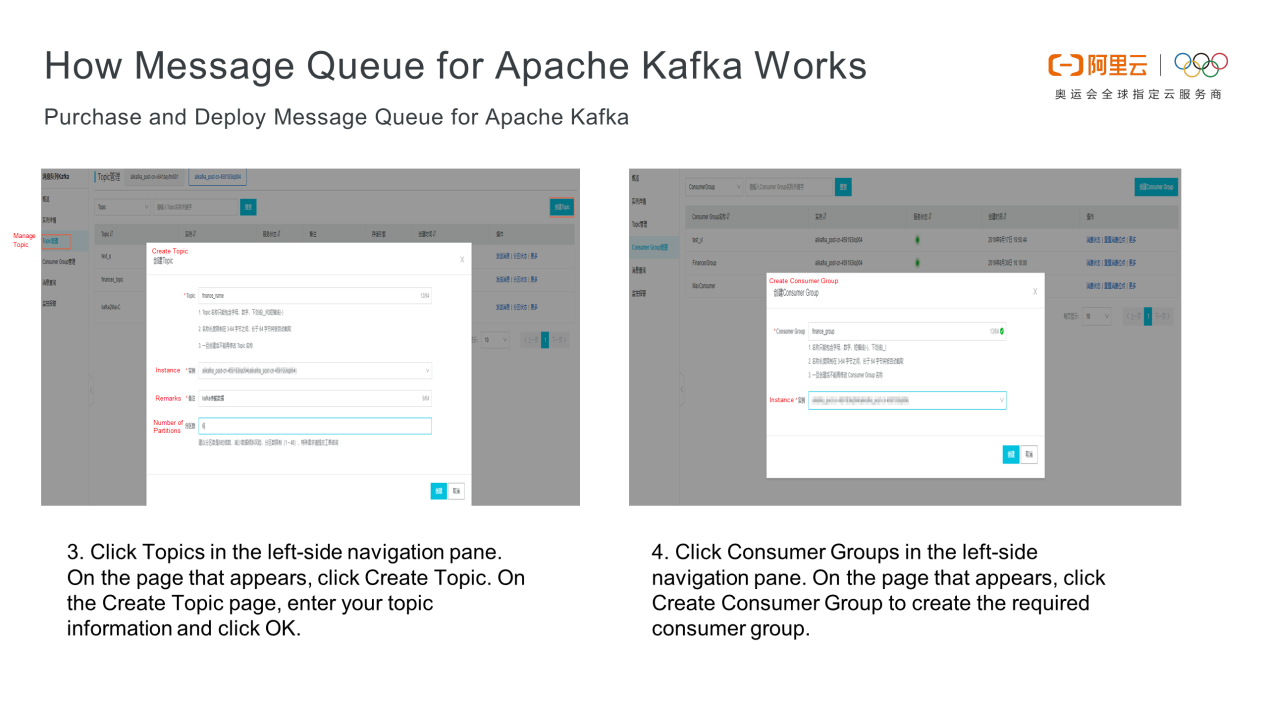

Click Topics in the left-side navigation pane. On the page that appears, click Create Topic. On the Create Topic page, enter your topic information and click OK. Three notes are displayed below the topic name. Choose a topic name that reflects your business. For example, try to keep financial businesses and commercial businesses in separate topics.

Click Consumer Groups in the left-side navigation pane. On the page that appears, click Create Consumer Group to create the required consumer group. Name the consumer group to match its topic, so that, for example, financial businesses and commercial businesses each have their own topic and consumer group.

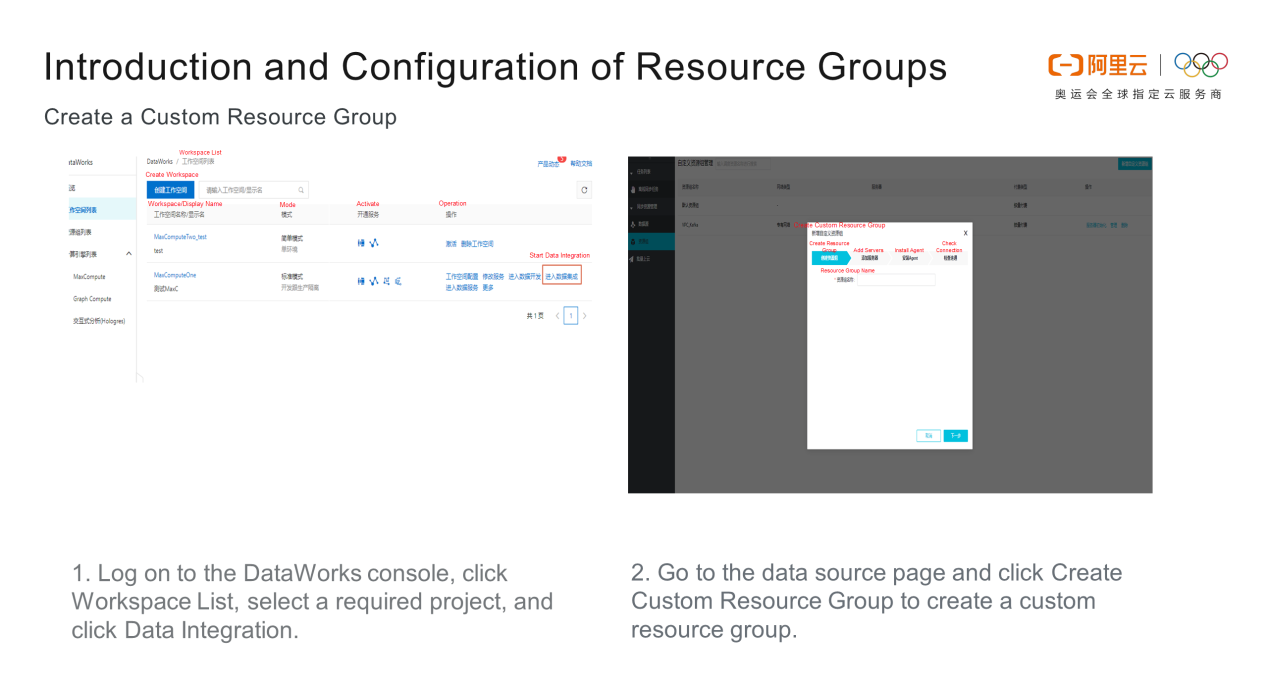

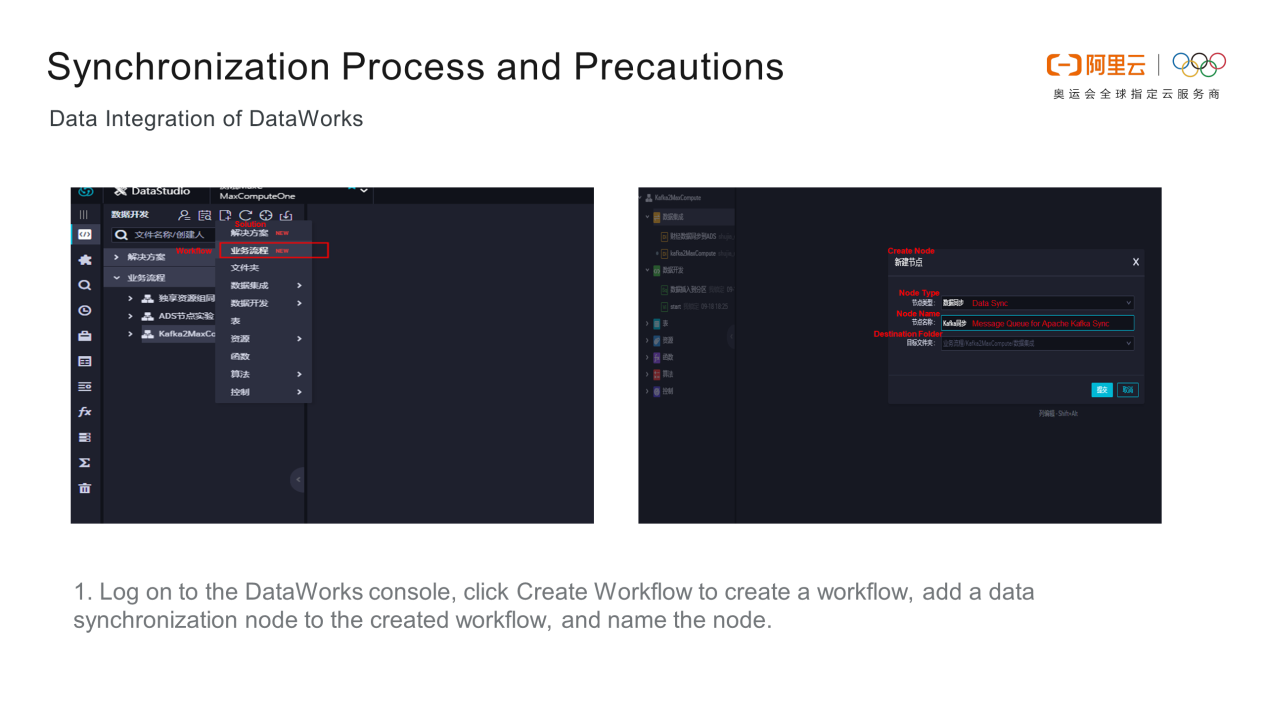

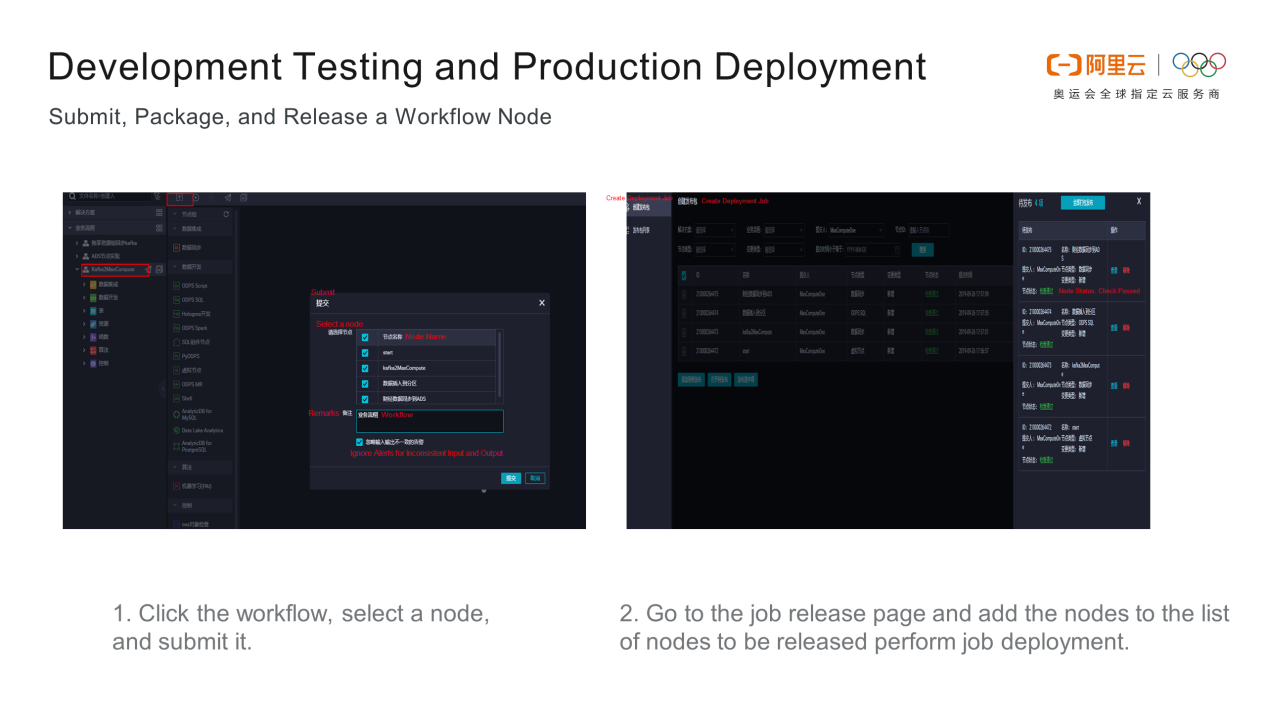

1) Log on to the DataWorks console, click Workspace List, select the required project, and click Data Integration to determine the project where data is to be integrated.

2) Go to the data source page and click Create Custom Resource Group in the upper-right corner of the page to create a custom resource group. Only the project administrator can add a custom resource group.

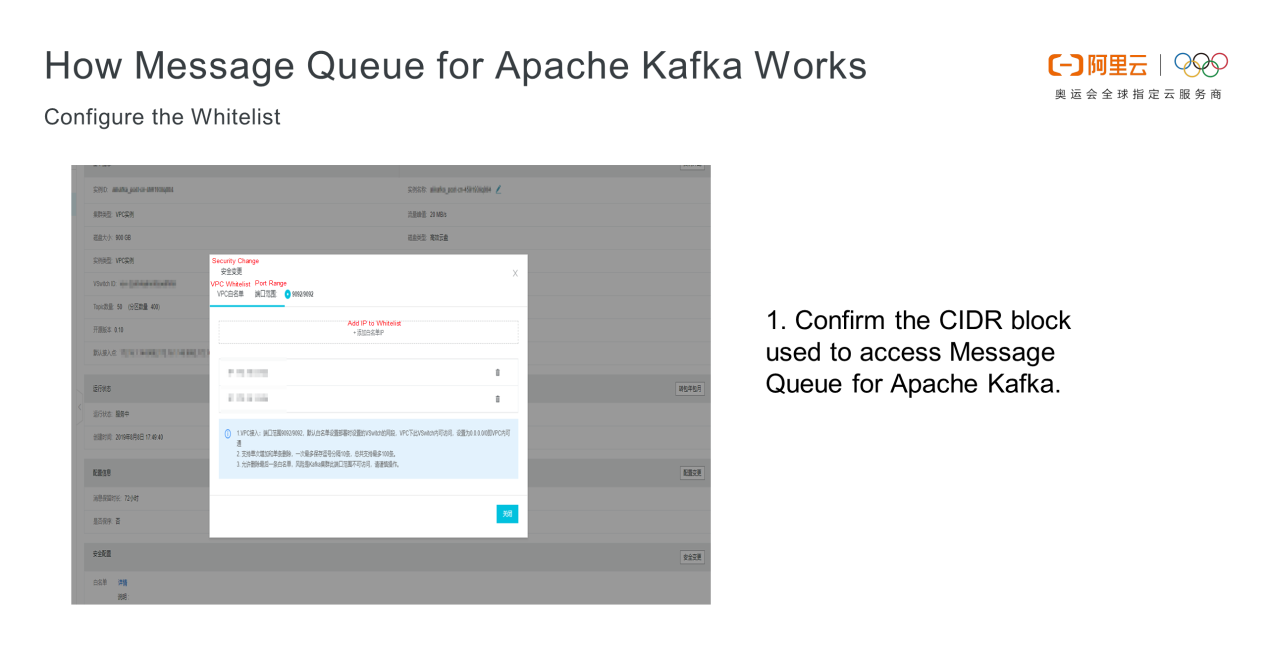

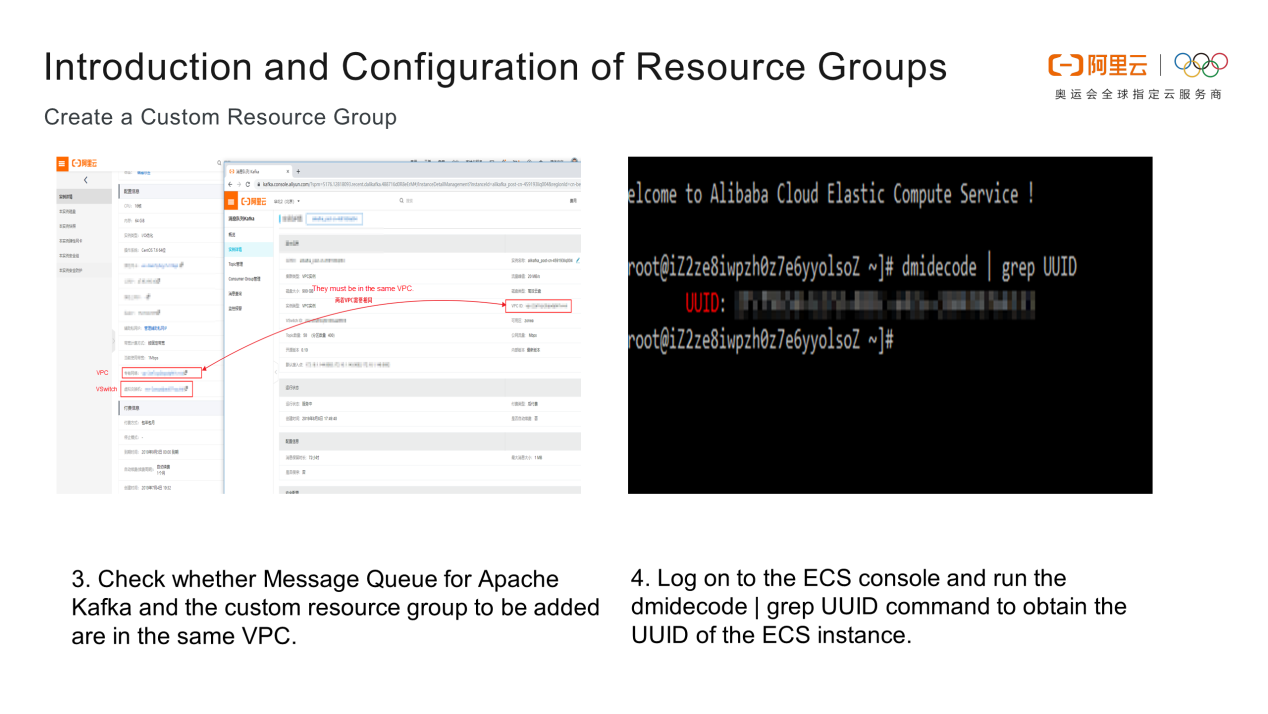

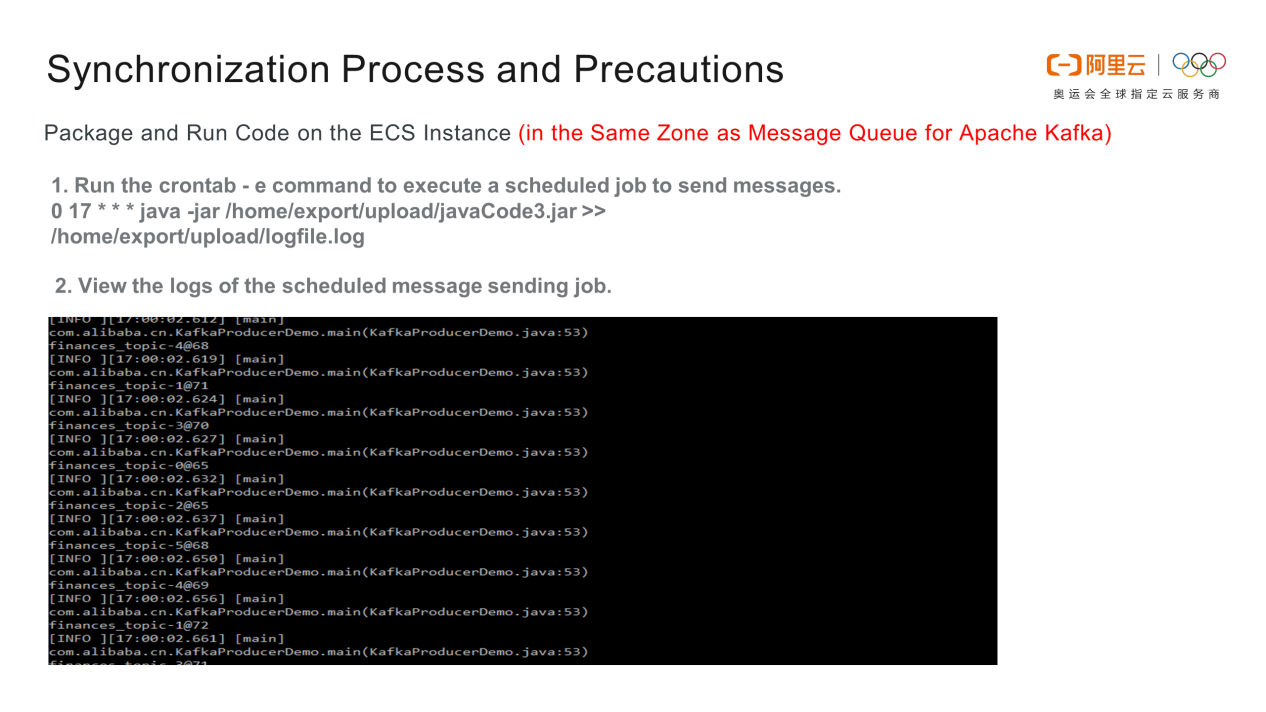

3) Check whether Message Queue for Apache Kafka and the custom resource group to be added are in the same VPC. In this experiment, an Elastic Compute Service (ECS) instance sends messages to Message Queue for Apache Kafka, and they are in the same VPC.

4) Log on to the ECS console to configure the custom resource group. Run the dmidecode|grep UUID command to obtain the UUID of the ECS instance.

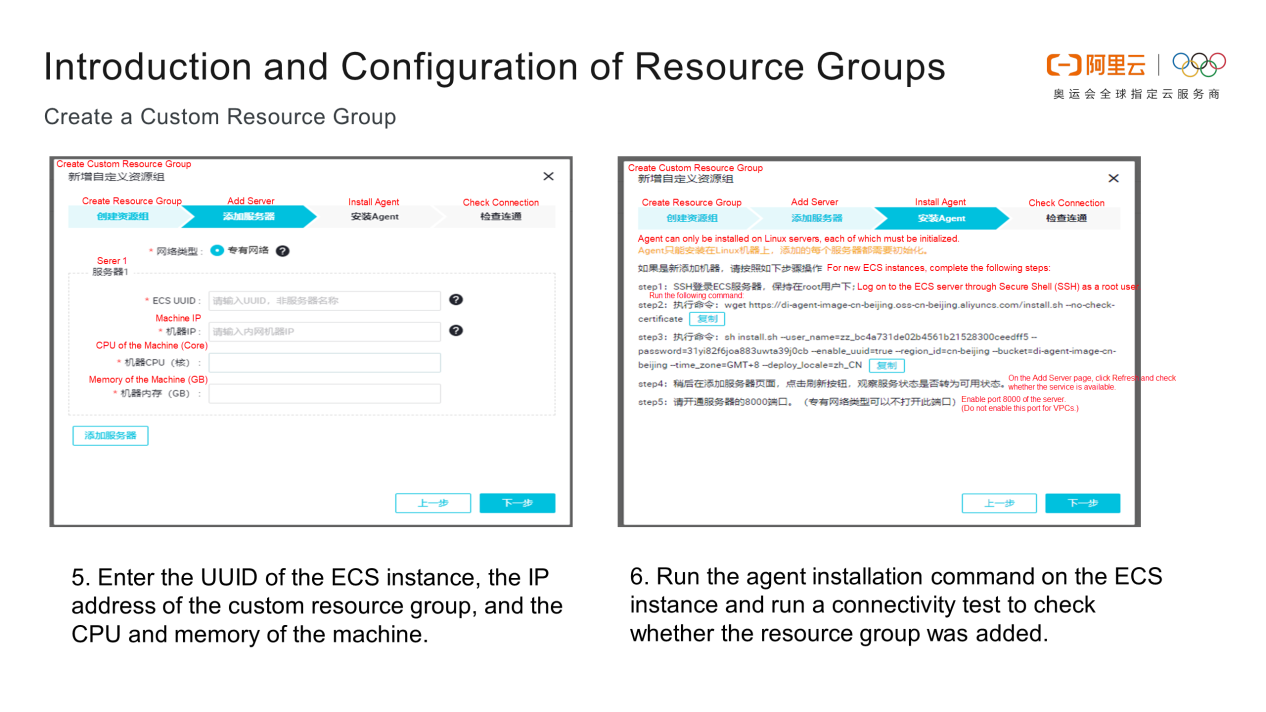

5) Enter the UUID of the instance, the IP address of the custom resource group, and the CPU and memory of the machine.

6) Run the relevant commands on the ECS instance. The agent is installed in five steps. After the fourth step, click Refresh and check whether the service is available. Run a connectivity test to check whether the resource group has been added.
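Step 4 reads the instance UUID from the dmidecode output. As a minimal sketch, the helper below extracts that UUID from the command's text so that it can be pasted into the console form. The function name and the sample UUID are made up for illustration, and the output layout (a UUID: label followed by a 36-character value) is an assumption about what dmidecode prints on the instance.

```python
import re
from typing import Optional


def extract_uuid(dmidecode_output: str) -> Optional[str]:
    """Return the first UUID found in dmidecode-style text, or None."""
    # Assumes a line shaped like "UUID: XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX".
    match = re.search(r"UUID:\s*([0-9A-Fa-f-]{36})", dmidecode_output)
    return match.group(1) if match else None


# Made-up sample line, standing in for the output of `dmidecode | grep UUID`.
sample = "\tUUID: 1B2C3D4E-0000-1111-2222-ABCDEF012345\n"
print(extract_uuid(sample))
```

On a real instance, the text would come from running the command with sufficient privileges rather than from a hard-coded sample.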

An exclusive resource group accesses data sources in VPCs in the same region and public endpoints of Relational Database Service (RDS) instances in other regions.

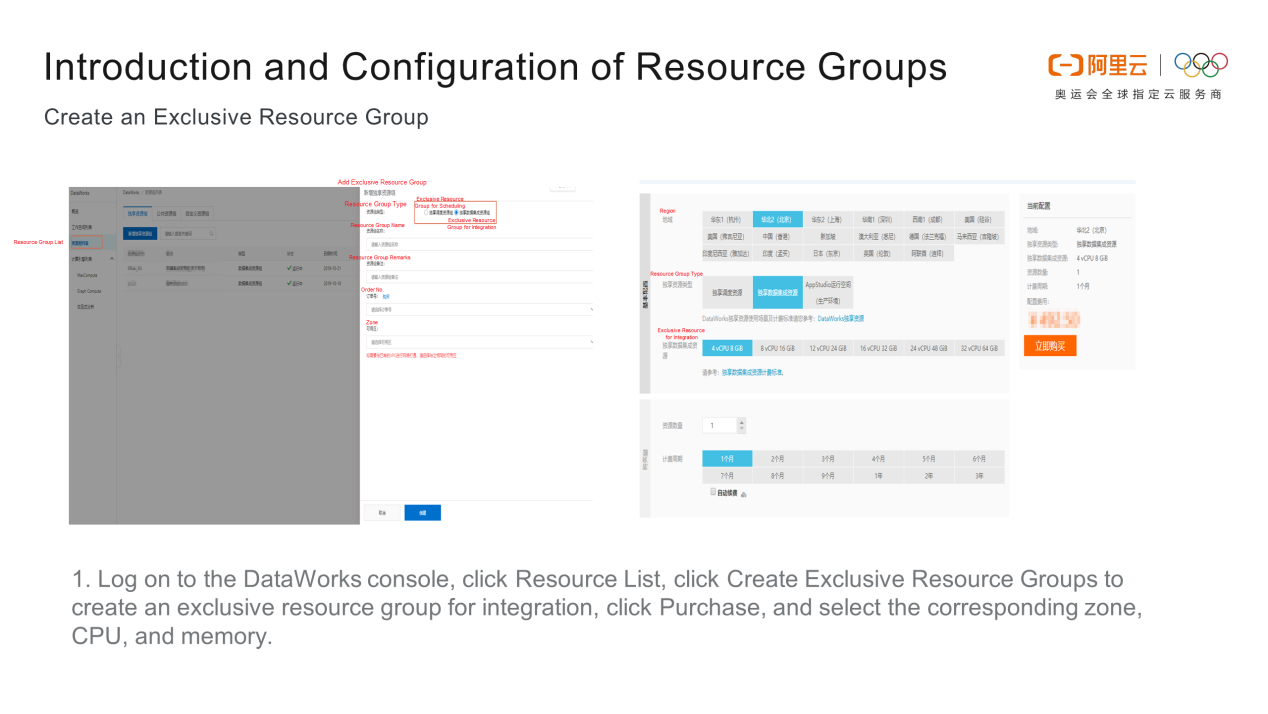

1) Log on to the DataWorks console, click Resource List, and click Create Exclusive Resource Groups to create an exclusive resource group for integration or scheduling. Here, we will add an exclusive resource group for integration. Before clicking Purchase, set the Purchase Mode, Region, Resources, Memory, Validity Period, and Quantity.

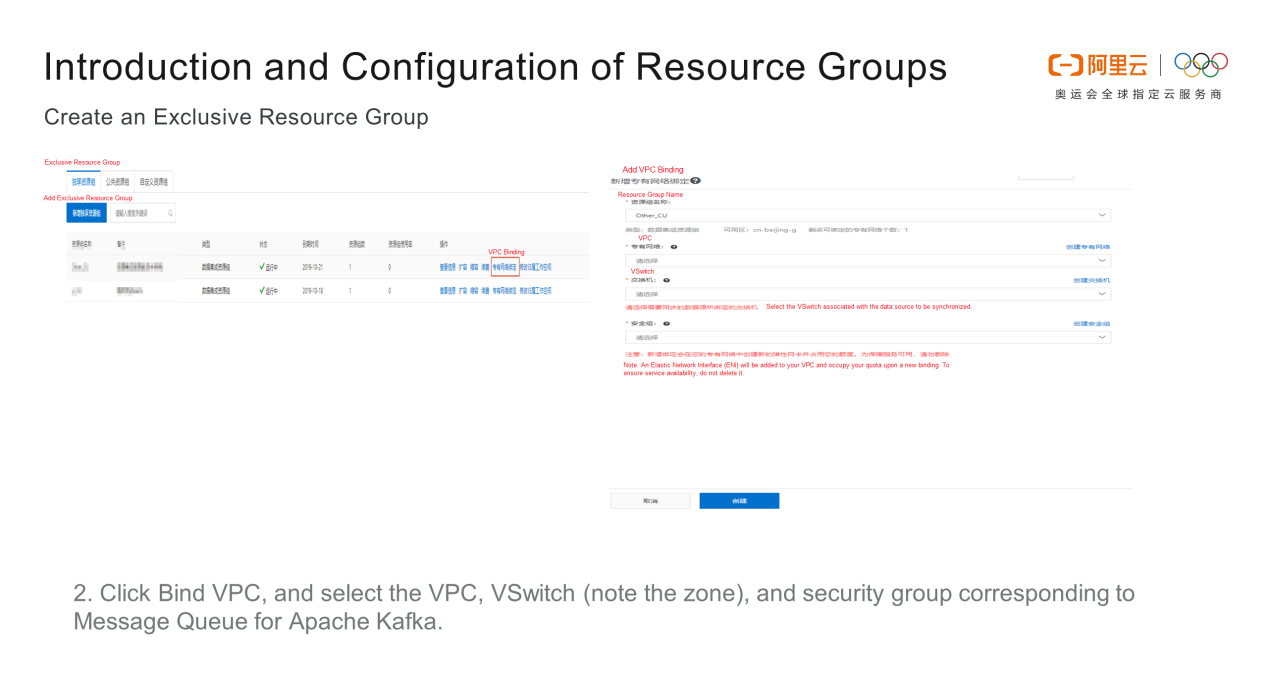

2) Bind the purchased exclusive resource group to the VPC corresponding to Message Queue for Apache Kafka. Click Bind VPC, and select the VSwitch (note the zone) and security group corresponding to Message Queue for Apache Kafka.

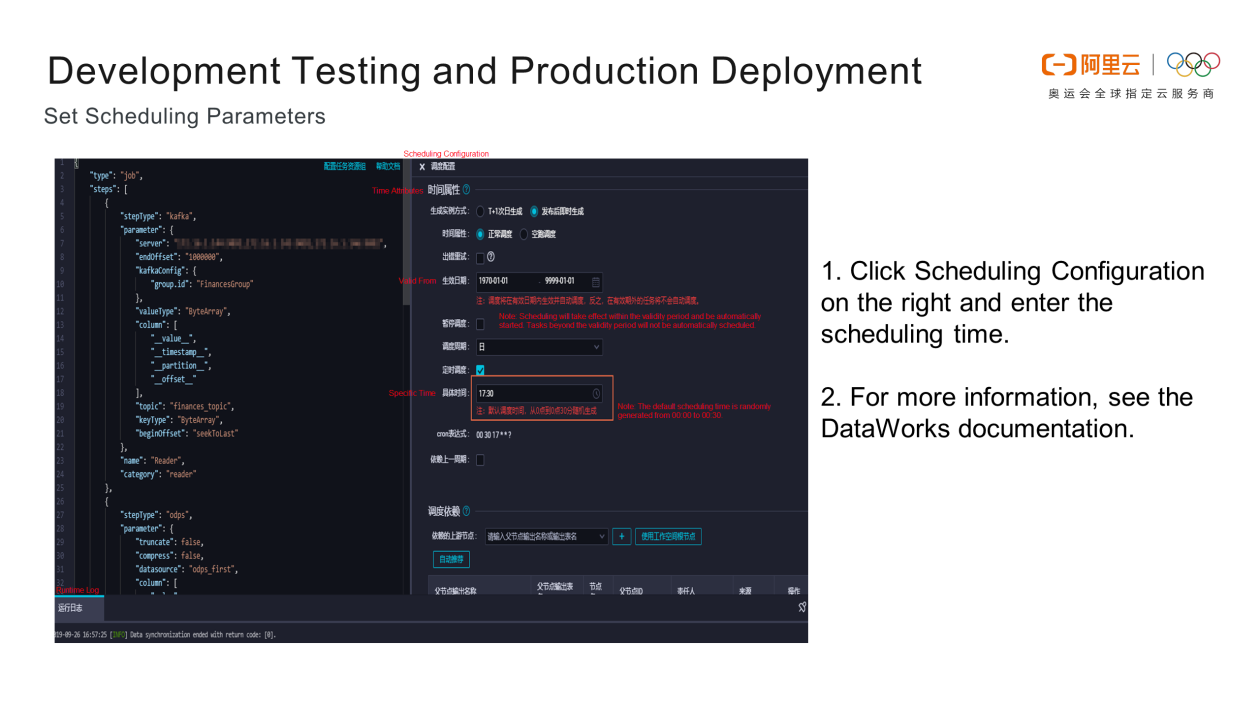

Note the following when configuring parameters for synchronizing data from Message Queue for Apache Kafka to MaxCompute:

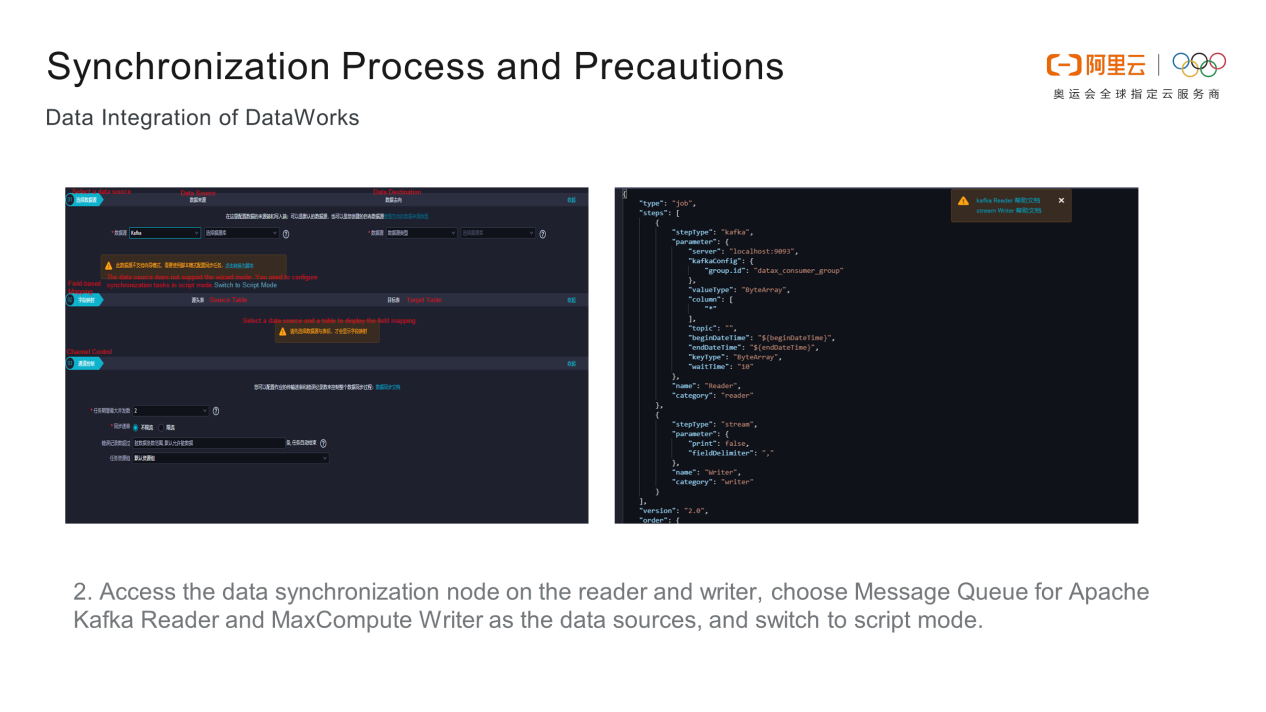

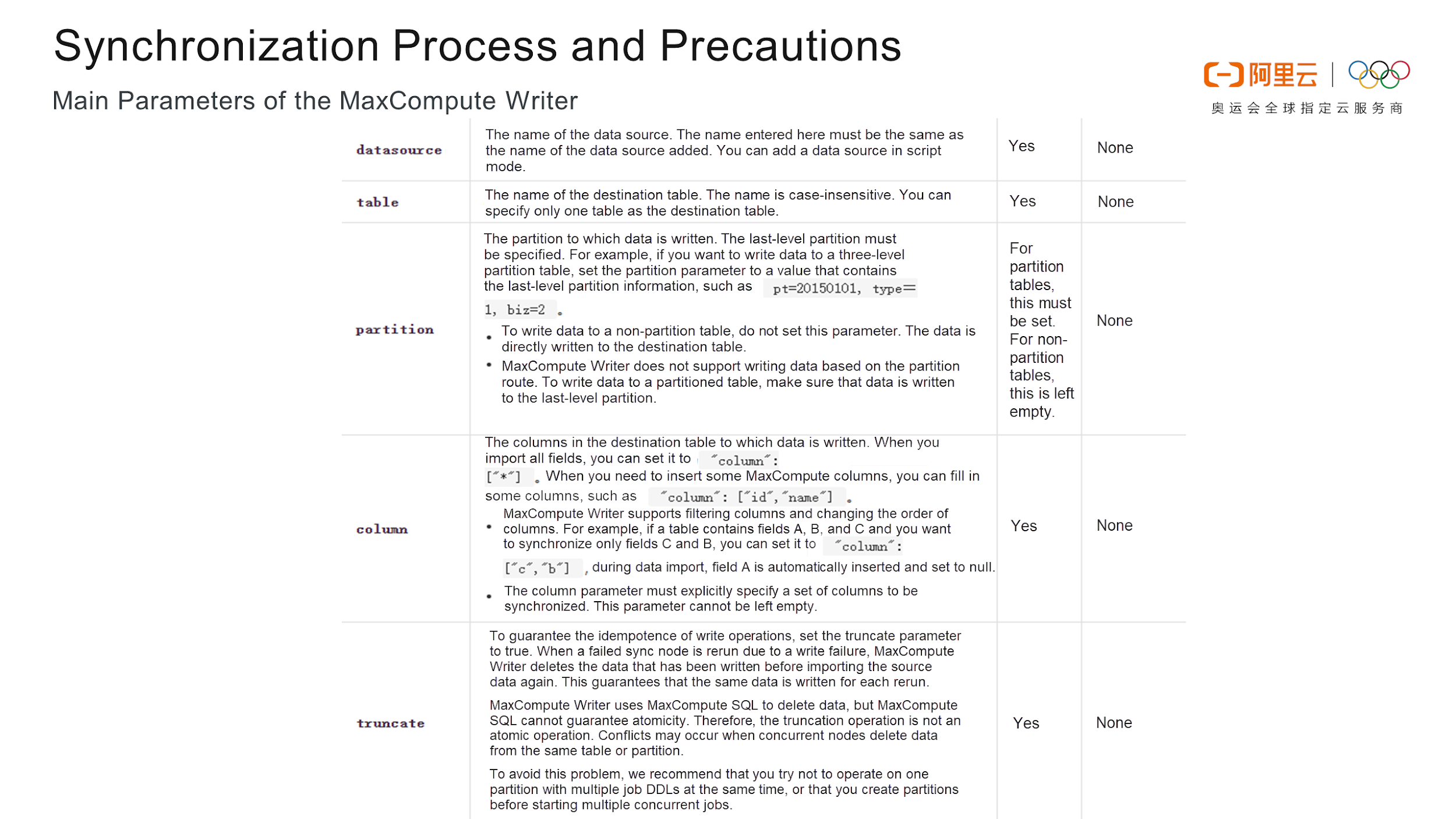

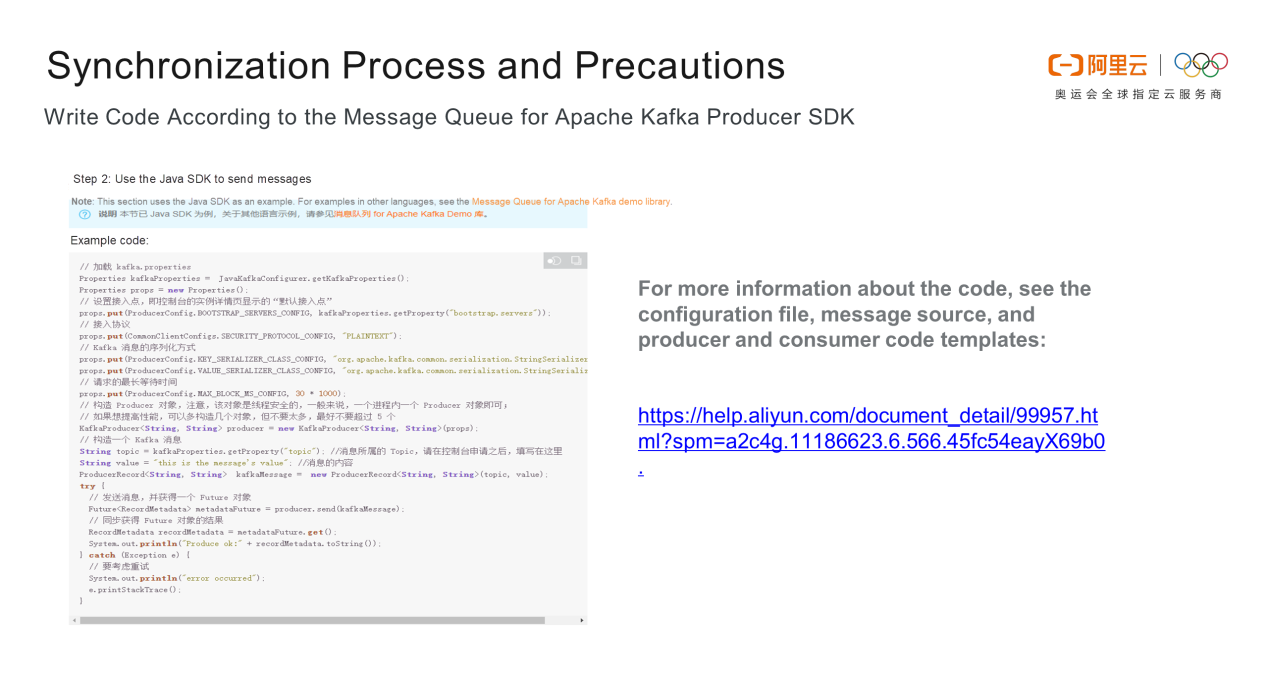

Open the data synchronization node and choose Message Queue for Apache Kafka Reader as the source and MaxCompute Writer as the destination, as shown in the following figure. Then switch to script mode. The help document is available in the upper-right corner, as shown in the following figure. Click synchronization parameters in the Reader and Writer dialog boxes to make the script easier to read, edit, and understand.

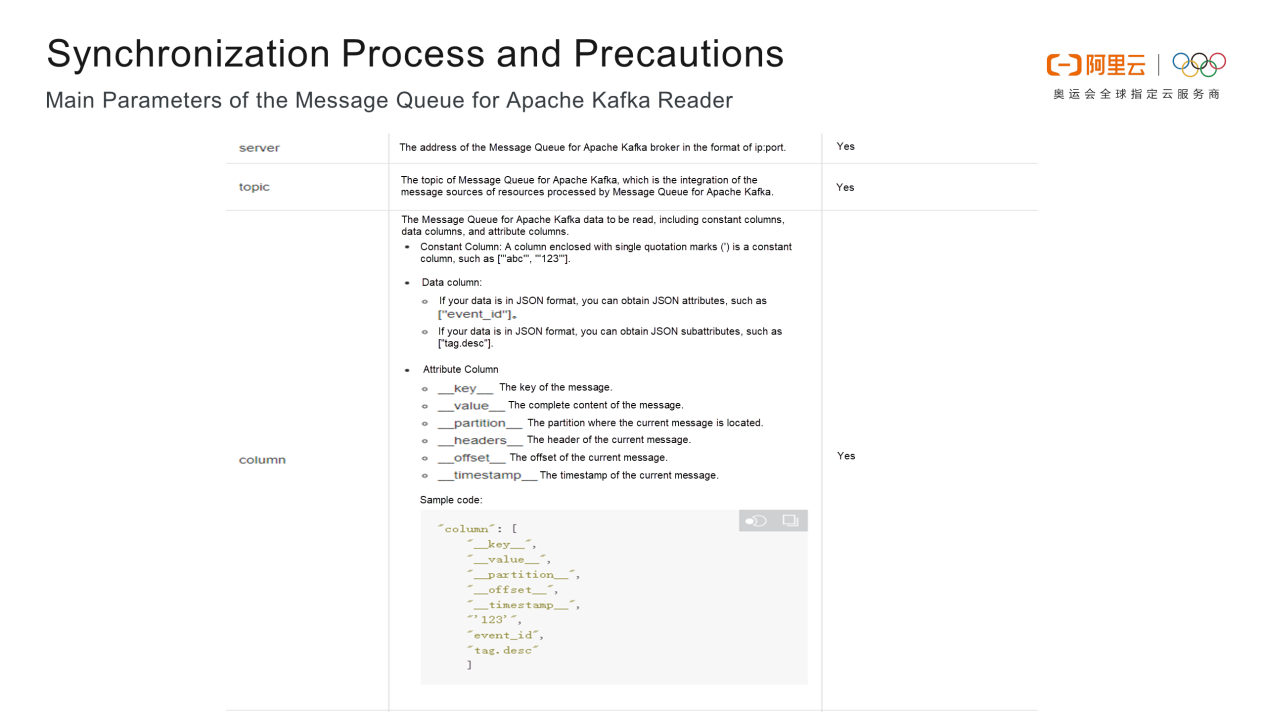

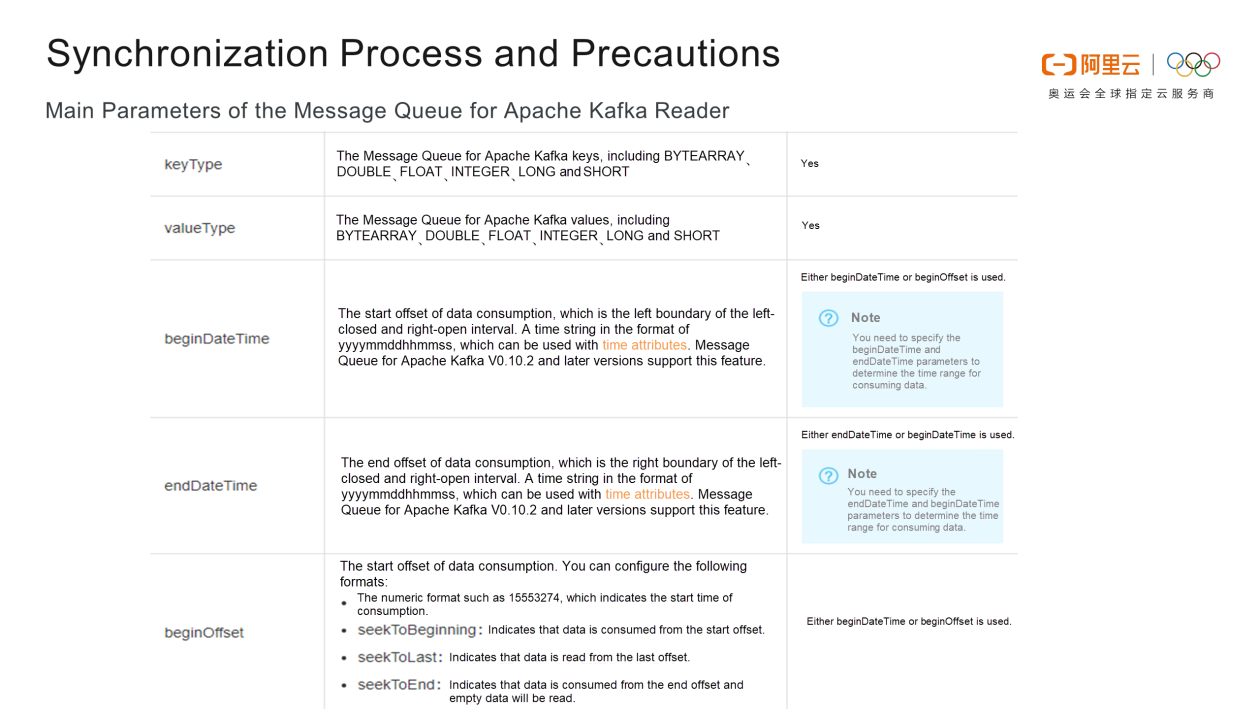

ip:port. Use the default endpoint of the Message Queue for Apache Kafka instance as the server value. The server parameter is required. The topic parameter specifies the topic of the Message Queue for Apache Kafka data source, which exists once Message Queue for Apache Kafka has been deployed. This parameter is also required. The column parameter accepts constant columns, data columns, and attribute columns. Constant columns and data columns are less important here. The complete synchronized message is stored in value in the attribute column. If you need other information, such as the partition, offset, or timestamp, select it from the attribute columns as well. The column parameter is required.
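The three required Reader parameters can be checked before a job is submitted. The following is a rough sketch rather than the DataWorks schema: the helper names, the placeholder endpoint and topic, and the double-underscore spellings of the attribute columns are assumptions for illustration, so check the help document for the exact names.

```python
import re

# Reader parameters that the text above marks as required.
REQUIRED = ("server", "topic", "column")


def missing_required(parameter: dict) -> list:
    """Return the required Reader parameters that are absent or empty."""
    return [name for name in REQUIRED if not parameter.get(name)]


def looks_like_endpoint(server: str) -> bool:
    """Loosely check that the server value has an ip:port (or host:port) shape."""
    return re.fullmatch(r"[^\s:]+:\d{1,5}", server) is not None


reader_parameter = {
    "server": "192.168.0.10:9092",  # placeholder endpoint, not a real instance
    "topic": "testkafka",           # placeholder topic name
    # Attribute-column spellings below are assumptions, not confirmed names.
    "column": ["__value__", "__partition__", "__offset__", "__timestamp__"],
}

print(missing_required(reader_parameter))
print(looks_like_endpoint(reader_parameter["server"]))
```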

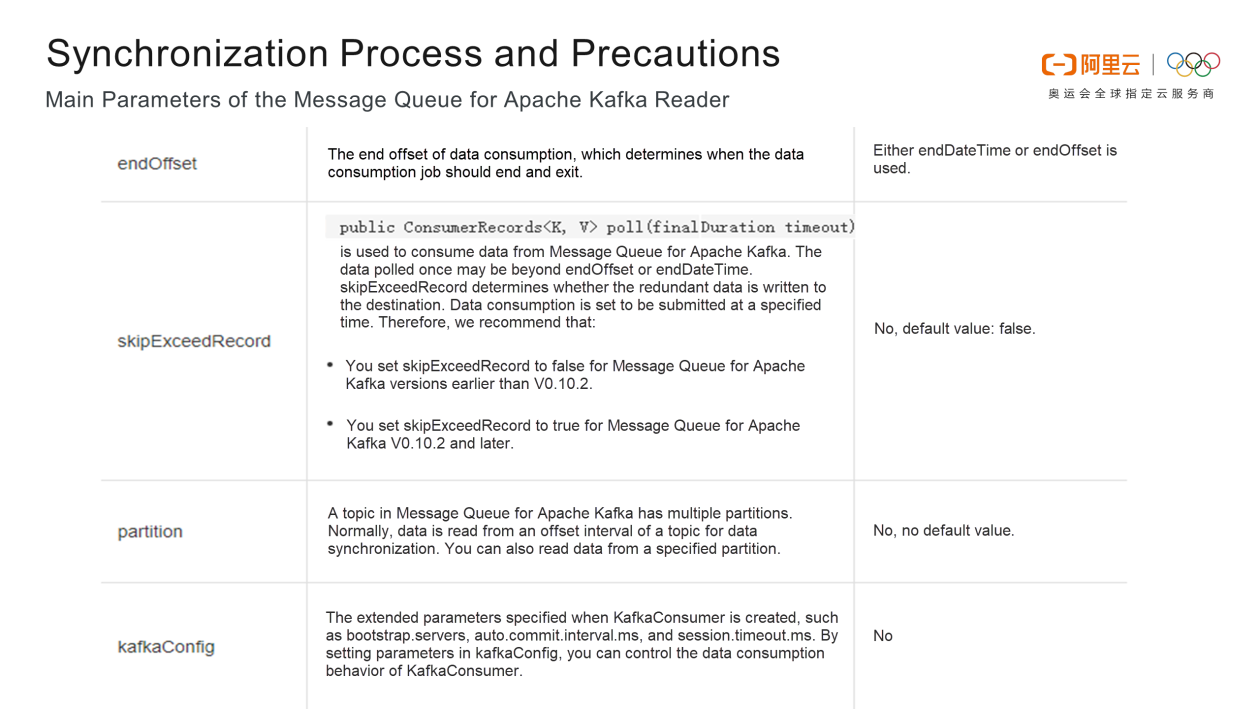

keyType and valueType each accept six values. Select the value that matches the type of the data being synchronized. Note whether the data is synchronized by message time or by consumer offset. Synchronization by message time uses beginDateTime and endDateTime, and synchronization by consumer offset uses beginOffset and endOffset. Select either beginDateTime or beginOffset as the start point for data consumption, and select either endDateTime or endOffset as the end point. When using beginDateTime and endDateTime, note that only Message Queue for Apache Kafka V0.10.2 or later supports data synchronization by message time. In addition, beginOffset has three special forms. seekToBeginning indicates that data is consumed from the start offset. seekToLast indicates that data is consumed from the last consumer offset. With beginOffset set this way, data can be consumed only once from the last consumer offset, whereas with beginDateTime, data can be consumed multiple times, depending on the message storage time. seekToEnd indicates that data is consumed from the end offset, so empty data is read.
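The choice of start and end points lends itself to a small pre-flight check. The sketch below is illustrative only: the function name and messages are hypothetical, and it reads the rules above as "exactly one start parameter, and not both end parameters", because the text does not state whether an end point is mandatory.

```python
# The three special beginOffset forms named in the text above.
SPECIAL_OFFSETS = {"seekToBeginning", "seekToLast", "seekToEnd"}


def check_range(parameter: dict) -> list:
    """Return a list of problems with the start and end settings (empty if none)."""
    problems = []
    has_begin_time = "beginDateTime" in parameter
    has_begin_offset = "beginOffset" in parameter
    # Exactly one of the two start parameters should be present.
    if has_begin_time == has_begin_offset:
        problems.append("set exactly one of beginDateTime or beginOffset")
    # Assumption: an end point may be omitted, but the two must not be combined.
    if "endDateTime" in parameter and "endOffset" in parameter:
        problems.append("set only one of endDateTime or endOffset")
    offset = parameter.get("beginOffset")
    if offset is not None and offset not in SPECIAL_OFFSETS and not str(offset).isdigit():
        problems.append("beginOffset must be a number or a special form")
    return problems


print(check_range({"beginOffset": "seekToLast"}))
# The timestamp format here is a placeholder, not a confirmed format.
print(check_range({"beginDateTime": "20190416000000", "beginOffset": "0"}))
```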

The skipExceedRecord parameter is optional. The partition parameter is optional because, by default, the data in all partitions of the topic is read and consumed. The kafkaConfig parameter is optional. Other relevant parameters can be added through kafkaConfig.
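As a hedged illustration of the optional parameters, the helper below adds them to an existing Reader parameter dictionary only when they are supplied. The helper name is hypothetical, the key is spelled skipExceedRecord here, and placing group.id inside kafkaConfig is an assumption about how extended consumer settings are passed.

```python
from typing import Optional


def add_optional(parameter: dict,
                 group_id: Optional[str] = None,
                 partition: Optional[int] = None,
                 skip_exceed_record: Optional[bool] = None) -> dict:
    """Return a copy of the Reader parameters with optional settings added."""
    result = dict(parameter)  # leave the caller's dictionary untouched
    if partition is not None:
        # Omitting partition leaves the job reading every partition of the topic.
        result["partition"] = partition
    if skip_exceed_record is not None:
        result["skipExceedRecord"] = skip_exceed_record
    if group_id is not None:
        # Assumption: extra consumer settings such as group.id go under kafkaConfig.
        kafka_config = dict(result.get("kafkaConfig", {}))
        kafka_config["group.id"] = group_id
        result["kafkaConfig"] = kafka_config
    return result


base = {"topic": "testkafka"}  # placeholder topic name
print(add_optional(base, group_id="demo_group"))
```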

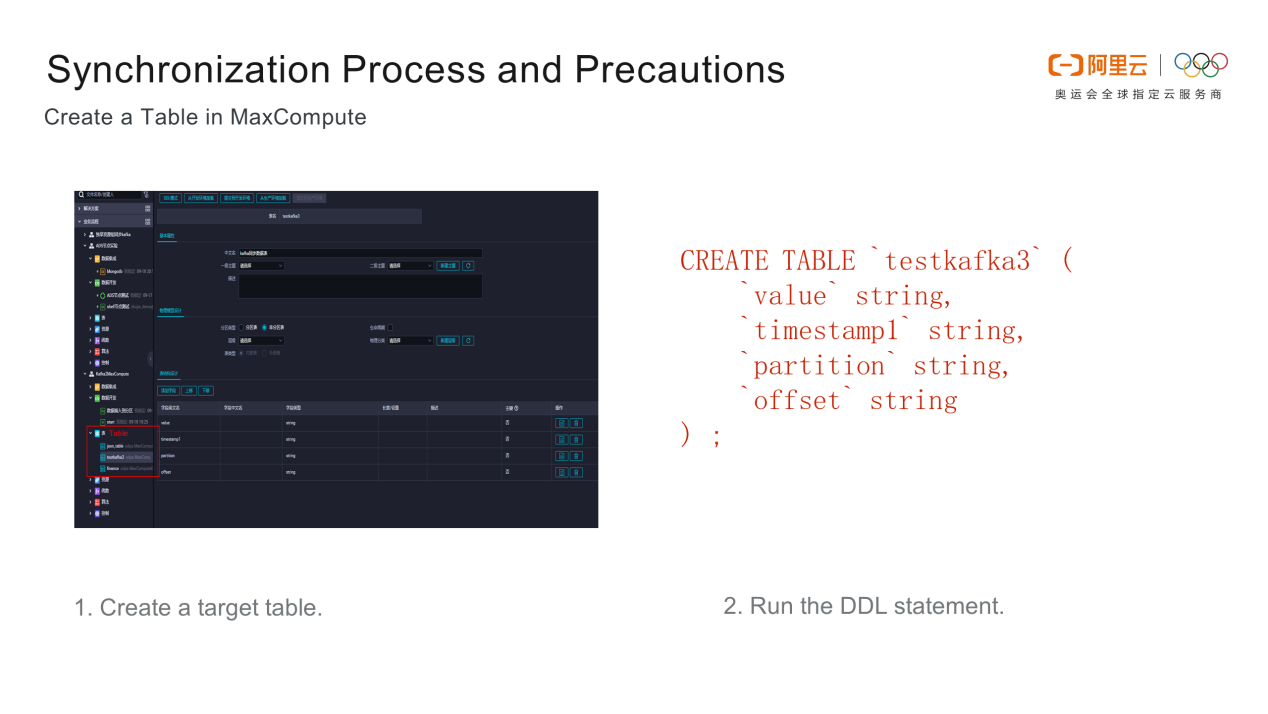

If the table is a partitioned table, the partition parameter must be set down to the last-level partition to determine where the data is written. If the table is a non-partitioned table, the partition parameter is optional. The column parameter must be consistent with the fields configured in the column parameter of Message Queue for Apache Kafka Reader. Data is synchronized only when the fields are consistent. The truncate parameter specifies whether to write data in append mode or overwrite mode. If possible, avoid running multiple Data Definition Language (DDL) operations on one partition at the same time, or create partitions before starting multiple concurrent jobs.
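The Writer rules above can be sketched as a small builder that refuses a partitioned table without a partition value. This is not the official schema: the function name, the table key, the data source name, and the pt=... partition format are assumptions for illustration, and truncate=True is assumed to mean overwrite mode.

```python
from typing import Optional, Sequence


def writer_parameter(datasource: str,
                     table: str,
                     columns: Sequence[str],
                     partitioned: bool,
                     partition: Optional[str] = None,
                     truncate: bool = False) -> dict:
    """Build a MaxCompute Writer parameter dictionary under the stated assumptions."""
    if partitioned and not partition:
        raise ValueError("a partitioned table needs the last-level partition")
    parameter = {
        "datasource": datasource,
        "table": table,            # key name is an assumption
        "column": list(columns),   # should match the Reader's column list
        "truncate": truncate,      # assumed: True overwrites, False appends
    }
    if partition:
        parameter["partition"] = partition
    return parameter


print(writer_parameter("odps_first", "testkafka3", ["value"],
                       partitioned=True, partition="pt=20190416"))
```

Creating the target partition ahead of time, as the paragraph above suggests, keeps concurrent jobs from each issuing their own DDL against it.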

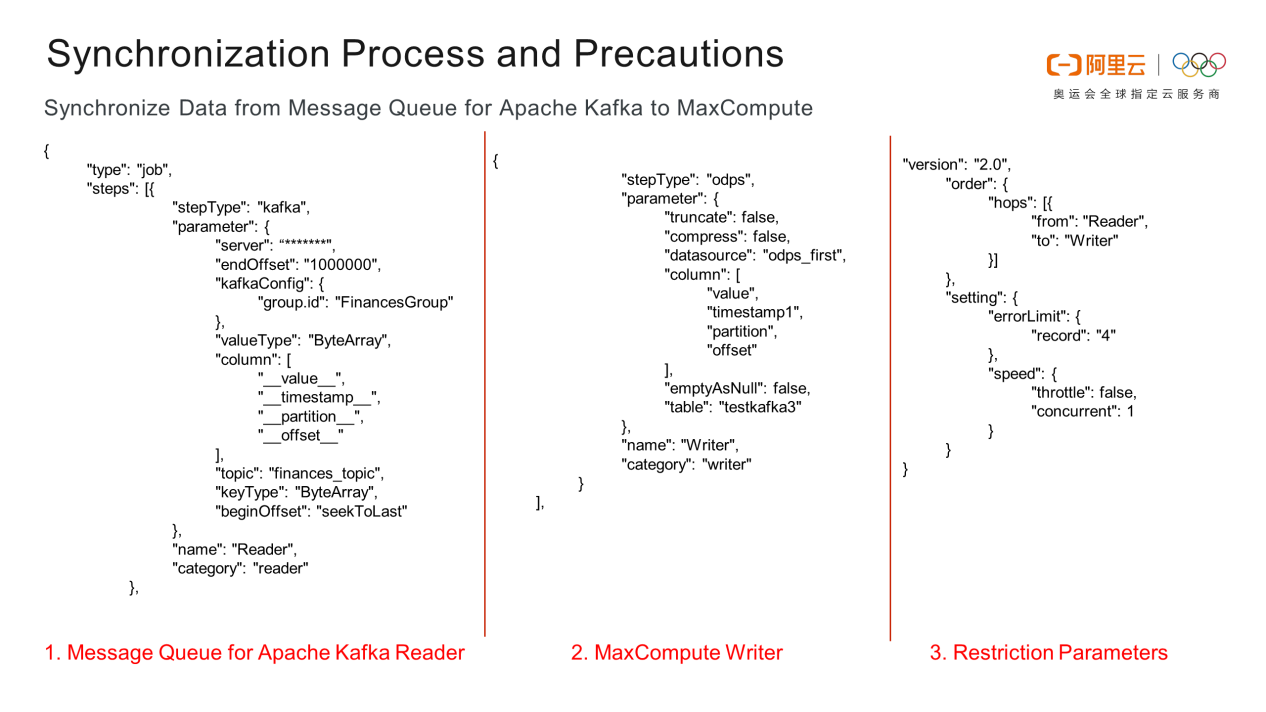

group.id, valueType, ByteArray, column, topic, beginOffset, and seekToLast. MaxCompute Writer includes the truncate, compress, and datasource fields, which must be consistent with those of Message Queue for Apache Kafka Reader. The value data must be synchronized. Restriction parameters are as follows: errorLimit specifies the number of error records tolerated before the job reports an error, and speed restricts the traffic rate and concurrency.
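The restriction parameters can be pictured as a small settings fragment. The sketch below only shows the general shape: the function name is hypothetical, and the nested key names (record, concurrent, throttle, mbps) are assumptions that should be checked against the script generated in the console.

```python
import json
from typing import Optional


def job_setting(max_error_records: int,
                concurrent: int,
                mbps: Optional[int] = None) -> dict:
    """Build an illustrative settings fragment for error and speed limits."""
    # Throttling is switched on only when a rate limit is supplied.
    speed = {"concurrent": concurrent, "throttle": mbps is not None}
    if mbps is not None:
        speed["mbps"] = str(mbps)
    return {
        "errorLimit": {"record": str(max_error_records)},
        "speed": speed,
    }


# Tolerate no error records and run with a single concurrent thread, unthrottled.
print(json.dumps(job_setting(0, 1), indent=2))
```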

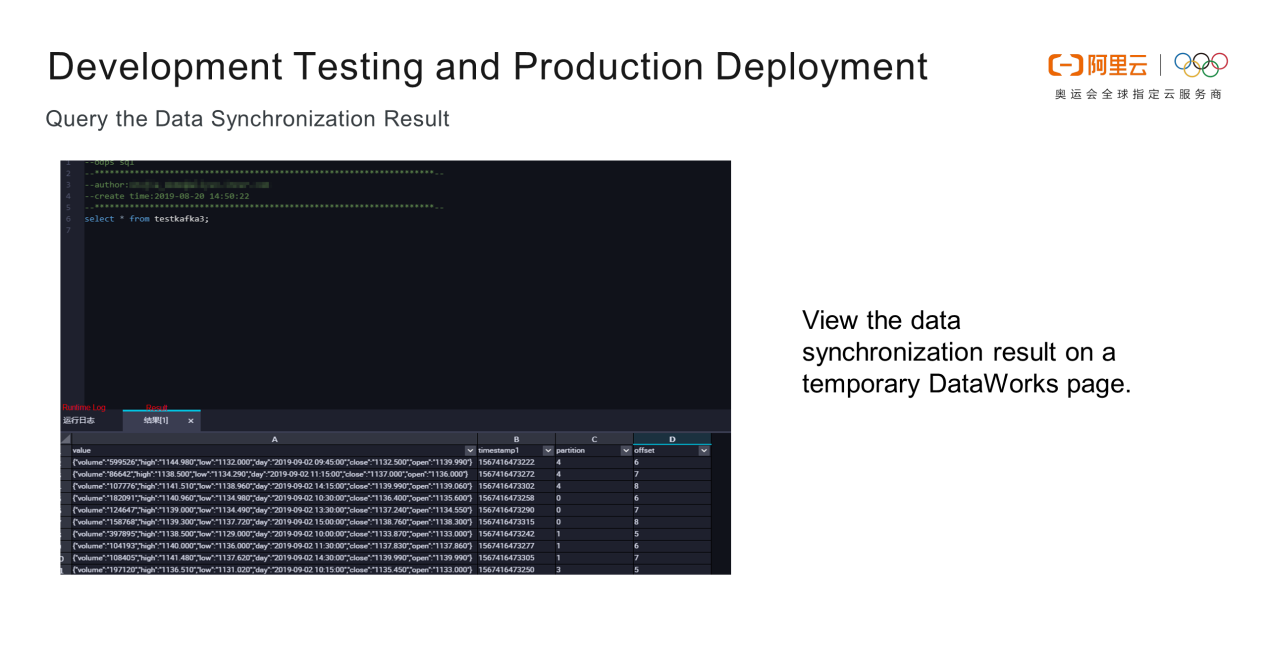

select * from testkafka3 command on a temporary node to view the data synchronization result. If the data is synchronized, the test is successful.

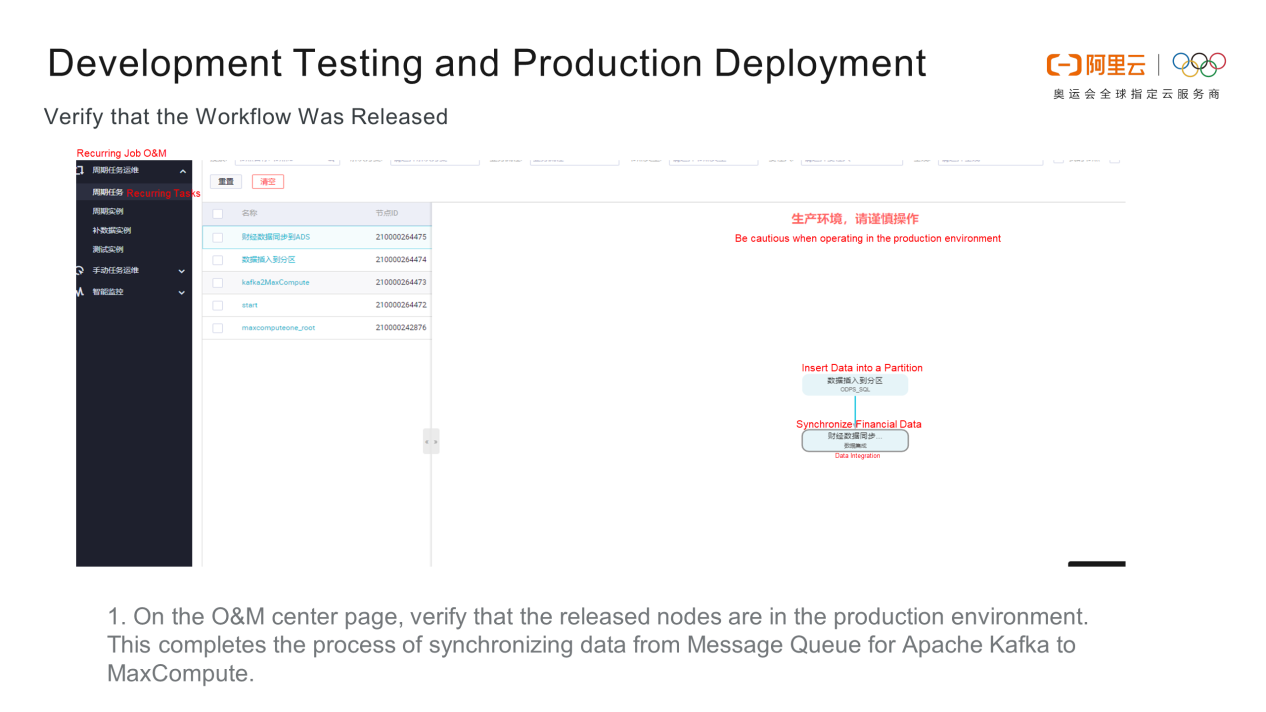

In this article, you gained an overall understanding of Message Queue for Apache Kafka and learned how to synchronize data from Message Queue for Apache Kafka to MaxCompute on Alibaba Cloud. Additionally, we covered the configuration methods and deployment operations involved, from development to production.
