
By Yu'an

The peak transaction volume during Double 11 in 2020 reached 583,000 per second. The message-oriented middleware RocketMQ has supported all kinds of businesses through Double 11 for consecutive years without a single fault. For Double 11 in 2020, RocketMQ went through several changes, which are covered in turn below: cloud-native deployment on Kubernetes, an optimization of transaction message filtering, and a new POP consumption model.

Kubernetes and its ecosystem have gradually matured into an important part of today's cloud-native technology stack. Because our RocketMQ clusters are large and carry various historical burdens, O&M has considerable pain points. We therefore want to use the cloud-native technology stack to reduce costs, improve efficiency, and automate operation and maintenance (O&M).

As early as 2016, message-oriented middleware (MOM) was containerized and released automatically through the middleware deployment platform provided by an internal Alibaba Cloud team. Since then, the overall O&M of MOM has improved greatly. However, as a stateful service, it still has many problems at the O&M layer.

The middleware deployment platform handles basic work for us, such as resource application, container creation, initialization, and image installation. However, because each middleware product has its own deployment logic, the release of each application has to be customized. In addition, the developers of the middleware deployment platform do not fully understand how RocketMQ is deployed within the group.

Therefore, in 2016, we had to implement the application release code of message-oriented middleware as required by the deployment platform. Although the deployment platform greatly improves the O&M efficiency and enables one-click release, there are still a lot of problems. For example, when the release logic changes, the corresponding code on the deployment platform needs to be modified and the deployment platform itself requires an upgrade to support us, which is not a cloud-native solution.

Likewise, some operations, such as replacing faulty servers and scaling clusters, still involve manual work, such as shifting traffic and confirming that accumulated data has been drained. We tried to integrate more of the O&M logic of message-oriented middleware into the deployment platform. However, it is not a good idea to write our own business code in another team's project. Therefore, we want to use Kubernetes to implement an operator for the message-oriented middleware, and to use the multi-replica capability of cloud disks to reduce machine costs and the complexity of master-replica O&M.

After some discussion, the internal team was chosen to take charge of building the cloud-native application O&M platform. With the help of the experience concerning middleware deployment platforms and cloud-native technology stacks, several breakthroughs in the automatic O&M of stateful applications have been made.

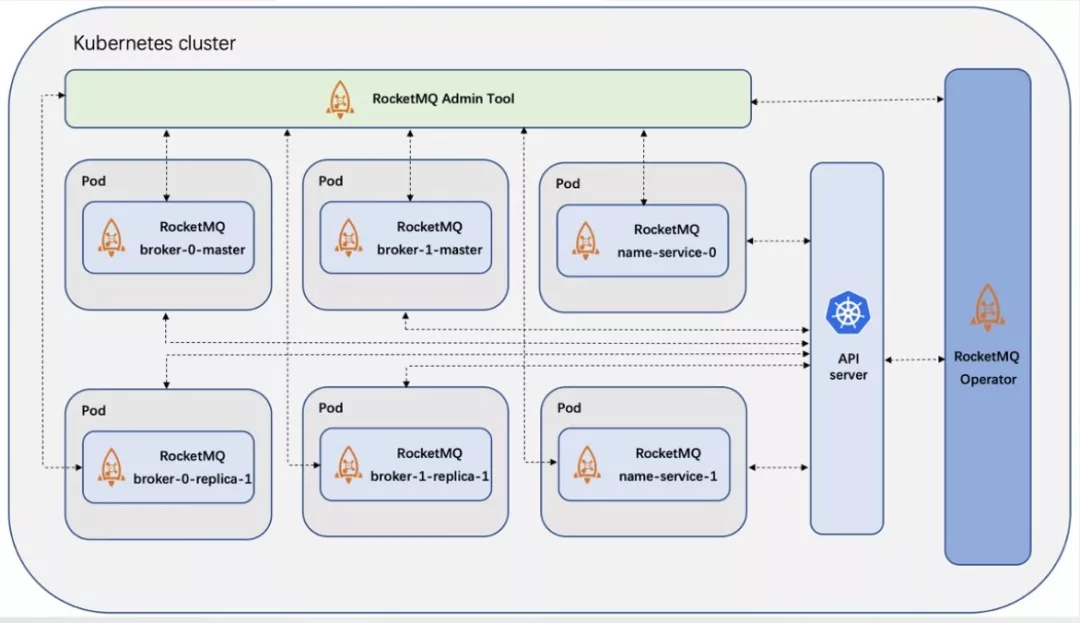

The overall implementation solution is shown in the preceding figure. The business model of the message-oriented middleware is abstracted as a custom resource definition (CRD). The release and deployment logic that used to live on the middleware deployment platform is moved down into the operator of the message-oriented middleware, which is hosted on the internal Kubernetes platform. This platform is responsible for container production, initialization, and baseline deployment for all online environments within Alibaba Group, while shielding all details of the IaaS layer.

The Operator takes on all the logic of creating clusters, scaling up, scaling down, and migration. This includes automatically generating the broker name and configuration file for each Pod, setting the various switches that each cluster function requires, and synchronously replicating metadata. Some operations that used to be manual, such as observing traffic during traffic shifting and checking accumulated data before taking a broker offline, are also integrated into the Operator. When O&M logic changes, we only need to modify our own Operator instead of relying on a general-purpose implementation.

All the replica Pods shown in the figure are removed in the final online deployment. Kubernetes expects the instances in a cluster to be identical and independent of one another. In the master-replica deployment of the message-oriented middleware, by contrast, each master Pod is strictly paired with a replica Pod, and bringing them online or offline must follow a strict order. This type of deployment is not recommended in the Kubernetes system. Keeping the old architecture would make instance control complex and hard to manage. We also wanted to adopt more of the Kubernetes O&M philosophy.

ECS instances with high-speed cloud disks replicate data at the underlying storage layer, which ensures data availability. In addition, high-speed cloud disks can fully support synchronous flushing in MQ. Therefore, the previous asynchronous flushing can be changed to synchronous flushing to avoid message loss during message writing. In the cloud-native mode, all instance environments are the same. With container technology and Kubernetes, any instance fault, including faults caused by downtime, can be recovered from automatically and quickly.

With data reliability and service availability ensured, the entire cloud-native architecture is streamlined. It involves only a broker rather than a master broker and replica broker.

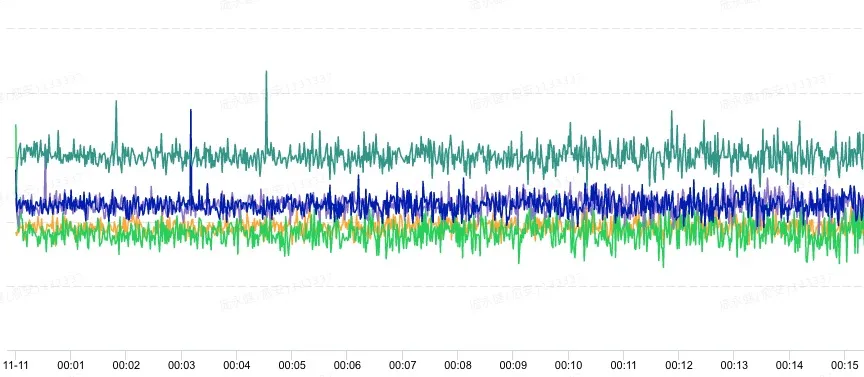

The preceding figure shows the response time (RT) on the day of Double 11 after RocketMQ went live on Kubernetes. The RT was stable, as expected, which marked a critical milestone for our cloud-native practice.

Since the launch of RocketMQ, the original upper-layer protocol logic has been reused to fully support various key services including the core link of Alibaba Group business middle ground transaction messages. At the same time, it enables various business sides to implement an imperceptible switch to RocketMQ while benefiting from diverse characteristics of the more stable and powerful RocketMQ.

At present, the number of business sides that subscribe to core transactional messages in the business middle ground is continuously increasing. As businesses become more intricate, the configuration of message subscriptions becomes more complicated, as does the computational logic that transaction clusters use for filtering. Some of these business sides use the old protocol logic (Header filtering) and some use SQL filtering from RocketMQ.

Currently, most of the RocketMQ machine costs during Alibaba Group's promotion come from the clusters related to transaction messages. During the peak hours of Double 11, the peak value of the transaction cluster is proportional to the peak transaction value. Together with the additional computing logic for CPU filtering brought by annually increasing complicated subscriptions, the transaction clusters witness the fastest growth during the promotion.

For various reasons, most business sides use Header filtering. Internally, it is implemented as an Aviator expression. Most of the filtering expressions used by the business sides of the transaction clusters specify a condition such as MessageType == XXXX. The Aviator source code shows that this condition eventually calls Java's String.compareTo().

Transaction messages carry a large number of MessageType values from different businesses, at least several thousand of them. As transaction business processes grow more complex, the number of MessageType values increases significantly. The peak volume of transaction messages grows in proportion to the transaction peak. Coupled with increasingly complex filtering, the cost of the transaction clusters is very likely to increase exponentially with the transaction peak in the future. Therefore, we decided to optimize this part.

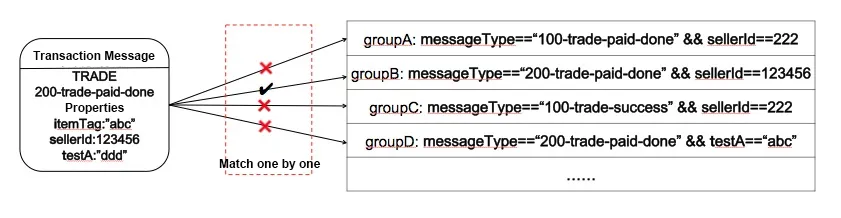

The original filtering process is as follows. Each transaction message needs to match the subscription expression of different groups one by one. If the transaction message matches the expression, the machine in the corresponding group is selected for delivery, as shown in the following figure.

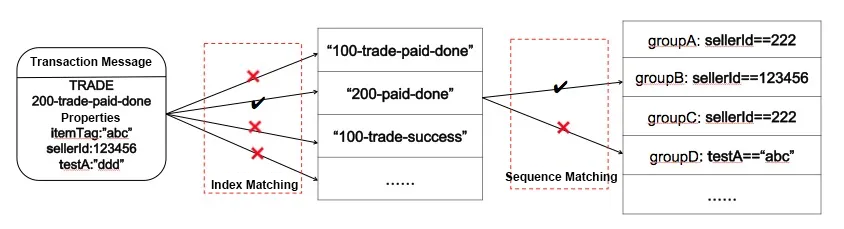

The optimization of this process is inspired by database indexing. For the original process, the filtering expressions of all subscribers can be regarded as records of the database. Each message filtering is equivalent to a database query with a specific condition, which selects out all records (filtering expressions) that match the query (message) as the result. To speed up the query, MessageType can be selected as an index field for indexing. As such, the MessageType primary index is first matched in each query. Then, the records matched with the primary index are rematched by other conditions (such as by sellerId and testA in the following figure). The optimization process is shown as follows:

Once the above optimization process is determined, pay attention to two technical points:

For the first point, Aviator's compilation process needs to be hooked. The Aviator source code shows that its compiler is a typical recursive descent parser, so after the MessageType field is extracted, the short circuit of the parent expression must also be handled.

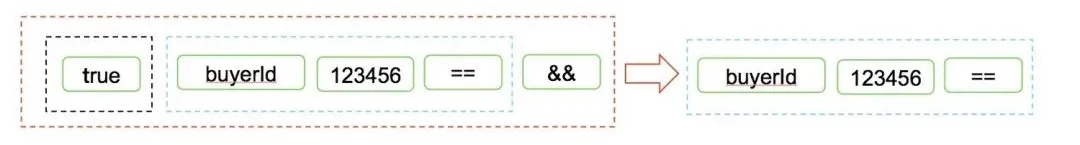

Once a condition of the form messageType==XXX is extracted during compilation, the original messageType==XXX condition is replaced with one of two constants, true or false. The expression is then short-circuited for each of the two cases to obtain the optimized expression that remains after extraction.

Example:

Expression:

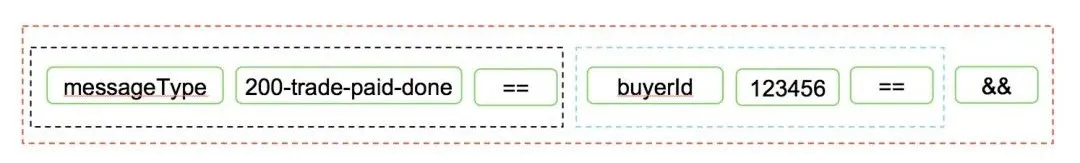

messageType=='200-trade-paid-done' && buyerId==123456

Two sub-expressions are extracted:

Sub-expression 1 (messageType==200-trade-paid-done): buyerId==123456

Sub-expression 2 (messageType!=200-trade-paid-done): false

Specifically, in the implementation of Aviator, expression compilation builds a list of tokens, as shown in the following figure. For better understanding, the green box indicates the token, and the other boxes indicate the specific combinations of conditions in the expression:

There are two cases after extracting messageType:

Now, the messageType extraction is completed. Some people may wonder why we need to take case two into consideration. The reason is that it must be used when multiple conditions are considered, for example:

(messageType=='200-trade-paid-done' && buyerId==123456) || (messageType=='200-trade-success' && buyerId==3333)

The not-equal case must be considered as well. Similarly, if multiple expressions are nested, the short-circuit calculation needs to be carried out step by step. The overall logic is similar and is not described in detail here.
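The extraction and short-circuit steps described above can be illustrated with a small, self-contained sketch. It does not use Aviator's real compiler or API. The `MessageTypeExtraction` class and its `Expr`, `Eq`, `And`, and `Or` types are hypothetical names introduced only for illustration, and only the equality case is covered. The idea is that `specialize` replaces each messageType comparison with a constant and then folds the parent expressions.

```java
/** Toy expression tree; a stand-in for a compiled filter, not Aviator's real API. */
final class MessageTypeExtraction {

    interface Expr {
        /** Resolves every messageType comparison for the given type, then short-circuits. */
        Expr specialize(String messageType);
    }

    /** Boolean constant; only the two shared instances exist, so == comparison is safe. */
    static final class Const implements Expr {
        static final Const TRUE = new Const(true);
        static final Const FALSE = new Const(false);
        private final boolean value;
        private Const(boolean value) { this.value = value; }
        public Expr specialize(String messageType) { return this; }
        @Override public String toString() { return String.valueOf(value); }
    }

    /** Leaf comparison such as buyerId==123456 or messageType=='200-trade-paid-done'. */
    static final class Eq implements Expr {
        private final String field;
        private final String literal;
        Eq(String field, String literal) { this.field = field; this.literal = literal; }
        public Expr specialize(String messageType) {
            if (!field.equals("messageType")) return this;   // other fields are kept
            return literal.equals(messageType) ? Const.TRUE : Const.FALSE;
        }
        @Override public String toString() { return field + "==" + literal; }
    }

    static final class And implements Expr {
        private final Expr left, right;
        And(Expr left, Expr right) { this.left = left; this.right = right; }
        public Expr specialize(String messageType) {
            Expr l = left.specialize(messageType);
            Expr r = right.specialize(messageType);
            if (l == Const.FALSE || r == Const.FALSE) return Const.FALSE; // false && x
            if (l == Const.TRUE) return r;                                // true && x
            if (r == Const.TRUE) return l;
            return new And(l, r);
        }
        @Override public String toString() { return "(" + left + " && " + right + ")"; }
    }

    static final class Or implements Expr {
        private final Expr left, right;
        Or(Expr left, Expr right) { this.left = left; this.right = right; }
        public Expr specialize(String messageType) {
            Expr l = left.specialize(messageType);
            Expr r = right.specialize(messageType);
            if (l == Const.TRUE || r == Const.TRUE) return Const.TRUE;    // true || x
            if (l == Const.FALSE) return r;                               // false || x
            if (r == Const.FALSE) return l;
            return new Or(l, r);
        }
        @Override public String toString() { return "(" + left + " || " + right + ")"; }
    }

    // Small factory helpers to keep call sites short.
    static Expr eq(String field, String literal) { return new Eq(field, literal); }
    static Expr and(Expr l, Expr r) { return new And(l, r); }
    static Expr or(Expr l, Expr r) { return new Or(l, r); }
}
```

Under these assumptions, specializing the two-branch expression above for `200-trade-paid-done` leaves only `buyerId==123456`, and specializing it for an unrelated type collapses the whole expression to `false`, so it never needs to be evaluated for such messages.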

Now, let's focus on the second point. For filtering efficiency, the HashMap structure can be directly used for indexing. Thus, use the value of messageType as the key of HashMap, and the extracted subexpressions as the value of the HashMap. As such, the majority of unsuitable expressions can be filtered out through one hash operation each time, which greatly improves the filtering efficiency.
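A minimal sketch of such an index follows. It is not RocketMQ's actual implementation. `MessageTypeIndex`, `subscribe`, and `match` are hypothetical names, message headers are modeled as a plain `Map<String, String>`, and the residual subexpression is modeled as a `Predicate`. Subscriptions that do not constrain messageType at all would need a separate always-checked list, which is omitted here.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Index from messageType to the subscriptions that can still match it. */
final class MessageTypeIndex {

    /** A subscriber group plus the filter left over after messageType was extracted. */
    private static final class Entry {
        final String group;
        final Predicate<Map<String, String>> residual;
        Entry(String group, Predicate<Map<String, String>> residual) {
            this.group = group;
            this.residual = residual;
        }
    }

    private final Map<String, List<Entry>> byType = new HashMap<>();

    /** Registers the residual subexpression of a group under one messageType value. */
    void subscribe(String messageType, String group, Predicate<Map<String, String>> residual) {
        byType.computeIfAbsent(messageType, k -> new ArrayList<>())
              .add(new Entry(group, residual));
    }

    /** One hash lookup discards every subscription registered for a different messageType. */
    List<String> match(Map<String, String> headers) {
        List<String> groups = new ArrayList<>();
        List<Entry> candidates =
            byType.getOrDefault(headers.get("messageType"), Collections.emptyList());
        for (Entry e : candidates) {
            if (e.residual.test(headers)) {   // only the remaining conditions are evaluated
                groups.add(e.group);
            }
        }
        return groups;
    }
}
```

Compared with matching every message against every group's full expression, the per-message cost here depends on the number of subscriptions registered for that one messageType rather than on the total number of subscriptions.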

This optimization mainly reduces CPU computation. Comparing performance before and after the optimization across different transaction clusters shows that the more complex the subscribers' subscription expressions are, the greater the benefit, which is in line with our expectations. In the best case, CPU performance improved by 32%, which greatly reduced the cost of RocketMQ machine deployment this year.

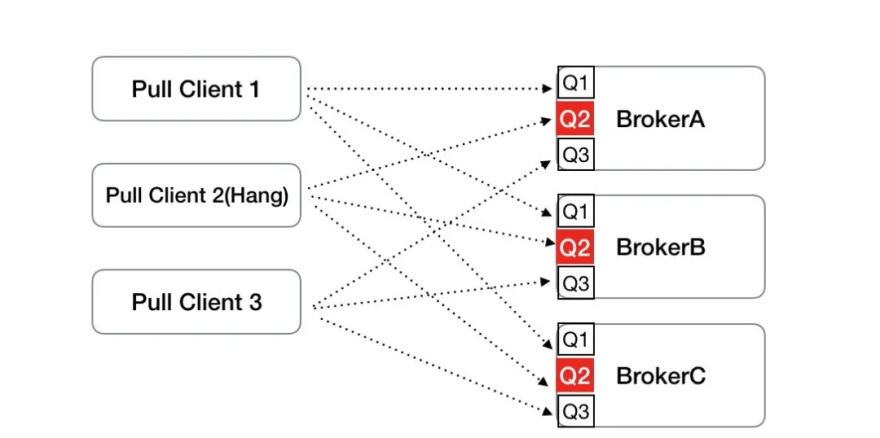

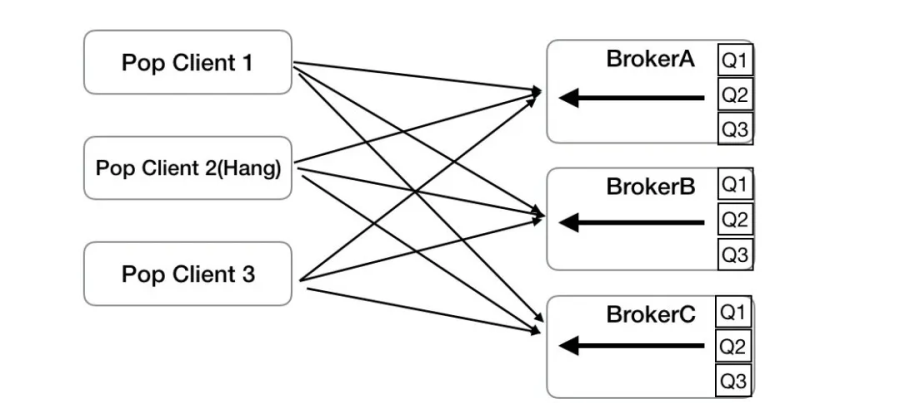

RocketMQ PULL consumption does not cope well when a client machine hangs abnormally. If the client machine hangs but stays connected to the broker, client rebalancing still allocates consumption queues to it. Because the hung machine consumes slowly or not at all, messages pile up. The same applies when the server-side broker is being released: multiple rebalance events on the client can cause issues such as consumption latency, as shown in the following figure.

When Pull Client 2 hangs, serious pileups occur on Q2 of each of the three brokers, because those queues are assigned to Pull Client 2. Therefore, a new consumption model, POP consumption, has been added to address this stability problem, as shown in the following figure:

In POP consumption, the three clients do not need rebalancing to allocate consumption queues. Instead, they request messages from all brokers through POP. Each broker allocates the messages of its three queues to the requesting POP clients according to a certain algorithm. Even if Pop Client 2 hangs, Pop Client 1 and Pop Client 3 still consume the messages of all queues on the brokers. As such, consumption pileups caused by hung machines are avoided.

The biggest difference between POP consumption and PULL consumption is that the former weakens the concept of a queue. PULL consumption requires the client to allocate the broker's queues through rebalancing so as to consume the specific queues allocated to it. For new POP consumption, the client machine directly requests each broker queue for consumption. The broker allocates the messages to the waiting machine. After the consumption is complete, the corresponding Ack results are returned to the broker, and the broker marks the message consumption results. If no response is received when time is up or the consumption fails, the consumption is retried.

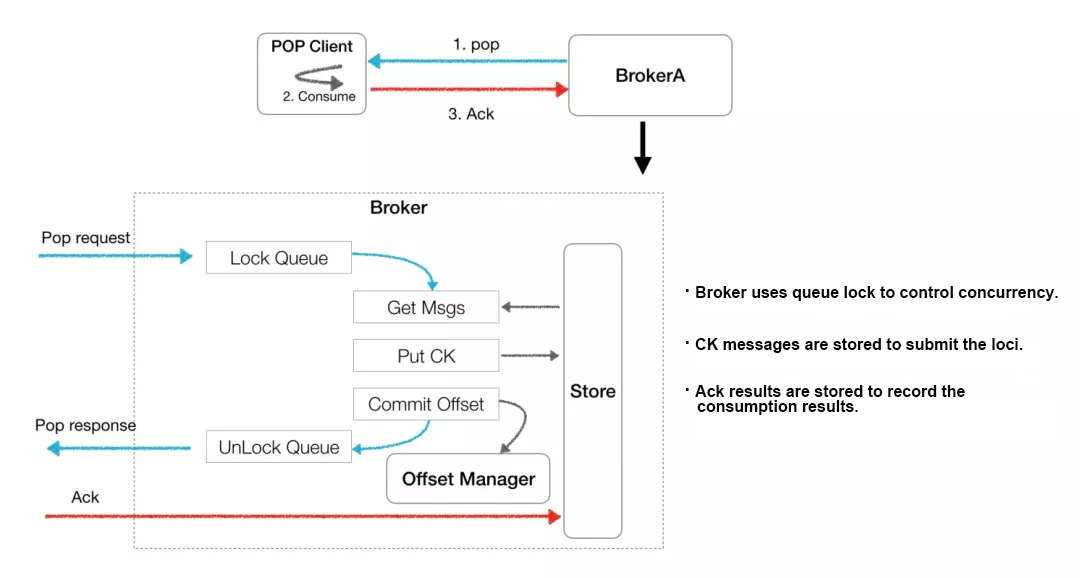

The preceding figure shows the POP consumption architecture. For each POP request, there are three steps:

The CK message is actually a timed message that records the offsets of the messages handed out by the POP request. If the client does not respond in time, the broker consumes the CK message itself and writes the messages at the recorded offsets into the retry queue. If the broker receives the Ack result from the client, it deletes the corresponding CK message and decides whether to retry based on the result.
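The interplay of POP, CK, and Ack can be sketched with a small in-memory model. This is not RocketMQ's broker code. `PopBrokerSketch` and its methods are hypothetical names, a `HashMap` from offset to deadline stands in for the timed CK message, and time is passed in explicitly so the behavior is deterministic.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

/** In-memory model of one broker queue served through POP. */
final class PopBrokerSketch {
    private final List<String> queue = new ArrayList<>();           // index = offset
    private final Deque<Integer> retryQueue = new ArrayDeque<>();   // offsets to redeliver
    private final Map<Integer, Long> checkpoints = new HashMap<>(); // offset -> deadline ("CK")
    private int nextOffset = 0;

    void put(String body) { queue.add(body); }

    String body(int offset) { return queue.get(offset); }

    /** Hands out one offset and records a checkpoint; returns -1 when nothing is available. */
    int pop(long now, long invisibleMs) {
        Integer offset = retryQueue.poll();          // retries are served first in this sketch
        if (offset == null) {
            if (nextOffset >= queue.size()) return -1;
            offset = nextOffset++;
        }
        checkpoints.put(offset, now + invisibleMs);  // the CK expires at this deadline
        return offset;
    }

    /** Client reported a result: drop the checkpoint, and retry if consumption failed. */
    void ack(int offset, boolean success) {
        if (checkpoints.remove(offset) != null && !success) {
            retryQueue.add(offset);
        }
    }

    /** An expired checkpoint means the client never answered, so the message is retried. */
    void reviveExpired(long now) {
        Iterator<Map.Entry<Integer, Long>> it = checkpoints.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<Integer, Long> ck = it.next();
            if (ck.getValue() <= now) {
                retryQueue.add(ck.getKey());
                it.remove();
            }
        }
    }
}
```

In this model a hung client only delays the messages it has already popped until their checkpoints expire. Other clients keep popping from the same queue in the meantime, which is why no rebalance is needed.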

The overall process shows that no rebalance is required for POP consumption, which can avoid the consumption latency caused by rebalancing. At the same time, the client can consume all queues of the broker, thus avoiding pileups caused by hung machines.
