The tenth Double 11 Shopping Festival in 2018 was a huge success: many records were smashed, and gross merchandise value (GMV) exceeded $30.8 billion. The surge in transaction volume recorded at midnight during this Double Eleven demonstrated not only Alibaba's breakthroughs in database technology but also the resilient spirit of Alibaba's technologists, whose goal is to turn the "impossible" into the "possible" with each breakthrough.

Prior to the 2018 Double 11 Shopping Festival, Alibaba Tech launched a forum called "A Decade of Stories Around Coders". Key technical experts who had supported Double Eleven over the past decade were invited to review Alibaba's technical transformation. In this article, Zhang Rui, a researcher from Alibaba's Database Division, shares the story of the database technologies behind Double 11 over the past ten years.

Zhang Rui, a researcher from Alibaba's Database Division

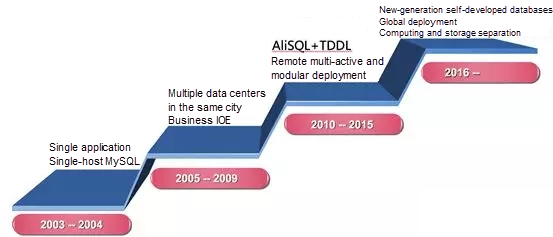

Alibaba's database team has been on a mission to lead the transformation of database technology in China for the past decade. From commercial databases to open source databases and then to self-developed databases, we have worked hard toward this goal.

The history of the development of Alibaba's databases can be divided into the following phases:

• Phase I (2005 to 2009): the era of commercial databases.

• Phase II (2010 to 2015): the era of open source databases.

• Phase III (2016 until now): the era of self-developed databases.

The era of commercial databases is also known as the era of IBM, Oracle, and EMC (IOE), and "De-IOE" became a movement late in this phase. DBAs and business developers jointly built the distributed database middleware TDDL and the open source database AliSQL (Alibaba's MySQL branch), and adopted high-performance x86 servers and SSDs. Together, these efforts led to the successful replacement of Oracle commercial databases, IBM minicomputers, and EMC high-end storage, which opened the era of open source databases.

De-IOE was significant for the following reasons:

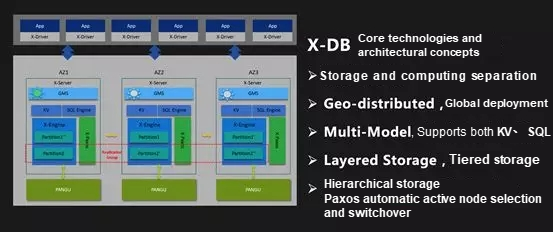

In 2016, we started developing our own database product, code-named X-DB. Why did we want to develop our own database? Some of the reasons are as follows:

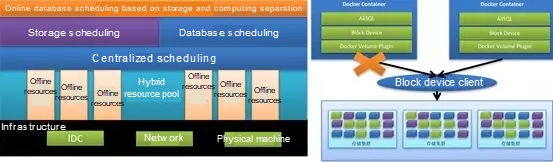

For each Double Eleven, preparing machine resources is critical, and reducing their cost has long been a challenge for Alibaba's technologists. The first solution is to use cloud resources. Since early 2016, the database team has been trying to use high-performance ECS instances for Double Eleven. After years of refinement, by Double Eleven 2018 we could directly use ECS instances on the public cloud and connect VPC instances to the Group's internal environment to form a hybrid cloud. This provides elastic capacity for the major sales campaigns during Double Eleven.

The second solution is hybrid deployment of online and offline workloads. Routine offline tasks run on online (application and database) servers, while during Double Eleven, online applications use offline computing resources. To achieve this elasticity, the core technical problem for the database is the separation of storage and computing. Once storage and computing are separated, the database can run on offline computing resources during Double Eleven, giving it excellent elasticity. By combining cloud resources with hybrid deployment, we can greatly reduce the cost of supporting peak transaction traffic during Double Eleven.

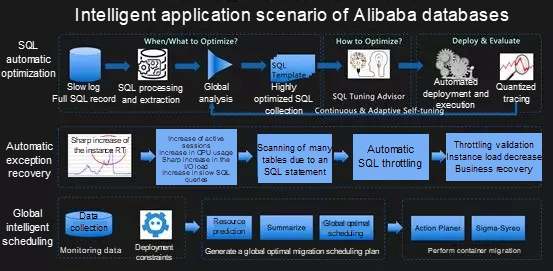

Another important technical trend in Double Eleven preparation is intelligence. Integrating databases with intelligence, as in the self-driving database, is another development goal. In 2017, we used intelligent technology for automatic SQL optimization for the first time. In 2018, we set out to extend automatic SQL optimization and automatic space optimization across the entire network. We hope this will reduce the workload of DBAs, improve developer efficiency, and effectively improve database stability. We believe that, in the future, machines will take over more of the work during Double Eleven.

I have been supporting Double Eleven since 2012. Having led the database team and, for a time, the technical support department, I have many interesting stories to share with you.

2012: My first experience on Double Eleven

I began supporting Double Eleven in 2012; before that, I worked on the B2B database team. In early 2012, all of the Group's infrastructure teams were merged into the technical support department, then led by Liu Zhenfei. I had no prior experience with Double Eleven, yet in this first year, Hou Yi (director of the database team) appointed me team leader, which put tremendous pressure on me. Back then, we started preparing for Double Eleven in May or June. Our most important tasks were to identify risks and accurately assess the load on each database.

We also needed to convert the inbound traffic into the QPS of each business system, and then convert each business system's QPS into database QPS. In 2012, there was no full-link stress testing technology, so to identify potential risks, we had to rely on offline tests of each business system and on review meetings hosted by the leader of each product line.
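The two-step conversion described above is essentially arithmetic. In this minimal Python sketch, the fan-out ratios (calls to each business system per inbound request, and database queries per business call) and the names `trade`, `inventory`, `trade_db`, and `inventory_db` are hypothetical placeholders, not figures from Alibaba's systems.

```python
from collections import defaultdict


def business_qps(inbound_qps: float, calls_per_request: dict[str, float]) -> dict[str, float]:
    """Convert inbound QPS into QPS for each business system.

    calls_per_request[system] is the assumed average number of calls that
    one inbound request makes to `system` (a hypothetical fan-out ratio).
    """
    return {system: inbound_qps * ratio for system, ratio in calls_per_request.items()}


def database_qps(biz_qps: dict[str, float],
                 queries_per_call: dict[str, dict[str, float]]) -> dict[str, float]:
    """Convert business-system QPS into QPS for each database.

    queries_per_call[system][db] is the assumed number of queries that one
    call to `system` sends to database `db`.
    """
    totals: dict[str, float] = defaultdict(float)
    for system, qps in biz_qps.items():
        for db, n in queries_per_call.get(system, {}).items():
            totals[db] += qps * n
    return dict(totals)


# Illustrative numbers only.
biz = business_qps(100_000, {"trade": 1.0, "inventory": 0.5})
db = database_qps(biz, {
    "trade": {"trade_db": 3, "inventory_db": 1},
    "inventory": {"inventory_db": 2},
})
print(biz)  # {'trade': 100000.0, 'inventory': 50000.0}
print(db)   # {'trade_db': 300000.0, 'inventory_db': 200000.0}
```

Comparing each estimated database QPS with the capacity measured in offline tests gives a rough view of which databases are at risk. Without full-link stress testing, such an estimate is only as accurate as the assumed ratios.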

At that time, we could not predict whether everything would go smoothly, and no one wanted the business they were responsible for to fall short. However, machine resources were limited, so we had to rely on each leader's experience, which put every team leader under a lot of pressure. I remember that the chief director of Double Eleven preparation in 2012 was Li Jin. People said he was so stressed that he could not sleep at night. To cope, he would drive to the top of Longjing Mountain at night and nap in his car.

What about me? It was my first year preparing for Double Eleven, and with no experience, I was quite anxious. Fortunately, I had a group of reliable teammates who had been through de-IOE and had long supported business development. They had solid knowledge of both the business architecture and database performance. With their help, I gradually came to understand the architecture of the entire transaction system, which proved very helpful in my later work.

After months of work, we were fully prepared for Double Eleven that year. Still, with so much uncertainty, we were very uneasy. When the clock hit 00:00, the worst happened: the load on the inventory database exceeded its capacity, and inbound traffic saturated the NIC of the IC (commodity) database. I clearly remember staring at the database monitoring metrics, unable to do a thing. One detail stands out: the traffic was so far beyond our expectations that the monitoring showed the database load had exceeded its threshold.

At that crucial moment, Li Jin, the chief technical director, yelled, "Calm down!" We looked up and saw the transaction volume climbing steadily to new highs, which calmed us a little. Neither the saturated inbound traffic to the IC database nor the overloaded inventory database had been anticipated, and in the end, all we could do was ride out the peak at 00:00.

Because of these problems, a large amount of overselling occurred during Double Eleven in 2012, causing major losses for the company. After Double Eleven, colleagues from the inventory, commodity, and refund teams and the related database teams worked overtime for two weeks to deal with the consequences of overselling. Many of my friends also complained about how terrible the user experience was during the peak period. We were determined to resolve these problems before the next Double Eleven.

2013: Inventory hotspot optimization and unremarkable shadow tables

After Double Eleven 2012, we started improving the performance of the inventory database. Inventory deduction is a typical hotspot problem: many users compete to deduct the inventory of the same commodity (to the database, a commodity's inventory is simply one row), and concurrent updates to the same row are controlled by the row lock. We found that performance was excellent when a single thread (a queue) updated a row, but when multiple threads updated the same row concurrently, the performance of the entire database degraded significantly.
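At the SQL level, a single inventory deduction is typically one conditional `UPDATE` on the commodity's row. The minimal Python sketch below uses the standard-library `sqlite3` module and a hypothetical `inventory` table; the `stock >= ?` guard makes a deduction that arrives after the stock runs out affect zero rows instead of overselling. SQLite locks the whole database rather than a single row, so this sketch shows only the shape of the statement, not the row-lock contention described above.

```python
import sqlite3


def create_inventory(conn: sqlite3.Connection, item_id: int, stock: int) -> None:
    # Hypothetical schema: one row per commodity holds its remaining stock.
    conn.execute(
        "CREATE TABLE inventory (item_id INTEGER PRIMARY KEY, stock INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO inventory (item_id, stock) VALUES (?, ?)", (item_id, stock))
    conn.commit()


def deduct(conn: sqlite3.Connection, item_id: int, qty: int) -> bool:
    """Deduct `qty` units in one statement; return False rather than oversell."""
    cur = conn.execute(
        "UPDATE inventory SET stock = stock - ? WHERE item_id = ? AND stock >= ?",
        (qty, item_id, qty),
    )
    conn.commit()
    # rowcount is 0 when the guard failed, i.e. too little stock was left.
    return cur.rowcount == 1


def remaining(conn: sqlite3.Connection, item_id: int) -> int:
    row = conn.execute(
        "SELECT stock FROM inventory WHERE item_id = ?", (item_id,)
    ).fetchone()
    return row[0]


conn = sqlite3.connect(":memory:")
create_inventory(conn, item_id=42, stock=3)
print([deduct(conn, 42, 1) for _ in range(5)])  # [True, True, True, False, False]
print(remaining(conn, 42))                      # 0
```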

To resolve this problem, the database kernel team prepared two implementations: queuing and concurrency control. Each had its pros and cons, but the core idea of both was to turn disorderly contention into an orderly queue, improving the performance of hotspot inventory deduction. Through continuous improvement and comparison, both solutions eventually became mature and stable enough to meet the business's performance requirements. To be ready for any exceptions, we built both solutions into AliSQL (Alibaba's MySQL branch) and made them switchable with toggles. After a full year of work, we resolved the inventory hotspot problem in time for Double Eleven 2013, which was the first improvement in inventory performance. In 2016, we implemented another major optimization that increased inventory deduction performance tenfold compared with 2013, the second improvement in inventory performance.
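The queuing idea, turning disorderly contention into an orderly queue, can be illustrated at the application level. The following Python sketch is a simplification under stated assumptions, not AliSQL's kernel implementation: all deduction requests for one hot commodity go into a FIFO queue, and a single worker thread applies them one at a time, so only one thread ever touches the stock counter. The class name `HotRowQueue` is hypothetical.

```python
import queue
import threading
from concurrent.futures import Future, ThreadPoolExecutor


class HotRowQueue:
    """Serialize every deduction for one hot commodity through a single worker.

    Simplified application-level sketch of the queuing idea; not AliSQL code.
    """

    def __init__(self, stock: int) -> None:
        self._stock = stock
        self._requests: queue.Queue = queue.Queue()  # FIFO of (qty, Future) or None
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def _run(self) -> None:
        while True:
            item = self._requests.get()
            if item is None:  # shutdown sentinel
                return
            qty, fut = item
            # Only this thread reads or writes self._stock, so it needs no lock.
            if self._stock >= qty:
                self._stock -= qty
                fut.set_result(True)
            else:
                fut.set_result(False)

    def deduct(self, qty: int) -> Future:
        """Enqueue a deduction; the Future resolves to True if it succeeded."""
        fut: Future = Future()
        self._requests.put((qty, fut))
        return fut

    def close(self) -> int:
        """Drain queued requests, stop the worker, and return the final stock."""
        self._requests.put(None)
        self._worker.join()
        return self._stock


# 100 concurrent buyers compete for 10 units of one hot commodity.
hot = HotRowQueue(stock=10)
with ThreadPoolExecutor(max_workers=20) as pool:
    futures = list(pool.map(lambda _: hot.deduct(1), range(100)))
print(sum(f.result() for f in futures))  # 10
print(hot.close())                      # 0
```

Exactly 10 of the 100 requests succeed and no unit is oversold. The trade-off of this design is that every request waits its turn in the queue, which is cheap when the alternative is many threads repeatedly contending for the same lock.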

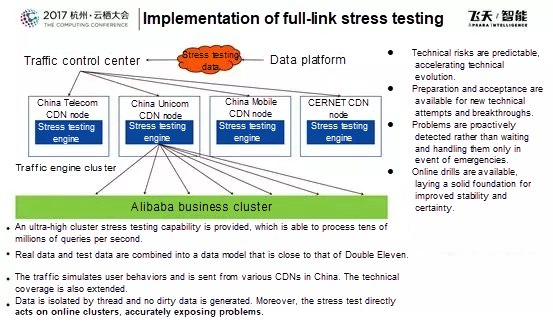

2013 was a milestone in the history of Double Eleven because of a breakthrough technology launched that year: full-link stress testing. This reminds me of Li Jin, who first proposed the concept. One day, he asked us whether we could run a full simulation test in the production environment. Everyone answered, "No way!" For the database, it seemed even more impossible. The biggest concern was how to handle the data generated by the stress testing traffic, and I had never heard of any other company doing this on a production system. If anything went wrong with the data, the consequences would be disastrous.

I remember a hot afternoon in 2013 when I was struggling with inventory database problems and Shu Tong (who was in charge of full-link stress testing) came to me to discuss the database side of the solution. The discussion centered on a "shadow table" plan: a shadow table is set up in the production system to store and process all stress testing data, and the system keeps it synchronized with the corresponding production table. We had no idea whether this plan would work. Even in the weeks before Double Eleven, few of us believed full-link stress testing could be implemented, and most of our preparation still relied on manual reviews and offline stress tests. However, thanks to everyone's hard work, we achieved a breakthrough two weeks before Double Eleven: to everyone's disbelief, the first full-link stress test succeeded.
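The article does not describe how stress-test traffic was identified or how shadow tables were named. As a minimal sketch, assume each write carries a stress-test flag and each shadow table uses a hypothetical `__shadow_` prefix; routing then reduces to choosing a table name, so test data never lands in the production table. The Python example below uses the standard-library `sqlite3` module with a hypothetical `orders` table.

```python
import sqlite3

SHADOW_PREFIX = "__shadow_"  # hypothetical naming convention for shadow tables


def target_table(table: str, is_stress_test: bool) -> str:
    """Route stress-test traffic to the shadow copy of `table`."""
    return SHADOW_PREFIX + table if is_stress_test else table


def create_with_shadow(conn: sqlite3.Connection, table: str, columns: str) -> None:
    """Create a production table and a shadow table with the same schema."""
    for name in (table, SHADOW_PREFIX + table):
        # Table names come only from trusted constants, never from user input.
        conn.execute(f"CREATE TABLE {name} ({columns})")


def insert_order(conn: sqlite3.Connection, order_id: int, amount: float,
                 is_stress_test: bool) -> None:
    table = target_table("orders", is_stress_test)
    conn.execute(f"INSERT INTO {table} (order_id, amount) VALUES (?, ?)",
                 (order_id, amount))
    conn.commit()


def count(conn: sqlite3.Connection, table: str) -> int:
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


conn = sqlite3.connect(":memory:")
create_with_shadow(conn, "orders", "order_id INTEGER PRIMARY KEY, amount REAL")
insert_order(conn, 1, 99.0, is_stress_test=False)  # real order
insert_order(conn, 2, 10.0, is_stress_test=True)   # stress-test order
insert_order(conn, 3, 20.0, is_stress_test=True)   # stress-test order
print(count(conn, "orders"), count(conn, "__shadow_orders"))  # 1 2
```

Creating both tables from the same column definition is one simple way to keep their schemas in step; the real system's synchronization mechanism is not described in the article.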

Everyone celebrated that moment, and it left a deep impression on me. In the days leading up to that Double Eleven, we ran full-link stress tests almost every night. The process was far from smooth: we ran into many faults, and a lot of incorrect data was written, which even affected the reports generated the next day. Meanwhile, the prolonged, intensive stress tests took a toll on the lifespans of machines and SSDs. Despite all these setbacks, we kept moving forward. I think this is what makes Alibaba different: we believe in our vision, and we make it a reality. Practice has since proven that full-link stress testing is the most effective tool in Double Eleven preparation.

Today, full-link stress testing is available as an Alibaba Cloud product, bringing its benefits to many more businesses.

2015: The story of the dashboard

In 2015, I became director of the technical support department, responsible for all the technical infrastructure for Double Eleven, including the IDC, network, hardware, databases, CDN, applications, and other specialized areas. This was a brand-new challenge, and this time I was stumped by a new problem: the dashboard.

In 2015, we held our first Tmall Double Eleven Party. It took place, together with the media center, at the Water Cube in Beijing rather than at the Alibaba Campus in Hangzhou, and the media center would run a 26-hour global live broadcast with the world watching the gala night. This required close cooperation between colleagues in Beijing and Hangzhou and posed extreme challenges.

The most important moments for the media live-broadcast dashboard are 00:00, when Double Eleven starts, and 24:00, when it ends. At these moments, the GMV on the dashboard must be as close to real time as possible. That year, to improve the onsite experience at the Water Cube and the interaction with the general command center in Hangzhou, we prepared a countdown segment before 00:00. In the general command center at the Bright Summit of the Alibaba Campus in Hangzhou, Daniel Zhang would kick off Double Eleven, and the live broadcast would then switch directly to our media dashboard. This required virtually zero latency for the GMV figure, a tremendous challenge. Worse, in the first full-link stress test, latency reached tens of seconds. Liu Zhenfei, the director of the campaign, insisted that the first GMV figure be displayed within five seconds, meaning the real-time transaction amount had to be shown accurately within five seconds. Once again, no one thought this was possible.

At that time, the show's directing team proposed a fallback: display a number-flipping animation with a fake figure at 00:00, then switch to the actual transaction volume once we were ready. For Alibaba's staff, however, every figure on the dashboard had to be real and accurate, so this plan was out of the question. Everyone focused on how to bring the latency below five seconds.

That night, all the teams involved formed a dashboard task force and began tackling the technical problems. Even a single figure on the dashboard involved the full link: application and database logs, real-time computing, storage, and presentation. In the end, our hard work paid off: the first GMV figure appeared on the dashboard within three seconds of 00:00, well within the five-second requirement. In addition, to rule out any failure, we ran two redundant links. Like the two engines of an airplane, both links computed the figures at the same time, so we could switch between them in real time.
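The dual-link redundancy described above can be sketched as a selection rule. The article does not say how the switchover was decided, so this Python sketch assumes each link reports its latest GMV figure with a timestamp and a health flag, and that a figure older than the five-second budget counts as stale. The names `LinkReading` and `pick_gmv` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LinkReading:
    """Latest GMV figure reported by one computing link (hypothetical shape)."""
    gmv: float
    timestamp: float  # seconds; when this figure was computed
    healthy: bool = True


def pick_gmv(primary: LinkReading, backup: LinkReading,
             now: float, max_lag: float = 5.0) -> Optional[float]:
    """Return the figure to display, switching links when needed.

    Prefer the primary link; fall back to the backup when the primary is
    unhealthy or its figure is more than `max_lag` seconds old. Return None
    when neither link is usable, so the dashboard keeps its last value.
    """
    for reading in (primary, backup):
        if reading.healthy and now - reading.timestamp <= max_lag:
            return reading.gmv
    return None


primary = LinkReading(gmv=100.0, timestamp=10.0)
backup = LinkReading(gmv=99.0, timestamp=12.0)
print(pick_gmv(primary, backup, now=12.0))  # 100.0 (primary is fresh)
print(pick_gmv(primary, backup, now=16.0))  # 99.0 (primary lags 6 s)
print(pick_gmv(primary, backup, now=30.0))  # None (both links stale)
```

Because both links compute continuously, switching costs only a comparison at display time. A production version would also need to keep the two links' figures consistent so that a switchover never makes the displayed GMV jump backward, which this sketch ignores.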

The audience might never imagine how many stories and technical challenges lie behind each figure on the dashboard. But that is Double Eleven: many different links, built with contributions from every Alibaba employee.
