By Mofeng

This article describes how the SRE team of Alibaba Serverless Infrastructure (ASI), the unified infrastructure designed by Alibaba for cloud-native applications, explored ways to build grayscale release capabilities for the ASI infrastructure in large-scale cluster scenarios under the Kubernetes system.

ASI emerged when Alibaba Group was fully migrating to the cloud. While carrying the cloud-native transformation of much of Alibaba Group's infrastructure, ASI's own architecture and form have also kept evolving.

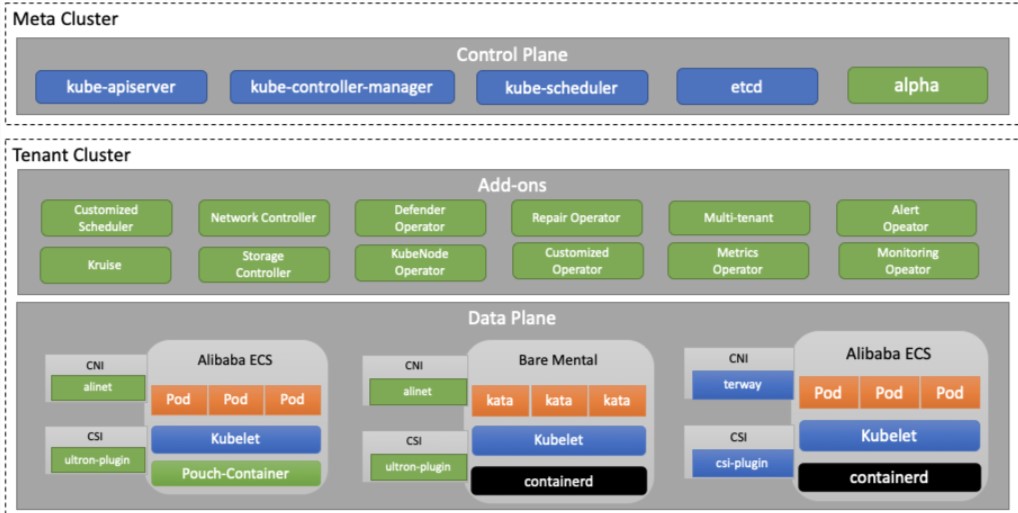

ASI mainly adopts a Kube-on-Kube architecture: a core Kubernetes meta cluster is maintained at the bottom, and the control-plane components of each tenant cluster, including APIServer, controller-manager, scheduler, and etcd, are deployed on it. In each business cluster, various add-on components, such as controllers and webhooks, are deployed to jointly support ASI capabilities. As for data-plane components, some ASI components are deployed on nodes as DaemonSets, while others are deployed as RPM packages.

Meanwhile, ASI carries hundreds of clusters and hundreds of thousands of nodes in the group and sales area scenarios. Even in the early days of ASI construction, the nodes under its control already numbered in the tens of thousands. With the rapid development of the ASI architecture, component changes and online changes are frequent and varied. In the early days, hundreds of component changes could be made to ASI in a single day. A faulty change to any of the basic components of ASI, such as the CNI plug-in, CSI plug-in, etcd, or Pouch, may bring down a whole cluster and cause irretrievable losses to the upper-layer business.

In short, large cluster size, large numbers of components, frequent changes, and complex business forms are the major challenges for building grayscale and change systems at ASI or other Kubernetes infrastructure layers. At that time, several change systems were already available for ASI or Sigma within Alibaba, but they all had certain limitations.

Therefore, we hoped to learn from the history of several generations of Sigma/ASI release platforms. Starting at change time, we set out to construct the grayscale system for ASI and build the O&M change platform on the Kubernetes technology stack, relying mainly on system capabilities and supplementing them with process specifications. By doing so, the stability of thousands of large-scale clusters can be ensured.

The development of the architecture and forms of ASI will affect the way its grayscale system is built. Therefore, we made the following bold assumptions about the future forms of ASI in the early stage of ASI development:

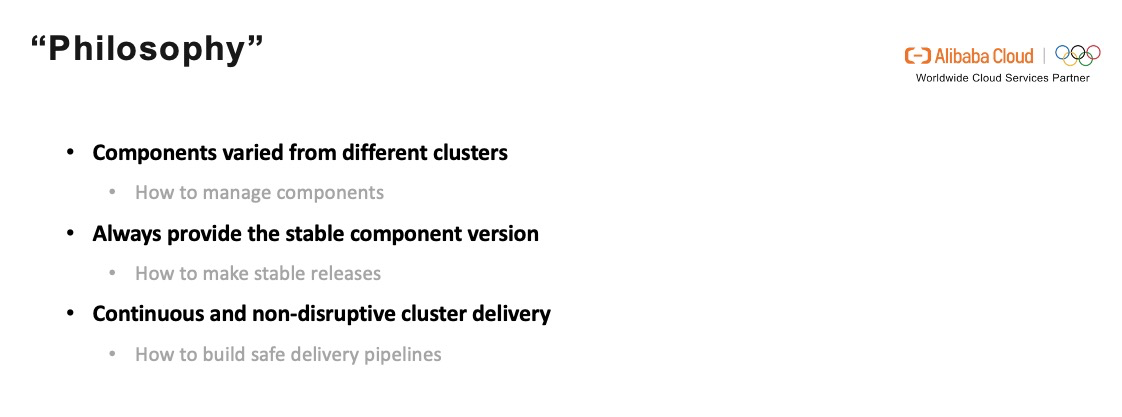

Based on the preceding assumptions, we can solve the following urgent problems in the initial stage of ASI construction:

Let's switch away from the cluster perspective and try to solve the complexity of change from the component perspective. The lifecycle of a component can be divided into the requirement, design, R&D, and release phases. For each phase, we want to standardize the work and build fixed specifications into the system, based on the characteristics of Kubernetes itself, so that the grayscale process is guaranteed by system capabilities.

Based on the shape of ASI and the particularity of change scenarios, we have developed a grayscale system for ASI from the following ideas:

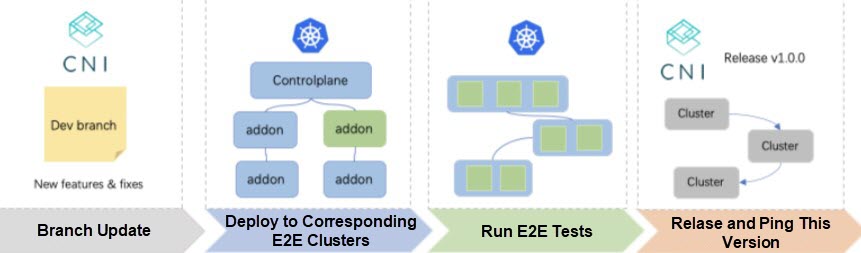

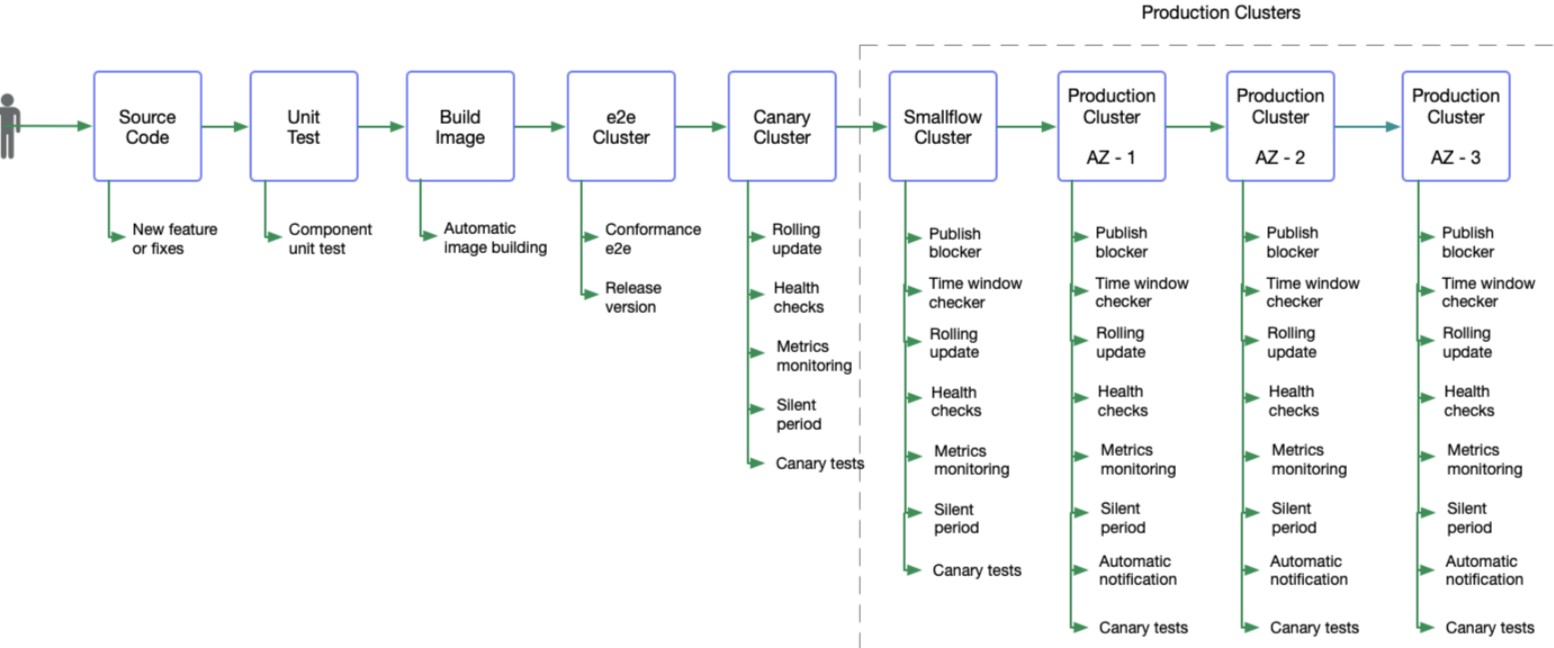

The R&D process of the core components of ASI is summarized below:

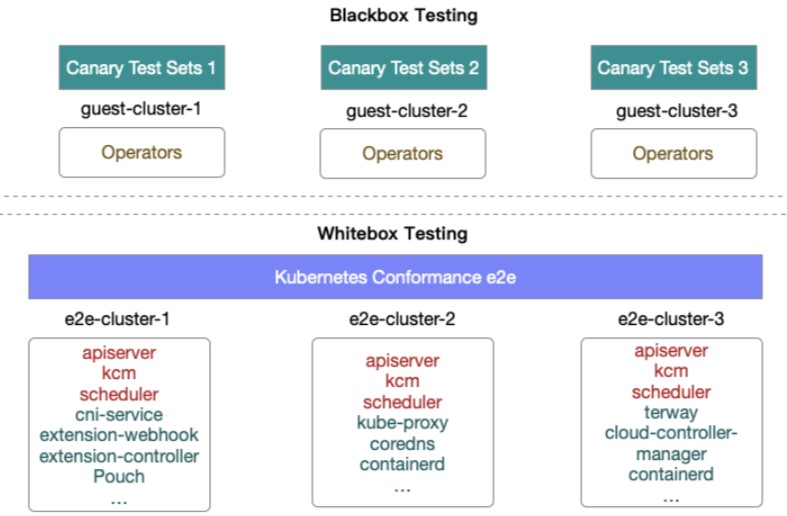

For the core components of ASI itself, we have built the e2e testing process for ASI components jointly with the quality technology team. In addition to the unit tests and integration tests of each component, we have set up a separate e2e cluster to routinely run the overall ASI functional verification and e2e tests.

From the perspective of a single component, after a new function is developed, the code is reviewed and merged into the develop branch, which immediately triggers the e2e process. After the image is built through the chorus (cloud-native test platform) system, the component is deployed by ASIOps (the ASI O&M and control platform) to the corresponding e2e cluster, where the standard Kubernetes conformance suite is run to verify that the functions within Kubernetes are working. Only when all test cases pass can the version of the component be marked as ready for rollout. Otherwise, subsequent releases are restricted.

However, as mentioned above, the open architecture of Kubernetes means that, beyond core components such as control and scheduling, cluster functions also rely on upper-layer operators. Therefore, Kubernetes white-box tests cannot cover all application scenarios of ASI, and changes to the underlying component functions can significantly affect the upper-layer operators. So, on top of the white-box conformance tests, we have added black-box test cases that cover the functional verification of various operators, such as scaling initiated from the upper-layer PaaS and quota validation on the release path, and they run regularly in the clusters.

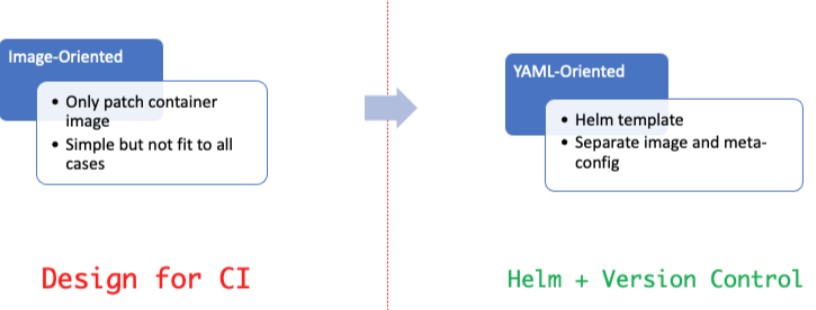

Given the large number of ASI components and clusters, we have expanded the existing asi-deploy functions by taking components as the entry point, extending the management of components across multiple clusters from image management to YAML management.

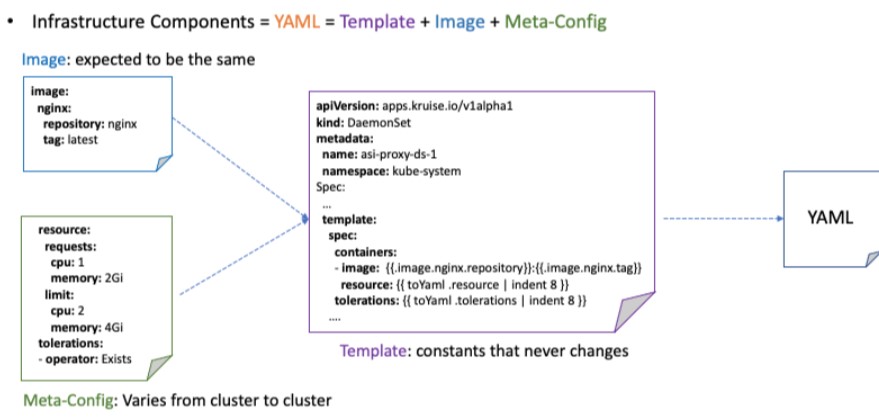

Based on Helm templates, we split the YAML file of a component into the template, the image, and the meta-configuration, which carry the following information:

A complete YAML file is therefore rendered from the template, image, and meta-configuration. ASIOps manages the image and configuration information of the YAML file along the cluster dimension and the time dimension (multiple versions). It then computes how the component's current versions are distributed across the many clusters and whether the component's version is consistent within a single cluster.
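The rendering and version-distribution steps can be illustrated with a minimal Python sketch. It is not the ASIOps implementation: the template text, the `render` helper, and the cluster names are hypothetical, and `string.Template` stands in for a real Helm template.

```python
from collections import Counter
from string import Template

# Hypothetical template; a real one would be a Helm chart template.
TEMPLATE = Template(
    "apiVersion: apps/v1\n"
    "kind: Deployment\n"
    "metadata:\n"
    "  name: $name\n"
    "spec:\n"
    "  replicas: $replicas\n"
    "  template:\n"
    "    spec:\n"
    "      containers:\n"
    "      - name: $name\n"
    "        image: $image\n"
)


def render(name, image, meta):
    """Combine the template, image, and meta-configuration into one YAML text."""
    return TEMPLATE.substitute(name=name, image=image, **meta)


def version_distribution(cluster_images):
    """Count how many clusters run each image version."""
    return Counter(cluster_images.values())


def inconsistent_clusters(cluster_images, expected):
    """List the clusters whose image differs from the expected version."""
    return sorted(c for c, img in cluster_images.items() if img != expected)


if __name__ == "__main__":
    print(render("demo", "registry.example/demo:v2", {"replicas": 3}))
    images = {"cluster-a": "v1", "cluster-b": "v2", "cluster-c": "v2"}
    print(version_distribution(images))
    print(inconsistent_clusters(images, "v2"))
```

Keeping the image and the meta-configuration as separate inputs is what allows each of them to be versioned and compared per cluster.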

For image versions, we systematically promote consistency to ensure that no online issues are caused by outdated versions. For configuration versions, in contrast, we reduce complexity through management and prevent erroneous configurations from being delivered to clusters.

With the basic prototype of the component in place, we hope that a release is more than just replacing the image fields in the workload. The YAML now contains configuration other than the image, so changes other than image changes must be supported as well. Therefore, we try to deliver YAML in a way that stays as close as possible to the kubectl apply command.

Three types of YAML specifications are recorded:

For a YAML built from the image, meta-configuration, and template, we take all three of these specs and perform a diff to get the resource diff patch. Then, the system filters out dangerous fields that are not allowed to be changed and finally sends the overall patch to the APIServer in the form of a strategic merge patch or a merge patch. This triggers the workload to re-enter reconciliation, which changes the status of this workload in the cluster.
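The diff-and-filter step can be sketched as follows. This is a simplified illustration under stated assumptions: the three inputs are named `last_applied`, `live`, and `target` here for convenience, only nested dictionaries are merged (lists are replaced whole, unlike a real strategic merge patch), and the protected path `spec.selector` is a hypothetical example of a dangerous field.

```python
import json


def three_way_patch(last_applied, live, target):
    """Build a JSON-merge-patch-style dict from three specs (dicts only)."""
    patch = {}
    # Fields to set: anything in the target that differs from the live object.
    for key, want in target.items():
        have = live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            prev = last_applied.get(key)
            sub = three_way_patch(prev if isinstance(prev, dict) else {}, have, want)
            if sub:
                patch[key] = sub
        elif want != have:
            patch[key] = want
    # Fields to delete: applied previously, but no longer present in the target.
    for key in last_applied:
        if key not in target and key in live:
            patch[key] = None  # null removes the key in a merge patch
    return patch


def drop_protected(patch, protected, prefix=""):
    """Remove dotted paths that must never be changed by a release."""
    cleaned = {}
    for key, value in patch.items():
        path = f"{prefix}{key}"
        if path in protected:
            continue
        if isinstance(value, dict):
            sub = drop_protected(value, protected, path + ".")
            if sub or not value:
                cleaned[key] = sub
        else:
            cleaned[key] = value
    return cleaned


if __name__ == "__main__":
    last = {"spec": {"replicas": 2, "paused": True, "selector": {"app": "x"}}}
    live = {"spec": {"replicas": 2, "paused": True, "selector": {"app": "x"}, "extra": 1}}
    want = {"spec": {"replicas": 3, "selector": {"app": "y"}}}
    patch = drop_protected(three_way_patch(last, live, want), {"spec.selector"})
    print(json.dumps(patch))  # body that would be sent as a merge patch
```

Fields that exist only on the live object (`extra` above) are left untouched, which is the behaviour that makes this style of delivery close to kubectl apply.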

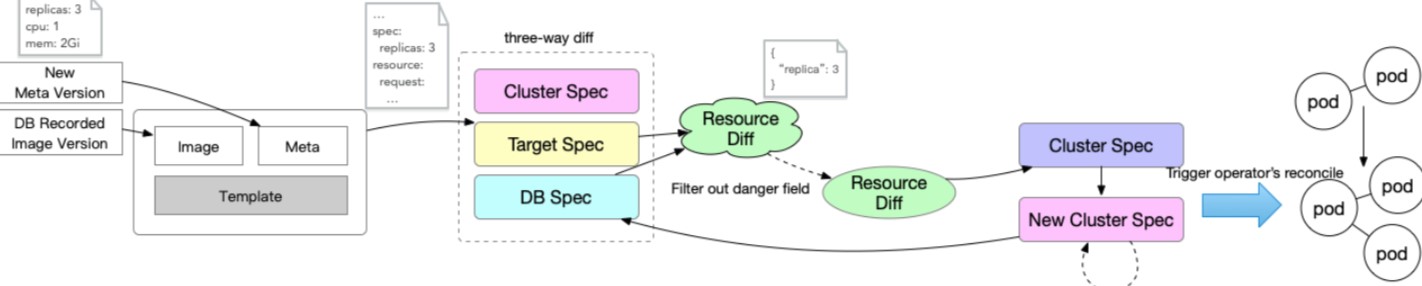

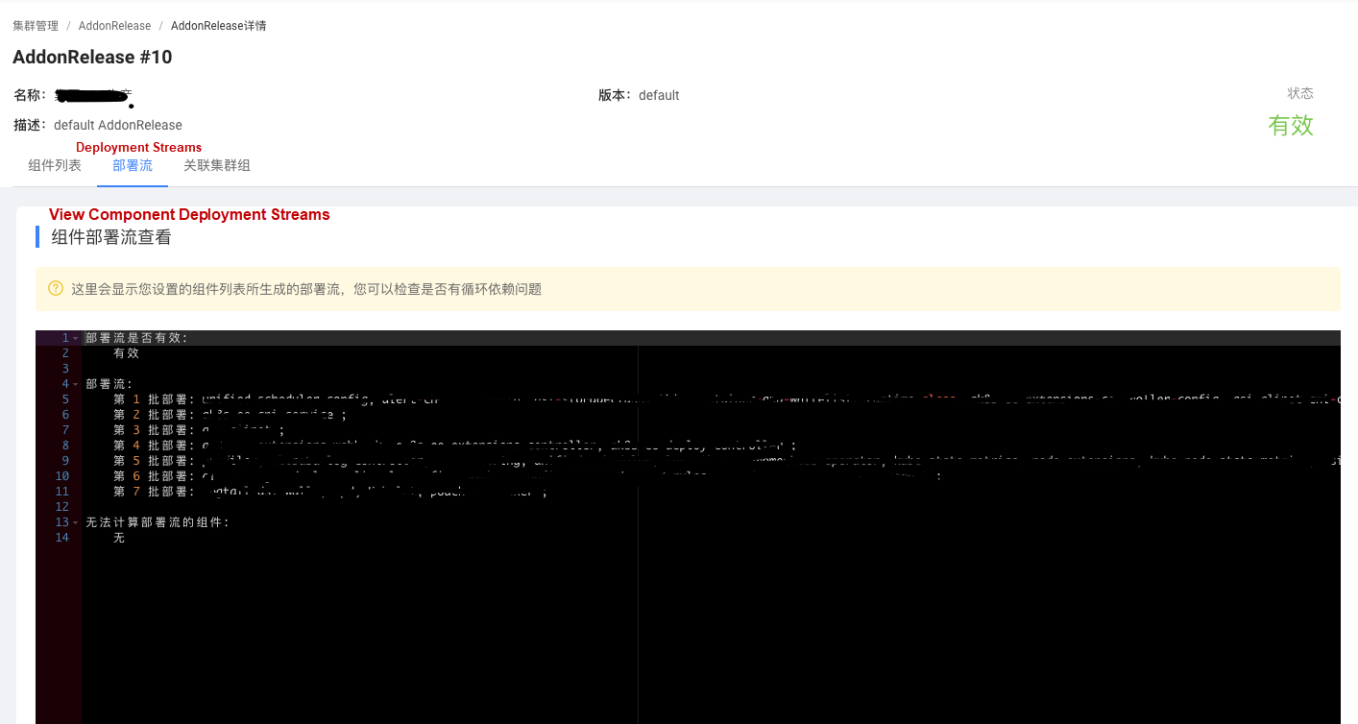

In addition, because ASI components are strongly correlated, many scenarios require multiple components to be released at a time, for example, when a cluster is initialized or an overall release is made. We therefore added the concept of Addon Release on top of single-component deployment. An Addon Release represents the release version of the entire ASI as a collection of components, and a deployment flow is generated automatically from the dependencies of each component. This ensures that the overall release process does not involve circular dependencies.
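Generating a deployment flow from component dependencies is essentially a topological sort with cycle detection. A minimal sketch using Python's standard `graphlib` module is shown below; the dependency map is a hypothetical example, not the actual ASI dependency graph.

```python
from graphlib import CycleError, TopologicalSorter


def release_order(dependencies):
    """Return components in an order that releases dependencies first.

    `dependencies` maps a component to the set of components that must be
    released before it. A circular dependency raises ValueError.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # The detected cycle is the second element of the exception args.
        raise ValueError(f"circular dependency: {err.args[1]}") from err


if __name__ == "__main__":
    # Hypothetical dependencies for illustration only.
    deps = {
        "scheduler": {"apiserver"},
        "apiserver": {"etcd"},
        "etcd": set(),
    }
    print(release_order(deps))
```

Rejecting a cyclic graph before the release starts is cheaper than discovering the cycle halfway through a multi-component rollout.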

In the cloud-native environment, we describe the deployment of applications by their final state, while Kubernetes provides the ability to maintain various workloads. The Operator compares the current state of a workload with its final state and reconciles the difference. In other words, the release or rollback of a workload is a process-oriented workflow inside an end-state-oriented system, and it can be handled by the release strategy that the Operator defines.

Compared with the application workloads at the upper layer of Kubernetes, grayscale release strategies and the ability to pause a grayscale release matter even more for the underlying infrastructure components. Regardless of its type, every component must be able to stop a release promptly while it is in progress, leaving more time for feature testing, decision-making, and rollback. Specifically, these capabilities can be grouped into the following categories:

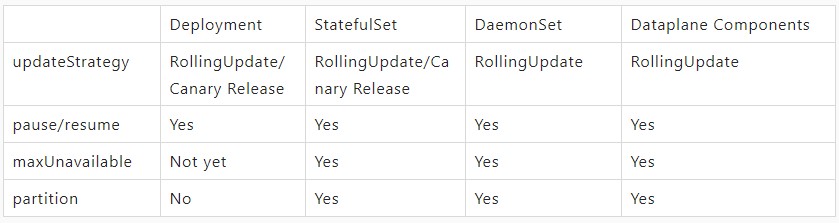

ASI enhances Kubernetes-native workload and node capabilities. Based on Operators in the cluster, such as Kruise and KubeNode, working together with the upper-layer ASIOps, we support the grayscale capabilities for Kubernetes infrastructure components mentioned above. For components of the Deployment, StatefulSet, DaemonSet, and data-plane types, the following capabilities are supported when publishing in a single cluster:

The following sections briefly describe how grayscale is implemented for components of different workload types. For more details, see the OpenKruise project and, in the future, the open-source KubeNode project.

Most Kubernetes Operators are deployed as a Deployment or StatefulSet. During the release of an Operator, all of its replicas are upgraded once the image field is changed. If the new version has a problem, the damage can be irreparable.

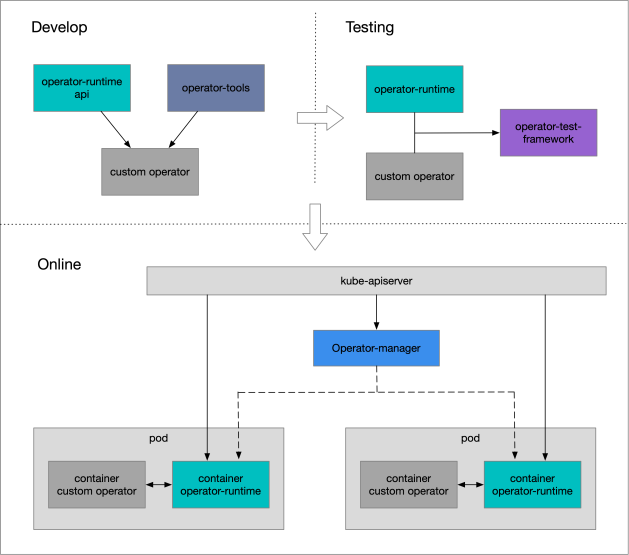

For such Operators, we separate controller-runtime from them to build a centralized component, operator-manager, which corresponds to controller-mesh in the open-source implementation of OpenKruise. At the same time, an operator-runtime sidecar container is added to each Operator pod, and it provides the core capabilities of the Operator to the main container of the component through a gRPC interface.

After the Operator establishes a Watch connection to the APIServer, events are monitored and transformed into a stream of tasks to be reconciled by the Operator, known as the Operator's traffic. The operator-manager centrally manages the traffic for all Operators, shards the traffic according to rules, and distributes it to different operator-runtimes. Then, a workqueue in the runtime triggers the actual reconciliation task of the Operator.

During the grayscale release, the operator-manager can split the traffic of the Operator between the old-version and new-version replicas by namespace and by hash sharding. The grayscale release can therefore be verified by inspecting the workloads processed by the two sets of replicas to check whether the new version is faulty.
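The traffic split can be illustrated with a small routing function. This is only a sketch of the idea: the rule format (a set of canary namespaces plus a percentage), the `route` function, and the choice of SHA-256 are assumptions for illustration and do not reflect the operator-manager or controller-mesh API.

```python
import hashlib


def route(namespace, name, canary_namespaces, canary_percent):
    """Decide whether a reconcile request goes to the 'new' or 'old' replicas.

    Requests from explicitly listed namespaces always go to the new version.
    Everything else is hashed into 100 buckets, and the first
    `canary_percent` buckets are sent to the new version.
    """
    if namespace in canary_namespaces:
        return "new"
    digest = hashlib.sha256(f"{namespace}/{name}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "new" if bucket < canary_percent else "old"


if __name__ == "__main__":
    # Hypothetical workloads; the same object always maps to the same side.
    for ns, obj in [("canary-ns", "web"), ("prod", "web"), ("prod", "db")]:
        print(ns, obj, route(ns, obj, {"canary-ns"}, 10))
```

Because the hash is stable, a given workload stays with the same Operator version for the whole grayscale phase, which keeps the comparison between old and new replicas meaningful.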

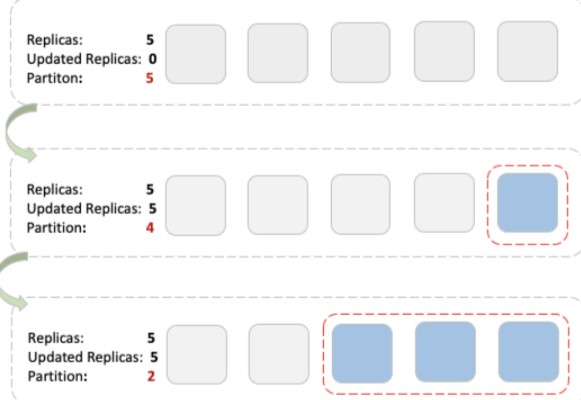

The native DaemonSet supports RollingUpdate, but its rolling upgrade only supports maxUnavailable, which is unacceptable for ASI, where a single cluster can have thousands of nodes. Once the image is updated, all DaemonSet Pods are upgraded and the process cannot be paused. The Pods are protected only by the maxUnavailable strategy. If a buggy version of the DaemonSet is released and its process still starts normally, maxUnavailable offers no protection either.

In addition, the community provides the onDelete mode, in which a Pod is deleted manually so that a new Pod is created, with the release order and grayscale controlled centrally by the release platform. This mode cannot form a closed loop within a single cluster, and all the pressure is pushed up to the release platform. Evicting Pods from the upper-layer release platform is also risky. The best approach is for the workload itself to provide component update capabilities in a closed loop. Therefore, we have strengthened the DaemonSet in Kruise to support the aforementioned important grayscale release capabilities.

The following is a basic example of Kruise Advanced DaemonSet:

    apiVersion: apps.kruise.io/v1alpha1
    kind: DaemonSet
    spec:
      # ...
      updateStrategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 5
          partition: 100
          paused: false

The partition indicates the number of Pods that retain the image of the earlier version. During a rolling upgrade, once only that many Pods remain on the old version, no further Pods are updated to the new image. In the upper layer, ASIOps lowers the partition value step by step to roll the DaemonSet forward, combines it with the other updateStrategy parameters to control the grayscale progress, and performs targeted verification on the newly created Pods.
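How an upper-layer controller might step the partition down can be sketched as below. The function name and the batch sizes are hypothetical; the only behaviour taken from the text is that partition is the number of Pods kept on the old version.

```python
def partition_steps(total_pods, batch_sizes):
    """Return the successive partition values for a batched rollout.

    Each step lowers the partition by one batch, so that many more Pods
    may move to the new image. A final step of 0 finishes the rollout
    when the listed batches do not cover every Pod.
    """
    steps = []
    remaining = total_pods
    for size in batch_sizes:
        remaining = max(remaining - size, 0)
        steps.append(remaining)
        if remaining == 0:
            break
    if remaining > 0:
        steps.append(0)
    return steps


if __name__ == "__main__":
    # 100 Pods, released as 1, then 9, then 40, then the rest.
    print(partition_steps(100, [1, 9, 40]))
```

Between two steps the controller would wait for verification of the newly created Pods, and setting `paused: true` halts the rollout without changing the partition.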

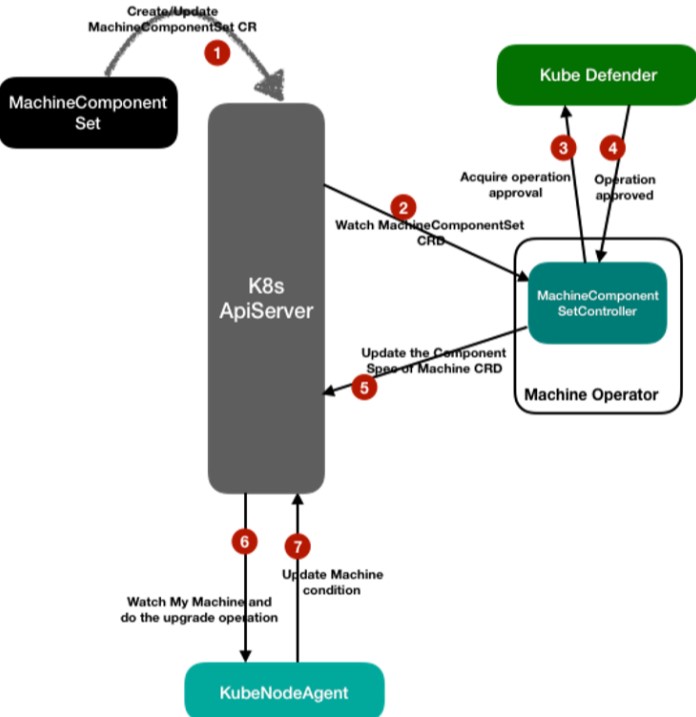

MachineComponentSet is a workload of the KubeNode system. Node components in ASI that live outside of Kubernetes cannot be released with Kubernetes workloads, so components such as Pouch, Containerd, and Kubelet are released through this workload.

Node components are represented by MachineComponent, a Kubernetes custom resource. It contains information such as the installation script and environment variables for a specific version of a node component, such as pouch-1.0.0.8. MachineComponentSet, in turn, is the mapping between node components and sets of nodes, indicating that these machines must have this version of the node component installed. The central Machine-Operator reconciles this mapping: it compares the component version on each node with the target version in the final state and tries to install the specified version of the node component.

For grayscale release, the design of MachineComponentSet is similar to that of Advanced DaemonSet. MachineComponentSet provides the RollingUpdate mode, which includes the partition and maxUnavailable settings. The following is an example of a MachineComponentSet:

    apiVersion: kubenode.alibabacloud.com/v1
    kind: MachineComponentSet
    metadata:
      labels:
        alibabacloud.com/akubelet-component-version: 1.18.6.238-20201116190105-cluster-202011241059-d380368.conf
        component: akubelet
      name: akubelet-machine-component-set
    spec:
      componentName: akubelet
      selector: {}
      updateStrategy:
        maxUnavailable: 20%
        partition: 55
        pause: false

In the cluster, the upper-layer ASIOps interacts with the Machine-Operator when controlling the grayscale upgrade of node components: it modifies related fields, such as the partition field in the MachineComponentSet, to drive the rolling upgrade.
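The way partition and maxUnavailable jointly limit a node-component rollout can be sketched as follows. This is not the Machine-Operator's logic: the function names are hypothetical, and rounding a percentage up is an assumption made for this example.

```python
def resolve_max_unavailable(value, total):
    """Accept an integer or a percentage string such as '20%'.

    Assumption: percentages are rounded up to a whole number of nodes.
    """
    if isinstance(value, str) and value.endswith("%"):
        return (total * int(value[:-1]) + 99) // 100
    return int(value)


def nodes_to_upgrade(node_versions, target, partition, max_unavailable, upgrading=0):
    """Pick the nodes that may start installing the target version now.

    `partition` nodes must stay on the old version, and at most
    `max_unavailable` nodes may be upgrading at the same time.
    """
    total = len(node_versions)
    outdated = sorted(n for n, v in node_versions.items() if v != target)
    allowed_by_partition = max(len(outdated) - partition, 0)
    budget = max(resolve_max_unavailable(max_unavailable, total) - upgrading, 0)
    return outdated[: min(allowed_by_partition, budget)]


if __name__ == "__main__":
    # Ten hypothetical nodes, all on the old version.
    nodes = {f"n{i}": "1.0" for i in range(10)}
    print(nodes_to_upgrade(nodes, "1.1", partition=7, max_unavailable="20%"))
```

Lowering the partition widens the set of nodes that are eligible at all, while maxUnavailable caps how many of them change at the same moment.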

Compared with the traditional node component release mode, the KubeNode system also synchronizes the lifecycle of nodes into the Kubernetes cluster and pushes the control of grayscale release down into the cluster. This reduces the pressure on the central platform to manage node metadata.

Alibaba has established an in-house change red line 3.0 for cloud and basic services. The red line sets requirements for batch grayscale, control intervals, observability, suspension, and rollback of change operations on control-plane and data-plane components. However, grayscale of changed objects by region unit does not meet the requirements of the complex scenarios of ASI. So, we try to refine the types of change units to which control-plane and data-plane changes on ASI belong.

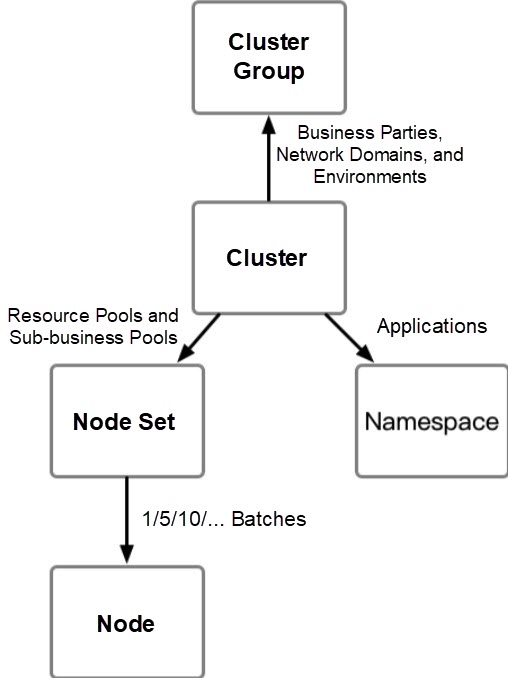

Taking the cluster as the basic unit and abstracting upward and downward from it, we obtain the following basic units:

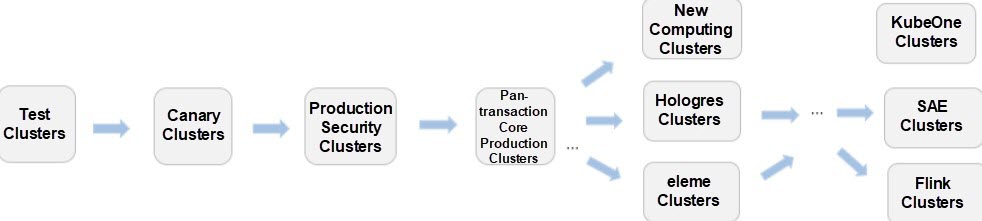

For each release mode of a control component or node component, we follow the principle of minimum blast radius and arrange the corresponding grayscale units in series. This way, the grayscale process is built into the system. During a release, component developers must follow the process and deploy unit by unit. In the orchestration process, the following factors are mainly considered:

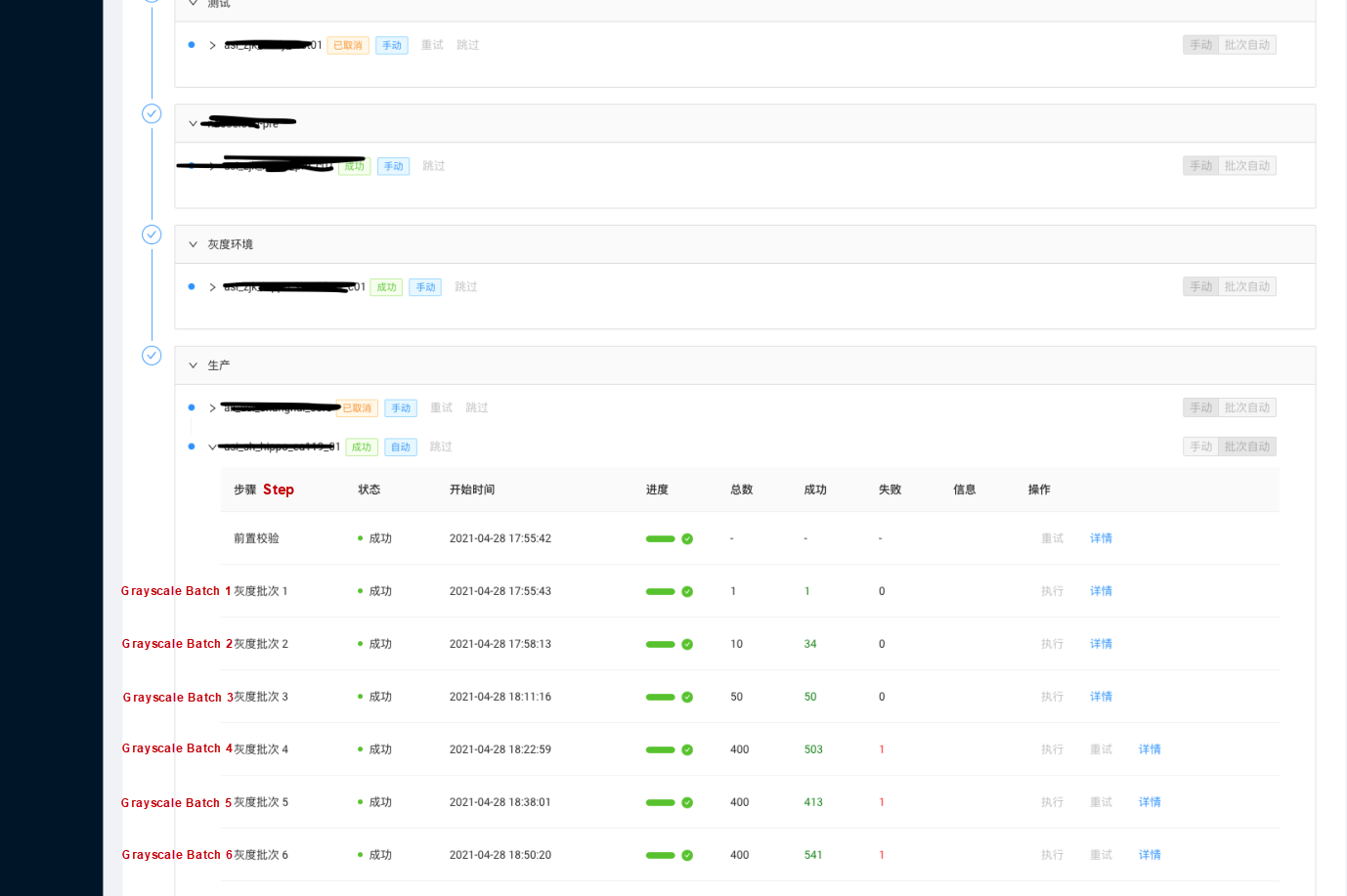

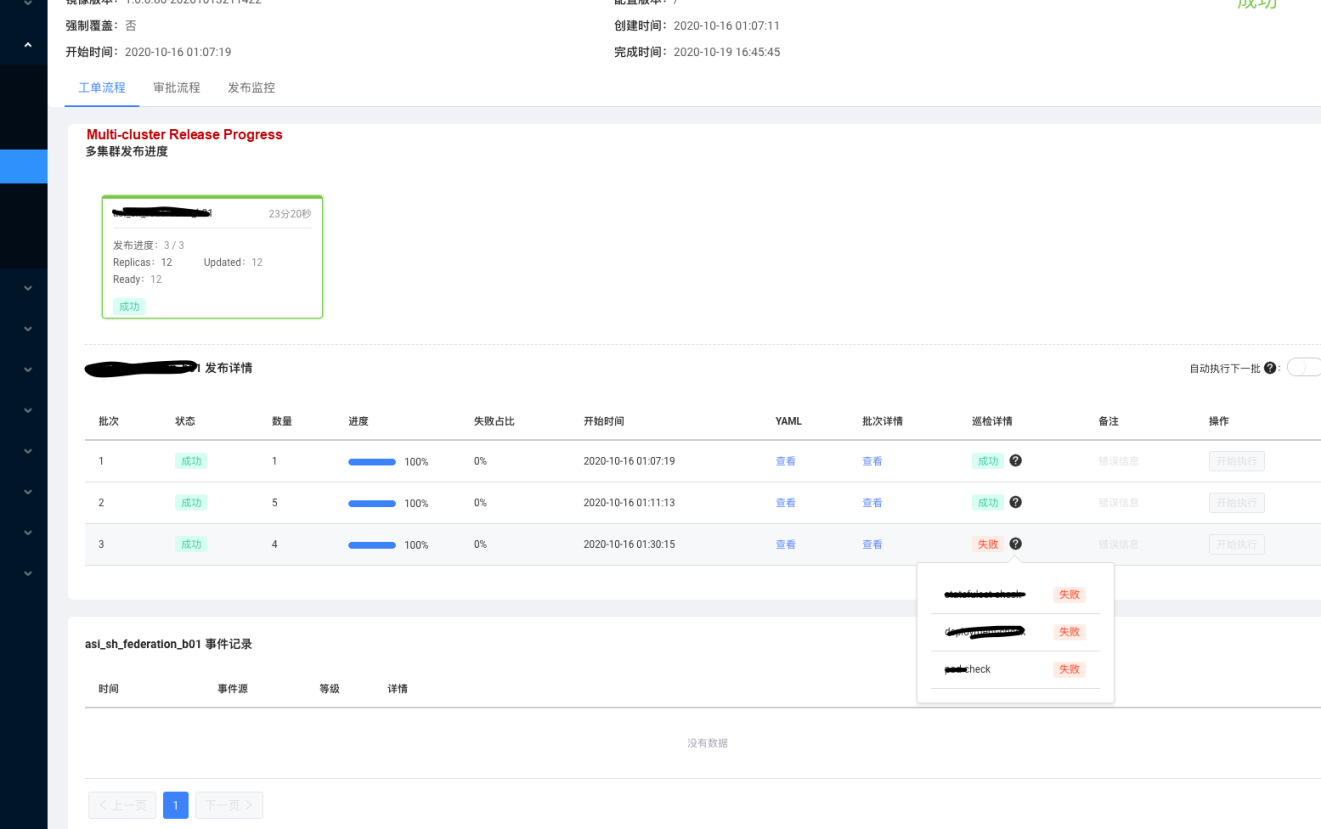

In addition, we score the weight of each unit and orchestrate the dependencies between the units. For example, the following is the release pipeline of the ASI monitoring component. Since the same solution is used in all ASI scenarios, it is rolled out to all ASI clusters. During this process, the component is first verified in the pan e-commerce trading clusters, then released to second-party clusters within the group VPC, and finally published to the sales area clusters.

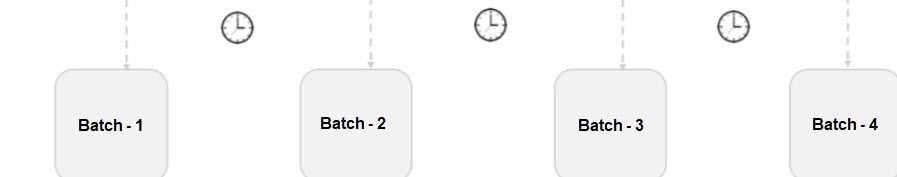

In each cluster, the component is released in phases, in one, five, or ten batches, following the grayscale approach within a single cluster that was discussed in the previous section.
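Ordering grayscale units by blast radius can be sketched with a simple scoring function. The two factors used here (node count and a criticality level) and the unit names are hypothetical stand-ins, since the real weights depend on the factors considered during orchestration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    name: str
    nodes: int        # hypothetical size factor
    criticality: int  # hypothetical scale: 1 (test) to 5 (core business)


def blast_radius(unit):
    """Score a unit; a larger score means a failure would hurt more."""
    return unit.nodes * unit.criticality


def orchestrate(units):
    """Return unit names ordered from the smallest blast radius to the largest."""
    ordered = sorted(units, key=lambda u: (blast_radius(u), u.name))
    return [u.name for u in ordered]


if __name__ == "__main__":
    units = [
        Unit("sales-area", nodes=1000, criticality=5),
        Unit("e2e-test", nodes=10, criticality=1),
        Unit("group-vpc", nodes=100, criticality=3),
    ]
    print(orchestrate(units))
```

Sorting on a computed score keeps the ordering rule explicit and reviewable instead of being encoded in a hand-maintained list.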

After the grayscale units are orchestrated, we obtain the basic skeleton of a component's rollout pipeline. For each grayscale unit in the skeleton, we try to enrich its pre-checks and post-checks, so that the success of the grayscale can be confirmed after each release and a faulty change can be blocked effectively. Moreover, we set a silence period for each batch to allow enough time for post-verification to run and for component developers to verify the result. Currently, the pre- and post-verification of each batch includes:
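The control flow of pre-check, release, silence period, and post-check can be sketched as a small loop. All names here (`run_pipeline`, `ChangeBlocked`, the check callables) are hypothetical; the sketch only shows how a failed check stops the remaining units from being touched.

```python
import time


class ChangeBlocked(Exception):
    """Raised when a check fails and the remaining units must not be released."""


def run_pipeline(units, release, pre_checks, post_checks,
                 silence_seconds=0, sleep=time.sleep):
    """Release units one by one, blocking the change on the first failed check."""
    done = []
    for unit in units:
        for check in pre_checks:
            if not check(unit):
                raise ChangeBlocked(f"pre-check failed on {unit}; completed: {done}")
        release(unit)
        sleep(silence_seconds)  # silence period before post-verification
        for check in post_checks:
            if not check(unit):
                raise ChangeBlocked(f"post-check failed on {unit}; completed: {done}")
        done.append(unit)
    return done


if __name__ == "__main__":
    released = []
    try:
        run_pipeline(
            ["unit-a", "unit-b", "unit-c"],
            release=released.append,
            pre_checks=[lambda u: True],
            post_checks=[lambda u: u != "unit-b"],  # simulate a fault on unit-b
        )
    except ChangeBlocked as err:
        print("blocked:", err)
    print("released:", released)
```

Passing `sleep` as a parameter keeps the silence period testable without actually waiting.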

By connecting the whole multi-cluster release process in series, we obtain the complete flow of events for a component, from development and testing to online release. The following figure shows the entire process:

For the implementation of pipeline orchestration, we evaluated the existing Tekton and Argo projects in the community. However, much of the logic in our release process is not suitable for execution in containers alone, our requirements during a release go beyond CI/CD, and both community projects were still unstable when we began the design. Therefore, we built our own implementation on the basic design of Tekton, including task, taskrun, pipeline, and pipelinerun, and kept our design direction in line with the community. In the future, we will move the implementation closer to the community in a more cloud-native way.

After nearly a year and a half of construction, ASIOps currently hosts nearly a hundred control clusters and about a thousand business clusters, including ASI clusters, multi-tenant virtual clusters, and Sigma 2.0 virtual clusters, as well as more than 400 components, including ASI core components and second-party components. Meanwhile, ASIOps contains nearly 30 rollout pipelines suitable for different release scenarios of ASI and service providers.

Moreover, there are nearly 400 component changes every day, including image changes and configuration changes, and more than 7,900 rollouts have gone through the pipelines. To improve release efficiency, we have enabled automatic grayscale within a single cluster when sufficient pre- and post-checks are in place. Currently, this capability is used by most ASI data-plane components.

The following is an example of rolling out a component version through ASIOps:

The batch grayscale release on ASIOps and the change blocking by post-checks also help us prevent certain faults caused by component changes. For example, during a grayscale release of the Pouch component, a cluster became unavailable because of a version incompatibility. The condition was discovered by the post-check after the release, and the grayscale process was blocked.

Most of the components on ASIOps are the underlying infrastructure components of ASI/Kubernetes. There have been no faults caused by component changes over the past year and a half. We strive to codify the specifications through system capabilities to reduce and prevent changes that violate the change red line. Thus, the occurrence of faults is gradually shifted to the right, from low-level faults caused by changes to complex faults caused by bugs in the code itself.

With the increasing coverage of ASI, ASIOps, as its control platform, needs to meet the challenges of more complex scenarios, larger clusters, and more components.

First, we urgently need to resolve the trade-off between stability and efficiency. Once the number of clusters managed by ASIOps reaches a certain level, a single component rollout takes considerable time. We hope to hand changes over to the platform entirely after sufficient pre- and post-verification is built. In other words, the platform can roll out components within the release scope automatically and block faulty changes effectively, realizing CI/CD automation at the Kubernetes infrastructure layer.

Meanwhile, we currently need to orchestrate grayscale units manually to determine their order. In the future, we want to build metadata for the entire ASI, and filter, score, and orchestrate all units within each release scope automatically.

Finally, ASIOps currently supports grayscale release only for component-related changes, while changes within ASI go far beyond components. The grayscale system should be general-purpose, so grayscale pipelines need to be extended to other scenarios, such as resource O&M and the execution of contingency plans.

In addition, the grayscale release capabilities of the control platform are not tightly coupled with Alibaba-internal systems. They are built entirely on workloads such as Kruise and KubeNode. In the future, we will explore ways to open source the entire set of capabilities and contribute it to the community.
