Luogic Show is a company that provides knowledge services such as talk shows and podcasts and advocates the concept of "time-saving acquisition of knowledge". How does its technical team put this time-saving concept into technical practice? This article describes how the Luogic Show technology team built its full link stress testing. Let's find out.

The more visible a service is, the greater the pressure on its technical team. Any technical problem may be magnified, especially for a service whose users have high expectations of their knowledge-acquisition experience.

Ensuring service availability and stability is the top priority for the technical team and one of its main technical challenges. For example, Luogic Show provides knowledge services to users worldwide who learn in fragmented time on high-speed trains, subways and buses, who may open the App early in the morning or late at night, and who may be located overseas. The App therefore has to deliver stable, high-performance service around the clock.

In the production environment, every user request travels a link from the CDN through the access layer, front-end applications, backend services, cache, storage and middleware, and every part of that link faces uncertain traffic. Whether the system runs on a public cloud, a private cloud, a hybrid cloud or a self-built IDC, identifying global bottlenecks and exploring and planning overall service capacity both require a highly realistic full link stress test. Uncertain traffic here means irregular, unpredictable traffic caused by large promotional activities, regular high-concurrency periods and other unplanned events.

As we all know, an app's service status depends not only on its own stability but also on traffic and other environmental factors, and the impact propagates upstream and downstream. Even a slight error in one link can accumulate across several layers, and no one can be sure what impact it will ultimately have.

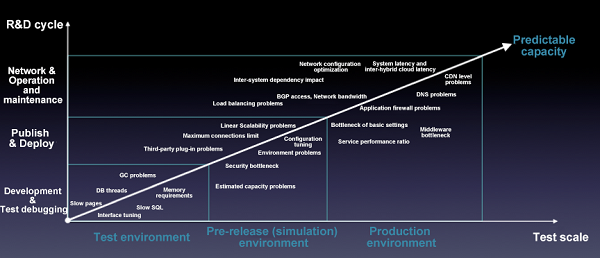

Therefore, establishing a mechanism in the production environment to verify that every production link can withstand all kinds of traffic has become a top priority for ensuring service availability and stability. The best verification method is to make the event happen in advance: let realistic traffic access the production environment, comprehensively simulate real service scenarios, and confirm that the performance, capacity and stability of every link are safe. This is the background of full link stress testing, an all-round upgrade of performance testing that makes system behavior "predictable".

Implementation path of service stress testing

If full link stress testing is done well, then when real traffic arrives the system only has to go through scenarios that have already been verified repeatedly, like retaking a test whose questions it has already seen, and ideally no unexpected problems will occur.

Choosing the right path is essential to implementing a complete service stress test.

To accurately measure the capacity a service can support, service stress testing requires the same online environment, user scale, service scenarios, service level and traffic sources as production, so that the system can go through a "simulation test" in advance and the actual processing capacity of the service model can be measured accurately. Its core elements are the stress testing environment, stress testing basic data, stress testing traffic (model and data), traffic initiation and control, and problem locating.

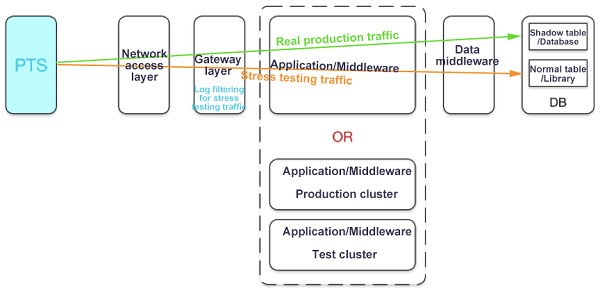

Stress testing environment and basic data

There are basically two ways to handle basic data in the production environment. The first leaves the database unchanged and runs the test directly with test accounts in the base tables (the related data must also be complete). After the stress test, the transaction data generated by the test is cleaned up, either with codified SQL scripts or by a cleanup function built into the system. The second labels the stress testing traffic separately (for example, with a dedicated Header); the label is then recognized and passed along throughout service processing, including asynchronous messages and middleware, and the data is finally written to a shadow table or shadow database. For more information about this method, see Alibaba's full link stress testing practices. This is the method we chose. In addition, stress testing in the production environment should be carried out during the service's off-peak period to avoid affecting production.
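To make the labelling approach concrete, here is a minimal Python sketch of how a label might be detected at the entry point, propagated to downstream calls, and used to pick a shadow table. The header name `X-Stress-Test`, the `shadow_` table prefix and the function names are all hypothetical; the article does not say what Luogic Show's header is called or how its shadow tables are named, and a real system would also need the same logic in its message queue and middleware clients.

```python
from contextvars import ContextVar

# Hypothetical label: the article only says the traffic carries
# "a separately defined Header", not what that header is called.
STRESS_HEADER = "X-Stress-Test"
SHADOW_PREFIX = "shadow_"  # hypothetical naming convention for shadow tables

# Holds the label for the request currently being processed.
_is_stress: ContextVar[bool] = ContextVar("is_stress", default=False)


def mark_request(headers: dict[str, str]) -> None:
    """At the entry point, record whether the incoming request is stress traffic."""
    # HTTP header names are case-insensitive, so compare in lower case.
    normalized = {k.lower(): v for k, v in headers.items()}
    _is_stress.set(normalized.get(STRESS_HEADER.lower()) == "1")


def outgoing_headers(base: dict[str, str]) -> dict[str, str]:
    """Copy the label onto downstream HTTP calls and async message headers."""
    headers = dict(base)
    if _is_stress.get():
        headers[STRESS_HEADER] = "1"
    return headers


def resolve_table(table: str) -> str:
    """In the data layer, send labelled traffic to the shadow table."""
    return SHADOW_PREFIX + table if _is_stress.get() else table
```

For example, after `mark_request({"X-Stress-Test": "1"})`, `resolve_table("orders")` returns `"shadow_orders"`, while unlabelled requests keep writing to `orders`. A `ContextVar` is used because asyncio tasks and threads each get their own context, so one request's label does not leak into another's.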

Luogic Show currently has multiple traffic entry points, such as the Dedao APP, Dedao for Junior, and the Dedao Store in the "Dedao" WeChat Official Account.

Every year, Luogic Show holds a New Year's speech. In the first year, more than 2 million people watched it online on Youku; in the second year, it was broadcast live on Shenzhen Satellite TV in cooperation with video websites such as Youku. When the QR code was shown during the live broadcast, a large number of user requests arrived at the same moment. If the traffic during such a period is not estimated accurately and the performance bottlenecks are not evaluated, a major service interruption may occur during the broadcast.

Carrying out full link stress testing on the whole system, so as to effectively ensure the service stability of each traffic entry point, is the technical team's top priority. We therefore planned to introduce full link stress testing in 2017. During that period, we also rebuilt the system into a service-oriented architecture; that rebuild has been covered in earlier articles. This time, we mainly discuss the core of ensuring service stability: full link stress testing. The general build process is as follows:

After more than 3 months of focused implementation, we connected 174 links to full link stress testing and created 44 scenarios. The stress tests consumed 120 million VUM and uncovered more than 200 problems of various kinds.

If 2017 took Luogic Show's full link stress testing facilities from 0 to 1, 2018 took them from 1 to N.

Since 2018, full link stress testing has entered a relatively mature stage. Building on PTS and our earlier experience, our test team quickly applied it to routine activities, New Year's activities and the newly launched service "Dedao for Junior". Full link stress testing has now become one of the core infrastructures for ensuring service stability.

Building full link stress testing is not so much a single project as an ongoing engineering effort, and it could not be accomplished with our own technical expertise and staffing alone. We would like to thank Alibaba Cloud PTS and the other technical teams for their support and help in implementing full link stress testing at Luogic Show. Below, we share the experience accumulated during implementation in the form of work notes.

When daily traffic is already large, the traffic model can be determined quickly from logs and monitoring. Often, however, the daily peak is not that high, while the traffic expected for an upcoming activity is very large. One method is to calculate the peak value of each interface within a time period based on the service peak, and then combine these values into a stress testing traffic model.
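One possible reading of that method is sketched below in Python: bucket access-log records by time, find the bucket where total traffic peaks, take each interface's share of requests in that bucket, and scale the shares to the total QPS expected for the activity. The log format `(timestamp, interface)` and the function name `traffic_model` are assumptions for illustration, not the team's actual tooling.

```python
from collections import defaultdict


def traffic_model(log_records, bucket_seconds, target_total_qps):
    """Build a per-interface QPS target from access-log records.

    log_records: iterable of (unix_timestamp_seconds, interface_name).
    Returns {interface: qps}, where the values sum to target_total_qps.
    """
    # Count requests per interface inside each fixed-size time bucket.
    buckets = defaultdict(lambda: defaultdict(int))
    for ts, api in log_records:
        buckets[int(ts // bucket_seconds)][api] += 1
    if not buckets:
        return {}
    # The bucket with the most requests overall is the observed service peak.
    peak = max(buckets, key=lambda b: sum(buckets[b].values()))
    counts = buckets[peak]
    total = sum(counts.values())
    # Keep each interface's share of the peak, scaled to the expected activity load.
    return {api: target_total_qps * n / total for api, n in counts.items()}
```

If an activity is expected to shift the interface mix (for instance, a QR code spike that hits login far harder than usual), the shares taken from the daily peak would need manual adjustment before use.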

Dirty data can be generated whether the production environment is modified to recognize stress testing traffic or test accounts are used in production. The best way is:

Because this is full link stress testing, whose purpose is to identify problems comprehensively, monitoring coverage must be very high: from network access to the database, from network layer 4 to layer 7, and up to the services themselves. As stress testing goes deeper, you may find that your monitoring is not always sufficient.

For example, we use stress testing to compare candidate technologies; in that case, the traffic model and traffic level must be kept the same for each candidate. Full link stress testing can also verify the speed and stability of automatic scaling or planned manual scaling. In the later stages, important work such as checking traffic limiting capacity, verifying the real effects of various faults, and rehearsing contingency plans should also be carried out.

If many nodes sit on the network path, separate stress tests can first be run on each segment to determine its capacity and eliminate problems one by one. Then, once the whole link is enabled, a combined stress test can check whether the overall configuration and coordination are correct.

For example, the network path may use CDN dynamic acceleration, WAF, Anti-DDoS Pro, SLB and other products. If the overall stress test results are unsatisfactory, we recommend bypassing some of these links during stress testing to narrow down the problem; for example, session persistence between WAF and SLB may cause load imbalance. The capacity and specifications of these products also need to be verified by stress testing: the CPS, QPS, bandwidth and connection count of SLB, for instance, may all become bottlenecks. By integrating the relevant SLB monitoring into PTS test scenarios, you can view all the data in one place and, based on the stress testing results, choose the lowest-cost usage mode.
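Before a test, a rough back-of-envelope check can show which of those four SLB dimensions a planned load is likely to hit first. The Python sketch below is illustrative only: `LbSpec`, the 0.8 alert threshold and the limits used in the usage line are placeholders, not real SLB specifications, and concurrent connections are estimated with Little's law (new connections per second multiplied by average connection lifetime). Bandwidth assumes 1 KB = 1000 bytes.

```python
from dataclasses import dataclass


@dataclass
class LbSpec:
    """Limits of one load-balancer instance (placeholder values in the example)."""
    max_cps: float             # new connections per second
    max_qps: float             # requests per second
    max_bandwidth_mbps: float  # outbound bandwidth
    max_connections: float     # concurrent connections


def utilization(spec, qps, cps, avg_response_kb, avg_conn_seconds):
    """Return the fraction of each limit that the planned load would consume."""
    bandwidth_mbps = qps * avg_response_kb * 8 / 1000  # KB/s -> Mbit/s, decimal units
    concurrent = cps * avg_conn_seconds                # Little's law: L = lambda * W
    return {
        "cps": cps / spec.max_cps,
        "qps": qps / spec.max_qps,
        "bandwidth": bandwidth_mbps / spec.max_bandwidth_mbps,
        "connections": concurrent / spec.max_connections,
    }


def bottlenecks(util, threshold=0.8):
    """Names of the dimensions at or above the alert threshold."""
    return sorted(name for name, ratio in util.items() if ratio >= threshold)
```

For example, with hypothetical limits of 10,000 CPS, 50,000 QPS, 1,000 Mbps and 100,000 connections, a planned 45,000 QPS over 2,000 new connections per second, 2 KB responses and 10-second connections flags only `qps`. The estimate is only a starting point; the stress test itself is what confirms the real limit.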

Beyond the network access layer, load distribution inside Nginx may also become unbalanced, especially under high concurrency. This again demonstrates the value of full link, highly realistic stress testing.

For stress testing of important activities in particular, we recommend simulating the actual traffic pulses the service will receive.

Alibaba Cloud PTS supports this. It lets you observe system performance under a peak pulse while load increases progressively, for example to verify traffic limiting and to check whether the peak pulse is mistakenly identified as a DDoS attack.
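The shape of such a test can be described as a list of per-second targets. The Python sketch below builds a stepped ramp followed by a short pulse, plus a helper that estimates how many requests a limiter capped at a fixed rate should reject. It is a generic illustration, not the PTS API or configuration format; the function names are hypothetical, and the rejection estimate ignores any burst allowance a real token-bucket limiter would grant.

```python
def load_profile(base_rps, step_rps, step_seconds, steps, pulse_rps, pulse_seconds):
    """Target requests per second, one value per second of the test.

    Load climbs in `steps` plateaus (base_rps, base_rps + step_rps, ...),
    each held for `step_seconds`; then a pulse of `pulse_rps` lasts
    `pulse_seconds`, after which load returns to the last plateau.
    """
    if steps < 1:
        raise ValueError("steps must be >= 1")
    profile = []
    for i in range(steps):
        profile += [base_rps + i * step_rps] * step_seconds
    plateau = base_rps + (steps - 1) * step_rps
    profile += [pulse_rps] * pulse_seconds
    profile += [plateau] * step_seconds
    return profile


def expected_rejections(profile, limit_rps):
    """Requests a limiter capped at `limit_rps` should reject over the whole profile."""
    return sum(max(0, rps - limit_rps) for rps in profile)
```

For example, `load_profile(100, 100, 2, 3, 1000, 1)` yields `[100, 100, 200, 200, 300, 300, 1000, 300, 300]`; against a 500 RPS limit, about 500 requests from the pulse second should be rejected. Comparing that figure with the rejections actually observed is one way to check the traffic limiting configuration.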

Stress testing uncovered many unreasonable parameter settings. Our tuning mainly covered kernel network parameters (such as fast reclamation of connections), common Nginx parameters and PHP-FPM parameters. Plenty of material on these topics is available online.

SMS and payment usually depend on third parties. In this case, stress testing requires special handling, such as setting up a separate Mock service and routing the stress testing traffic to it. Because the Mock service simulates a third-party service, it must be as realistic as possible; for example, its simulated latency should be close to that of the real third-party service. The Mock service itself can easily become a bottleneck, so its capacity and the stability of its response latency under high concurrency must be ensured. After all, the capacity, traffic limiting and stability of the real third-party payment and SMS interfaces are generally fairly good.
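The following Python sketch shows the core idea of such a Mock: replay latency samples taken from the real provider rather than answering instantly, and wait without blocking so the Mock can serve high concurrency. The class `ThirdPartyMock`, the `send_sms` method and the sample latencies are hypothetical; a production Mock would sit behind an HTTP server and would also need to simulate the provider's error responses and rate limits.

```python
import asyncio
import random
import time


class ThirdPartyMock:
    """Stand-in for an SMS or payment provider during stress tests.

    latencies_ms should be sampled from the real provider (for example from
    its access logs); the mock replays that distribution instead of
    answering instantly.
    """

    def __init__(self, latencies_ms, seed=None):
        if not latencies_ms:
            raise ValueError("need at least one latency sample")
        self._latencies = list(latencies_ms)
        self._rng = random.Random(seed)

    async def send_sms(self, phone, text):
        # `text` is accepted for interface parity but ignored by the mock.
        delay_ms = self._rng.choice(self._latencies)
        # asyncio.sleep yields to the event loop, so thousands of calls can
        # wait concurrently without the mock itself becoming the bottleneck.
        await asyncio.sleep(delay_ms / 1000)
        return {"phone": phone, "status": "OK", "delay_ms": delay_ms}


async def demo(n=500):
    """Fire n concurrent calls and measure the wall-clock time they take."""
    mock = ThirdPartyMock([20, 35, 50], seed=1)
    start = time.perf_counter()
    results = await asyncio.gather(
        *(mock.send_sms(f"test-{i}", "verification code") for i in range(n))
    )
    return results, time.perf_counter() - start
```

Running `asyncio.run(demo())` should complete 500 concurrent calls in roughly the longest sampled delay rather than the sum of all delays, which is the property that keeps the Mock from distorting the stress test.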

In our experience, the recommended CPU threshold is between 50% and 70%, mainly because the services run in containers. Because this is an Internet service, we also set 1 second as the upper limit for response time. During stress testing, we also try the product by hand at the same time to experience it as a user would. (Detailed metric interpretations and threshold values are given in the original article.)
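These thresholds can be turned into an automatic check on each stress test run. In the Python sketch below, the 50% to 70% CPU band and the 1-second ceiling come from the text; judging CPU by its peak sample and response time by the nearest-rank 99th percentile are assumptions, since the article does not say which statistic it applies the limits to.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(cpu_samples, rt_samples_s, cpu_band=(50.0, 70.0), rt_limit_s=1.0, rt_pct=99):
    """Compare one stress-test run against a CPU band and a response-time ceiling."""
    low, high = cpu_band
    peak_cpu = max(cpu_samples)
    if peak_cpu < low:
        cpu_state = "below"   # headroom left: the load can probably be raised
    elif peak_cpu > high:
        cpu_state = "above"   # past the recommended ceiling for containers
    else:
        cpu_state = "within"
    rt = percentile(rt_samples_s, rt_pct)
    return {"peak_cpu": peak_cpu, "cpu": cpu_state, "rt_s": rt, "rt_ok": rt <= rt_limit_s}
```

For example, a run whose CPU peaks at 65% and whose 99th-percentile response time is 0.1 s falls inside both limits; swapping in the mean or a higher percentile is a one-argument change if your team judges response time differently.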

Full link stress testing has now become one of Luogic Show's core technical facilities and has greatly improved service stability. Alibaba Cloud PTS has further automated our full link stress testing, accelerating the build process and reducing the manpower required. We care a great deal about the efficiency and focus of the technical team. Not only for the full link stress testing system but also for many other service-level systems, we have relied on the technical strength of our partners to support rapid business growth within a controllable time frame.

When the business moves fast, the path and manner of technology construction are determined by the team's fundamental approach.
