By Sheng Dong, Alibaba Cloud After-Sales Technical Expert

Alibaba Cloud Customer Services deal with various online issues every day. Common issues include network connection failures, server breakdowns, poor performance, and slow responses. However, the issues that seem insignificant yet rack our brains are deletion failures, such as failing to delete a file, end a process, or uninstall a driver. The logic behind such issues is more complex than one would expect.

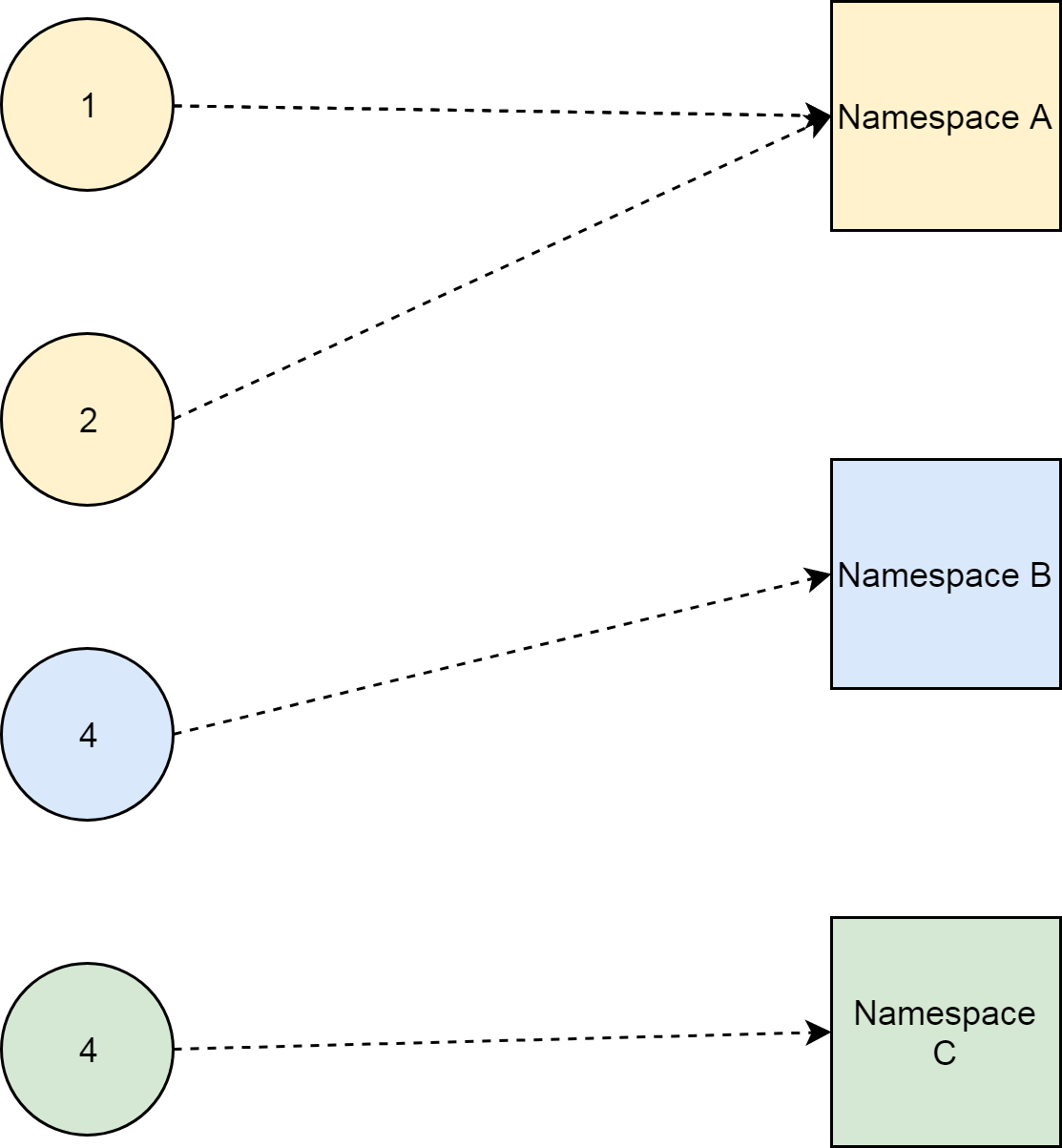

In this article, let's discuss an issue related to namespaces in Kubernetes clusters. A namespace is a storage mechanism for Kubernetes cluster resources. Storing related resources in the same namespace prevents them from unnecessarily affecting unrelated resources.

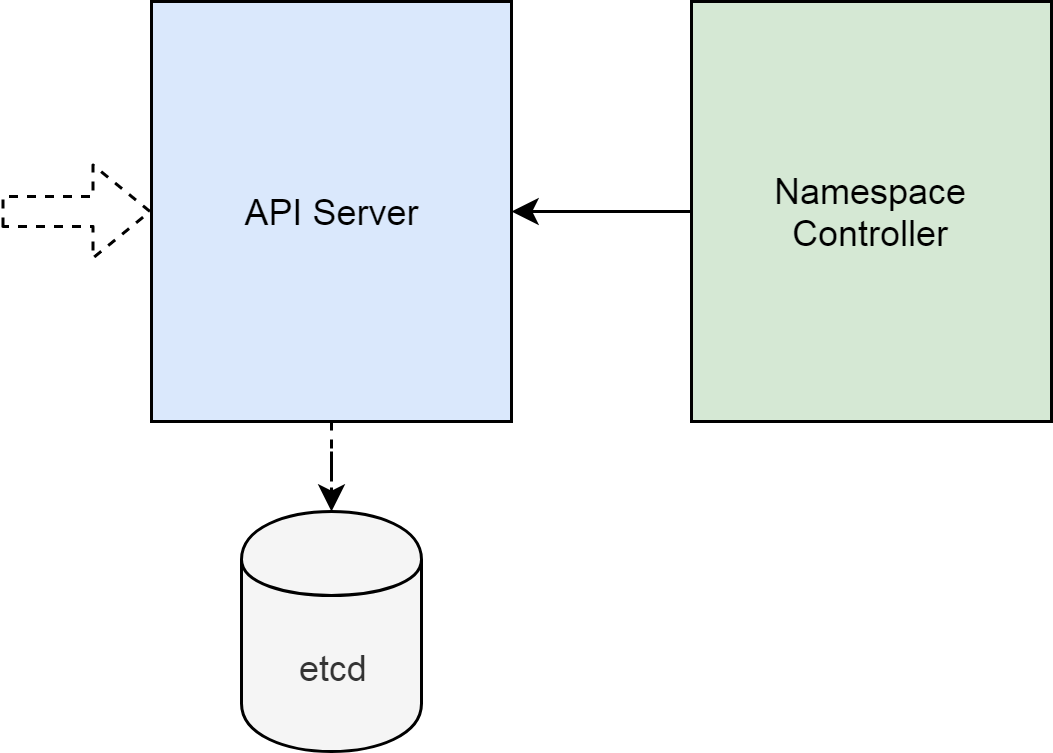

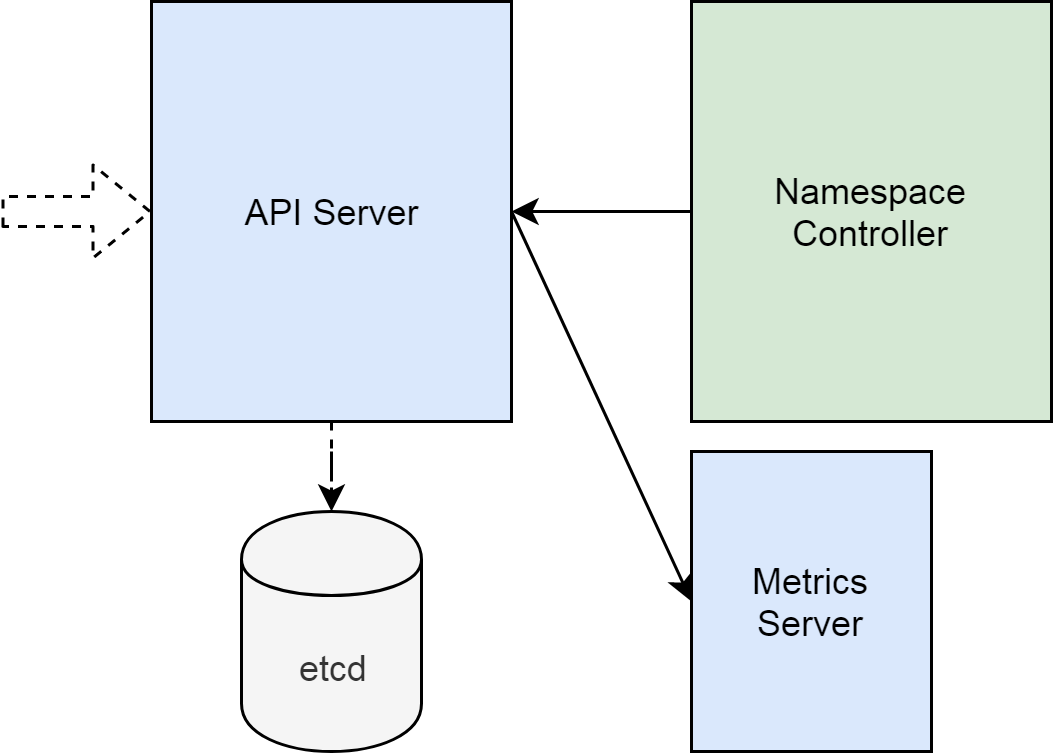

A namespace is also a resource. Namespaces are created through the cluster API server, and namespaces that are no longer in use must be deleted. The namespace controller watches the API server for changes to namespaces and executes predefined actions according to those changes.

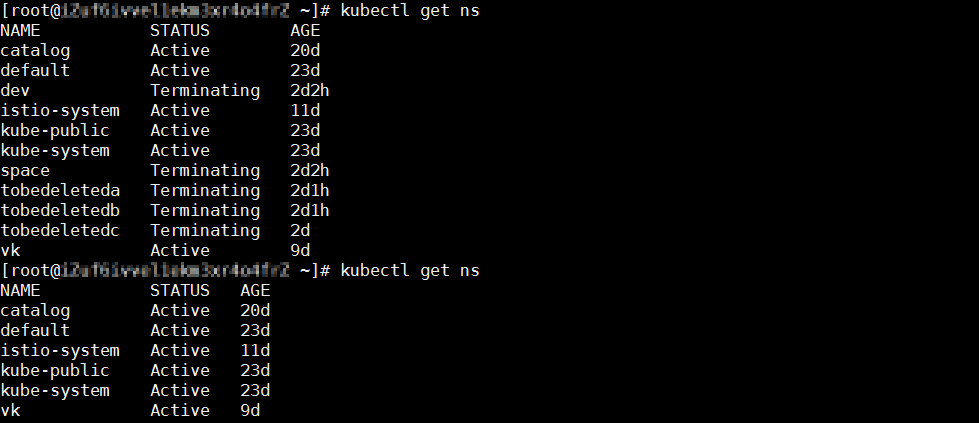

Sometimes, you may encounter the issue shown in the following figure: the namespace stays in the Terminating state and cannot be deleted.

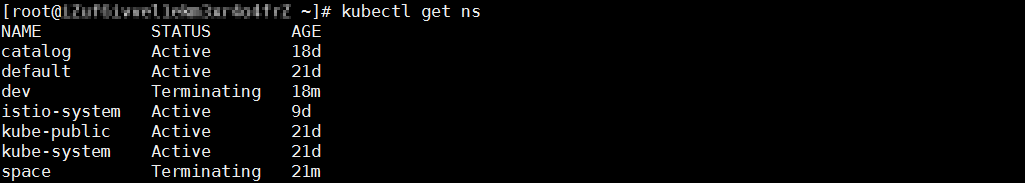

Because namespaces are deleted through the cluster API server, we must analyze the behavior of the API server. Like most cluster components, the API server outputs logs at different levels. To understand its behavior, we raise its log level to the highest (most verbose) level. Then we create and delete the tobedeletedb namespace to reproduce the issue.

However, the API server outputs only a few logs related to this issue, and they fall into two parts. The first is the namespace deletion record, which shows that the client tool is kubectl and that the operation was initiated from the source IP address 192.168.0.41, as expected. The second shows that kube-controller-manager repeatedly obtains the namespace information.

kube-controller-manager implements most of the controllers in the cluster. Because it is kube-controller-manager that repeatedly obtains the namespace information, we can infer that the namespace controller is the one making these requests.

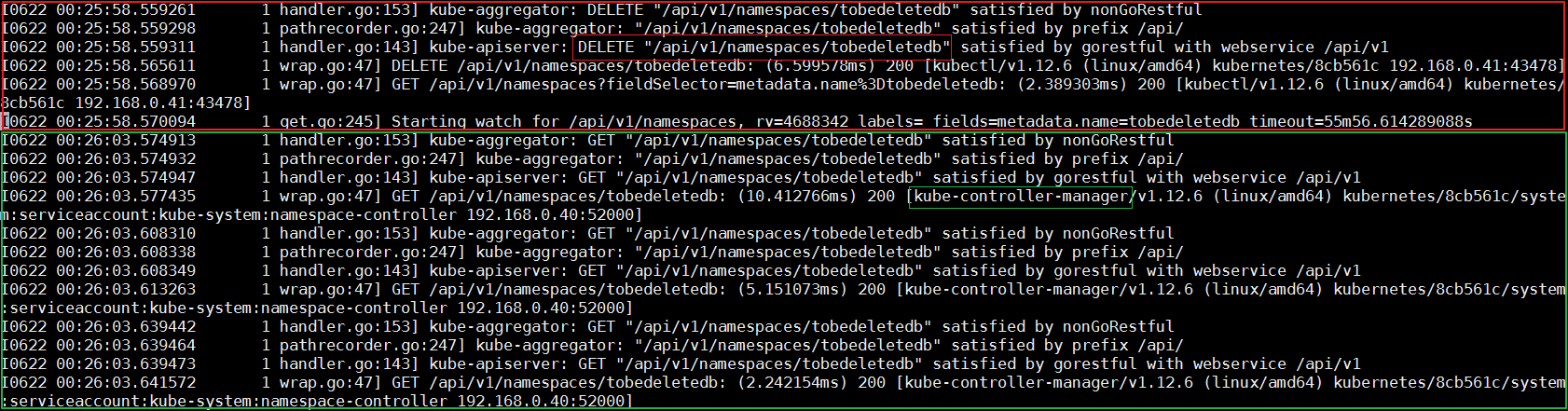

To study this component's behavior, we also raise the log level of kube-controller-manager to the highest level. The kube-controller-manager logs show that the namespace controller fails to delete resources from the tobedeletedb namespace and keeps retrying.

A namespace can be thought of as a storage box, but not one that physically holds small objects. What a namespace actually stores is mappings.

This distinction determines how resources are deleted from a namespace. With physical storage, deleting the box also deletes everything in it. With logical storage, the controller must list all resources and delete the ones that are mapped to the namespace being deleted.
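The difference can be sketched with a toy Python model. This is not how Kubernetes stores objects internally; it only assumes, for illustration, that each resource records the namespace it is mapped to. The `Resource` class and `delete_namespace` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    kind: str
    namespace: str  # the mapping to a namespace, not a physical location
    name: str


def delete_namespace(store: list, namespace: str) -> list:
    """Return the store without the resources mapped to `namespace`.

    Because a namespace only holds mappings, every resource in the store
    has to be examined; nothing is removed just by dropping the "box".
    """
    return [r for r in store if r.namespace != namespace]


store = [
    Resource("Pod", "tobedeletedb", "web-0"),
    Resource("Service", "tobedeletedb", "web"),
    Resource("Pod", "default", "busybox"),
]
remaining = delete_namespace(store, "tobedeletedb")
print([r.name for r in remaining])  # ['busybox']
```

The full scan is the point of the model: if some resources cannot be listed, the controller cannot know whether anything mapped to the namespace is left.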

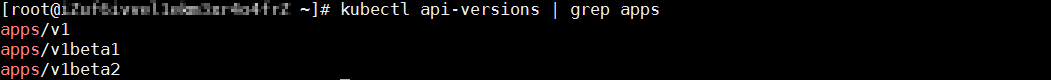

Before listing all resources in a cluster, we need to understand how the cluster APIs are organized. Kubernetes APIs are organized by group and version rather than as a single whole, so APIs in different groups evolve independently without affecting each other. A common group is apps, which includes the versions v1, v1beta1, and v1beta2. Run the kubectl api-versions command to view the complete list of groups and versions.
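The naming convention can be illustrated with a short Python sketch. In an apiVersion string such as `apps/v1`, the part before the slash is the group and the part after it is the version; the core group has no name, so a plain `v1` belongs to the empty group. The function names and the sample input (shaped like `kubectl api-versions` output) are assumptions for illustration.

```python
from collections import defaultdict


def parse_group_version(api_version: str) -> tuple:
    """Split an apiVersion string into (group, version)."""
    if "/" not in api_version:
        return "", api_version  # core group, e.g. "v1"
    group, version = api_version.split("/", 1)
    if "/" in version:
        raise ValueError(f"unexpected apiVersion: {api_version!r}")
    return group, version


def versions_by_group(api_versions: list) -> dict:
    """Group a flat list of apiVersion strings by API group."""
    groups = defaultdict(list)
    for gv in api_versions:
        group, version = parse_group_version(gv)
        groups[group].append(version)
    return dict(groups)


sample = ["apps/v1", "apps/v1beta1", "apps/v1beta2", "metrics.k8s.io/v1beta1", "v1"]
print(versions_by_group(sample))
# {'apps': ['v1', 'v1beta1', 'v1beta2'], 'metrics.k8s.io': ['v1beta1'], '': ['v1']}
```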

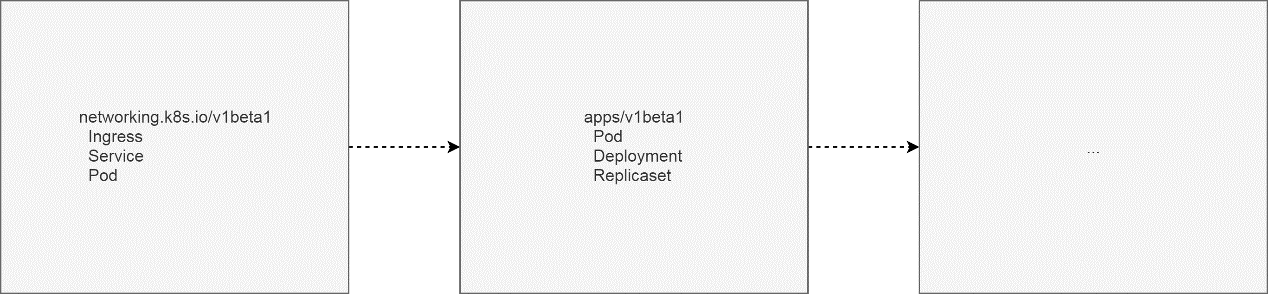

Each resource belongs to an API group and version. The following Ingress resource, which specifies the group networking.k8s.io and version v1beta1, is an example.

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: test-ingress
spec:
  rules:
  - http:
      paths:
      - path: /testpath
        backend:
          serviceName: test
          servicePort: 80
```

The following figure summarizes the API groups and versions.

In fact, a cluster has many API groups and versions, and each group and version supports specific resource types. When using YAML to orchestrate resources, we specify the resource type together with its API group and version. To list resources, however, we must first obtain the list of API groups and versions.
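The following Python sketch shows why the group and version list has to come first: the URL for listing a namespaced resource type is built from its group and version. The path layout (`/api/v1/...` for the core group, `/apis/<group>/<version>/...` for named groups) follows Kubernetes REST conventions; the `discovery` dictionary is a hypothetical, hand-written stand-in for what the API server's discovery endpoints return.

```python
def namespaced_list_paths(discovery: dict, namespace: str) -> list:
    """Build the list URLs for every namespaced resource type in `namespace`.

    discovery maps a group/version string to (resource, namespaced) pairs.
    """
    paths = []
    for gv, resources in discovery.items():
        # The core group ("v1") is served under /api; named groups under /apis.
        prefix = f"/api/{gv}" if "/" not in gv else f"/apis/{gv}"
        for resource, namespaced in resources:
            if namespaced:  # cluster-scoped types such as nodes are skipped
                paths.append(f"{prefix}/namespaces/{namespace}/{resource}")
    return paths


discovery = {
    "v1": [("pods", True), ("nodes", False)],
    "apps/v1": [("deployments", True)],
    "networking.k8s.io/v1beta1": [("ingresses", True)],
}
for path in namespaced_list_paths(discovery, "tobedeletedb"):
    print(path)
```

If the discovery data for a group and version is missing, none of its resource types appear in the output, so there is no URL with which to list them.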

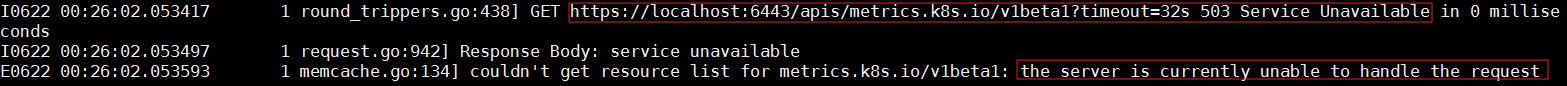

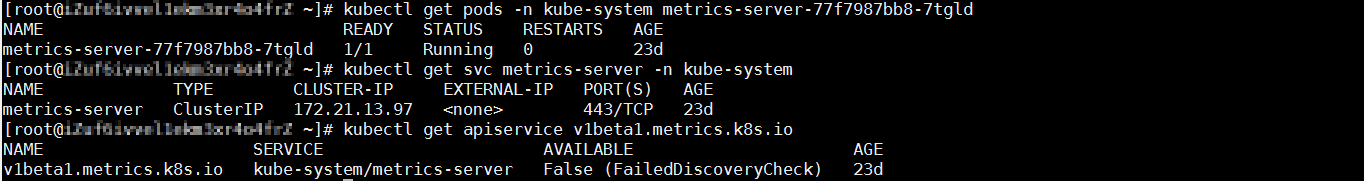

With this background, the kube-controller-manager logs are easy to understand. The namespace controller attempts to obtain the API group and version list, but the query fails for the API group metrics.k8s.io and version v1beta1. The reported reason for the failure is "the server is currently unable to handle the request."
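Why a single failing group and version can block the whole deletion is shown by the simplified Python model below. It assumes, as the logs suggest, that the controller does not finish deleting the namespace until every group and version has been queried successfully; the real namespace controller is more involved, and the function names are hypothetical.

```python
def discover(group_versions: list, unavailable: set) -> tuple:
    """Split group/versions into those that answered and those that failed."""
    ok, failed = [], []
    for gv in group_versions:
        if gv in unavailable:
            # e.g. the API server answers "the server is currently unable
            # to handle the request" for this group/version
            failed.append(gv)
        else:
            ok.append(gv)
    return ok, failed


def try_finalize_namespace(group_versions: list, unavailable: set) -> tuple:
    """Return the namespace phase after one deletion attempt."""
    ok, failed = discover(group_versions, unavailable)
    # Deleting the resources of the reachable group/versions in `ok` is omitted here.
    if failed:
        # Without a complete list the controller cannot prove that the
        # namespace is empty, so it stays Terminating and is retried later.
        return "Terminating", failed
    return "Deleted", []


gvs = ["v1", "apps/v1", "metrics.k8s.io/v1beta1"]
print(try_finalize_namespace(gvs, {"metrics.k8s.io/v1beta1"}))
print(try_finalize_namespace(gvs, set()))
```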

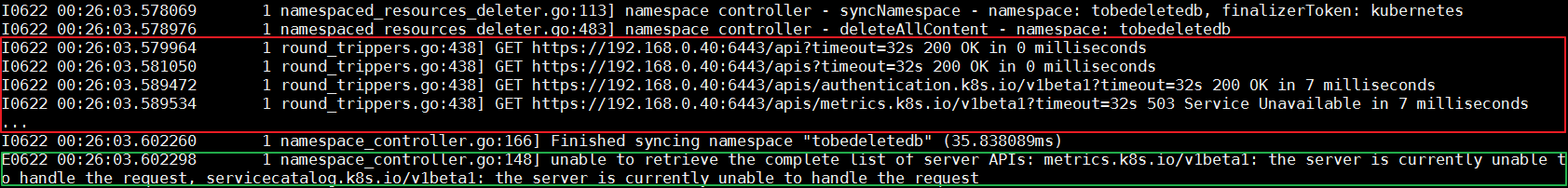

As shown in the preceding section, kube-controller-manager fails to query the API group metrics.k8s.io and version v1beta1. However, this query request is sent to the API server, so we can check the API server logs for records related to metrics.k8s.io/v1beta1. At the same point in time, the API server reports the same error: "the server is currently unable to handle the request."

The API server itself is working properly, yet an error indicating that the server is unavailable is returned when kube-controller-manager queries the API group metrics.k8s.io and version v1beta1. This seems contradictory. To resolve it, we need to understand the plug-in mechanism of the API server.

The cluster API server has an extension mechanism that developers can use to implement API server plug-ins. A plug-in implements new API groups and versions, and the API server acts as a proxy that forwards the corresponding API calls to the plug-in.

Take the metrics server, which implements the API group metrics.k8s.io and version v1beta1, as an example. All calls to this group and version are forwarded to the metrics server. As shown in the following figure, the metrics server implements the group and version with a service and a pod.

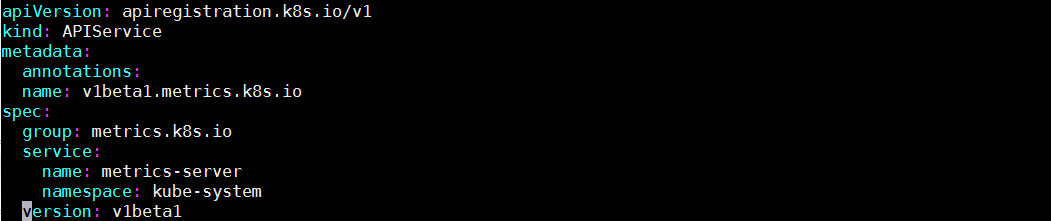

In the preceding figure, APIService is the mechanism that associates a plug-in with the API server. The following figure shows its details, including the API group and version and the name of the service that implements the metrics server. Based on these details, the API server forwards calls for the API group metrics.k8s.io and version v1beta1 to the metrics server.
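The forwarding rule can be sketched in Python. The dictionary mirrors the main fields of a Kubernetes APIService object (`spec.group`, `spec.version`, `spec.service`); the service name `kube-system/metrics-server` is an assumption, and `reachable` is a hypothetical stand-in for whether the network path to the service works. In this simplified model, an APIService without `spec.service` would be served locally by the API server.

```python
API_SERVICES = [
    {
        "metadata": {"name": "v1beta1.metrics.k8s.io"},
        "spec": {
            "group": "metrics.k8s.io",
            "version": "v1beta1",
            "service": {"namespace": "kube-system", "name": "metrics-server"},
        },
    },
]


def route(path: str, api_services: list, reachable: set) -> tuple:
    """Decide how the API server handles a request path (simplified)."""
    parts = path.strip("/").split("/")
    if len(parts) >= 3 and parts[0] == "apis":
        group, version = parts[1], parts[2]
        for api_service in api_services:
            spec = api_service["spec"]
            service = spec.get("service")
            if spec["group"] == group and spec["version"] == version and service:
                target = f'{service["namespace"]}/{service["name"]}'
                if target not in reachable:
                    # The plug-in cannot be reached, so the proxied call fails with 503.
                    return 503, "the server is currently unable to handle the request"
                return 200, f"proxied to {target}"
    return 200, "served by the API server itself"


print(route("/apis/metrics.k8s.io/v1beta1/nodes", API_SERVICES, {"kube-system/metrics-server"}))
print(route("/apis/metrics.k8s.io/v1beta1/nodes", API_SERVICES, set()))
print(route("/apis/apps/v1/deployments", API_SERVICES, set()))
```

The second call reproduces the symptom in the logs: the API server itself is healthy, but requests for the plug-in's group and version fail because the backend cannot be reached.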

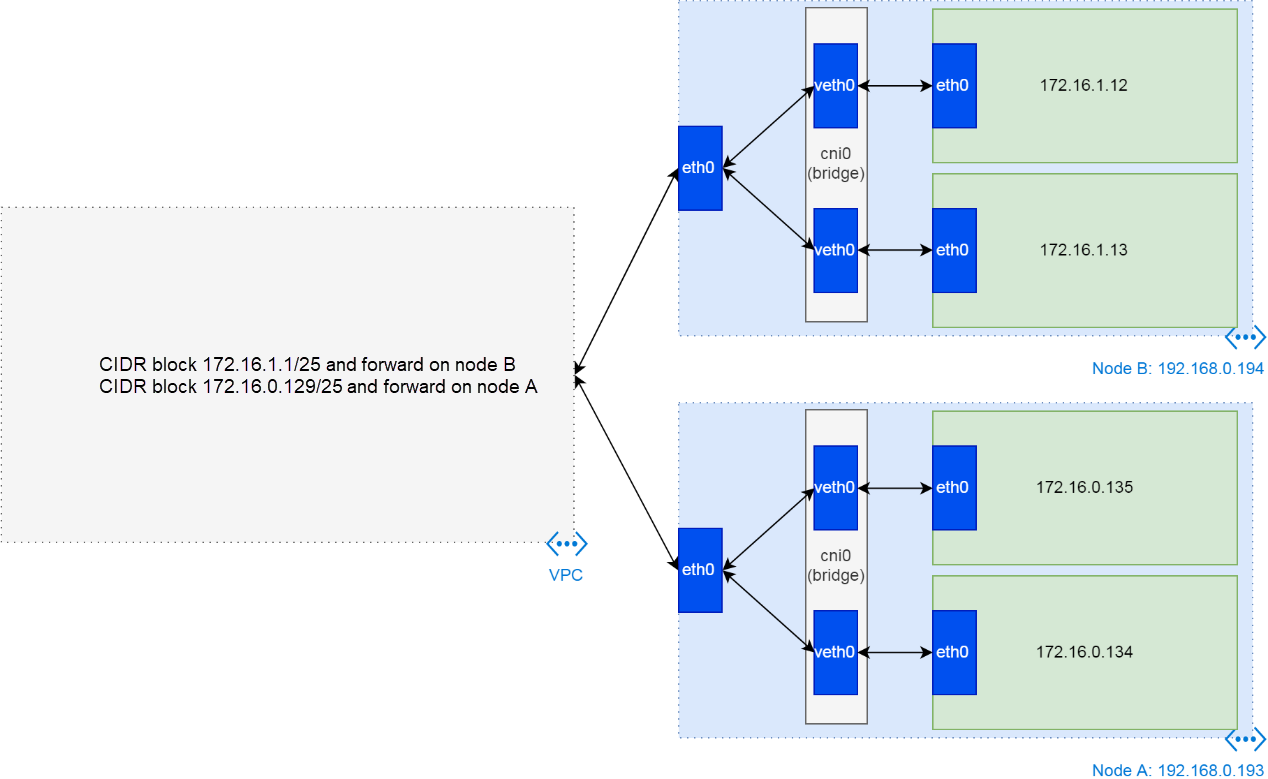

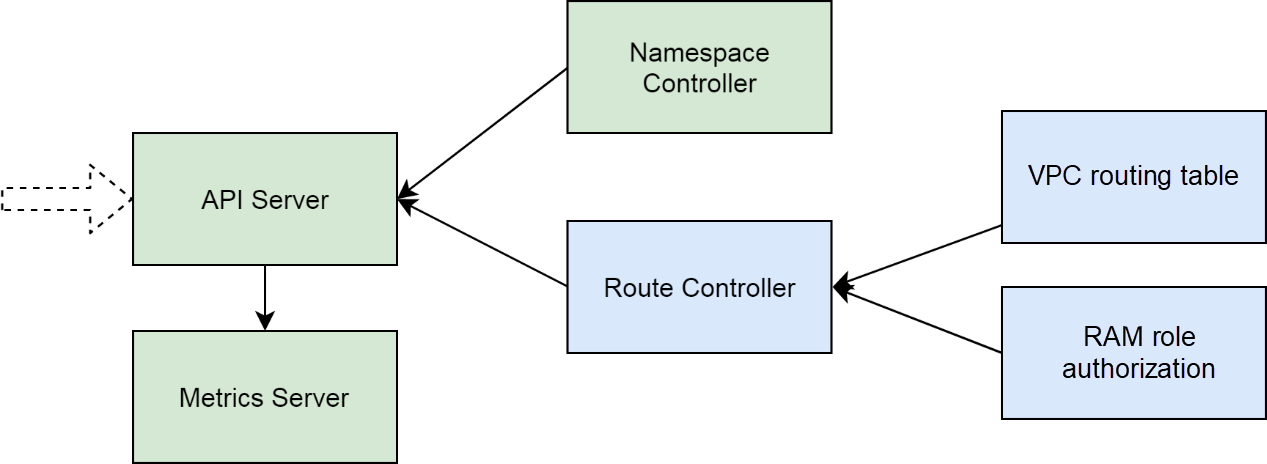

Testing shows that the issue lies in the communication between the API server and the metrics server pod. In Alibaba Cloud's Kubernetes clusters, the API server uses the host network, that is, the Elastic Compute Service (ECS) network, while the metrics server uses the pod network. Communication between the API server and the metrics server therefore depends on forwarding by the Virtual Private Cloud (VPC) routing table.

As shown in the preceding figure, suppose the API server runs on node A with the IP address 192.168.0.193, and the metrics server has the IP address 172.16.1.12. The network connection from the API server to the metrics server must then be forwarded by the second routing rule in the VPC routing table.

When checking the cluster VPC routing table, we find that the routing table entry (RTE) for the node where the metrics server runs is missing. As a result, the API server cannot communicate with the metrics server.
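The routing dependency can be modeled with Python's standard `ipaddress` module. The route table below is hypothetical: the VPC CIDR, the node names, and the per-node pod CIDRs are assumed values chosen to fit the addresses in the example, and lookups use longest-prefix matching.

```python
import ipaddress

# Hypothetical VPC routing table: destination CIDR -> next hop.
ROUTE_TABLE = {
    "192.168.0.0/16": "local",   # assumed ECS (host) network of the VPC
    "172.16.0.0/24": "node-a",   # assumed pod CIDR of node A
    "172.16.1.0/24": "node-b",   # assumed pod CIDR of node B (runs the metrics server)
}


def next_hop(route_table: dict, dst_ip: str):
    """Return the next hop for dst_ip using longest-prefix match, or None."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [
        ipaddress.ip_network(cidr)
        for cidr in route_table
        if dst in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no route: traffic to this address cannot be forwarded
    best = max(matches, key=lambda net: net.prefixlen)
    return route_table[str(best)]


print(next_hop(ROUTE_TABLE, "172.16.1.12"))  # node-b
broken = {cidr: hop for cidr, hop in ROUTE_TABLE.items() if cidr != "172.16.1.0/24"}
print(next_hop(broken, "172.16.1.12"))  # None
```

Removing a single entry is enough to leave the metrics server's address without a route, while every other address in the table still resolves.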

To keep the RTEs of a cluster correct, Alibaba Cloud implements a route controller in the Cloud Controller Manager (CCM). The route controller continuously monitors the node status in the cluster and the status of the VPC routing table. When an RTE is missing, the route controller automatically adds it to the routing table.
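The reconciliation idea can be sketched as follows. This is a simplified Python illustration, not CCM's code: `node_pod_cidrs` stands for the pod CIDRs the controller learns from the cluster's nodes, `route_table` for the VPC table it reads, and `vpc_readable` is a hypothetical flag for whether the controller is permitted to read the VPC at all.

```python
def reconcile_routes(node_pod_cidrs: dict, route_table: dict, vpc_readable: bool = True) -> list:
    """Add a route for every node pod CIDR that is missing from the table.

    Returns the (cidr, node) entries that were added.
    """
    if not vpc_readable:
        # Without permission to read the VPC, nothing can be compared or repaired.
        raise PermissionError("cannot obtain VPC information")
    added = []
    for node, cidr in sorted(node_pod_cidrs.items()):
        if cidr not in route_table:
            route_table[cidr] = node
            added.append((cidr, node))
    return added


nodes = {"node-a": "172.16.0.0/24", "node-b": "172.16.1.0/24"}
table = {"192.168.0.0/16": "local", "172.16.0.0/24": "node-a"}
print(reconcile_routes(nodes, table))  # [('172.16.1.0/24', 'node-b')]
print(reconcile_routes(nodes, table))  # []
```

Running the loop repeatedly is harmless because it only adds what is missing; the one thing it cannot survive is losing read access to the VPC.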

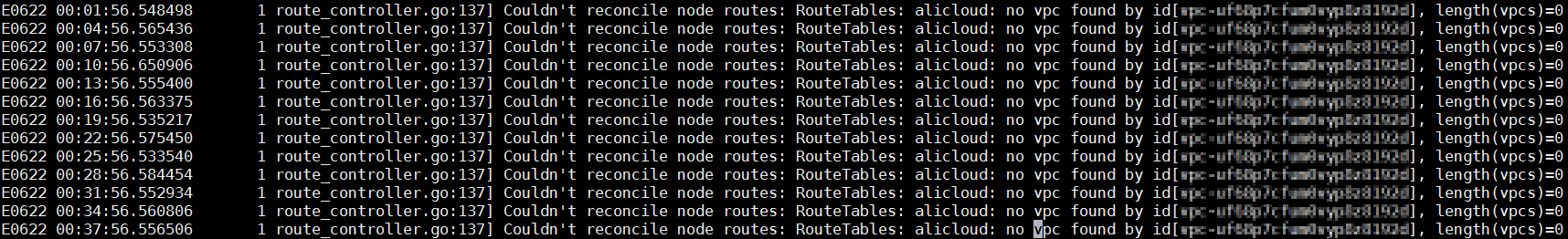

The current situation does not match this expectation, which means the route controller is not working properly. The CCM logs show that when the route controller uses the cluster's VPC ID to look up the VPC instance, it cannot obtain the instance information.

Because the cluster and its ECS instances are running, the VPC must exist, and searching for the VPC ID in the VPC console confirms this. The following describes why CCM fails to obtain the VPC information.

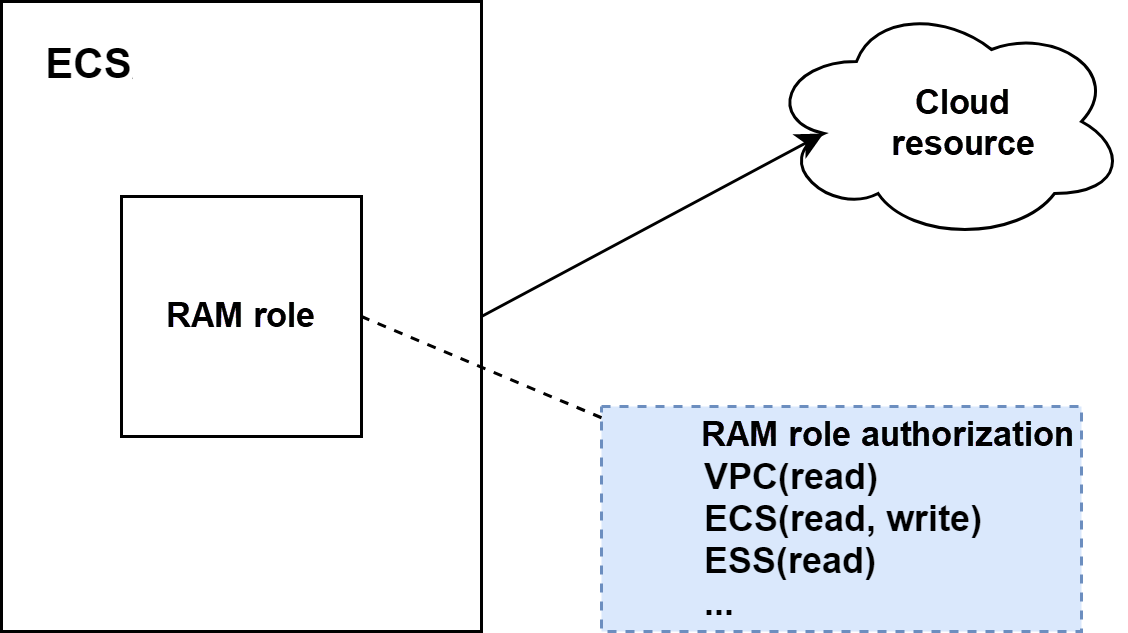

CCM obtains VPC information by calling Alibaba Cloud APIs. Just as an ECS instance must be authorized before it can query information about a VPC in the cloud, these calls need the same authorization. The common practice is to assign a Resource Access Management (RAM) role to the ECS instance and grant that RAM role the corresponding permissions.

When a cluster component fails to obtain cloud resource information with the identity of the node it runs on, there are several possible reasons.

The ECS instance is not bound to the correct RAM role, or the authorization rules of the RAM role are not defined correctly. When checking the node's RAM role and its authorization, we find that the authorization policy for the VPC has been changed.
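A simplified Python model of policy evaluation shows how editing one field can cut off access. The policy layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the RAM policy document format, but the evaluation is a reduced model (an explicit Deny wins, otherwise any matching Allow grants access, otherwise access is denied). The action name `vpc:DescribeVpcs` and the assumption that the changed policy had its Effect set to Deny are illustrative, not taken from the case.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str = "*") -> bool:
    """Reduced policy check: explicit Deny > Allow > implicit deny."""
    allowed = False
    for stmt in policy.get("Statement", []):
        if not any(fnmatchcase(action, p) for p in _as_list(stmt.get("Action", []))):
            continue
        if not any(fnmatchcase(resource, p) for p in _as_list(stmt.get("Resource", []))):
            continue
        if stmt.get("Effect") == "Deny":
            return False  # an explicit Deny overrides everything else
        if stmt.get("Effect") == "Allow":
            allowed = True
    return allowed


denied_policy = {"Version": "1", "Statement": [{"Effect": "Deny", "Action": "vpc:Describe*", "Resource": "*"}]}
allowed_policy = {"Version": "1", "Statement": [{"Effect": "Allow", "Action": "vpc:Describe*", "Resource": "*"}]}
print(is_allowed(denied_policy, "vpc:DescribeVpcs"))   # False
print(is_allowed(allowed_policy, "vpc:DescribeVpcs"))  # True
```

Flipping only `Effect` turns the result around, which is consistent with the fix applied in this case.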

After we change the value of Effect in this policy to Allow, no namespace remains in the Terminating state.

This issue involves six components: the API server, the metrics server, the namespace controller, the route controller, the VPC routing table, and RAM role authorization.

By analyzing the behavior of the API server, metrics server, and namespace controller, we determine that a cluster network fault breaks the connection between the API server and the metrics server. By checking the route controller, VPC routing table, and RAM role authorization, we find the root cause: an entry in the VPC routing table was deleted, and the RAM role authorization policy was changed, which prevented the route controller from restoring that entry.

Namespace deletion failure is a common online issue in Kubernetes clusters. Although it seems insignificant, it can be complex and may indicate that a key cluster function is broken. This article analyzes the issue and explains the troubleshooting method and the underlying principles, which may also help with similar issues.
