By Sheng Dong, Alibaba Cloud After-Sales Technical Expert

The concept of a Kubernetes cluster service is not easy to understand, and troubleshooting service-related issues with a faulty understanding is even more challenging. For example, beginners find it hard to understand why a service IP address cannot be pinged, while even experienced engineers find the service-related iptables configuration difficult to follow.

This article explains the key principles and implementation of the Kubernetes cluster service.

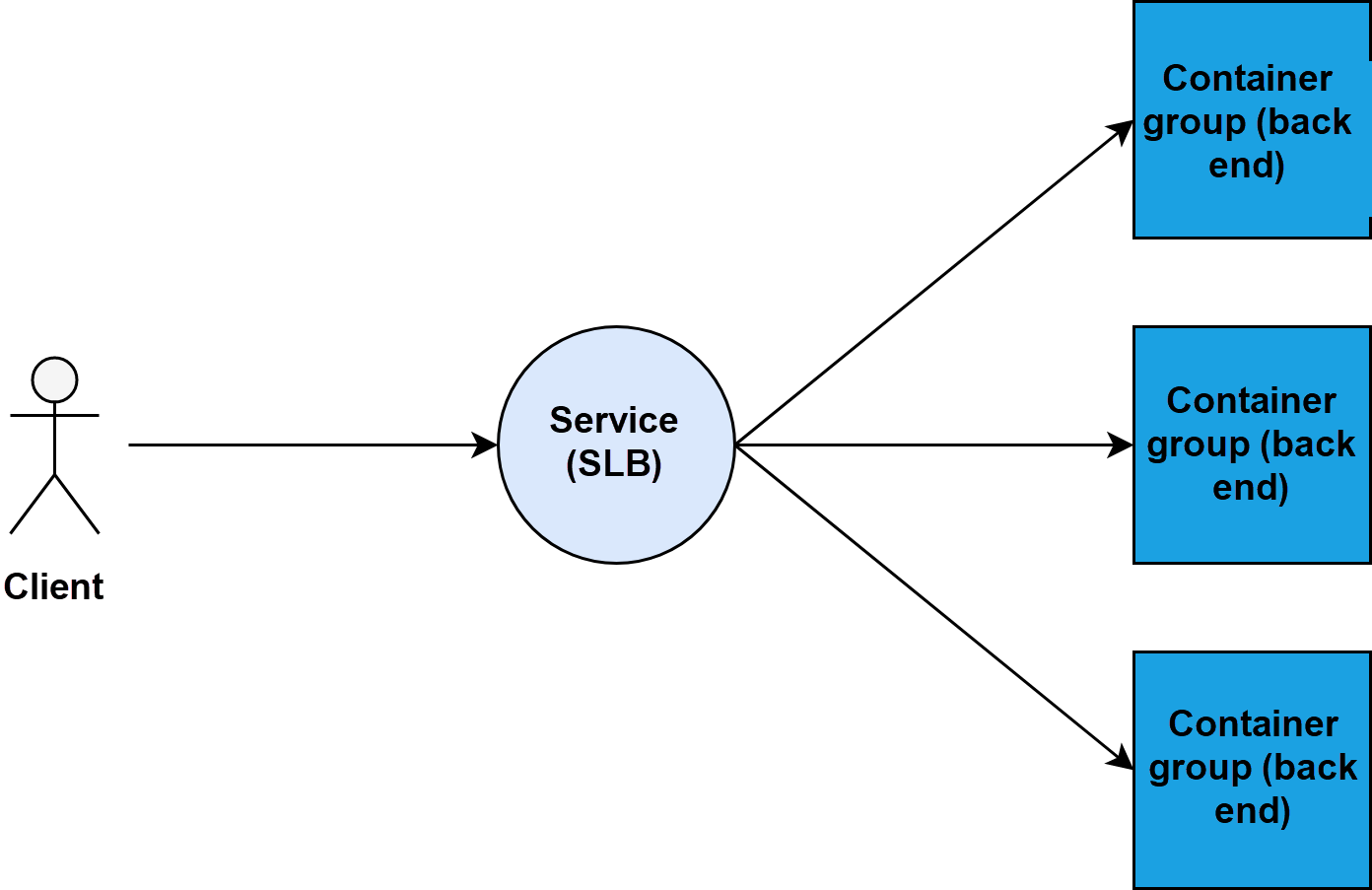

In theory, a Kubernetes cluster service works as a Server Load Balancer (SLB) or a reverse proxy. Like Alibaba Cloud SLB, it has its own IP address and front-end port. Multiple container groups (pods) are attached to the service as back-end servers, each with its own IP address and listening port.
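The following minimal Python sketch models that description: a front-end address plus a list of back-end pods, with one back end chosen per request. The class names, the IP addresses, and the random selection are assumptions made for illustration, not Kubernetes API objects.

```python
import random
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Endpoint:
    """A back-end pod: its own IP address and listening port."""
    ip: str
    port: int


@dataclass
class Service:
    """A front-end IP address and port with pods attached as back ends."""
    cluster_ip: str
    port: int
    backends: list = field(default_factory=list)

    def pick_backend(self, rng=random):
        # Choose one back end per request (random selection is an assumption).
        if not self.backends:
            raise LookupError("service has no back-end pods")
        return rng.choice(self.backends)


# Hypothetical addresses, used only for this example.
svc = Service("172.16.0.10", 80,
              [Endpoint("10.0.1.5", 8080), Endpoint("10.0.2.7", 8080)])
chosen = svc.pick_backend(random.Random(0))
```

A client only ever sees `cluster_ip` and `port`; which pod answers is decided by the load balancer.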

Combining this architecture of SLB plus back-end servers with a Kubernetes cluster leads to the most intuitive implementation method, shown in the following diagram.

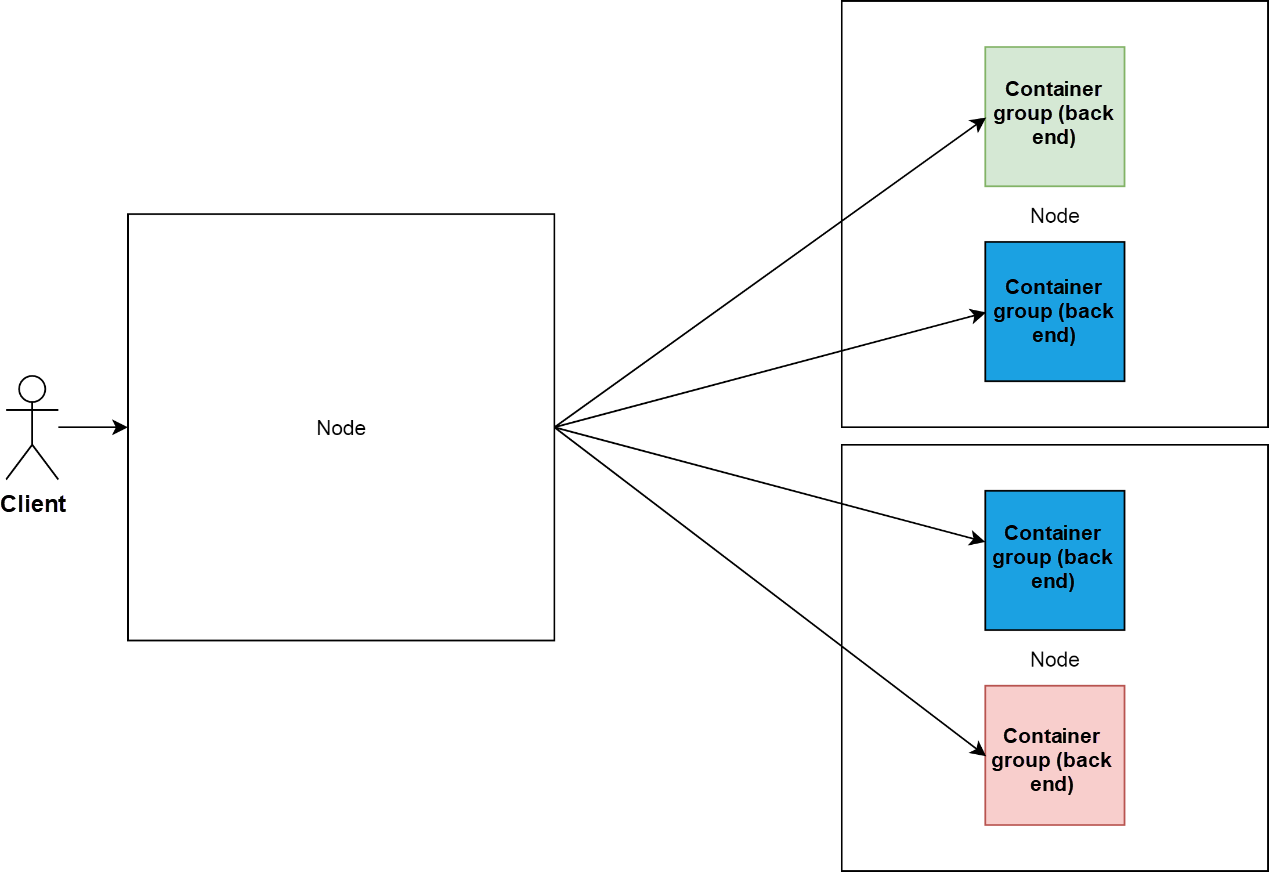

One node in the cluster functions as the SLB, similar to Linux Virtual Server (LVS), and the other nodes host the back-end container groups.

This implementation method has a single point of failure (SPOF). Kubernetes is the product of Google's many years of automated O&M practice, and such an implementation clearly deviates from its philosophy of intelligent O&M.

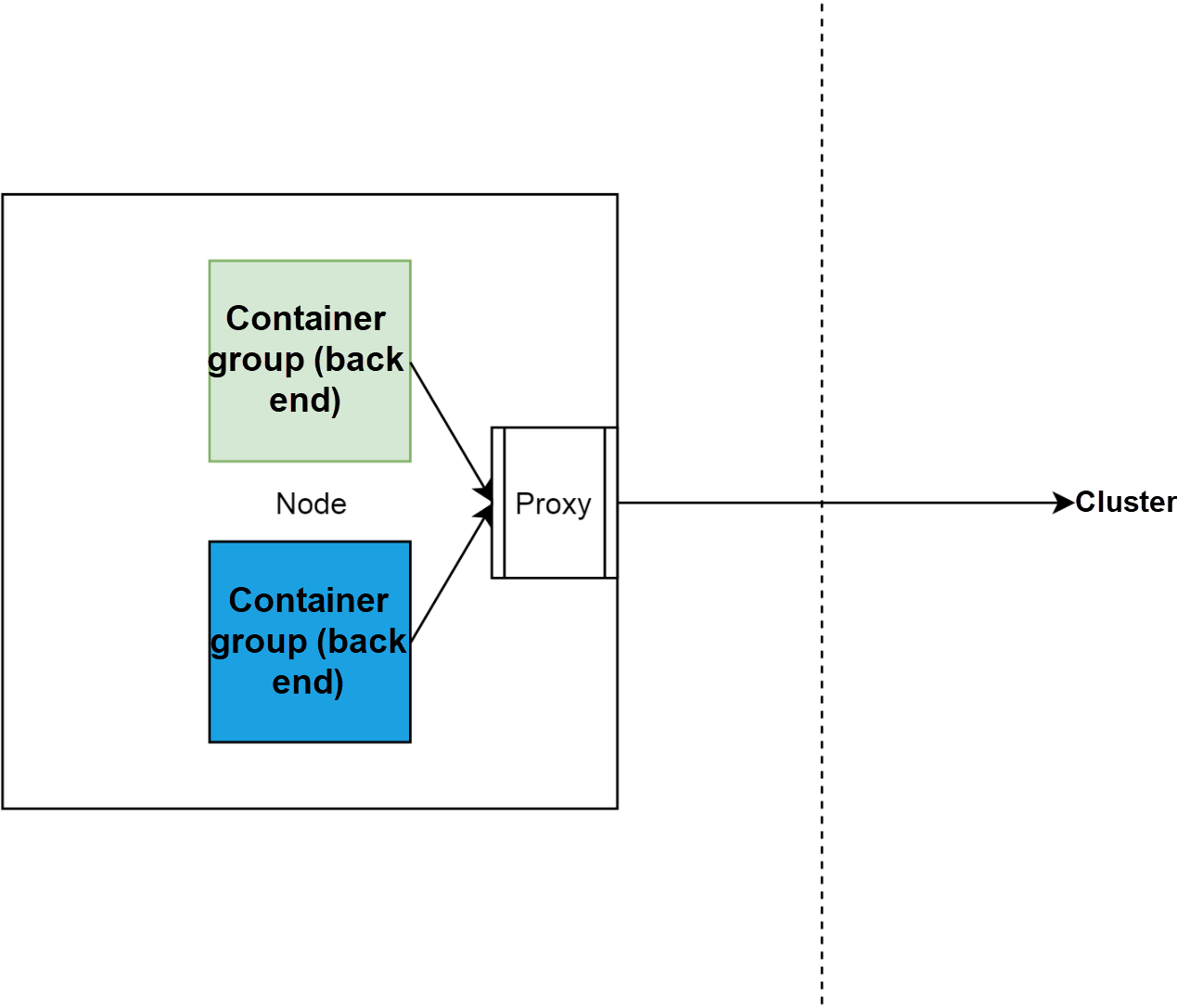

The Sidecar mode is a core concept in the microservices field: a companion component is built in alongside each unit it serves. Anyone familiar with the service mesh is familiar with the Sidecar mode. However, few people notice that the original Kubernetes cluster service is itself implemented based on the Sidecar mode.

In a Kubernetes cluster, a Sidecar reverse proxy is deployed on each node to implement services. The reverse proxy on a node converts access to a cluster service into access to the back-end container groups of that service.
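As a rough illustration of why a per-node proxy avoids the single point of failure, the sketch below gives every node its own copy of the service table. `NodeProxy`, the node names, and the addresses are hypothetical.

```python
class NodeProxy:
    """A reverse proxy that lives on one node and serves only that node."""

    def __init__(self, node_name, service_table):
        self.node_name = node_name
        # Each node keeps its own copy of the service-to-pods mapping.
        self.service_table = dict(service_table)

    def resolve(self, service_addr):
        """Convert a service address into the address of one back-end pod."""
        backends = self.service_table.get(service_addr)
        if not backends:
            return None
        return backends[0]  # selection policy omitted in this sketch


# Hypothetical service table and node names.
table = {("172.16.0.10", 80): [("10.0.1.5", 8080), ("10.0.2.7", 8080)]}
proxies = {name: NodeProxy(name, table)
           for name in ("node-a", "node-b", "node-c")}

# Losing one node removes only that node's proxy; the others keep working.
del proxies["node-a"]
results = [p.resolve(("172.16.0.10", 80)) for p in proxies.values()]
```

Compare this with the LVS-style design, where losing the one load-balancing node would cut off every client.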

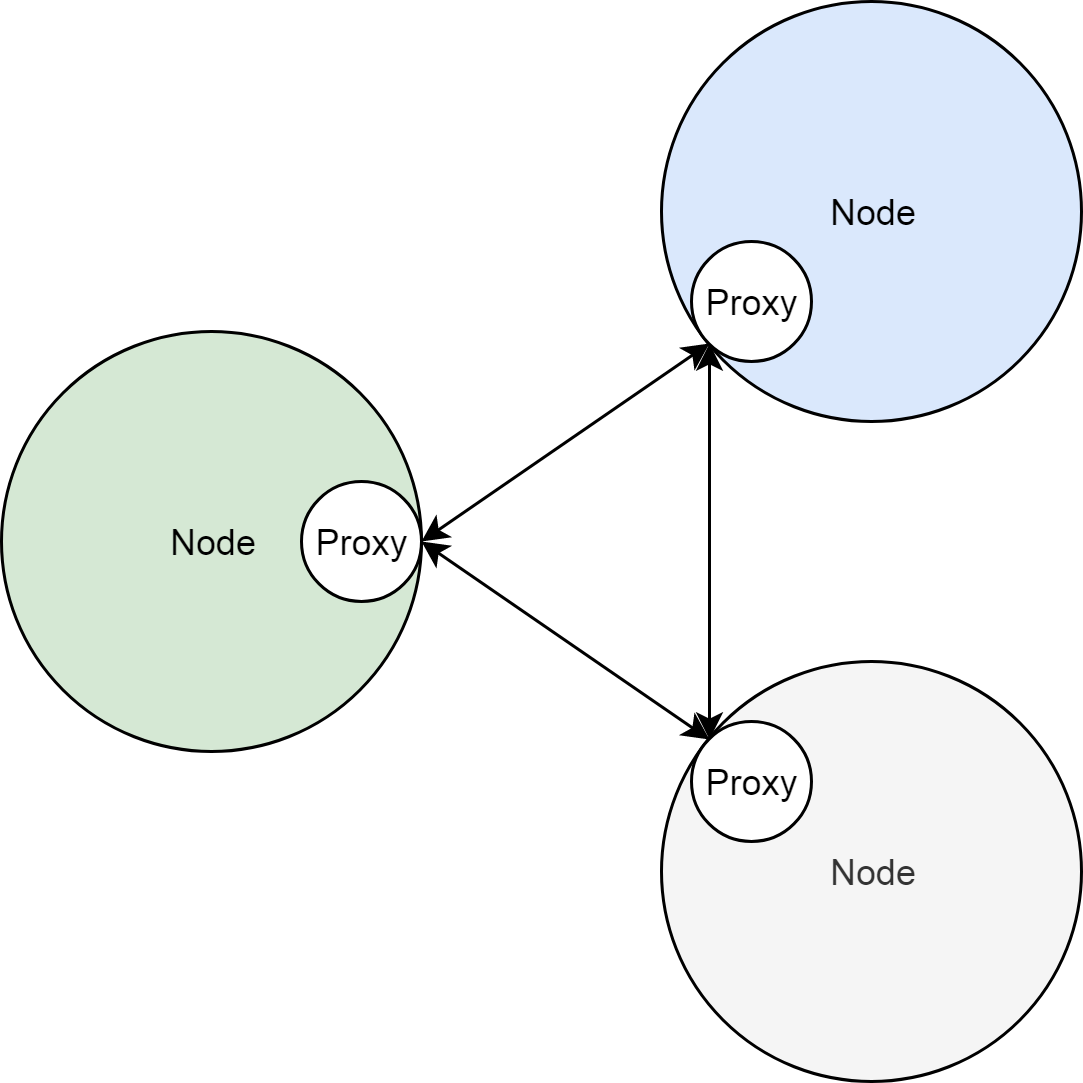

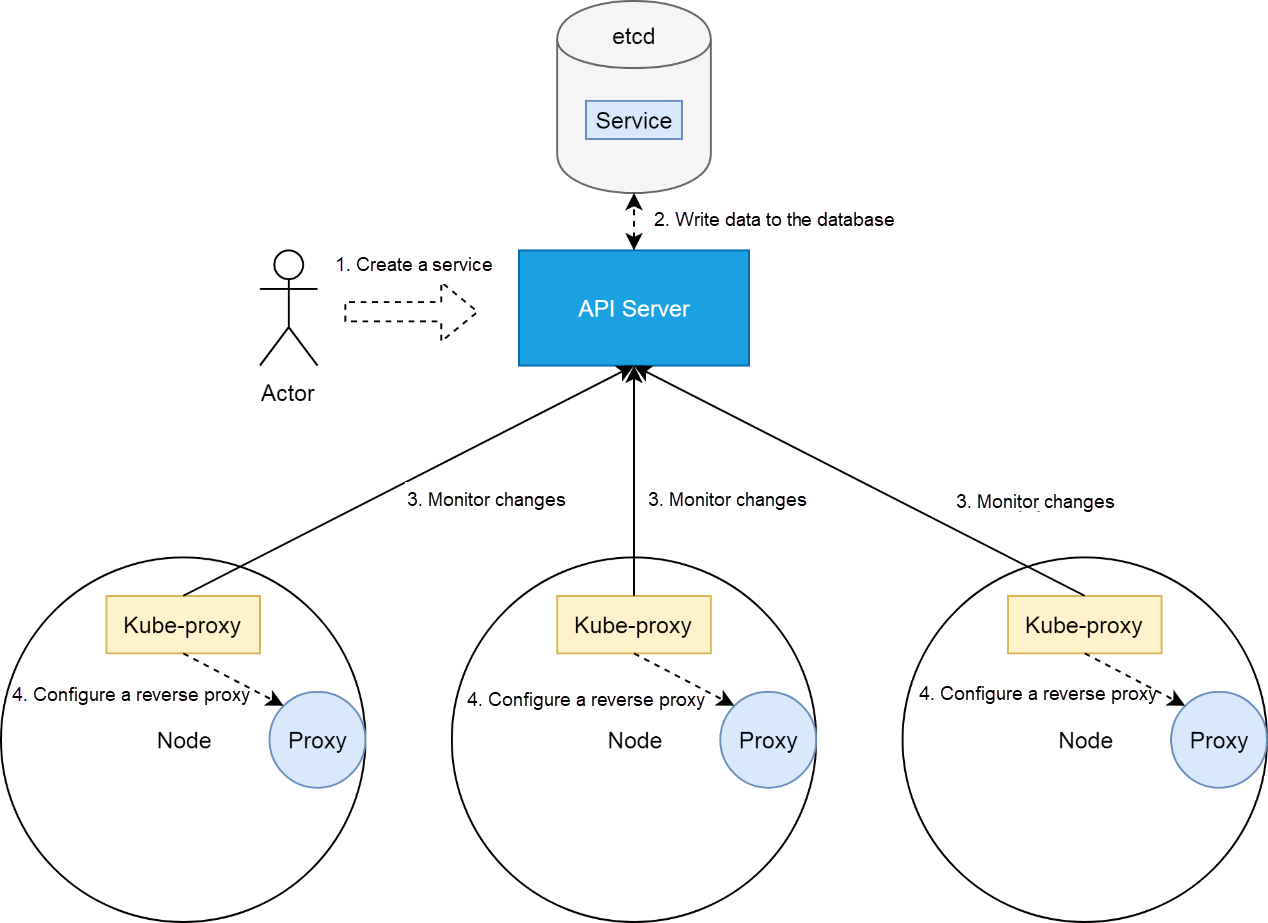

The following figure shows the relationships between nodes and the Sidecar proxies.

The preceding two sections explain that the Kubernetes cluster service works as an SLB or a reverse proxy, and that this reverse proxy is deployed on each cluster node as a Sidecar of that node.

In this case, the kube-proxy controller of the Kubernetes cluster translates the service into the reverse proxy. For more information about how a Kubernetes cluster controller works, refer to the article about controllers in this series. An instance of kube-proxy is deployed on each cluster node and monitors cluster status changes through the API server. When a service is created, kube-proxy translates the status and attributes of the cluster service into the reverse proxy configuration. The reverse proxy itself is then implemented as shown in the following figure.
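The translation step can be sketched as a function that folds change events into a node-local proxy configuration. This is a simplified model under stated assumptions: the event tuples and the `frontend`/`backends` keys are hypothetical and are not the real Kubernetes API objects; only the event kinds mirror the usual ADDED, MODIFIED, and DELETED watch events.

```python
def apply_event(proxy_config, event):
    """Fold one watch event into the node-local reverse proxy configuration."""
    kind, name, spec = event
    if kind in ("ADDED", "MODIFIED"):
        # Translate service attributes into a proxy entry.
        proxy_config[name] = {
            "frontend": spec["frontend"],
            "backends": list(spec["backends"]),
        }
    elif kind == "DELETED":
        proxy_config.pop(name, None)
    return proxy_config


# A hypothetical stream of events as seen by one node.
events = [
    ("ADDED", "web", {"frontend": ("172.16.0.10", 80),
                      "backends": [("10.0.1.5", 8080)]}),
    ("MODIFIED", "web", {"frontend": ("172.16.0.10", 80),
                         "backends": [("10.0.1.5", 8080), ("10.0.2.7", 8080)]}),
    ("ADDED", "db", {"frontend": ("172.16.0.11", 5432),
                     "backends": [("10.0.3.9", 5432)]}),
    ("DELETED", "db", None),
]

config = {}
for event in events:
    apply_event(config, event)
```

After the loop, `config` holds only the `web` service with both back ends, which is the state the reverse proxy on this node would need to enforce.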

Currently, the reverse proxy on Kubernetes cluster nodes is implemented in one of three modes: userspace, iptables, or ipvs. This article analyzes the implementation in iptables mode. The underlying network is the Flannel cluster network of Alibaba Cloud.

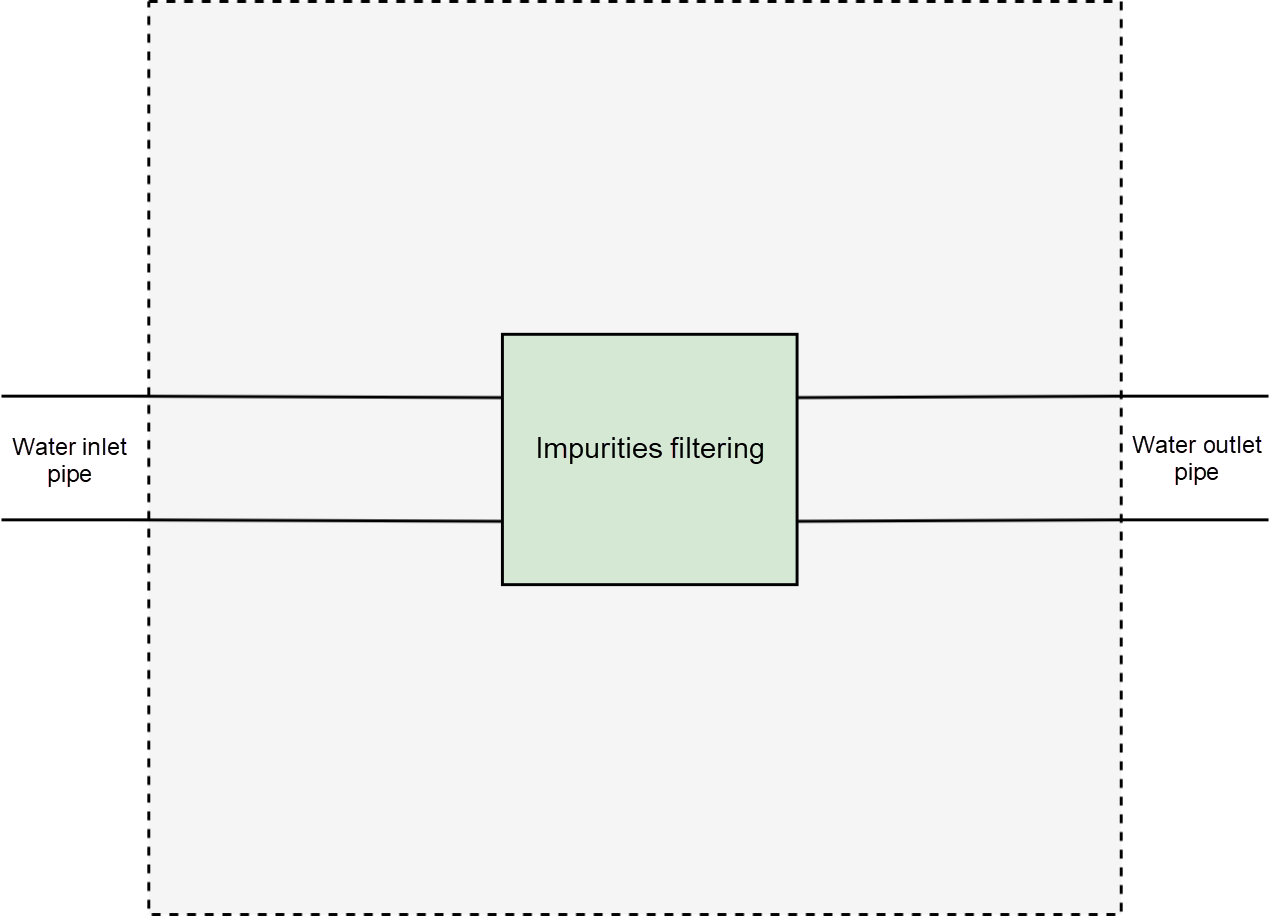

Imagine a house with a water inlet pipe and a water outlet pipe. You cannot directly drink the water that enters through the inlet pipe because it contains impurities, but you want to drink directly from the outlet pipe. Therefore, you cut the pipe and add a water filter to remove the impurities.

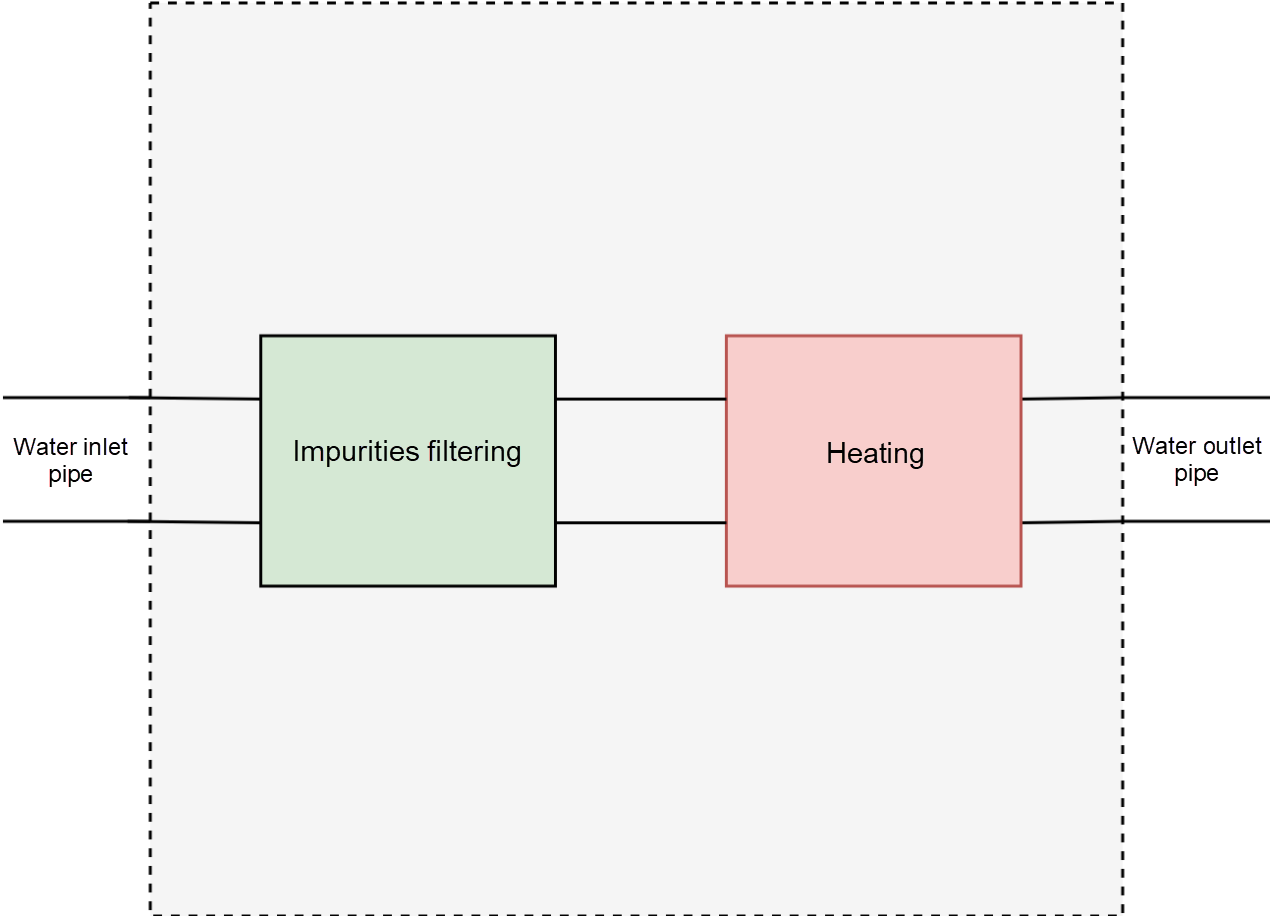

A few days later, in addition to drinking the water directly from the outlet pipe, you want the water to be hot. Therefore, you must cut the pipe again and add a heater.

Clearly, it is not feasible to cut the water pipe and add a new function every time demands change. Also, after a certain point it is no longer possible to cut the pipe any further.

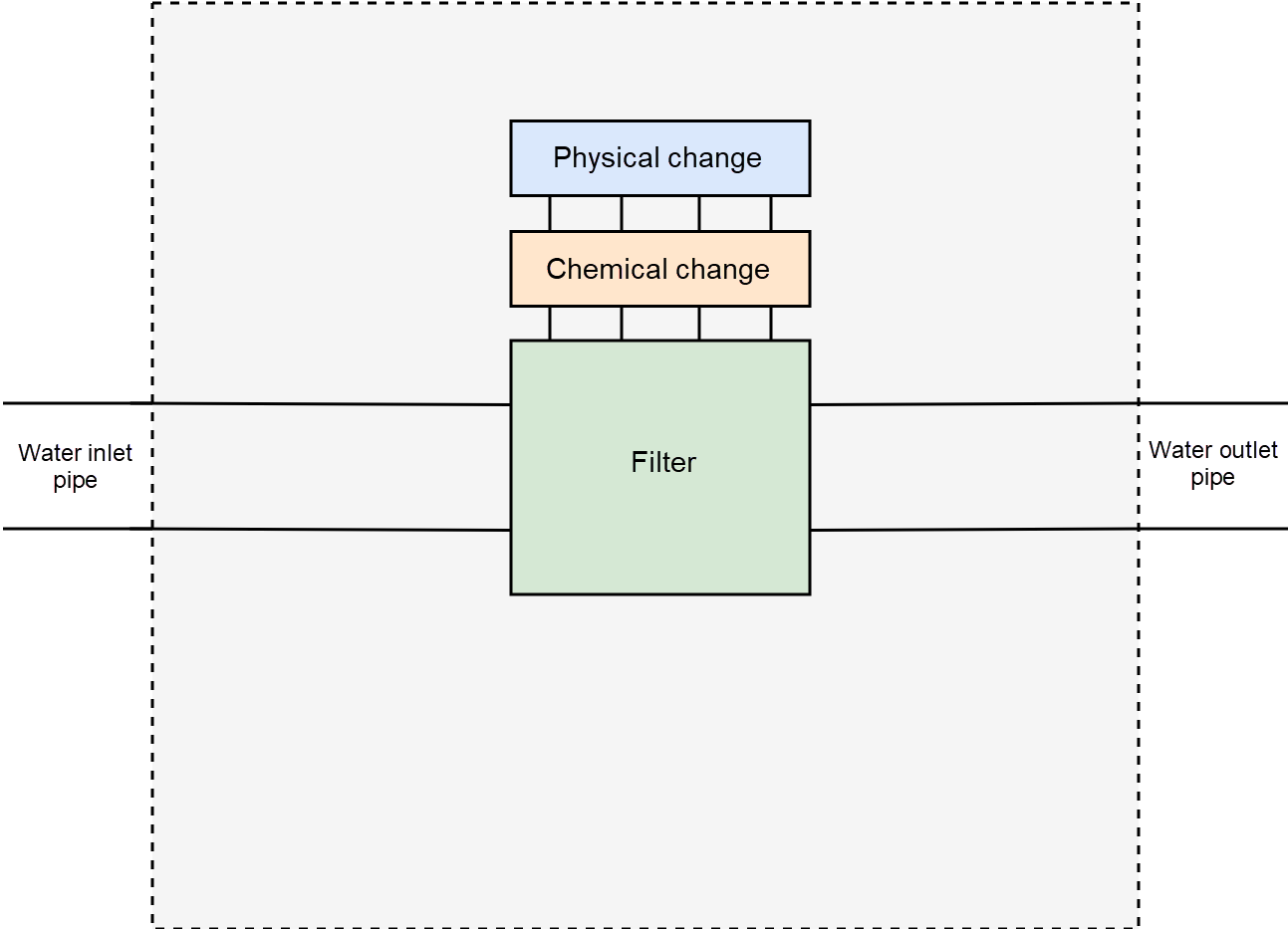

Therefore, a new design is needed. First, fix the position of the incision: in the preceding scenario, ensure that the water pipe has only one incision. Then, abstract two water processing methods: physical change and chemical change.

Based on this design, filter impurities by adding an impurity filtering rule to the chemical change module. To increase the temperature, add a heating rule to the physical change module.

The filter framework is much better than the pipe cutting method. To design the framework, fix the pipe incision position and abstract two water processing methods.
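The water filter design can be sketched in a few lines of Python: one fixed processing point, two modules, and rules that are added as configuration rather than by cutting the pipe again. The class and rule names are hypothetical, and the assignment of rules to modules follows the text above.

```python
class FilterFramework:
    """One fixed incision; new behavior is added as rules, not new cuts."""

    MODULE_ORDER = ("chemical", "physical")

    def __init__(self):
        self.rules = {name: [] for name in self.MODULE_ORDER}

    def add_rule(self, module, rule):
        self.rules[module].append(rule)

    def process(self, water):
        # Every rule of every module runs at the single incision.
        for module in self.MODULE_ORDER:
            for rule in self.rules[module]:
                water = rule(water)
        return water


def remove_impurities(water):
    return {**water, "impurities": False}


def heat(water):
    return {**water, "temp_c": 60}


framework = FilterFramework()
tap = {"impurities": True, "temp_c": 15}

framework.add_rule("chemical", remove_impurities)
filtered = framework.process(tap)

# A new demand is met with a new rule; the pipe itself is untouched.
framework.add_rule("physical", heat)
hot = framework.process(tap)
```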

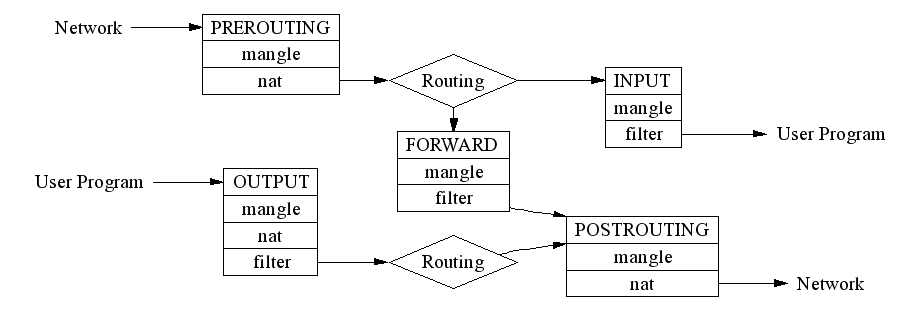

Now consider the iptables mode, or more accurately, how Netfilter works. Netfilter is a filter framework with five incisions on the pipeline that sends, receives, and routes network packets: PREROUTING, FORWARD, POSTROUTING, INPUT, and OUTPUT. In addition, Netfilter defines several ways of processing network packets, such as NAT and filter.

Note that PREROUTING, FORWARD, and POSTROUTING greatly increase the complexity of Netfilter. Without these three incisions, Netfilter would be as simple as the water filter framework.
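To make the five incisions more concrete, the sketch below lists which of them a packet passes through on each of the three basic paths. It is a simplification that ignores the loopback case, and the function name and parameters are hypothetical.

```python
def hooks_traversed(locally_generated, destined_for_local_process):
    """Return the Netfilter incisions a packet crosses, in order."""
    if locally_generated:
        # Sent by a process on this host, leaving through a network device.
        return ["OUTPUT", "POSTROUTING"]
    if destined_for_local_process:
        # Received from a device and delivered to a process on this host.
        return ["PREROUTING", "INPUT"]
    # Received from one device and routed out through another.
    return ["PREROUTING", "FORWARD", "POSTROUTING"]


incoming = hooks_traversed(False, True)
forwarded = hooks_traversed(False, False)
outgoing = hooks_traversed(True, False)
```

Only the forwarded path touches three incisions, which is where most of the extra complexity comes from.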

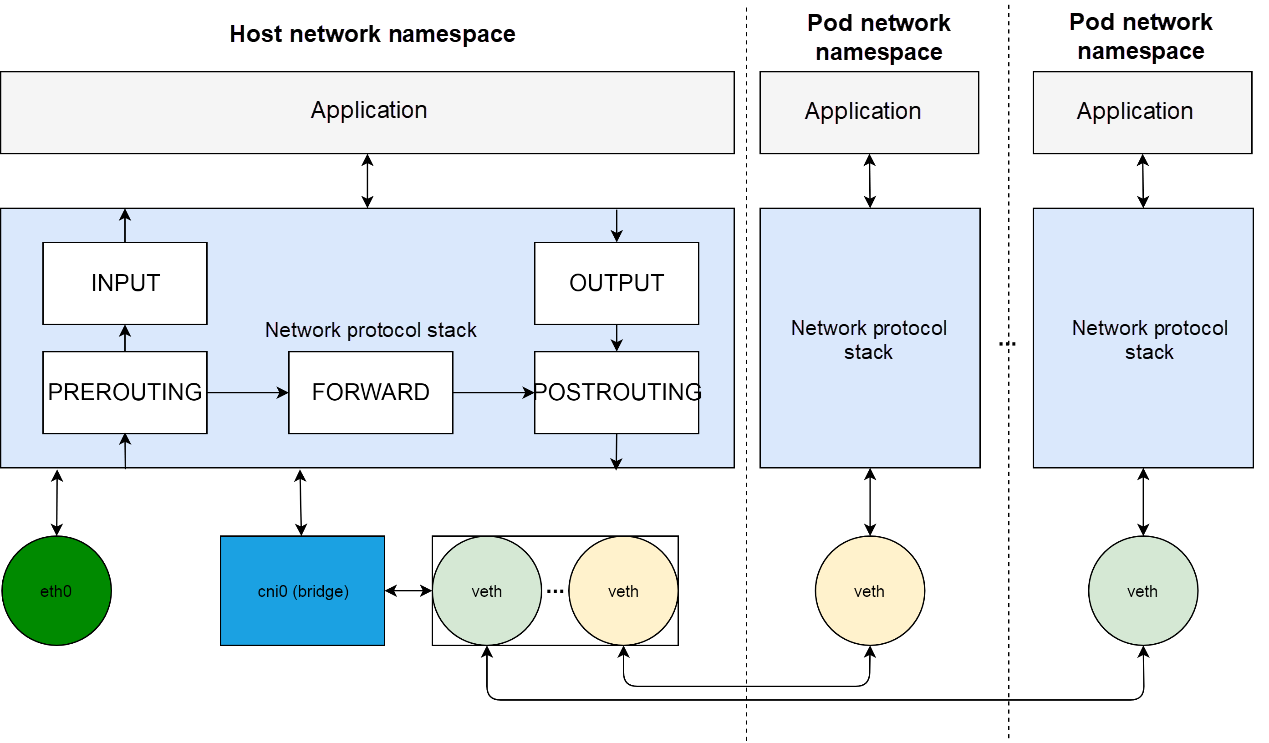

This section describes the overall network of Kubernetes cluster nodes. Horizontally, the network environment on nodes is divided into different network namespaces, including host network namespaces and pod network namespaces. Vertically, each network namespace contains a complete network stack, from applications to protocol stacks and network devices.

At the network device layer, the cni0 virtual bridge builds a virtual LAN inside the system. Each pod network is connected to this virtual LAN through a veth pair. The cni0 virtual LAN communicates with the outside through the host route and the eth0 network port.

At the network protocol stack layer, the reverse proxy of cluster nodes is implemented by programming Netfilter.

Implementing the reverse proxy essentially means performing Destination Network Address Translation (DNAT): changing the destination of a data packet from the IP address and port of the cluster service to those of a specific container group.
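A minimal sketch of DNAT on a single packet follows. The `Packet` type and all addresses are assumptions for illustration; real DNAT is performed by the kernel and also tracks connections so that replies can be translated back.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: tuple  # (ip, port)
    dst: tuple  # (ip, port)


def dnat(packet, service_addr, pod_addr):
    """Rewrite the destination if the packet targets the service address."""
    if packet.dst == service_addr:
        return replace(packet, dst=pod_addr)
    return packet


# Hypothetical addresses.
SERVICE = ("172.16.0.10", 80)
POD = ("10.0.2.7", 8080)

to_service = Packet(src=("10.0.1.5", 40000), dst=SERVICE)
to_other = Packet(src=("10.0.1.5", 40001), dst=("192.0.2.1", 443))

translated = dnat(to_service, SERVICE, POD)
untouched = dnat(to_other, SERVICE, POD)
```

Only the destination changes; the source address and port are left as they were.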

As shown in the Netfilter figure, NAT rules can be added to PREROUTING, OUTPUT, and POSTROUTING to change the source or destination address of a data packet.

DNAT must change the destination before the routing decision and POSTROUTING, so that the data packet is then routed and processed correctly. Therefore, the rules that implement the reverse proxy must be added to PREROUTING and OUTPUT.

Use the PREROUTING rule to process the data flow for access from a pod to the service. After moving from veth in the pod network to cni0, a data packet enters the host protocol stack and is first processed by PREROUTING in Netfilter. After DNAT, the destination address of the data packet changes to the address of another pod, and then the data packet is forwarded to eth0 by the host route and sent to the correct cluster node.

The DNAT rule added to OUTPUT processes data packets sent from the host network to the service in a similar way: the destination address is changed before routing and POSTROUTING so that the route can forward the packet correctly.
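The reason the destination must be rewritten before routing can be seen with a toy route lookup. The routing table below is hypothetical (it has no default route, and the networks and device assignments are assumptions); the point is only that the host route knows how to reach pod addresses, not the service address.

```python
import ipaddress

# Hypothetical node routing table: (destination network, output device).
ROUTES = [
    (ipaddress.ip_network("10.0.1.0/24"), "cni0"),  # pods on this node
    (ipaddress.ip_network("10.0.0.0/16"), "eth0"),  # pods on other nodes
]


def route(dst_ip):
    """Longest-prefix match; returns the output device or None."""
    addr = ipaddress.ip_address(dst_ip)
    matches = [(net, dev) for net, dev in ROUTES if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


SERVICE_IP = "172.16.0.10"   # virtual address, matches no route here
REMOTE_POD_IP = "10.0.2.7"   # chosen by DNAT
LOCAL_POD_IP = "10.0.1.9"

without_dnat = route(SERVICE_IP)
after_dnat_remote = route(REMOTE_POD_IP)
after_dnat_local = route(LOCAL_POD_IP)
```

If routing ran first, it would see only the service address and could not pick the right device; after DNAT, the pod address selects eth0 or cni0 as expected.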

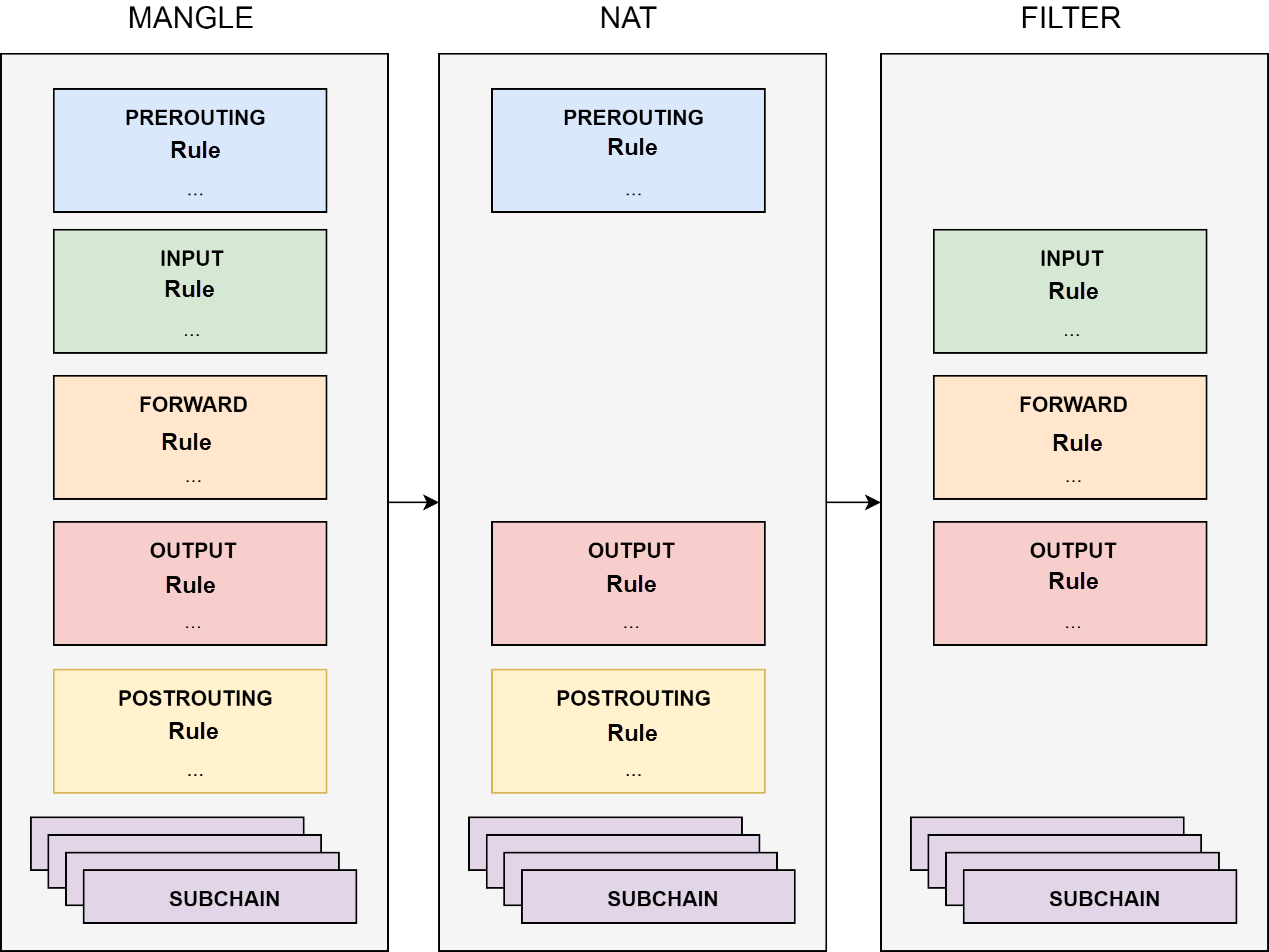

As described earlier, Netfilter is a filter framework with five incisions on the data pipeline, and data packets are processed at those incisions. Although fixing the incision positions and classifying the packet processing methods greatly improves the design, the pipeline must still be modified to handle new functions. In other words, the framework does not completely decouple the pipeline from the filtering function.

To decouple the pipeline and the filtering function, Netfilter uses the table concept. The table is the filtering center of Netfilter. The core function of the table is the classification (table) of filtering methods and the organization (chain) of filtering rules for each filtering method.

After the filtering function is decoupled from the pipeline, all processing of data packets becomes a matter of configuring the table. The five incisions on the pipeline become entries and exits for the data flow: they send packets to the filtering center and pass the processed packets on along the pipeline.

As shown in the preceding figure, Netfilter organizes rules into a chain in the table. The table contains the default chains for each pipeline incision and the custom chains. A default chain is a data entry, which jumps to a custom chain to implement some complex functions. Custom chains bring obvious benefits.

To implement a complex filtering function, such as implementing the reverse proxy of Kubernetes cluster nodes, use custom chains to modularize the rules.
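The jump from a default chain into custom chains can be sketched as a small rule engine. This is an illustrative model, not how iptables is implemented: the chain names, the `(match, target)` rule shape, and the string verdict are all hypothetical.

```python
def always(packet):
    return True


def dst_is(addr):
    return lambda packet: packet["dst"] == addr


def traverse(chains, chain_name, packet):
    """Walk a chain; jump into custom chains; return the first verdict."""
    for match, target in chains[chain_name]:
        if not match(packet):
            continue
        if target in chains:
            # Jump to a custom chain, then resume here if it has no verdict.
            verdict = traverse(chains, target, packet)
            if verdict is not None:
                return verdict
        else:
            return target  # terminal action
    return None  # end of chain: return to the calling chain


# Hypothetical chains: a default chain delegating to modular custom chains.
chains = {
    "PREROUTING": [(always, "EXAMPLE-SERVICES")],
    "EXAMPLE-SERVICES": [(dst_is(("172.16.0.10", 80)), "EXAMPLE-SVC-WEB")],
    "EXAMPLE-SVC-WEB": [(always, "DNAT:10.0.1.5:8080")],
}

hit = traverse(chains, "PREROUTING", {"dst": ("172.16.0.10", 80)})
miss = traverse(chains, "PREROUTING", {"dst": ("192.0.2.1", 443)})
```

Adding another service means adding one rule to the services chain and one new custom chain, without touching the default chain.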

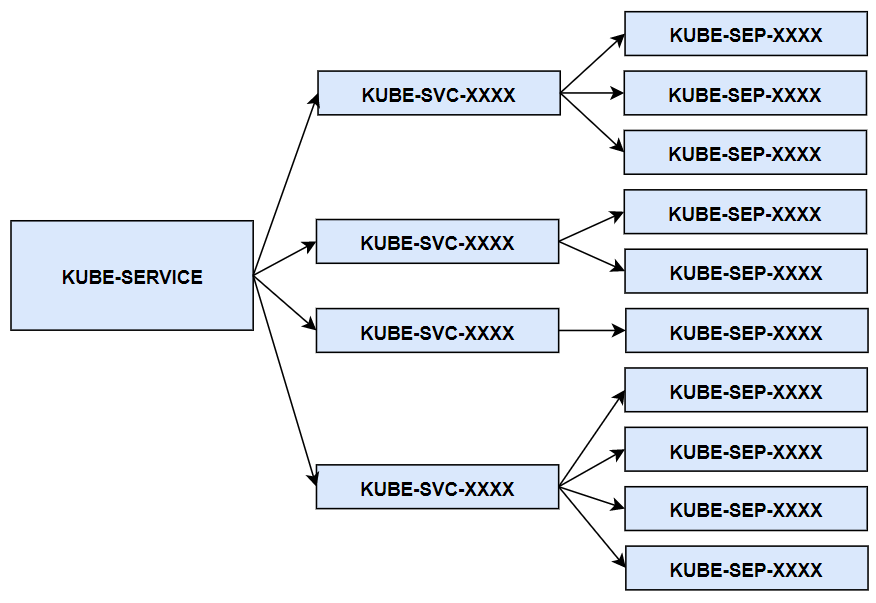

Implementing the reverse proxy of the cluster service means using custom chains to perform DNAT on data packets in a modular way. KUBE-SERVICES is the entry chain of the entire reverse proxy, that is, the common entry for all services. A KUBE-SVC-XXXX chain is the entry chain of a specific service.

The KUBE-SERVICES chain jumps to the KUBE-SVC-XXXX chain of a specific service based on the service IP address. A KUBE-SEP-XXXX chain represents the address and port of a specific pod, that is, an endpoint. The KUBE-SVC-XXXX chain of a service uses an algorithm (generally a random one) to jump to one of its endpoint chains.

As mentioned above, DNAT must change the destination address before routing and POSTROUTING so that data packets are then routed and processed correctly. Therefore, KUBE-SERVICES is called from the default chains of PREROUTING and OUTPUT.
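Because the rules in a chain are evaluated one after another, a uniform random choice among n endpoints needs a different probability on each rule. One common scheme, and the one kube-proxy in iptables mode is generally understood to use through the iptables statistic match, gives rule i a probability of 1/(n - i) and makes the last rule unconditional. The sketch below checks the arithmetic with exact fractions; the function names are hypothetical.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n endpoint rules evaluated in order."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_probabilities(n):
    """Overall chance that each endpoint is chosen."""
    remaining = Fraction(1)  # chance that no earlier rule has matched
    result = []
    for p in rule_probabilities(n):
        result.append(remaining * p)
        remaining *= 1 - p
    return result


per_rule = rule_probabilities(3)
overall = effective_probabilities(3)
```

With three endpoints the rules carry probabilities 1/3, 1/2, and 1, yet each endpoint is selected with an overall probability of 1/3.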

This article provides a comprehensive understanding of the concept and implementation of the Kubernetes cluster service. The key points are as follows:

1. The service essentially works as SLB.

2. To implement service load balancing, Kubernetes uses the Sidecar mode, which is similar to the service mesh, instead of an exclusive mode of the LVS type.

3. kube-proxy is essentially a cluster controller. Additionally, think about the design of the filter framework and understand the principle of service load balancing implemented by iptables.

