By Sheng Dong, Alibaba Cloud After-Sales Technical Expert

Istio is the future. As container clusters, especially Kubernetes clusters, become the norm, applications grow increasingly complex and the demand for service governance becomes stronger. Istio meets that demand.

The status quo of Istio is that many people talk about it but only a few use it. As a result, there are many articles sharing facts and reasons but very few articles sharing practical experience.

As a frontline team, the Alibaba Cloud Customer Services team has the responsibility to share its troubleshooting experience, and this article explains how to troubleshoot Istio issues.

A typical way to get started with Istio is to install it in an internal test cluster and deploy the bookinfo application according to the official documentation. When running the 'kubectl get pods' command after deployment, the user finds that only 1 of the 2 containers in each pod is in the READY state.

# kubectl get pods

NAME READY STATUS RESTARTS AGE

details-v1-68868454f5-94hzd 1/2 Running 0 1m

productpage-v1-5cb458d74f-28nlz 1/2 Running 0 1m

ratings-v1-76f4c9765f-gjjsc 1/2 Running 0 1m

reviews-v1-56f6855586-dplsf 1/2 Running 0 1m

reviews-v2-65c9df47f8-zdgbw 1/2 Running 0 1m

reviews-v3-6cf47594fd-cvrtf 1/2 Running 0 1m

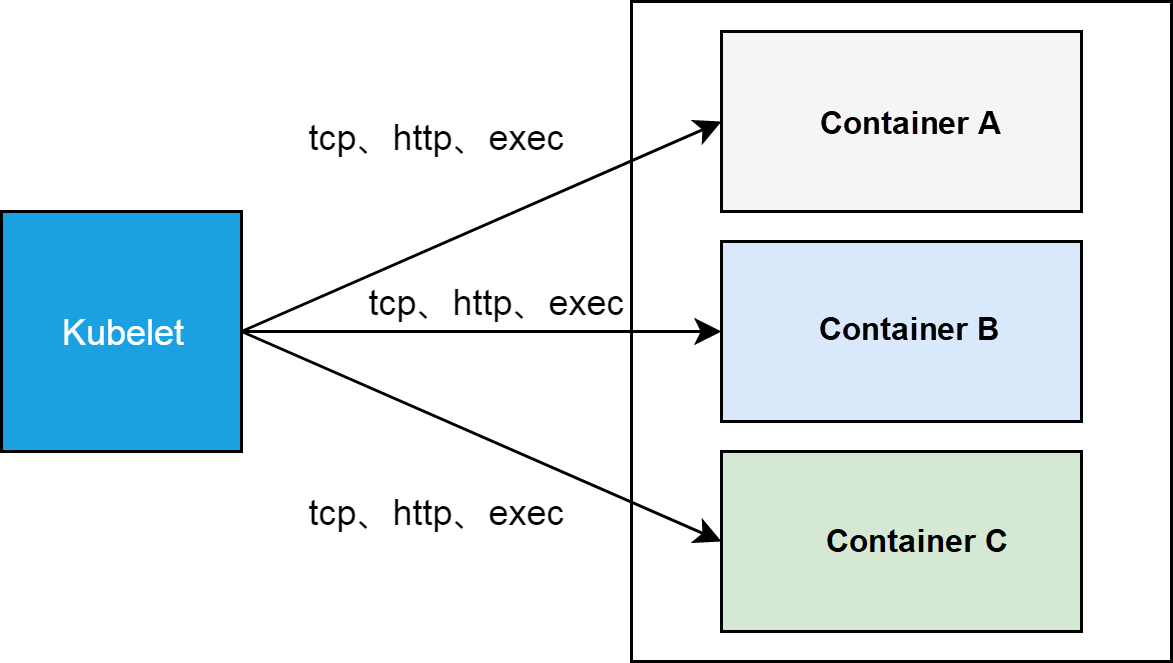

The READY column indicates the readiness of containers in each pod. Kubelet on each cluster node determines the readiness of the corresponding container based on the readiness rules of containers, including TCP, HTTP, and EXEC.

As a process running on each node, kubelet accesses a container interface through TCP or HTTP (from the node network namespace to the pod network namespace) or runs the exec command in a container namespace to determine whether the container is ready.

If you have never noticed the READY column, you may ask what "2" and "1/2" mean.

"2" indicates that each pod has two containers and "1/2" indicates that one of the two containers is ready. Therefore, only one container passes the readiness test. We will further introduce the meaning of "2" in the next section. In this section, we discuss why one container in each pod is not ready.
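
The same reading of the READY column can be automated. The following is a minimal sketch that assumes the plain-text output of 'kubectl get pods'; the function name unready_pods and the second sample row (example-pod) are hypothetical and exist only for illustration.

```python
def unready_pods(kubectl_output: str) -> dict:
    """Return {pod_name: (ready, total)} for pods whose READY column is not full."""
    result = {}
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    for line in lines[1:]:  # skip the NAME/READY/STATUS header row
        fields = line.split()
        name, ready_column = fields[0], fields[1]
        ready, total = (int(part) for part in ready_column.split("/"))
        if ready < total:
            result[name] = (ready, total)
    return result


# The first row is taken from the output above; "example-pod" is a made-up healthy pod.
sample = """\
NAME                          READY   STATUS    RESTARTS   AGE
details-v1-68868454f5-94hzd   1/2     Running   0          1m
example-pod                   2/2     Running   0          1m
"""
print(unready_pods(sample))
```

Only pods with fewer ready containers than total containers are reported, which is the same "1/2" condition described above.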

If you use the kubectl tool to obtain the orchestration template of the first details pod, you can see that the pod contains two containers and that a readiness probe is defined for only one of them. For the container without a readiness probe, kubelet considers the container ready as soon as the process in the container starts. Therefore, 1/2 means that the container with the readiness probe fails the kubelet test.

The container that fails the readiness probe test is the Istio-proxy container. The readiness probe rule is defined as follows.

    readinessProbe:
      failureThreshold: 30
      httpGet:
        path: /healthz/ready
        port: 15020
        scheme: HTTP
      initialDelaySeconds: 1
      periodSeconds: 2
      successThreshold: 1
      timeoutSeconds: 1
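
To see how the threshold fields interact, the following sketch replays a sequence of probe results. It is a simplified model and not kubelet's actual code: it assumes that a container becomes ready after successThreshold consecutive passes and becomes not ready after failureThreshold consecutive failures. The function name readiness_after is hypothetical.

```python
def readiness_after(results, success_threshold=1, failure_threshold=30, ready=False):
    """Replay probe results (True = probe passed) and return the final readiness.

    Simplified model: the state flips to ready after `success_threshold`
    consecutive passes and to not ready after `failure_threshold`
    consecutive failures. A container starts in the not-ready state.
    """
    passes = failures = 0
    for passed in results:
        if passed:
            passes, failures = passes + 1, 0
            if passes >= success_threshold:
                ready = True
        else:
            passes, failures = 0, failures + 1
            if failures >= failure_threshold:
                ready = False
    return ready


# A container whose probe never passes stays not ready, as in this case.
print(readiness_after([False] * 10))
```

Under this model and the values above, a container that was ready is marked not ready only after 30 consecutive failed probes, which is about 60 seconds at a 2-second period.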

Log on to the node where this pod is located, use the curl tool to simulate kubelet access to the following URI, and test the readiness status of the Istio-proxy container.

# curl http://172.16.3.43:15020/healthz/ready -v

* About to connect() to 172.16.3.43 port 15020 (#0)

* Trying 172.16.3.43...

* Connected to 172.16.3.43 (172.16.3.43) port 15020 (#0)

> GET /healthz/ready HTTP/1.1

> User-Agent: curl/7.29.0

> Host: 172.16.3.43:15020

> Accept: */*

>

< HTTP/1.1 503 Service Unavailable

< Date: Fri, 30 Aug 2019 16:43:50 GMT

< Content-Length: 0

<

* Connection #0 to host 172.16.3.43 left intact
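
The curl test can also be expressed as a small function. This is a sketch using only the Python standard library, not kubelet's implementation; it assumes the usual rule that an HTTP probe succeeds when the status code is at least 200 and below 400. The function name http_probe is hypothetical.

```python
import urllib.error
import urllib.request


def http_probe(url: str, timeout: float = 1.0) -> bool:
    """Return True if the endpoint answers with a status in [200, 400)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except urllib.error.HTTPError:
        # 4xx/5xx responses, such as the 503 above, count as a failed probe.
        return False
    except (urllib.error.URLError, OSError):
        # Connection refused, timeouts, and similar errors also fail the probe.
        return False
```

Called from the node as http_probe("http://172.16.3.43:15020/healthz/ready"), this function would return False for the 503 response shown above.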

The preceding section describes the issue symptom but overlooks the question of why the number of containers in a pod is 2. Strictly speaking, each pod has at least two containers: a placeholder container, Pause, and a working container. However, when we run the kubectl command to obtain the list of pods, the READY column does not count the Pause container.

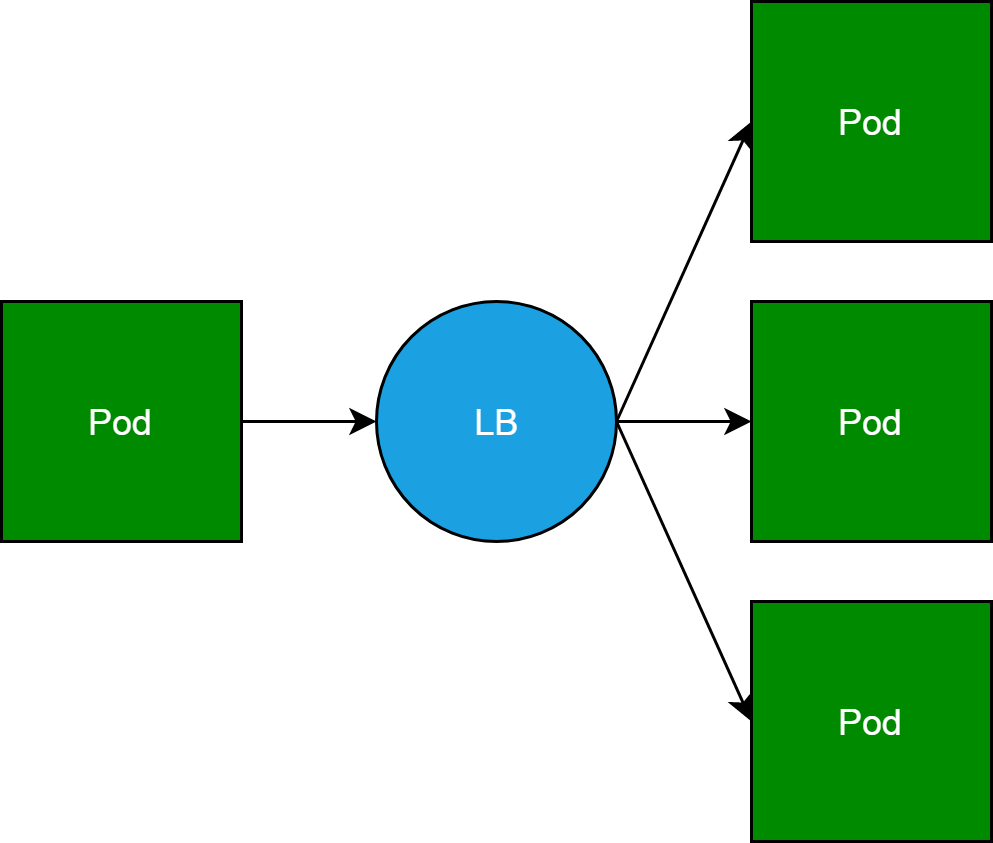

Besides the working container, the second container counted here is the Sidecar, the core concept of the service mesh. The name Sidecar does not reflect the nature of the container. The Sidecar container is essentially a reverse proxy: it acts as the load balancer (SLB) that a pod uses to access the back-end pods of other services.

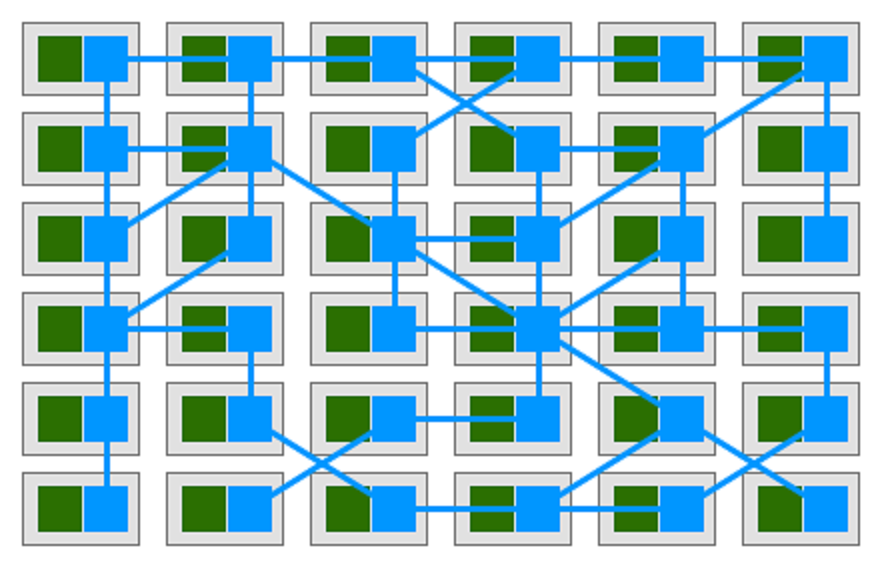

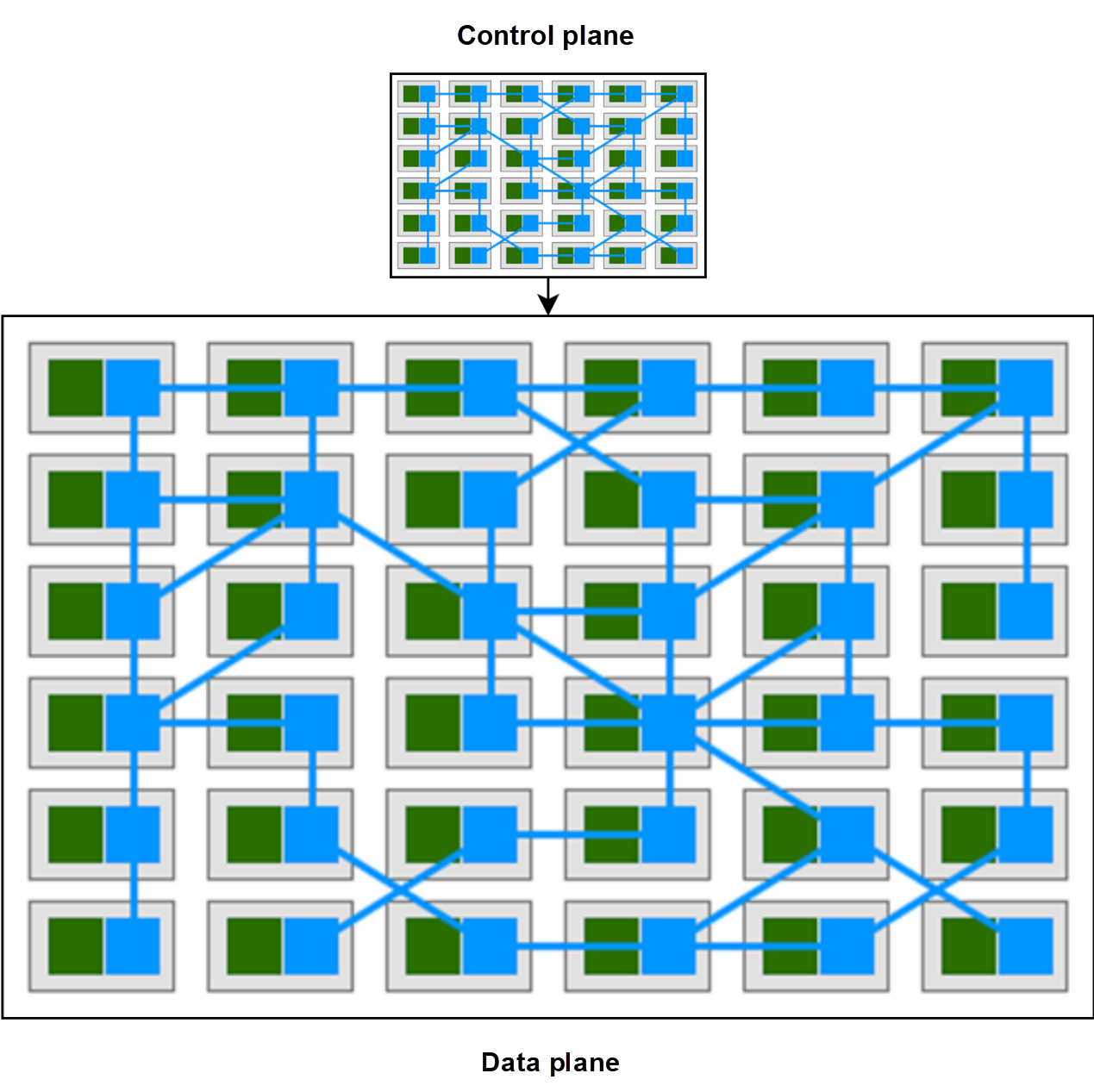

However, when each pod in a cluster carries a reverse proxy, the pod and the reverse proxy form a service mesh, as shown in the following figure.

Therefore, the Sidecar mode is a "carry your own correspondent" mode: each pod brings its own messenger. When we bind the Sidecar to the pod, the Sidecar acts as a reverse proxy when forwarding outbound traffic, and it can do things beyond a reverse proxy's responsibilities when receiving inbound traffic. We will discuss this in detail in upcoming articles.

Istio implements the service mesh on top of Kubernetes. The Sidecar container that Istio uses is the container that was not ready in the first section. Therefore, the issue is that none of the Sidecar containers in the service mesh are ready.

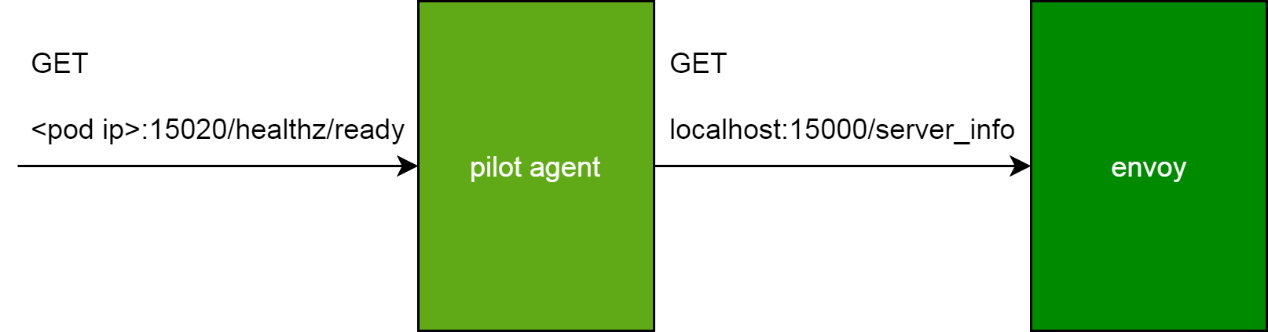

As described in the preceding section, each pod in Istio comes with a reverse proxy, and the issue here is that none of the Sidecar containers are ready. As defined in the readiness probe, a Sidecar container is considered ready if a request to the following URL succeeds.

http://<pod ip>:15020/healthz/ready

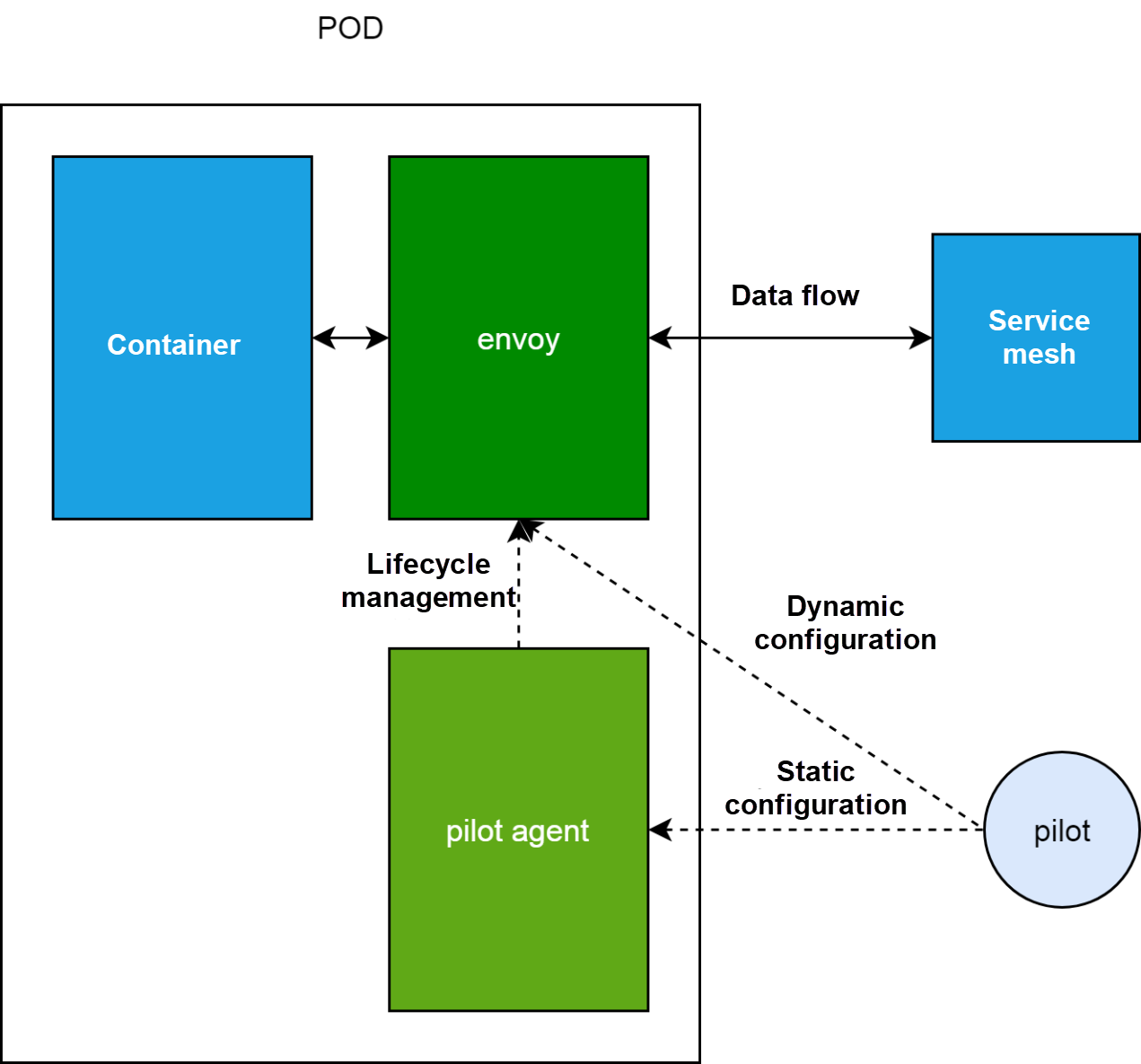

Next, let's discuss the composition and working principle of a pod and its Sidecar. In the service mesh, a pod contains not only the business container but also the Istio-proxy Sidecar container. Generally, the Istio-proxy container starts two processes: pilot agent and envoy.

The envoy process is the proxy that handles functions such as traffic management. All traffic into and out of the business container must pass through the envoy process. The pilot agent process maintains the static configuration of the envoy process and manages the lifecycle of the envoy process. We will discuss the dynamic configuration in the next section.

Run the following command to access the Istio-proxy container for further troubleshooting.

Tip: Access the Istio-proxy container in privileged mode as the 1337 user so that you can run commands that work only in privileged mode, for example, the iptables command.

docker exec -ti -u 1337 --privileged <istio-proxy container id> bash

The 1337 user is the user named istio-proxy that is defined in the Sidecar image, and it is the default user of the Sidecar container. If the -u option is omitted from the preceding command, privileged mode is granted to the root user. In that case, after accessing the container, you need to switch to the root user to run a privileged command.

After accessing the container, run the netstat command to check the listeners. You will find that the pilot agent process listens on port 15020, the readiness probe port.

istio-proxy@details-v1-68868454f5-94hzd:/$ netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:15090 0.0.0.0:* LISTEN 19/envoy

tcp 0 0 127.0.0.1:15000 0.0.0.0:* LISTEN 19/envoy

tcp 0 0 0.0.0.0:9080 0.0.0.0:* LISTEN -

tcp6 0 0 :::15020 :::* LISTEN 1/pilot-agent

Accessing the readiness probe port from inside the Istio-proxy container also returns error 503.

Now that we know about the Sidecar proxy and the pilot agent process that manages the proxy lifecycle, consider how the pilot agent process implements the healthz/ready interface. If the interface returns OK, the pilot agent process is ready and the proxy is running.

The readiness check interface of the pilot agent process works as follows. After receiving a request, this interface calls the server_info interface of the envoy proxy. The IP address used for the call is localhost because the communication is between processes in the same pod. The port used for the call is proxyAdminPort 15000 of the envoy process.

Next, check the logs of the Istio-proxy container. The container log repeatedly outputs an error that contains two parts. The pilot agent process generates the "Envoy proxy is NOT ready" part when responding to the healthz/ready interface, indicating that the envoy proxy is not ready.

The pilot agent process obtains the "config not received from Pilot (is Pilot running?): cds updates: 0 successful, 0 rejected; lds updates: 0 successful, 0 rejected" part when accessing server_info through proxyAdminPort, indicating that the envoy process cannot obtain its configuration from Pilot.

Envoy proxy is NOT ready: config not received from Pilot (is Pilot running?): cds updates: 0 successful, 0 rejected; lds updates: 0 successful, 0 rejected.
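
The structure of this log line suggests how the readiness decision might be made. The sketch below is an assumption inferred from the message, not Istio's actual implementation: it treats envoy as ready once it has processed at least one CDS update and at least one LDS update, whether accepted or rejected. The function name envoy_readiness and its parameters are hypothetical.

```python
def envoy_readiness(cds_ok: int, cds_rejected: int, lds_ok: int, lds_rejected: int):
    """Return (http_status, message) in the style of the healthz/ready check.

    Assumption: envoy counts as ready once it has processed at least one
    CDS update and one LDS update.
    """
    cds_updates = cds_ok + cds_rejected
    lds_updates = lds_ok + lds_rejected
    if cds_updates > 0 and lds_updates > 0:
        return 200, "OK"
    detail = (
        "config not received from Pilot (is Pilot running?): "
        f"cds updates: {cds_ok} successful, {cds_rejected} rejected; "
        f"lds updates: {lds_ok} successful, {lds_rejected} rejected"
    )
    return 503, f"Envoy proxy is NOT ready: {detail}"


status, message = envoy_readiness(0, 0, 0, 0)
print(status, message)
```

With all four counters at zero, the sketch yields status 503 and the same text as the log line, which matches the symptom: envoy has received no dynamic configuration at all.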

In the preceding section, we deliberately ignored the dotted line from Pilot to envoy, which represents the dynamic configuration. The error indicates that envoy fails to obtain the dynamic configuration from Pilot on the control plane.

Before further analysis, let's talk about the control plane and data plane. The control plane and data plane modes are ubiquitous. Let's consider two extreme examples in this section.

Take the DHCP server as the first example. As we all know, a computer in a local area network (LAN) can be configured to obtain its IP address from a DHCP server. In this example, the DHCP server manages and allocates IP addresses to computers in the LAN. The DHCP server is a control plane and each computer that obtains an IP address is a data plane.

For the second example, consider a film script and a film performance. A script is considered as a control plane, while a film performance, including each character's speech and film scene layout, is considered as a data plane.

In the first example, the control plane affects only one attribute of a computer. In the second example, the control plane is almost a complete abstraction and copy of the data plane, affecting all aspects of the data plane. The control plane of the Istio service mesh is similar to the one in the second example, as shown in the following figure.

The Pilot component of the Istio control plane uses the gRPC protocol to expose its service at istio-pilot.istio-system:15010. The envoy process cannot obtain the dynamic configuration from Pilot because the cluster DNS cannot be used in any pod.

In the istio-proxy Sidecar container, the envoy process cannot access Pilot because the cluster DNS cannot resolve the istio-pilot.istio-system service name. In the container, the IP address of the DNS server in the resolv.conf file is 172.19.0.10, which is the default IP address of the kube-dns service of the cluster.

istio-proxy@details-v1-68868454f5-94hzd:/$ cat /etc/resolv.conf

nameserver 172.19.0.10

search default.svc.cluster.local svc.cluster.local cluster.local localdomain

However, the customer had deleted and rebuilt the kube-dns service without specifying the service IP address. As a result, the cluster DNS address changed, and no Sidecar container could reach Pilot.

# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 172.19.9.54 <none> 53/UDP,53/TCP 5d
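
The mismatch between the two outputs can be checked mechanically. The following sketch compares the nameserver entries in a pod's resolv.conf with the current ClusterIP of the kube-dns service; the function names nameservers and dns_mismatch are hypothetical, and the input values are copied from the outputs above.

```python
def nameservers(resolv_conf: str) -> list:
    """Extract the nameserver addresses from resolv.conf content."""
    servers = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def dns_mismatch(resolv_conf: str, kube_dns_cluster_ip: str) -> bool:
    """Return True if the resolver does not point at the current kube-dns ClusterIP."""
    return kube_dns_cluster_ip not in nameservers(resolv_conf)


resolv_conf = """\
nameserver 172.19.0.10
search default.svc.cluster.local svc.cluster.local cluster.local localdomain
"""
print(dns_mismatch(resolv_conf, "172.19.9.54"))  # prints True
```

A result of True means that the pods still send DNS queries to 172.19.0.10, an address that no longer belongs to the kube-dns service.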

Finally, the Sidecar containers recovered after the kube-dns service was modified to specify the IP address.

# kubectl get pods

NAME READY STATUS RESTARTS AGE

details-v1-68868454f5-94hzd 2/2 Running 0 6d

nginx-647d5bf6c5-gfvkm 2/2 Running 0 2d

nginx-647d5bf6c5-wvfpd 2/2 Running 0 2d

productpage-v1-5cb458d74f-28nlz 2/2 Running 0 6d

ratings-v1-76f4c9765f-gjjsc 2/2 Running 0 6d

reviews-v1-56f6855586-dplsf 2/2 Running 0 6d

reviews-v2-65c9df47f8-zdgbw 2/2 Running 0 6d

reviews-v3-6cf47594fd-cvrtf 2/2 Running 0 6d

In practice, troubleshooting this issue takes only a few minutes. However, it takes time to understand the principles, causes, and effects behind the process. This is the first article about Istio in our series, and we hope it helps you in troubleshooting.
