By Jiongsi and Jueli

Topics covered:

At present, two prominent directions dominate reasoning scenarios for foundation models: Retrieval-Augmented Generation (RAG) and Agent. In this article, we will delve into applications related to the Agent direction. RAG-related scenarios will be discussed in subsequent series.

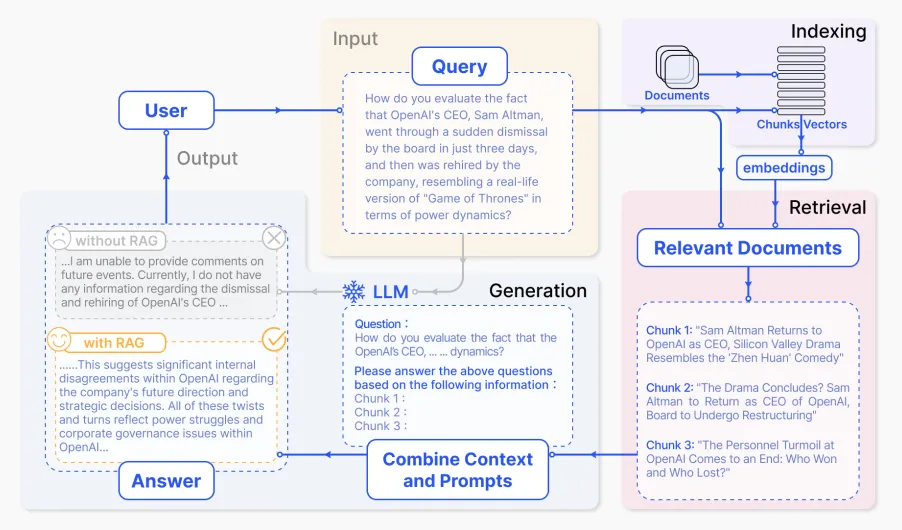

RAG: a foundation model project that combines information retrieval and text generation.

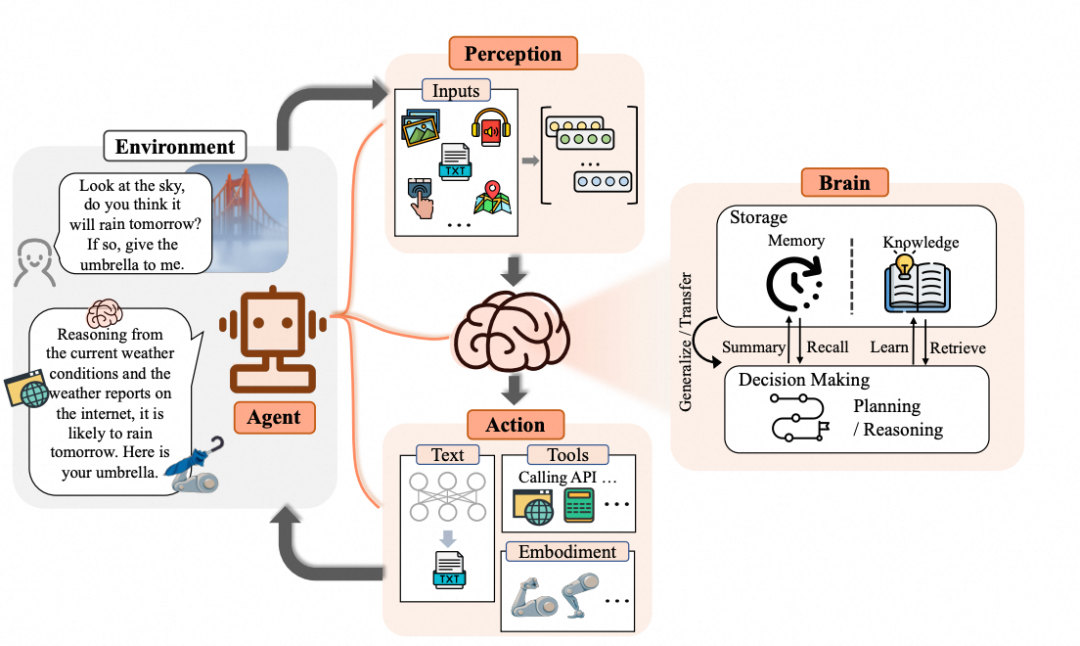

Agent: a foundation model project capable of autonomous task execution.

Diagram of RAG Project Topology

Diagram of Agent Project Topology

The word "agent" is already familiar to us. When we visit a website, the backend service identifies our operating system and browser from the User-Agent header.

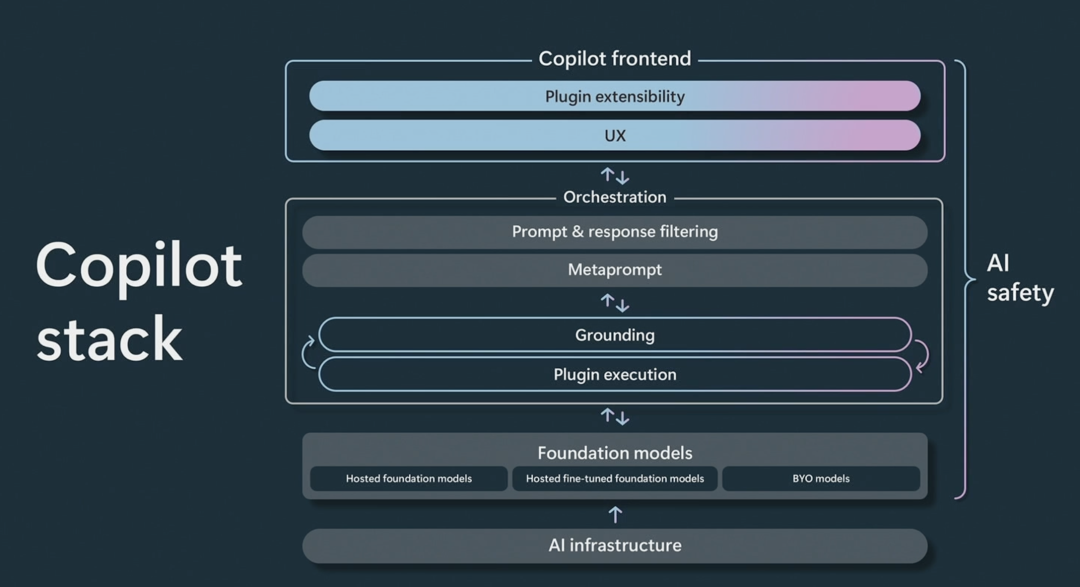

Currently, one of the common applications associated with many foundation models is Copilot, akin to a co-pilot on an aircraft. However, in practical use, if Copilot were limited to mere code completion, it would fall short of our expectations. Our daily work environment encompasses not only code but also numerous tools, platforms, and processes. Unfortunately, in many repetitive scenarios where tools are readily available, Copilot faces challenges in significantly enhancing efficiency.

As a copilot, if it can assist us in our daily work, it makes sense for its operational scope to encompass tools, platforms, and processes. However, do we truly need a copilot to write change processes or audit risks?

At this juncture, a copilot transcends mere assistance and becomes an agent.

The key distinction lies in their roles: primary and secondary.

• A copilot is just an assistant, following instructions and providing feedback during complex executions.

• An agent has a subjective initiative and selects tools to achieve goals based on requirements.

In the previous two articles, we explored some basic applications using LangChain. In this article, we will construct an agent project framework based on LangChain specifically for O&M diagnosis. For readers unfamiliar with LangChain, here is a brief introduction: LangChain is a project tool designed to explore, develop, and facilitate the use of large language models for programming, creative tasks, and automation.

Let's consider a fully open source HDFS cluster as our diagnostic target.

First, let's analyze our daily O&M diagnosis scenarios:

In some cases, steps 2 and 3 may repeat, and step 3 need not exclusively target the Linux OS; it can apply to other types of O&M objects as well.

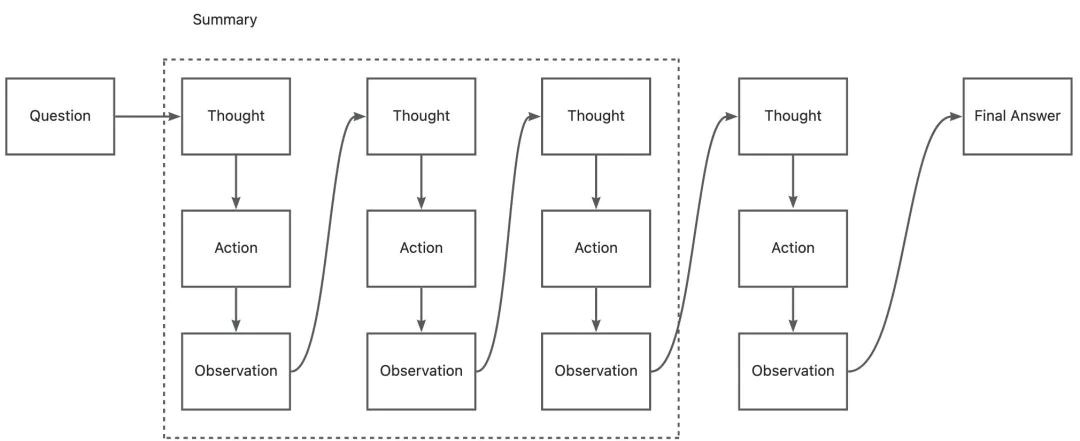

LangChain, a framework that integrates tools with large models, operates based on its core principle: Reasoning and Acting (ReAct). For further details on this principle, refer to the first article in this series.

Let's discuss O&M diagnostic scenarios and how we can build an agent based on LangChain.

First, based on our analysis of previous scenarios, we recognize the need for two tools: one to check logs and another to execute commands. Consequently, we've developed these tools:

| Tool | Purpose |
| --- | --- |
| exec_command(command, host, timeout) | Execute shell commands on any machine. |
| get_namenodes() | Obtain the namenode list of the current cluster. |
| hdfs_touchz() | Run touchz in an HDFS cluster to check whether the cluster is writable. |
| namenode_log(host) | Retrieve the most recent 30 lines from the specified namenode log. If the content exceeds 2,000 characters, truncate it. |
| get_local_disk_free(host) | Run the df command on the specified machine to view the disk capacity and return the result. |
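As a rough illustration of the namenode_log behavior described in the table, the sketch below keeps the last 30 lines of a log and caps the result at 2,000 characters. The helper name `tail_and_truncate` is hypothetical, and keeping the newest end of the text when truncating is an assumption; the open-sourced tool may truncate differently.

```python
def tail_and_truncate(text: str, max_lines: int = 30, max_chars: int = 2000) -> str:
    """Keep the last `max_lines` lines, then cap the result at `max_chars` characters."""
    tail = "\n".join(text.splitlines()[-max_lines:])
    # Assumption: when truncating, keep the end of the log, where the newest entries are.
    return tail[-max_chars:]


log = "\n".join(f"line {i}" for i in range(100))
print(tail_and_truncate(log).splitlines()[0])
```

Bounding the tool output this way keeps a single observation from consuming a large share of the model's context.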

To facilitate in-depth exploration, the diagnostic tool code is open sourced as follows:

https://github.com/alibaba/sreworks-ext/blob/master/aibond/cases/hdfs-analyse/hdfscluster.py

Now, some of you might wonder: since we already have exec_command, which can execute any command, why bother creating specific tools like namenode_log and get_local_disk_free? In practice, we've discovered that more specialized tools yield better results. Foundation models share a common trait with humans: while most O&M problems can be solved using Linux commands, encapsulating O&M tools serves a purpose. It reduces the need to remember numerous commands, allowing O&M experts to focus on critical issues without worrying about missing symbols in recently entered commands.

If you examine the code closely, you will notice a detail: our tools are not identical to the native tools of LangChain. We have introduced the concept of classes atop the LangChain framework. As a result, a tool is no longer limited to being a plain function; it can also be an instance of a class.

In the first article, we introduced the concept of object-oriented AI programming. At that time, you might not have fully grasped its significance. Now, let's explore an example that highlights the advantages of this approach.

Consider an HDFS cluster where direct logon to each node is not feasible. Instead, you must first log on to a jump server and then access individual machines. Consequently, every function call must include the IP address of the jump server. For instance, the function for querying logs would transform into the following:

namenode_log(gateway_host, host)

Consider this scenario: when programming, if a function has two input parameters with similar formats, it is easy to make mistakes in variable positions without the aid of an IDE prompt.

The same issue arises with foundation models. Therefore, object-oriented function calls are also beneficial for foundation models. By writing functions in an object-oriented manner, we can increase the success rate of function calls by these models.
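The idea can be sketched as follows. This is not the aibond implementation: `HDFSClusterSketch` and `collect_tools` are hypothetical names, and the method body only describes the route instead of opening a real connection. The point is that the jump server is bound once in the constructor, so each exposed tool takes a single host argument.

```python
import inspect
from typing import Callable, Dict


class HDFSClusterSketch:
    """Hypothetical stand-in for the article's HDFSCluster class."""

    def __init__(self, gateway_host: str):
        # The jump server is fixed once, so no tool call has to repeat it.
        self.gateway_host = gateway_host

    def namenode_log(self, host: str) -> str:
        """Get one HDFS cluster namenode's log."""
        # A real tool would connect through the gateway; this sketch only describes the route.
        return f"log of {host} via {self.gateway_host}"


def collect_tools(obj: object) -> Dict[str, Callable]:
    """Expose every public bound method of `obj` as a named tool."""
    return {
        name: method
        for name, method in inspect.getmembers(obj, predicate=inspect.ismethod)
        if not name.startswith("_")
    }


tools = collect_tools(HDFSClusterSketch("gateway.example"))
print(sorted(tools))
print(tools["namenode_log"]("10.0.0.1"))
```

Because the model only ever sees `namenode_log(host)`, there is no second address-like parameter for it to swap by mistake.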

cluster = HDFSCluster(gateway_host)

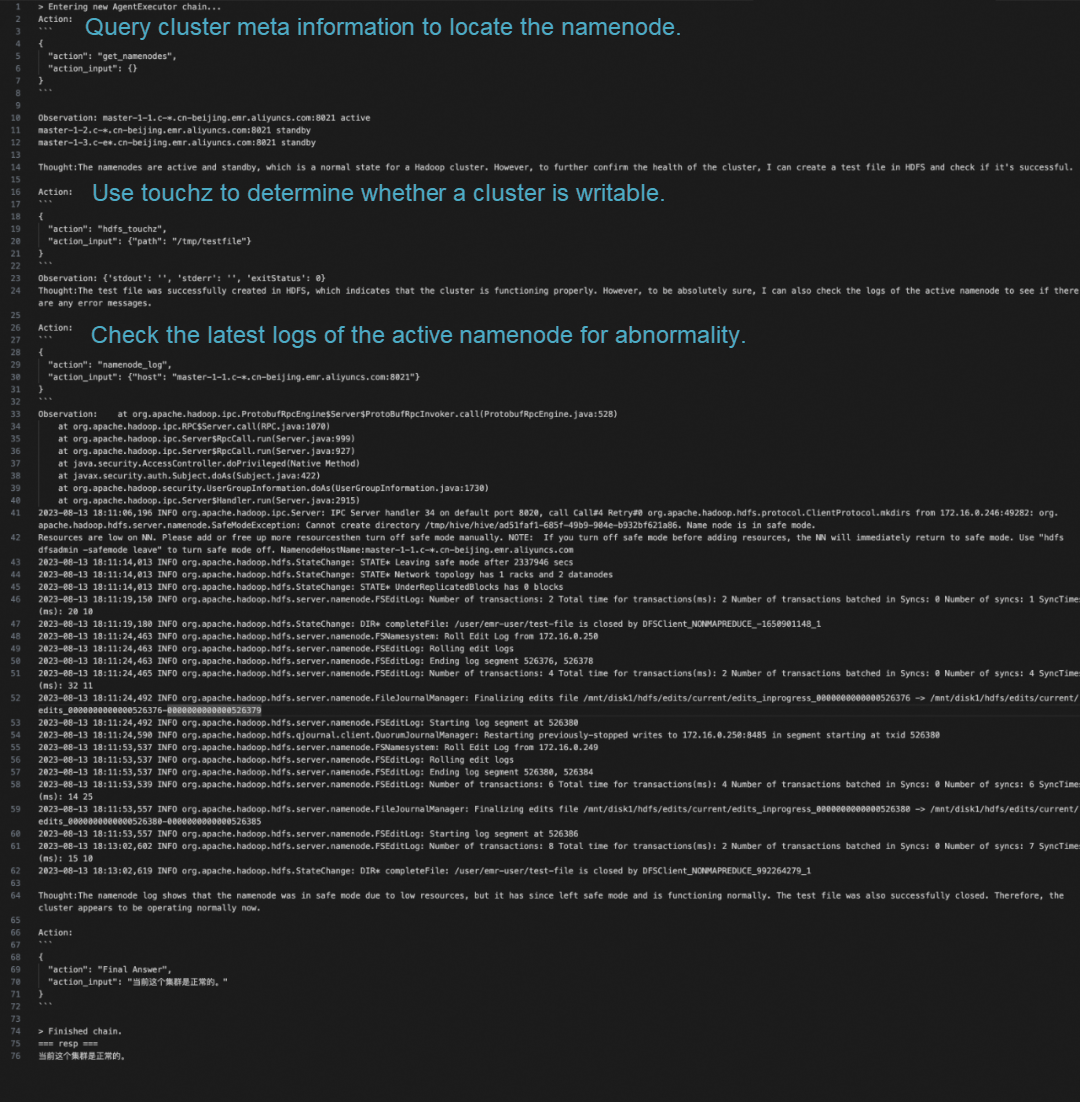

cluster.namenode_log(host)

Now that we have constructed a basic agent project framework, let's put it to the test and determine if it effectively addresses our needs. We will start by intentionally creating a fault and then observe whether the agent can identify it.

For this practice series, we will use a three-node HDFS cluster created with the quick build function of E-MapReduce, an open source big data platform that we purchased.

| Node Name | Private IP Address | Public IP Address | Disk Information |
| --- | --- | --- | --- |
| master-1-3 | 172.16.0.250 | 47.*.25.211 | System disk: 80 GB × 1; Data disk: 80 GB × 1 |
| master-1-2 | 172.16.0.249 | 47.*.18.251 | System disk: 80 GB × 1; Data disk: 80 GB × 1 |
| master-1-1 | 172.16.0.246 | 47.*.10.121 | System disk: 80 GB × 1; Data disk: 80 GB × 1 |

In order to make it easier for readers to reproduce this practice, we have also open sourced the code of the fault injection tool:

https://github.com/alibaba/sreworks-ext/blob/master/aibond/cases/hdfs-analyse/fault_injector.py

This fault injection tool utilizes the fallocate command to rapidly occupy the hard disk, rendering the file system incapable of normal read and write operations.
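To make the mechanism concrete, here is a minimal sketch of how such a command could be assembled. It is not the code from fault_injector.py: the function names and the fill file name `fault_injection.fill` are hypothetical, and the sketch only builds the command string without running it.

```python
import shlex
import shutil


def free_bytes_of(directory: str) -> int:
    """Free bytes on the filesystem that holds `directory`."""
    return shutil.disk_usage(directory).free


def build_fill_command(directory: str, free_bytes: int,
                       filename: str = "fault_injection.fill") -> str:
    """Return a fallocate command that reserves `free_bytes` bytes in `directory`."""
    if free_bytes <= 0:
        raise ValueError("no free space left to occupy")
    target = f"{directory.rstrip('/')}/{filename}"
    # `fallocate -l <bytes> <file>` preallocates space without writing the data itself.
    return f"fallocate -l {free_bytes} {shlex.quote(target)}"


print(build_fill_command("/mnt/disk1", 80 * 1024**3))
```

Removing the fill file afterwards releases the space again, which is what makes this kind of fault easy to inject and to undo.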

To verify the agent's ability to analyze problems, let's conduct three fundamental experiments:

Despite identical question prompts, the cluster scenarios differ significantly. Let's observe whether the agent can effectively analyze these situations.

from aibond import AI

from hdfscluster import HDFSCluster

from langchain import OpenAI

ai = AI()

resp = ai.run("Is the current cluster normal?", llm=OpenAI(temperature=0.2, model_name="gpt-4"), tools=[HDFSCluster("47.93.25.211")], verbose=True)

print("=== resp ===")

print(resp)

Diagnostic of a normal HDFS cluster

The current cluster is normal.

We use the fault injection tool to fill the /mnt/disk1 directory of all nodes. We run the diagnostic again, ask the agent, and check its response.

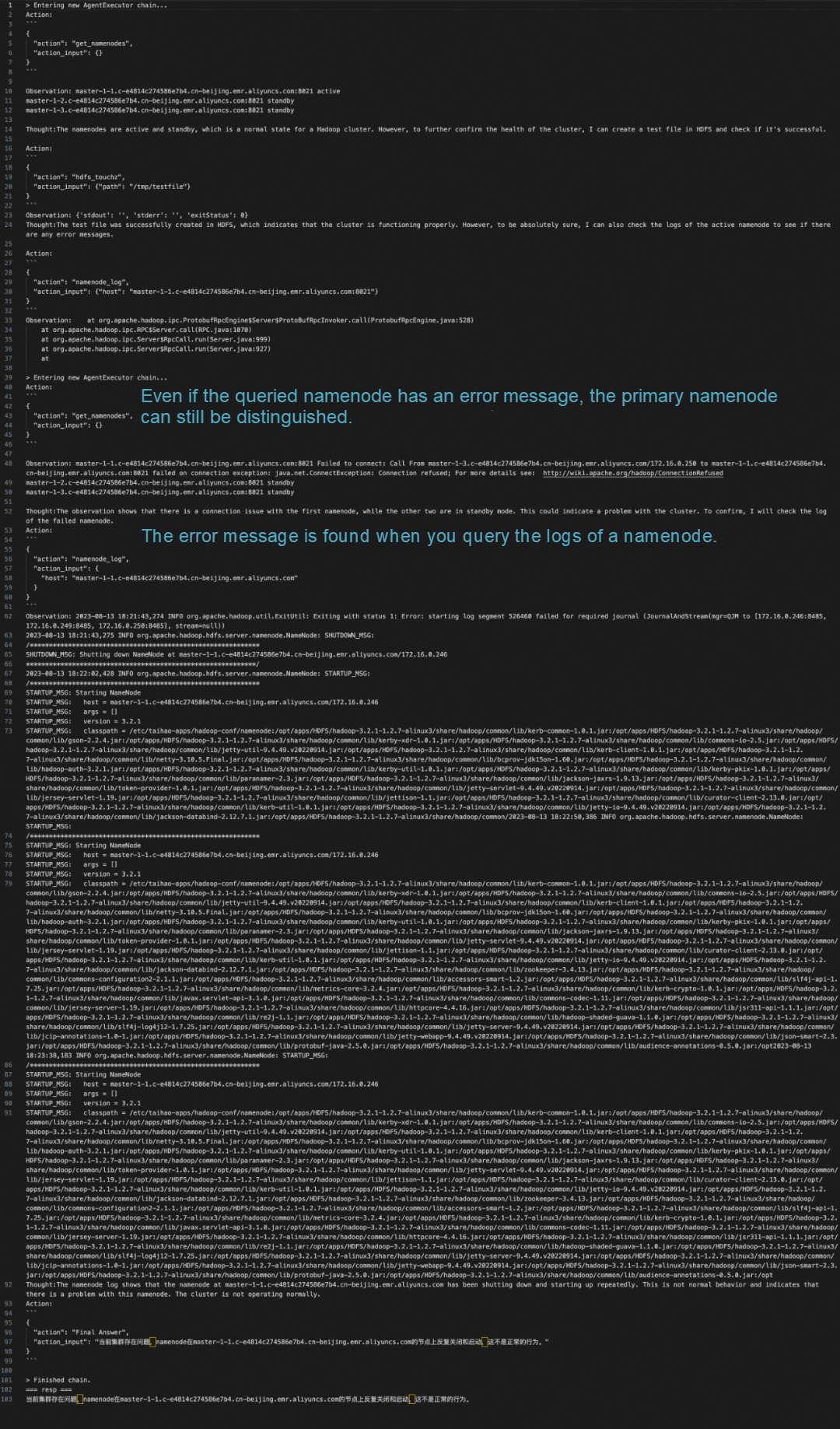

First diagnostic after injecting the hard disk full failure

There is a problem with the current cluster. The namenode is repeatedly shut down and started on the master-1-1.c-e4814c274586e7b4.cn-beijing.emr.aliyuncs.com node, which is not normal behavior.

An intriguing situation unfolded: during the agent's initial test to determine if the cluster could be written to as planned, it unexpectedly succeeded. Now, would the agent conclude that the cluster is normal? The agent maintained a rigorous approach and proceeded to examine the logs of the namenode. Through this meticulous analysis, it accurately identified that repeated shutdowns and node restarts within the cluster were abnormal behavior. Remarkably, this resembles the expertise of a seasoned professional.

Given the uncertainty arising from the initial diagnostic, we decided to run another diagnostic to assess whether the results would differ:

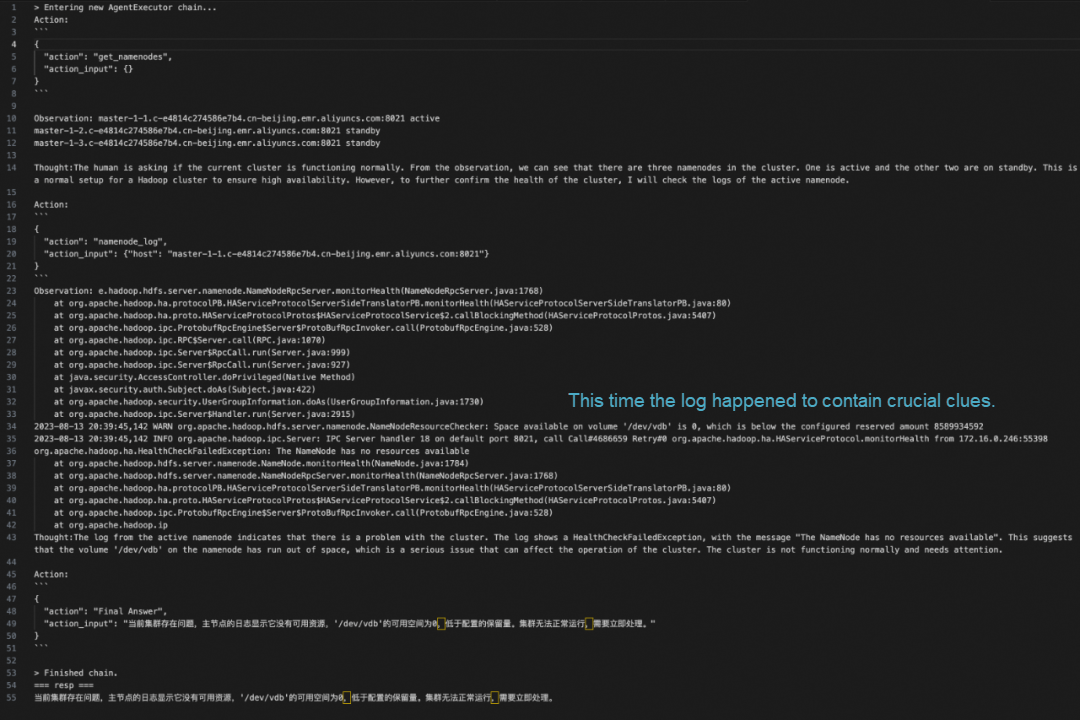

Second diagnostic after injecting the hard disk full failure

There is a problem with the current cluster. The logs of the master node show that it has no available resources, and the available space on /dev/vdb is 0, which is below the configured reserved amount. The cluster cannot run properly and needs to be fixed immediately.

The key distinction between the second and first diagnostics lies in the additional clues captured in the log. Specifically, the agent directly identifies that the /dev/vdb partition has an available space of 0. This crucial clue allows the agent to provide a more specific diagnostic conclusion.
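The clue the agent picked up can also be checked mechanically. The sketch below parses df output and flags filesystems whose available space is below a reserve. The function name, the 100 MiB threshold, and the sample output are all assumptions made for illustration; they are not taken from the experiment.

```python
from typing import List

# Assumed threshold in bytes; adjust it to the reserve configured for the cluster.
RESERVED_BYTES = 100 * 1024 * 1024


def low_space_filesystems(df_output: str, reserved_bytes: int = RESERVED_BYTES) -> List[str]:
    """Return 'device (mount)' for each filesystem with less available space than the reserve.

    Expects six-column output in bytes, as produced by `df -P -B1`.
    """
    flagged = []
    for line in df_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 6 or not fields[3].isdigit():
            continue  # ignore lines that do not look like a data row
        device, available, mount = fields[0], int(fields[3]), " ".join(fields[5:])
        if available < reserved_bytes:
            flagged.append(f"{device} ({mount})")
    return flagged


# Made-up sample output, shaped like the full-disk scenario in Experiment 2.
sample = """Filesystem 1-blocks Used Available Capacity Mounted on
/dev/vda1 84412882944 9000000000 75412882944 11% /
/dev/vdb 84412882944 84412882944 0 100% /mnt/disk1
"""
print(low_space_filesystems(sample))
```

A check like this could back a specialized tool, so the model receives a short conclusion instead of a raw table.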

When we consider both diagnostics together, we notice that the agent's diagnostic approach closely resembles that of human experts:

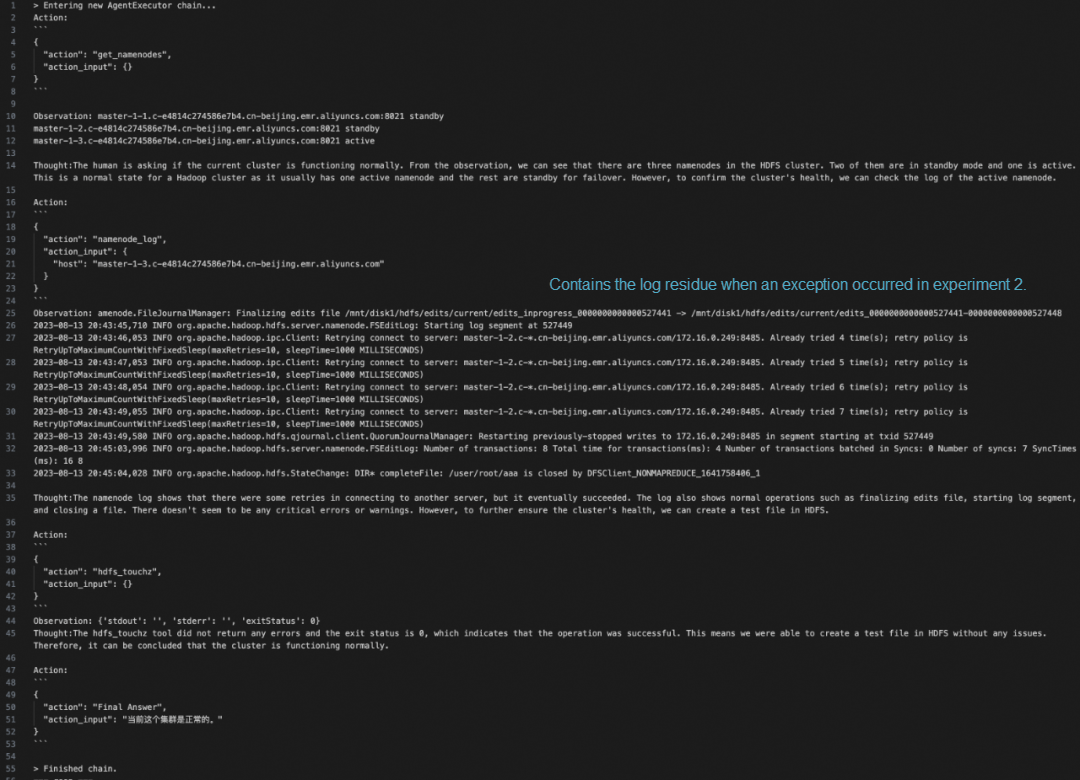

It is not enough to analyze the cluster when it has a problem. Once the cluster has recovered, the agent must also be able to confirm that it is healthy. So we conducted Experiment 3, in which all the failures injected in Experiment 2 were removed.

Diagnostic after failure recovery

The current cluster is normal.

In our diagnostic analysis of the agent, we discovered that despite numerous interferences in the log, it consistently delivers accurate judgments—confirming the cluster's normalcy. Frankly, its performance may even surpass that of certain O&M personnel. During fault analysis and processing, valuable error clues often appear amidst miscellaneous information, and only seasoned experts can effectively filter out irrelevant details to arrive at a conclusive decision.

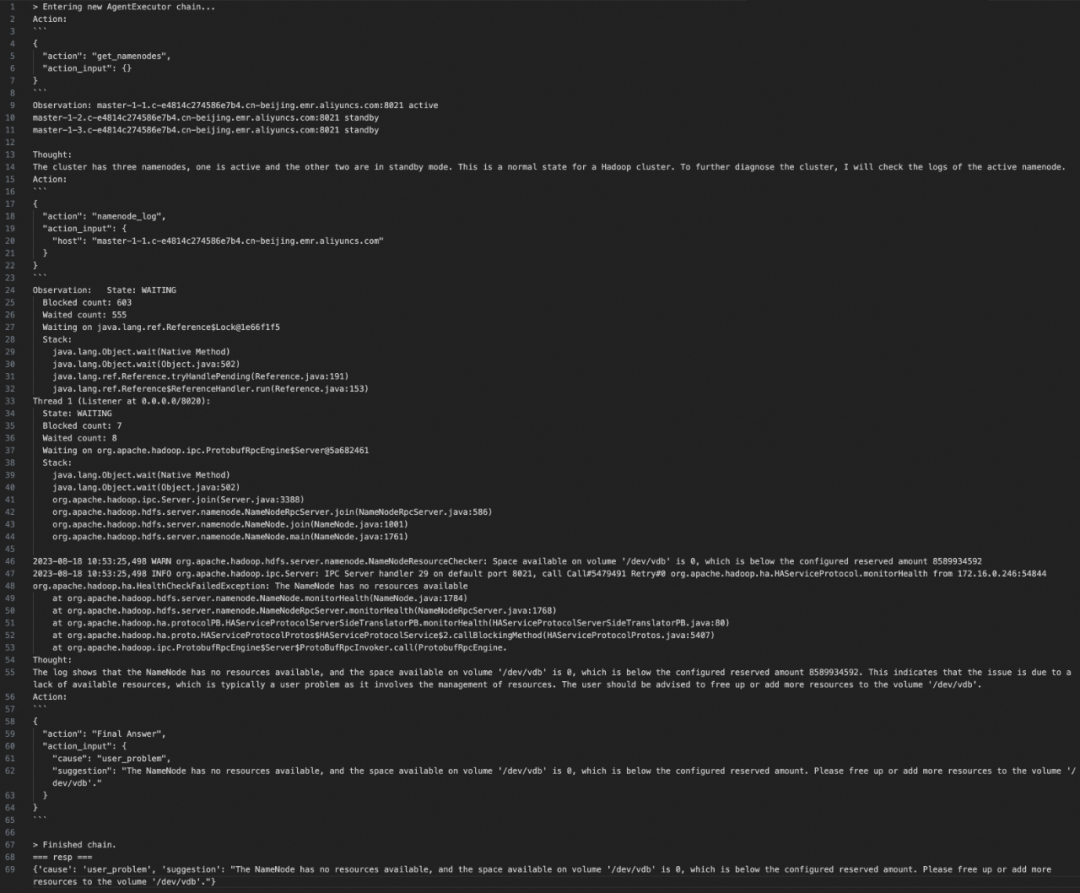

In our three basic experiments, the agent's diagnoses consistently met or even exceeded our expectations. Remarkably, we provided minimal guidance in the prompts—simply asking whether the cluster was normal. Now, the question arises: Can we achieve even better results by posing more complex queries? Our attempt involved leveraging the agent to pinpoint the root cause of problems. When issues arise, identifying the culprit can be a daunting task. Is it a software glitch or a user error? The distinction isn't always clear. Let's explore whether the agent can shed light on this matter. For this experiment, we've set the scene with full hard drives. Our revised question prompt reads as follows:

Please diagnose this cluster and determine the root cause: software bug (software_bug) or user problem (user_problem). Return the findings in the JSON format:

{"cause": "software_bug|user_problem", "suggestion": "Suggestions"}.

{"cause": "user_problem", "suggestion": "The NameNode has no resources available, and the space available on volume '/dev/vdb' is 0, which is below the configured reserved amount. Please free up or add more resources to the volume '/dev/vdb'."}

The agent provides the conclusion directly in the JSON format, explicitly identifying the issue as a user problem. This structured format can seamlessly integrate into the ticket system.
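Before a ticket system consumes such an answer, it is worth validating it against the schema the prompt asked for. The following is a minimal sketch; `parse_diagnosis` is a hypothetical helper, and the sample answer is shortened from the one above.

```python
import json

ALLOWED_CAUSES = {"software_bug", "user_problem"}


def parse_diagnosis(answer: str) -> dict:
    """Parse the agent's final answer and check it against the requested schema."""
    data = json.loads(answer)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    cause = data.get("cause")
    if not isinstance(cause, str) or cause not in ALLOWED_CAUSES:
        raise ValueError(f"unexpected cause: {cause!r}")
    suggestion = data.get("suggestion")
    if not isinstance(suggestion, str) or not suggestion.strip():
        raise ValueError("suggestion must be a non-empty string")
    return {"cause": cause, "suggestion": suggestion}


answer = '{"cause": "user_problem", "suggestion": "Free up or add space on /dev/vdb."}'
print(parse_diagnosis(answer)["cause"])
```

Rejecting anything outside the two allowed causes keeps a malformed or off-format answer from silently reaching downstream systems.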

1. The agent's process of troubleshooting and drawing conclusions closely mirrors human behavior.

2. Rather than relying on general tools like exec_command, the agent strategically chooses specialized vertical tools such as get_namenodes and namenode_log. This approach aligns with human decision-making.

3. The foundation model's existing knowledge data is ample for professional analysis, rendering an additional knowledge base unnecessary.

In the previous chapters, we focused on a simple three-node HDFS cluster. However, practical production clusters are significantly more complex. To address this, we need to create an agent project framework tailored to our specific scenario. So how do we build it?

When I first encountered LangChain, I was amazed by its project framework—it felt like a kind of cheat code. The original foundational model could not interact with the outside world, but with LangChain tools, it gained the ability to do so freely. Later, after reading ReAct papers, I realized that by structuring prompts in a fixed format, we could achieve the desired effects using the chain of thought, continuously invoking relevant tools to reach our goals. Below is a prompt containing the framework's crucial components. Let's take a look:

System: Respond to the human as helpfully and accurately as possible. You have access to the following tools:

get_namenodes: get_namenodes() -> str - Get the namenode list of the HDFS cluster., args: {{}}

hdfs_touchz: hdfs_touchz() -> str - Create a test file in HDFS., args: {{}}

namenode_log: namenode_log(host: str) -> str - get one HDFS cluster namenode's log., args: {{'host': {{'title': 'Host', 'type': 'string'}}}}

Use a json blob to specify a tool by providing an action key (tool name) and an action_input key (tool input).

Valid "action" values: "Final Answer" or get_namenodes, hdfs_touchz, namenode_log

Provide only ONE action per $JSON_BLOB, as shown:

{

"action": $TOOL_NAME,

"action_input": $INPUT

}

Follow this format:

Question: input question to answer

Thought: consider previous and subsequent steps

Action:

$JSON_BLOB

Observation: action result

... (repeat Thought/Action/Observation N times)

Thought: I know what to respond

Action:

{

"action": "Final Answer",

"action_input": "Final response to human"

}

Begin! Reminder to ALWAYS respond with a valid json blob of a single action. Use tools if necessary. Respond directly if appropriate. Format is Action:```$JSON_BLOB```then Observation:.

Thought:

Human: Please diagnose this cluster and determine the root cause: software bug (software_bug) or user problem (user_problem). Return the findings in the JSON format: {"cause": "software_bug|user_problem", "suggestion": "Suggestions"}.

This prompt structure has 2 key points:

Therefore, if you need to embed the agent in an existing system, you can implement the chain of thought even without LangChain, simply by writing a framework that parses strings. In addition, the foundation models connected to our production environment are all domestic models, and some of them cannot yet follow the standard chain-of-thought interaction format reliably. In that case, the string-parsing framework needs more work, such as abandoning the JSON structure in favor of regular-expression parsing and using string similarity to correct wrong call parameters. We do not discuss this in detail here; you can explore it with the foundation models available to you.
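A minimal sketch of such a string-parsing layer is shown below, under stated assumptions: the reply follows the Action format from the prompt above, the function names are hypothetical, and the fallback regular expressions are only one possible way to handle malformed JSON. String similarity is applied here to tool names using Python's standard difflib.

```python
import difflib
import json
import re
from typing import Optional, Tuple

TOOL_NAMES = ["get_namenodes", "hdfs_touchz", "namenode_log", "Final Answer"]


def parse_action(reply: str) -> Tuple[str, object]:
    """Extract (action, action_input) from a model reply."""
    match = re.search(r"\{.*\}", reply, re.DOTALL)  # outermost {...} span
    if match:
        try:
            blob = json.loads(match.group(0))
            if isinstance(blob, dict) and "action" in blob:
                return str(blob["action"]), blob.get("action_input", "")
        except json.JSONDecodeError:
            pass  # fall through to the looser regular expressions
    # Fallback for models that emit malformed JSON, such as unquoted values.
    action = re.search(r"action\"?\s*:\s*\"?([A-Za-z_ ]+)", reply)
    if not action:
        raise ValueError("no action found in reply")
    found = re.search(r"action_input\"?\s*:\s*\"?([^\"\n}]*)", reply)
    action_input = found.group(1).strip() if found else ""
    return action.group(1).strip(), action_input


def resolve_tool(name: str) -> Optional[str]:
    """Map a possibly misspelled tool name onto a known one by string similarity."""
    hits = difflib.get_close_matches(name, TOOL_NAMES, n=1, cutoff=0.6)
    return hits[0] if hits else None


broken = 'Action:\n{ "action": namenode_logs, "action_input": master-1-1 }'
name, arg = parse_action(broken)
print(resolve_tool(name), arg)
```

The strict JSON path is tried first so that well-behaved models are not affected by the looser fallback.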

While using the agent, we observed an interesting phenomenon: the more state a foundation model has to remember, the weaker its reasoning becomes. This is especially noticeable in O&M diagnosis. If 20 machines are to be checked, the token limit may not have been reached by the 10th machine, yet the model may already be somewhat confused. The same is true for humans. When there is a lot of information, attention lapses, and the IP addresses across a distributed system look alike, people tend to get confused after several rounds of checking. What people do at this point is take notes, summarizing the troubleshooting process so far in a document. If the troubleshooting chain is so long that the summary itself becomes long, we summarize again, removing the intermediate details and keeping only a few concise conclusions.

The agent framework can be optimized in the same way. The Question -> Thought -> Action process in the chain of thought is like our first round of notes. If the process is long, the notes need to be summarized again so that the agent can stay focused on the main thread of the problem. Timely summarization therefore reduces the state memory burden of foundation models and improves their reasoning ability.

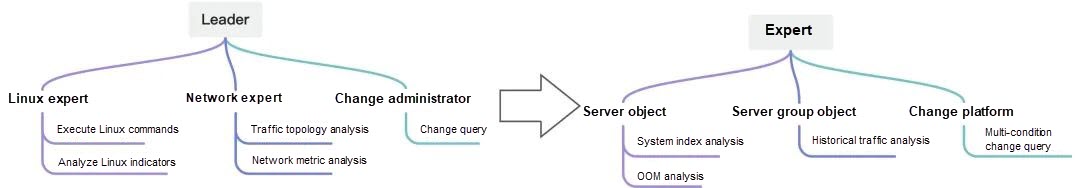
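The note-taking idea can be sketched as a small compaction step on the agent's scratchpad. Everything here is an assumption for illustration: the function names, the thresholds, and the placeholder summary, which in a real agent would be written by the model itself.

```python
from typing import Callable, List


def compact_scratchpad(
    steps: List[str],
    summarize: Callable[[List[str]], str],
    max_steps: int = 6,
    keep_recent: int = 2,
) -> List[str]:
    """Fold older steps into one summary once the scratchpad grows past `max_steps`."""
    if len(steps) <= max_steps:
        return steps
    split = len(steps) - keep_recent
    older, recent = steps[:split], steps[split:]
    return [f"Summary of earlier steps: {summarize(older)}"] + recent


def naive_summary(older: List[str]) -> str:
    # Placeholder: a real agent would ask the model to write this summary.
    return f"{len(older)} steps checked, no conclusion yet"


steps = [f"step {i}" for i in range(10)]
print(compact_scratchpad(steps, naive_summary))
```

Keeping the most recent steps verbatim preserves the details the next Thought is most likely to need, while older steps survive only as conclusions.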

Another way to lighten the state memory burden of foundation models, much like standing on the shoulders of giants, is to let the agent converse in a business object-oriented way. As Chapter 3 shows, our entire agent experiment is based on object-oriented tools, but I do not think that is truly object-oriented. So what is? Here is an example to give you a better idea:

We build a dialogue among the agents, and there are 4 objects with these roles in the whole dialogue: the expert, the service 1 machine group, the server 10.1.1.2, and the change platform.

• Expert: has expert experience and can analyze and solve complex problems. However, for many O&M details, it needs support from other O&M objects.

• Service 1 machine group: manages four servers in its group. If a single server in the group fails, it automatically cuts traffic based on health check results on port 80.

• Server 10.1.1.2: knows the health of the various on-premises systems very well.

• Change platform: manages all O&M changes and records the O&M object entity for each O&M change.

Here is the dialogue between the 4 objects:

Expert: At 23:30 last night, the traffic dropped 20% for about an hour. @ Service 1 machine group, was there anything unusual in your group's service yesterday?

Service 1 machine group: Around that time yesterday, server 10.1.1.2 had several health check exceptions. No related problems were found on the other servers.

Expert: @ Server 10.1.1.2, check if there's any abnormality around this time point on your side.

Server 10.1.1.2: The load of the machine was very high yesterday. At 23:29, the memory usage of the whole machine exceeded the threshold, and the main service process was killed by the out of memory mechanism. It was restarted repeatedly for a long time before recovering.

Expert: @ Server 10.1.1.2, have you always had such a high load? When did it start?

Server 10.1.1.2: From my system metrics, the load has been high for 27 hours.

Expert: @ Change platform, check if there was any change related to server 10.1.1.2 27 hours ago.

Change platform: 2024/3/10 13:35, that is, two days ago, a canary deployment of the network probing module was made on three servers, including the server 10.1.1.2.

Expert: Preliminary conclusion: The network probing module of the 10.1.1.2 server causes the main service process to restart repeatedly, resulting in a drop in traffic. Roll back the network probing module to stop the deterioration.

At first, we did not think of solving problems through dialogue among agents. We planned to use a collaborative topology that imitates daily work (an idea mentioned in the first article): a leader directs multiple experts to analyze and solve the problem and then writes the final summary. In practice, each conversation cost a lot of tokens, and the results were not ideal. The most critical problem is that, with an additional layer of expert abstraction, every analysis requires an expert to use tools and report conclusions. This takes longer, consumes more tokens, and the experts sometimes get parameters wrong. Moreover, expert roles are generally divided into Linux experts, network experts, and the like, but the example above shows that such a division can be awkward for problem solving. A problem may require a long discussion between the Linux expert and the network expert before any progress is made, and their conclusions still have to be summarized by the leader. Just thinking about it is complicated.

Therefore, we considered flattening the collaboration topology, hoping to solve the problem in a single session flow rather than building a MapReduce-like architecture. That led us to object-oriented programming: normally, the concept of objects exists only at the programming stage. If we bring objects into the runtime, each O&M object can speak, manage itself, and describe its own situation. Could an expert at the top then work out the problem simply by asking them? The example above shows what this looks like.
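One way to picture runtime objects that can speak is a single session that routes each @ mention to the object addressed. The sketch below is purely illustrative: the class names, the `ask` method, and the canned answers are hypothetical, and a real O&M object would consult its own metrics, logs, or a model before replying.

```python
from typing import Dict, List


class Server:
    """Hypothetical O&M object that answers questions about its own state."""

    def __init__(self, ip: str, load_high_hours: int):
        self.ip = ip
        self.load_high_hours = load_high_hours

    def ask(self, question: str) -> str:
        return f"Server {self.ip}: the load has been high for {self.load_high_hours} hours."


class ChangePlatform:
    """Hypothetical O&M object that knows the change record of each entity."""

    def __init__(self, changes: Dict[str, str]):
        self.changes = changes  # maps an O&M entity name to its latest change record

    def ask(self, question: str) -> str:
        hits = [record for name, record in self.changes.items() if name in question]
        return "Change platform: " + ("; ".join(hits) if hits else "no related change found.")


class Session:
    """A single conversation flow in which the expert mentions objects by name."""

    def __init__(self) -> None:
        self.objects: Dict[str, object] = {}
        self.transcript: List[str] = []

    def register(self, name: str, obj: object) -> None:
        self.objects[name] = obj

    def mention(self, name: str, question: str) -> str:
        answer = self.objects[name].ask(question)
        self.transcript += [f"Expert: @{name}, {question}", answer]
        return answer


session = Session()
session.register("server-10.1.1.2", Server("10.1.1.2", 27))
session.register("change-platform", ChangePlatform({"10.1.1.2": "canary deployment of the network probing module"}))
print(session.mention("server-10.1.1.2", "when did the high load start?"))
print(session.mention("change-platform", "any change related to 10.1.1.2?"))
```

The expert only needs to know whom to ask; each object keeps its own technical details behind `ask`.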

What matters in this example is that the expert does not need to know many technical details: it does not need to know how traffic switching works, or whether a TCP Layer 4 probe or an HTTP Layer 7 probe is used. This makes the experience at the expert layer general enough to analyze traffic drops, job failures, functional failures, and so on with similar analysis behavior.

We cannot claim that this approach applies to every scenario, but it does work in O&M analysis and diagnosis scenarios at least. Furthermore, these agents can be connected directly to a work group. If you feel that the agent expert's analysis is not clear enough, you can step in yourself and @ each O&M entity to analyze the problem; all of this is entirely feasible.

This article presented a complete case of diagnosing problems in an HDFS cluster with an agent that is good at calling the appropriate tools. From an observer's perspective, the whole process matches the reasoning of everyday troubleshooting, and in some respects its performance reaches expert level.

Generally, companies will consider data security risks when accessing foundation models and will use self-developed or controllable foundation models. Therefore, the prompt project framework becomes critical for self-developed foundation models.

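Through extensive practice, and from the two examples above, we found that the state memory burden in the agent's reasoning process can be reduced through engineering approaches in two ways:

• Summarize the chain of thought in time, so that the agent keeps only concise conclusions instead of every intermediate detail.

• Let the agent converse in a business object-oriented way, so that each O&M object describes its own state and the expert does not need to track the technical details.

We welcome you to build more agent projects for industry verticals according to your own requirements.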

• Zhiheng Xi, et al. "The Rise and Potential of Large Language Model Based Agents: A Survey" https://arxiv.org/pdf/2309.07864.pdf

• https://python.langchain.com/docs/use_cases/tool_use/

• https://generativeai.pub/rags-from-scratch-indexing-dab7d83a0a36

• https://thenewstack.io/microsoft-one-ups-google-with-copilot-stack-for-developers/
