By Wang Siyu (Jiuzhu), Alibaba Cloud technical expert

When discussing in-place updates, the term "update" means changing an application instance from an earlier version to a later one. This is easy enough to understand. What "in-place" means in a Kubernetes environment is less obvious. In this blog, we'll explore why we need in-place updates in a Kubernetes cluster and discuss how OpenKruise can help you implement them.

First, let's see how Kubernetes native workloads deploy applications. For example, assume we need to deploy an application to a pod that has the foo and bar containers. The foo container used image version v1 when it was first deployed, and now we want to update the image version to v2.

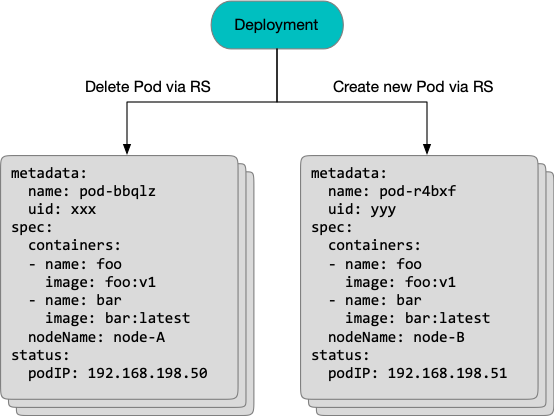

If we use a Deployment workload to deploy the application, the Deployment scales up the ReplicaSet of the new version, which creates a new pod, and scales down the ReplicaSet of the old version, which deletes the original pod, as shown in the following figure.

During this update, the original pod is deleted and a new pod is created. The new pod is rescheduled, possibly to another node, and is assigned a new IP address. It then pulls the images and starts the foo and bar containers on that node.

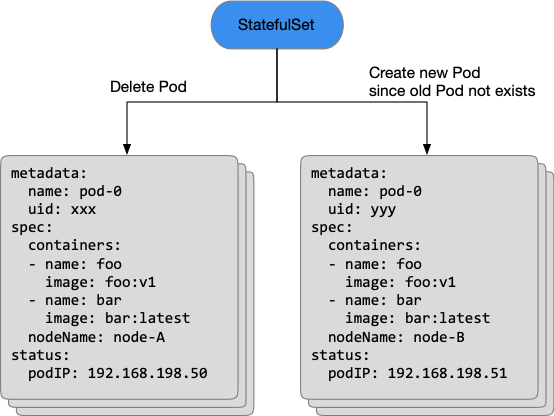

If we use a StatefulSet workload to deploy the application, the StatefulSet workload will delete the original pod and create a pod with the same name as the deleted pod, as shown in the following figure.

Even though the old and new pods are both called pod-0, they are two different pods with different UIDs. The StatefulSet workload creates the new pod-0 object only after the original pod-0 object is deleted from the Kubernetes cluster. The new pod is rescheduled, assigned a new IP address, and pulls the images and starts the containers.

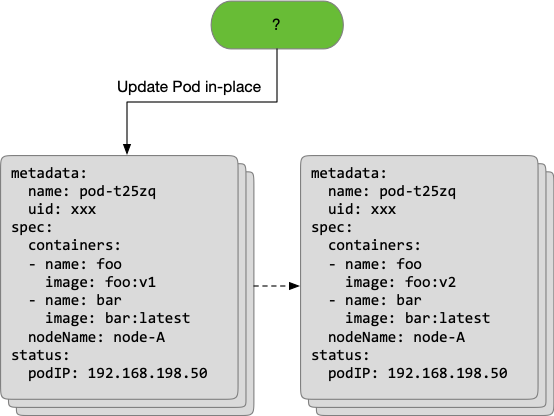

The in-place update mode avoids pod deletion and recreation during an application upgrade. It only updates the image version of one or more containers in the pod.

During an in-place update, we only update the image field of the foo container in the pod to upgrade the foo container to a new version. The pod, node, and pod IP address remain unchanged, and the bar container continues to run during the foo container upgrade.

Therefore, updating the version of one or more containers in a pod without affecting the whole pod or other containers in the pod is called an in-place update in a Kubernetes cluster.

Why do we need to use in-place updates in a Kubernetes cluster?

First, the in-place update mode significantly improves application deployment efficiency. Statistical data shows that in-place pod updates improve deployment speed by more than 80% in Alibaba environments when compared with an update method that rebuilds the whole pod. The gain comes from the work that an in-place update skips: the pod is not rescheduled, its network and disks are not reallocated, and the node can often reuse image data that it already holds.

Second, when we update sidecar containers, such as log collection and monitoring agents, we do not want to affect service containers in the same pod. If a Deployment or StatefulSet workload is used in this scenario, the whole pod will be rebuilt and services will be affected. However, if the in-place update mode is used, only the containers that need to be updated are rebuilt. Other containers and their networks and mounted disks are not affected.

Finally, the in-place update mode also makes the cluster more stable and predictable. When a large number of applications in a Kubernetes cluster trigger pod recreation, large-scale pod migration may occur and some low-priority task pods on nodes may be repeatedly preempted and migrated. Large-scale pod recreation puts heavy pressure on central components, such as the API server, the scheduler, and network or disk allocation, and the latency of these components prolongs the pod recreation time. If the in-place update mode is used, the process only involves the controller, which updates the pod, and the kubelet, which recreates the corresponding containers.

In Alibaba, most e-commerce applications are deployed in cloud-native environments using the in-place update mode. The OpenKruise open-source project provides a controller that supports in-place updates.

This means that extended workloads in OpenKruise, instead of native Deployment or StatefulSet workloads, centrally manage the deployment of cloud-native applications in Alibaba.

How does OpenKruise implement in-place updates? Before introducing the implementation principles of in-place updates, let's take a look at the features of native Kubernetes that in-place updates depend on.

The kubelet on each node calculates a hash value for each container in pod.spec.containers and records the hash value in the created container.

If we modify the image field of a container in a pod, the kubelet will detect the hash value change of the container. Then, the kubelet will kill the container and create a new container based on the container in the latest pod.spec.

This feature is the basis that allows us to update a single pod in place.
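The mechanism can be illustrated with a minimal sketch. It is not the kubelet's actual code: the kubelet hashes its own internal container structure, whereas this example assumes a SHA-256 digest over the JSON form of the container spec, and the function names are hypothetical.

```python
import hashlib
import json


def container_hash(container: dict) -> str:
    """Hash a container spec; changing any field yields a different hash."""
    canonical = json.dumps(container, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def containers_to_restart(recorded: dict, pod_spec: dict) -> list:
    """Return the names of containers whose spec hash differs from the recorded one."""
    return [
        c["name"]
        for c in pod_spec["containers"]
        if recorded.get(c["name"]) != container_hash(c)
    ]


old_spec = {"containers": [{"name": "foo", "image": "foo:v1"},
                           {"name": "bar", "image": "bar:v1"}]}
# Hashes as they would be recorded when the containers were created.
recorded = {c["name"]: container_hash(c) for c in old_spec["containers"]}

# Only the image of the foo container changes.
new_spec = {"containers": [{"name": "foo", "image": "foo:v2"},
                           {"name": "bar", "image": "bar:v1"}]}
print(containers_to_restart(recorded, new_spec))  # ['foo']
```

Only foo is selected for a restart, and bar keeps running, which matches the behavior described above.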

The native kube-apiserver has a strict validation logic for requests to update a pod.

```go
// validate updateable fields:
// 1. spec.containers[*].image
// 2. spec.initContainers[*].image
// 3. spec.activeDeadlineSeconds
```

This means that, in pod.spec, you can only modify the image field of containers and initContainers, and the activeDeadlineSeconds field. The kube-apiserver will reject updates to other fields in the pod.spec.
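As a rough illustration of this rule, the following sketch compares an old and a new pod spec after masking the fields listed above. It is a simplification under stated assumptions: the real validation in kube-apiserver is more detailed, and the function name validate_pod_spec_update is hypothetical.

```python
import copy


def validate_pod_spec_update(old_spec: dict, new_spec: dict) -> list:
    """Return a list of error strings; an empty list means the update is allowed."""
    old = copy.deepcopy(old_spec)
    new = copy.deepcopy(new_spec)
    errors = []
    for key in ("containers", "initContainers"):
        old_list = old.get(key, [])
        new_list = new.get(key, [])
        if len(old_list) != len(new_list):
            errors.append(f"spec.{key}: containers may not be added or removed")
            continue
        # Mask the image field so that it is ignored by the comparison below.
        for container in old_list + new_list:
            container.pop("image", None)
    # activeDeadlineSeconds is the other field named in the comment above.
    old.pop("activeDeadlineSeconds", None)
    new.pop("activeDeadlineSeconds", None)
    if not errors and old != new:
        errors.append("spec: only image and activeDeadlineSeconds may be changed")
    return errors


base = {"containers": [{"name": "foo", "image": "foo:v1", "env": []}]}
image_change = {"containers": [{"name": "foo", "image": "foo:v2", "env": []}]}
env_change = {"containers": [{"name": "foo", "image": "foo:v1",
                              "env": [{"name": "A", "value": "1"}]}]}

print(validate_pod_spec_update(base, image_change))  # []
print(validate_pod_spec_update(base, env_change))
```

The first call returns no errors because only the image differs. The second returns an error because an environment variable was changed.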

The kubelet will report containerStatuses in pod.status, which indicates the running statuses of all containers in a pod.

```yaml
apiVersion: v1
kind: Pod
spec:
  containers:
  - name: nginx
    image: nginx:latest
status:
  containerStatuses:
  - name: nginx
    image: nginx:mainline
    imageID: docker-pullable://nginx@sha256:2f68b99bc0d6d25d0c56876b924ec20418544ff28e1fb89a4c27679a40da811b
```

In most cases, spec.containers[x].image and status.containerStatuses[x].image are the same.

However, in some cases, the image reported by the kubelet may be different from the image in the pod.spec. For example, the image in pod.spec may be nginx:latest, but the image reported by the kubelet in the pod.status is nginx:mainline.

This is because the image reported by the kubelet is that of the container obtained over the CRI. If multiple images on a node have the same imageID, the kubelet may report any of the images.

```shell
$ docker images | grep nginx
nginx    latest      2622e6cca7eb    2 days ago    132MB
nginx    mainline    2622e6cca7eb    2 days ago    132MB
```

Therefore, a difference between the image fields in pod.spec and pod.status does not mean that the container on the host is running an unexpected image version.

In versions before Kubernetes 1.12, the kubelet determined whether a pod was ready based on the container status. If all containers in the pod were ready, the pod was ready.

However, in most cases, the upper-layer operator or user needed to control pod readiness. Kubernetes 1.12 and later versions provide the readinessGates feature to address this situation.

```yaml
apiVersion: v1
kind: Pod
spec:
  readinessGates:
  - conditionType: MyDemo
status:
  conditions:
  - type: MyDemo
    status: "True"
  - type: ContainersReady
    status: "True"
  - type: Ready
    status: "True"
```

Currently, the kubelet reports the Ready condition as True only if all containers in the pod are ready (the ContainersReady condition is True) and every condition type listed in pod.spec.readinessGates is True in pod.status.conditions.
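The readiness rule can be sketched as follows: a pod counts as ready only when ContainersReady is True and every condition named in readinessGates is True. This is an illustration over plain dictionaries, not kubelet code, and the function names are hypothetical.

```python
def condition_is_true(pod: dict, cond_type: str) -> bool:
    """Look up a condition in pod.status.conditions; a missing condition counts as not True."""
    for cond in pod.get("status", {}).get("conditions", []):
        if cond["type"] == cond_type:
            return cond["status"] == "True"
    return False


def pod_is_ready(pod: dict) -> bool:
    """Ready requires ContainersReady plus every readiness gate to be True."""
    gates = pod.get("spec", {}).get("readinessGates", [])
    return condition_is_true(pod, "ContainersReady") and all(
        condition_is_true(pod, gate["conditionType"]) for gate in gates
    )


pod = {
    "spec": {"readinessGates": [{"conditionType": "MyDemo"}]},
    "status": {"conditions": [
        {"type": "MyDemo", "status": "True"},
        {"type": "ContainersReady", "status": "True"},
    ]},
}
print(pod_is_ready(pod))  # True

# Flipping the custom condition makes the pod not ready, even though
# all of its containers are still ready.
pod["status"]["conditions"][0]["status"] = "False"
print(pod_is_ready(pod))  # False
```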

Now that we understand the background conditions, let's see how OpenKruise implements in-place pod updates in a Kubernetes cluster.

According to "Background 1" (the kubelet's per-container hash), when we modify fields in spec.containers[x] of an existing pod, the kubelet will detect the hash value change of the container. Then, it will kill the container, pull the image based on the new container, and create and start a new container.

According to "Background 2" (the kube-apiserver's validation of pod updates), we can only modify the image field in spec.containers[x] of an existing pod.

Therefore, we can only modify the spec.containers[x].image field to trigger image updates of a container in an existing pod.
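A minimal sketch of such an image-only change, assuming it is sent as a strategic merge patch, in which entries of spec.containers are matched by name. The helper name build_image_patch is hypothetical and is not part of OpenKruise.

```python
import json


def build_image_patch(new_images: dict) -> dict:
    """Build a patch body that changes only the image of the named containers."""
    return {
        "spec": {
            "containers": [
                {"name": name, "image": image}
                for name, image in sorted(new_images.items())
            ]
        }
    }


patch = build_image_patch({"foo": "foo:v2"})
print(json.dumps(patch))
# {"spec": {"containers": [{"name": "foo", "image": "foo:v2"}]}}
```

Because the patch names only the foo container and only its image field, the bar container and all other fields of the pod are left untouched.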

After we modify the spec.containers[x].image field of a pod, we need to determine whether the kubelet has successfully rebuilt the corresponding container.

According to "Background 3" (the containerStatuses reported by the kubelet), comparing the image fields in the pod.spec and pod.status is not always effective because another image with the same imageID as the target image on the node may be reported in the pod.status.

Therefore, to determine whether a pod was successfully updated in place, we must record status.containerStatuses[x].imageID before the in-place update. If the status.containerStatuses[x].imageID of the pod is changed after we update the image in pod.spec, the corresponding container has been rebuilt.

However, we cannot update images that have different names but the same imageID in place. Otherwise, the in-place update may be regarded as unsuccessful because the image ID in the pod.status will not change.
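The imageID comparison can be sketched as follows. This is an illustration, not OpenKruise code: the function names are hypothetical and the digests are shortened, made-up placeholders.

```python
def record_image_ids(pod: dict) -> dict:
    """Map each container name to the imageID currently reported in pod.status."""
    statuses = pod.get("status", {}).get("containerStatuses", [])
    return {s["name"]: s["imageID"] for s in statuses}


def in_place_update_done(pod: dict, old_image_ids: dict, updated: list) -> bool:
    """True once every updated container reports an imageID different from the recorded one."""
    current = record_image_ids(pod)
    return all(
        name in current and current[name] != old_image_ids.get(name)
        for name in updated
    )


before = {"status": {"containerStatuses": [
    {"name": "foo", "imageID": "sha256:aaa"},
    {"name": "bar", "imageID": "sha256:bbb"},
]}}
# Record the imageIDs before the image in pod.spec is changed.
old_ids = record_image_ids(before)

after = {"status": {"containerStatuses": [
    {"name": "foo", "imageID": "sha256:ccc"},  # foo was rebuilt from the new image
    {"name": "bar", "imageID": "sha256:bbb"},
]}}
print(in_place_update_done(before, old_ids, ["foo"]))  # False
print(in_place_update_done(after, old_ids, ["foo"]))   # True
```

The sketch also shows the limitation described above: if the new image had the same imageID as the old one, the check would never return True.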

In the future, OpenKruise will provide an image preload feature. A NodeImage pod will be deployed on each node using a DaemonSet workload. Through the NodeImage pod, we can learn the imageID of an image in pod.spec and compare it with the imageID in pod.status to accurately determine whether an in-place update was successful.

In a Kubernetes cluster, a pod's readiness indicates whether it can provide services. Therefore, traffic entry points, such as Services, determine whether to add a pod to their serving endpoints based on the pod's Ready status.

According to "Background 4" (readinessGates), in Kubernetes 1.12 and later versions, operators and controllers can set readinessGates and update custom type statuses in pod.status.conditions to control whether a pod is available.

Therefore, we can define a conditionType of InPlaceUpdateReady in pod.spec.readinessGates.

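Then, during an in-place update, the controller first sets the InPlaceUpdateReady condition to False. This causes the kubelet to report the pod as NotReady, so traffic components stop routing requests to it. The controller then updates the image in pod.spec to trigger the container rebuild.

After the in-place update is completed, the controller sets the InPlaceUpdateReady condition to True to allow the pod to return to the Ready state.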

When the pod status is changed to NotReady, it takes time for the traffic components to detect the change and remove the pod from serving endpoints. Therefore, we also need to configure gracePeriodSeconds to set a silent time between the change of the pod status to NotReady and the image update, which triggers an in-place update.
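The ordering of these steps can be sketched as follows. This is a simulation on a plain dictionary under stated assumptions: a real controller would issue API requests and requeue instead of sleeping, and the function names are hypothetical. Only the InPlaceUpdateReady condition and the grace period come from the text above.

```python
import time


def set_condition(pod: dict, cond_type: str, status: str) -> None:
    """Create or update a condition in pod.status.conditions."""
    conditions = pod.setdefault("status", {}).setdefault("conditions", [])
    for cond in conditions:
        if cond["type"] == cond_type:
            cond["status"] = status
            return
    conditions.append({"type": cond_type, "status": status})


def start_in_place_update(pod, new_images, grace_period_seconds, sleep=time.sleep):
    # 1. Take the pod out of service by failing its readiness gate.
    set_condition(pod, "InPlaceUpdateReady", "False")
    # 2. Give traffic components time to remove the pod from their endpoints.
    sleep(grace_period_seconds)
    # 3. Only then change the image, which makes the kubelet rebuild the container.
    for container in pod["spec"]["containers"]:
        if container["name"] in new_images:
            container["image"] = new_images[container["name"]]


def finish_in_place_update(pod):
    # 4. Once the container has been rebuilt, let the pod become Ready again.
    set_condition(pod, "InPlaceUpdateReady", "True")


pod = {"spec": {"containers": [{"name": "foo", "image": "foo:v1"},
                               {"name": "bar", "image": "bar:v1"}]}}
start_in_place_update(pod, {"foo": "foo:v2"}, grace_period_seconds=0)
finish_in_place_update(pod)
print(pod["spec"]["containers"][0]["image"])  # foo:v2
```

The sleep function is passed in as a parameter so that the ordering can be checked in a test without waiting for the real grace period.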

Just like a pod rebuilding update, an in-place pod update can be combined with deployment policies such as partition, for phased releases, and maxUnavailable.

OpenKruise uses kubelet container version management, readinessGates, and other features of native Kubernetes to update pods in place.

In-place updates also significantly improve the efficiency and stability of application deployment. With the growing scale of clusters and applications, the benefits of this feature are becoming more and more obvious. Over the past two years, in-place updating has helped Alibaba smoothly migrate hyperscale application containers to Kubernetes-based cloud-native environments. This would have been impossible with native Deployment or StatefulSet workloads.
