By Jiuzhu, Alibaba Cloud Technical Expert, and Mofeng, Alibaba Cloud Development Engineer

On May 28, we livestreamed the third SIG Cloud-Provider-Alibaba webinar. This livestream introduced the core deployment problems encountered during the large-scale cloud migration of the Alibaba economy and their corresponding solutions. It also explained how these solutions, once turned into general-purpose capabilities and open-sourced, helped improve the efficiency and stability of application deployment and release.

This article summarizes the livestream video and materials and lists the questions and answers collected during the livestream. We hope you find it helpful.

In recent years, Kubernetes has become the de facto standard and a large number of applications have become cloud-native. Along the way, we have found that Kubernetes' native workloads are not very "friendly" to large-scale applications. We are now exploring ways to use Kubernetes to provide better, more efficient, and more flexible application deployment and release capabilities.

This article describes the application deployment optimizations we made while moving the Alibaba economy fully to cloud-native. We implemented enhanced workloads with more complete functions and open-sourced them to the community. This makes the deployment and release capabilities used by Alibaba's internal cloud-native applications available to every Kubernetes developer and Alibaba Cloud user.

Alibaba's containerization has advanced rapidly in recent years. Although the concept of containers emerged early, it was not widely known until Docker appeared in 2013. Alibaba began developing LXC-based container technology as early as 2011. After several generations of system evolution, Alibaba now runs more than one million containers, a scale that leads the world.

With the development of cloud technologies and the rise of cloud-native applications, we have gradually migrated Alibaba's containers to a Kubernetes-based cloud-native environment over the past two years. Throughout the migration, we have encountered many problems in application deployment. First, application developers have the following expectations for migrating their applications to the cloud-native environment:

Alibaba has complex application scenarios. Many different Platform as a Service (PaaS) layers are built on Kubernetes, for example, the operation and maintenance (O&M) mid-end, large-scale O&M, middleware, serverless, and function computing for the e-commerce business. Each platform has different requirements for deployment and release.

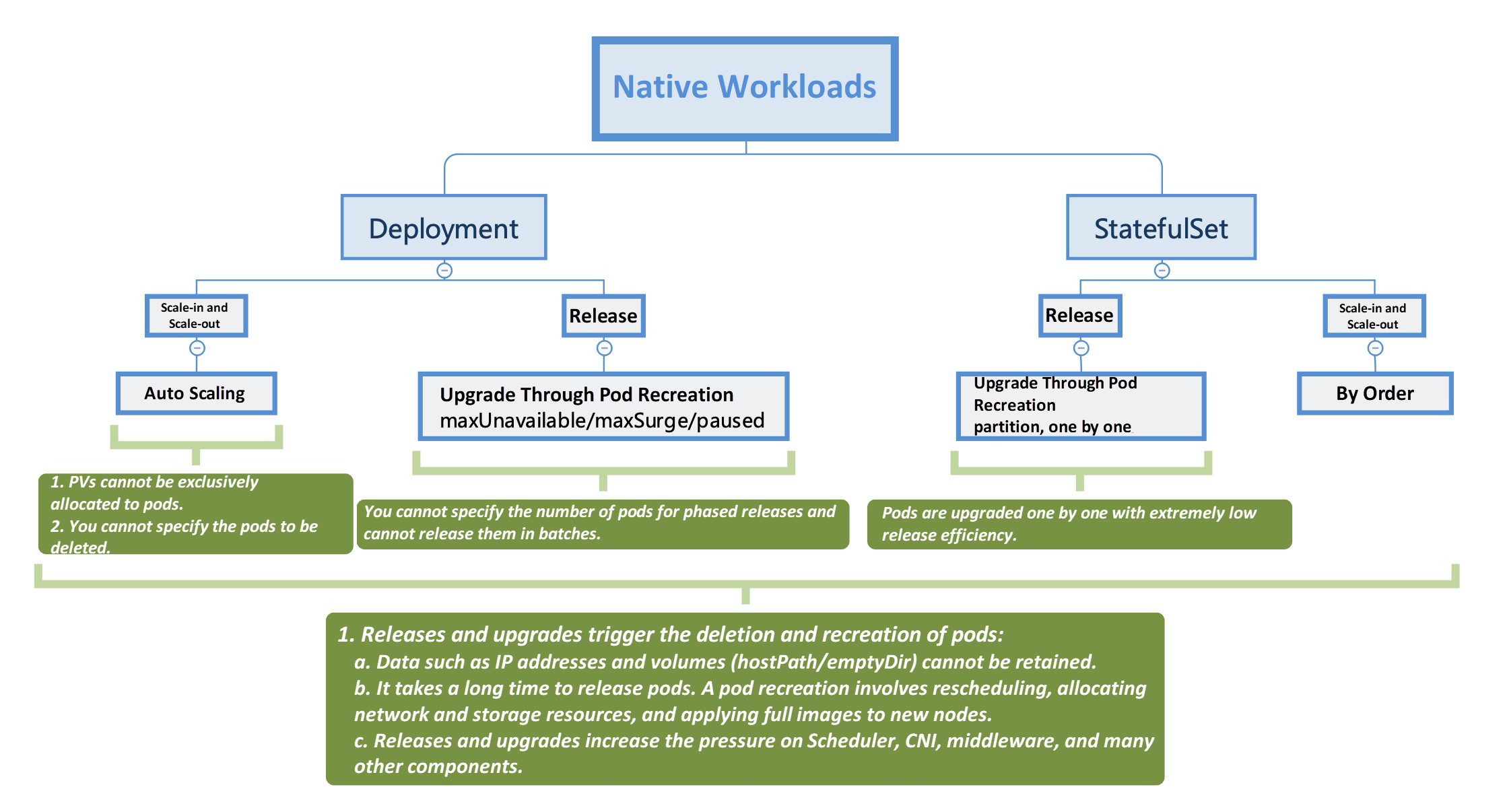

Let's take a look at the capabilities of the two most commonly used native Kubernetes workloads, Deployment and StatefulSet:

To put it simply, Deployment and StatefulSet work well in small-scale scenarios. However, at the scale of Alibaba's applications and containers, native workloads alone are not enough. Currently, Alibaba's container clusters host more than 100,000 applications and millions of containers, and some core applications each have tens of thousands of containers. Given the problems shown in the preceding figure, the release capability for a single application is insufficient. Moreover, when a large number of applications are upgraded at the same time during peak release periods, recreating pods at ultra-large scale becomes a "disaster".

Because native workloads fall far short of these requirements, we abstracted common application deployment requirements from a variety of complex business scenarios and developed multiple extended workloads accordingly. While significantly enhancing these workloads, we kept their functions generic and free of business logic.

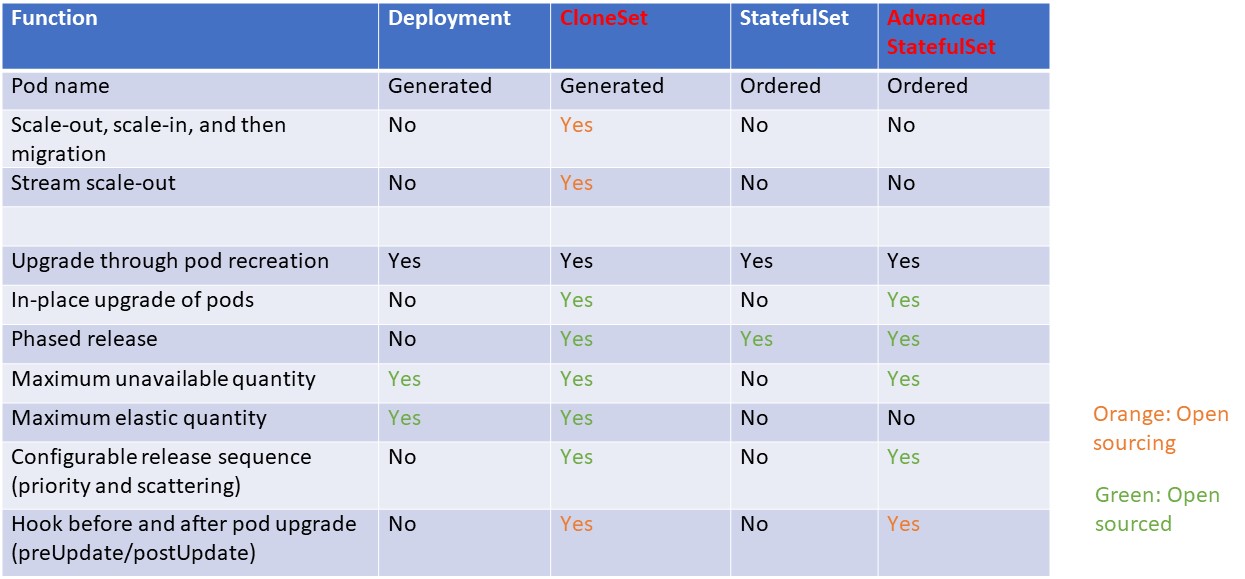

Here, we focus on CloneSet and Advanced StatefulSet. In Alibaba's cloud-native environment, almost all e-commerce applications are deployed and released using CloneSet, while stateful applications, such as middleware, are managed using Advanced StatefulSet.

As the name implies, Advanced StatefulSet is an enhanced version of the native StatefulSet. Its default behavior is the same as that of the native StatefulSet, and it adds features such as in-place upgrades, parallel release (maxUnavailable), and release pausing. CloneSet, on the other hand, targets the native Deployment. It serves stateless applications and provides the most comprehensive deployment and release strategies.

Both CloneSet and Advanced StatefulSet support specifying pod upgrade methods:

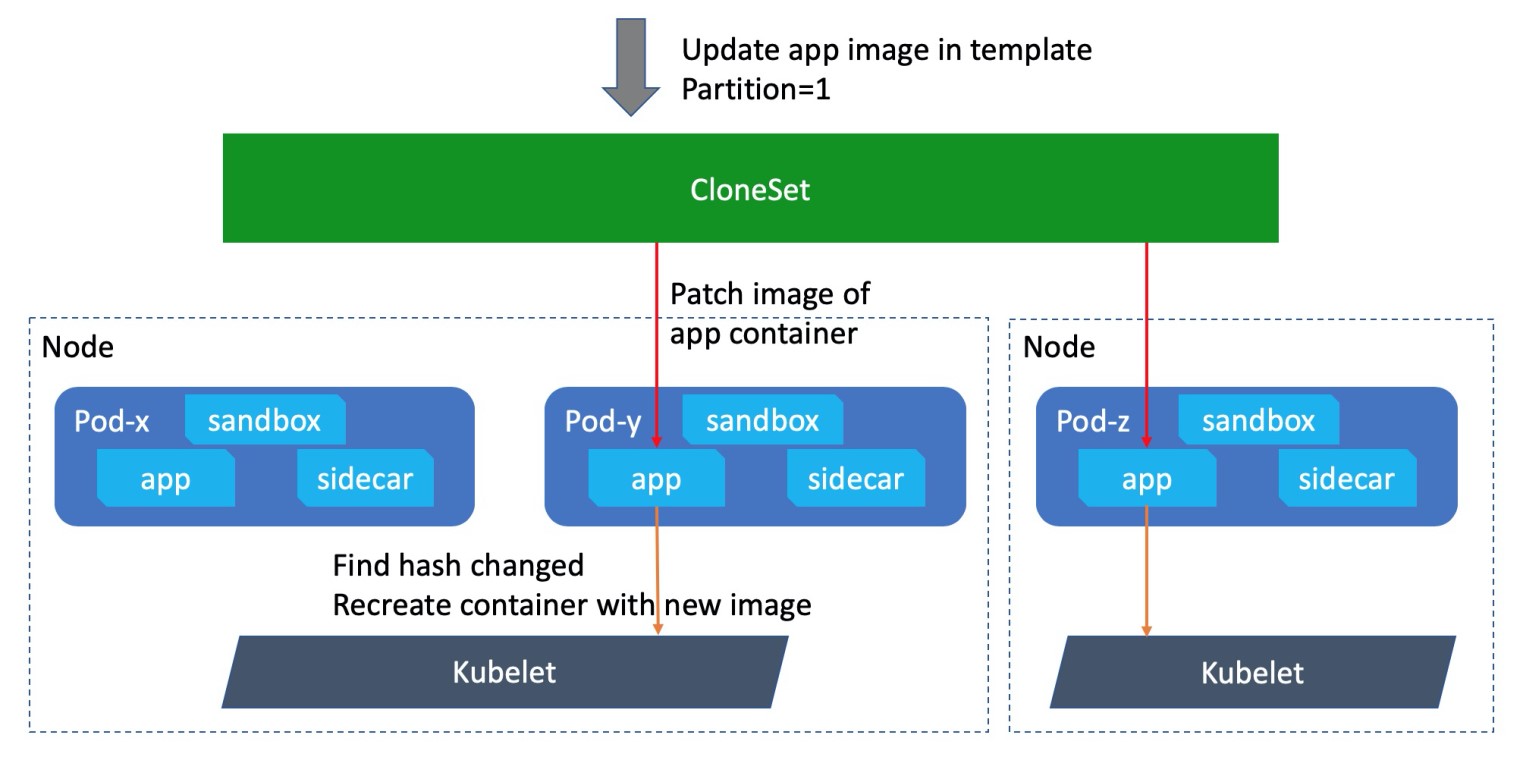

During an in-place upgrade, the workload does not delete and recreate the original pods when the template is upgraded. Instead, it directly updates the image and other data on the original pods.

As shown in the preceding figure, CloneSet only updates the image of the corresponding container in the pod spec during an in-place upgrade. When the kubelet detects that the container definition in the pod has changed, it stops the container, pulls the new image, and creates and starts a container from it. Note that the pod's sandbox container and the containers that are not being upgraded keep running normally throughout this process; only the containers being upgraded are affected. In-place upgrades bring many benefits:

Later, we will publish a dedicated article on Alibaba's in-place upgrades on Kubernetes, which are of great significance to us: without them, Alibaba's ultra-large-scale application scenarios could not run in a native Kubernetes environment. We recommend that every Kubernetes user try in-place upgrades, which offer an experience quite different from the traditional Kubernetes release mode.
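To make the choice between in-place upgrade and pod recreation concrete, here is a minimal decision sketch. It is a simplified model, not OpenKruise's implementation: it assumes, as in early OpenKruise releases, that only container image changes can be applied in place, and it represents a pod template as a hypothetical dictionary of container fields. The strategy names ReCreate, InPlaceIfPossible, and InPlaceOnly are the CloneSet updateStrategy types documented by OpenKruise.

```python
from typing import Dict, List

# Hypothetical, simplified pod template: container name -> {field: value}.
Template = Dict[str, Dict[str, str]]


def changed_fields(old: Template, new: Template) -> List[str]:
    """Return 'container.field' paths that differ between two templates."""
    diffs: List[str] = []
    for name in sorted(set(old) | set(new)):
        if name not in old or name not in new:
            diffs.append(f"{name}.<added-or-removed>")
            continue
        for key in sorted(set(old[name]) | set(new[name])):
            if old[name].get(key) != new[name].get(key):
                diffs.append(f"{name}.{key}")
    return diffs


def plan_upgrade(old: Template, new: Template, strategy: str) -> str:
    """Decide how a pod is upgraded under a CloneSet-style update strategy type.

    Simplification: only container image changes are treated as in-place-able.
    """
    diffs = changed_fields(old, new)
    if not diffs:
        return "no-op"
    image_only = all(d.endswith(".image") for d in diffs)
    if strategy == "ReCreate":
        return "recreate"
    if strategy == "InPlaceIfPossible":
        return "in-place" if image_only else "recreate"
    if strategy == "InPlaceOnly":
        if not image_only:
            raise ValueError("InPlaceOnly cannot apply: " + ", ".join(diffs))
        return "in-place"
    raise ValueError(f"unknown strategy: {strategy!r}")


old = {"app": {"image": "shop:v1", "cpu": "1"}, "log": {"image": "log:v1"}}
new = {"app": {"image": "shop:v2", "cpu": "1"}, "log": {"image": "log:v1"}}
resized = {"app": {"image": "shop:v2", "cpu": "2"}, "log": {"image": "log:v1"}}
print(plan_upgrade(old, new, "InPlaceIfPossible"))      # in-place
print(plan_upgrade(old, resized, "InPlaceIfPossible"))  # recreate
```

An image-only change stays in place, while also changing the CPU value falls back to recreation, which mirrors the "if possible" in the strategy name.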

As mentioned in the previous section, Deployment currently supports rolling (streaming) upgrades controlled by maxUnavailable and maxSurge, while StatefulSet supports phased upgrades through partition. However, Deployment does not support phased release, and StatefulSet can only release pods one at a time, so it cannot upgrade pods in parallel.

Therefore, we introduced maxUnavailable to Advanced StatefulSet. Native StatefulSet releases pods one by one, which is equivalent to maxUnavailable being 1. If we configure a larger maxUnavailable in Advanced StatefulSet, more pods can be released in parallel.
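The effect of maxUnavailable on a StatefulSet release can be sketched by grouping pod ordinals into upgrade batches. This is a simplified model under stated assumptions: pods are upgraded from the highest ordinal down (as native StatefulSet does), ordinals below partition stay on the old revision, and each batch is assumed to finish before the next one starts. A real controller behaves more like a sliding window, so treat these batches as an upper bound on the number of rounds.

```python
from typing import List


def release_batches(replicas: int, max_unavailable: int, partition: int = 0) -> List[List[int]]:
    """Group StatefulSet pod ordinals into parallel upgrade batches.

    Ordinals are visited from highest to lowest; ordinals below `partition`
    are kept on the old revision. Each batch holds at most `max_unavailable`
    pods, which are upgraded in parallel.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    ordinals = list(range(replicas - 1, partition - 1, -1))
    return [ordinals[i:i + max_unavailable] for i in range(0, len(ordinals), max_unavailable)]


print(release_batches(5, 1))  # native behavior: [[4], [3], [2], [1], [0]]
print(release_batches(5, 2))  # [[4, 3], [2, 1], [0]]
```

With maxUnavailable set to 1 the result is the native one-by-one order; raising it shortens the release to fewer, wider rounds.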

Let us take a look at CloneSet. It supports all the release policies of native Deployment and native StatefulSet, including maxUnavailable, maxSurge, and partition. So, how does CloneSet integrate these policies? Here is an example:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: CloneSet
# ...
spec:
  replicas: 5 # The total number of pods is 5.
  updateStrategy:
    type: InPlaceIfPossible
    maxSurge: 20% # The number of extra pods is 1 (5 x 20% = 1, rounded up).
    maxUnavailable: 0 # Keep all 5 pods available during the release (5 - 0 = 5).
    partition: 3 # Keep 3 pods on the earlier version and release only 2 (5 - 3 = 2).
```

The CloneSet has five replicas. Assume that we modify the image in the template, and set maxSurge to 20%, maxUnavailable to 0, and partition to 3.

When the release begins:

If we then change partition to 0, CloneSet first creates one extra pod of the new version, then upgrades all the pods to the new version, and finally deletes one pod to reach the final state: all five replicas fully upgraded.
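The arithmetic behind this example can be sketched as a small helper that resolves the three fields into pod counts. It assumes that, as with Deployment, a percentage maxSurge rounds up (as the YAML comment states) and a percentage maxUnavailable rounds down. The latter is an assumption carried over from Deployment, not something the example above shows. The function names are hypothetical.

```python
from typing import Dict, Union

IntOrPercent = Union[int, str]


def resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Resolve an int-or-percentage field (e.g. 2 or "20%") to a pod count."""
    if isinstance(value, int):
        return value
    if not value.endswith("%"):
        raise ValueError(f"expected an int or a percentage, got {value!r}")
    scaled = replicas * int(value[:-1])  # integer math avoids float rounding errors
    return -(-scaled // 100) if round_up else scaled // 100


def release_budget(replicas: int, max_surge: IntOrPercent,
                   max_unavailable: IntOrPercent, partition: int) -> Dict[str, int]:
    """Summarize how many pods may be added, must stay available, and will be updated."""
    return {
        "extra_pods": resolve(max_surge, replicas, round_up=True),
        "min_available": replicas - resolve(max_unavailable, replicas, round_up=False),
        "pods_to_update": max(replicas - partition, 0),
    }


print(release_budget(5, "20%", 0, 3))
# {'extra_pods': 1, 'min_available': 5, 'pods_to_update': 2}
print(release_budget(5, "20%", 0, 0)["pods_to_update"])  # 5
```

With partition set to 3, only two pods move to the new version; dropping partition to 0 extends the same budget to all five replicas.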

Native Deployment and StatefulSet do not let us configure the release sequence. The order in which pods under a Deployment are released depends entirely on how the ReplicaSets are scaled after the change, while StatefulSet upgrades pods one by one in strict reverse ordinal order.

Therefore, CloneSet and Advanced StatefulSet make the release sequence configurable, so users can customize it. Currently, the release sequence can be defined with either of two release priority policies, plus one release scattering policy:

Order priority:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: CloneSet
spec:
  # ...
  updateStrategy:
    priorityStrategy:
      orderPriority:
        - orderedKey: some-label-key
```

Weight priority:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: CloneSet
spec:
  # ...
  updateStrategy:
    priorityStrategy:
      weightPriority:
        - weight: 50
          matchSelector:
            matchLabels:
              test-key: foo
        - weight: 30
          matchSelector:
            matchLabels:
              test-key: bar
```

Scattering:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: CloneSet
spec:
  # ...
  updateStrategy:
    scatterStrategy:
      - key: some-label-key
        value: foo
```

You may ask why it is necessary to configure the release sequence. Take ZooKeeper as an example: when releasing it, you need to upgrade all the non-primary nodes first and then the primary node, so that the primary switchover happens only once during the entire release. You can label each ZooKeeper pod with its role, either through a manual process or with an operator that does it automatically. Then, configure larger release weights for the non-primary pods to minimize the number of primary switchovers during the release.
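The weight-priority idea can be sketched as a sort: each pod's priority is the sum of the weights of the terms whose labels it matches, and higher-priority pods are released first. This is a simplified model assuming that larger weights mean earlier release, as the ZooKeeper example implies; it is not OpenKruise's code, and the zk-role label is a hypothetical name used only for illustration.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]
WeightTerm = Tuple[int, Labels]  # (weight, matchLabels), like one weightPriority entry


def matches(labels: Labels, selector: Labels) -> bool:
    """True if every key/value in the selector is present in the pod labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def update_order(pods: Dict[str, Labels], terms: List[WeightTerm]) -> List[str]:
    """Order pods by the summed weight of the terms they match, highest first.

    Pods with equal priority keep name order (Python's sort is stable).
    """
    def priority(name: str) -> int:
        return sum(weight for weight, selector in terms if matches(pods[name], selector))

    return sorted(sorted(pods), key=priority, reverse=True)


# Hypothetical "zk-role" label: followers get weight 100, so the leader goes last.
zk_pods = {
    "zk-0": {"zk-role": "follower"},
    "zk-1": {"zk-role": "leader"},
    "zk-2": {"zk-role": "follower"},
}
print(update_order(zk_pods, [(100, {"zk-role": "follower"})]))  # ['zk-0', 'zk-2', 'zk-1']
```

Because the leader matches no term, it ends up last, so the primary switches over only once, after all followers are on the new version.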

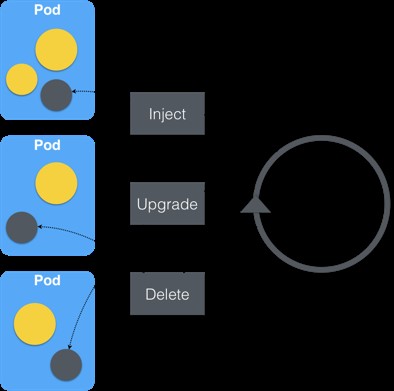

Making containers lighter is also a major reform at Alibaba in the cloud-native stage. In the past, most Alibaba containers ran as "rich containers", that is, containers that run the business process alongside various plug-ins and daemons. In the cloud-native era, we have gradually moved these bypass plug-ins out of rich containers into independent sidecar containers, so that the main containers serve only the business.

We will not go further into the benefits of separating these functions here. Instead, let's look at another question: how are these sidecar containers managed? The most straightforward method is to explicitly define the sidecars required by pods in each application's workload. However, this causes many problems:

Therefore, we have designed SidecarSet to decouple the sidecar container definition from the application workload. Application developers no longer need to care about which sidecar containers need to be written in their workloads. Instead, sidecar maintainers can independently manage and upgrade sidecar containers through in-place upgrades.
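The decoupling can be sketched as a function that adds the containers from every matching SidecarSet to a pod, so the application workload never mentions them. This assumes injection happens when pods are created and that a SidecarSet selects pods by labels; SidecarSetSpec and inject_sidecars are hypothetical, trimmed-down stand-ins, not OpenKruise's API types.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SidecarSetSpec:
    """Hypothetical stand-in for a SidecarSet: a label selector plus containers."""
    name: str
    selector: Dict[str, str]
    containers: List[dict] = field(default_factory=list)


def inject_sidecars(pod: dict, sidecar_sets: List[SidecarSetSpec]) -> dict:
    """Return a copy of `pod` with sidecars from every matching SidecarSet appended."""
    labels = pod.get("metadata", {}).get("labels", {})
    result = copy.deepcopy(pod)  # leave the caller's pod untouched
    containers = result.setdefault("spec", {}).setdefault("containers", [])
    existing = {c["name"] for c in containers}
    for ss in sidecar_sets:
        if all(labels.get(k) == v for k, v in ss.selector.items()):
            for c in ss.containers:
                if c["name"] not in existing:  # never overwrite an app-defined container
                    containers.append(copy.deepcopy(c))
                    existing.add(c["name"])
    return result


pod = {"metadata": {"labels": {"app": "shop"}},
       "spec": {"containers": [{"name": "main", "image": "shop:v1"}]}}
log_agent = SidecarSetSpec("log-agent", {"app": "shop"}, [{"name": "log-agent", "image": "log:v1"}])
print([c["name"] for c in inject_sidecars(pod, [log_agent])["spec"]["containers"]])
# ['main', 'log-agent']
```

The application workload only defines "main"; the sidecar maintainer owns the log-agent definition and can change it without touching any application.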

You should now have a better understanding of the basics of Alibaba's application deployment mode. The above capabilities have been open-sourced to the community as a project called OpenKruise. The project currently provides five extended workloads:

In addition, more extended capabilities are on the way to being open-sourced! Soon, we will open-source the internally used Advanced DaemonSet into OpenKruise. Building on the maxUnavailable rolling update of the native DaemonSet, Advanced DaemonSet adds release policies such as phased release and selector release. Phased release lets a DaemonSet upgrade only some of its pods during a release. Selector release upgrades pods on nodes that match specified labels first. Together, they bring phased release capability and stability guarantees to DaemonSet upgrades in large-scale clusters.
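How phased release and selector release could combine is sketched below as a function that picks the next pods to upgrade. This is a hypothetical model, not the Advanced DaemonSet implementation: it assumes partition counts the pods that stay on the old version, that pods on nodes matching the preferred labels are upgraded first, and it ignores pods that are already unavailable for other reasons. DaemonPod and next_batch are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DaemonPod:
    """Hypothetical view of one DaemonSet pod and the labels of its node."""
    name: str
    node_labels: Dict[str, str]
    updated: bool = False


def next_batch(pods: List[DaemonPod], preferred_nodes: Dict[str, str],
               partition: int, max_unavailable: int) -> List[str]:
    """Pick the next DaemonSet pods to upgrade.

    - Phased release: at most len(pods) - partition pods end up on the new version.
    - Selector release: pods on nodes matching `preferred_nodes` go first.
    - At most `max_unavailable` pods are picked per batch.
    """
    already = sum(p.updated for p in pods)
    remaining_quota = max(len(pods) - partition - already, 0)
    old = [p for p in pods if not p.updated]

    def on_preferred_node(p: DaemonPod) -> bool:
        return all(p.node_labels.get(k) == v for k, v in preferred_nodes.items())

    # Stable sort: preferred-node pods first, original order otherwise.
    old.sort(key=lambda p: not on_preferred_node(p))
    return [p.name for p in old[:min(remaining_quota, max_unavailable)]]


pods = [DaemonPod("ds-a", {"zone": "a"}), DaemonPod("ds-b", {"zone": "b"}),
        DaemonPod("ds-c", {"zone": "b"}), DaemonPod("ds-d", {"zone": "a"})]
print(next_batch(pods, {"zone": "b"}, partition=2, max_unavailable=3))  # ['ds-b', 'ds-c']
```

With four pods and partition set to 2, only two pods are released, and the zone-b pods are chosen first.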

In the future, we also plan to open-source more of Alibaba's generic capabilities, such as our internally extended HPA and scheduling plug-ins, to make the cloud-native enhancements developed and used by Alibaba available to every Kubernetes developer and Alibaba Cloud user.

Finally, we welcome every cloud-native enthusiast to participate in building OpenKruise. Unlike some other open-source projects, OpenKruise is not a copy of Alibaba's internal code. On the contrary, the OpenKruise GitHub repository is the upstream of Alibaba's internal code repository. Therefore, every line of code you contribute will run in all Kubernetes clusters within Alibaba and help support Alibaba's world-leading application scenarios!

Q1: How many pods does Alibaba's largest business currently have, and how long does a release take?

A1: Currently, the largest single application has tens of thousands of pods. How long a release takes depends on its phased release plan. If many phases are scheduled and each has a long observation period, the release may last one or two weeks.

Q2: How are the request and limit parameters configured for a pod, and at what ratio? Requests that are too high waste resources, while requests that are too low may overload hotspot nodes.

A2: The ratio is determined by application requirements. Currently, most online applications use a 1:1 ratio. Some offline and job-type applications need limits greater than their requests.
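The two configurations in this answer map onto Kubernetes QoS classes: a 1:1 request-to-limit ratio for CPU and memory yields Guaranteed, while limits above requests yield Burstable. The sketch below is a simplified, single-container version of that classification. It uses hypothetical plain numbers (CPU in cores, memory in GiB) rather than Kubernetes quantity strings, and it also rejects requests above limits, which the Kubernetes API server does not allow.

```python
from typing import Dict

# Hypothetical plain numbers: cpu in cores, memory in GiB (not quantity strings).
Resources = Dict[str, float]


def qos_class(requests: Resources, limits: Resources) -> str:
    """Simplified, single-container version of Kubernetes QoS classification."""
    for name, req in requests.items():
        if name in limits and req > limits[name]:
            # Kubernetes rejects this: a request may not exceed its limit.
            raise ValueError(f"{name}: request {req} exceeds limit {limits[name]}")
    if not requests and not limits:
        return "BestEffort"
    # A missing request defaults to the limit, so "limit only" also counts as 1:1.
    if all(r in limits and requests.get(r, limits[r]) == limits[r] for r in ("cpu", "memory")):
        return "Guaranteed"
    return "Burstable"


print(qos_class({"cpu": 2, "memory": 4}, {"cpu": 2, "memory": 4}))  # Guaranteed (1:1)
print(qos_class({"cpu": 1, "memory": 2}, {"cpu": 4, "memory": 4}))  # Burstable
```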

Q3: How do I upgrade my existing deployments when the Kruise apiVersion is upgraded?

A3: Currently, all Kruise resources share the same apiVersion. We plan to upgrade the API versions of some mature workloads in the second half of this year. After the upgrade, resources of the earlier version are automatically converted to the later version.

Q4: Does OpenKruise provide go-client?

A4: Currently, two methods are provided:

Q5: How is Alibaba's Kubernetes version upgraded?

A5: Alibaba Group uses the Kube-On-Kube architecture for large-scale Kubernetes cluster management, in which one meta Kubernetes cluster manages hundreds of thousands of business Kubernetes clusters. The meta cluster version is relatively stable, while business clusters are upgraded frequently. Upgrading a business cluster means upgrading the versions or configurations of the corresponding workloads (native workloads and Kruise workloads) in the meta cluster, a process similar to upgrading ordinary business workloads.

Q6: After a phased release begins, how do you control inbound traffic?

A6: Before an in-place upgrade, Kruise first uses a readinessGate to set the pods to not-ready. Controllers such as the endpoints controller detect this and remove the pods from the endpoints. Kruise then updates the pod images to trigger container recreation and, afterward, sets the pods back to ready.
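The sequence in this answer relies on a standard Kubernetes rule: a pod is Ready only if all its containers are ready and every condition listed in its readinessGates is True. The sketch below replays the steps and records whether the pod would be Ready (and thus receive traffic) after each one. The condition type name is hypothetical, and the steps are a simplified reading of the answer above.

```python
from typing import Dict, List

GATE = "example.com/in-place-update-ready"  # hypothetical readiness-gate condition type


def pod_ready(containers_ready: bool, conditions: Dict[str, bool], readiness_gates: List[str]) -> bool:
    """A pod is Ready only if its containers are ready AND every readiness-gate
    condition is True (a missing condition counts as False)."""
    return containers_ready and all(conditions.get(g, False) for g in readiness_gates)


def in_place_upgrade_steps() -> List[bool]:
    """Replay the in-place upgrade sequence and record pod readiness after each step."""
    conditions = {GATE: True}
    history = []
    conditions[GATE] = False  # 1. gate set to False: endpoints drop the pod
    history.append(pod_ready(True, conditions, [GATE]))
    containers_ready = False  # 2. image patched: kubelet recreates the container
    history.append(pod_ready(containers_ready, conditions, [GATE]))
    containers_ready = True   # 3. new container is running and ready
    history.append(pod_ready(containers_ready, conditions, [GATE]))
    conditions[GATE] = True   # 4. gate restored: pod rejoins the endpoints
    history.append(pod_ready(containers_ready, conditions, [GATE]))
    return history


print(in_place_upgrade_steps())  # [False, False, False, True]
```

Because the gate stays False until the last step, the pod receives no traffic until the upgrade is fully complete, even after the new container itself reports ready.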

Q7: Are phased releases of DaemonSet implemented similarly to the pause function of Deployment? That is, does DaemonSet count the released pods, pause, resume the release, and then pause again?

A7: The overall process is similar to the pause function of Deployment. During an upgrade, pods of the earlier and later versions are counted to determine whether the desired state has been reached. However, compared with Deployment, DaemonSet must handle more complex boundary cases, for example, when a specified pod does not exist in the cluster during the first release. For details, keep an eye on the code once it is open-sourced.

Q8: How does the release start on the multi-cluster release page?

A8: The livestream demonstrated a demo release system integrated with Kruise workloads. On the interaction side, the user selects the target cluster and clicks to start the release. On the implementation side, the system calculates the difference between the new YAML and the YAML in the cluster and patches that difference into the cluster. It then manipulates the DaemonSet's control fields (partition or paused) to drive the phased release.
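The "calculate the difference, then patch" step can be sketched as building a JSON merge patch (RFC 7386), a patch format the Kubernetes API accepts. This is an illustrative sketch, not the demo system's code. It compares only the fields the release system manages, because a naive diff against the full live object would try to delete server-populated fields such as status. Lists are replaced whole, as merge patch requires, and the partition field location in the example is assumed for illustration.

```python
from typing import Any, Dict


def merge_patch(live: Dict[str, Any], desired: Dict[str, Any]) -> Dict[str, Any]:
    """Build an RFC 7386 JSON merge patch that turns `live` into `desired`."""
    patch: Dict[str, Any] = {}
    for key in live.keys() - desired.keys():
        patch[key] = None  # null in a merge patch deletes the field
    for key, value in desired.items():
        old = live.get(key)
        if isinstance(value, dict) and isinstance(old, dict):
            sub = merge_patch(old, value)  # recurse into nested objects
            if sub:
                patch[key] = sub
        elif key not in live or old != value:
            patch[key] = value  # scalars and lists are replaced whole
    return patch


live = {"spec": {"updateStrategy": {"rollingUpdate": {"partition": 10}},
                 "template": {"spec": {"containers": [{"name": "agent", "image": "agent:v1"}]}}}}
desired = {"spec": {"updateStrategy": {"rollingUpdate": {"partition": 8}},
                    "template": {"spec": {"containers": [{"name": "agent", "image": "agent:v2"}]}}}}
print(merge_patch(live, desired))
```

Lowering partition from 10 to 8 in the same patch releases two more pods; later phases would send patches that change only partition.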

Lecturer: Jiuzhu

Jiuzhu is an Alibaba Cloud Technical Expert, an OpenKruise maintainer, and the lead of cloud-native workload development at Alibaba Group. He has many years of experience supporting the ultra-large-scale container clusters behind the Alibaba Double 11 Global Shopping Festival.

Lecturer: Mofeng

Mofeng is an Alibaba Cloud Development Engineer. He is responsible for building the phased release system for the Alibaba Cloud-Native Container Platform and for etcd development. He has also contributed to the stability of Alibaba's large-scale Kubernetes clusters.
