By Sun Jianbo, a Technical Expert at Alibaba, and Zhao Yuying

In the cloud-native era, Kubernetes is becoming increasingly important. However, most Internet companies have not adopted Kubernetes as successfully as they expected, and its complexity is enough to discourage many developers. In this article, Alibaba Technical Expert Sun Jianbo shares experience and suggestions based on Alibaba's Kubernetes application management practices to help developers.

In the Internet era, developers tended to handle resource-related problems through top-level architecture design, such as multi-cluster deployment and distributed architecture, so that they could switch over quickly when a problem occurred. They also did a lot to make elasticity simpler and to improve resource utilization by co-locating different computing tasks (hybrid deployment). The emergence of cloud computing has solved the problem of shifting from Capital Expenditure (CAPEX) to Operating Expense (OPEX).

In the cloud computing era, developers can focus on the value of applications. In the past, in addition to service modules, developers had to devote a lot of time to infrastructure, such as storage and networks. Today's infrastructure is as easy to use as water, electricity, and gas. Cloud computing infrastructure is stable, highly available, and auto-scaling. It also packages best practices in application development, such as monitoring, auditing, log analysis, and canary release. Previously, an engineer had to be an all-rounder to develop a highly reliable application. Now, as long as the engineer knows enough infrastructure products, these best practices can be put in place quickly. However, many developers still feel helpless in the face of Kubernetes, which is complex by nature.

Atlassian is behind JIRA and Bitbucket. Nick Young, the Chief Engineer of the Kubernetes Team at Atlassian, said in an interview,

"Although the Kubernetes strategy is correct (at least no other possible options have been found) and it has solved many existing problems, the deployment process is extremely difficult."

So, is there a good solution?

"If I were to say that Kubernetes has a problem, of course, it would be too complicated," Sun Jianbo said in an interview. "However, this is caused by the positioning of Kubernetes itself."

Sun Jianbo added that Kubernetes is positioned as a platform for building platforms. Its direct users are neither application developers nor application O&M personnel, but platform builders (infrastructure or platform engineers). However, Kubernetes has long been used in a way that does not match this positioning: a large number of application O&M personnel and R&D engineers collaborate directly on the underlying Kubernetes APIs. This is one of the fundamental reasons why many people complain that Kubernetes is too complicated.

This is like requiring a Java web engineer to use Linux kernel system calls to deploy and manage business code. The engineer will naturally feel that Linux is too complicated. In the same way, the Kubernetes project currently lacks a higher level of encapsulation that would make it friendlier to upper-level software developers and O&M personnel.

Kubernetes likewise needs to offer developers a consistent experience, much as the Linux kernel API does, regardless of individual users' preferences. Anyone who manages applications on Kubernetes and has to work with both R&D and O&M engineers must face this issue, and must also consider how to solve it in a standard and unified way (like the API of the Linux kernel). This is also why Alibaba Cloud and Microsoft work jointly on the cloud-native Open Application Model (OAM) project.

In addition to its inherent complexity, Kubernetes' support for stateful applications has also been a problem for many developers, and there is no optimal solution yet. Currently, the mainstream industry solution for stateful applications is the Operator, which is very difficult to write.

In an interview, Sun Jianbo said, "The Operator is essentially an advanced Kubernetes client." The Kubernetes API server design pushes complexity to the client, which keeps the API server itself simple. However, both the Kubernetes client library and the Operators built on it are extremely complex and difficult to understand as a result. They expose a large number of Kubernetes implementation details, such as the reflector, cache store, and informer. These should not be the concern of Operator authors. Operator authors should be experts in stateful applications (such as the engineers behind TiDB), rather than experts in Kubernetes. This is currently the biggest pain point for stateful application management in Kubernetes, and solving it may require a new Operator framework.
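To illustrate the part an Operator author would ideally focus on, here is a minimal sketch of a reconcile step in plain Python. It is not a real Operator and uses no Kubernetes client library: `DesiredState`, `reconcile`, and the `member-N` naming are hypothetical, and the observed state that a real Operator would read from an informer cache is passed in as an ordinary list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DesiredState:
    """What the user declared (hypothetical stand-in for a custom resource spec)."""
    replicas: int


def reconcile(desired: DesiredState, observed_members: List[str]) -> List[str]:
    """Return the actions needed to move the observed state toward the desired one.

    A real Operator would obtain `observed_members` from its cache and execute
    the actions against the API server; here both are plain Python values.
    """
    actions = []
    current = len(observed_members)
    if current < desired.replicas:
        # Scale out: add members one ordinal at a time.
        for ordinal in range(current, desired.replicas):
            actions.append(f"add member-{ordinal}")
    elif current > desired.replicas:
        # Scale in: remove the highest ordinals first.
        for ordinal in range(current - 1, desired.replicas - 1, -1):
            actions.append(f"remove member-{ordinal}")
    return actions
```

The function only compares desired and observed state, which is where the author's knowledge of the stateful application belongs. Watching, caching, and retrying are exactly the details the paragraph above argues a framework should hide.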

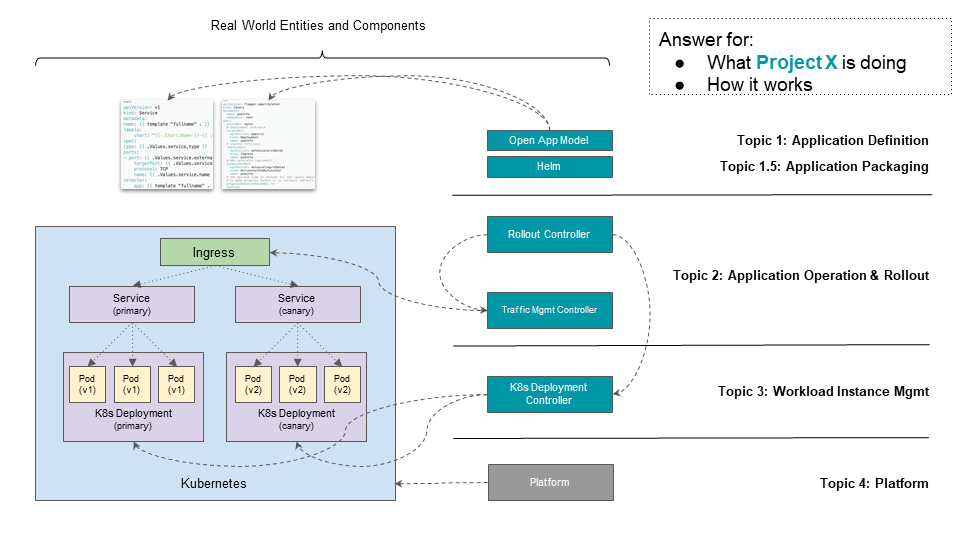

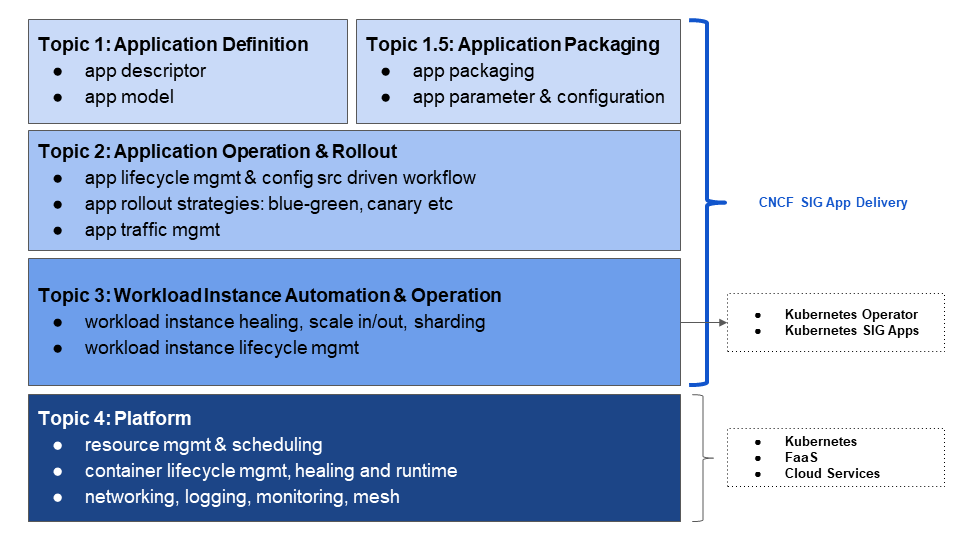

On the other hand, supporting complicated applications is not as simple as writing an Operator. It also requires technical support for stateful application delivery, which various continuous delivery projects in the community have intentionally or unintentionally ignored. Continuously delivering an Operator-based stateful application poses a different technical challenge from delivering a stateless Kubernetes Deployment. This is why Sun Jianbo's team proposed the "application delivery hierarchical model" to the CNCF SIG App Delivery team. As shown in the figure, the four layers of the model are application definition, application delivery, application O&M and automation, and platform. The layers work together to deliver stateful applications efficiently and with high quality.

For example, the Kubernetes API object is designed as an all-in-one. All participants in the application management process must collaborate on the same API object. Therefore, in API object descriptions (like Kubernetes Deployment), developers can view the fields that are required for application development, the fields that are required for O&M, and the other important fields.

Application development, application O&M, and Kubernetes automation capabilities, such as Horizontal Pod Autoscaling (HPA), may all need to control the same field in an API object. The most typical case is the replica count. Deciding who owns this field is a difficult problem.
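The ownership problem can be made concrete with a small in-memory model. This is a sketch under stated assumptions, not a Kubernetes API: `OwnedObject`, `FieldConflict`, and the manager names `developer` and `hpa` are hypothetical, and the only rule modeled is that a field written by one party cannot be silently overwritten by another.

```python
class FieldConflict(Exception):
    """Raised when a manager writes a field that another manager owns."""


class OwnedObject:
    """Hypothetical all-in-one object that records who last set each field."""

    def __init__(self):
        self.fields = {}   # field path -> current value
        self.owners = {}   # field path -> manager that owns the field

    def apply(self, manager, path, value, force=False):
        owner = self.owners.get(path)
        if owner is not None and owner != manager and not force:
            # Two roles want the same field: surface the conflict
            # instead of letting the last writer win silently.
            raise FieldConflict(f"{path} is owned by {owner}, not {manager}")
        self.fields[path] = value
        self.owners[path] = manager


deployment = OwnedObject()
deployment.apply("developer", "spec.replicas", 3)
try:
    deployment.apply("hpa", "spec.replicas", 5)
except FieldConflict as err:
    conflict_message = str(err)
```

After this runs, `spec.replicas` is still 3 and `conflict_message` names the developer as the owner. Passing `force=True` would hand ownership to the autoscaler, which is a policy decision that the all-in-one object by itself cannot make.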

In summary, since Kubernetes is positioned as the Linux kernel of the cloud era, Kubernetes must make continuous breakthroughs in improving Operator support, the API layer, and the definitions of various interfaces. This will allow more ecosystem participants to build their capabilities and values based on Kubernetes.

Today, Kubernetes is used across all aspects of Alibaba's business, including e-commerce, logistics, and offline computing. Kubernetes is also one of the main forces supporting Alibaba 618, Double 11, and other Internet-scale promotions. Alibaba Group and Ant Financial run dozens of ultra-large Kubernetes clusters. The largest cluster has about 10,000 machine nodes, and this is not the upper limit of its capabilities. Each cluster serves tens of thousands of applications. In addition, we maintain Kubernetes clusters with tens of thousands of users on Alibaba Cloud Container Service for Kubernetes (ACK). The scale and technical challenges are second to none.

According to Sun Jianbo, Alibaba began to containerize applications as early as 2011. At that time, it built containers based on Linux Container (LXC) technology and then adopted its own container technology and its own orchestration and scheduling system. There was nothing wrong with that system, but the Infrastructure Technical Team wanted Alibaba's basic technology stack to support a broader upper-layer ecosystem and to keep evolving and upgrading. Therefore, the team spent more than a year gradually addressing the scale and performance shortcomings of Kubernetes. In general, upgrading to Kubernetes was a natural process. The whole process is simple:

After completing the preceding steps, the product can connect with R&D, O&M, and the upper-layer Platform as a Service (PaaS), and the value of the platform becomes clear to developers. Then, we can start a pilot project to replace the infrastructure step by step without affecting the existing application management system.

Kubernetes does not provide a complete application management system. The system is built from the entire cloud-native ecosystem based on Kubernetes. The following figure shows the application management system:

Helm is one of the most successful examples. It sits at the top of the entire application management system, which is layer 1. Many YAML management tools (such as Kustomize) and packaging tools (such as Cloud Native Application Bundle (CNAB)) sit at layer 1.5. Application delivery projects (such as Tekton, Flagger, and Keptn) correspond to layer 2. Operators and the various Kubernetes workload components (such as Deployment and StatefulSet) are at layer 3. At the bottom is the core function of Kubernetes, which manages workload containers, encapsulates infrastructure capabilities, and provides APIs for different workloads to connect to the underlying infrastructure.

Initially, the team's biggest challenge came from scale and performance bottlenecks, but the solution to this challenge was also the most straightforward. According to Sun Jianbo, as Kubernetes clusters grow, the biggest challenge shifts to managing applications and integrating with upper-layer ecosystems on top of Kubernetes. For example, we need unified control over controllers that come from dozens of teams and serve hundreds of different purposes. We need to deliver production-level applications from different teams thousands of times a day, and these applications may require completely different release and scaling strategies. In addition, we need to connect to dozens of more complex upper-layer platforms, and schedule and deploy different types of jobs in a hybrid way to maximize resource utilization. These requirements are the problems to be solved in Alibaba's Kubernetes practice. Scale and performance are only one of them.

In addition to native Kubernetes functions, a large number of infrastructure capabilities have been developed within Alibaba and connected to Kubernetes in the form of plug-ins. As the scale increases, discovering and managing these capabilities in a unified way has become a key issue.

Alibaba also provides a large number of PaaS products to meet the needs of users who migrate to the cloud in different business scenarios. For example, some users want to upload a Java WAR package and run it, while others want to upload an image and run it. Behind these requirements, Alibaba teams have done a lot of application management work on behalf of users, which is why the existing PaaS products emerged. Connecting these existing PaaS products to Kubernetes may cause various problems. Currently, Alibaba is using OAM, a unified and standard application management model, to help these PaaS systems connect to the Kubernetes foundation, so that they become standardized and cloud-native.

Decoupling allows Kubernetes projects and cloud service providers to expose different dimensions to different roles and to implement declarative APIs that match users' needs more closely. For example, application developers only need to declare in a YAML file that "application A needs to use a 5 GB readable and writable space." The application O&M personnel only need to declare in the corresponding YAML file that "Pod A needs to be mounted with a 5 GB readable and writable data volume." Letting each role focus only on its own concerns is the key to lowering the learning threshold and difficulty for Kubernetes users.
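The two declarations in this example can be sketched as two separate inputs that a platform combines. The following is an illustrative sketch only: `AppStorageRequest`, `VolumeBinding`, `render`, and all names and paths passed to them are hypothetical, and the output is a simplified fragment shaped like a PersistentVolumeClaim and a Pod spec rather than a complete manifest.

```python
from dataclasses import dataclass


@dataclass
class AppStorageRequest:
    """Developer-facing declaration: what the application needs."""
    app: str
    size_gb: int
    writable: bool = True


@dataclass
class VolumeBinding:
    """O&M-facing declaration: how the need is satisfied on a Pod."""
    pod: str
    claim_name: str
    mount_path: str


def render(request: AppStorageRequest, binding: VolumeBinding) -> dict:
    """Combine both declarations into simplified claim and Pod fragments."""
    volume_name = f"{request.app}-data"
    read_only = not request.writable
    claim = {
        "metadata": {"name": binding.claim_name},
        "spec": {
            "accessModes": ["ReadOnlyMany" if read_only else "ReadWriteOnce"],
            "resources": {"requests": {"storage": f"{request.size_gb}G"}},
        },
    }
    pod = {
        "metadata": {"name": binding.pod},
        "spec": {
            "volumes": [{
                "name": volume_name,
                "persistentVolumeClaim": {"claimName": binding.claim_name},
            }],
            "containers": [{
                "name": request.app,
                "volumeMounts": [{
                    "name": volume_name,
                    "mountPath": binding.mount_path,
                    "readOnly": read_only,
                }],
            }],
        },
    }
    return {"claim": claim, "pod": pod}


fragments = render(
    AppStorageRequest(app="app-a", size_gb=5),
    VolumeBinding(pod="pod-a", claim_name="app-a-claim", mount_path="/data"),
)
```

The developer never sees the claim name or mount path, and the O&M engineer never restates the size. Each side edits only its own declaration.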

According to Sun Jianbo, most current solutions are pessimistic. For example, Alibaba's PaaS platform exposes only five Deployment fields to R&D, to reduce the burden on R&D engineers. The "all-in-one" YAML in Kubernetes is too complex for R&D when taken as a whole, and most of its fields mean nothing to R&D engineers. The O&M personnel of a PaaS, however, may think that Kubernetes YAML is too simple and cannot fully describe the O&M capabilities of the platform. As a result, a large number of annotations have to be added to the YAML file.

The core problem is that this pessimistic approach makes the O&M side too dictatorial: O&M personnel take on a large amount of detailed work but get little in return. For example, the scale-out strategy is decided by the O&M side. However, as the people who write the code, the R&D personnel have the most say in how the application should scale. They also want to share their opinions with the O&M personnel, so that Kubernetes can be more flexible and meet the real requirements for scaling out. In the current system, there is no way to do this.

Therefore, the point of decoupling R&D and O&M is not to separate the two but to provide a standard, efficient way for R&D personnel to communicate with O&M personnel. This is also a problem the OAM application management model needs to solve. Sun Jianbo said, "One of the main functions of OAM is to provide a set of standards and norms for R&D personnel to express demands from their own perspective." Once R&D, O&M, and the system all understand the same set of standards, these problems can be solved.
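As one way to picture R&D expressing scaling demands while O&M keeps control, the sketch below merges a developer's hint with platform limits. It does not reproduce the OAM specification: `ScalingHint`, `PlatformLimits`, `effective_policy`, and the numbers used are hypothetical, and the output keys merely echo the field names used by Horizontal Pod Autoscaling.

```python
from dataclasses import dataclass


@dataclass
class ScalingHint:
    """R&D's view: how the application is expected to scale."""
    min_replicas: int
    max_replicas: int
    target_cpu_percent: int


@dataclass
class PlatformLimits:
    """O&M's view: boundaries the platform enforces for every application."""
    min_replicas_for_ha: int
    max_replicas_per_app: int


def effective_policy(hint: ScalingHint, limits: PlatformLimits) -> dict:
    """Honor the developer's hint wherever it fits inside the platform limits."""
    max_replicas = min(hint.max_replicas, limits.max_replicas_per_app)
    min_replicas = max(hint.min_replicas, limits.min_replicas_for_ha)
    # Keep the range valid even if the two declarations disagree.
    min_replicas = min(min_replicas, max_replicas)
    return {
        "minReplicas": min_replicas,
        "maxReplicas": max_replicas,
        "targetCPUUtilizationPercentage": hint.target_cpu_percent,
    }


policy = effective_policy(
    ScalingHint(min_replicas=1, max_replicas=50, target_cpu_percent=60),
    PlatformLimits(min_replicas_for_ha=2, max_replicas_per_app=20),
)
```

Here the developer's CPU target is passed through unchanged, while the replica range is narrowed to what the platform allows. Both sides have stated their intent in a form the system can combine.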

Specifically, OAM is a standard specification that focuses on describing applications. The application description can be separated from the details of infrastructure deployment and application management with this specification. There are design benefits of this separation of concerns. For example, in a production environment, whether it is Ingress, Container Network Interface (CNI), or Service Mesh, these O&M concepts that seem to be consistent are very different in different Kubernetes clusters. By separating the application definition from the cluster O&M capabilities, we allow application developers to focus more on the application value, instead of the O&M details, such as where the application is deployed.

Moreover, this separation of concerns allows platform architects to encapsulate platform O&M capabilities into reusable components, so application developers can focus on integrating these O&M components with code to build trusted applications easily and quickly. The OAM aims to make simple application management easier and complex application delivery more controllable. Sun Jianbo said, "In the future, the team will focus on gradually promoting this system to the cloud Independent Software Vendors (ISV) and software distributor side, so Kubernetes-based application management systems can truly become the mainstream solution of the cloud era."

Sun Jianbo is a Technical Expert at Alibaba and a member of the Kubernetes project community. Currently, he is working on the delivery and management of large-scale cloud-native applications at Alibaba. In 2015, he worked on the technical book, Docker Containers and Container Cloud Technology. He previously worked for Qiniu Cloud, where he participated in moving time series database, stream computing, log platform, and other projects to the cloud.
