By Lv Renqi.

Last year, while researching service meshes, I read a lot about Kubernetes, mostly by going through presentations online. Around the same time, I also deployed and experimented with OpenShift, a Kubernetes application platform. Afterwards, I wrote two articles discussing my experiences with and thoughts on Service Mesh and Kubernetes.

Looking back on those articles now, I think my understanding at the time was limited and one-sided, as is much of the information you'll find online about these topics. Now that my team is considering Dubbo for a cloud-native architecture, I spent some time brushing up on the basics by reading practical guides to Docker and Kubernetes, in the hope of building a better and more comprehensive understanding of the relevant technology.

To begin, I'd like to consider what exactly Docker, Kubernetes, and Service Mesh are and what they do, to make better sense of what cloud native means. At the end of this blog, we'll also tackle the concept of cloud native itself and take a stab at what exactly "cloud nativeness" is.

In this section, I'm going to present my thoughts after reading the practical guide to Docker.

Docker is a Linux container toolset designed for building, shipping, and running distributed applications. Docker helps with containerization, which provides application isolation and reusability, and with virtualization, which is a functional way to ensure the security of physical machines.

In many ways, Docker was one of the first mainstream pieces of what would become the cloud native puzzle.

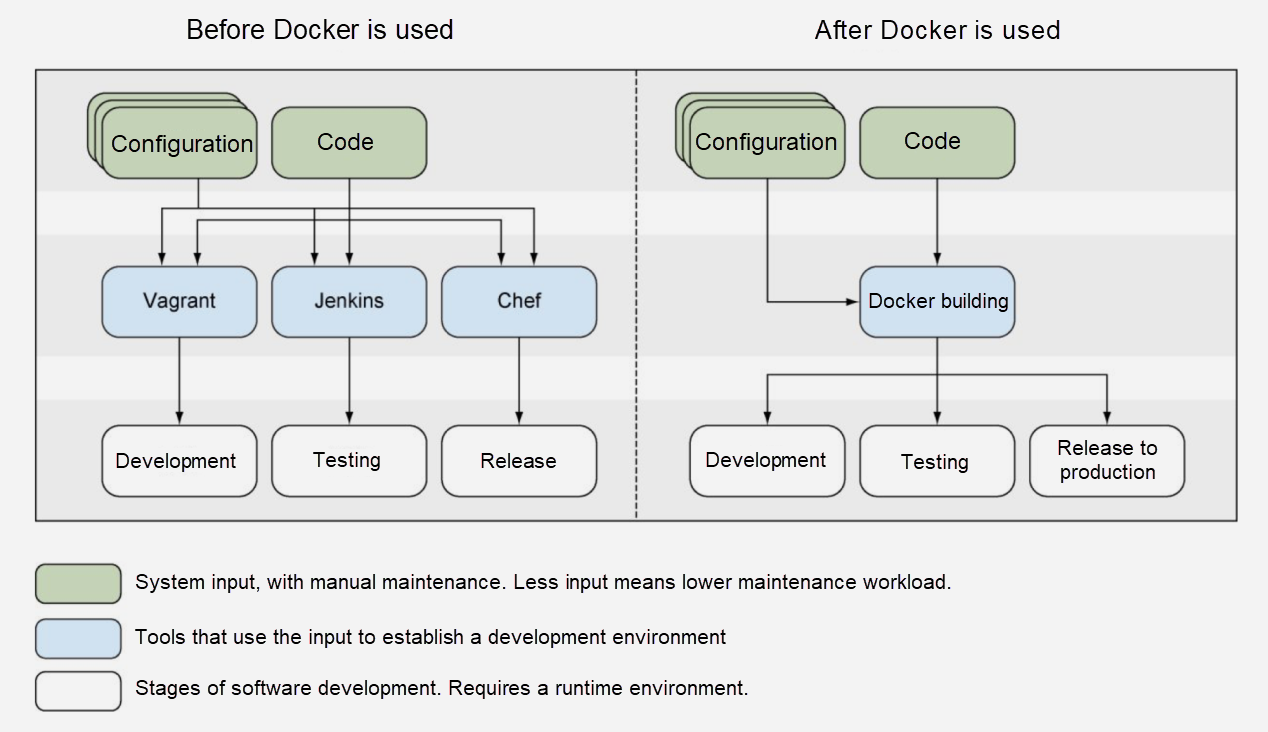

Below is a graphic that shows how Docker can be used to streamline things:

The points below sum up what exactly Docker is and what it does.

Docker is also well integrated with all the stages of the application lifecycle:

Docker is responsible for the following:

As I hinted at before, Kubernetes arrived after Docker and containerized applications had become popular, and it came to be used as a platform for managing containers. In this section, as in the last, I'm going to present my thoughts after reading a practical guide to Kubernetes.

The popularity of Kubernetes is closely connected with Docker. The wide adoption of Docker made Platform-as-a-Service (PaaS) viable. Kubernetes is a great achievement that grew out of Google's extensive practical experience in managing massive data centers. It was also largely ushered in by the explosion of containerized applications that occurred at the time. Google's goal was to establish a new industry standard, and they undoubtedly did, given how wildly popular Kubernetes has become.

Kubernetes is really a big piece of the cloud native revolution.

Google started using containers in 2004 and began developing control groups (typically referred to as cgroups) in 2006. At the same time, they were using cluster management platforms such as Borg and Omega internally in their cloud and IT infrastructure. Kubernetes was inspired by Borg and draws on the experience and lessons of earlier container managers, including Omega.

You can read more about this fascinating bit of history on this blog.

One question worth considering is how Kubernetes beat other early contenders such as Docker Compose, Docker Swarm, and even Mesos. The answer, in short, is Kubernetes's superior abstraction model. Kubernetes differs from them at the core of its design. To understand Kubernetes, we need to go over the concepts and terms involved in its underlying architecture.

Below are some of the concepts that are more or less unique to Kubernetes. Understanding them gives you a better general sense of how Kubernetes works:

If you'd like to learn more, you can go straight to the source and read the official documentation, which you can find here.

Continuous integration and deployment are arguably the most distinctive features of Platform-as-a-Service (PaaS) models, and of cloud native as well, and most cloud vendors provide PaaS offerings based on Kubernetes. Therefore, the general user experience and the specific continuous integration and continuous delivery (CI/CD) pipelines are what distinguish one service from another. Generally speaking, CI/CD pipelines start when the project is created and permeate every stage of the application lifecycle. This is their core advantage: they can be integrated into every step of a development workflow and provide an all-in-one application service experience.

Below are some mainstream CI/CD tools that can be used with Kubernetes:

Now, the last important things to consider when it comes to Kubernetes are its API objects and their metadata:

Metadata simply defines the basic information of an API object. It is represented by the metadata field and has the following attributes:

metadata.namespace: the namespace the object belongs to. If this field is not defined, the default namespace, called default, is used.

metadata.name: the name of the object. All API objects except Node belong to a namespace, and within the same namespace they are identified by their names. Therefore, the name of an API object must be unique within its namespace. The names of nodes and namespaces must be unique in the system.

metadata.labels: key-value pairs attached to the object. ReplicationController and Service use labels to be associated with pods. Pods can also use labels to select nodes.

In this section, I'm going to take a quick look at Service Mesh, which is really the next big thing after Kubernetes and Docker. To me, the core strength of Service Mesh is its control capabilities, and that's one place where the service mesh Istio in particular shines.

In fact, I'll go so far as to say that, if the Istio model continues to be standardized and expanded, it could easily become the de facto PaaS product for containerized applications. That said, I think the service mesh offerings built around Apache Dubbo, Envoy, NGINX, and even Conduit are also viable integration choices.

Since I think that Istio really is a stellar option, let's focus on it first.

To understand service meshes, you really need to understand the design principles behind them. Let's look at the design principle behind Istio. In a nutshell, a service abstraction model comes first, before any implementation on a container scheduling platform. Consider the figure below.

If you're interested in learning all the specifics about what exactly Istio is, you can check out its official explanation here.

Generally speaking, the Istio service model is an abstract model of services and their instances. Istio is independent of the underlying platform: platform-specific adapters populate the model objects with various fields from the metadata found in the platform. In this model, a service is a unit of an application with a unique name that other services use to refer to it, and service instances are the pods, virtual machines, and containers that implement the service. There can be multiple versions of a service.

Next, there's the service model itself. Each service has a fully qualified domain name (FQDN) and one or more ports on which it listens for connections. A service can have a single load balancer and virtual IP address associated with it. Instances are also part of the service model. Each service has one or more instances, which are the actual manifestations of the service. An instance represents an entity such as a pod, and each one has a network endpoint.
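As a rough sketch of the model described above, the Python below represents a service with an FQDN, ports, an optional virtual IP, and instances, and shows how a platform adapter might fill in instances from pod-like metadata. The class names, the `instance_from_pod` helper, the dictionary keys, and the sample addresses are all illustrative assumptions, not Istio's actual types or APIs.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Port:
    name: str
    number: int
    protocol: str


@dataclass(frozen=True)
class Instance:
    """One concrete manifestation of a service, such as a pod."""
    address: str                              # IP of the network endpoint
    port: int
    labels: Tuple[Tuple[str, str], ...] = ()  # (key, value) pairs from the platform


@dataclass
class Service:
    hostname: str                             # fully qualified domain name
    ports: List[Port] = field(default_factory=list)
    virtual_ip: Optional[str] = None          # a service may have one virtual IP
    instances: List[Instance] = field(default_factory=list)


def instance_from_pod(pod: dict, port: int) -> Instance:
    """Hypothetical adapter: map pod-like platform metadata onto the model."""
    labels = tuple(sorted(pod.get("labels", {}).items()))
    return Instance(address=pod["ip"], port=port, labels=labels)


# Made-up sample data standing in for what a platform would report.
reviews = Service(
    hostname="reviews.default.svc.cluster.local",
    ports=[Port("http", 9080, "HTTP")],
    virtual_ip="10.0.0.12",
)
pods = [
    {"ip": "172.17.0.4", "labels": {"app": "reviews", "version": "v1"}},
    {"ip": "172.17.0.5", "labels": {"app": "reviews", "version": "v2"}},
]
reviews.instances = [instance_from_pod(p, 9080) for p in pods]
endpoints = [f"{i.address}:{i.port}" for i in reviews.instances]
```

The point of keeping the adapter as a separate function is that the model classes stay platform-independent; only the adapter knows what the platform's metadata looks like.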

Service versions are also part of Istio's design: each version of a service is differentiated by a unique set of labels associated with that version. Labels, in turn, are simple key-value pairs assigned to the instances of a particular service version. All instances of the same version must have the same labels. Istio expects the underlying platform to provide a service registry and a service discovery mechanism.
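To make the relationship between versions and labels more concrete, here is a minimal sketch of how instances could be grouped into versions by label matching. The `matches` and `instances_for` helpers, the label keys, and the sample endpoints are assumptions made for illustration; this is not how Istio itself is implemented.

```python
def matches(instance_labels: dict, version_labels: dict) -> bool:
    """True when the instance carries every label that defines the version."""
    return all(instance_labels.get(k) == v for k, v in version_labels.items())


# Made-up instances of a single service, each with its own labels.
instances = [
    {"endpoint": "172.17.0.4:9080", "labels": {"app": "reviews", "version": "v1"}},
    {"endpoint": "172.17.0.5:9080", "labels": {"app": "reviews", "version": "v2"}},
    {"endpoint": "172.17.0.6:9080", "labels": {"app": "reviews", "version": "v2"}},
]

# Each version is identified by its own unique set of labels.
versions = {
    "v1": {"version": "v1"},
    "v2": {"version": "v2"},
}


def instances_for(version_name: str) -> list:
    """Return the endpoints of all instances that belong to the given version."""
    wanted = versions[version_name]
    return [i["endpoint"] for i in instances if matches(i["labels"], wanted)]
```

Calling `instances_for("v2")` on this sample data selects the two instances labeled with version v2, which is the kind of grouping that traffic control between versions relies on.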

In the discussion above of Docker, Kubernetes, and service meshes like Istio, I left out one major thing: "cloud native". So, what is "cloud native"? There are different interpretations of what it means or what it takes to be "cloud native", but according to the Cloud Native Computing Foundation (CNCF), cloud native can be understood as follows:

Cloud-native technologies and solutions can be defined as technologies that allow you to build and run scalable applications in the cloud. Cloud native emerged with the development of container technology, as we saw with Docker and then Kubernetes, and generally involves stateless services, continuous delivery, and microservices.

Cloud-native technologies and solutions can ensure good fault tolerance and easy management. When combined with robust automation, thanks to CI/CD pipelines among other things, they can deliver a strong impact without too much hassle.

Nowadays, the concept of cloud native and "cloud nativeness", even according to the CNCF, involves using Kubernetes. But Kubernetes is not the only piece of the puzzle; rather, it is only the beginning. With traditional microservice solutions such as Dubbo now being part of the CNCF landscape, more and more people are attracted to cloud native by the unique features and capabilities these solutions have to offer.

In other words, in today's cloud native landscape, cloud native may start with Kubernetes but go on to embrace Service Mesh solutions like Istio. It's just like the progression we saw throughout this blog: first there was Docker, then Kubernetes, and now there are also service meshes.
