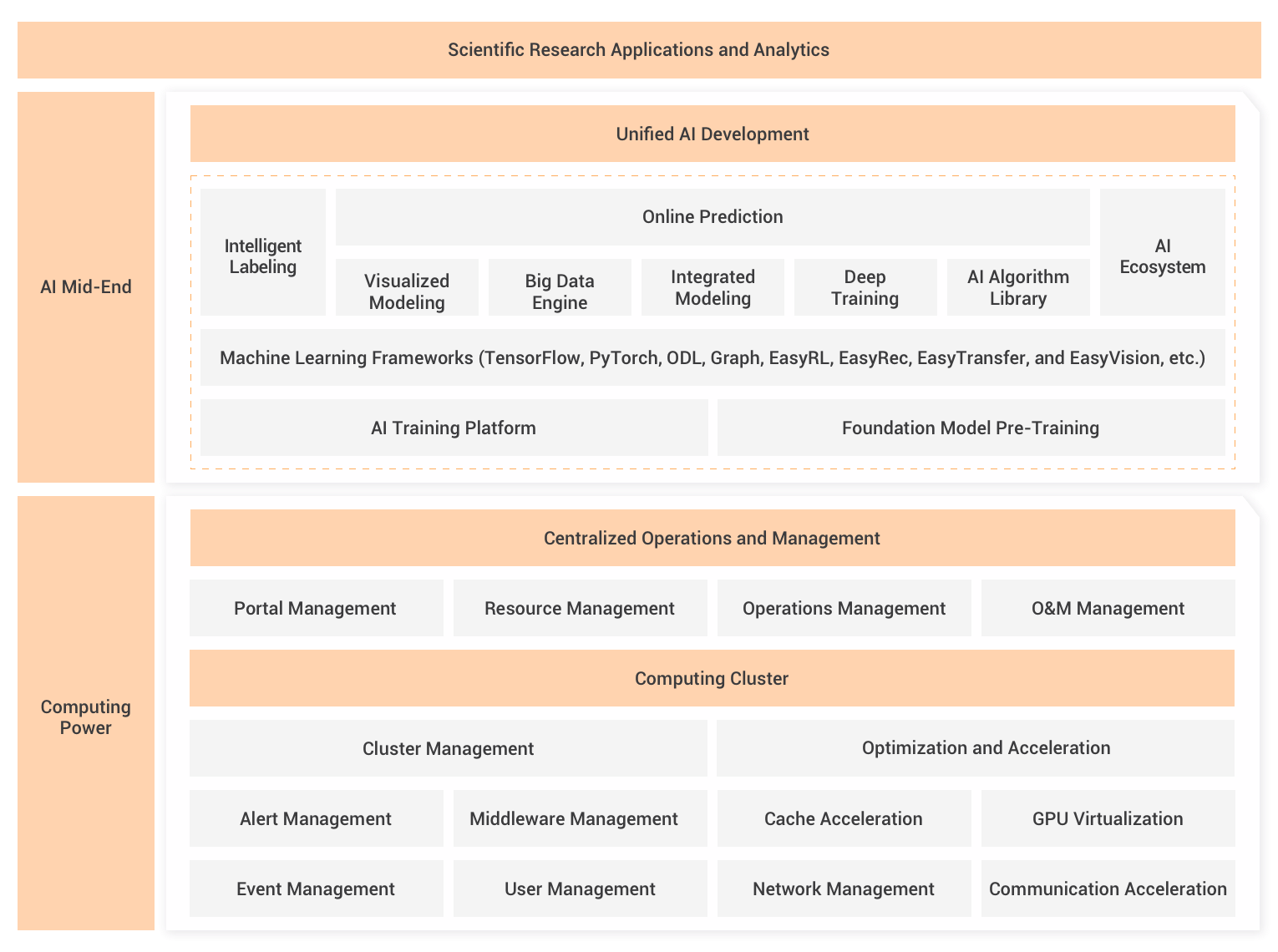

Are you ready to dive into the world of Generative AI (GenAI) and see what Large Language Models (LLMs) can do? Meet Alibaba Cloud Platform for AI (PAI). This platform combines Alibaba Cloud's Artificial Intelligence (AI) capabilities with LangChain and LLMs to support GenAI application development.

PAI offers many features, and covering all of them in one publication would be challenging. Hence, we will publish a series of articles about LLMs on PAI. Let's start with the integration with LangChain.

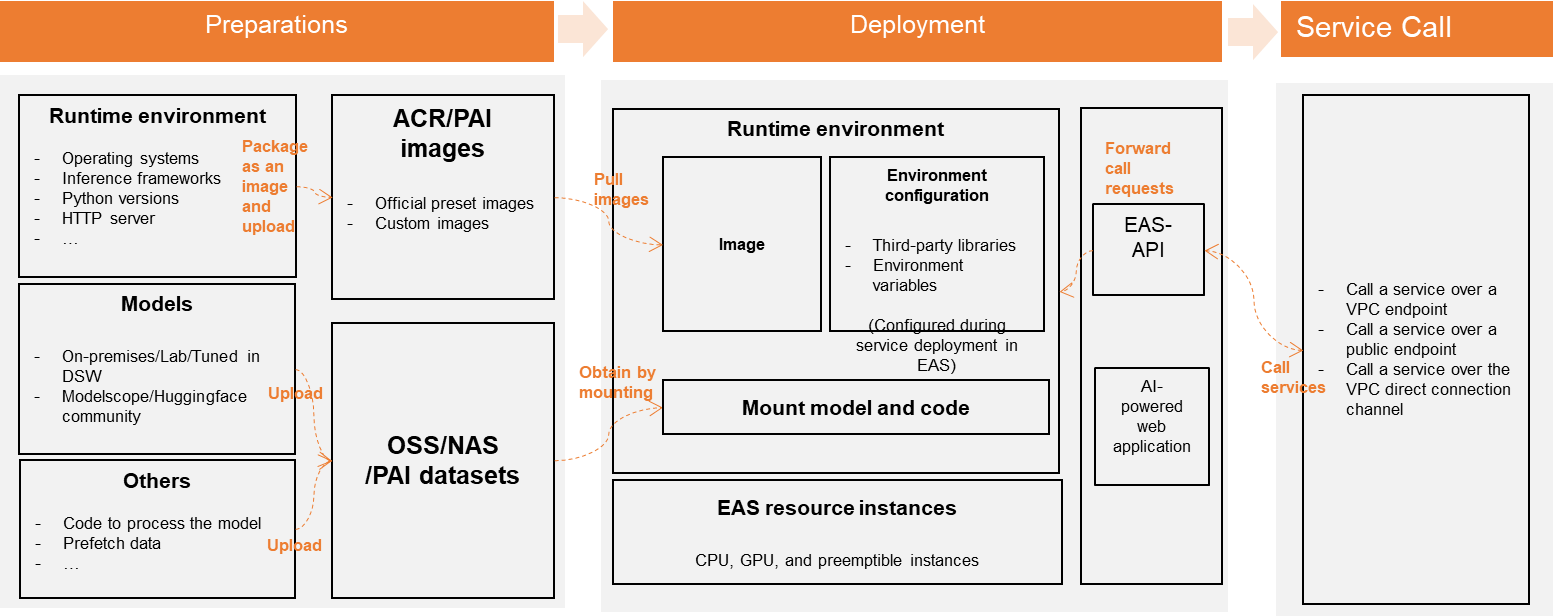

Platform for AI - Elastic Algorithm Service (PAI-EAS) is the PAI service for online model inference. It offers features such as automatic scaling, blue-green deployment, and resource group management. PAI-EAS supports real-time and near-real-time synchronous inference and provides a flexible infrastructure, efficient container scheduling, and simplified model deployment, which helps AI applications achieve high throughput and low latency.

LangChain is a powerful framework for developing applications powered by language models. It enables context-aware applications that can connect a language model to various sources of context, such as prompt instructions, few-shot examples, or content to ground its response in. LangChain allows applications to reason and make decisions based on the provided context, utilizing the capabilities of the language model.

The LangChain framework consists of several components. The LangChain Libraries provide Python and JavaScript libraries with interfaces and integrations for working with language models. These libraries include a runtime for combining components into chains and agents, as well as pre-built implementations of chains and agents for different tasks. The LangChain Templates offer easily deployable reference architectures for a wide range of applications.

By using LangChain, developers can simplify the entire application lifecycle. They can develop applications using LangChain libraries and templates, productionize them by inspecting and monitoring with LangSmith, and deploy the chains as APIs using LangServe.

LangChain offers standard and extendable interfaces and integrations for modules such as Model I/O, Retrieval, and Agents. It provides a wealth of resources, including use cases, walkthroughs, best practices, API references, and a developer's guide. The LangChain community is active and supportive, offering places to ask questions, share feedback, and collaborate on the future of language model-powered applications.

In this tutorial, we will walk through the steps to set up PAI-EAS, deploy a chat model, and run it using different configurations. Here is the documentation link.

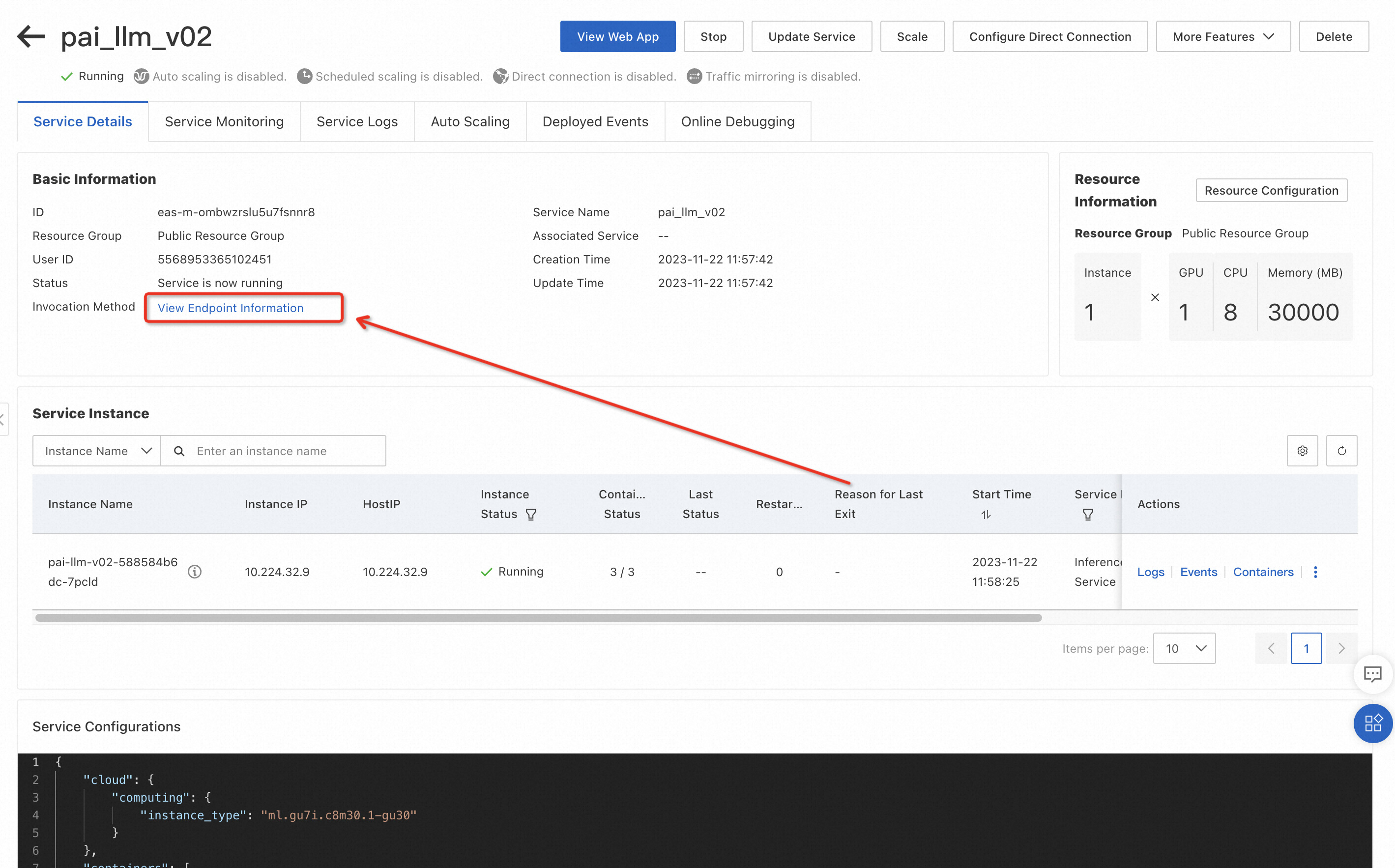

We begin by launching an LLM on PAI-EAS. Here, you can find a tutorial or documentation on how to set up EAS and obtain the PAI-EAS service URL and token. These credentials are essential for connecting to the EAS service.

Suppose you want to try the Qwen model. Feel free to modify the _Command to Run_ value in field number 4. Qwen is an open-source series of models that includes the base language models Qwen-7B and Qwen-14B, as well as the chat models Qwen-7B-Chat and Qwen-14B-Chat.

    python api/api_server.py --port=8000 --model-path=Qwen/Qwen-7B-Chat

Connecting from LangChain requires the PAI-EAS service URL and token to be set as environment variables. You can export these variables in your terminal or set them programmatically in your code. This lets your application authenticate to the EAS service.

Export variables in the terminal:

    export EAS_SERVICE_URL=XXX
    export EAS_SERVICE_TOKEN=XXX

To get these values from PAI-EAS, follow the steps below to find the host URL and token required for the code:

Import the necessary dependencies in your code. This includes the _PaiEasChatEndpoint_ class from the _langchain.chat_models_ module and the _HumanMessage_ class from the _langchain.chat_models.base_ module. These modules provide the foundation for running chat models with PAI-EAS.

    import os

    from langchain.chat_models import PaiEasChatEndpoint
    from langchain.chat_models.base import HumanMessage

    os.environ["EAS_SERVICE_URL"] = "Your_EAS_Service_URL"
    os.environ["EAS_SERVICE_TOKEN"] = "Your_EAS_Service_Token"

    chat = PaiEasChatEndpoint(
        eas_service_url=os.environ["EAS_SERVICE_URL"],
        eas_service_token=os.environ["EAS_SERVICE_TOKEN"],
    )

Create an instance of the PaiEasChatEndpoint class by passing the PAI-EAS service URL and token as parameters. This initializes the connection to the EAS service and prepares it for chat model interactions.
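Because the endpoint depends on both environment variables being set, a small guard before constructing it can surface a missing value early. The following is a minimal sketch using only the standard library; `load_eas_config` is a hypothetical helper for illustration and is not part of LangChain or PAI-EAS.

```python
import os
from typing import Mapping, Tuple

# Names of the environment variables used throughout this tutorial.
REQUIRED_VARS = ("EAS_SERVICE_URL", "EAS_SERVICE_TOKEN")


def load_eas_config(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (url, token) from `env`, raising early if either is missing or blank.

    Hypothetical helper: it only reads and validates the two variables.
    """
    missing = [name for name in REQUIRED_VARS if not env.get(name, "").strip()]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env["EAS_SERVICE_URL"].strip(), env["EAS_SERVICE_TOKEN"].strip()
```

The returned pair could then be passed as `eas_service_url` and `eas_service_token` when creating the endpoint.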

    output = chat([HumanMessage(content="write a funny joke")])
    print("output:", output)

Use the chat model by calling the chat object and passing a list containing a HumanMessage with the desired input content. By default, the chat model uses the default inference settings. However, you can customize the inference parameters by including additional keyword arguments such as _temperature_, _top_p_, and _top_k_. This allows you to tune the model's behavior to your requirements.

    kwargs = {"temperature": 0.8, "top_p": 0.8, "top_k": 5}
    output = chat([HumanMessage(content="write a funny joke")], **kwargs)
    print("output:", output)

Here is the list of other parameters for PaiEasEndpoint:

| Parameter Name | Type | Purpose | Default Value |
|---|---|---|---|
| eas_service_url | str | Service access URL | |
| eas_service_token | str | Service access token | |
| max_new_tokens | Optional[int] | Maximum output token ID length | 512 |
| temperature | Optional[float] | | 0.95 |
| top_p | Optional[float] | | 0.1 |
| top_k | Optional[int] | | 10 |
| stop_sequences | Optional[List[str]] | Output truncation character set | |
| streaming | bool | Whether to use streaming output | False |
| model_kwargs | Optional[dict] | Other parameters for EAS LLM inference | |
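To make the relationship between these defaults and per-call keyword arguments concrete, here is a minimal sketch, assuming that values passed at call time simply take precedence over the defaults. `InferenceParams` and `merge_params` are hypothetical names used for illustration only and do not reflect LangChain's internal implementation; the default values are copied from the table.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class InferenceParams:
    """Hypothetical container for the sampling defaults listed in the table."""

    max_new_tokens: Optional[int] = 512
    temperature: Optional[float] = 0.95
    top_p: Optional[float] = 0.1
    top_k: Optional[int] = 10


def merge_params(defaults: InferenceParams, **overrides: Any) -> Dict[str, Any]:
    """Overlay per-call overrides on the defaults, rejecting unknown names."""
    known = asdict(defaults)
    unknown = set(overrides) - set(known)
    if unknown:
        raise TypeError(f"Unknown inference parameters: {sorted(unknown)}")
    return {**known, **overrides}


# Same overrides as the kwargs example earlier in this tutorial.
params = merge_params(InferenceParams(), temperature=0.8, top_p=0.8, top_k=5)
print(params)
```

In this sketch, `max_new_tokens` keeps its default of 512 because it is not overridden, while the three sampling parameters take the per-call values.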

You can run a stream call to obtain a stream response for more dynamic interactions. Set the streaming parameter to True when calling the stream method. Iterate over the stream outputs to process each response in real-time.

    outputs = chat.stream([HumanMessage(content="hi")], streaming=True)
    for output in outputs:
        print("stream output:", output)

PAI-EAS can also be used as a plain LLM (rather than a chat model) through the PaiEasEndpoint class, either directly or through a prompt template:

    import os

    from langchain.llms.pai_eas_endpoint import PaiEasEndpoint
    from langchain.prompts import PromptTemplate
    from langchain.chains import LLMChain

    # Initialize the EAS service endpoint
    os.environ["EAS_SERVICE_URL"] = "EAS_SERVICE_URL"
    os.environ["EAS_SERVICE_TOKEN"] = "EAS_SERVICE_TOKEN"
    llm = PaiEasEndpoint(
        eas_service_url=os.environ["EAS_SERVICE_URL"],
        eas_service_token=os.environ["EAS_SERVICE_TOKEN"],
    )

    # Accessing EAS LLM services:
    # Access method 1: Direct access
    kwargs = {"temperature": 0.8, "top_p": 0.8, "top_k": 5}
    output = llm("Say foo:", **kwargs)

    # Access method 2: Template-based access
    # 2.1 Prepare the question template
    template = """Question: {question}

    Answer: Let's think step by step."""
    prompt = PromptTemplate(template=template, input_variables=["question"])

    # 2.2 Wrap with LLMChain
    llm_chain = LLMChain(prompt=prompt, llm=llm)

    # 2.3 Access the service
    question = "What NFL team won the Super Bowl in the year Justin Bieber was born?"
    llm_chain.run(question)
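Conceptually, the template step substitutes the question into the prompt text before it is sent to the model. The following is a minimal sketch of that substitution using plain `str.format`; it illustrates the idea only and is not how `PromptTemplate` or `LLMChain` are implemented. `render_prompt` is a hypothetical helper.

```python
TEMPLATE = """Question: {question}

Answer: Let's think step by step."""


def render_prompt(template: str, **variables: str) -> str:
    """Fill the named placeholders in `template`, failing clearly if one is missing."""
    try:
        return template.format(**variables)
    except KeyError as exc:
        raise ValueError(f"Missing template variable: {exc.args[0]}") from exc


prompt_text = render_prompt(
    TEMPLATE,
    question="What NFL team won the Super Bowl in the year Justin Bieber was born?",
)
print(prompt_text)
```

The rendered text is what a chain would pass to the model in place of the raw question.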

Alibaba Cloud PAI brings together a cutting-edge platform, technology, and features for building GenAI applications. Brace yourself for a journey where language becomes a canvas for AI-powered creativity and innovation. Get ready to unlock the potential of LLMs with PAI, your gateway to the future of GenAI!

With Alibaba Cloud PAI-EAS, deploying and running chat models becomes a seamless and efficient process. By using the above steps and information, you can unlock the full potential of chat models and create intelligent conversational experiences. PAI-EAS empowers developers to harness the capabilities of machine learning with ease while providing high throughput, low latency, and comprehensive operations and maintenance features. Start leveraging chat models within your applications today and embark on a journey of exciting AI-driven conversations.

Experience the power of LLMs and LangChain on Alibaba Cloud's PAI-EAS and embark on a journey of accelerated performance and cost savings. Contact Alibaba Cloud to explore the world of generative AI and discover how it can transform your applications and business.


AI's AI March 7, 2024 at 7:34 am

You mentioned the innovative integration of Generative AI with Alibaba Cloud PAI, emphasizing the transformative power in GenAI through the combination of AI, Langchain, and LLM. This strategic move mirrors historical trends where converging technologies have led to groundbreaking advancements. Drawing parallels, we've witnessed how the integration of diverse tools can redefine the landscape of AI capabilities. In this context, I'm curious about the specific challenges or breakthroughs you've encountered during the integration process. Could you share insights into overcoming any unique obstacles, and how these advancements align with the evolving needs of the AI community?

Farruh March 18, 2024 at 2:44 am

During the integration of Generative AI with Alibaba Cloud PAI, we faced challenges such as fine-tuning LLMs to work with Langchain and ensuring real-time response streaming. These were overcome through iterative development, expert collaboration, and the application of advanced cloud technologies. Breakthroughs included the ability to enhance AI response quality with Knowledge Base Systems and the creation of customizable AI models through template-based access. These advancements meet the AI community's needs for accessible, context-aware, and interactive AI tools, aligning with the demand for more sophisticated and user-friendly AI applications.