By Yun Hao

In a Kubernetes cluster, Ingress is the access point through which services inside the cluster are exposed to the outside, and it carries almost all of the traffic that reaches those services. Nginx Ingress Controller is an important sub-project in the Kubernetes community. It relies on Nginx, the high-performance load balancing software, and automatically converts Kubernetes Ingress resource objects into Nginx configuration rules in real time to provide the expected access entry.

As the number of microservices deployed in a Kubernetes cluster grows, the exposed routing rules become more complex and the backend endpoints of services change more often. As a result, the Nginx configuration file in the Nginx Ingress Controller component changes more often as well. However, any change to the Nginx configuration takes effect only after Nginx is reloaded. This is acceptable when changes are infrequent, but it causes frequent reloading of Nginx when changes are frequent.

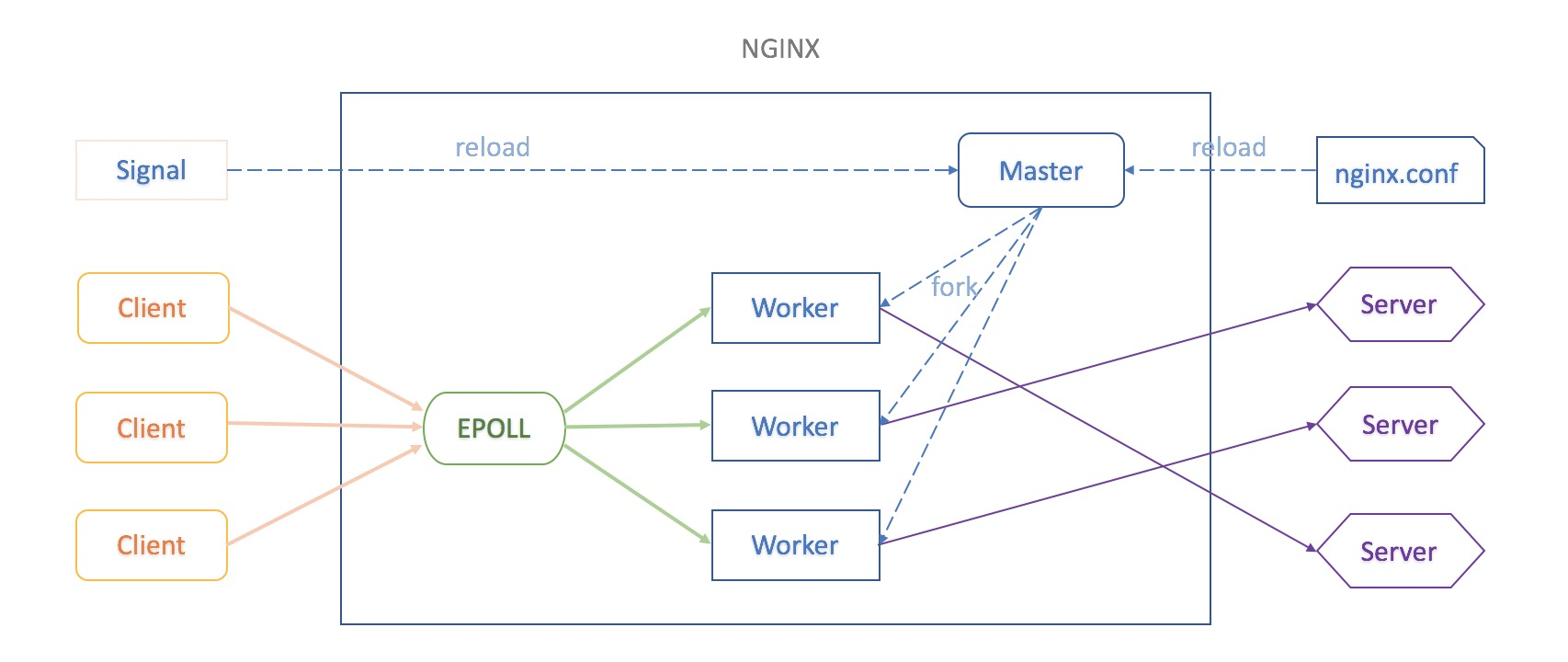

The problems caused by frequent reloading of Nginx are a well-known topic. They stem mainly from the original architecture design of Nginx:

Generally, on a Linux server, Nginx is configured to run in multi-process mode with epoll. After the Nginx configuration file is modified, the new configuration rules must be loaded with the following command:

    nginx -s reload

After the Nginx Master process receives the reload signal, it reloads the new Nginx configuration file from the specified path and verifies the validity of the configuration rules. If the configuration file is valid, the Master forks the number of new Nginx Worker processes given by the worker_processes value in the new configuration file. The newly forked child processes fully inherit the parent process's in-memory ngx_cycle data (including the newly parsed Nginx configuration rules). At the same time, each listen socket FD in the configuration is registered with the kernel's epoll event listening, so the new Nginx Worker processes can receive and process requests from clients.

Meanwhile, the Nginx Master process sends a QUIT signal to notify the old Nginx Worker processes to exit gracefully. When an old Nginx Worker process receives the QUIT signal, it removes the listening events it previously registered with epoll and stops accepting new client requests. It then sets a timer based on the configured worker_shutdown_timeout value and continues to process the client requests it has already received. If those requests are completed before worker_shutdown_timeout expires, the process exits on its own. Otherwise, the process is forcibly killed, and the responses to the unfinished client requests are abnormal.
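The drain behavior of an old Worker process can be sketched as a small simulation. This is only an illustrative model, not Nginx code: `drain_old_worker`, `DrainResult`, and the request names and durations are hypothetical, and it assumes that in-flight requests progress independently, so each one finishes if its remaining time fits within the timeout.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DrainResult:
    completed: List[str]  # requests that finished before the timeout expired
    aborted: List[str]    # requests cut off when the worker was killed


def drain_old_worker(in_flight: Dict[str, float], shutdown_timeout: float) -> DrainResult:
    """Model an old worker after QUIT: it accepts nothing new and drains the rest.

    in_flight maps a (hypothetical) request id to the seconds it still needs.
    """
    completed: List[str] = []
    aborted: List[str] = []
    for request_id, remaining_seconds in in_flight.items():
        if remaining_seconds <= shutdown_timeout:
            completed.append(request_id)
        else:
            # The timer fires first, so the worker is killed mid-request.
            aborted.append(request_id)
    return DrainResult(completed, aborted)


result = drain_old_worker(
    {"short-api-call": 0.2, "file-download": 8.0, "websocket": 3600.0},
    shutdown_timeout=10.0,
)
print("completed:", result.completed)
print("aborted:", result.aborted)
```

In this model, long-lived connections are the ones most likely to be cut off, and every reload repeats the same risk for whatever requests happen to be in flight at that moment.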

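Therefore, in scenarios with a high frequency of change, frequent reloading of Nginx can cause obvious request access problems, such as abnormal responses for requests that are still in progress when an old Worker process is forcibly killed.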

To mitigate the impact of frequent reloading of Nginx, we need to load the Nginx configuration rules through dynamic update, that is, update the rules already loaded into memory in real time without forking new Nginx Worker processes.

First, let's take a look at the Nginx configuration file style, which mainly includes the following sections:

# 1. Main configuration

daemon off;

worker_processes 4;

events {

# 2. Event configuration

multi_accept on;

worker_connections 1024;

use epoll;

}

http {

# 3. Http main configuration

access_log /var/log/nginx/access.log;

error_log /var/log/nginx/error.log;

upstream {

# 4. Upstream configuration

server 0.0.0.1;

}

server {

# 5. Server configuration

server_name _ ;

listen 80 default_server;

location / {

# 6. Location configuration

proxy_pass http://upstream_balancer;

}

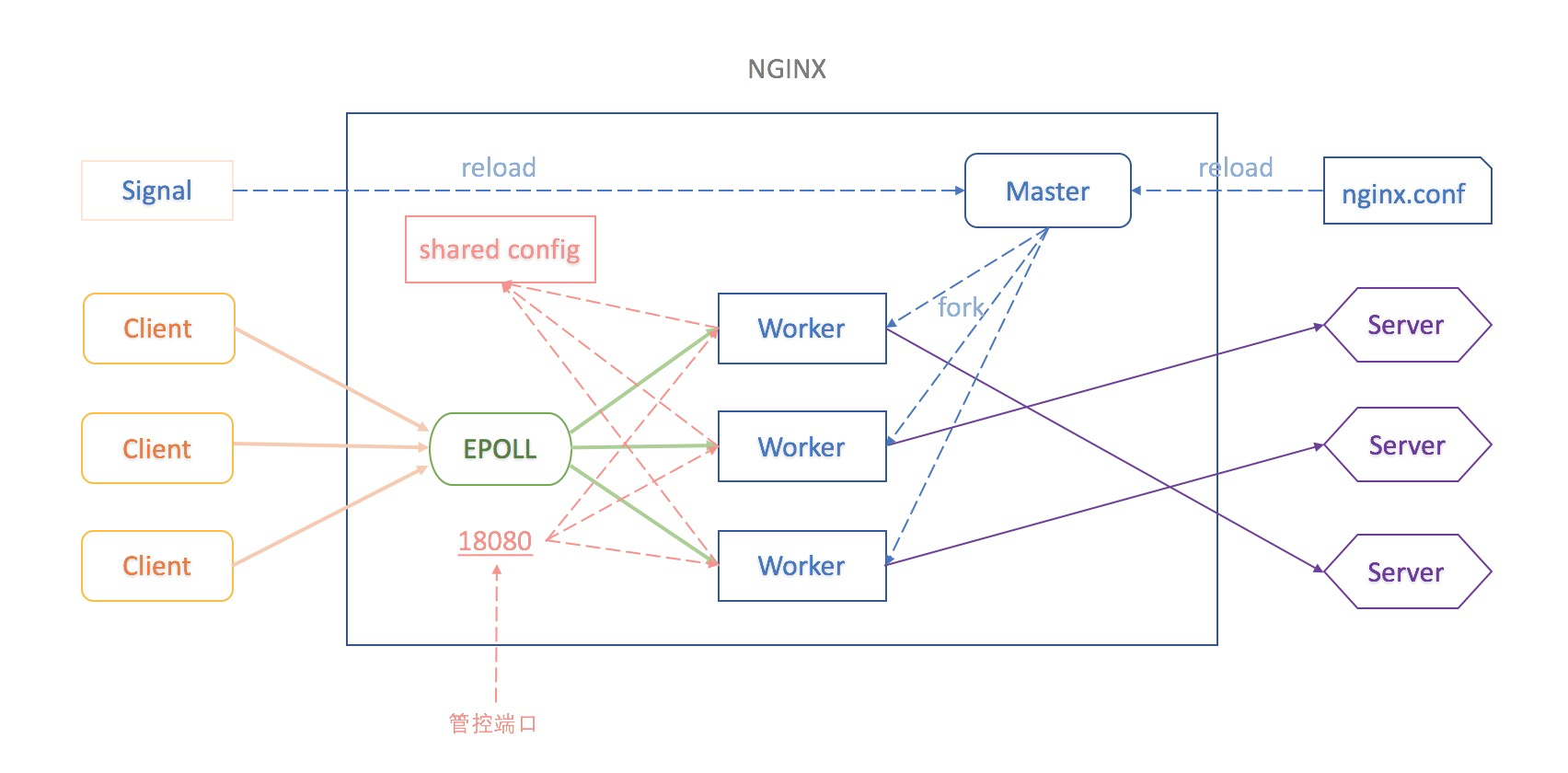

}In a Kubernetes cluster, an Ingress resource object is parsed and mapped to the configuration rules in the HTTP Main Block, Server Block, Upstream Block, and Location Block sections of Nginx. Therefore, we can maintain these frequently changed configurations of these sections in memory in the Shared Memory mode, and expose the control port inside the Ingress Controller, thus managing the configuration of Nginx routing rules in real time through API:

When the Kubernetes Ingress Controller detects changes to Ingress and associated resources in the cluster, it pushes the latest Nginx configuration rules to the unified shared memory configuration through the internal API instead of reloading Nginx to make the new configuration take effect. When Nginx then processes any newly received client request, it performs rule matching and route forwarding based on the latest configuration in shared memory.
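The idea of updating in-memory rules instead of reloading can be sketched in a few lines. This is a plain Python stand-in under stated assumptions, not the controller's implementation: the class `SharedBackendTable`, the upstream name `default-web-80`, and the endpoint addresses are all hypothetical, and a lock-protected dictionary takes the place of Nginx shared memory.

```python
import threading
from typing import Dict, List


class SharedBackendTable:
    """Hypothetical in-memory table mapping an upstream name to its endpoints."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._backends: Dict[str, List[str]] = {}

    def update(self, upstream: str, endpoints: List[str]) -> None:
        # Called when the controller sees an endpoint change; nothing is restarted.
        with self._lock:
            self._backends[upstream] = list(endpoints)

    def pick(self, upstream: str, request_number: int) -> str:
        # Called for each request; always reads the latest endpoint list.
        with self._lock:
            endpoints = self._backends.get(upstream, [])
            if not endpoints:
                raise LookupError(f"no endpoints for upstream {upstream!r}")
            return endpoints[request_number % len(endpoints)]


table = SharedBackendTable()
table.update("default-web-80", ["10.0.0.1:8080", "10.0.0.2:8080"])
first = table.pick("default-web-80", 0)

# A pod is replaced: the new list is pushed instead of triggering a reload.
table.update("default-web-80", ["10.0.0.3:8080"])
second = table.pick("default-web-80", 1)

print(first, second)
```

The request path only ever reads the current table, so a change becomes visible to the very next request without creating new worker processes.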

1. Currently, the latest version of the Nginx Ingress Controller component in Alibaba Cloud Container Service for Kubernetes clusters enables the dynamic update of Upstream by default and supports phased release and blue-green release of application services. For detailed configuration instructions, see Ingress configurations.

You can run the following command to view the configuration list of Nginx Upstream in the shared memory:

    kubectl -n kube-system exec -it <NGINX-INGRESS-CONTROLLER-POD-NAME> -- curl http://127.0.0.1:18080/configuration/backends

2. In addition, the dynamic update of HTTPS certificates is also supported. It can be enabled by modifying the following parameter configuration of the nginx-ingress-controller deployment:

    - args:
      - /nginx-ingress-controller
      - --configmap=$(POD_NAMESPACE)/nginx-configuration
      - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
      - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
      - --annotations-prefix=nginx.ingress.kubernetes.io
      - --publish-service=$(POD_NAMESPACE)/nginx-ingress-lb
      - --enable-dynamic-certificates=true ### Add this configuration
      - --v=2

After the dynamic update of HTTPS certificates is enabled, the TLS certificates of Ingress are all maintained in the Nginx shared memory. The list of certificates configured in the current shared memory can be viewed by running the following command:

    kubectl -n kube-system exec -it <NGINX-INGRESS-CONTROLLER-POD-NAME> -- curl http://127.0.0.1:18080/configuration/certs

3. Further, the dynamic update of Nginx Server and Location configurations is also supported. It can be enabled by modifying the following parameter configuration of the nginx-ingress-controller deployment:

    - args:
      - /nginx-ingress-controller
      - --configmap=$(POD_NAMESPACE)/nginx-configuration
      - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
      - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
      - --annotations-prefix=nginx.ingress.kubernetes.io
      - --publish-service=$(POD_NAMESPACE)/nginx-ingress-lb
      - --enable-dynamic-certificates=true ### Add this configuration
      - --enable-dynamic-servers=true ### Add this configuration together with enable-dynamic-certificates
      - --v=2

Similarly, when the dynamic update of the Nginx Ingress Controller Server is enabled, the configurations of all Nginx Servers and Locations are maintained in the shared memory. The Server configuration list in the current shared memory can be viewed by running the following command:

    kubectl -n kube-system exec -it <NGINX-INGRESS-CONTROLLER-POD-NAME> -- curl http://127.0.0.1:18080/configuration/servers

Note: When the dynamic update of the Server is enabled, some Ingress annotation configurations are not yet supported and are still being optimized. In the meantime, you can configure the corresponding settings directly through the ConfigMap.
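Because the three inspection commands in this article differ only in the final path, a small helper can assemble them. The following is a convenience sketch, not part of the controller: the function name `build_inspect_command` and the `CONFIGURATION_PATHS` table are hypothetical, while the namespace, port, and paths are copied from the commands in this article.

```python
from typing import List

# Paths taken from the kubectl commands shown in this article.
CONFIGURATION_PATHS = {
    "backends": "/configuration/backends",
    "certs": "/configuration/certs",
    "servers": "/configuration/servers",
}


def build_inspect_command(pod_name: str, kind: str, namespace: str = "kube-system") -> List[str]:
    """Return the kubectl argv that dumps one shared-memory configuration list."""
    if kind not in CONFIGURATION_PATHS:
        raise ValueError(f"unknown configuration kind: {kind!r}")
    url = f"http://127.0.0.1:18080{CONFIGURATION_PATHS[kind]}"
    return ["kubectl", "-n", namespace, "exec", "-it", pod_name, "--", "curl", url]


command = build_inspect_command("<NGINX-INGRESS-CONTROLLER-POD-NAME>", "certs")
print(" ".join(command))
```

The returned list can be passed to `subprocess.run` once the placeholder is replaced with a real pod name. The certs and servers lists are available only after the corresponding dynamic update flags are enabled.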
