By Xixian from Alibaba Cloud Traffic Product Team

In the era of intelligent technology, intelligent applications keep emerging in various fields, most of them aimed at improving efficiency. For the various middle and back platform business scenarios, the Alibaba Cloud Traffic Product Frontend Team has proposed an intelligent frontend code generation scheme based on image recognition technology.

As the platform's capabilities improved during this exploration, code generated by stacking React components in a render function could no longer meet our needs. It became necessary to generate Dva modules that match common development habits. This article introduces the team's overall approach to design-to-code (D2C) and its general solutions.

Dumbo is an intelligent development platform that uses image recognition algorithms to generate frontend code with one click. Now, it has been implemented in multiple Alibaba Cloud consoles and middle and back platform projects.

The basic procedure of Dumbo is to generate, from a picture, a JSON description (Schema) that conforms to the agreed specifications using intelligent technologies. The Schema is then fine-tuned and corrected manually on the visual building platform, and the React module code is generated from it. For pages with less demanding design requirements, code can be generated by dragging and dropping components directly on the building platform.

Some people may wonder why we still need to generate code when we already have a building platform that provides a Runtime environment to render the JSON data. For middle and back platform applications, a static image describes only a small part of the requirements, and complex interactive scenarios and non-standard UIs are unavoidable. Engineers must be able to meet these requirements without making the platform overly complicated, so secondary development on intelligently generated code is the best option given the team's currently limited human resources.

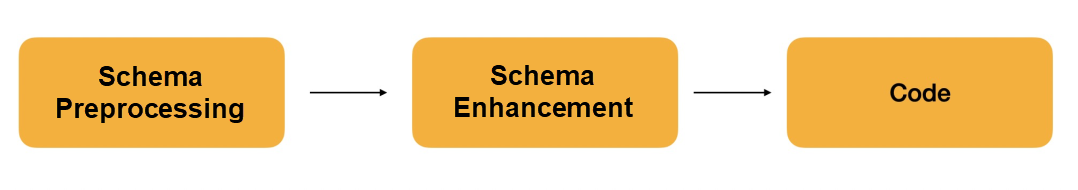

Before Dva modules were supported, generating code meant outputting index.jsx from the limited Schema information. In the early stage, code generation also required us to manually create an AST node for each Schema node, build the entire AST from the Schema, and obtain the final code from it. However, manipulating the AST is costly, and the AST is almost unreadable. For scenarios that needed optimization, the AST proved too inflexible, so we abandoned it. Instead, we adopted direct string concatenation, drawing on the group's experience in code generation. To minimize manual intervention, the overall process of code generation can be described like this:

In this process, Schema preprocessing makes preliminary supplements and adjustments to the result of intelligent recognition. After preprocessing, users can adjust, correct, and supplement the existing Schema on the Dumbo platform. Next comes Schema enhancement, which mainly adjusts the style of the generated code. The enhanced Schema contains all the information about the project and is assembled into code.

The following parts describe the implementation process of each step.

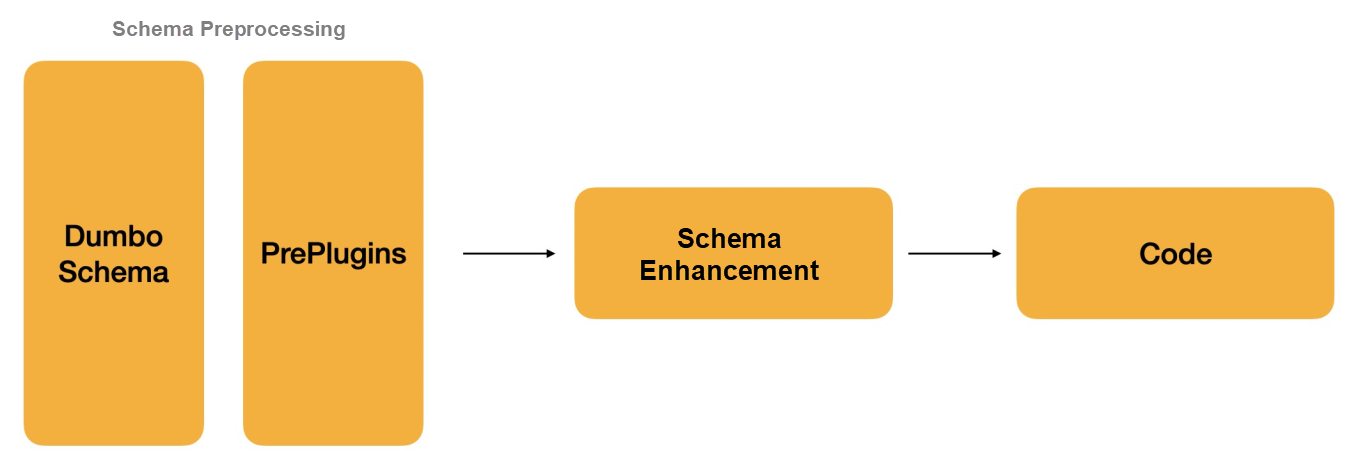

The first part is about preprocessing. Image recognition returns an array of components, each with a name and a position. Through a series of positional processing and nesting steps, we obtain a very basic Schema tree that conforms to the standard middle and back platform protocol specification of the Alibaba economy. In the following figure, it is named Dumbo Schema. Because image recognition cannot infer interactions or actions, the Schema tree contains very little information at this point. To make the final generated code as complete as possible, preprocessing at this stage adds interactive actions for common functions.

Let's take a Table as an example to briefly describe what PrePlugins does at this stage. A loading attribute is added to the Table node, and its value is bound to the corresponding state value. Based on the information that already exists in the Schema, the onSort and rowSelection attributes are added to the Table node, with simplified sample functions as their values. In addition, the fetchTableData method is added to Schema.methods to keep the Schema complete, and the isLoadingTableData interaction is implemented. Finally, the fetchTableData method is called in Schema.lifeCycles.componentDidMount.
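The positional nesting step can be pictured with a small sketch. It is not Dumbo's actual algorithm: it assumes each recognized component is a hypothetical `{ name, x, y, width, height }` record and simply makes the smallest enclosing box the parent, then orders siblings top to bottom and left to right.

```javascript
// Assumed input: [{ name, x, y, width, height }, ...] from image recognition.
function area(box) {
  return box.width * box.height;
}

// True when `outer` fully encloses `inner` and is strictly larger.
function contains(outer, inner) {
  return (
    area(outer) > area(inner) &&
    outer.x <= inner.x &&
    outer.y <= inner.y &&
    outer.x + outer.width >= inner.x + inner.width &&
    outer.y + outer.height >= inner.y + inner.height
  );
}

function buildSchemaTree(components) {
  const nodes = components.map((c) => ({ componentName: c.name, box: c, children: [] }));
  const roots = [];
  for (const node of nodes) {
    // The parent is the smallest box that encloses this node.
    const parent = nodes
      .filter((other) => contains(other.box, node.box))
      .sort((a, b) => area(a.box) - area(b.box))[0];
    (parent ? parent.children : roots).push(node);
  }
  // Order siblings by vertical position, then horizontal position.
  const byPosition = (a, b) => a.box.y - b.box.y || a.box.x - b.box.x;
  const finalize = (node) => ({
    componentName: node.componentName,
    children: node.children.sort(byPosition).map(finalize),
  });
  return roots.sort(byPosition).map(finalize);
}
```

A real implementation would also need to tolerate overlapping or slightly misaligned boxes, which this sketch ignores.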
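As a rough illustration of the Table preprocessing described above, the following sketch walks a Schema and fills in the defaults. The Schema shape (componentName, props, children, state, methods, lifeCycles) and the use of plain strings for expressions and functions are assumptions made for the example, not the real Dumbo Schema format.

```javascript
// Depth-first walk over a Schema node and its children.
function visit(node, fn) {
  fn(node);
  (node.children || []).forEach((child) => visit(child, fn));
}

// Hypothetical pre-plugin: adds common Table interactions to the Schema.
function tablePrePlugin(schema) {
  const next = JSON.parse(JSON.stringify(schema)); // work on a copy
  next.state = next.state || {};
  next.methods = next.methods || {};
  next.lifeCycles = next.lifeCycles || {};

  let hasTable = false;
  visit(next, (node) => {
    if (node.componentName !== 'Table') return;
    hasTable = true;
    node.props = {
      // Defaults come first so props already present in the Schema win.
      loading: 'this.state.isLoadingTableData',
      onSort: 'function (dataIndex, order) { /* sample handler */ }',
      rowSelection: '{ onChange: function (selectedKeys) { /* sample handler */ } }',
      ...node.props,
    };
  });

  if (hasTable) {
    next.state.isLoadingTableData = false;
    next.methods.fetchTableData =
      'function () { this.setState({ isLoadingTableData: true }); /* request goes here */ }';
    // This sketch overwrites any existing componentDidMount.
    next.lifeCycles.componentDidMount = 'function () { this.fetchTableData(); }';
  }
  return next;
}
```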

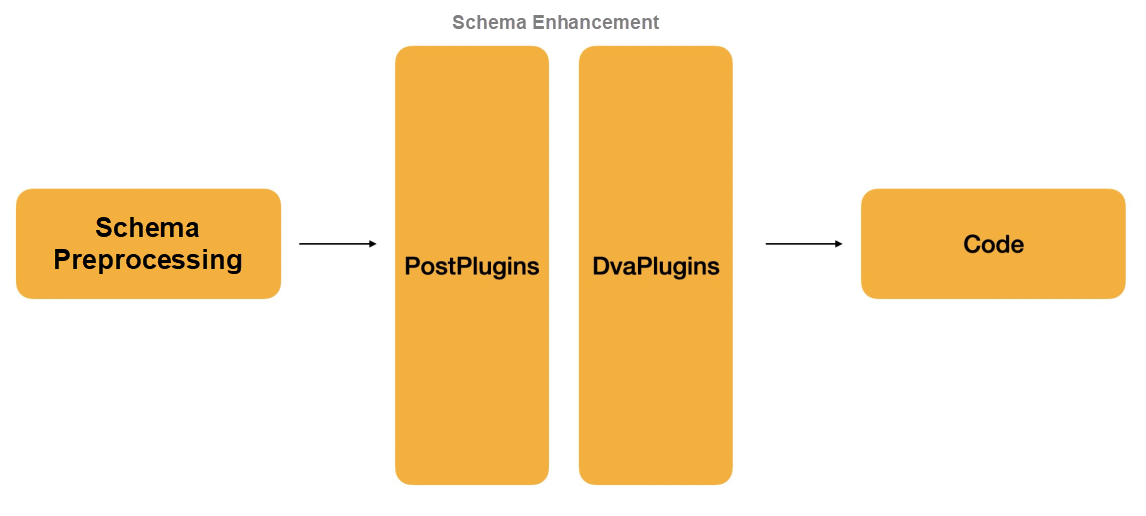

Before the code is generated, the initially generated Schema is placed on the canvas so that users can make a series of adjustments. Several problems remain between the adjusted Schema and the generated code, mainly revising the code style and supporting Dva. This enhancement is the last stage of Schema manipulation. The enhanced Schema is then traversed to generate the corresponding code Chunks, which are spliced into the complete code.

Let's take the Table as an example again and briefly describe what PostPlugins does at this stage. With the default output format, the Table and each Table.Column are output directly as written, which does not match normal coding style. All the child elements under the Table therefore need to be extracted into an array and rendered in a loop with map.
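The Table rewrite described above can be sketched as follows. The node shape and the emitted code are illustrative assumptions. The idea is only that the Table.Column children are lifted into a columns array and rendered through map instead of being written out one by one.

```javascript
// Hypothetical post-plugin: turns Table.Column children into a columns
// array plus a map loop, and returns the resulting code as a string.
function tablePostPlugin(tableNode) {
  const columns = (tableNode.children || [])
    .filter((child) => child.componentName === 'Table.Column')
    .map((child) => child.props || {});

  return [
    `const columns = ${JSON.stringify(columns, null, 2)};`,
    '',
    '<Table>',
    '  {columns.map((col) => (',
    '    <Table.Column key={col.dataIndex} {...col} />',
    '  ))}',
    '</Table>',
  ].join('\n');
}

// Example input: a Table with two columns.
const tableCode = tablePostPlugin({
  componentName: 'Table',
  children: [
    { componentName: 'Table.Column', props: { title: 'Name', dataIndex: 'name' } },
    { componentName: 'Table.Column', props: { title: 'Age', dataIndex: 'age' } },
  ],
});
console.log(tableCode);
```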

In principle, DvaPlugins should also be a type of PostPlugins. Due to its particularity, it is described separately here. Note: The Dva model design has certain deficiencies at this time.

In principle, actions and sagas exist to carry most of the business logic so that the view layer stays simple. However, the canvas currently has limited control over the context of the entire frontend application. Therefore, even after the Dva module is generated, the store is still generated separately for each single page, and it only handles asynchronous requests with little business logic. Because logic in Dumbo is so diverse, the model has to be generated in this style for now.

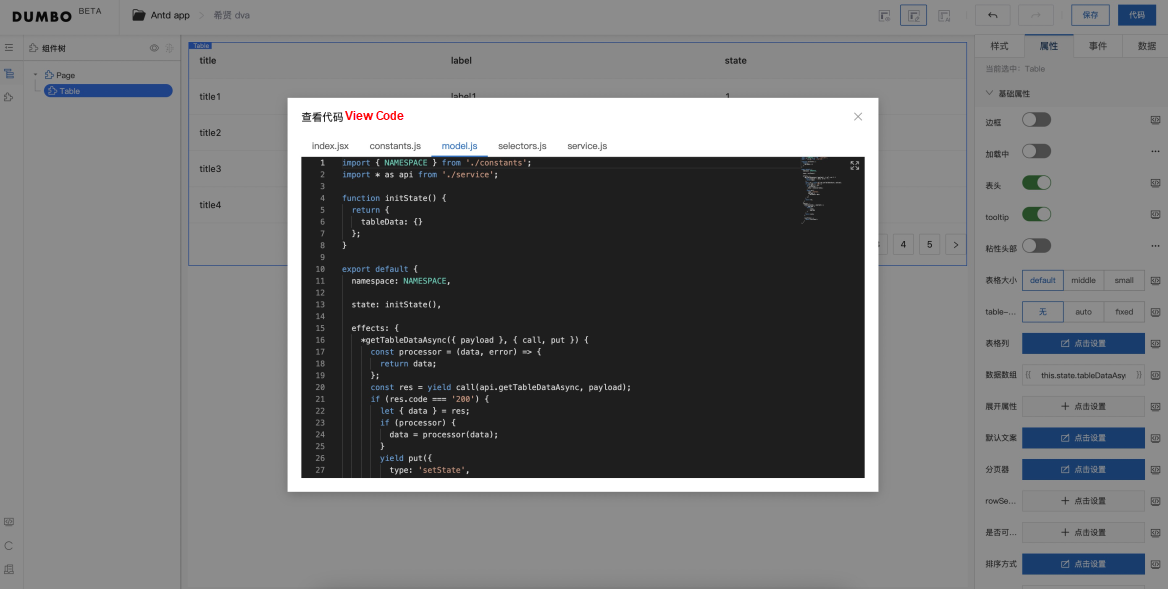

Take the team's current project as an example. A complete Dva module includes five files: actions.js, index.js, model.js, selectors.js, and service.js. The content of actions.js and selectors.js is relatively fixed. The index.js file holds the body of the page. The service.js file contains the set of requests that the page initiates. The model.js file is the top priority in Dva generation.

Since Dva mainly deals with asynchronous data, all interactive data requests can be configured through the dataSource attribute in the group specification Schema. In the logic of code generation, to better output the side-effect functions in the Dva module and initialize the State in the model, we agree on the data source ID format xxxTypeAsync. Here, xxx is the user-defined data name in lower camel case, Type is the data type, and Async is a fixed identifier. In the generated code, everything in the data field returned by the API is mounted to the state. For side-effect functions that do not need to mount data to the state, start the xxx part of xxxTypeAsync with set.
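To make the naming convention concrete, here is a minimal sketch that parses a data source ID and derives a Dva-style model from it. The regular expression, the `save` reducer, the `null` initial state, and the exact effect name are assumptions for illustration. The article only fixes the xxxTypeAsync format, the `set` prefix rule, and that the data field is mounted to the state.

```javascript
// Assumed reading of xxxTypeAsync: lower camel case name, capitalized Type, fixed "Async".
const ID_PATTERN = /^([a-z][A-Za-z0-9]*?)([A-Z][a-z0-9]*)Async$/;

function parseDataSourceId(id) {
  const match = ID_PATTERN.exec(id);
  if (!match) {
    throw new Error(`Data source ID "${id}" does not follow xxxTypeAsync`);
  }
  // IDs starting with "set" are side effects that do not mount data to the state.
  return { id, name: match[1], type: match[2], mountToState: !/^set(\w+)/.test(id) };
}

// Builds an object shaped like a Dva model (namespace, state, effects, reducers).
// `services` maps each data source ID to a hypothetical request function.
function buildModel(namespace, ids, services) {
  const model = {
    namespace,
    state: {},
    effects: {},
    reducers: {
      save(state, { payload }) {
        return { ...state, ...payload };
      },
    },
  };
  for (const { id, mountToState } of ids.map(parseDataSourceId)) {
    // The Type part could select a better default; null keeps the sketch neutral.
    if (mountToState) model.state[id] = null;
    // Effect name casing is an assumption.
    model.effects[`get${id}`] = function* ({ payload }, { call, put }) {
      const res = yield call(services[id], payload);
      if (mountToState) {
        yield put({ type: 'save', payload: { [id]: res.data } });
      }
      return res;
    };
  }
  return model;
}
```

With this reading, tableDataAsync parses into the name table and the type Data, while an ID such as setUserObjectAsync produces an effect that performs the request without touching the state.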

The basic principle of Dva module's generation and design is to ensure the normal rendering of the canvas. In other words, all configurations must be effective and run properly on the canvas so the exported code can run properly.

So far, the Schema contains all the information about the existing content on the canvas. Here, we need to traverse all Schema nodes and splice each node to generate a Chunk object. Each Chunk object contains at least three attributes: name, content, and linkAfter. They indicate, respectively, the name of the current Chunk, the code segment represented by the current node, and the position of the Chunk. The linkAfter attribute is an array of the names of other Chunk objects, indicating that the current Chunk's content must appear after theirs. This attribute controls the order in which Chunks are output. Splicing the content is mainly a recursive process over the nodes. Each node can produce its own code fragment independently, without context information. What remains is handling the boundary conditions.

Splicing is a process of traversing the chunks multiple times. On each pass, all chunks whose linkAfter has a length of 0 are found, their names are recorded, and their content is concatenated onto the result string. Then, those names are deleted from the linkAfter of all other chunks. Splicing finishes once every chunk's linkAfter has become empty and its content has been appended in sequence. This is code generation.
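The recursive splicing of a node into a code fragment might look like the sketch below. The node shape (componentName, props, children) and the prop serialization rules are simplifying assumptions. Real Schemas also carry expressions, functions, and the boundary cases mentioned above.

```javascript
// Serializes props: strings become quoted attributes, everything else a JSX expression.
function propsToString(props = {}) {
  return Object.entries(props)
    .map(([key, value]) =>
      typeof value === 'string'
        ? ` ${key}="${value}"`
        : ` ${key}={${JSON.stringify(value)}}`
    )
    .join('');
}

// Each node renders itself and recurses into its children; no outside context is needed.
function nodeToJsx(node, depth = 0) {
  const indent = '  '.repeat(depth);
  if (typeof node === 'string') return `${indent}${node}`; // plain text child
  const open = `${indent}<${node.componentName}${propsToString(node.props)}`;
  const children = node.children || [];
  if (children.length === 0) return `${open} />`;
  const inner = children.map((child) => nodeToJsx(child, depth + 1)).join('\n');
  return `${open}>\n${inner}\n${indent}</${node.componentName}>`;
}

// Wraps the fragment in a Chunk with the three attributes named in the text.
function nodeToChunk(node, linkAfter = []) {
  return { name: node.componentName, content: nodeToJsx(node), linkAfter };
}
```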
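The multi-pass splicing just described is essentially a topological ordering over linkAfter. A minimal sketch follows, assuming Chunk names are unique and adding a guard (not mentioned in the article) for dependencies that can never be resolved:

```javascript
// Concatenates chunk contents so that each chunk appears after every chunk
// named in its linkAfter array.
function spliceChunks(chunks) {
  // Copy linkAfter so the caller's chunks are not mutated.
  let remaining = chunks.map((c) => ({ ...c, linkAfter: [...c.linkAfter] }));
  let result = '';

  while (remaining.length > 0) {
    // Chunks with an empty linkAfter have no unmet dependencies.
    const ready = remaining.filter((c) => c.linkAfter.length === 0);
    if (ready.length === 0) {
      throw new Error('Cyclic or unknown names in linkAfter');
    }
    const readyNames = new Set(ready.map((c) => c.name));
    result += ready.map((c) => c.content).join('');

    // Drop the emitted chunks and remove their names from everyone else's linkAfter.
    remaining = remaining.filter((c) => c.linkAfter.length > 0);
    for (const c of remaining) {
      c.linkAfter = c.linkAfter.filter((name) => !readyNames.has(name));
    }
  }
  return result;
}

// Example: the chunks are listed out of order on purpose.
const spliced = spliceChunks([
  { name: 'render', content: 'R;', linkAfter: ['imports', 'classStart'] },
  { name: 'classStart', content: 'C;', linkAfter: ['imports'] },
  { name: 'imports', content: 'I;', linkAfter: [] },
]);
```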

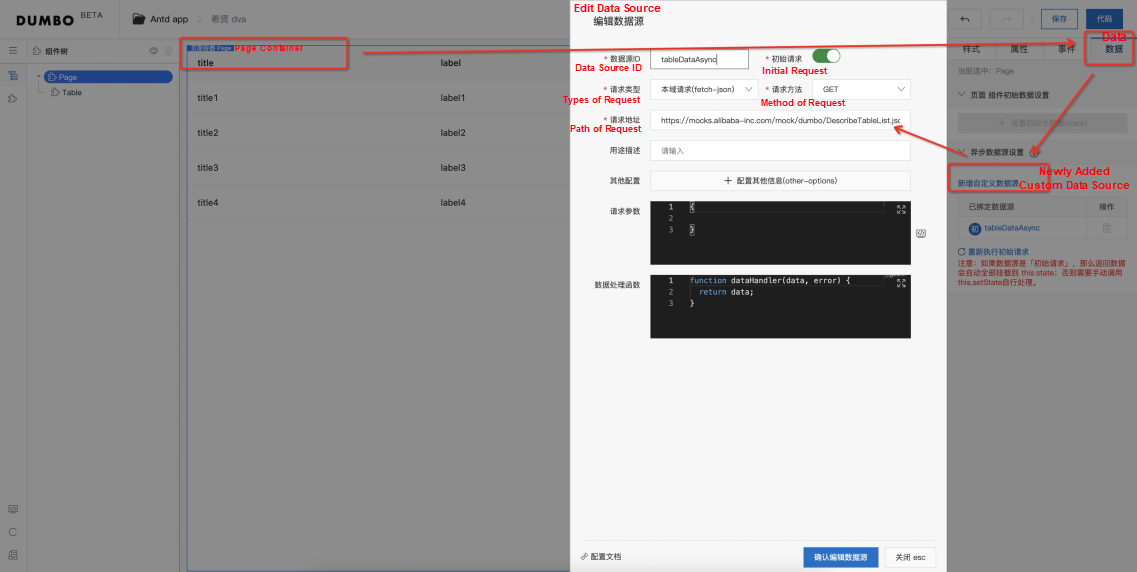

Data can only be configured on the outermost Page node. Therefore, click the Page container on the outermost layer of the canvas, click "data" in the right-side configuration plug-in, and then click "Add custom data source" to open the data configuration form.

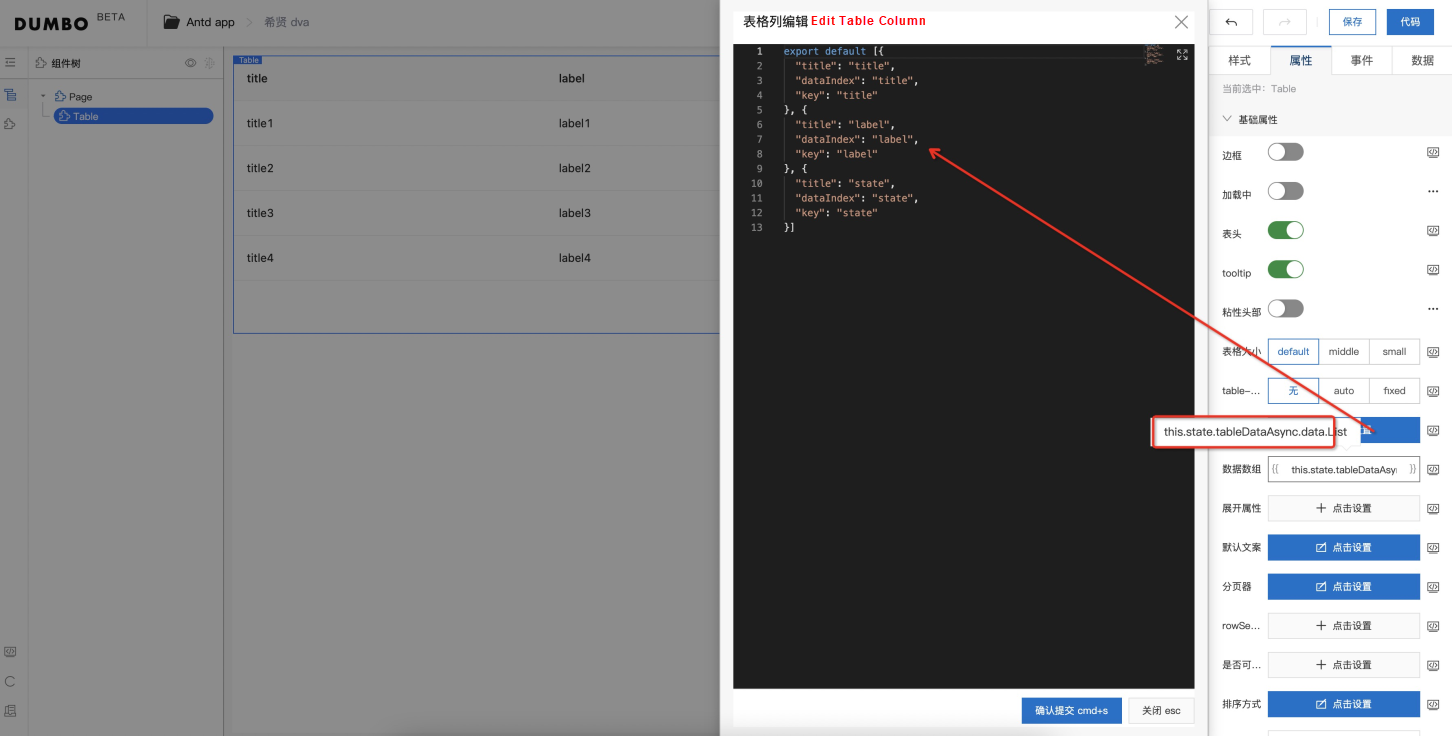

After that, drag and drop a regular antd Table onto the canvas, edit the "Table column configuration" on the right side, and adjust the column configuration of the Table according to the fields of the interface. For the data array, select "Use variable" and fill in this.state.tableDataAsync.data.List. This field looks long, but tableDataAsync is simply the data source ID created above, and data.List is the path of the field within the data source.

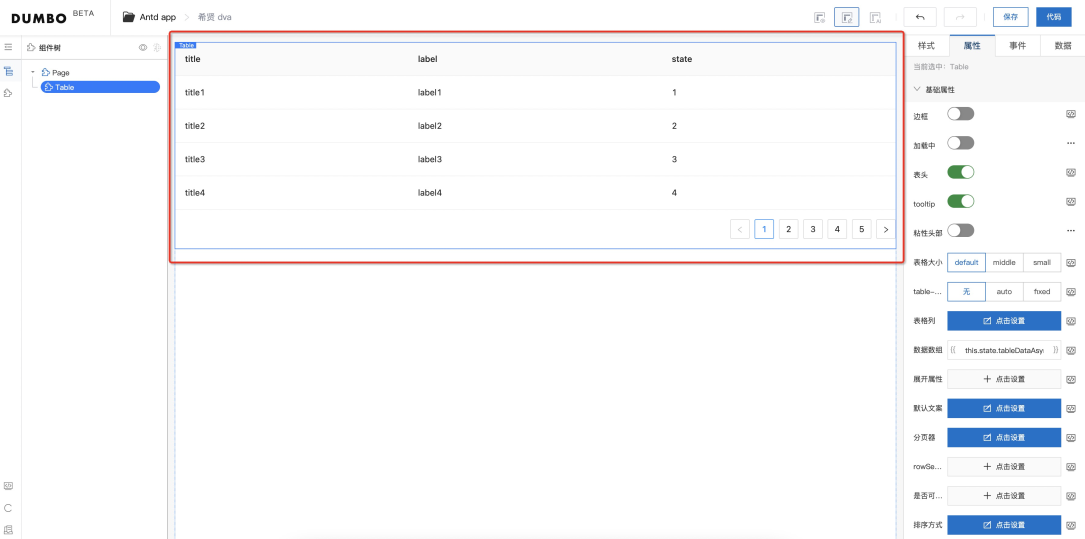

The canvas is rendered with real data.

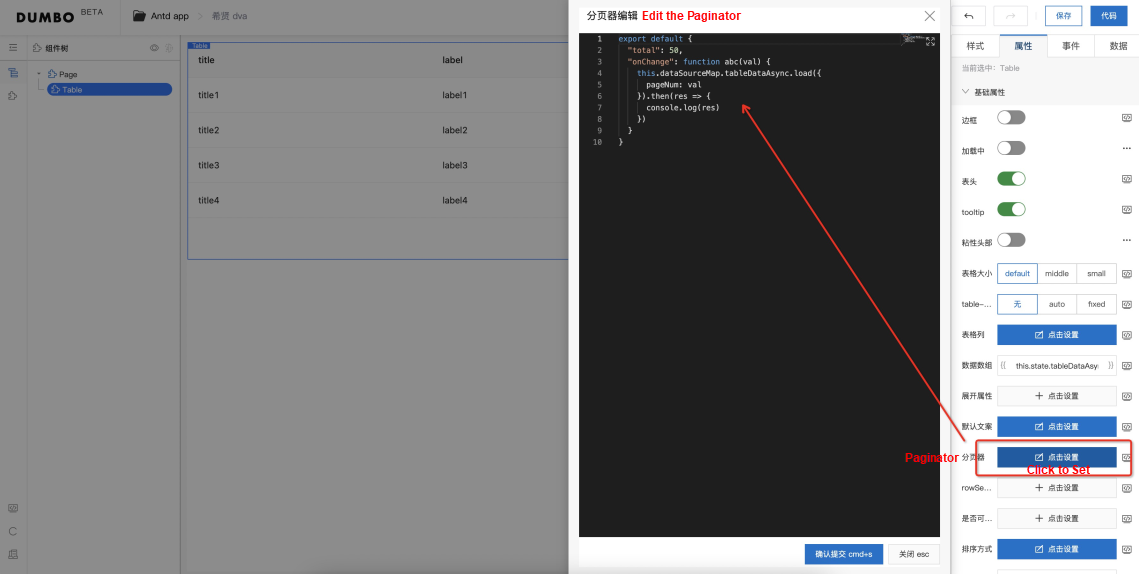

Now, we will configure pagination for the Table. The Table component is the same as before. Under "Attribute" on the right side, click the pagination item to add the onChange attribute of the pager. Pay attention to how the data source is called here.

According to the group's standard, data sources must be called through dataSourceMap. The tableDataAsync is still the data source ID from the preceding configuration. You can call the load method and pass parameters to it. In addition, the load method returns a promise and supports chained "then" calls. To make another request after "then", we need to nest another load call.

function(val) {

this.dataSourceMap.tableDataAsync.load({

pageNum: val

}).then(res => {

this.setState({

tableDataAsync: res

})

})

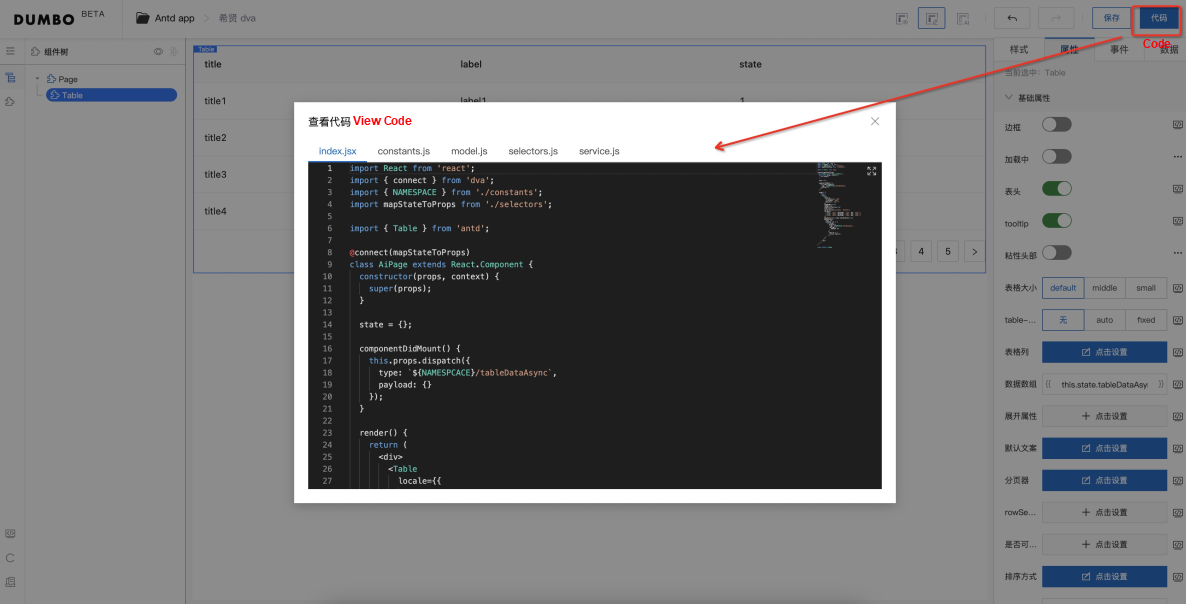

}Click "View code" in the upper-right corner to get the code
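For the case mentioned earlier where a second request has to follow the first, the sketch below nests another load call inside "then". The page object, the fake data sources, and the tableSummaryAsync ID are stand-ins invented for the example so that it can run outside the canvas. Only the dataSourceMap.xxx.load(...).then(...) call shape comes from the article.

```javascript
// Hypothetical stand-in for a canvas data source: load() returns a promise.
function createFakeDataSource(handler) {
  return { load: (params) => Promise.resolve(handler(params)) };
}

const page = {
  state: {},
  setState(partial) {
    Object.assign(this.state, partial);
  },
  dataSourceMap: {
    tableDataAsync: createFakeDataSource(({ pageNum }) => ({
      data: { List: [`row-${pageNum}`] },
    })),
    tableSummaryAsync: createFakeDataSource(({ count }) => ({
      data: { total: count },
    })),
  },
  onPageChange(val) {
    return this.dataSourceMap.tableDataAsync
      .load({ pageNum: val })
      .then((res) => {
        this.setState({ tableDataAsync: res });
        // Second request, nested in "then" and returned so the chain waits for it.
        return this.dataSourceMap.tableSummaryAsync.load({
          count: res.data.List.length,
        });
      })
      .then((summary) => {
        this.setState({ tableSummaryAsync: summary });
        return this.state;
      });
  },
};
```

Returning the inner promise keeps the chain flat, so a single catch at the end could handle failures from either request.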

A few details of code generation are worth emphasizing again:

- get${data source ID}: By default, the data field of the response (res.data) is mounted to the state, so pay attention to what the interface returns.
- /^set(\w+)/: For data source IDs that match this pattern, no data is mounted to the state.
- dataSource specification: For requests other than initialization requests, this.dataSource['xxxTypeAsync'] is required to trigger them. This can be difficult for non-frontend personnel to use.

Currently, all operations on the canvas side follow Alibaba Group's specifications, and we have developed a series of conventions for generating Dva code. After the data-related configuration is completed, the configured data source must be called actively according to the convention to trigger Ajax calls on the page. We will continue to watch and optimize this usage. In addition, forwarding of the data interfaces will later be supported by the Gateway, so that real data forwarding works both on the canvas and in the generated code.
