By Priyankaa Arunachalam, Alibaba Cloud Community Blog author.

In the previous articles, we went over Big Data Analytics and used the command line interface to log in to the cluster and access all the Hadoop components. As we continue to play with big data using several different tools and related technologies, it is important to understand how we can access the Hadoop components more easily, making our work simpler and more productive.

Hadoop User Experience (HUE) is an open-source web interface used for analysing data with Hadoop ecosystem applications. Hue provides interfaces to interact with HDFS, MapReduce, Hive and even Impala queries. You can install it on any server, and users can then access Hue right from the browser, which helps to improve the productivity of Hadoop development workflows. In other words, Hue essentially provides "a view on top of Hadoop": it makes accessing the cluster and its components much easier and removes the need to use the command line prompt. For those who are new to the command line and the Linux environment, it tends to be tough to perform even basic operations, such as viewing and editing files, or copying and moving files, using a command line interface. On top of this, these are generally the most common operations that users carry out day to day.

Apart from its web interface, Hue comes with many other features for Hadoop developers, including:

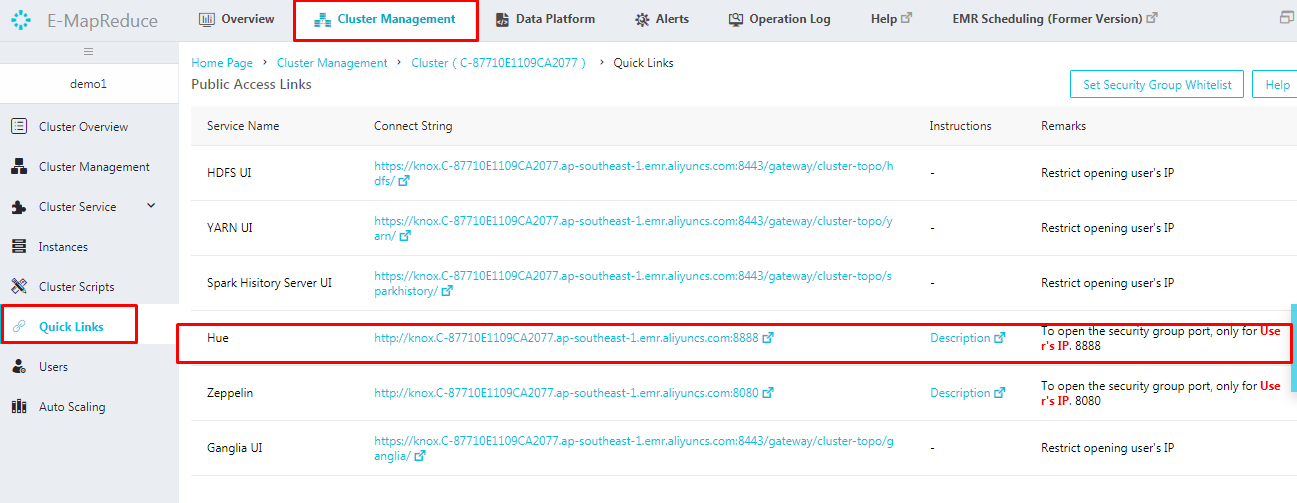

Alibaba E-MapReduce provides you with a set of services that includes Hue, which can be accessed through Apache Knox. So how can we access Hue? Well, if you remember how we accessed Zeppelin in our previous article, then the way in which you access Hue should be no surprise. Hue uses the CherryPy web server, and the default port is 8888. Therefore, to access Hue from the Alibaba E-MapReduce console, we need to open this port by adding a Security Group Rule, as shown below.

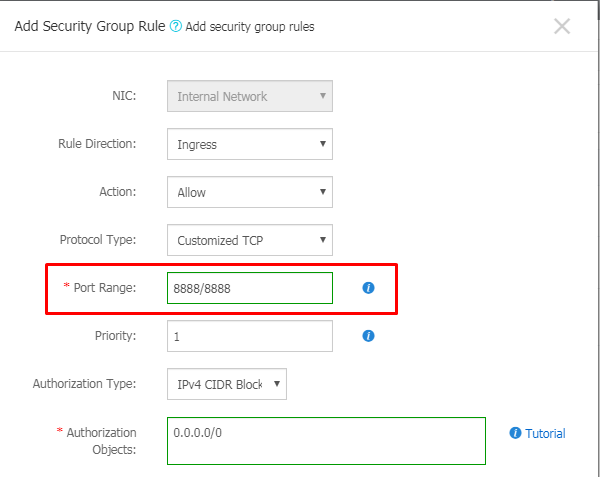

Let's quickly add a security group rule. To do so, navigate to Security Group ID under Cluster Overview. Then, on the page that follows, click Add Security Group. This leads to a window like the one shown below where you can specify the port range.

Fill in all the details and click OK once done. This adds the corresponding security rule to the set of rules. For detailed steps on how to create a security group rule and add a security group to a cluster that you created, you can refer to the previous article, Diving into Big Data: Visual Stories through Zeppelin. Now we are all set to access Hue.

To access Hue, proceed with the following steps.

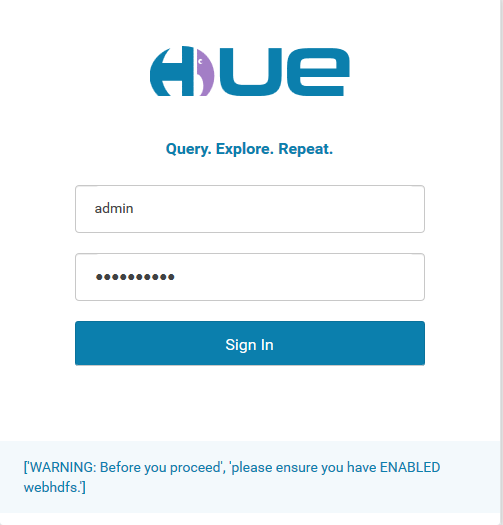

Clicking the quick link will take you to a page where you will be asked for your credentials to log in to Hue. Now before logging in to Hue, you will also receive a warning to enable WebHDFS.

A high-performance native protocol or native Java API is fine if you need to access data stored in HDFS from inside a cluster or from another machine on the network. However, if an external application wants to access the files in HDFS over the web or HTTP, is such a protocol or API sufficient? The answer is no.

For these kinds of requirements, an additional protocol called WebHDFS is needed. It works by defining a public HTTP REST API, which permits clients to access HDFS over the Web. It allows web services to have access to data stored in HDFS and additionally helps with operations such as reading and writing files, creating directories, changing permissions, and renaming files or directories. At the same time, WebHDFS also retains the security of the system and uses parallelism to allow for better throughput.
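To make the REST API more concrete, here is a minimal Python sketch that builds WebHDFS request URLs for some of the operations mentioned above. The `/webhdfs/v1` prefix, the `op` query parameter and the operation names follow the WebHDFS REST API; the host name `emr-header-1`, the port `50070` and the user `hadoop` are placeholders assumed for illustration and may differ on your cluster.

```python
from urllib.parse import urlencode, quote

# HTTP method used by a few common WebHDFS operations.
WEBHDFS_METHODS = {
    "LISTSTATUS": "GET",      # list a directory
    "OPEN": "GET",            # read a file
    "MKDIRS": "PUT",          # create a directory
    "RENAME": "PUT",          # rename a file or directory
    "SETPERMISSION": "PUT",   # change permissions
}


def webhdfs_url(host, port, path, op, **params):
    """Build a WebHDFS REST URL for an absolute HDFS path and an operation."""
    if not path.startswith("/"):
        raise ValueError("HDFS path must be absolute")
    query = urlencode({"op": op, **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


# Hypothetical endpoint and user, used only for illustration.
url = webhdfs_url("emr-header-1", 50070, "/user/demo", "LISTSTATUS",
                  **{"user.name": "hadoop"})
print(WEBHDFS_METHODS["LISTSTATUS"], url)
```

An HTTP client would send the printed method to the printed URL; nothing in this sketch contacts a cluster, so it is safe to run anywhere.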

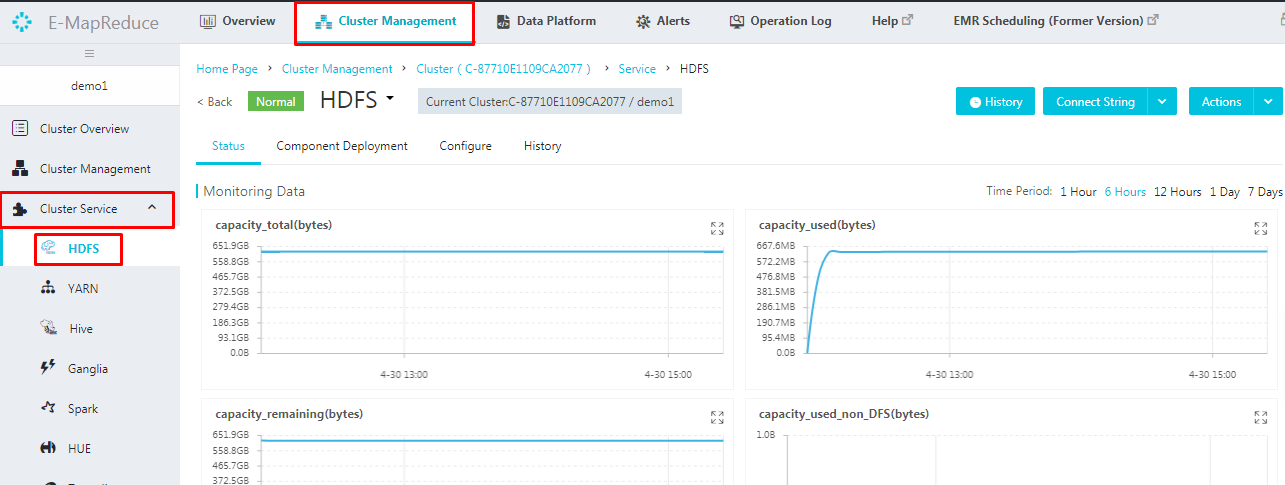

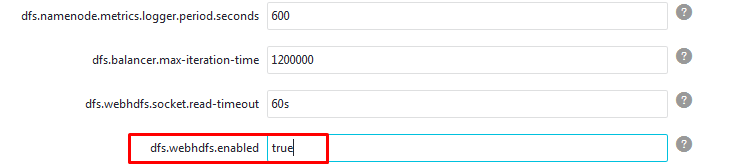

To enable WebHDFS on the nodes, you must set the value of dfs.webhdfs.enabled to true in hdfs-site.xml. You can change the configuration easily in the Alibaba E-MapReduce console using the user interface. To do so, navigate to the Cluster Service tab under Cluster Management and choose the HDFS service from the list of services.

Now move on to the Configure tab to change the configuration. Under hdfs-site, you can see that the dfs.webhdfs.enabled property is set to false by default. Change it to true and click Save.

Clicking Save will take you to a Confirm Changes window. Here, provide a description of your choice and click OK.

On successful change, you will receive a prompt saying The action is performed.
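The console writes this setting into hdfs-site.xml for you. As a rough illustration of what the resulting entry looks like, the Python sketch below builds a Hadoop-style `<property>` element for dfs.webhdfs.enabled and then checks its value. The helper names `hdfs_property` and `is_enabled` are made up for this example, and the XML is kept in memory rather than read from a real cluster file.

```python
import xml.etree.ElementTree as ET


def hdfs_property(name, value):
    """Create a Hadoop-style <property> element with <name> and <value> children."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value
    return prop


def is_enabled(site_xml, name):
    """Return True if the named property exists in the XML and is set to 'true'."""
    root = ET.fromstring(site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name") == name:
            return (prop.findtext("value") or "").strip().lower() == "true"
    return False


# Build a minimal in-memory configuration (not a complete hdfs-site.xml).
config = ET.Element("configuration")
config.append(hdfs_property("dfs.webhdfs.enabled", "true"))
site_xml = ET.tostring(config, encoding="unicode")

print(site_xml)
print(is_enabled(site_xml, "dfs.webhdfs.enabled"))
```

A check like `is_enabled` could be pointed at the contents of a real hdfs-site.xml to confirm that the change made in the console has been applied.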

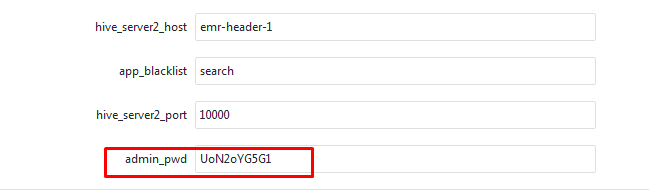

Now that we are set with the prerequisites, let's navigate to the login page. As Hue does not have an administrator, the first user to log on is automatically set to administrator. For security, Alibaba E-MapReduce generates an admin account and password by default. The default administrator account is admin. To view the auto-generated password, follow the steps mentioned below.

Use these credentials to log in to Hue.

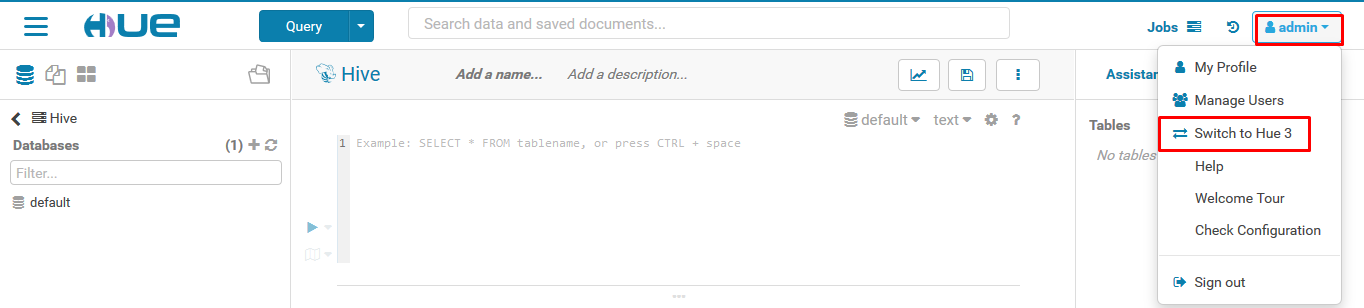

On successful login, you will be taken to the launch page of Hue, which is the Hue 4 interface. Those who are familiar with the previous version of Hue can switch to it by clicking admin at the top right and choosing Switch to Hue 3 from the drop-down.

Viewing and managing the data in HDFS is an important part of big data analytics. The HDFS Browser/File Browser in Hue will help us achieve this.

We have seen how accessing the Hadoop File System by logging into the cluster using Cygwin and interacting with the files in HDFS using Linux commands may be difficult for someone not familiar with using a Command Line Interface. Being able to access, browse, and interact with the files in Hadoop Distributed File System is one of the most important factors in the use and analysis of big data because this is an important basis for working with different tools related to big data. Hue provides a user interface for this, and this interface happens to be capable of performing all the required tasks. When you do not feel like working with the command line interface, the Hue interface may be a good option for you.

For example, creating a directory from the command line might be fine, but finding a directory that was created earlier, or by someone else, can be difficult. With Hue, browsing files is much like using a file explorer on the Windows operating system. To begin, let's quickly create a directory and upload a file into it using Hue.

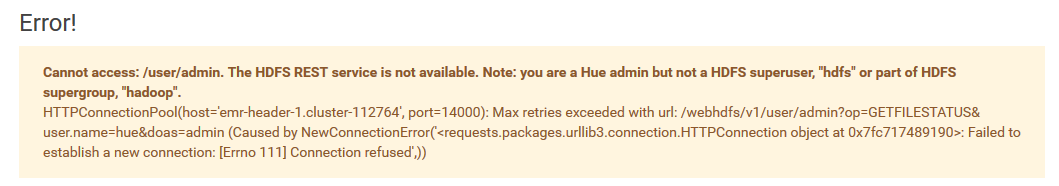

When you click the HDFS icon on the left panel to access HDFS, you may receive an error as shown below:

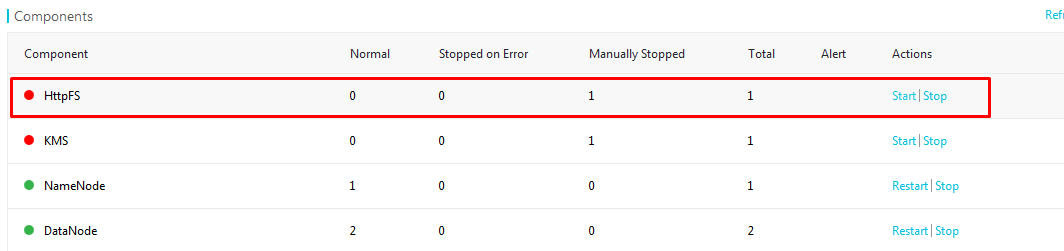

To solve this issue and access the files in HDFS, we have to start HttpFS.

HttpFS is a service that provides HTTP access to HDFS. The difference between WebHDFS and HttpFS is that with WebHDFS the client needs access to all nodes of the cluster, whereas with HttpFS a single node acts as a gateway.
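The difference can be sketched in a few lines of Python. With WebHDFS, the NameNode redirects file reads and writes to DataNodes, so a client needs network access to every node; with HttpFS, only the gateway has to be reachable, and it exposes the same `/webhdfs/v1` REST path. The function names and host names below are hypothetical, and the ports (50070 for the NameNode web port in Hadoop 2.x, 14000 for HttpFS) are common defaults that may be configured differently on your cluster.

```python
# Common default ports; these are assumptions and may differ on a given cluster.
DEFAULT_PORTS = {"webhdfs": 50070, "httpfs": 14000}


def hosts_client_must_reach(mode, namenode, datanodes, gateway=None):
    """Return the hosts a client needs network access to for reading and writing files."""
    if mode == "webhdfs":
        # The NameNode redirects data transfers to DataNodes, so all of them are needed.
        return [namenode, *datanodes]
    if mode == "httpfs":
        if gateway is None:
            raise ValueError("HttpFS mode needs a gateway host")
        # The gateway talks to the cluster on the client's behalf.
        return [gateway]
    raise ValueError(f"unknown mode: {mode}")


def base_url(mode, host):
    """Both services expose the same REST path; only the host and port differ."""
    return f"http://{host}:{DEFAULT_PORTS[mode]}/webhdfs/v1"


# Hypothetical host names, used only for illustration.
print(hosts_client_must_reach("webhdfs", "header-1", ["worker-1", "worker-2"]))
print(hosts_client_must_reach("httpfs", "header-1", ["worker-1", "worker-2"], gateway="header-1"))
print(base_url("httpfs", "header-1"))
```

This is why a gateway is convenient for a web tool such as Hue: only one host and one port need to be reachable from it.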

To start HttpFS, follow the steps mentioned below:

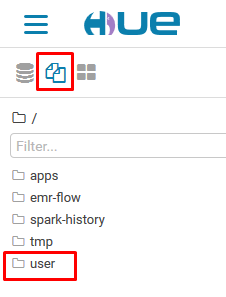

Now let's navigate to the Hue interface and click the HDFS icon again. You will see the directories listed as shown below.

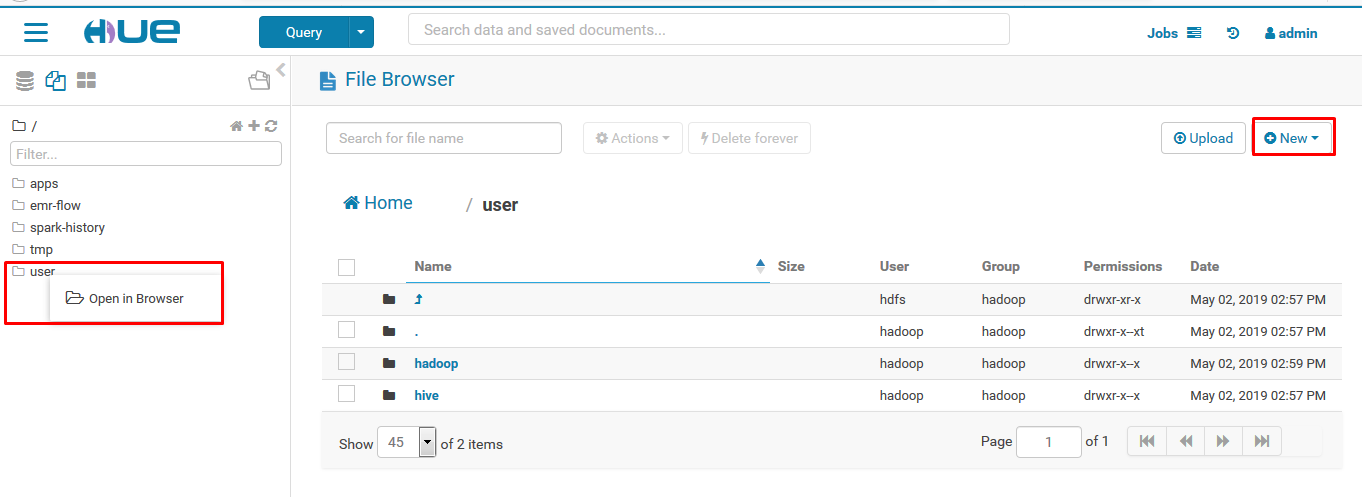

Right-click the desired directory and click "Open in browser". This opens the file browser and lists all the available files, where we can delete, upload and download files. For now, let's quickly create a new directory under the user directory and upload a file into it. To do this, follow the steps mentioned below.

Click New to create a new directory.

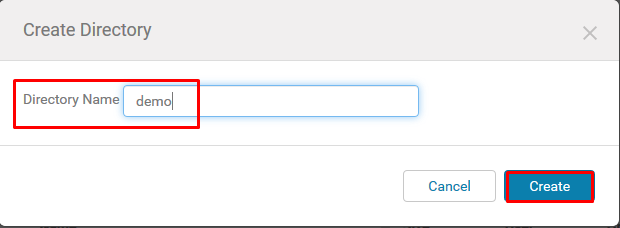

In the Create Directory wizard, give the directory a name and click Create.

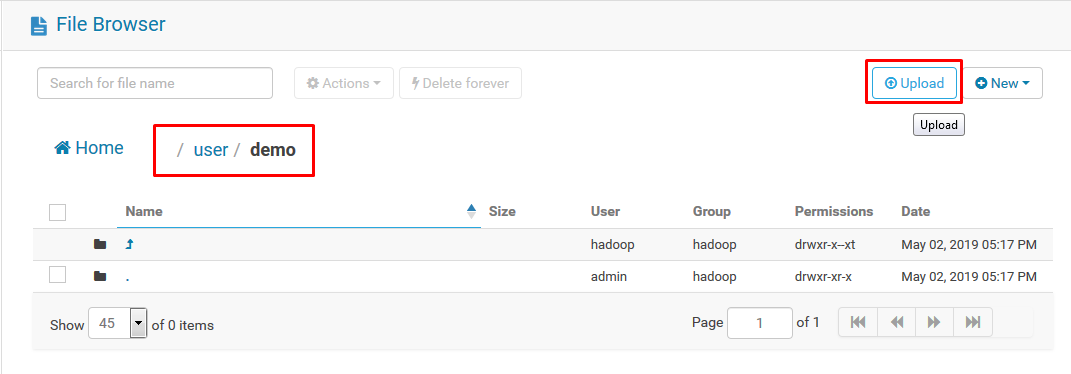

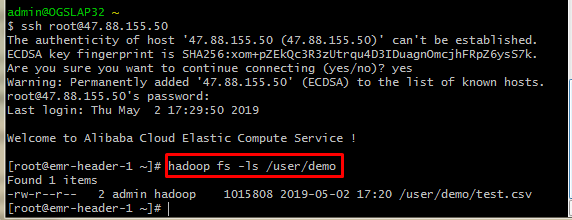

A new directory named demo has now been created successfully. Click demo, and then click the upload icon to upload a file into this directory.

In the Upload to window, browse for a file and upload it.

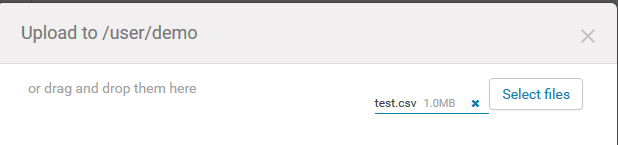

As you can see from the steps above, the user interface makes moving a file into the Hadoop ecosystem much simpler: it takes only a few clicks.

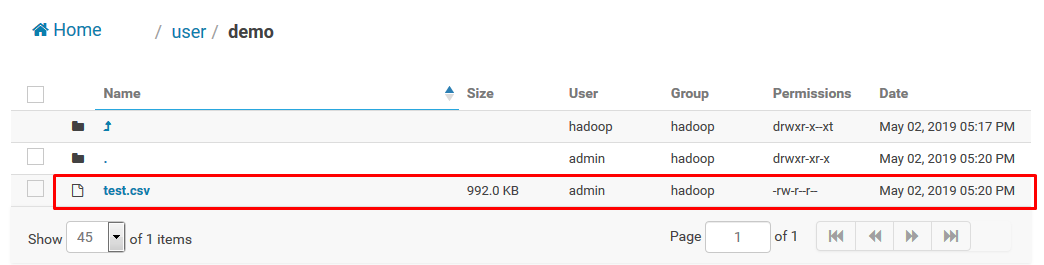

Log in to the cluster using the Cygwin terminal to check whether the demo directory is created and the file is inserted into it:

The listing returned by hadoop fs -ls /user/demo confirms that the file is loaded into Hadoop! As such, there is no need to struggle with the command line interface to use the Hadoop ecosystem if you have Hue set up.
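If you want to script this check rather than read the listing by eye, the following Python sketch parses the text printed by hadoop fs -ls and looks for a file under /user/demo. The sample output, including the file name sample.csv, its owner and its size, is made up for illustration; on a real cluster you would pass in the actual command output instead.

```python
def parse_ls(output):
    """Parse the text printed by `hadoop fs -ls` into a list of entries."""
    entries = []
    for line in output.splitlines():
        # Columns: permissions, replication, owner, group, size, date, time, path.
        parts = line.split(None, 7)
        if len(parts) != 8:
            continue  # skips the "Found N items" header and blank lines
        perms, _repl, owner, _group, size, _date, _time, path = parts
        entries.append({
            "path": path,
            "is_dir": perms.startswith("d"),
            "owner": owner,
            "size": int(size),
        })
    return entries


# Made-up sample output; the file name, owner and size are hypothetical.
sample = """Found 1 items
-rw-r--r--   2 admin hadoop       1024 2019-09-02 10:15 /user/demo/sample.csv
"""

files = [e for e in parse_ls(sample) if not e["is_dir"]]
print([e["path"] for e in files])
```

An empty list would mean that the upload did not reach the directory.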

In this article, we have shown you how to use Hue and its file browser, and how they make working with HDFS simpler. In the next article in this series, we will take a look at other file operations, additional features of Hue, and its different editors and workflows.
