Tongyi Qianwen-7B (Qwen-7B) is the 7-billion-parameter model in the Tongyi Qianwen large model series developed by Alibaba Cloud.

Qwen-7B is a Transformer-based large language model (LLM) trained on extremely large-scale pre-training data. The pre-training data is diverse and covers a wide range of domains, including large amounts of web text, professional books, and code.

For a detailed description of the model, visit its ModelScope page:

https://www.modelscope.cn/models/qwen/Qwen-7B-Chat/files

In this article, we explore two approaches to interacting with the Tongyi Qianwen-7B model: one through a graphical user interface (GUI) and the other through a command-line interface (CLI).

Please note:

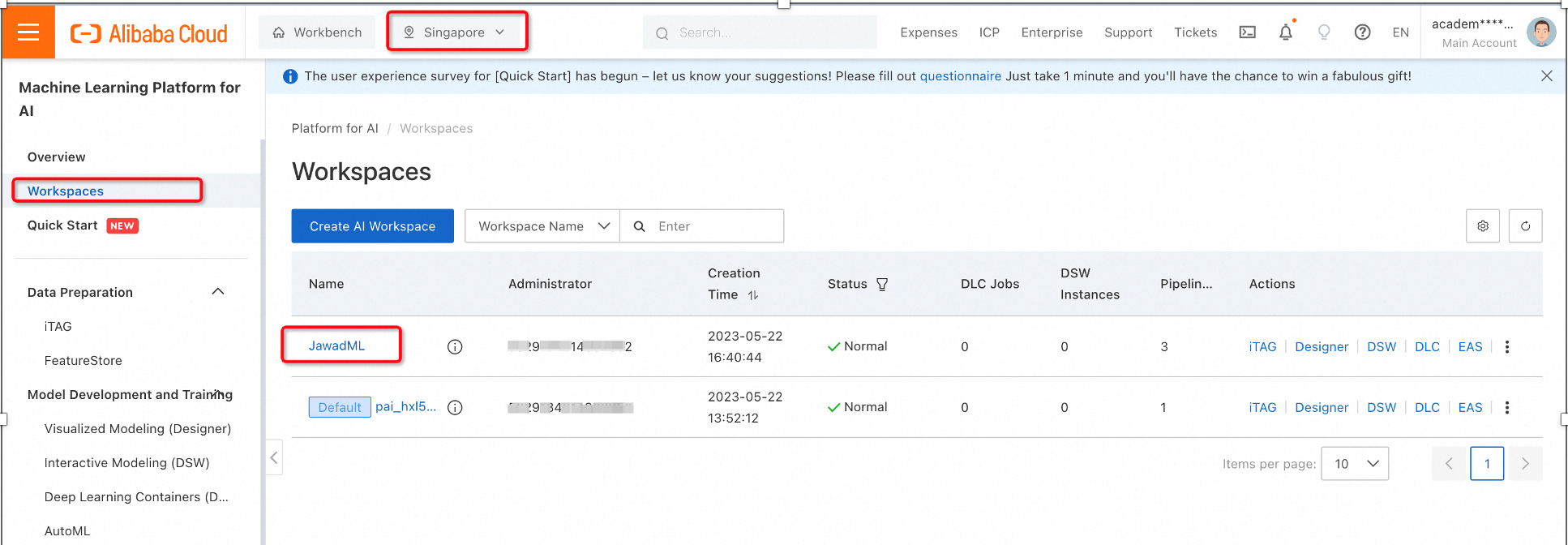

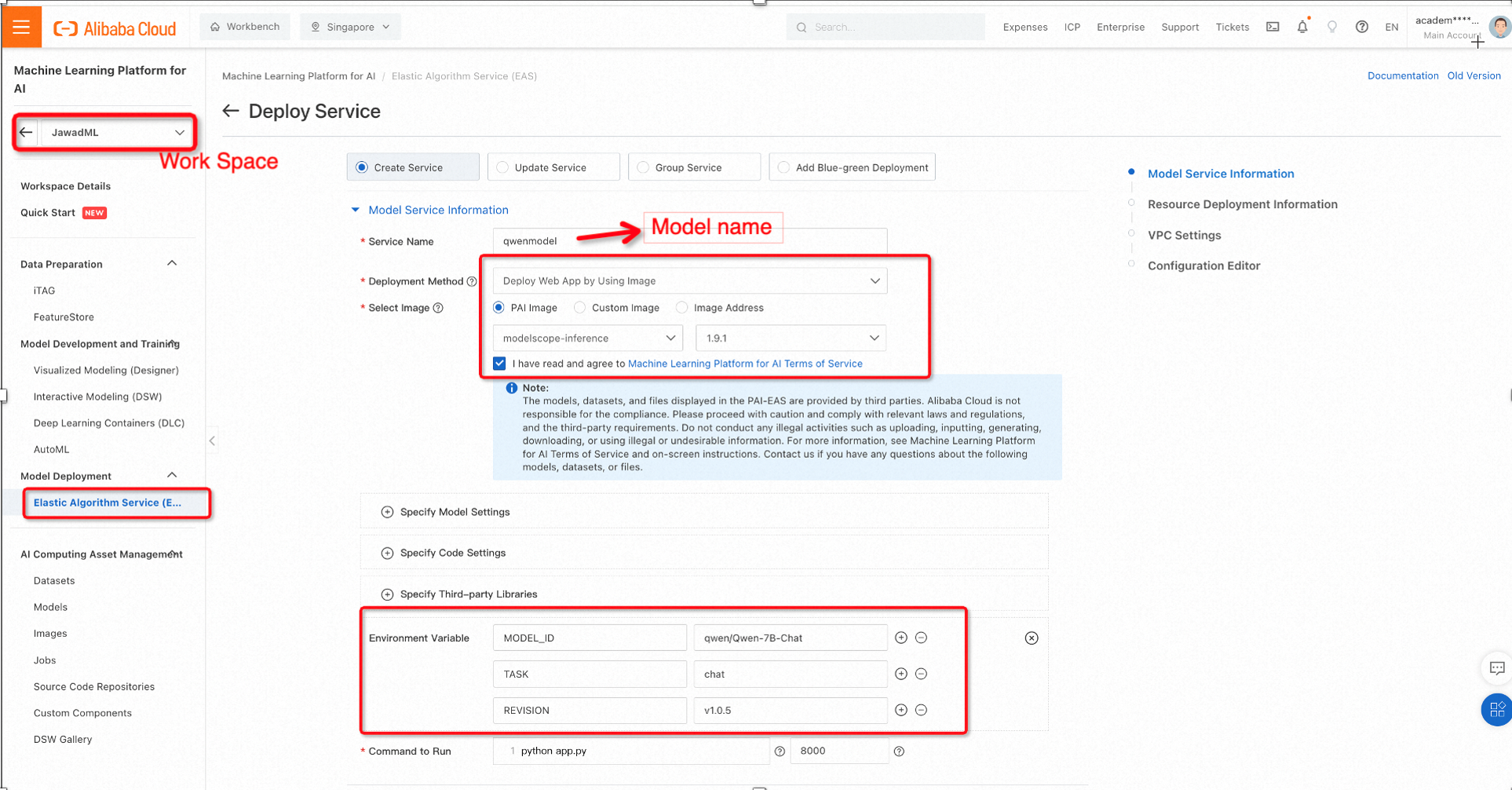

1. On the PAI platform, select your workspace. Under Model Deployment, select Elastic Algorithm Service (EAS), then click Deploy Service.

2. We will use the pre-trained model from ModelScope, with the following configuration for the image and the environment variables.

ModelScope is an open-source Model-as-a-Service (MaaS) platform developed by Alibaba Cloud. It hosts hundreds of AI models, including large pre-trained models, for developers and researchers worldwide.

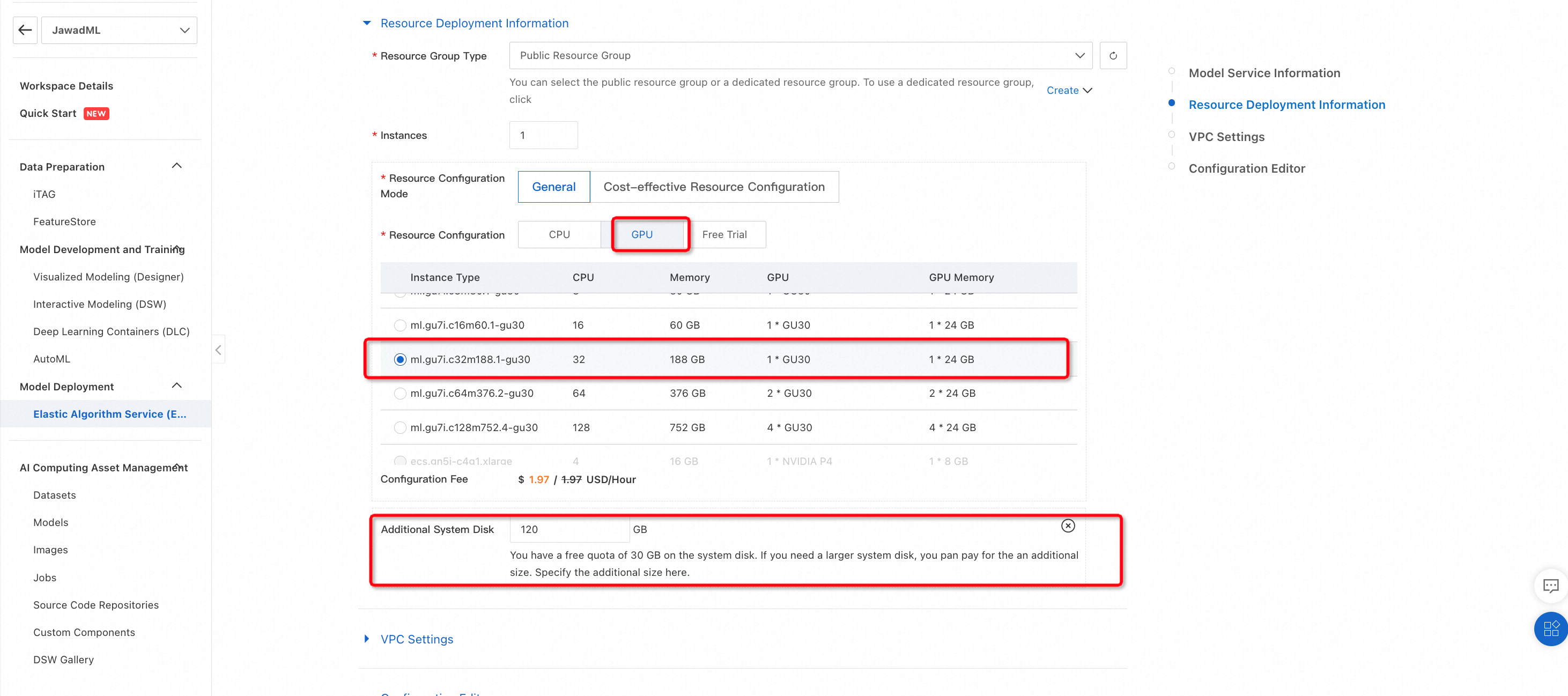

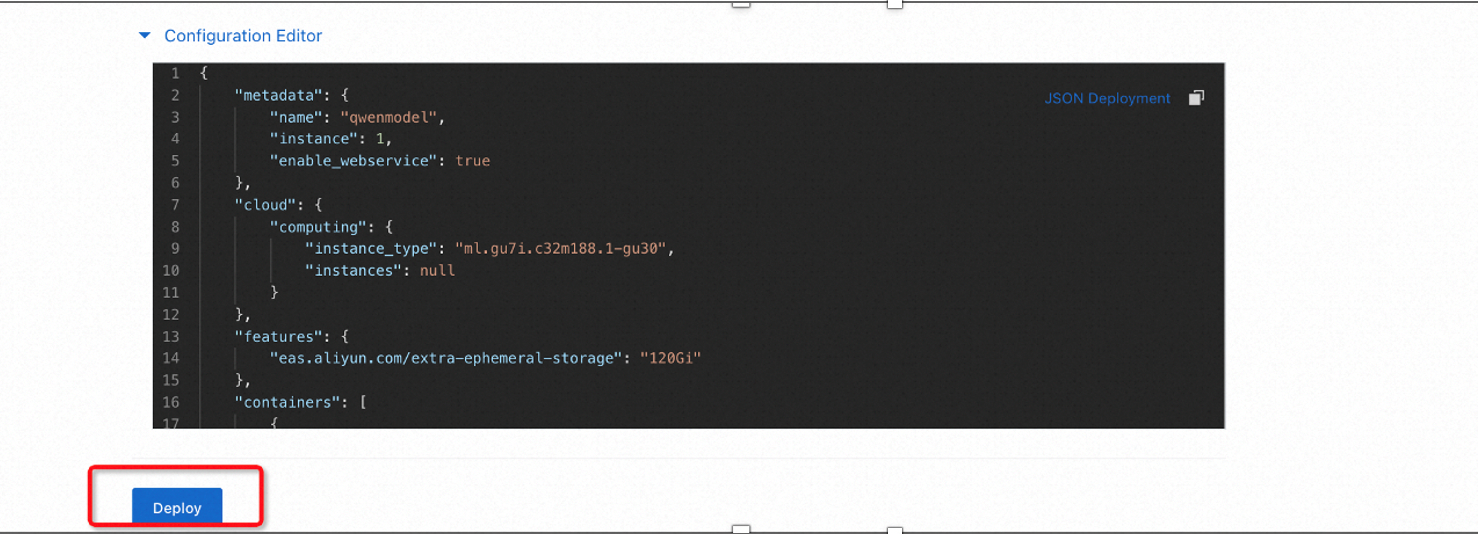

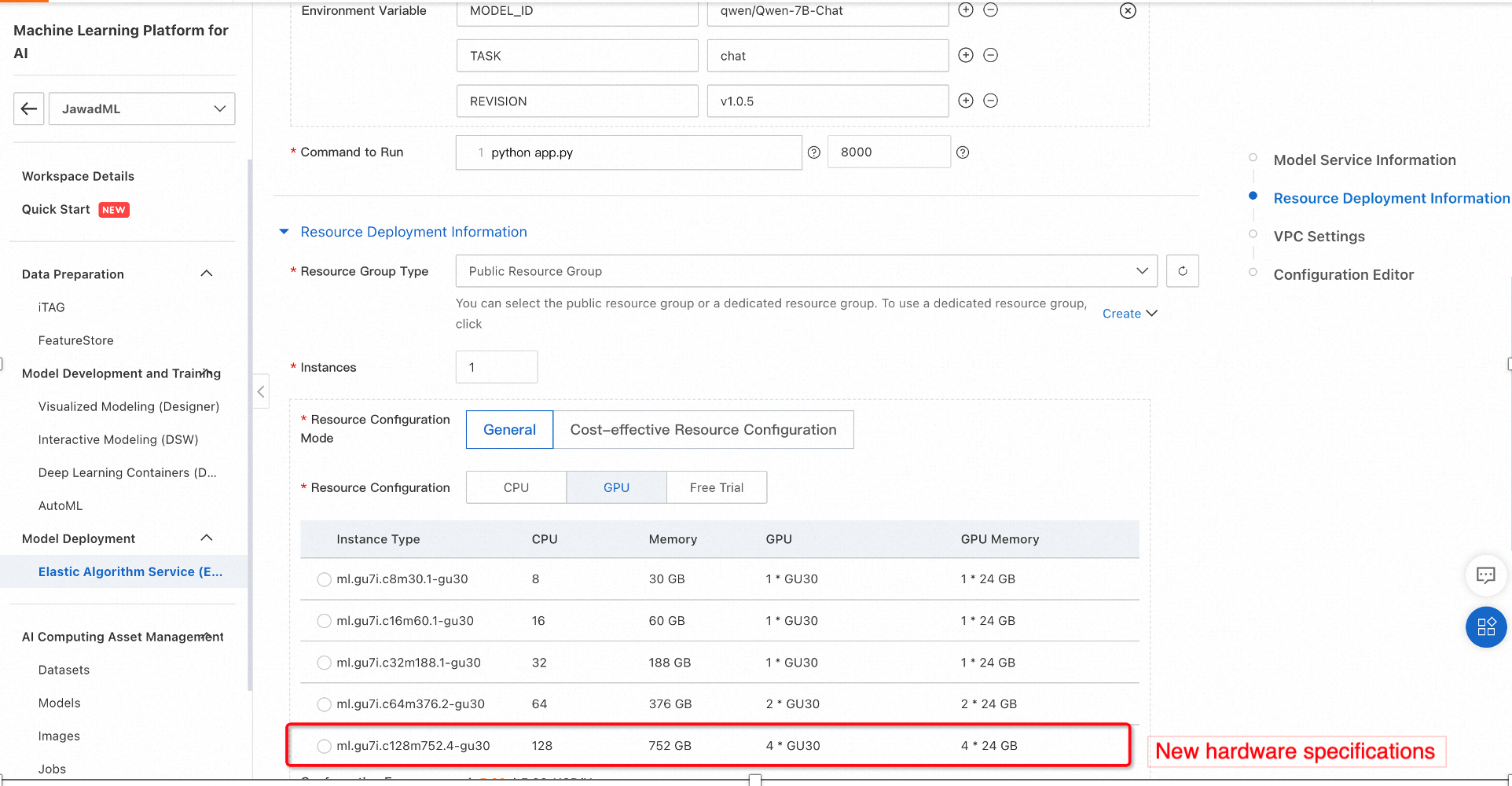
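The image and environment-variable settings are only shown as screenshots, so here is a rough text sketch of them. The variable names MODEL_ID, TASK, and REVISION are an assumption based on how PAI's ModelScope image is commonly configured, and the revision string is a placeholder; copy the exact names and values from your own console. The hypothetical helper just checks that none of the values is left blank before you deploy.

```python
# Hypothetical EAS environment variables for a ModelScope image.
# Names and values are assumptions; confirm them in the PAI console.
QWEN_ENV = {
    "MODEL_ID": "qwen/Qwen-7B-Chat",  # model ID taken from the ModelScope URL above
    "TASK": "chat",                   # assumed task name for a chat model
    "REVISION": "<revision>",         # placeholder: use the revision listed on ModelScope
}

REQUIRED_KEYS = ("MODEL_ID", "TASK", "REVISION")


def missing_env_vars(env: dict) -> list:
    """Return the required keys that are absent or blank in env."""
    return [key for key in REQUIRED_KEYS if not str(env.get(key, "")).strip()]


if __name__ == "__main__":
    problems = missing_env_vars(QWEN_ENV)
    print("OK" if not problems else f"Missing: {problems}")
```
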

Alibaba Cloud PAI offers various hardware options for running machine learning models for inference. In this case, we need a GPU with sufficient memory to hold the model. Select the following configuration and click Deploy.
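To judge how much GPU memory is enough, a back-of-the-envelope estimate helps. The sketch below counts only the model weights, assuming the nominal 7 billion parameters implied by the model name and a fixed number of bytes per parameter (2 for FP16/BF16). Activations, the KV cache, and framework overhead need extra room on top of this, so the 1.2 headroom factor used here is an illustrative assumption, not a measured value.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory (GB, 1 GB = 10**9 bytes) needed just to hold the weights."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: float, bytes_per_param: float,
                gpu_memory_gb: float, headroom: float = 1.2) -> bool:
    """Rough check: weights times an assumed safety factor must fit in GPU memory."""
    return weight_memory_gb(num_params, bytes_per_param) * headroom <= gpu_memory_gb


if __name__ == "__main__":
    params = 7e9  # nominal parameter count; the real count differs somewhat
    for label, nbytes in [("FP32", 4), ("FP16/BF16", 2), ("INT8", 1)]:
        print(f"{label}: ~{weight_memory_gb(params, nbytes):.0f} GB of weights")
```

Under these assumptions, FP16 weights alone come to about 14 GB, so `fits_on_gpu(7e9, 2, 16)` returns False while `fits_on_gpu(7e9, 2, 24)` returns True. Substitute the exact parameter count and your own headroom factor for a better estimate.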

This starts deploying the pre-trained Qwen-7B model on the selected hardware. The process can take some time, so it is advisable to monitor the events during deployment.

3. Checking the creation process

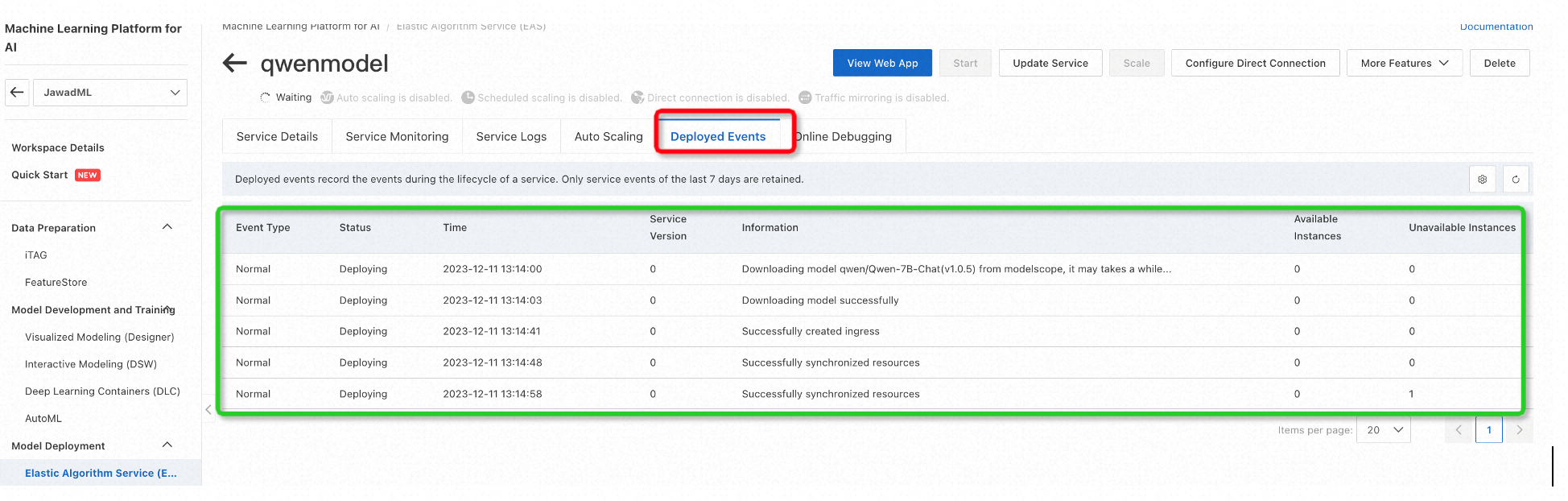

To check the deployment events and make sure that everything is working, click the service ID/name (in our case, qwenmodel) and then select Deployment Events.

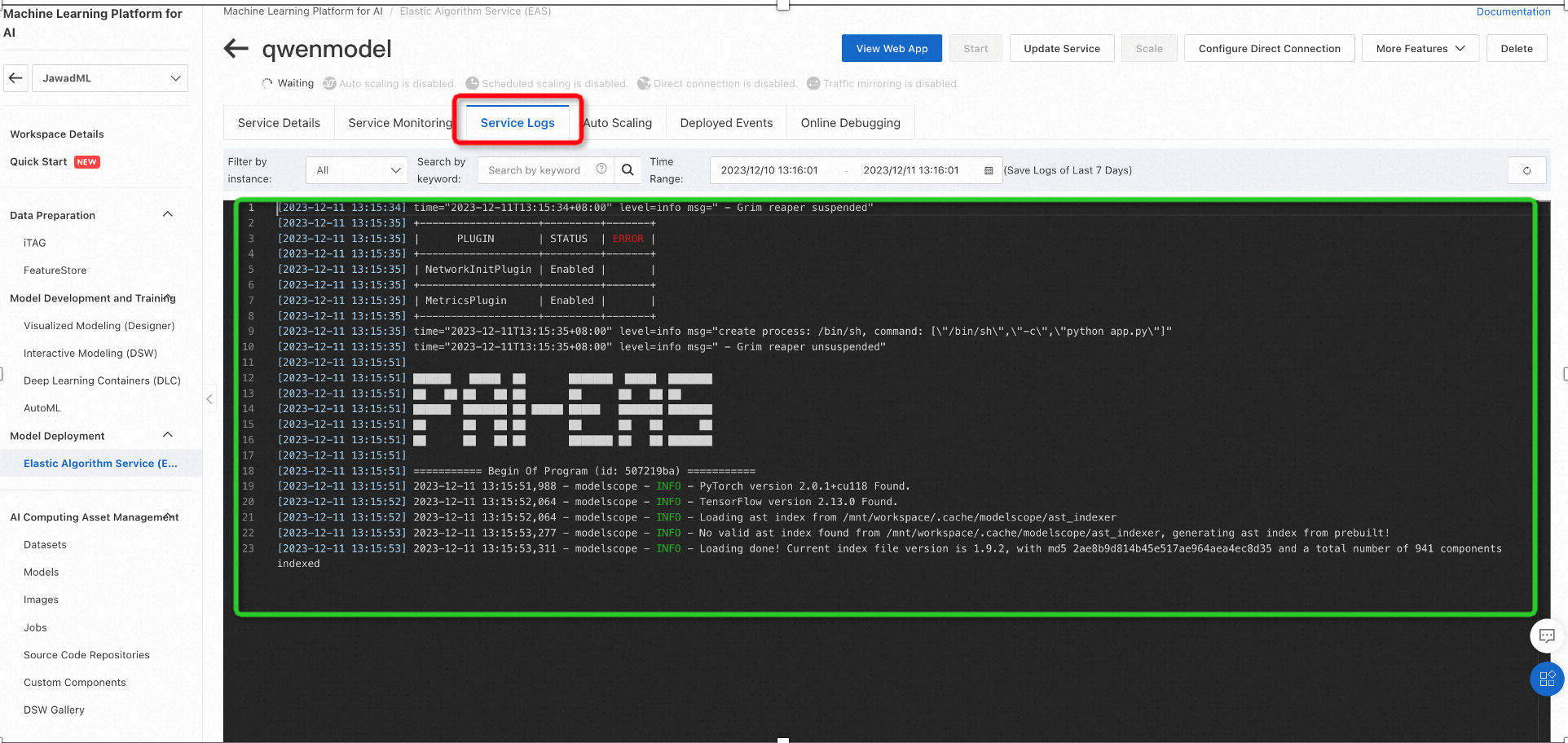

Similarly, Service Logs show the logs related to package installation, as shown below:

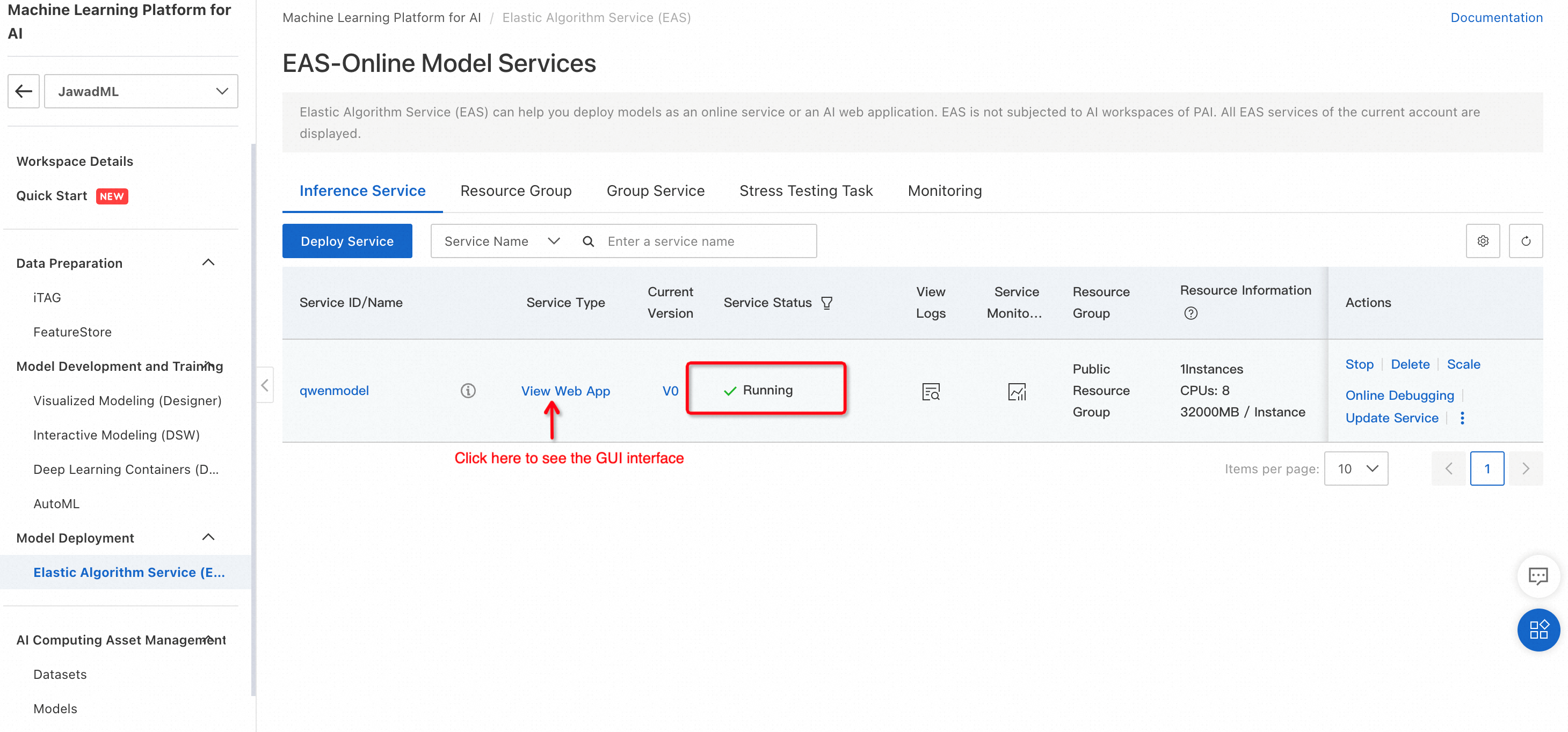

Once the model is successfully deployed, the service status will change to Running.
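In the console you simply watch for this status change, but if you script your deployments the same check becomes a polling loop. In the sketch below, get_status is a hypothetical callable standing in for however you read the service status (console, SDK, or API); only the status names Running and Upgrading come from this article, and the timeout and interval values are arbitrary.

```python
import time
from typing import Callable


def wait_until_running(get_status: Callable[[], str],
                       timeout_s: float = 1800.0,
                       interval_s: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll get_status() until it returns "Running" or the timeout expires.

    get_status is a hypothetical hook; plug in whatever status source you use.
    Returns True if the service reached Running, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        print(f"service status: {status}")
        if status == "Running":
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)
```

Passing `sleep` as a parameter lets you exercise the loop with a fake status source without actually waiting.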

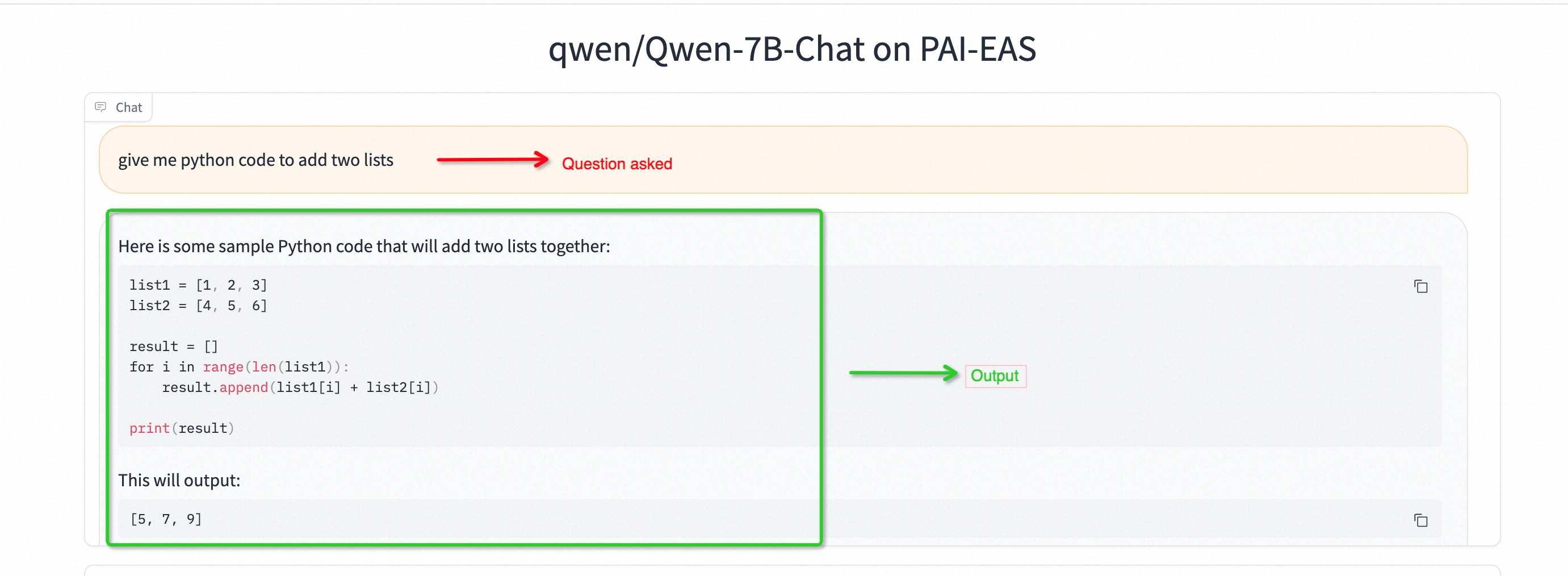

To use the model for inference, click View Web App. This opens the model's GUI, which can be used to test text generation.

4. Testing: using Qwen-7B to generate Python code

Note that in GUI mode, the model processes the full input text and then returns the entire generated text at once.
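The difference between returning everything at once and streaming is easiest to see with a toy generator. This sketch does not call Qwen: fake_tokens is a hypothetical stand-in for a model producing text piece by piece (it ignores the prompt). The GUI-style function collects the whole response before returning, while the streaming version, closer to the CLI demo later in this article, hands each piece to the caller as soon as it exists.

```python
from typing import Iterator


def fake_tokens(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a model that emits text piece by piece."""
    for piece in ["def ", "hello():", "\n", "    print('hi')"]:
        yield piece


def generate_all(prompt: str) -> str:
    """GUI-style: wait for the full response, then return it in one go."""
    return "".join(fake_tokens(prompt))


def generate_stream(prompt: str) -> Iterator[str]:
    """CLI-style: yield each piece as soon as it is produced."""
    yield from fake_tokens(prompt)


if __name__ == "__main__":
    print(generate_all("write a hello function"))
    for piece in generate_stream("write a hello function"):
        print(piece, end="", flush=True)  # text appears incrementally
    print()
```

Both produce the same final text; the only difference is when the caller gets to see it.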

5. Upgrading the hardware to improve performance (optional)

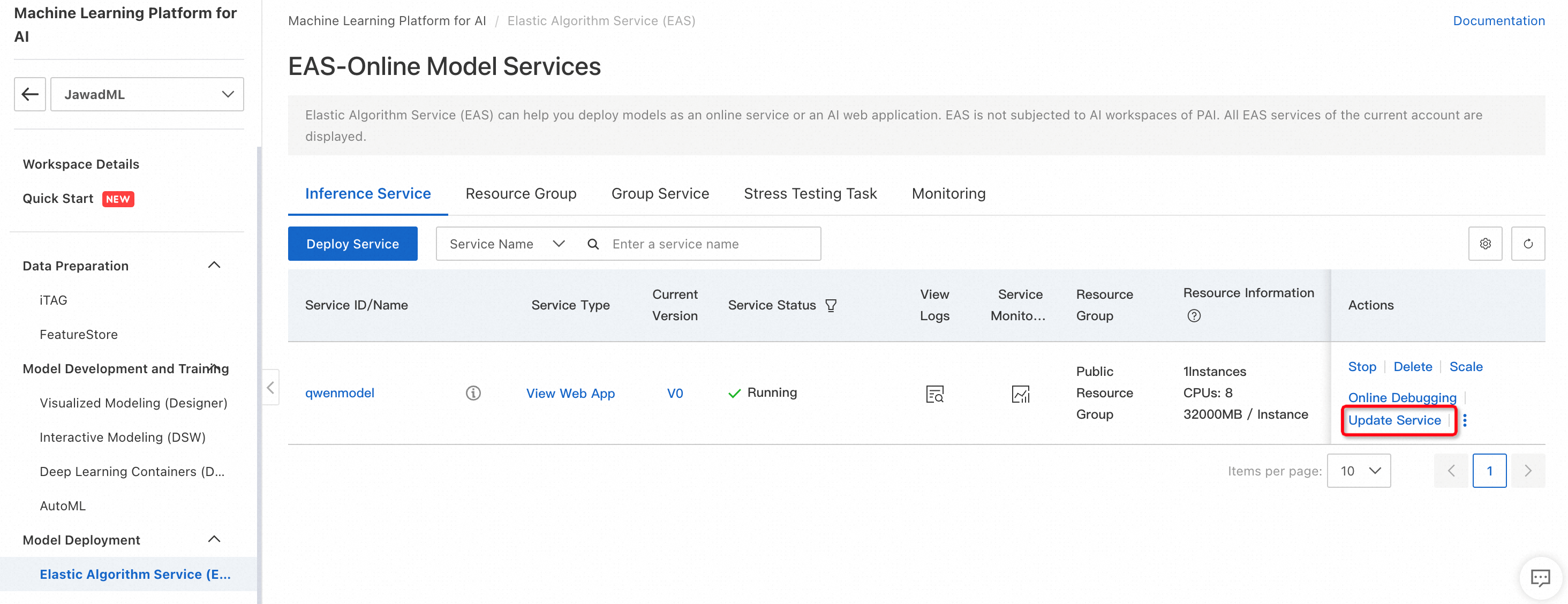

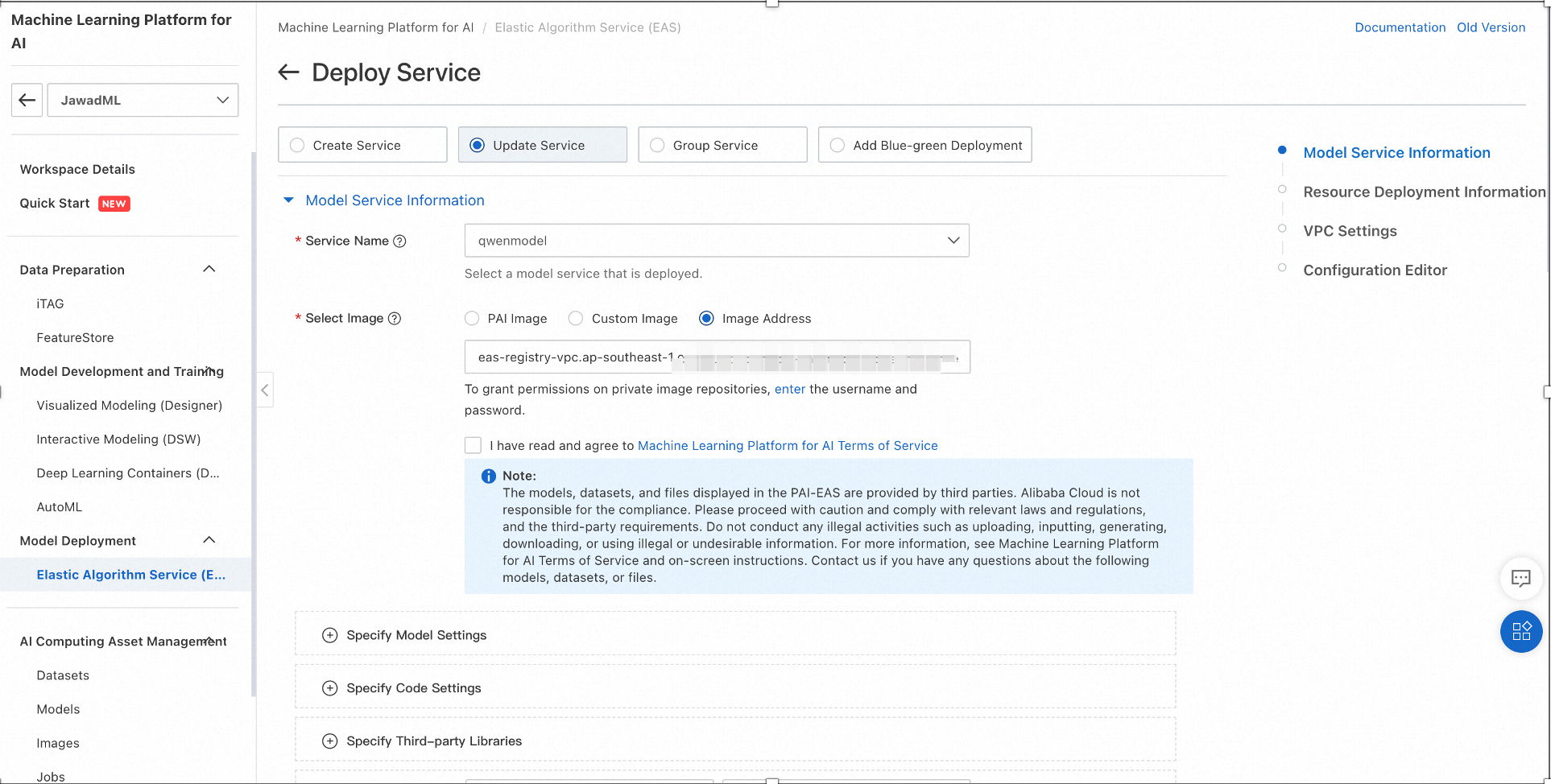

The hardware configuration can be changed if needed. Clicking Update Service opens the configuration window.

The following figure shows how to change the hardware configuration of the inference service. Once the configuration is changed, click Deploy.

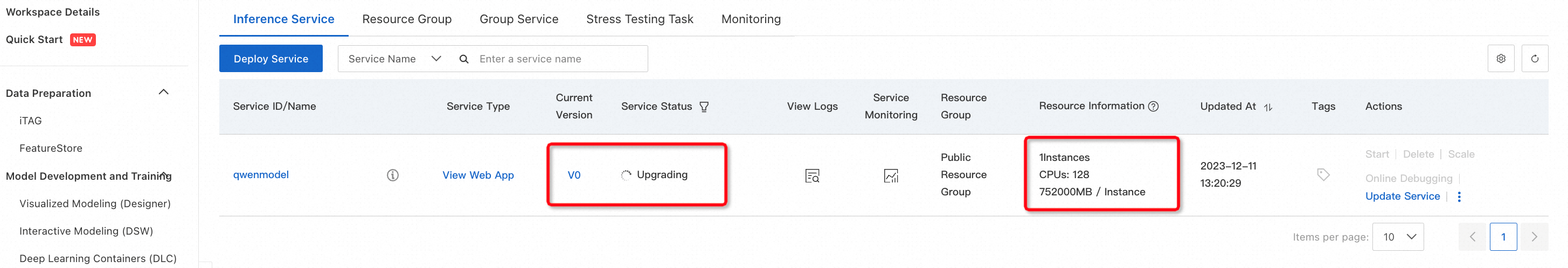

The configuration change takes some time. Once it completes, the service status changes from Upgrading to Running.

We can also run Qwen-7B in CLI mode, which prints the response as running text while it is being generated. For this, we can use either PAI Interactive Modeling (DSW) or an ECS instance. Here we discuss the DSW-based implementation.

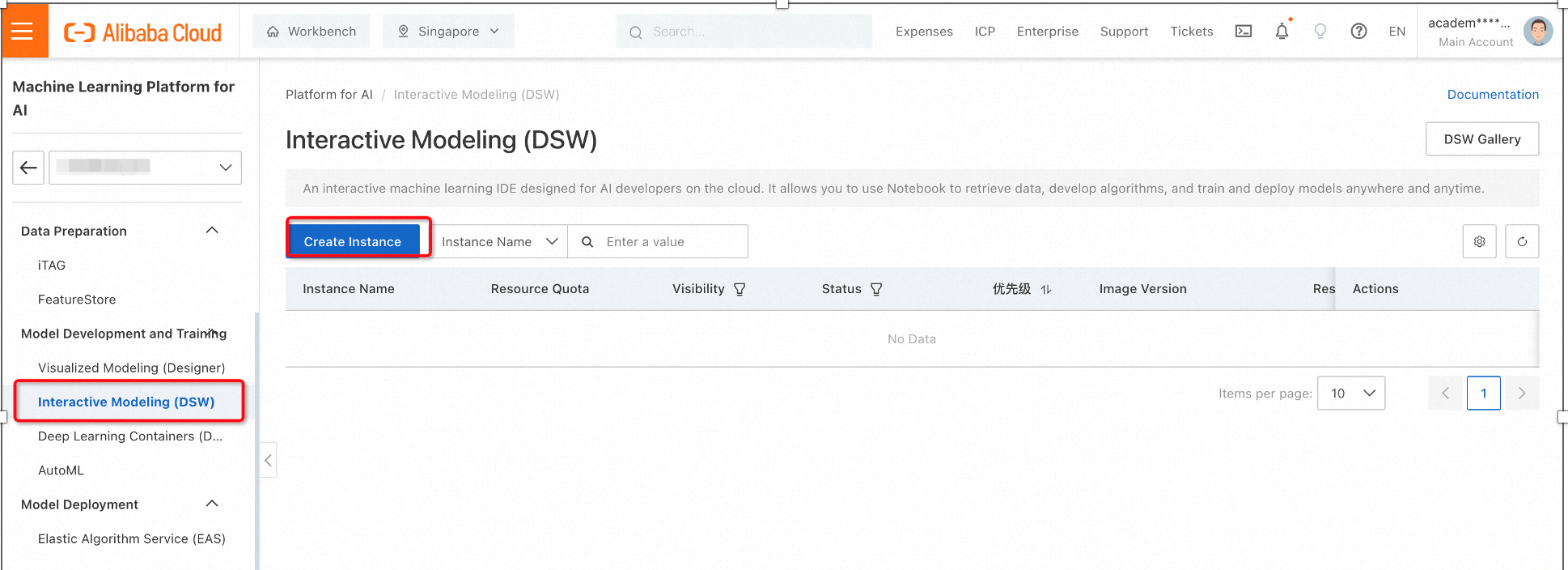

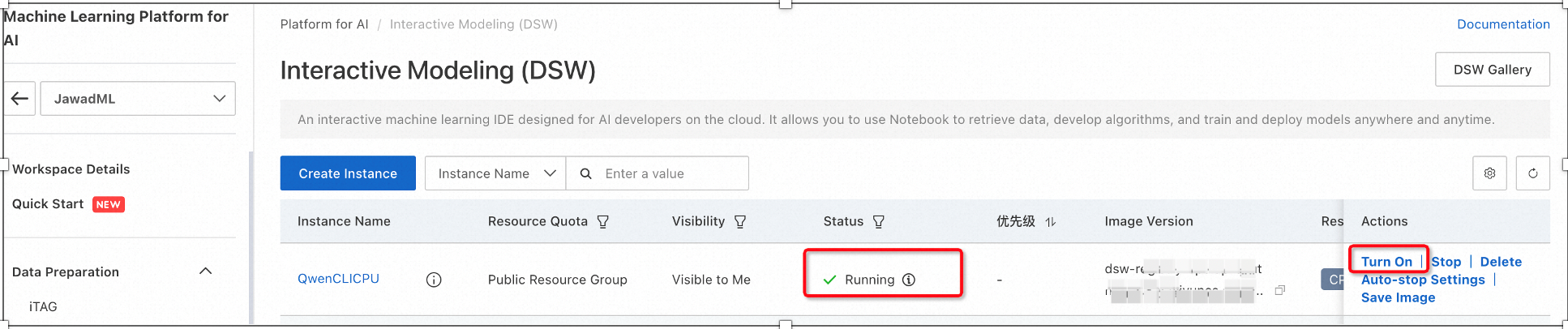

1. On PAI, select Interactive Modeling (DSW) and then click Create Instance. This opens the configuration window.

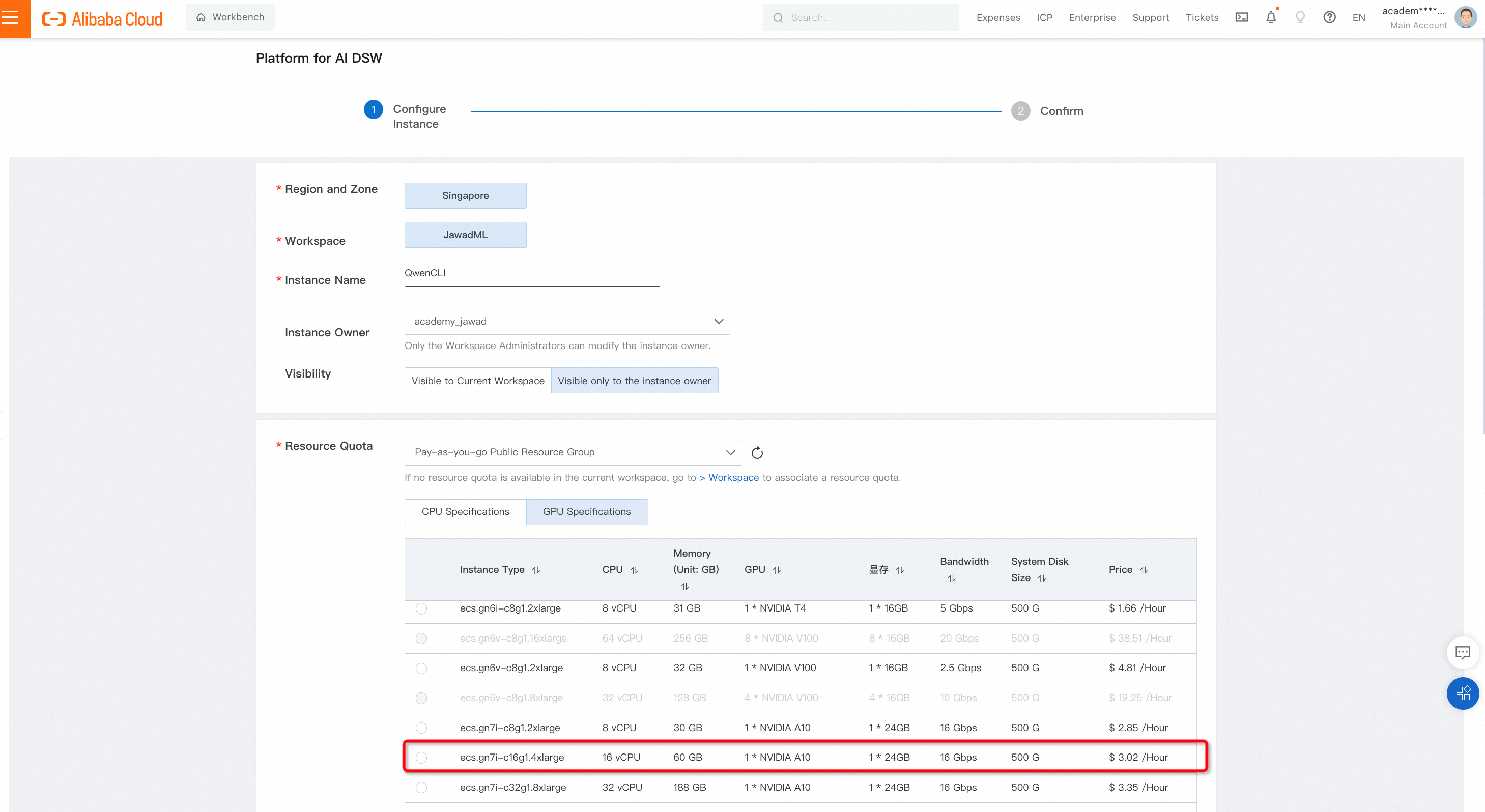

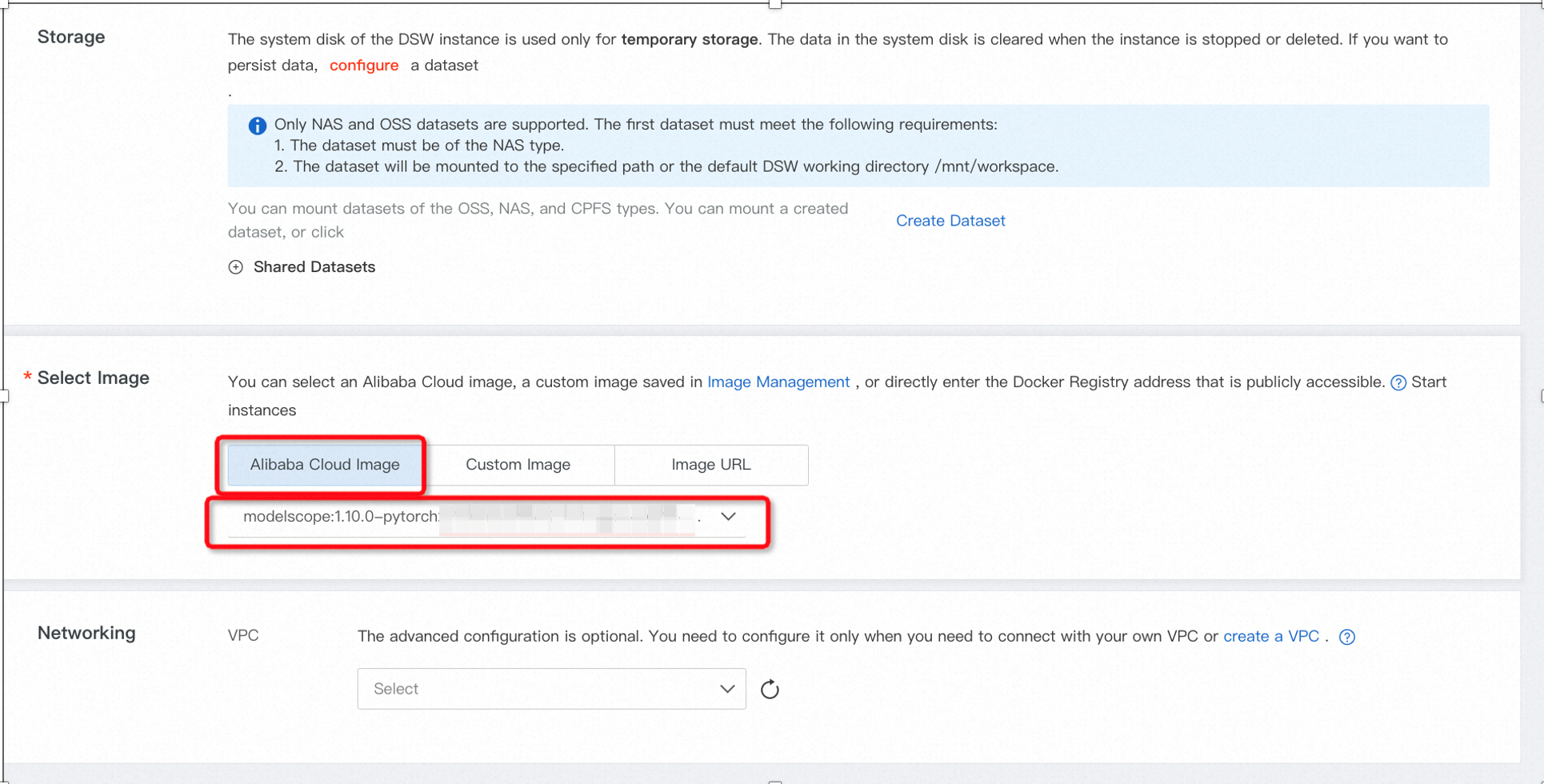

For the configuration, select suitable GPU specifications and an image type, as shown below.

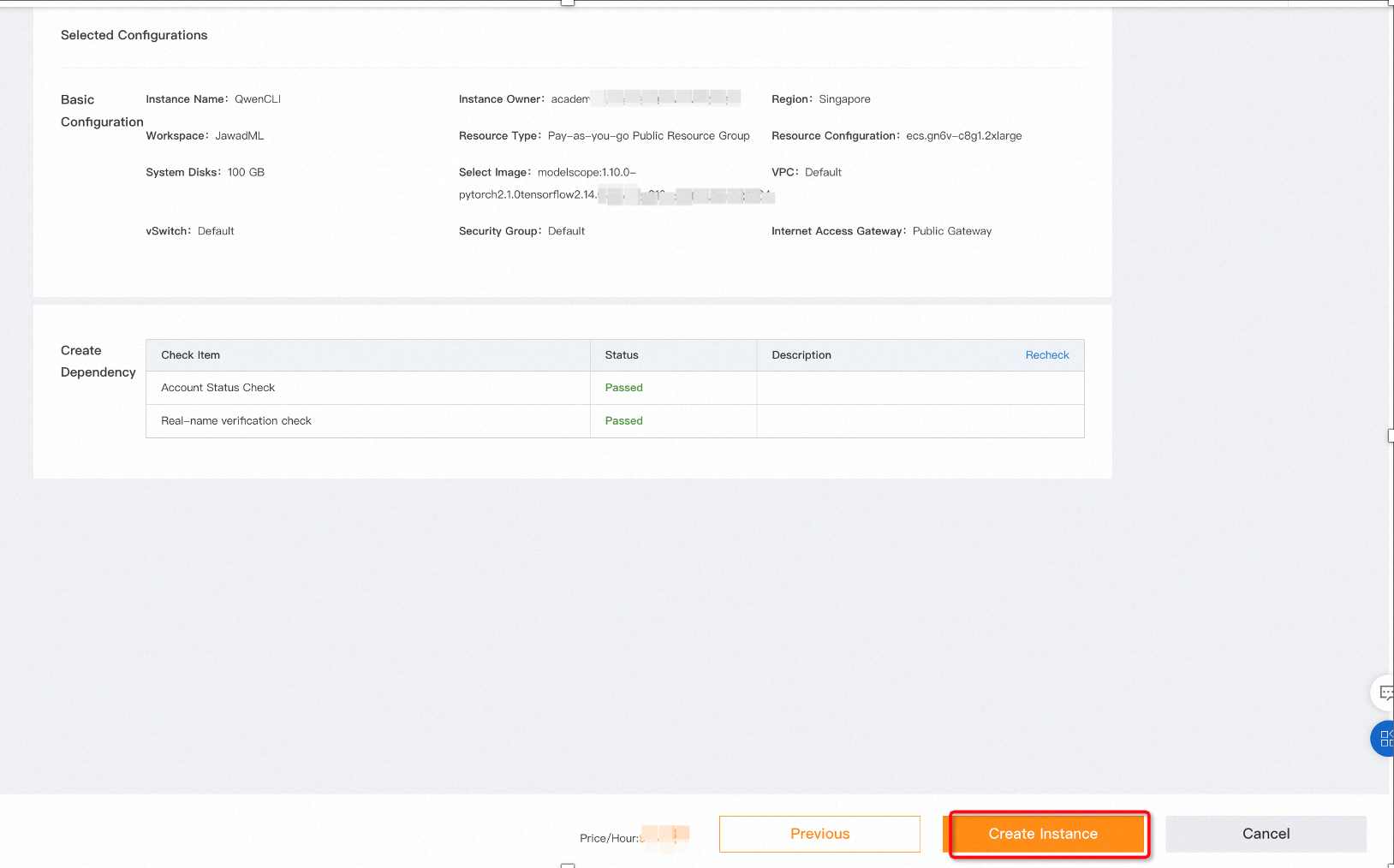

Click Next to go to the final page, then click Create Instance to start spinning up the DSW instance.

Initially, the instance status shows Preparing Resources.

2. After the instance status changes to Running, click the Turn On option on the right side. This launches JupyterLab.

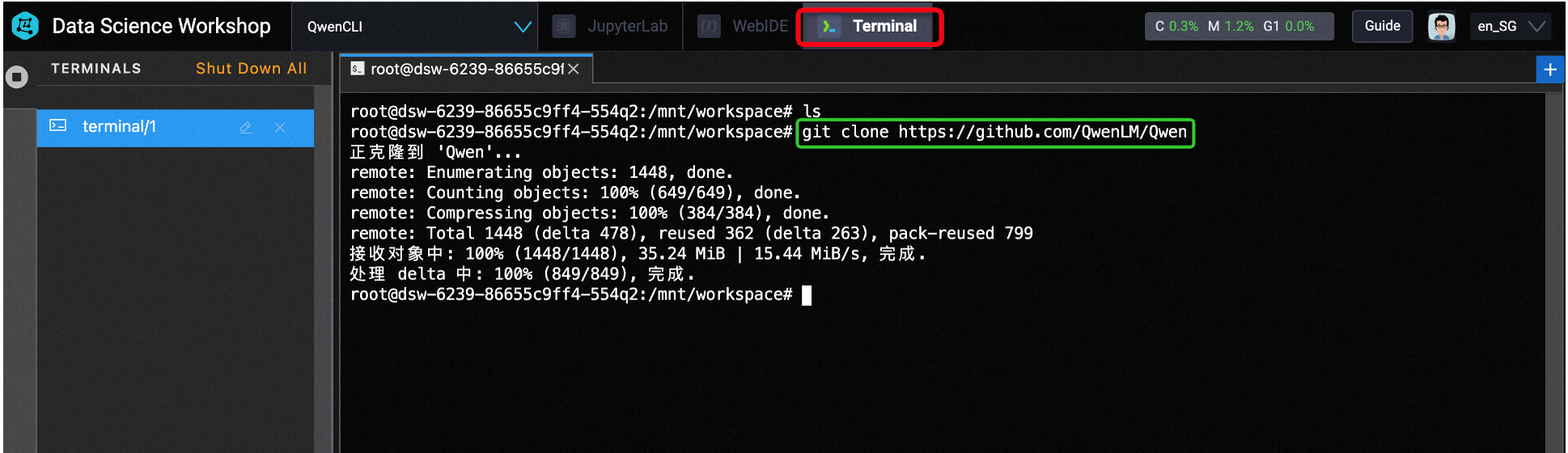

3. We will not use JupyterLab notebooks here. Instead, we will open the terminal and clone the Qwen repository from GitHub for deployment.

It is best to create a Python virtual environment first. Once the virtual environment is created, activate it and run the following commands in the terminal:

a) git clone https://github.com/QwenLM/Qwen

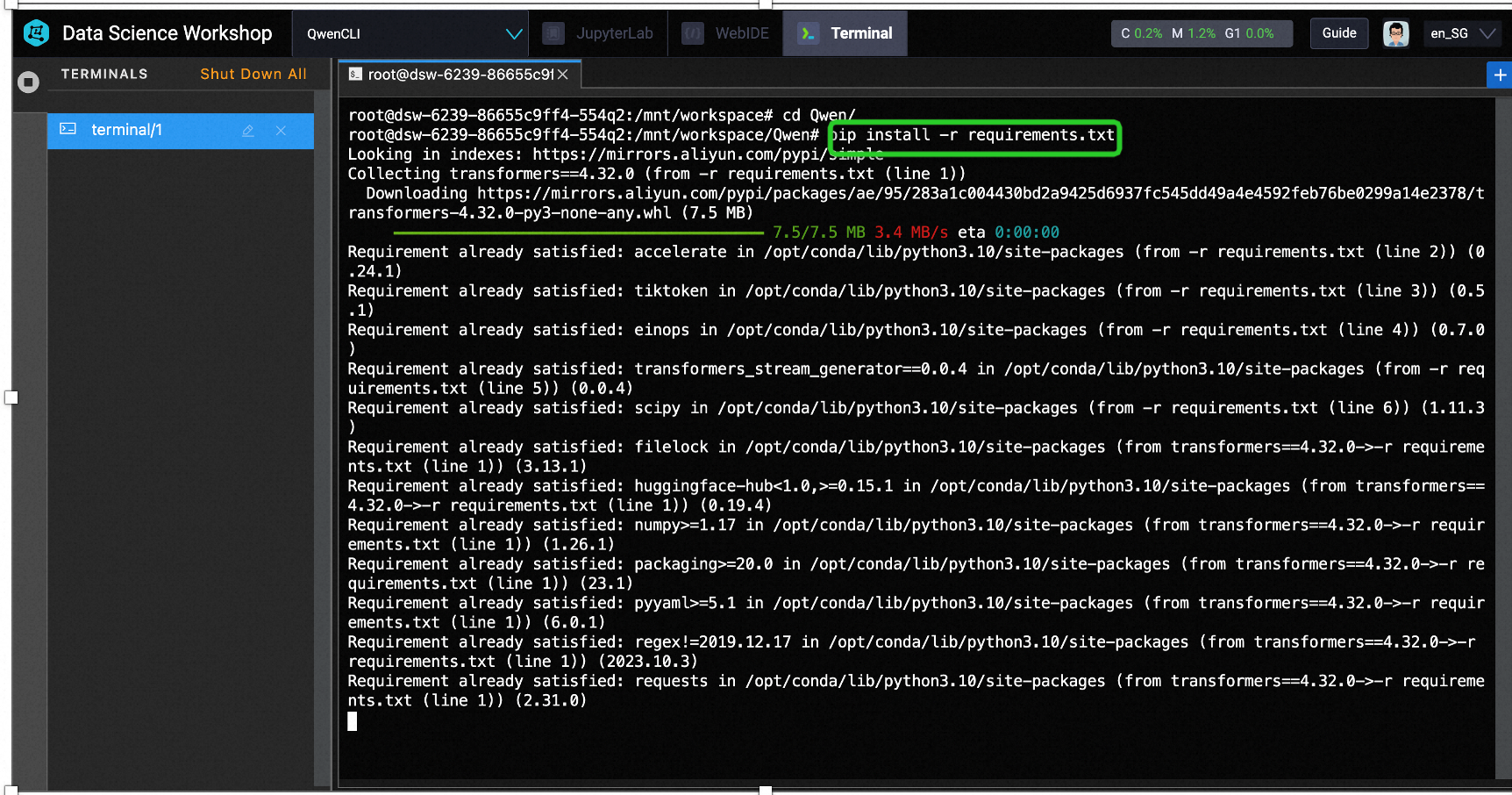

b) cd Qwen/

c) pip install -r requirements.txt

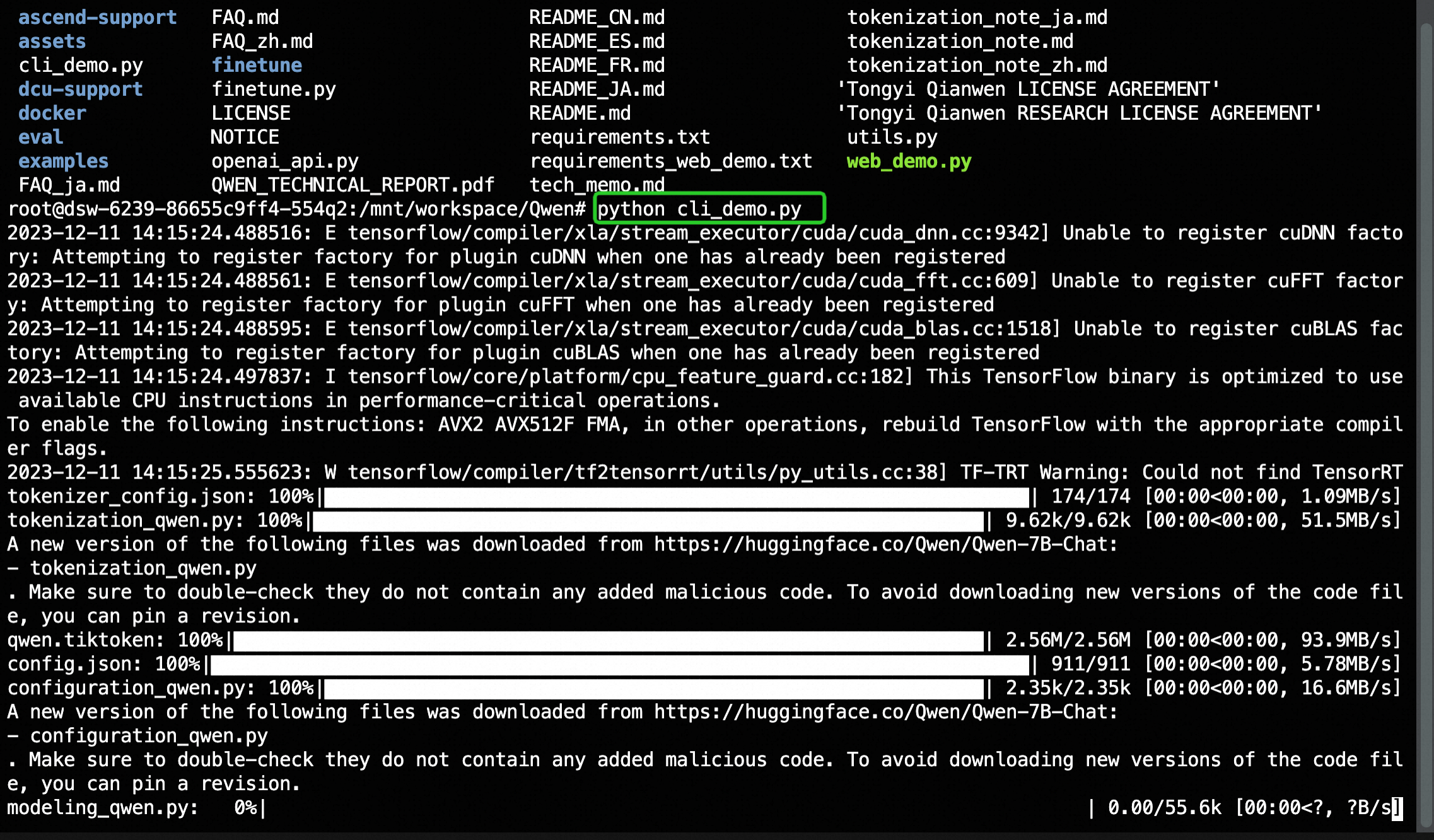

d) python3 cli_demo.py
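Steps a) to d), together with the virtual environment they run in, can be scripted. The sketch below is an illustration rather than part of the Qwen repository: the environment name qwen-env and all helper names are hypothetical, and instead of activating the environment it calls the environment's own `python`, which has the same effect for these commands. By default it only prints what it would run.

```python
import subprocess
import sys
import venv
from pathlib import Path
from typing import List, Tuple

REPO_URL = "https://github.com/QwenLM/Qwen"  # repository cloned in step a)

Step = Tuple[List[str], Path]  # (command line, working directory)


def venv_python(env_dir: Path) -> Path:
    """Interpreter inside a virtual environment (POSIX 'bin' or Windows 'Scripts' layout)."""
    if sys.platform == "win32":
        return env_dir.resolve() / "Scripts" / "python.exe"
    return env_dir.resolve() / "bin" / "python"


def setup_steps(env_dir: Path, workdir: Path) -> List[Step]:
    """Steps a) to d), calling the venv's interpreter directly instead of activating it."""
    py = str(venv_python(env_dir))
    repo = workdir / "Qwen"  # git clone names the folder after the repository
    return [
        (["git", "clone", REPO_URL], workdir),                           # a)
        ([py, "-m", "pip", "install", "-r", "requirements.txt"], repo),  # b) + c)
        ([py, "cli_demo.py"], repo),                                     # d)
    ]


def run_setup(env_dir: Path, workdir: Path, dry_run: bool = True) -> List[Step]:
    """Create the venv and run every step; with dry_run=True, only print the steps."""
    steps = setup_steps(env_dir, workdir)
    if not dry_run:
        venv.create(env_dir, with_pip=True)  # roughly: python3 -m venv <env_dir>
        workdir.mkdir(parents=True, exist_ok=True)
    for cmd, cwd in steps:
        print(f"[{cwd}] $ {' '.join(cmd)}")
        if not dry_run:
            subprocess.run(cmd, cwd=cwd, check=True)
    return steps


if __name__ == "__main__":
    run_setup(Path("qwen-env"), Path("."))  # dry run: prints the commands only
```

Running each step with `cwd` set to the cloned folder mirrors the `cd Qwen/` in step b), and `check=True` stops the sequence as soon as a command fails.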

The first run of cli_demo.py downloads the model weights, which can take a while, so grab a cup of coffee and wait patiently for it to complete.

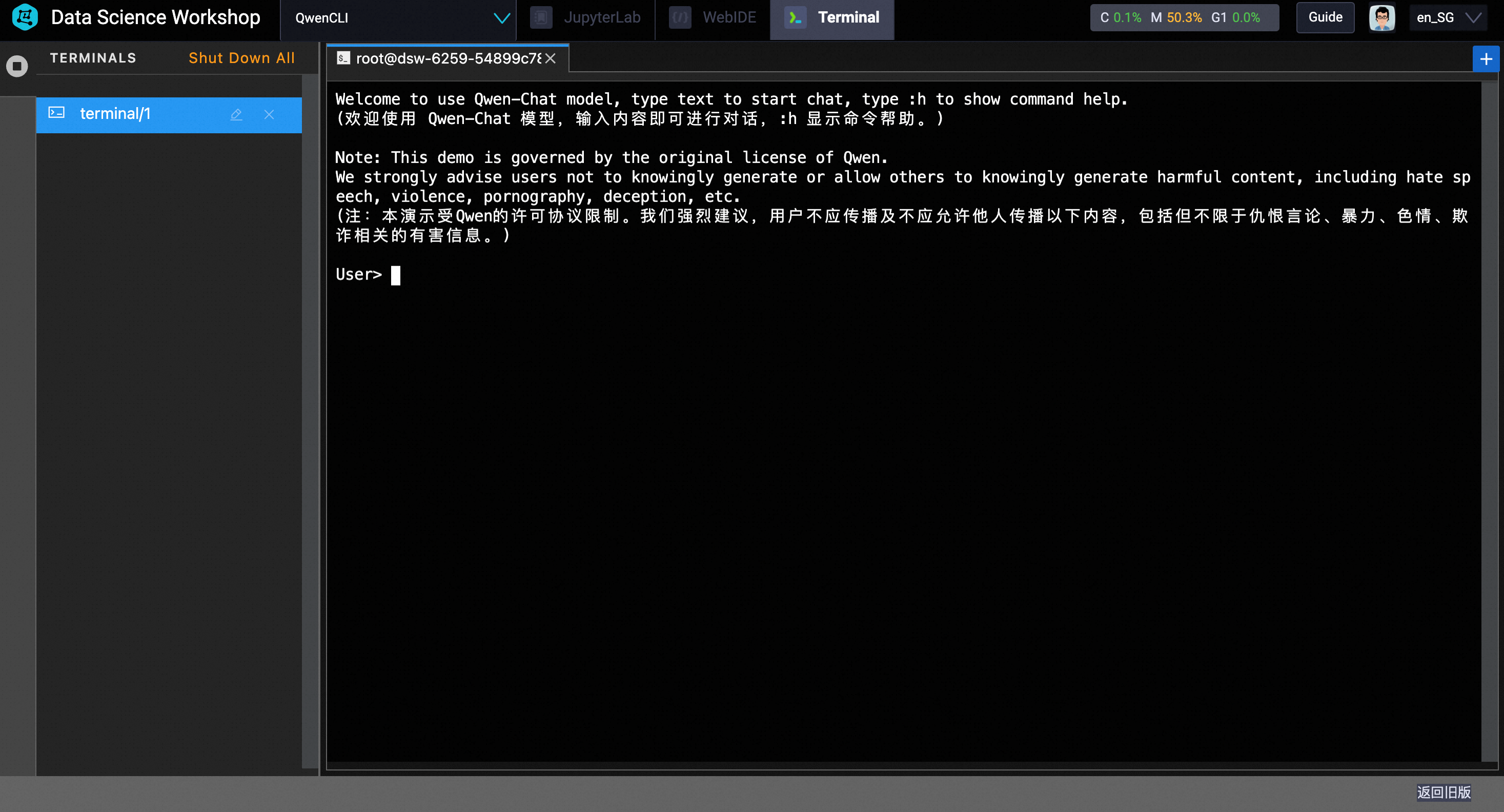

Once it completes, you will see the command-line prompt.

4. Testing the model:

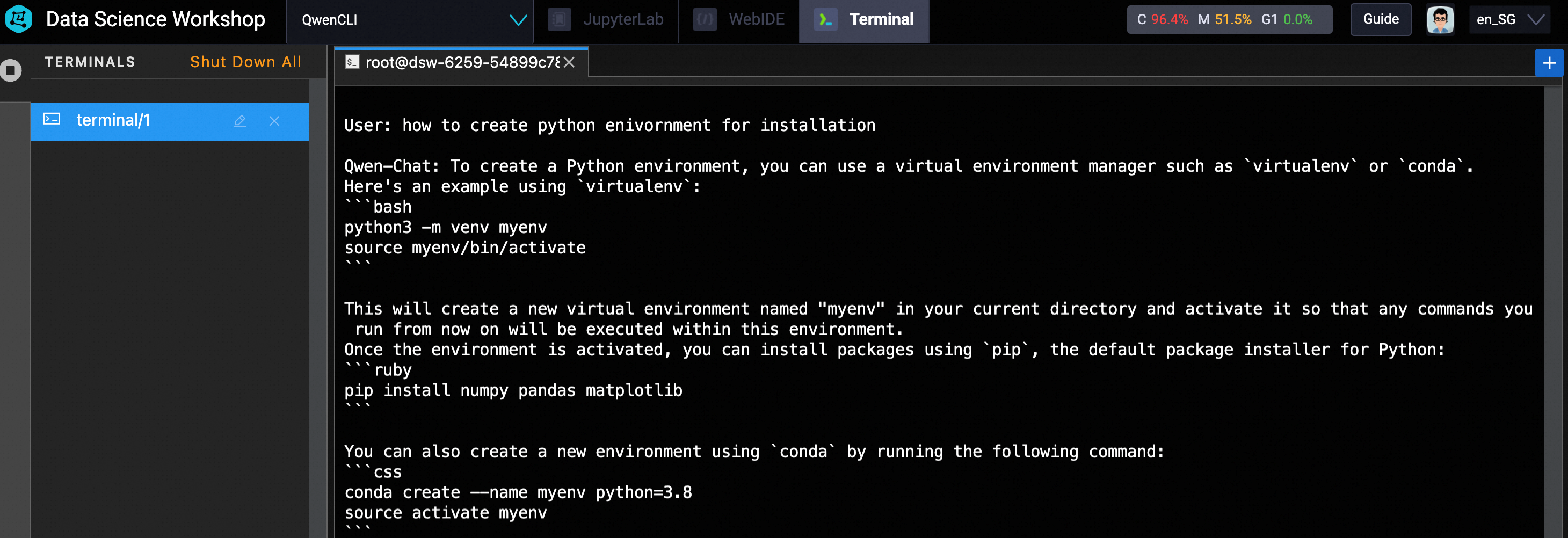

To test the model, type your text directly at the CLI prompt. In my case, I used the prompt: how to create python environment for installation.

The model produces the Python code for creating and activating a virtual environment.
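Typing questions at the prompt amounts to a read-generate-print loop that keeps the conversation history, so follow-up questions have context. The sketch below illustrates that idea only; it is not the code of cli_demo.py. reply_fn is a hypothetical stand-in for the model call, echo_model is a toy replacement for testing, and the `:q` exit command is this sketch's own convention.

```python
from typing import Callable, Iterable, List, Tuple

History = List[Tuple[str, str]]  # (question, answer) pairs


def chat_loop(lines: Iterable[str],
              reply_fn: Callable[[str, History], str]) -> History:
    """Answer each input line in turn, keeping earlier turns as history.

    reply_fn is a hypothetical model call; ':q' (this sketch's convention) stops the loop.
    """
    history: History = []
    for line in lines:
        query = line.strip()
        if not query:
            continue  # ignore empty input
        if query == ":q":
            break
        answer = reply_fn(query, history)  # the model sees all earlier turns
        print(f"User: {query}\nQwen: {answer}")
        history.append((query, answer))
    return history


def echo_model(query: str, history: History) -> str:
    """Toy reply function that just reports how many earlier turns it can see."""
    return f"({len(history)} earlier turns) you asked: {query}"


if __name__ == "__main__":
    chat_loop(["how to create python environment for installation", ":q"], echo_model)
```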

Try different questions and enjoy working with Qwen. 😀


More Posts by JwdShah