This article uses the Bloom7B1 model as an example to demonstrate the distributed inference method for large language models in Alibaba Cloud Container Service for Kubernetes (ACK).

With the increasing availability of large language models, there are now many excellent open-source models that anyone can use, and building your own application on an existing large language model is no longer out of reach. However, unlike earlier, smaller models, a large language model may not fit in the memory of a single GPU. It therefore becomes necessary to use model parallelism to split the model and run inference across multiple GPUs. In this article, we explore the deployment of a distributed inference service for large language models using DeepSpeed Inference.
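To make the memory argument concrete, here is a rough, weights-only estimate in Python. It is a back-of-the-envelope sketch, not a measurement: the parameter count of about 7.1 billion is an assumption taken from the model name, and the helper `weight_memory_gib` is a hypothetical name introduced for illustration. Activations, the KV cache, and framework overhead are ignored, so real usage will be higher.

```python
# Weights-only memory estimate (ignores activations, KV cache, and runtime overhead).
PARAMS = 7.1e9  # assumption: approximate parameter count of Bloom 7B1
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gib(params: float, dtype: str, tensor_parallel_degree: int = 1) -> float:
    """Approximate per-GPU weight footprint in GiB, assuming an even split."""
    total_bytes = params * BYTES_PER_PARAM[dtype]
    return total_bytes / tensor_parallel_degree / 2**30


for dtype in ("fp32", "fp16"):
    for degree in (1, 2):
        gib = weight_memory_gib(PARAMS, dtype, degree)
        print(f"{dtype}, tensor_parallel_degree={degree}: {gib:.1f} GiB per GPU")
```

Under these assumptions the fp16 weights alone come to roughly 13 GiB, and splitting them evenly across two GPUs halves the per-GPU share. In practice the split is not perfectly even, because some tensors may be replicated on every GPU.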

DeepSpeed Inference is a distributed inference solution provided by Microsoft which supports large transformer-type language models. DeepSpeed Inference offers model parallelism to enable parallel inference across multiple GPUs for large models. By utilizing tensor parallelism, it becomes possible to leverage multiple GPUs simultaneously and improve inference performance. Additionally, DeepSpeed provides optimized custom inference kernels to enhance GPU resource utilization and reduce inference latency. For more information, please refer to DeepSpeed Inference [3].
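The idea behind tensor parallelism can be illustrated with a toy example. The sketch below is purely conceptual and is not how DeepSpeed is implemented: it simulates two "ranks" in plain Python, splits the weight matrix of a linear layer column-wise, lets each rank compute its slice of the output, and concatenates the slices. The function names are hypothetical. In a real system each shard lives on a different GPU and the concatenation is a collective communication step.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product x @ w."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)] for row in x]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split the weight matrix column-wise into `parts` equal shards."""
    cols = len(w[0])
    assert cols % parts == 0, "output dimension must divide evenly"
    step = cols // parts
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(parts)]


def column_parallel_linear(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Each simulated rank multiplies by its own shard; the slices are then concatenated."""
    partials = [matmul(x, shard) for shard in split_columns(w, parts)]  # one result per rank
    return [sum((p[r] for p in partials), []) for r in range(len(x))]   # gather along columns


x = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0]]

# The sharded computation matches the unsharded one.
assert column_parallel_linear(x, w, parts=2) == matmul(x, w)
```

Each rank only needs to hold its own shard of the weights, which is what allows a model that is too large for one GPU to be served across several.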

Even with the availability of a distributed inference solution for large models, there are still several engineering challenges to efficiently deploy large model inference services in Kubernetes clusters. These challenges include rapid deployment of an inference service, ensuring that resources can handle fluctuating page views post-launch, and the absence of suitable tools to monitor key metrics such as inference service latency, throughput, GPU utilization, and memory usage. Furthermore, model splitting schemes and model version management methods need to be established.

This article describes the implementation of DeepSpeed distributed inference using the ACK cloud-native AI suite. This enables easy management of large-scale heterogeneous resources, refined GPU scheduling policies, and comprehensive GPU monitoring and alerting capabilities. Additionally, Arena can be utilized to submit and manage auto-scaling inference services, facilitating service-based operations and maintenance.

In this example, the following components will be used:

In the following example, Arena deploys a standalone multi-GPU distributed inference service based on the Bloom7B1 model in a Kubernetes cluster. DJLServing is used as the model service framework. DJLServing is a high-performance general model service solution supported by Deep Java Library (DJL). It directly supports DeepSpeed Inference and provides large model inference services through HTTP. For more information, see DJLServing [4]. In this setup, Arena submits the inference task, a Kubernetes Deployment runs the inference service, models and configuration files are loaded from shared OSS storage, and the service is exposed through a Kubernetes Service. The cloud-native AI suite also offers functions such as auto scaling, GPU sharing and scheduling, performance monitoring, and cost analysis and optimization, which can help reduce your operations and maintenance costs.

Next, we demonstrate how to use the Arena command-line tool to submit a single-server, multi-GPU distributed inference task for the Bloom7B1 model in ACK, and how to configure an Ingress to access the service.

The model configuration includes the following two aspects:

Configuration file, which corresponds to the serving.properties file in this example. It describes the model configuration information. You need to focus on two parameters:

Inference logic file. It is used to load models and process requests. The details are as follows:

The content of serving.properties is as follows:

engine=DeepSpeed
option.parallel_loading=true
option.tensor_parallel_degree=2
option.model_loading_timeout=600
option.model_id=model/LLM/bloom-7b1/deepspeed/bloom-7b1
option.data_type=fp16
option.max_new_tokens=100

The model_id here is the path of the model inside the container after the PVC is mounted. If the model has not been downloaded in advance, model_id can be set to bigscience/bloom-7b1 instead, and the program will download the model automatically (the model files total about 15 GB).

The content of model.py is as follows:

import os
import torch
from typing import Optional
import deepspeed
import logging

logging.basicConfig(format='[%(asctime)s] %(filename)s %(funcName)s():%(lineno)i [%(levelname)s] %(message)s', level=logging.DEBUG)

from djl_python.inputs import Input
from djl_python.outputs import Output
from transformers import pipeline, AutoModelForCausalLM, AutoTokenizer

# Text-generation pipeline, created lazily on the first call to handle()
predictor = None


def get_model(properties: dict):
    model_dir = properties.get("model_dir")
    model_id = properties.get("model_id")
    # Tensor parallel degree: the number of GPUs the model is split across
    mp_size = int(properties.get("tensor_parallel_degree", "2"))
    # Local rank of this worker process, set by MPI
    local_rank = int(os.getenv('OMPI_COMM_WORLD_LOCAL_RANK', '0'))
    logging.info(f"process [{os.getpid()} rank is [{local_rank}]]")
    # Fall back to the model directory when no model_id is configured
    if not model_id:
        model_id = model_dir
    logging.info(f"rank[{local_rank}] start load model")
    model = AutoModelForCausalLM.from_pretrained(model_id)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    logging.info(f"rank[{local_rank}] success load model")
    # Shard the model across GPUs and inject the DeepSpeed inference kernels
    model = deepspeed.init_inference(model,
                                     mp_size=mp_size,
                                     dtype=torch.float16,
                                     replace_method='auto',
                                     replace_with_kernel_inject=True)
    logging.info(f"rank[{local_rank}] success to convert model to deepspeed kernel")
    return pipeline(task='text-generation',
                    model=model,
                    tokenizer=tokenizer,
                    device=local_rank)


def handle(inputs: Input) -> Optional[Output]:
    global predictor
    if not predictor:
        predictor = get_model(inputs.get_properties())
    if inputs.is_empty():
        # Model server makes an empty call to warmup the model on startup
        return None
    data = inputs.get_as_string()
    output = Output()
    output.add_property("content-type", "application/json")
    result = predictor(data, do_sample=True, max_new_tokens=50)
    return output.add(result)

Upload the serving.properties, model.py, and optional model files to OSS. For more information, see Upload Files in the Console [7].
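Before uploading, it can be useful to check that serving.properties is consistent with the number of GPUs the service will request. The following is a minimal sketch under stated assumptions: `parse_properties` and `check_config` are hypothetical helpers written for this article, not part of DJLServing or Arena, and the parser only handles simple key=value lines.

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_config(props: dict, gpus: int) -> list:
    """Return a list of human-readable problems (empty when none are found)."""
    problems = []
    degree = int(props.get("option.tensor_parallel_degree", "1"))
    if degree > gpus:
        problems.append(f"tensor_parallel_degree={degree} exceeds {gpus} GPU(s)")
    return problems


# Same content as the serving.properties file shown in this article.
SERVING_PROPERTIES = """\
engine=DeepSpeed
option.parallel_loading=true
option.tensor_parallel_degree=2
option.model_loading_timeout=600
option.model_id=model/LLM/bloom-7b1/deepspeed/bloom-7b1
option.data_type=fp16
option.max_new_tokens=100
"""

props = parse_properties(SERVING_PROPERTIES)
print(check_config(props, gpus=2))
```

With two GPUs requested the check reports no problems. Requesting fewer GPUs than the tensor parallel degree would be flagged.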

After the files are uploaded to OSS, create a PV and a PVC named bloom7b1-pv and bloom7b1-pvc to mount to the container of the inference service. For more information, see Use OSS Static Volumes [8].

Put the configuration file information into the PVC. You can run the following arena command to start the inference service.

arena serve custom \
--name=bloom7b1-deepspeed \
--gpus=2 \
--version=alpha \
--replicas=1 \
--restful-port=8080 \
--data=bloom7b1-pvc:/model \
--image=ai-studio-registry-vpc.cn-beijing.cr.aliyuncs.com/kube-ai/djl-serving:2023-05-19 \
"djl-serving -m "

--gpus: Set this value to 2, which indicates that two GPUs are required for distributed inference.

--data: bloom7b1-pvc is the PVC created in the previous step, and /model is the path at which the PVC is mounted in the container.

View the task running status.

$ kubectl get pod | grep bloom7b1-deepspeed-alpha-custom-serving

bloom7b1-deepspeed-alpha-custom-serving-766467967d-j8l2l 1/1 Running 0 8s

View the startup logs.

kubectl logs bloom7b1-deepspeed-alpha-custom-serving-766467967d-j8l2l -f

The service startup logs are as follows. They show the model being loaded in MPI mode with a tensor parallel degree of 2, with one process per rank:

INFO ModelServer Starting model server ...

INFO ModelServer Starting djl-serving: 0.23.0-SNAPSHOT ...

INFO ModelServer

INFO PyModel Loading model in MPI mode with TP: 2.

INFO PyProcess [1,0]<stdout>:process [92 rank is [0]]

INFO PyProcess [1,0]<stdout>:rank[0] start load model

INFO PyProcess [1,1]<stdout>:process [93 rank is [1]]

INFO PyProcess [1,1]<stdout>:rank[1] start load model

INFO PyProcess [1,0]<stdout>:rank[0] success to convert model to deepspeed kernel

INFO PyProcess [1,1]<stdout>:rank[1] success to convert model to deepspeed kernel

INFO PyProcess [1,0]<stdout>:rank[0] success load model

INFO PyProcess [1,1]<stdout>:rank[1] success load model

INFO PyProcess Model [deepspeed] initialized.

INFO PyProcess Model [deepspeed] initialized.

INFO PyModel deepspeed model loaded in 297083 ms.

INFO ModelServer Initialize BOTH server with: EpollServerSocketChannel.

INFO ModelServer BOTH API bind to: http://0.0.0.0:8080

Here we start a port-forward for quick verification.

# Use kubectl to start port-forward.

kubectl -n default-group port-forward svc/bloom7b1-deepspeed-alpha 9090:8080

In another terminal, send a request to the service.

# Open a new terminal and run the following command.

$ curl -X POST http://127.0.0.1:9090/predictions/deepspeed -H "Content-type: text/plain" -d "I'm very thirsty, I need"

[

{

"generated_text":"I'm very thirsty, I need some water.\nWhat are you?\n- I'm a witch.\n- I thought you'd say that.\nI know a great witch.\nShe's right in here.\n- You know where we can go?\n- That's right, in one moment.\n- You want to"

}

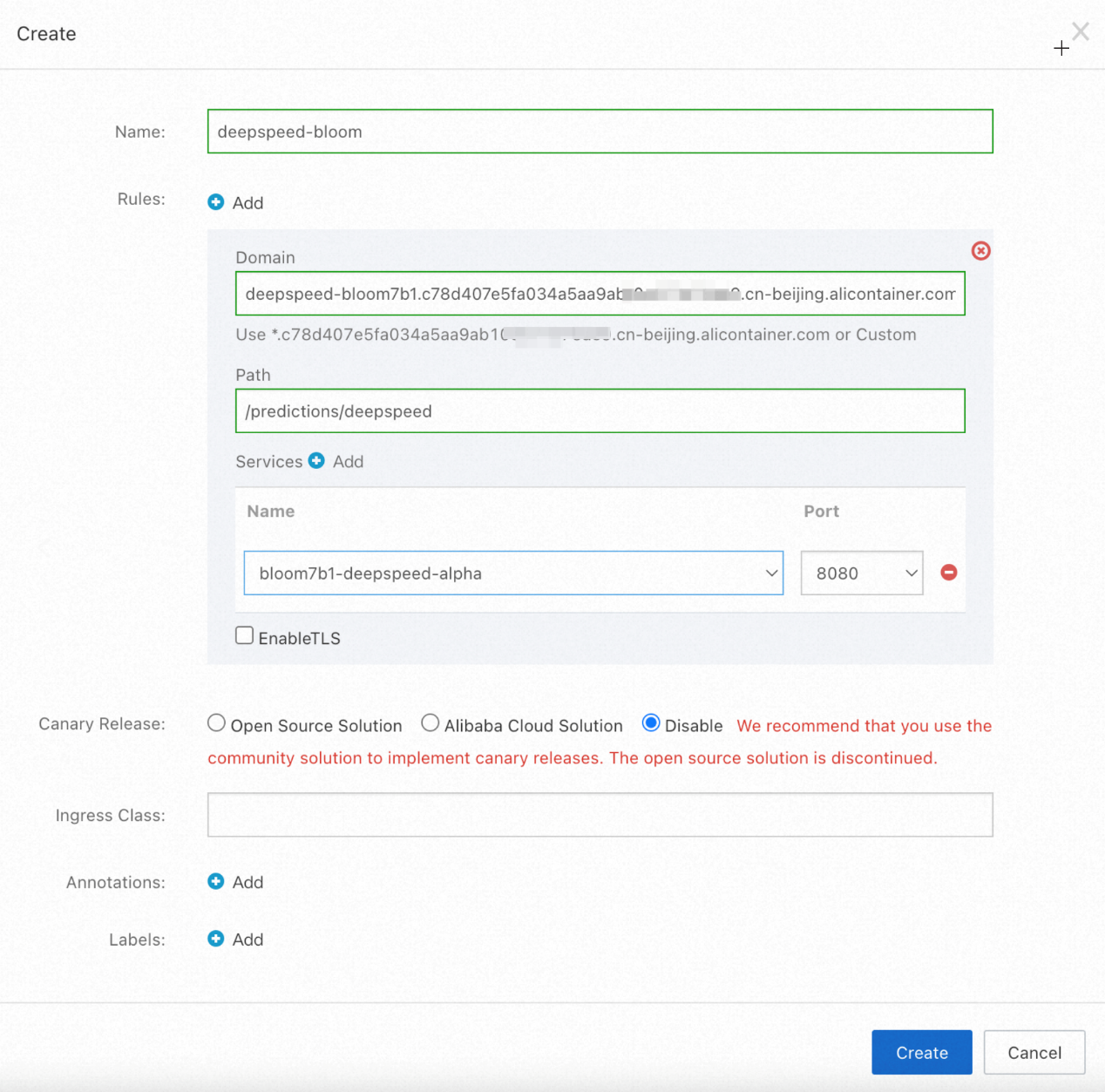

]You can configure an Ingress to communicate model services to the outside to manage external traffic and ensure model availability. The Ingress configuration process for the service created above is as follows:

For more information about how to configure an Ingress, see Create an NGINX Ingress [9].

On the page that appears, set the following parameters:

After the Ingress is created, you can use the domain name configured for the Ingress to access the Bloom model.

% curl -X POST http://deepspeed-bloom7b1.c78d407e5fa034a5aa9ab10e577e75ae9.cn-beijing.alicontainer.com/predictions/deepspeed -H "Content-type: text/plain" -d "I'm very thirsty, I need"

[

{

"generated_text":"I'm very thirsty, I need to drink!\nI want more water.\nWhere is the water?\nLet me have the water, let me have the water...\nWait!\nYou're the father aren't you?\nDo you have water?\nAre you going to let me have some?\nGive me the"

}

]

The above example demonstrates how to use Arena to deploy a single-machine, multi-GPU inference service for the Bloom7B1 model, using the model parallelism of DeepSpeed Inference to run inference across multiple GPUs. In addition to DeepSpeed Inference, there are other distributed inference solutions for large models, such as FasterTransformer + Triton. Moving forward, we will continue to explore how to combine the cloud-native AI suite with distributed inference solutions for large models. Our goal is to provide high-performance, low-latency, and auto-scaling large model inference services at a lower cost.
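The curl requests shown earlier can also be issued from Python. The following sketch uses only the standard library and mirrors the curl command: a POST to /predictions/deepspeed with a text/plain body. The function names `build_request` and `generate` are hypothetical, and the response handling assumes the reply has the same shape as the sample output in this article (a JSON list whose first element contains generated_text).

```python
import json
import urllib.request


def build_request(base_url: str, prompt: str, model: str = "deepspeed") -> urllib.request.Request:
    """Build the same POST request as the curl command, without sending it."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/predictions/{model}",
        data=prompt.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def generate(base_url: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt and return the first generated_text from the JSON reply."""
    with urllib.request.urlopen(build_request(base_url, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body[0]["generated_text"]


# Example usage, assuming the port-forward from this article is running locally:
# print(generate("http://127.0.0.1:9090", "I'm very thirsty, I need"))
```

To call the service through the Ingress instead, pass the Ingress domain name as base_url.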

[1] Guide of Cloud-native AI Suite for Developers

https://www.alibabacloud.com/help/en/doc-detail/336968.html?spm=a2c4g.212117.0.0.14a47822tIePxy

[2] Ingress Overview

https://www.alibabacloud.com/help/en/doc-detail/198892.html?spm=a2c4g.181477.0.0.67d5225chicJHP

[3] DeepSpeed Inference

https://www.deepspeed.ai/tutorials/inference-tutorial/

[4] DJLServing

https://github.com/deepjavalibrary/djl-serving

[5] Create a Managed GPU Cluster

https://www.alibabacloud.com/help/en/doc-detail/171074.html?spm=a2c4g.171073.0.0.4c78f95a00Mb5P

[6] Install Cloud-native AI Suite

https://www.alibabacloud.com/help/en/doc-detail/201997.html?spm=a2c4g.212117.0.0.115b1cb6yDEAjy

[7] Upload OSS Files in the Console

https://www.alibabacloud.com/help/en/doc-detail/31886.htm?spm=a2c4g.276055.0.0.528e663f4mIHH9#concept-zx1-4p4-tdb

[8] Use OSS Static Volumes

https://www.alibabacloud.com/help/en/doc-detail/134903.html?spm=a2c4g.134903.0.0.132a4e96wLxEPu

[9] Create an NGINX Ingress

https://www.alibabacloud.com/help/en/doc-detail/86536.html?spm=a2c4g.198892.0.0.3acd663fsFwQPY
