Recently, several papers led by the Alibaba Cloud Machine Learning Platform for AI (PAI) team were accepted to the ACL 2023 Industry Track. ACL is a top international conference in natural language processing (NLP), focusing on research into NLP technology across application scenarios. The conference has driven core innovations in pre-trained language models, text mining, dialogue systems, machine translation, and other areas of NLP, and it has had a major impact in both academia and industry.

The papers are the joint work of Alibaba Cloud PAI, the Alibaba International Business Department, a training project jointly run by Alibaba Cloud and the South China University of Technology, and the team of Professor Yanghua Xiao at Fudan University. Their acceptance indicates that the natural language processing and multi-modal algorithms developed by PAI, together with its algorithm framework, have reached an advanced level in the global industry and earned recognition from international scholars. It also showcases the innovation and worldwide competitiveness of China's artificial intelligence technology.

Image-text retrieval is a popular cross-modal task with great practical value in a wide range of industrial applications. The rapid development of vision-language pre-training (VLP) models has improved representation learning across modalities, resulting in significant performance gains. However, data in the e-commerce field has its own characteristics:

1) Text in the general domain mostly consists of descriptions with a complete sentence structure, while descriptions and queries in e-commerce usually consist of multiple descriptive phrases covering the material, style, and other details of the product.

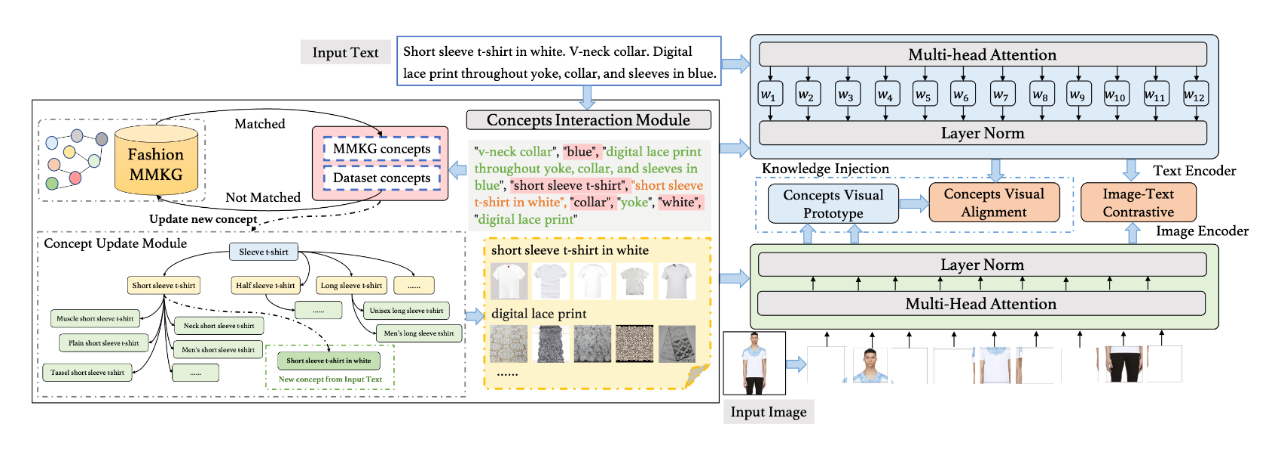

2) Images in the general domain usually have complex backgrounds, whereas product images mainly show a single large product without background objects.

Based on these observations, the paper proposes FashionKLIP, a VLP model enhanced with e-commerce knowledge. It has two parts. The first is a data-driven construction strategy that builds a multi-modal e-commerce concept knowledge graph (FashionMMKG) from a large-scale e-commerce image-text corpus. The second is a knowledge-integrated training strategy that learns representation alignment between the two modalities from image-text pairs and further obtains concept alignment by matching text representations with the visual prototype representations of fashion concepts in FashionMMKG.
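The two alignment objectives can be illustrated with a minimal Python sketch. It is not the paper's implementation: it assumes a CLIP-style contrastive loss for both terms, and the function names, the `concept_weight` parameter, the temperature value, and the toy embeddings are all hypothetical.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def contrastive_loss(queries, candidates, targets, temperature=0.07):
    """Mean cross-entropy of picking candidates[targets[i]] for queries[i]."""
    queries = [normalize(q) for q in queries]
    candidates = [normalize(c) for c in candidates]
    total = 0.0
    for query, target in zip(queries, targets):
        logits = [sum(a * b for a, b in zip(query, c)) / temperature for c in candidates]
        peak = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += log_sum - logits[target]
    return total / len(queries)


def alignment_loss(image_embs, text_embs, prototypes, concept_ids, concept_weight=1.0):
    """Image-text alignment plus text-to-prototype concept alignment (assumed form)."""
    pairs = list(range(len(image_embs)))  # the i-th image matches the i-th text
    image_text = 0.5 * (
        contrastive_loss(image_embs, text_embs, pairs)
        + contrastive_loss(text_embs, image_embs, pairs)
    )
    # Each text should be closest to the visual prototype of its own concept.
    concept = contrastive_loss(text_embs, prototypes, concept_ids)
    return image_text + concept_weight * concept


# Toy batch: two products, two fashion concepts, 2-D embeddings (all hypothetical).
images = [[1.0, 0.1], [0.1, 1.0]]
texts = [[0.9, 0.0], [0.0, 0.8]]
prototypes = [[1.0, 0.0], [0.0, 1.0]]  # one visual prototype per concept
print(alignment_loss(images, texts, prototypes, concept_ids=[0, 1]))
```

In this sketch the loss is small when each text sits close to both its paired image and the prototype of its concept, and it grows when the pairs are shuffled.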

To verify the practicality of FashionKLIP, we applied it to the product search platform of the Alibaba International Business Department and evaluated it in a zero-shot setting on two retrieval subtasks: image-to-product and text-to-product. Compared with the baseline method CLIP, the experimental results further demonstrate the practical value and efficiency of FashionKLIP.
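For readers unfamiliar with embedding-based retrieval, the sketch below shows how a query embedding can be ranked against product embeddings by cosine similarity. It is a toy illustration, not the production search system: in practice the vectors would come from the image and text encoders, while here the product names and numbers are made up.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def retrieve(query_emb, product_embs, top_k=3):
    """Return the ids of the top_k products most similar to the query."""
    scored = [(cosine(query_emb, emb), pid) for pid, emb in product_embs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [pid for _, pid in scored[:top_k]]


# Hypothetical product embeddings; a real system would precompute and index them.
products = {
    "dress": [0.9, 0.1, 0.0],
    "sneaker": [0.0, 0.2, 0.9],
    "skirt": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # embedding of a text or image query (made up)
print(retrieve(query, products, top_k=2))  # ['dress', 'skirt']
```

Because no retrieval-specific fine-tuning is involved, the same ranking step serves both the image-to-product and the text-to-product subtasks. Only the encoder that produces the query embedding changes.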

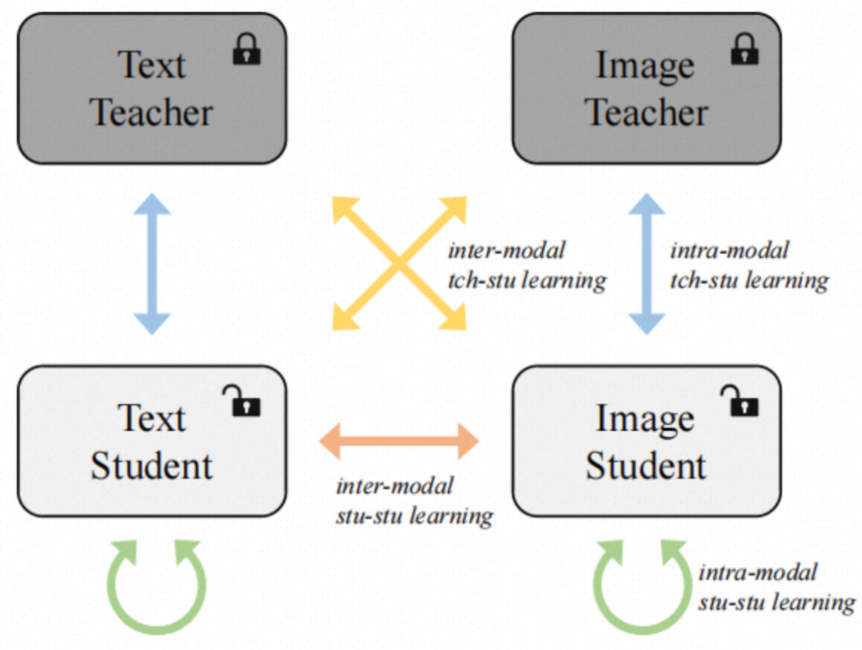

The goal of text-image retrieval is to retrieve a list of the most relevant images from a large image collection given a specific text query. With the rapid development of information interaction and social scenarios, this task has become a key component of cross-modal applications and is required in various real-world scenarios, such as e-commerce platforms and websites. However, existing models such as CLIP are still impractical on edge devices with limited computing resources and in dynamic indexing scenarios, such as private photo or message collections. To solve this problem, we start from a large-scale pre-trained dual-encoder model and focus on distillation during the pre-training stage of the small model to obtain a series of smaller, faster, and more effective lightweight models. Unlike existing work, our approach introduces a fully-connected knowledge interaction graph for distillation in the pre-training phase. In addition to intra-modal teacher-student interactive learning, our method includes intra-modal student-student, inter-modal teacher-student, and inter-modal student-student interactive learning, as shown in the following figure:

This fully-connected graph for the student network can be seen as an integration of multi-view and multi-task learning schemes, which enhances the robustness and effectiveness of the pre-trained model. We also propose that the effects of different supervision strategies should be tested in detail for each type of learning process. We applied the proposed technique to an end-to-end cross-modal retrieval scenario on an e-commerce platform, and the results show a significant reduction in model storage and an increase in computational efficiency while performance is maintained.
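The four interaction types can be enumerated with a short Python sketch. It rests on several assumptions that are not from the paper: the helper names are hypothetical, teacher and student embeddings are taken to be already projected to the same dimension, and a single InfoNCE-style loss is used on every edge, whereas the paper examines different supervision strategies per edge type.

```python
import math
from itertools import combinations_with_replacement, product

TEACHERS = [("teacher", "image"), ("teacher", "text")]
STUDENTS = [("student", "image"), ("student", "text")]


def interaction_edges():
    """Every pairing of encoders that involves at least one student encoder."""
    teacher_student = list(product(TEACHERS, STUDENTS))
    student_student = list(combinations_with_replacement(STUDENTS, 2))
    return teacher_student + student_student


def describe(edge):
    """Name an edge by modality scope and the networks it connects."""
    (net_a, mod_a), (net_b, mod_b) = edge
    scope = "intra-modal" if mod_a == mod_b else "inter-modal"
    return f"{scope} {net_a}-{net_b}"


def info_nce(anchors, targets, temperature=0.07):
    """Cross-entropy of matching anchors[i] to targets[i] within the batch."""
    def unit(vec):
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec]

    anchors = [unit(a) for a in anchors]
    targets = [unit(t) for t in targets]
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [sum(a * b for a, b in zip(anchor, t)) / temperature for t in targets]
        peak = max(logits)  # stabilise the log-sum-exp
        total += peak + math.log(sum(math.exp(x - peak) for x in logits)) - logits[i]
    return total / len(anchors)


def interaction_loss(embeddings):
    """Sum one loss per edge; embeddings maps (network, modality) to a batch of vectors."""
    # Assumption: the second node of each edge (always a student) is the learner.
    return sum(info_nce(embeddings[b], embeddings[a]) for a, b in interaction_edges())


# Toy batch of two image-text pairs with 2-D embeddings (made-up numbers).
batch = {
    ("teacher", "image"): [[1.0, 0.0], [0.0, 1.0]],
    ("teacher", "text"): [[0.9, 0.1], [0.1, 0.9]],
    ("student", "image"): [[0.8, 0.2], [0.3, 0.7]],
    ("student", "text"): [[0.7, 0.3], [0.2, 0.8]],
}
for edge in interaction_edges():
    print(describe(edge), edge)
print(interaction_loss(batch))
```

Enumerating the edges this way yields seven encoder pairings that fall into the four interaction types named above. Teacher-teacher pairings are left out because the teacher is not trained.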

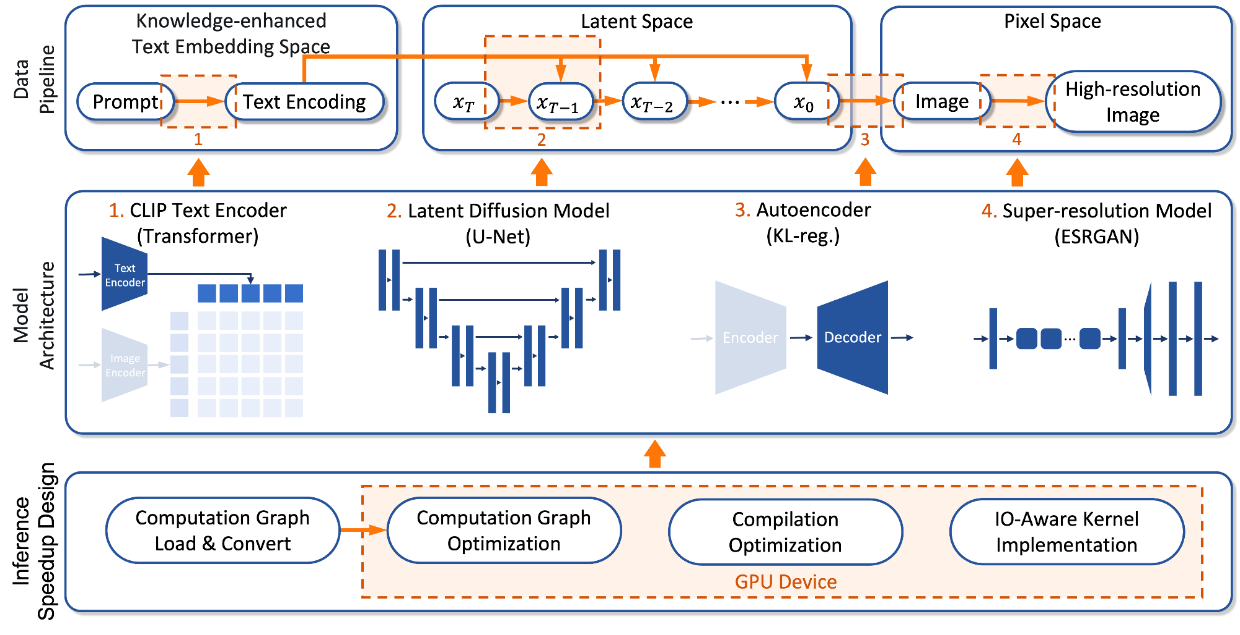

Text-to-Image Synthesis (TIS) is the technology of generating images from text input: given a text instruction, a program generates an image that matches the description. However, the popular text-to-image models in the open-source community are difficult to apply in specific industrial domains, because pre-trained language models lack domain-specific entity knowledge and diffusion models have limited inference speed.

Diffusion-based methods encode the input text with a pre-trained text encoder and feed the result as a conditional input to the UNet of the diffusion model. However, current text encoders pre-trained on text-image pairs collected online have difficulty understanding specific entity concepts and capturing entity-specific knowledge, which is essential for generating realistic images of entities. Inference speed and computational cost also matter: the iterative denoising of the reverse diffusion process has always been the bottleneck of diffusion model inference.

We propose a new framework for training and deploying text-to-image diffusion models. The model architecture is shown in the following figure. We inject rich entity knowledge into CLIP's text encoder, using knowledge graphs for knowledge enhancement, to improve its understanding of specific entities. Unlike the open-source Stable Diffusion, which directly uses the large-scale hierarchical diffusion model, we add an ESRGAN-based network after the image diffusion module to raise the resolution of the generated image while avoiding parameter explosion and long running times.

For online deployment, we designed an efficient inference process based on a neural architecture optimized with FlashAttention. The Intermediate Representation (IR) of the model's computation graph is further processed by the end-to-end AI compiler BladeDISC to improve inference speed.
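The overall shape of such a pipeline (text encoding, iterative denoising at low resolution, then a single upscaling pass) can be sketched in Python. Every function below is a toy stand-in with a hypothetical name: `encode_text` is not a real text encoder, `denoise_step` is not a UNet, and `upscale` uses nearest-neighbor repetition in place of an ESRGAN-based network. The sketch only illustrates why keeping the iterative loop at low resolution limits its cost.

```python
import random


def encode_text(prompt, dim=8):
    """Stand-in for a knowledge-enhanced text encoder: a deterministic pseudo-embedding."""
    rng = random.Random(prompt)
    return [rng.uniform(-1, 1) for _ in range(dim)]


def denoise_step(image, condition, step, total_steps):
    """Stand-in for one denoising step: pull pixels toward a condition-dependent value."""
    target = sum(condition) / len(condition)
    rate = 1.0 / (total_steps - step)
    return [[px + rate * (target - px) for px in row] for row in image]


def upscale(image, factor):
    """Stand-in for a super-resolution network: nearest-neighbor upsampling."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def generate(prompt, low_res=16, steps=20, factor=4, seed=0):
    """Run the iterative loop on a small grid, then upscale once at the end."""
    rng = random.Random(seed)
    condition = encode_text(prompt)
    image = [[rng.gauss(0, 1) for _ in range(low_res)] for _ in range(low_res)]
    for step in range(steps):  # cost grows with steps * low_res**2
        image = denoise_step(image, condition, step, steps)
    return upscale(image, factor)  # a single pass, outside the loop


result = generate("red silk dress")
print(len(result), len(result[0]))  # 64 64
```

With these toy settings the loop touches a 16x16 grid on each of the 20 steps, while the 64x64 output is produced by one upscaling pass.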

Our experiments show that the knowledge-enhanced model understands the knowledge of a specific domain better and generates more realistic and diverse images. For inference, the end-to-end AI compiler BladeDISC and FlashAttention improve the speed of the model. We integrated this technology with PAI to demonstrate its practical value in applications. Users can train, fine-tune, and run inference on their own tasks and data with one click.

The source code of these three algorithms will soon be contributed to the natural language processing algorithm framework EasyNLP to serve the open-source community. NLP practitioners and researchers are welcome to use it. EasyNLP is an easy-to-use and comprehensive Chinese NLP algorithm framework developed by the Alibaba Cloud PAI team based on PyTorch. It supports commonly used Chinese pre-trained models and techniques for putting large models into production, and it provides an all-in-one NLP development experience from training to deployment. Given the growing demand for cross-modal understanding, EasyNLP will also bring various cross-modal models, especially those for Chinese, to the open-source community. We hope to serve more NLP and multi-modal algorithm developers and researchers and to work with the community to advance NLP and multi-modal technology and its practical adoption.

GitHub: https://github.com/alibaba/EasyNLP

FashionKLIP: Enhancing E-Commerce Image-Text Retrieval with Fashion Multi-Modal Conceptual Knowledge Graph | Authors: Xiaodan Wang, Chengyu Wang, Lei Li, Zhixu Li, Ben Chen, Linbo Jin, Jun Huang, Yanghua Xiao, Ming Gao

ConaCLIP: Exploring Distillation of Fully-Connected Knowledge Interaction Graph for Lightweight Text-Image Retrieval | Authors: Jiapeng Wang, Chengyu Wang, Xiaodan Wang, Jun Huang, Lianwen Jin

Rapid Diffusion: Building Domain-Specific Text-to-Image Synthesizers with Fast Inference Speed | Authors: Bingyan Liu, Weifeng Lin, Zhongjie Duan, Chengyu Wang, Ziheng Wu, Zipeng Zhang, Kui Jia, Lianwen Jin, Cen Chen, Jun Huang
