Machine vision, or computer vision, is a popular research topic in artificial intelligence (AI) that has been around for many years. However, it remains one of the biggest challenges in AI. In this article, we explore the use of deep neural networks to address some of the fundamental challenges of computer vision. In particular, we look at applications such as network compression, fine-grained image classification, captioning, texture synthesis, image search, and object tracking.

Texture synthesis generates a larger image containing the same texture as a given sample. Style transfer takes an ordinary image and an image in a specific style, and produces an image that retains the content of the first while rendering it in the style of the second.

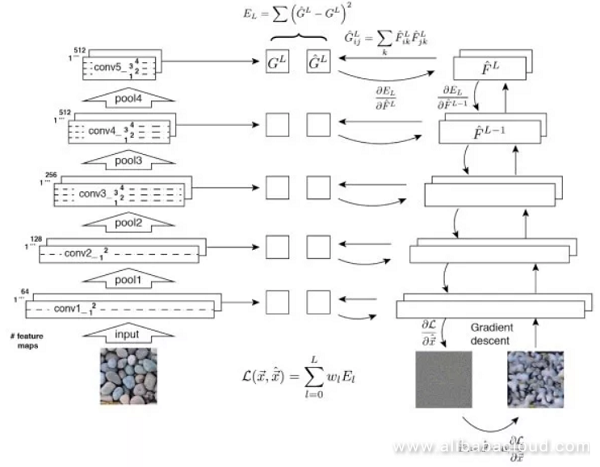

Feature inversion is the core concept behind texture synthesis and style transfer. Given a feature from an intermediate layer, we iteratively optimize an image until its feature at that layer is similar to the given one. Feature inversion therefore tells us how much image information an intermediate-layer feature contains.

Given DxHxW deep convolutional features, we reshape them into a Dx(HW) matrix X and define the Gram matrix of that layer's features as

G=XX^T

Because it is built from outer products, the Gram matrix captures the correlations between different feature channels.
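As a minimal sketch of the definition above, the following NumPy snippet reshapes a DxHxW feature map into X and computes G. The function name `gram_matrix` and the random input are illustrative assumptions, not part of any particular framework.

```python
import numpy as np

def gram_matrix(features):
    """Return the D x D Gram matrix of a (D, H, W) feature map."""
    d, h, w = features.shape
    x = features.reshape(d, h * w)  # the D x (HW) matrix X
    return x @ x.T                  # G = X X^T

# Hypothetical feature map with D=4 channels on an 8x8 grid.
rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 8, 8))
g = gram_matrix(feats)
```

Entry (i, j) of G is the inner product of channels i and j over all spatial positions, so G records which channels fire together while discarding where they fire.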

Texture synthesis performs feature inversion on the Gram matrices of a given texture image: it optimizes the generated image so that the Gram matrix of its features at each layer is similar to the Gram matrix of the texture image at the same layer. Low-layer features tend to capture fine detail, while high-layer features capture structure across a larger area.

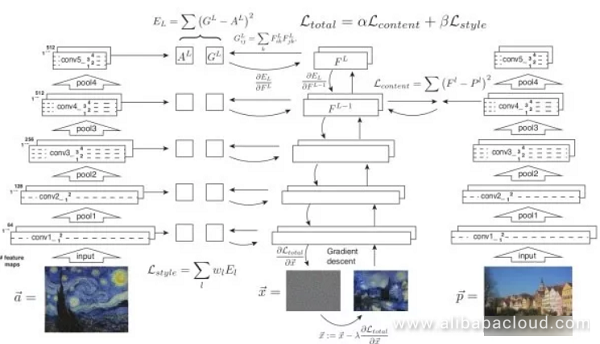

There are two primary objectives to this optimization. The first is to bring the content of the generated image closer to that of the original image, while the second is to make the style of the generated image match the specified style. The style is represented by the Gram matrix, while the content is represented directly by the activation values of the neurons.
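The two objectives can be sketched as a pair of loss terms. This is only an illustration under stated assumptions: the mean-squared-error form, the averaging of the Gram matrix over positions, the `style_weight` value, and all function names are choices made here, not taken from the article.

```python
import numpy as np

def gram(features):
    """Gram matrix of a (D, H, W) feature map, averaged over positions (assumed normalization)."""
    d = features.shape[0]
    x = features.reshape(d, -1)
    return x @ x.T / x.shape[1]

def content_loss(gen_feats, content_feats):
    # Content: compare activation values directly.
    return float(np.mean((gen_feats - content_feats) ** 2))

def style_loss(gen_feats, style_feats):
    # Style: compare Gram matrices, which ignore spatial layout.
    return float(np.mean((gram(gen_feats) - gram(style_feats)) ** 2))

def total_loss(gen_layers, content_layers, style_layers, style_weight=10.0):
    """Sum both terms over lists of per-layer feature maps."""
    content = sum(content_loss(g, c) for g, c in zip(gen_layers, content_layers))
    style = sum(style_loss(g, s) for g, s in zip(gen_layers, style_layers))
    return content + style_weight * style
```

Shuffling the spatial positions of a feature map leaves its style loss at zero but changes its content loss, which is one way to see why the Gram matrix is a reasonable carrier of style.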

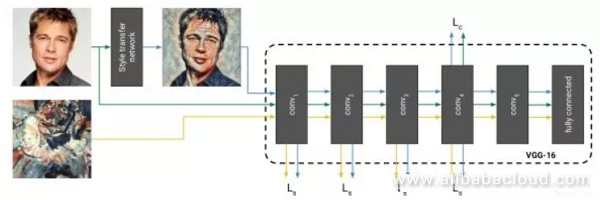

A failing of the method outlined above is that it only converges after many iterations. The solution offered by related work is to train a neural network to generate the style-transferred image directly. Once training ends, style transfer requires only a single pass through a feed-forward network, which is far faster. During training, we feed the generated image, the original image, and the style image into a fixed network, extract features from different layers, and compute the loss function.

Batch normalization computes its statistics over a whole batch, whereas instance normalization lets each image determine the mean and variance used for its own normalization. Experiments demonstrate that with instance normalization, a style transfer network can discard image-specific contrast information, which simplifies the generation process.
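The difference between the two normalizations comes down to which axes the mean and variance are computed over. The sketch below assumes an (N, C, H, W) layout and omits the learnable scale and shift; the function names are illustrative.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize (N, C, H, W) data with statistics shared across the batch."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    """Normalize each image and channel with its own statistics."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)
```

Because each image is normalized on its own, rescaling the contrast of one image leaves the instance-normalized output essentially unchanged, whereas under batch normalization it shifts the statistics for every image in the batch.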

One problem with the method described above is that we have to train a separate model for each style. Since different styles sometimes share similarities, this can be improved by sharing parameters between the style transfer networks for different styles. Specifically, the instance normalization layers of the style transfer network are modified so that they have N groups of scale and shift parameters, each group corresponding to a specific style. This way we can obtain N style-transferred images from a single feed-forward pass.
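A minimal sketch of such a layer follows, assuming a single (C, H, W) feature map and a hypothetical class name `ConditionalInstanceNorm`. Only the per-style parameter tables differ between styles; everything else in the network would be shared.

```python
import numpy as np

class ConditionalInstanceNorm:
    """Instance normalization with one (scale, shift) pair per style."""

    def __init__(self, num_styles, num_channels, eps=1e-5):
        self.gamma = np.ones((num_styles, num_channels))   # per-style scale
        self.beta = np.zeros((num_styles, num_channels))   # per-style shift
        self.eps = eps

    def __call__(self, x, style_id):
        # x has shape (C, H, W); statistics come from this image alone.
        mean = x.mean(axis=(1, 2), keepdims=True)
        var = x.var(axis=(1, 2), keepdims=True)
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        gamma = self.gamma[style_id][:, None, None]
        beta = self.beta[style_id][:, None, None]
        return gamma * x_hat + beta
```

Selecting a style is then just an index into the parameter tables, for example `layer(x, style_id=1)`.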

We can consider face verification/recognition an even finer-grained image recognition task. Face verification takes two images and determines whether or not they belong to the same person, while face recognition determines who the person in a given image is. A face verification/recognition system typically consists of three major steps: detecting the face in the image, locating the facial feature points, and then verifying/recognizing the face. A major difficulty of face verification/recognition is that learning has to be carried out on very few samples. Under typical conditions, the dataset contains only one image per person, a situation called one-shot learning.

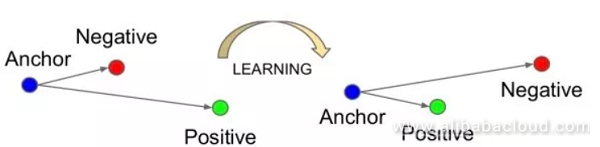

Face verification/recognition can be treated either as a classification problem (with a massive number of classes) or as a metric learning problem. If two images are of the same person, we hope that their deep features are similar; otherwise, their features should be dissimilar. Verification is then performed according to the distance between the deep features (images whose feature distance exceeds a set threshold are judged to belong to different people), and recognition is performed by k-nearest-neighbor classification.
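The two decision rules can be sketched as follows, assuming deep features have already been extracted by some network. The L2 normalization, the threshold value of 1.0, and the function names `verify` and `recognize` are assumptions made for illustration.

```python
import numpy as np
from collections import Counter

def l2_normalize(v):
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def verify(feat_a, feat_b, threshold=1.0):
    """Same person if the feature distance is below the threshold."""
    dist = np.linalg.norm(l2_normalize(feat_a) - l2_normalize(feat_b))
    return bool(dist < threshold)

def recognize(query, gallery_feats, gallery_labels, k=1):
    """k-nearest-neighbor vote over a gallery of labeled features."""
    dists = np.linalg.norm(l2_normalize(gallery_feats) - l2_normalize(query), axis=1)
    nearest = np.argsort(dists)[:k]
    votes = Counter(gallery_labels[i] for i in nearest)
    return votes.most_common(1)[0][0]
```

In practice the threshold would be tuned on held-out pairs; the value used here is arbitrary.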

DeepFace was the first system to successfully apply a deep neural network to face verification/recognition. DeepFace uses locally connected layers whose parameters are not shared across positions. This is because different parts of the human face have different characteristics (for example, eyes and lips look different), so the parameter sharing of the classic convolution layer is poorly suited to face verification. Face recognition networks therefore use locally connected layers with unshared parameters. DeepFace uses a Siamese network for face verification: when the distance between the deep features of two images is smaller than a given threshold, they are considered to be of the same person.

FaceNet takes triplets as input, and requires that the distance to the negative sample be larger than the distance to the positive sample by a given margin (e.g., 0.2). Furthermore, the triplets should not be chosen at random: most random negatives are already far away, so they provide too little signal for the network to learn from. Selecting only the most challenging triplets (for example, the farthest positive sample and the closest negative sample) tends to trap the network in a poor local optimum. FaceNet therefore uses a semi-hard selection method, where it chooses negative samples that are farther away than the positive sample.
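The margin requirement and the selection of negatives can be sketched as below. Squared Euclidean distance, the helper names, and the rule "farther than the positive but still within the margin" for picking a negative are assumptions of this sketch rather than a statement of the exact procedure used by any system.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss: the negative should be farther than the positive by the margin."""
    d_pos = np.sum((anchor - positive) ** 2)
    d_neg = np.sum((anchor - negative) ** 2)
    return max(d_pos - d_neg + margin, 0.0)

def pick_negative(anchor, positive, negatives, margin=0.2):
    """Index of the closest negative that is farther than the positive
    but still inside the margin, or None if no such negative exists."""
    d_pos = np.sum((anchor - positive) ** 2)
    d_negs = np.sum((negatives - anchor) ** 2, axis=1)
    mask = (d_negs > d_pos) & (d_negs < d_pos + margin)
    if not mask.any():
        return None
    candidates = np.flatnonzero(mask)
    return int(candidates[np.argmin(d_negs[candidates])])
```

Negatives that already satisfy the margin contribute zero loss, which is why purely random triplets teach the network very little.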

Large-margin learning has been a hot research topic in recent years. Since the differences within a category can be quite large, and the similarity between categories can likewise be quite high, a great deal of research has aimed at improving the ability of the classic cross-entropy loss to produce discriminative deep features. For example, the optimization goal of L-Softmax is to increase the angle between the deep features and the parameter vectors of other categories.

A-Softmax goes a step further and constrains the length of the parameter vectors to 1, focusing training on optimizing the deep features and the angle. In practice, L-Softmax and A-Softmax are both difficult to converge, so training uses an annealing scheme that gradually moves from the standard softmax to L-Softmax or A-Softmax.

Liveness detection determines whether the image of a face came from a real person or from a photograph, which is a key obstacle for face verification/recognition tasks. Methods currently popular in the industry include reading changes in a person's facial expression, texture information, blinking, or requiring the user to complete a series of movements.

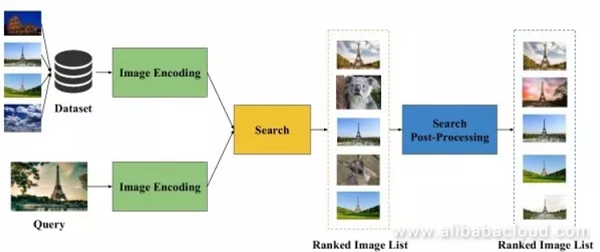

Given an image that contains a specific instance (for example a specific target, scene, or building), image search finds images in the database that contain the same instance. However, because the viewing angle, lighting, and occlusion in two images are usually not the same, creating a search algorithm capable of dealing with these differences within a category of images poses a major challenge to researchers.

First, we extract a suitable representation vector from each image. Second, we apply Euclidean or cosine distance to these vectors to perform a nearest neighbor search and find the most similar images. Finally, we use specific post-processing techniques to make small adjustments to the search results. The limiting factor in the performance of an image search engine is therefore the representation of the image.
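The nearest neighbor step can be sketched as a brute-force cosine similarity search. The sketch assumes the representation vectors have already been extracted; `cosine_search` and the tiny database are illustrative, and a real system would likely use an approximate index instead of a full scan.

```python
import numpy as np

def cosine_search(query, database, top_k=3):
    """Return indices and similarities of the top_k most similar database vectors."""
    q = query / np.linalg.norm(query)
    db = database / np.linalg.norm(database, axis=1, keepdims=True)
    sims = db @ q                      # cosine similarity to every database vector
    order = np.argsort(-sims)[:top_k]  # most similar first
    return order, sims[order]

# Hypothetical 2-D representations of four database images.
database = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]])
indices, scores = cosine_search(np.array([2.0, 0.1]), database)
```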

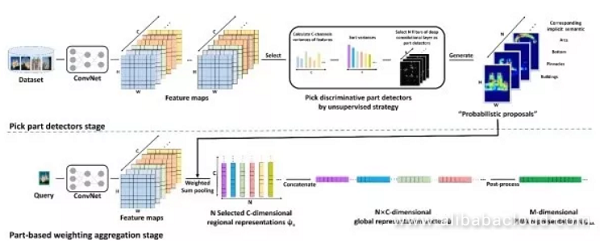

Unsupervised image search uses a model pre-trained on ImageNet, without any additional supervision, as a fixed feature extractor to extract representations of the image.

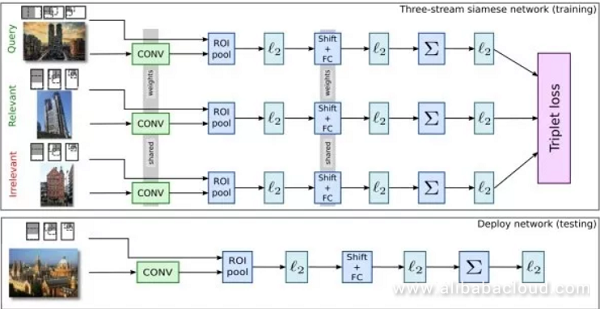

Supervised image search first takes the model pre-trained on ImageNet and fine-tunes it on another training dataset. It then extracts image representations from this fine-tuned model. To obtain a better result, the training dataset used to fine-tune the model is typically similar to the search dataset. Furthermore, we can use a region proposal network to extract foreground regions of the image that might contain the target.

Siamese network: Similar to the idea behind face recognition, this system uses pair or triplet inputs (++-) to train the model to minimize the distance between similar samples and maximize the distance between dissimilar samples.

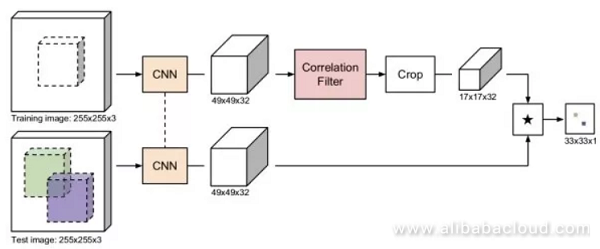

The objective of object tracking is to track the movements of a target in a video. Normally, the target is located in the first frame of the video and marked by a box, and we need to predict where the box will be in the next frame. Object tracking is similar to object detection. The difficulty of object tracking, however, is that we don't know ahead of time what target we will be tracking. Therefore, we have no way of collecting enough training data before the task to train a specialized detector.

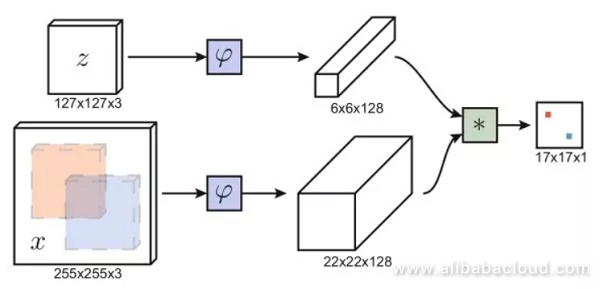

Similar to the concept behind face verification, a Siamese network takes the image inside the target box as input to one branch and a candidate image region as input to the other branch, then outputs the degree of similarity between the two. We do not need to traverse all of the candidate regions separately; because the network is convolutional, we only have to feed each image forward once. Through convolution, we obtain a two-dimensional response map, where the position of the strongest response determines the predicted location of the box. Methods based on a Siamese network are quite fast and able to process images of any size.
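The response map can be illustrated by sliding the target's features over the features of the larger search region. This sketch assumes both feature maps have already been produced by the shared network; the explicit loops and the name `response_map` are for clarity only, since a real tracker would compute this as a single convolution.

```python
import numpy as np

def response_map(search_feats, template_feats):
    """Cross-correlate a (C, Ht, Wt) template with a (C, Hs, Ws) search region."""
    _, hs, ws = search_feats.shape
    _, ht, wt = template_feats.shape
    out = np.zeros((hs - ht + 1, ws - wt + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            window = search_feats[:, i:i + ht, j:j + wt]
            out[i, j] = np.sum(window * template_feats)  # similarity at this offset
    return out

def predicted_offset(response):
    """Top-left offset of the strongest response."""
    return tuple(int(v) for v in np.unravel_index(np.argmax(response), response.shape))
```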

Correlation filters train a linear template to distinguish the target image region from the regions around it, and use the Fourier transform to do so efficiently. Correlation filters are therefore very fast. CFNet combines a Siamese network trained offline with a correlation filter template learned online, which improves the tracking performance of lightweight networks.
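To show why the Fourier transform makes such a template cheap to train and apply, here is a single-sample, MOSSE-style filter in NumPy. It is a simplified stand-in chosen for illustration, not CFNet's actual formulation; the Gaussian target, the regularizer `lam`, and all function names are assumptions.

```python
import numpy as np

def gaussian_target(shape, sigma=2.0):
    """Desired response: a Gaussian peak at the patch center."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))

def train_filter(patch, target, lam=1e-3):
    """Closed-form regularized filter, solved per frequency."""
    f = np.fft.fft2(patch)
    g = np.fft.fft2(target)
    return g * np.conj(f) / (f * np.conj(f) + lam)

def respond(filter_hat, patch):
    """Apply the filter with an element-wise product in the Fourier domain."""
    return np.real(np.fft.ifft2(np.fft.fft2(patch) * filter_hat))

def peak(response):
    return tuple(int(v) for v in np.unravel_index(np.argmax(response), response.shape))
```

If the patch in the next frame is a (circularly) shifted copy of the training patch, the response peak moves by the same shift, which is what gives the new box location.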

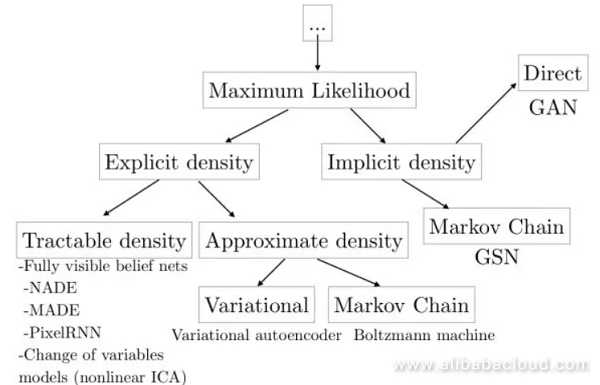

Generative models learn the distribution of the data (images) or sample new images from that distribution. Generative models can be used in super-resolution reconstruction, image colorization, image-to-image translation, generating images from text, learning latent representations of an image, semi-supervised learning, and more. Furthermore, generative models can be combined with reinforcement learning for use in simulation and inverse reinforcement learning.

Explicit density models use the chain rule of conditional probability to factorize an image's distribution and learn it by maximum likelihood estimation. The disadvantage of this method is that, since each pixel depends on the previous pixels, generating an image is slow: generation has to start in one corner and proceed in a fixed order. For example, WaveNet can produce speech similar to that created by humans; however, since samples cannot be produced in parallel, one second of speech takes 2 minutes to compute, and real-time generation is impossible.

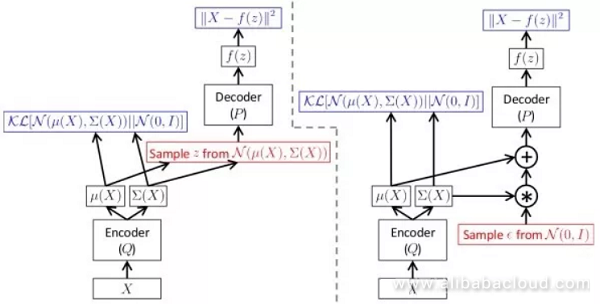

To avoid the downsides of explicit modeling, a variational auto-encoder models the data distribution implicitly. It assumes that image generation is controlled by latent variables, and that these latent variables follow a diagonal Gaussian distribution.

A variational auto-encoder uses a decoder network to generate an image from the latent variables. Since maximum likelihood estimation cannot be applied directly, training proceeds in a way similar to the EM algorithm: the variational auto-encoder constructs a lower bound on the likelihood function and optimizes this lower bound instead. The benefit of a variational auto-encoder is that, because the latent dimensions are independent, we can adjust individual latent variables to control the factors that influence changes in the output image.
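The lower bound can be sketched numerically as a reconstruction term plus a KL term. This sketch assumes a standard normal prior, a diagonal Gaussian posterior parameterized by `mu` and `log_var`, and a squared-error reconstruction term; these are common choices but are assumptions here, as are the function names.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps so that sampling stays differentiable in mu and sigma."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over dimensions."""
    return 0.5 * float(np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var))

def negative_lower_bound(x, x_recon, mu, log_var):
    """Quantity minimized in training: reconstruction error plus KL term."""
    recon = 0.5 * float(np.sum((x - x_recon) ** 2))
    return recon + kl_to_standard_normal(mu, log_var)
```

Because the posterior is diagonal, the KL term decomposes into one contribution per latent dimension, which is what allows individual dimensions to be inspected and varied.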

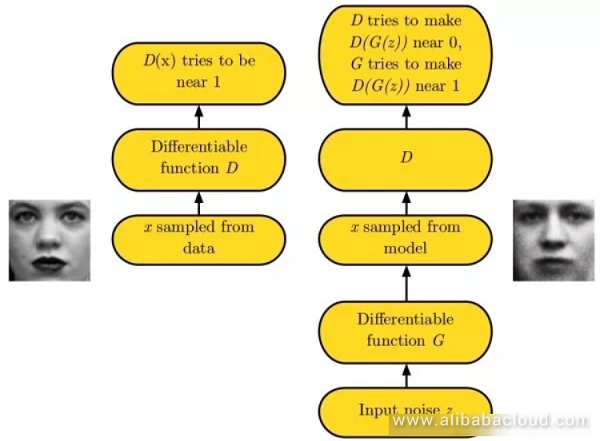

Because of the extreme difficulty in learning data distribution, generative adversarial networks avoid this step entirely and generate the image right away. Generative adversarial networks use a generative network G to create an image from random noise, and a discriminative network D to determine whether the input image is real or forged.

During training, the goal of the discriminative network D is to determine whether an image is real or forged, and the goal of the generative network G is to make D tend toward deciding that its output image is real. In practice, training a generative adversarial network suffers from mode collapse, where the network is unable to learn the complete data distribution. This motivated improvements such as LS-GAN and W-GAN. Compared with a variational auto-encoder, a generative adversarial network produces images with better detail.
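The opposing goals of D and G can be written as two loss functions over the discriminator's output probabilities. The sketch assumes the standard cross-entropy form with the commonly used non-saturating generator loss; it does not cover the LS-GAN or W-GAN variants, and the function names are illustrative.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """D wants d_real -> 1 (real images) and d_fake -> 0 (generated images)."""
    return float(-np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps)))

def generator_loss(d_fake, eps=1e-12):
    """G wants D to label its outputs as real, i.e. d_fake -> 1."""
    return float(-np.mean(np.log(d_fake + eps)))
```

Here `d_real` and `d_fake` are arrays of D's predicted probabilities of "real" on real and generated batches, respectively.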

The hindupuravinash/the-gan-zoo repository compiles papers on generative adversarial networks, and the soumith/ganhacks repository collects methods for training them.

Most of the tasks described above can also be applied to video. Here we use video classification as an example to illustrate some basic methodology for processing video data.

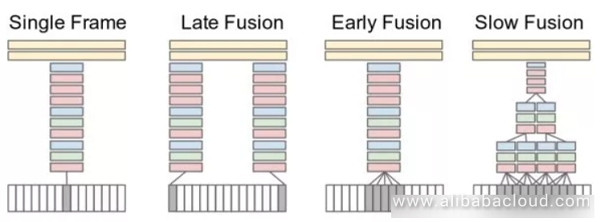

This type of method treats the video as a series of frame images. The network receives a group of frames (15 frames, for example) from the video, extracts deep features from each of them, and finally fuses these features to obtain a representation of that section of the video for classification. Experiments show that results are best when using "slow fusion." Furthermore, classifying single frames independently also produces very competitive results, meaning that a single frame already contains a significant amount of relevant information.

This type of method extends standard two-dimensional convolution to three-dimensional convolution in order to connect local neighborhoods along the temporal dimension. For example, VGG's 3x3 convolution can be extended to a 3x3x3 convolution, and its 2x2 pooling to 2x2x2 pooling.
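The extension to the temporal dimension can be sketched with a naive single-channel implementation. The loops are written out for clarity rather than speed, the operation is the cross-correlation that deep learning libraries call convolution, and the function names are illustrative.

```python
import numpy as np

def conv3d_valid(video, kernel):
    """Slide a (kt, kh, kw) kernel over a (T, H, W) video without padding."""
    t, h, w = video.shape
    kt, kh, kw = kernel.shape
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                out[i, j, k] = np.sum(video[i:i + kt, j:j + kh, k:k + kw] * kernel)
    return out

def max_pool_2x2x2(video):
    """Non-overlapping 2x2x2 max pooling; trailing odd slices are dropped."""
    t, h, w = (s // 2 for s in video.shape)
    trimmed = video[:2 * t, :2 * h, :2 * w]
    return trimmed.reshape(t, 2, h, 2, w, 2).max(axis=(1, 3, 5))
```

Each output value now depends on a small window of neighboring frames as well as neighboring pixels.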

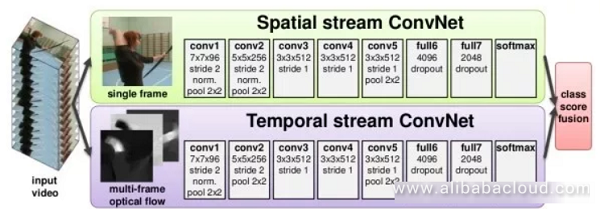

This type of method uses two independent networks to capture the image information and the temporal information in the video, respectively. The image information can be obtained from a single still frame, which is a classic image classification problem. Motion information is obtained via optical flow, which tracks the movement of the target across adjacent frames.
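One simple way to combine the two networks is to average their class probabilities. The text above does not say how the outputs are merged, so this late-fusion rule, the `temporal_weight` parameter, and the function names are assumptions made for illustration.

```python
import numpy as np

def softmax(logits):
    z = logits - np.max(logits)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def fuse_two_streams(spatial_logits, temporal_logits, temporal_weight=0.5):
    """Weighted average of the class probabilities from the two networks."""
    spatial = softmax(spatial_logits)
    temporal = softmax(temporal_logits)
    return (1.0 - temporal_weight) * spatial + temporal_weight * temporal
```

A confident prediction from the motion network can then override a weaker, conflicting prediction from the still-frame network, and vice versa.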

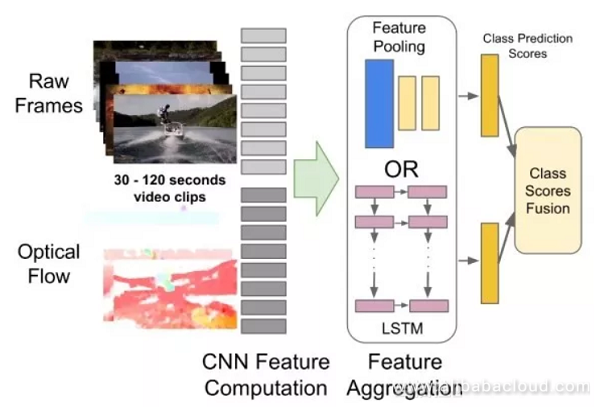

The previous methods can only capture dependencies among a few frames. This method uses a CNN to extract image features from each frame, then uses an RNN to capture the dependencies between frames.
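The recurrent part can be sketched with a plain tanh RNN that consumes one frame feature at a time. The sketch assumes per-frame features have already been extracted by a CNN; the vanilla RNN cell, the weight names, and `rnn_over_frames` are illustrative choices, and practical systems often use LSTM or GRU cells instead.

```python
import numpy as np

def rnn_over_frames(frame_feats, w_in, w_rec, bias):
    """Run a tanh RNN over (T, F) frame features and return the final hidden state."""
    hidden = np.zeros(w_rec.shape[0])
    for feat in frame_feats:  # frames are processed in temporal order
        hidden = np.tanh(w_in @ feat + w_rec @ hidden + bias)
    return hidden

# Hypothetical sizes: 6 frames, 8-dimensional CNN features, 4 hidden units.
rng = np.random.default_rng(0)
feats = rng.standard_normal((6, 8))
w_in = rng.standard_normal((4, 8)) * 0.5
w_rec = rng.standard_normal((4, 4)) * 0.5
bias = np.zeros(4)
video_repr = rnn_over_frames(feats, w_in, w_rec, bias)
```

The final hidden state depends on the order of the frames, which is exactly the temporal information that per-frame classification discards.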

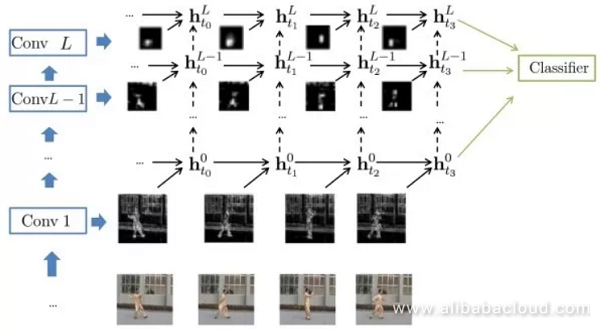

Moreover, researchers have attempted to combine CNN and RNN so that each convolution layer is capable of capturing distant dependencies.
