A data warehouse is a collection of data that facilitates the decision-making process. It is non-volatile, time-variant, and integrated. Database design, on the other hand, refers to the creation of a detailed data model of a database. The model includes logical and physical design choices along with physical storage parameters.

More and more technical experts are emphasizing on creating a database design that is coherent with the data warehouse (data warehouse-friendly designs).

Two types of database systems exist: OLTP and OLAP.

These two database systems work closely together to create a complete data warehouse ecosystem for enterprises. In simple terms, OLTP systems feed data to data warehouses, and OLAP systems analyze the data.

There are two methods to synchronize data from OLTP to OLAP. Below is a brief description:

Compared with incremental synchronization, full synchronization needs to synchronize all the data each time. It takes a longer time and imposes a higher load on the system. Whereas, for incremental synchronization, the challenge is identifying the incremental data.

The following fields are contained when creating a table:

Data warehouse includes the ODS layer and the DW layer. ODS stores OLTP's raw data. The synchronization process mainly occurs on the ODS layer. If the modified_time is the record of the day, it indicates that the relevant data is the incremental data of the very day. Therefore, when synchronizing, you can make a judgment based on the modified_time.

The synchronization task would have been executed at 20170305 00:00. However, due to the schedule delay, it was not executed until 20170305 00:30.

At this time, entry A will be missed, because the modified_time of entry A has been changed to 20170305 at the time of synchronization.

When execution of deduplication is implemented for the data of the ODS layer on the DW layer, you can get the same data as the production data. Verify whether it is true with the following method:

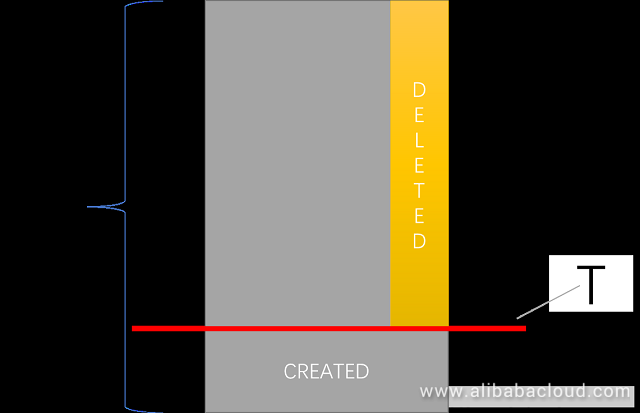

Data volume on the DW layer = All the data for production (total) - Data volume created today (created) - Data volume deleted before today (deleted)

Using table_a table as an example:

total = select count(*) from table_a

created = select count(*) from table_a where created_time = T+1

deleted = select count(*) from table_a where created_time <= T and modified_time < = T and deleted_flag = true

result = total - created - deletedDuring this process, understanding the calculation method of the deleted data volume (deleted) is difficult. You can understand it like this:

If the modified_time is today, it can be confirmed that this data existed before today, because when the deleted_flag is set as true, the modified_time remains unchanged.

In this blog, we discussed about the types of database systems that exist in data warehousing and how we can achieve data synchronization by adopting different methods. Various processes could be followed to facilitate the creation of a database design that is supportive and coherent to the database system. The consolidation of a database system and a database design yield advantages such as enhanced data availability, reduced response time, along with efficient volume replication.

Hosting Multiple Websites on a Single Alibaba Cloud ECS Server the DevOps Way

Cloud Computing and Cyber Security Paves the Path to Fourth Industrial Revolution

2,593 posts | 793 followers

Followamap_tech - April 20, 2020

Alibaba Cloud Storage - February 27, 2020

Alibaba Clouder - March 10, 2017

Cherish Wang - February 20, 2019

Alibaba Clouder - November 6, 2018

Alibaba Cloud Storage - May 8, 2019

2,593 posts | 793 followers

Follow MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn MoreLearn More

Tablestore

Tablestore

A fully managed NoSQL cloud database service that enables storage of massive amount of structured and semi-structured data

Learn MoreMore Posts by Alibaba Clouder