Recently, I have participated in many refactoring projects. Some aimed to rebuild gateways, AMAPS, and other services to improve server resource utilization. Others aimed to split shared business logic into independent services to make the architecture more rational and improve R&D efficiency. In this article, I share some lessons from this experience, focusing on common misunderstandings and frequently overlooked aspects of refactoring.

In Chinese philosophy, Dao (道) and Shu (术) are two important concepts. Roughly translated, Dao means "the way" or "direction," and Shu means "technique" or "method." I believe these concepts are central to programming as well. Once we have a general direction, we need techniques to put it into practice. But if we do not know what we are trying to achieve, no technique or method will help.

In this article, I first discuss the basic direction and principles of refactoring, and then the application of common refactoring solutions.

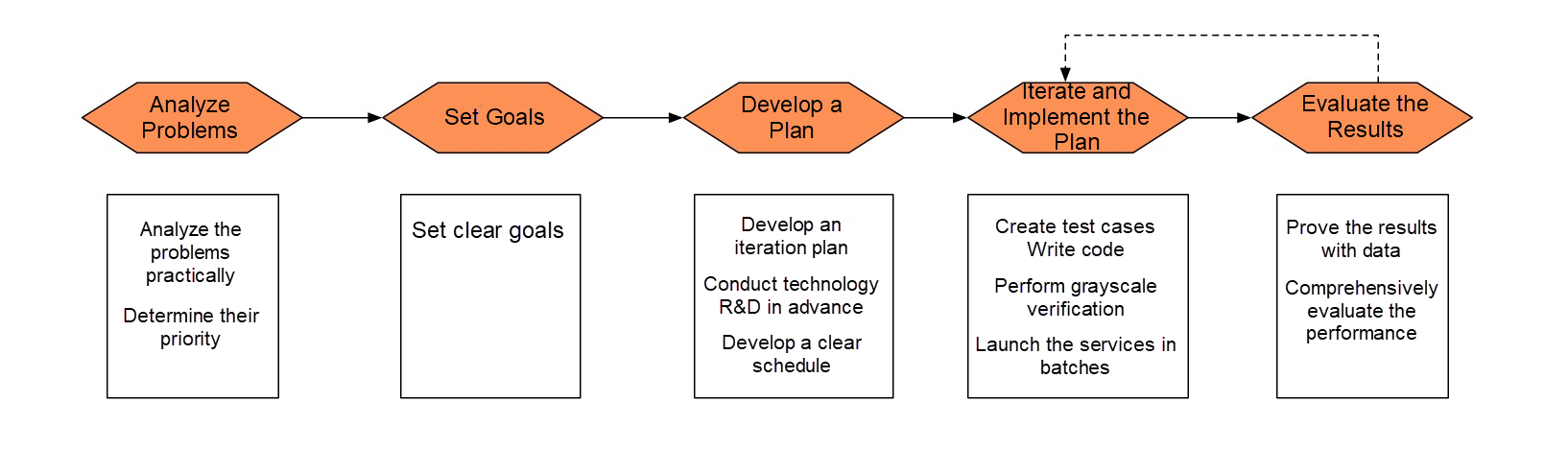

When is the correct time to refactor the system? What preparations must be made before refactoring? What can we do to ensure success in refactoring and achieve our goals? These are the questions that will guide our investigation of the basic ideas and principles of refactoring.

System refactoring should solve actual problems; it is not an end in itself. Some people refactor their systems just to apply new technologies or keep up with trends, and others even refactor to fulfill unnecessary requirements. What these engineers need to realize is that system stability should always be the top priority. Refactoring for its own sake threatens that stability: if it does not improve services, it exposes your system to unnecessary risk.

Therefore, before performing any refactoring, we should first clarify the problems we want to solve. Do we want to improve performance and security, or to achieve fast continuous integration and release?

The final result of a project largely depends on whether clear goals are set in advance. Refactoring is no exception. The SMART (Specific, Measurable, Attainable, Relevant, and Time-bound) principles are often mentioned in organization management and goal management courses. For refactoring projects, we must also set specific, measurable, attainable, relevant, and time-bound goals.

The specific, attainable, relevant, and time-bound principles are easy enough to understand, so I want to describe the measurable principle in detail. Can the problems mentioned above, such as improving performance, serve directly as the goals of a refactoring project? No, because they are not measurable. For example, in a refactoring project aimed at improving performance, measurable indicators include the reduction in service response time, the increase in queries per second (QPS) per CPU core, and the reduction in the service resources required.
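As an illustration of the measurable principle, here is a minimal Python sketch that writes each performance goal as a metric with a baseline and a target, and checks post-refactoring measurements against it. The `Goal` type, the metric names, and all the numbers are hypothetical, chosen only for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    """A hypothetical measurable goal: one metric, a baseline, and a target."""
    metric: str
    baseline: float
    target: float
    higher_is_better: bool

    def met(self, measured: float) -> bool:
        # The goal counts as achieved only if the measurement reaches the target.
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target

    def improvement(self, measured: float) -> float:
        # Relative change versus the baseline, positive when the metric improved.
        change = (measured - self.baseline) / self.baseline
        return change if self.higher_is_better else -change


# Illustrative goals for a performance-oriented refactoring (made-up numbers).
GOALS = [
    Goal("p99_latency_ms", baseline=200.0, target=120.0, higher_is_better=False),
    Goal("qps_per_core", baseline=500.0, target=800.0, higher_is_better=True),
    Goal("instances", baseline=40.0, target=25.0, higher_is_better=False),
]


def report(measured: dict[str, float]) -> dict[str, bool]:
    """Map each goal's metric name to whether the measured value meets it."""
    return {g.metric: g.met(measured[g.metric]) for g in GOALS}


if __name__ == "__main__":
    after = {"p99_latency_ms": 110.0, "qps_per_core": 760.0, "instances": 24.0}
    for g in GOALS:
        value = after[g.metric]
        print(f"{g.metric}: met={g.met(value)}, improvement={g.improvement(value):+.0%}")
```

With these sample numbers, the QPS goal is reported as not met even though QPS per core improved by 52%, which is the kind of unambiguous answer a vague goal such as "improve performance" cannot give.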

So how can we turn non-quantitative goals into measurable indicators? Take a refactoring project aimed at improving service availability as an example. This goal is hard to quantify directly, so we can use specific events as indicators instead, such as on-premises fault recovery that is imperceptible to users, or automatic downgrade and recovery when underlying components fail. We often express system availability as a number of nines (for example, 99.99%), but this indicator can only be calculated after the system has run for a period of time, so it is not suitable as a goal for a short- or medium-term project.
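The following Python sketch shows why the number of nines works poorly as a short-term target: it converts an availability level into the downtime it allows over a given window. The function names are illustrative, and a year is assumed to be 365 days.

```python
MINUTES_PER_YEAR = 365 * 24 * 60    # 525,600 minutes; leap years ignored
MINUTES_PER_30_DAYS = 30 * 24 * 60  # 43,200 minutes


def downtime_budget_minutes(availability: float, window_minutes: float) -> float:
    """Maximum downtime allowed within the window at the given availability."""
    if not 0.0 < availability <= 1.0:
        raise ValueError("availability must be in (0, 1]")
    return (1.0 - availability) * window_minutes


def observed_availability(downtime_minutes: float, window_minutes: float) -> float:
    """Availability actually achieved within the window."""
    return 1.0 - downtime_minutes / window_minutes


if __name__ == "__main__":
    for availability in (0.99, 0.999, 0.9999, 0.99999):
        year = downtime_budget_minutes(availability, MINUTES_PER_YEAR)
        month = downtime_budget_minutes(availability, MINUTES_PER_30_DAYS)
        print(f"{availability:.3%}: {year:.2f} min/year, {month:.2f} min per 30 days")
```

At 99.99%, the budget is about 52.56 minutes a year, or about 4.32 minutes per 30 days. A single incident can consume it, and whether the target was met is only known once the whole window has passed.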

Alibaba engineers often mention starting points. Specific and measurable goals are the starting points of refactoring projects.

Both insufficient design and excessive design are design mistakes. Insufficient design stems from a lack of necessary abstract and forward-looking thinking, and it leaves defects in the system design. Excessive design is often caused by an insufficient understanding of the system's problems, an excessive pursuit of scalability, and deviation from actual requirements. Besides adding useless scalability features, excessive design introduces new problems, such as higher costs of system maintenance and iteration and more difficult troubleshooting of online problems.

In addition to avoiding insufficient or excessive design, we must also consider cost-effectiveness. Some designs may solve certain problems, but the solution might be overly complex and costly. Therefore, we need to decide whether or not to apply a solution based on its return on investment.

There are no shortcuts to overcoming insufficient design; the only remedy is continuous learning and accumulated experience. To combat excessive design, we need to weigh the necessity and cost-effectiveness of each design decision when preparing a design solution.

An early iteration plan is important but easily overlooked in refactoring. When we start designing a refactoring plan, we need to consider how to implement it in phases, and sometimes we must make compromises to make phased implementation possible. If we view a refactoring project as a construction project, the phased plan is like the scaffolding on a construction site: it is an effective way to keep risks within an acceptable range.

Take the refactoring of an ordering service as an example. The order volume may be small, but many types of services are involved, including hotels, admission tickets, and train tickets. In the final design, the system is divided into four modules based on the order processing flow: the order module, the CP order synchronization module, the order processing module, and the statistics module. You may wonder whether it makes sense to split the system into multiple modules when the order volume is small. Besides design-related factors, another important consideration is the ability to launch the system and verify the results phase by phase, which is the only way to keep risks under control. Here, we assume the system is not vertically partitioned by service type.

Therefore, as far as possible, we need to make an iteration plan for system refactoring from the very beginning.

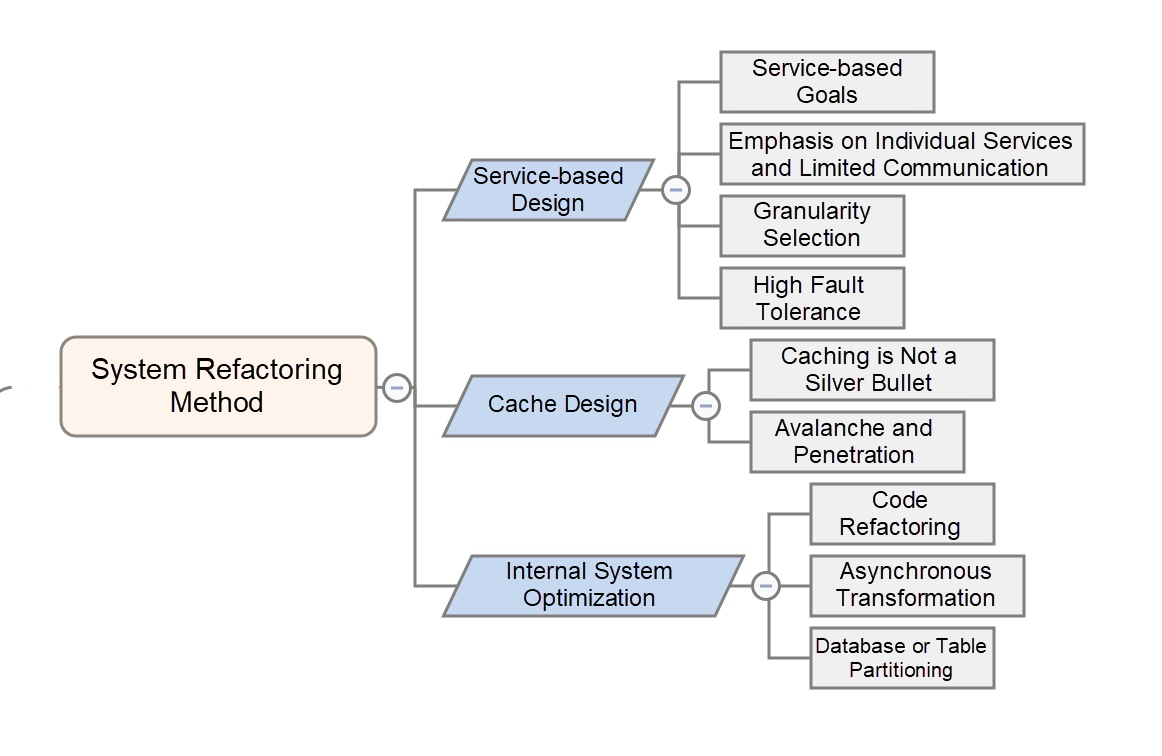

During system refactoring, we use certain methods to solve specific problems. A great deal of information about these refactoring solutions is already available, so in this section I will focus only on the considerations for applying common solutions, without describing the solutions themselves in detail.

Service-based design is mentioned in many refactoring projects. Abstraction, decoupling, division, and unification are important ideas for system design and refactoring. Service-based design is an important application of these ideas. What are the considerations for service-based design?

During service-based design or refactoring, we must first clarify the values or goals of service-based design.

The preceding are the values of service-based design. Interestingly, poor service-based design causes problems in exactly these areas. For example, people often complain that development and launch become more difficult after service-based design than before. Therefore, the first step in service-based design is to clarify its goals. If the goals are not achieved, or if the design produces negative results, we need to reconsider whether the design is reasonable.

Many engineers focus on choosing remote procedure call (RPC) frameworks or message middleware at the beginning of service-based design. However, service-based design is about the design of the individual services; the specific communication methods are not that important, at least at the beginning. Instead, we must focus on the core problems of each stage.

In service-based design, the most difficult and experience-dependent step is choosing the service granularity. To design subsystem boundaries correctly based on system characteristics, we can refer to design principles such as the single responsibility principle and the statelessness principle, but we need to rely more on experience. In system refactoring, we must start with the most important modules. When the granularity is hard to decide, we can start with a coarse granularity. Then, if problems occur, we can further split the coarse services into multiple modules and optimize them one by one. If we go straight into a fine-grained design, it is difficult to backtrack.

As mentioned above, service-based design emphasizes individual services. The fault tolerance of each service is an important consideration because it determines the stability of the service system as a whole. Therefore, service-based design is not just about splitting services. We also need to analyze the dependencies between services, distinguish strong dependencies from weak ones, and design fault tolerance accordingly.
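One way to encode the strong/weak distinction is sketched below in Python: a failed strong dependency fails the request, while a failed weak dependency degrades to a fallback value. The order-detail scenario and all function names are hypothetical; a production system would also add timeouts and circuit breaking, for example with Sentinel.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class StrongDependencyError(Exception):
    """Raised when a strong dependency fails and the request cannot be served."""


def call_strong(fn: Callable[[], T]) -> T:
    # Strong dependency: the request is meaningless without this result,
    # so the failure is surfaced to the caller instead of being hidden.
    try:
        return fn()
    except Exception as exc:
        raise StrongDependencyError(str(exc)) from exc


def call_weak(fn: Callable[[], T], fallback: T) -> T:
    # Weak dependency: degrade to a fallback value so the request still succeeds.
    try:
        return fn()
    except Exception:
        return fallback


# Hypothetical order-detail page: order data is a strong dependency,
# recommendations are a weak one.
def load_order() -> dict:
    return {"order_id": 42, "status": "PAID"}


def load_recommendations() -> list:
    raise TimeoutError("recommendation service timed out")


def order_detail() -> dict:
    order = call_strong(load_order)
    recommendations = call_weak(load_recommendations, fallback=[])
    return {**order, "recommendations": recommendations}


if __name__ == "__main__":
    print(order_detail())
```

Here the recommendation timeout is absorbed and the page still renders; if `load_order` raised, the caller would receive `StrongDependencyError` instead.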

Data caching is by far the most commonly used refactoring method. The following aspects of data caching deserve our attention.

A common practice for performance optimization is adding a cache. This method produces immediate results and has become the first choice of many engineers when solving system performance problems.

Whether you are designing a new system or refactoring an old one, do not make data caching your first choice for solving performance problems. Otherwise, you may miss problems that exist at other levels. When I worked at an enterprise software company, I designed basic services with the company's chief architect, and he did not allow me to consider any cache design during the initial system design. This left a deep impression on me. Adding a cache to improve performance is a tactical measure; strategically, we need to evaluate the system comprehensively from multiple perspectives to solve its problems systematically.

Data caching solves data read performance problems, but it also introduces new failure points into the system architecture.

Cache avalanche and cache penetration must be considered whenever data caching is introduced. First, at the service level, we need to define downgrade policies and specific downgrade plans. At the technical level, we need to consider details such as cache availability, persistence of cached data, whether a cache push mechanism is necessary, and randomized (discrete) expiration times.
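The two technical details that map most directly to code, randomized expiration times and protection against penetration, are sketched below in Python. A plain in-process dictionary stands in for a distributed cache such as Tair, and the class and parameter names are hypothetical; eviction, concurrency, and the service-level downgrade plan are deliberately left out.

```python
import random
import time
from typing import Callable, Optional

_MISSING = object()  # marker stored for keys that do not exist in the source


class CacheAside:
    """In-process cache-aside sketch with randomized TTLs and null-value caching."""

    def __init__(self, base_ttl: float, jitter: float, null_ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.base_ttl = base_ttl  # seconds every entry lives at minimum
        self.jitter = jitter      # up to this many extra seconds, chosen at random
        self.null_ttl = null_ttl  # short lifetime for "not found" results
        self.clock = clock
        self._store: dict = {}    # key -> (value, expires_at)

    def _ttl(self) -> float:
        # Spreading expirations over [base_ttl, base_ttl + jitter] keeps keys that
        # were loaded together from expiring together (cache avalanche).
        return self.base_ttl + random.uniform(0.0, self.jitter)

    def get(self, key: str, loader: Callable[[str], Optional[object]]) -> Optional[object]:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return None if entry[0] is _MISSING else entry[0]
        value = loader(key)  # cache miss: read from the source of truth
        if value is None:
            # Remember the miss briefly so repeated lookups of nonexistent keys
            # do not all fall through to the database (cache penetration).
            self._store[key] = (_MISSING, now + self.null_ttl)
        else:
            self._store[key] = (value, now + self._ttl())
        return value
```

The null TTL is kept much shorter than the normal TTL so that a key created later in the source becomes visible quickly.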

Code optimization includes code structure optimization and code content optimization. Code content optimization focuses on identifying code smells; there are many specific methods, too many to cover here. Code structure optimization focuses on adjusting the code design, which requires the ability to abstract and a good knowledge of domain-driven design. Therefore, an excellent programmer must also be an expert in a business domain.

For I/O-intensive applications, asynchronous transformation is an effective method. However, two factors make it hard to implement. The first is that a complete technical solution is required. Fortunately, efforts have been made to support asynchronous calls in commonly used middleware such as HSF, Tair, MetaQ, TDDL, and Sentinel. We recommend studying the Taobao architecture upgrade project, an unprecedented refactoring project in terms of scale. Its core is microservice-based design and asynchronous transformation based on reactive programming, and it shows that making an entire system asynchronous is a major undertaking. The second factor, which is especially important, is the learning cost: all team members must have a good knowledge of asynchronous and reactive programming models.
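To show why asynchrony pays off for I/O-intensive work, the sketch below uses Python's asyncio as a stand-in for the Java reactive stack discussed here: three simulated downstream calls are awaited one after another and then concurrently. The function names and latencies are hypothetical, and `asyncio.sleep` stands in for real network I/O.

```python
import asyncio
import time
from typing import Any, Callable, Coroutine


async def fetch(name: str, latency: float) -> str:
    # Stand-in for a non-blocking RPC or cache call; sleeping simulates I/O wait.
    await asyncio.sleep(latency)
    return f"{name}-result"


async def sequential() -> list[str]:
    # Each call starts only after the previous one finishes: time ~ sum of latencies.
    return [await fetch(n, 0.1) for n in ("user", "order", "price")]


async def concurrent() -> list[str]:
    # All calls are in flight at once and awaited together: time ~ max latency.
    return list(await asyncio.gather(*(fetch(n, 0.1) for n in ("user", "order", "price"))))


def timed(fn: Callable[[], Coroutine[Any, Any, list[str]]]) -> tuple[list[str], float]:
    start = time.perf_counter()
    result = asyncio.run(fn())
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for fn in (sequential, concurrent):
        result, elapsed = timed(fn)
        print(f"{fn.__name__}: {result} in {elapsed:.2f}s")
```

The concurrent version finishes in roughly the time of the slowest call rather than the sum of all three. Getting this benefit across a whole system also requires every client on the call path to be non-blocking, which is why a complete technical solution matters.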

When a single database can no longer meet service demands because data is growing rapidly, database or table partitioning is a common solution.

If you are developing a new system, we do not recommend that you perform database or table partitioning at the initial stage of development, unless the service depends on a large amount of data, because database and table partitioning complicate system design and development to a certain extent. If database or table partitioning is performed at the very beginning and the service developers have little experience, this can turn simple challenges into complex problems.

For example, once data is partitioned, primary keys cannot simply rely on each database's auto-increment column, because IDs would collide across shards, so they must be generated by another mechanism. The most commonly used and discussed mechanisms are Snowflake, which depends on the system clock, and TDDL generation policies, which can fulfill core requirements concerning performance, global uniqueness, and single-database incremental scale-out.
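For reference, here is a minimal Python sketch of the Snowflake layout (a 41-bit millisecond timestamp, a 10-bit worker ID, and a 12-bit per-millisecond sequence). The custom epoch, the class name, and the choice to raise an error on clock rollback instead of waiting are assumptions; TDDL's sequence mechanism is not shown.

```python
import threading
import time
from typing import Callable


class ClockMovedBackwardsError(Exception):
    """Raised when the system clock goes back; issuing IDs then could repeat one."""


class Snowflake:
    """Snowflake-style 64-bit IDs: 41-bit ms timestamp | 10-bit worker | 12-bit sequence."""

    EPOCH_MS = 1_577_836_800_000  # 2020-01-01T00:00:00Z, an arbitrary custom epoch
    WORKER_BITS = 10
    SEQUENCE_BITS = 12
    MAX_WORKER = (1 << WORKER_BITS) - 1
    SEQUENCE_MASK = (1 << SEQUENCE_BITS) - 1

    def __init__(self, worker_id: int,
                 now_ms: Callable[[], int] = lambda: time.time_ns() // 1_000_000) -> None:
        if not 0 <= worker_id <= self.MAX_WORKER:
            raise ValueError("worker_id out of range")
        self.worker_id = worker_id
        self.now_ms = now_ms
        self.last_ms = -1
        self.sequence = 0
        self.lock = threading.Lock()

    def next_id(self) -> int:
        with self.lock:
            ts = self.now_ms()
            if ts < self.last_ms:
                # This is Snowflake's dependence on system time.
                raise ClockMovedBackwardsError(f"clock moved back {self.last_ms - ts} ms")
            if ts == self.last_ms:
                self.sequence = (self.sequence + 1) & self.SEQUENCE_MASK
                if self.sequence == 0:
                    # 4,096 IDs already issued this millisecond: wait for the next one.
                    while ts <= self.last_ms:
                        ts = self.now_ms()
            else:
                self.sequence = 0
            self.last_ms = ts
            return ((ts - self.EPOCH_MS) << (self.WORKER_BITS + self.SEQUENCE_BITS)) \
                | (self.worker_id << self.SEQUENCE_BITS) | self.sequence
```

Because the timestamp occupies the high bits, IDs from one generator increase over time, and IDs from different generators stay distinct as long as each process is given a unique worker ID.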

After database or table partitioning, cross-database and cross-table queries must be considered and avoided as far as possible. However, an ordering service needs to split the data along different dimensions for users and sellers. For example, we can use the user and the seller as the shard keys of the primary database and the secondary database, respectively. Even after partitioning, complex multi-condition queries from the operations management back office will run slowly against individual databases. For such queries, Elasticsearch can be used when the data volume is moderate, and Elasticsearch combined with HBase can be used for massive data.
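The user/seller split can be sketched in Python with in-memory dictionaries standing in for the databases: each order is written to a primary copy sharded by user (buyer) and a secondary copy sharded by seller, so each side's queries touch only one shard. The class names, shard counts, and modulo routing are hypothetical; a real system might synchronize the secondary copy asynchronously (for example, from the primary's binlog) rather than writing both copies directly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    buyer_id: int
    seller_id: int
    amount_cents: int


class ShardedOrderStore:
    """Hypothetical order store: primary sharded by buyer, secondary by seller."""

    def __init__(self, buyer_shards: int, seller_shards: int) -> None:
        # Each dict stands in for one physical database: order_id -> Order.
        self.buyer_dbs: list[dict[int, Order]] = [{} for _ in range(buyer_shards)]
        self.seller_dbs: list[dict[int, Order]] = [{} for _ in range(seller_shards)]

    def _buyer_db(self, buyer_id: int) -> dict[int, Order]:
        return self.buyer_dbs[buyer_id % len(self.buyer_dbs)]

    def _seller_db(self, seller_id: int) -> dict[int, Order]:
        return self.seller_dbs[seller_id % len(self.seller_dbs)]

    def save(self, order: Order) -> None:
        # Write both copies; each copy uses a different shard key.
        self._buyer_db(order.buyer_id)[order.order_id] = order
        self._seller_db(order.seller_id)[order.order_id] = order

    def orders_of_buyer(self, buyer_id: int) -> list[Order]:
        # Single-shard query on the primary copy; no cross-database scan.
        db = self._buyer_db(buyer_id)
        return sorted((o for o in db.values() if o.buyer_id == buyer_id),
                      key=lambda o: o.order_id)

    def orders_of_seller(self, seller_id: int) -> list[Order]:
        # Single-shard query on the secondary copy.
        db = self._seller_db(seller_id)
        return sorted((o for o in db.values() if o.seller_id == seller_id),
                      key=lambda o: o.order_id)
```

A query that filters on neither shard key, such as a multi-condition search from the back office, would have to scan every shard, which is why such queries are better served by a separate search store like Elasticsearch.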

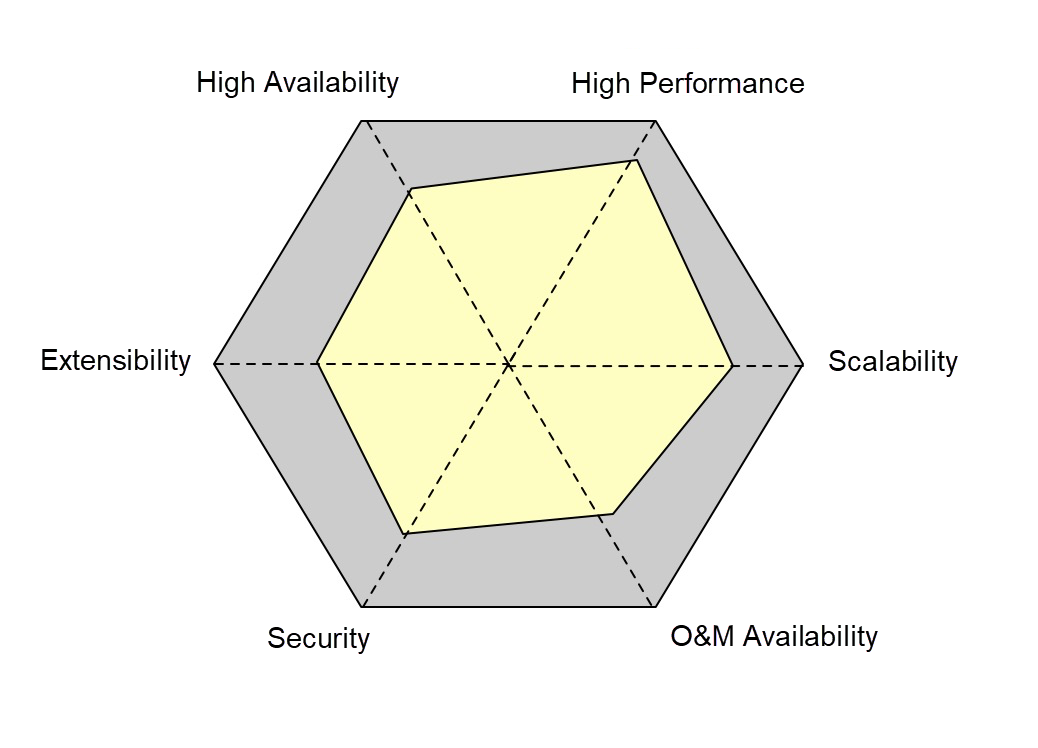

Finally, let's talk about system evaluation. In the R&D stage, we can evaluate a system along six dimensions: performance, availability, extensibility, scalability, security, and maintainability. There is no one-size-fits-all standard; evaluation results must be based on actual service conditions. For example, an operations management platform can be considered highly available if multiple instances are deployed, whereas for an online transaction system, deployment across multiple data centers is only a basic requirement for high availability.

During system evaluation, we rate how well the system satisfies each of the six dimensions on a three-level scale (very unsatisfactory, unsatisfactory, or satisfactory) and combine these ratings with actual service conditions to identify the system's shortcomings. Note also that these dimensions are not independent of each other: when refactoring the system to improve one dimension, we must consider the impact on the others.

Refactoring a system is not easy, but the difficulty does not lie in solving specific problems. Refactoring is a systematic project, and the biggest challenge is selecting the optimal solution after weighing a wide range of options and factors.

More Posts by amap_tech