As a leading navigation service in China, AMAP collects data around the clock from its large user base and partners, and its users also actively contribute valuable feedback. These inputs are integral to the development and improvement of AMAP and serve as starting points for app optimization. This article describes how AMAP uses machine learning to automate the processing of large volumes of user intel and improve its efficiency.

The following are key terms used in this article and their definitions.

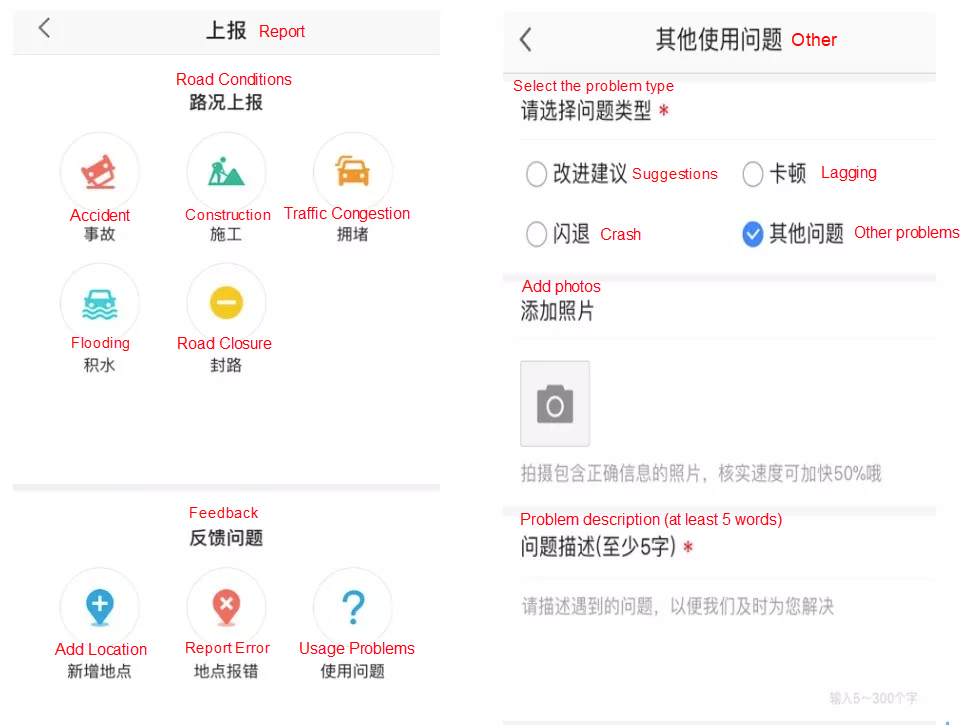

The typical types and options for user feedback are shown below:

AMAP users send feedback from the AMAP mobile app or the AMAP website. The following feedback example shows the options and the description input field. The Issue Source, Main Category, Subcategory, and Road Name display the options selected by the user and the User Description shows a short text description. These are the main data points we use.

| Ticket No. | Serial No. | Time | Issue Source | Main Category | Subcategory | User Description | Road Name |
|---|---|---|---|---|---|---|---|
| 30262 | | 2017/3/30 7:34 | Navigation interface | | | The broken bridge on Huicheng South Road has been repaired and the road has been opened to traffic. | |

After sending feedback, users hope for a quick response. However, this is difficult for AMAP since we receive hundreds of thousands of feedback submissions each day.

After the information is received, it is first processed through rule-based classification. AMAP then validates the data related to specific roads and uses it to update the navigation geodata.

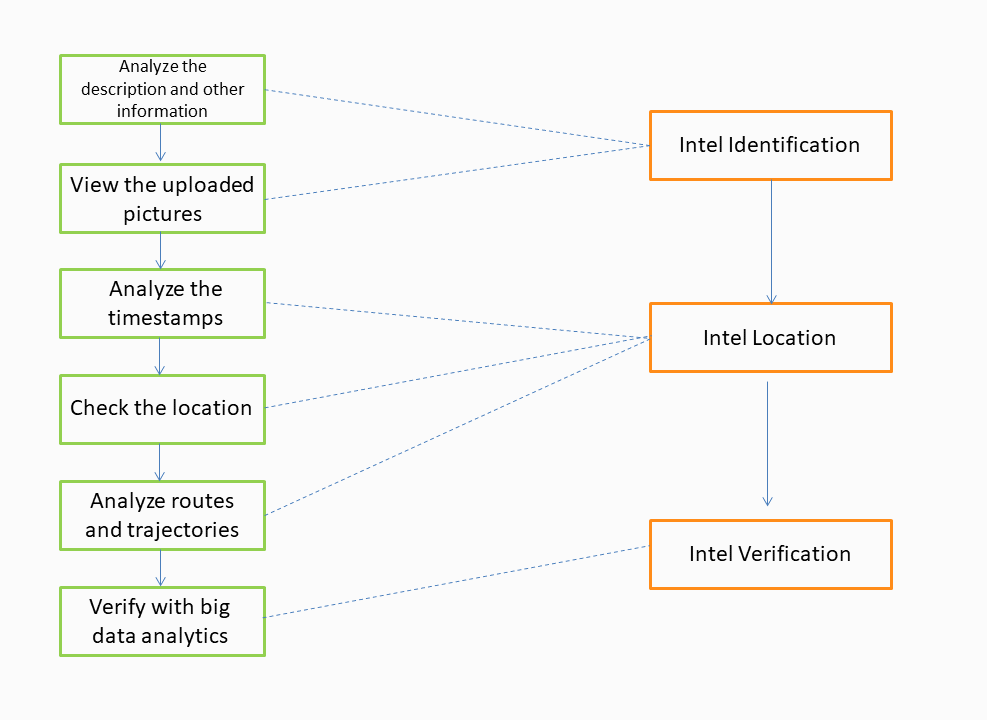

User intel is identified, located, and verified as follows:

1) The intel is identified, or labeled, based on the type of issue reported.

2) The intel location is identified to obtain the GPS coordinates for where the problem occurred.

3) After intel is identified and located, its label information (including road names) is verified.

The workflow is illustrated in the following diagram:

AMAP takes user feedback seriously and strives to resolve the reported problems. If the type and location of an issue cannot be deduced from the information provided, AMAP tries to determine them from the user's route and trajectory information.

Our original response procedure had several drawbacks: low accuracy of rule-based classification, complex manual validation processes, high skill requirements for staff, low efficiency, and a high rate of incorrectly discarded feedback.

To address these problems, we introduced machine learning to drive data-driven performance improvements. We split the old business operations into stratified levels and classified user intel with algorithms rather than rules. We divided manual validation into intel identification, location, and verification, each carried out by dedicated personnel to improve efficiency. Finally, we used algorithms to automate the identification process.

The original user feedback process started with rule-based classification and proceeded to manual identification, location, and verification, after which each piece of feedback was placed into one of nearly 100 subcategories. Only then could its higher-level category be determined and its position within the overall processing hierarchy be established.

In other words, this was not a workflow but a single complicated step that even skilled employees could not complete efficiently. Furthermore, because the whole procedure depended heavily on human intervention, the results were easily swayed by individual judgment.

To address this issue, we reorganized and split the operations and adopted machine learning and process automation to increase efficiency.

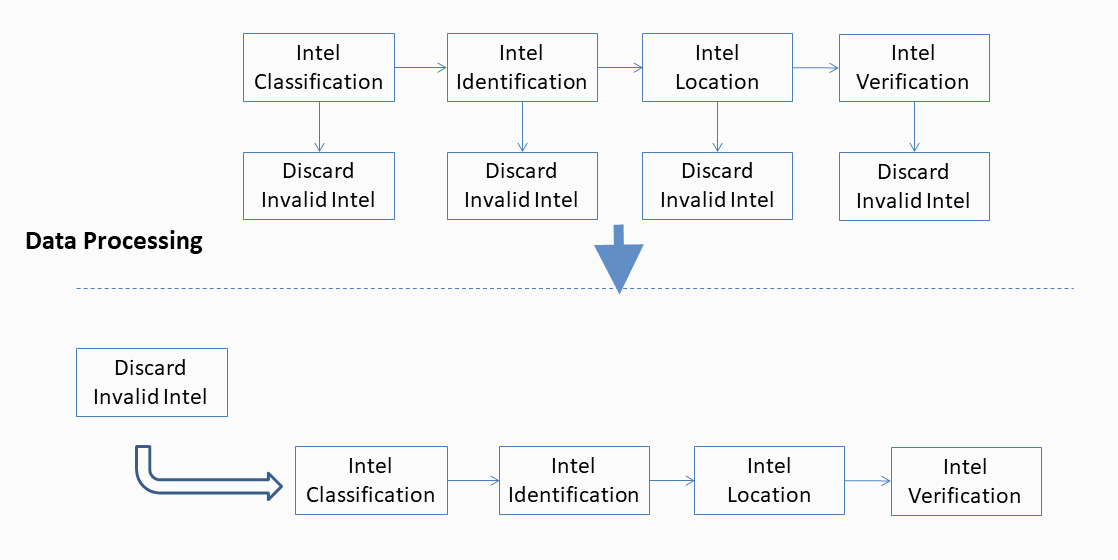

First, the received user intel is filtered to retain only valid information. Afterward, the workflow is divided into six stages: first-stage operations, second-stage operations, third-stage operations, intel identification, intel location, and intel verification.

As shown in the preceding diagram, the first three stages automate the classification of user intel, and only the last three stages depend on human operators. In this way, the process is stratified and automated into a streamlined structure in which each manual step is handled by a dedicated employee, significantly increasing efficiency.

Before submitting feedback, users must select from several options, such as the Issue Source, some of which have default values and require further selections. Some users get impatient and select options at random, and even careful users may be confused by the number of options. This makes the user description the most valuable source of information, because users must type it manually instead of just selecting an option.

There are three types of descriptions: blank descriptions, unintelligible descriptions, and descriptions with meaningful input. The first two types are called invalid descriptions, and descriptions of the third type are called valid descriptions.
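The article does not say how unintelligible descriptions are detected. As a minimal sketch, assuming a purely hypothetical heuristic (fewer than four letters, digits, or CJK characters, or a single repeated character, means unintelligible), the three-way split could look like this:

```python
from enum import Enum


class DescriptionType(Enum):
    BLANK = "blank"
    UNINTELLIGIBLE = "unintelligible"
    VALID = "valid"


# Hypothetical threshold: minimum number of letters, digits, or CJK characters
# for a description to count as meaningful. Not AMAP's actual criterion.
MIN_MEANINGFUL_CHARS = 4


def classify_description(text: str) -> DescriptionType:
    """Sort a user description into blank, unintelligible, or valid (illustrative rules)."""
    stripped = (text or "").strip()
    if not stripped:
        return DescriptionType.BLANK
    # str.isalnum() is True for letters and digits in any script, including CJK.
    meaningful = [ch for ch in stripped if ch.isalnum()]
    if len(meaningful) < MIN_MEANINGFUL_CHARS:
        return DescriptionType.UNINTELLIGIBLE
    # One character repeated over and over (e.g. "aaaaaa") carries no information.
    if len(set(meaningful)) == 1:
        return DescriptionType.UNINTELLIGIBLE
    return DescriptionType.VALID


def is_valid(text: str) -> bool:
    return classify_description(text) is DescriptionType.VALID
```

In practice, a learned model or a richer rule set would likely replace these hand-written rules; the point is only that blank and unintelligible descriptions are filtered out before the valid ones are classified.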

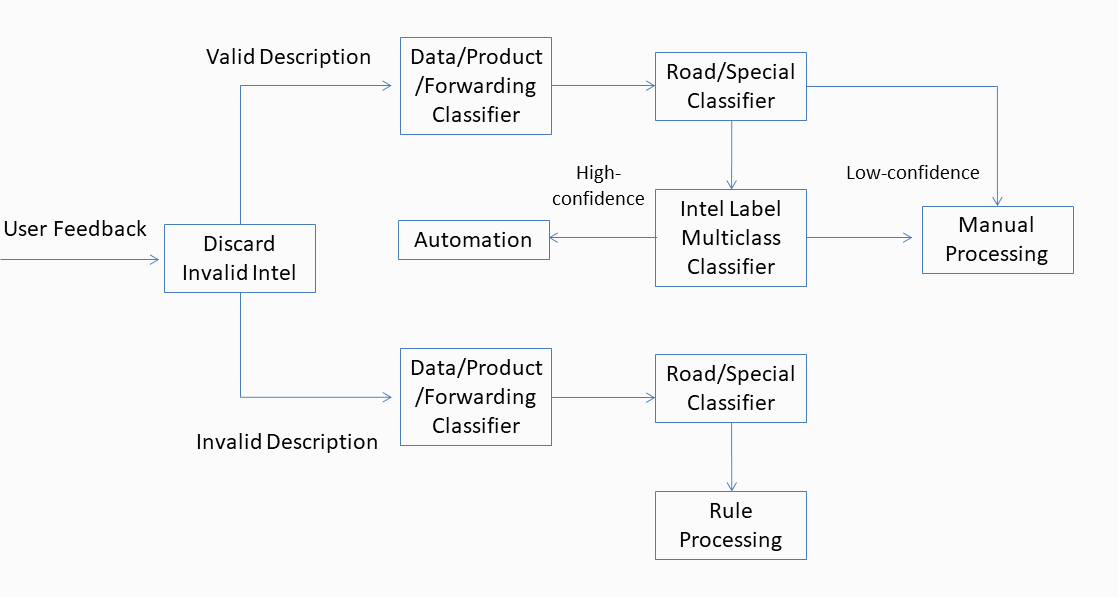

After removing invalid feedback, we process the descriptions by applying different procedures according to the description type.

1) Valid descriptions go through a two-stage process. In the first stage, they are categorized into three types: data, product, and forwarding. Product and forwarding descriptions are automatically processed, and the data descriptions proceed to the second stage where they are subcategorized into the road or special category. Special category descriptions refer to non-road situations, such as restrictions and walking or biking navigation.

2) The categories and processing methods for invalid descriptions are mostly the same as those of the valid descriptions, just with different samples and a different model. Also, invalid descriptions are processed by rules or by AMAP personnel in the final stage, not with algorithms.
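The two-stage routing for valid descriptions can be sketched as follows. The classifier arguments are placeholders for the trained models; the keyword-based stand-ins `toy_first_stage` and `toy_second_stage` are hypothetical and exist only to make the example runnable.

```python
from typing import Callable

Classifier = Callable[[str], str]


def route_description(text: str, first_stage: Classifier, second_stage: Classifier) -> str:
    """Route a valid description through the two-stage hierarchy.

    Stage 1 returns "data", "product", or "forwarding". Product and forwarding
    intel is handled automatically; only data intel goes on to stage 2, which
    returns "road" or "special".
    """
    top_level = first_stage(text)
    if top_level in ("product", "forwarding"):
        return top_level
    if top_level != "data":
        raise ValueError(f"unexpected first-stage label: {top_level!r}")
    return "data/" + second_stage(text)


# Hypothetical keyword stand-ins for the trained models, for illustration only.
def toy_first_stage(text: str) -> str:
    return "product" if "app" in text.lower().split() else "data"


def toy_second_stage(text: str) -> str:
    words = set(text.lower().split())
    return "special" if {"walking", "biking"} & words else "road"
```

Splitting the decision this way lets each stage use its own model, samples, and thresholds, which matches the stratified structure described above.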

The following diagram describes the operational model applicable to the current business process after stratification.

The classification and verification of user intel are fundamentally a multiclass document classification problem. We therefore designed the approach for each data type around that type's characteristics.

First, the text must be converted to vectors. The traditional approach is the vector space model, which represents each document with one-hot (bag-of-words) or term frequency-inverse document frequency (tf-idf) features whose dimensionality equals the vocabulary size. However, such statistical methods typically suffer from data sparsity and dimensionality explosion when applied to a large-scale corpus.
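To make the dimensionality problem concrete, here is a minimal sketch of one common tf-idf variant (term count divided by document length, multiplied by log(N/df)); the weighting AMAP actually used is not specified. Vectors are stored sparsely as dicts, but a dense representation would need one dimension per vocabulary term.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[list[str]]) -> tuple[list[str], list[dict[str, float]]]:
    """Compute sparse tf-idf vectors for tokenized documents.

    Returns the sorted vocabulary and, for each document, a dict mapping
    term -> weight. A dense vector would have len(vocabulary) entries,
    almost all of them zero.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vocabulary = sorted(doc_freq)
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vocabulary, vectors
```

On a real corpus of user descriptions, the vocabulary, and therefore the dense dimensionality, can grow very large while each description touches only a handful of terms, which is exactly the sparsity problem that motivates dense word embeddings.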

To avoid these problems and better capture relationships between words, such as semantic similarity or how often words appear near each other, we used word embeddings produced by Word2vec, the model family developed by Tomas Mikolov and colleagues. Word2vec takes a large text corpus as input and produces a vector space in which words that share common contexts in the corpus are located close to one another. It can be regarded as a form of contextual abstraction.
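Word2vec comes in two architectures, CBOW and skip-gram, and the article does not say which one was used. The sketch below shows only how skip-gram derives its training examples from a tokenized sentence, plus the cosine similarity typically used to compare the resulting embeddings; the training itself (for example, with negative sampling) is omitted.

```python
import math


def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Generate (center, context) pairs for skip-gram training.

    Each word is paired with every word at most `window` positions away.
    Training then adjusts the embeddings so that a center word predicts its
    context words, which pulls words with similar contexts close together.
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / norm
```

For example, `skipgram_pairs(["the", "bridge", "is", "open"], window=1)` yields `("the", "bridge")`, `("bridge", "the")`, `("bridge", "is")`, and so on, one pair per neighbor.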

The most crucial step is selecting an appropriate model from a set of candidates. Compared with traditional statistical methods that require complex feature engineering, deep learning is gaining popularity in the data science field. Recurrent neural networks (RNNs) are a popular class of deep learning models used extensively in natural language processing (NLP). RNNs use their internal state (memory) to process variable-length input sequences, so they handle sequential text better than feedforward neural networks and capture context more effectively. However, during training, RNNs may suffer from vanishing and exploding gradients. We therefore used long short-term memory (LSTM) networks, whose gated cell state mitigates the vanishing gradient problem.
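The following is a minimal sketch of a single LSTM time step in plain Python, following the standard gate equations; the parameter layout (`GateParams` and the gate names) is a hypothetical choice for illustration. The key line is the additive cell-state update, which lets information and gradients pass through many steps when the forget gate stays close to 1.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


@dataclass
class GateParams:
    W: Matrix  # hidden_size x input_size, applied to the current input
    U: Matrix  # hidden_size x hidden_size, applied to the previous hidden state
    b: Vector  # hidden_size bias


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def _pre_activation(p: GateParams, x: Vector, h: Vector) -> Vector:
    """Compute W·x + U·h + b row by row."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(p.W, p.U, p.b)
    ]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One LSTM time step; params has keys 'input', 'forget', 'output', 'cell'."""
    i = [_sigmoid(v) for v in _pre_activation(params["input"], x, h_prev)]
    f = [_sigmoid(v) for v in _pre_activation(params["forget"], x, h_prev)]
    o = [_sigmoid(v) for v in _pre_activation(params["output"], x, h_prev)]
    g = [math.tanh(v) for v in _pre_activation(params["cell"], x, h_prev)]
    # Additive update: the old cell state is scaled by f rather than being
    # pushed through a squashing nonlinearity at every step as in a plain RNN.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def lstm_last_hidden(sequence: List[Vector], params: Dict[str, GateParams],
                     hidden_size: int) -> Vector:
    """Run the LSTM over a sequence of word vectors and return the last hidden state."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h
```

With a large positive forget-gate bias and a large negative input-gate bias, `lstm_step` leaves the cell state essentially unchanged, which is the mechanism that lets an LSTM carry information across long descriptions.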

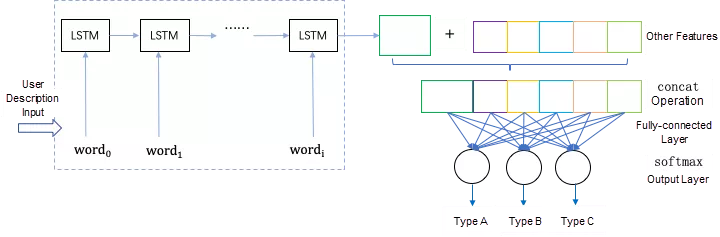

We fed the word vectors extracted from user intel into the LSTM and used the output of the last LSTM unit as the text features, which were merged with the options the user selected to form the model input. This input was passed to a fully connected layer, whose outputs fed the Softmax classification layer. The Softmax layer produced a probability between 0 and 1 for each class, and these probabilities served as the basis for intel classification. The following diagram shows the network architecture for multiclass classification:
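A minimal sketch of this classification head follows. It assumes the selected options are one-hot encoded and that a single fully connected layer maps the merged features directly to class logits; the article does not state the option encoding, the layer sizes, or whether additional hidden layers were used. The Softmax subtracts the maximum logit before exponentiating, a standard trick that avoids overflow without changing the result.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert logits to probabilities between 0 and 1 that sum to 1."""
    m = max(logits)  # shifting by the max leaves the result unchanged but avoids overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(index: int, size: int) -> List[float]:
    """Encode a selected option (e.g., an Issue Source value) as a one-hot vector."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def classify(text_features: Sequence[float], option_features: Sequence[float],
             W: List[List[float]], b: Sequence[float]) -> List[float]:
    """Merge text and option features, apply a fully connected layer, then Softmax.

    text_features: the last LSTM hidden state. W has one row per class and
    len(text_features) + len(option_features) columns.
    """
    merged = list(text_features) + list(option_features)
    logits = [sum(w * x for w, x in zip(row, merged)) + bias
              for row, bias in zip(W, b)]
    return softmax(logits)
```

The predicted class is the index of the largest probability; the probabilities themselves are what the per-class thresholds described later operate on.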

After sorting out the business logic, determining the problem-solving steps, confirming the sample annotation schedule, and running the initial version of the model, we thought that all that was left to do was to perform parameter tuning and optimization, wait for enough samples to be accumulated, train the model, and deploy the model in the production environment.

The actual situation was far more complicated. We faced a series of problems, such as a lack of training data, poor model performance, and poor hyperparameter tuning results. The following are some of the issues we encountered during optimization and iteration, and the measures we took to mitigate them.

The first problem we encountered when applying the model to intel identification was a serious shortage of samples. To improve model performance, we modified a pre-trained model and fine-tuned it on our data. As the number of annotated samples increased, we observed an improvement of approximately 3% on datasets of all sizes.

Parameter tuning is more of an art than a science: the same effort can lead to different, and not necessarily good, results. We conducted nearly 30 rounds of parameter tuning and learned the following lessons:

1) The initialization step is indispensable and can be critical to the model's ultimate performance. We adopted SVD-based initialization.

2) Dropout and ensemble learning are necessary to combat overfitting. Dropout must be applied before the LSTM layers, especially for bidirectional LSTMs.

3) After testing several optimizers, including Adam, RMSprop, SGD, and AdaDelta, we chose Adam, which can be viewed as a combination of RMSprop and momentum. Although RMSprop is a very similar algorithm, Adam outperformed RMSprop alone in our tests.

4) A batch size of 128 is a good starting point. Larger batch sizes may not help and can even hurt performance. We also recommend trying a batch size of 64 on different datasets to optimize test performance.

5) Data shuffling is indispensable.
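Two of these lessons, the choice of Adam and per-epoch shuffling with a batch size around 128, can be illustrated with a short sketch. The Adam update below is the standard one, with the default β1 = 0.9, β2 = 0.999, and ε = 1e-8 from the original Adam paper; it shows why Adam is described as RMSprop plus momentum: `m` is a momentum-style average of gradients and `v` is an RMSprop-style average of squared gradients. AMAP's actual learning rate and optimizer settings are not given in the article, and SVD-based initialization is not shown.

```python
import math
import random
from typing import Iterator, List, Optional


def minibatches(n_samples: int, batch_size: int = 128,
                rng: Optional[random.Random] = None) -> Iterator[List[int]]:
    """Yield shuffled index batches; call once per epoch to reshuffle every epoch."""
    rng = rng or random.Random()
    order = list(range(n_samples))
    rng.shuffle(order)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


class Adam:
    """Adam optimizer for a flat list of scalar parameters."""

    def __init__(self, n_params: int, lr: float = 0.001, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # momentum: moving average of gradients
        self.v = [0.0] * n_params  # RMSprop: moving average of squared gradients
        self.t = 0

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        """Return updated parameters after one Adam step."""
        self.t += 1
        updated = []
        for k, (p, g) in enumerate(zip(params, grads)):
            self.m[k] = self.beta1 * self.m[k] + (1 - self.beta1) * g
            self.v[k] = self.beta2 * self.v[k] + (1 - self.beta2) * g * g
            # Bias correction compensates for initializing m and v at zero.
            m_hat = self.m[k] / (1 - self.beta1 ** self.t)
            v_hat = self.v[k] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

As a quick check, minimizing (x - 2)² with `Adam(n_params=1, lr=0.05)` for a couple of thousand steps should drive x close to 2. In a real training loop, each batch from `minibatches` would supply the gradients for one `step` call.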

We used ensemble learning to improve low-accuracy models. After multiple tests, we selected the five best-performing models, each trained with different parameter settings, and combined them by voting. The ensemble's overall accuracy was 1.5% higher than that of the best individual model.
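Voting over several models can be sketched as a per-sample majority vote. This is a minimal illustration assuming hard (label) voting; the article does not say whether AMAP voted on labels or averaged probabilities. Ties go to the label encountered first, so listing the models from best to worst lets the stronger models break ties.

```python
from collections import Counter
from typing import List, Sequence


def majority_vote(predictions_per_model: Sequence[Sequence[str]]) -> List[str]:
    """Combine per-sample label predictions from several models by majority vote.

    predictions_per_model[m][s] is model m's predicted label for sample s.
    """
    n_samples = len(predictions_per_model[0])
    if any(len(preds) != n_samples for preds in predictions_per_model):
        raise ValueError("all models must predict the same number of samples")
    combined = []
    for s in range(n_samples):
        votes = Counter(preds[s] for preds in predictions_per_model)
        # most_common(1) returns the highest count; among equal counts it keeps
        # the label that was inserted into the Counter first.
        combined.append(votes.most_common(1)[0][0])
    return combined
```

Voting helps most when the individual models make different mistakes, which is one reason to ensemble models trained with different parameter settings.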

We also tried bidirectional LSTMs and different padding options, but neither proved effective for intel identification. This was because descriptions varied widely from user to user and showed no obvious differences in distribution.

When structural optimization and parameter tuning of the multiclass model hit a bottleneck, we found that the model still could not support fully automated processing. Possible causes included incomplete features, low information extraction accuracy, class imbalance, and too few samples per class.

To improve the algorithm and put it into production, we tried two approaches: a confidence model and per-class confidence thresholds. We ultimately chose the latter because it was simple and efficient.

The confidence model took the labels output by the classification model as its input. For each label, the corresponding samples were re-split into a training set and a validation set, and a binary classifier was trained on them. After training, only the results that the confidence model rated as high-confidence were applied.

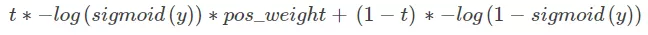

We tested the confidence model with binary cross-entropy, weighted cross-entropy, and ensemble methods. The weighted cross-entropy was calculated with the following formula:

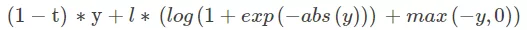

To avoid numerical overflow, the formula was rearranged as follows:

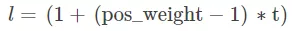

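The formula images are not reproduced in this text. For reference, here is a sketch of one widely used weighted cross-entropy, the form documented for TensorFlow's `tf.nn.weighted_cross_entropy_with_logits`, where x is the logit, z the binary target, and q the positive-class weight, together with the overflow-safe rearrangement documented alongside it. We assume, but cannot confirm from the text, that the images show this form.

```python
import math


def weighted_bce_naive(logit: float, target: float, pos_weight: float) -> float:
    """Direct form: -[q * z * log(sigmoid(x)) + (1 - z) * log(1 - sigmoid(x))].

    Breaks for large |x|: exp(-x) overflows for very negative x, and for large
    positive x sigmoid rounds to exactly 1.0, so log(1 - p) raises ValueError.
    """
    p = 1.0 / (1.0 + math.exp(-logit))
    return -(pos_weight * target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def weighted_bce_stable(logit: float, target: float, pos_weight: float) -> float:
    """Algebraically equivalent form that never exponentiates a large positive number.

    With l = 1 + (q - 1) * z:
        loss = (1 - z) * x + l * (log1p(exp(-|x|)) + max(-x, 0))
    """
    l = 1.0 + (pos_weight - 1.0) * target
    return (1.0 - target) * logit + l * (math.log1p(math.exp(-abs(logit))) + max(-logit, 0.0))
```

Both functions agree for moderate logits; only the stable version returns finite values such as `weighted_bce_stable(-1000.0, 1.0, 2.0)`, because `log(1 + exp(-x))` is split into `log1p(exp(-|x|)) + max(-x, 0)`, whose exponent is never positive.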

The results showed that binary cross-entropy did not achieve a significant improvement. The ensemble method achieved high recall at the 95% confidence level but did not reach the model's 98% confidence target.

We then borrowed the high-confidence prediction method used in the intel classification model, which sets a separate Softmax threshold for each class, and applied it to intel identification. This approach outperformed the confidence model and was therefore adopted to increase operating efficiency. In addition, for low-confidence predictions, we presented the top N recommended labels to simplify the business process.
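A minimal sketch of per-class Softmax thresholds with a top-N fallback follows. The threshold values and label names are hypothetical; the article does not say how the thresholds were chosen (tuning each one on held-out data to reach a target precision is one common option).

```python
from typing import Dict, List, Sequence, Tuple, Union

Decision = Tuple[str, Union[str, List[str]]]


def decide(probs: Sequence[float], labels: Sequence[str],
           thresholds: Dict[str, float], top_n: int = 3) -> Decision:
    """Route one Softmax prediction using a separate threshold per class.

    Returns ("auto", label) when the most likely class clears its own threshold;
    otherwise ("manual", candidates) with the top_n labels for a person to choose from.
    """
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    best_label, best_prob = ranked[0]
    if best_prob >= thresholds[best_label]:
        return "auto", best_label
    return "manual", [label for label, _ in ranked[:top_n]]


# Hypothetical per-class thresholds and labels, for illustration only.
THRESHOLDS = {"road": 0.90, "special": 0.95, "product": 0.80, "forwarding": 0.85}
LABELS = ["road", "special", "product", "forwarding"]
```

With these values, a top probability of 0.90 is processed automatically for "road" but sent to manual review, with three suggested labels, for "special". This is what per-class thresholds buy over a single global cutoff.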

Algorithm performance: The operational requirements were met. The accuracy for product-related intel was above 96%, and the recall for data-related intel reached 99%.

Application: Combined with other strategies, this approach increased automation. After rule optimization, our manpower needs were greatly reduced and labor costs fell by 80%. The resulting smooth workflow solved the challenge of responding to feedback at the back end.

Algorithm performance: In our strategy, high-confidence intel was processed automatically, while low-confidence intel was annotated manually. The results showed that the accuracy of intel identification increased to above 96%.

Application: After the classification model for intel labels was put into production, employee productivity increased by more than 30% due to the different processes for high- and low-confidence labels.

During this project, we established a system of methods and principles for solving complex business problems and learned from our hands-on experience with NLP applications. These methods have been implemented in other projects, where they have proven effective, and will be reinforced and developed in future practices. AMAP will strive to deliver services that exceed customers' expectations and make unremitting efforts to improve our products down to the smallest detail. At the same time, we will utilize automation in the problem-solving process to take our efficiency to a higher level.
