By Wang Jianming, Senior Engineer

In the SQL Server Best Practices Series, we have discussed three key topics, including: Using Asymmetric Keys to Implement Column Encryption, Database Recovery Models and Backups, and Monitoring Backup and Restore Progress.

In this article, we'll share how SQL Server can use filegroups to implement the cold and hot data isolation backup solution.

In our example scenario, we'll assume that a company has a critical large database (over 10 TB) and faces the following scenarios:

As a Database Administrator, you need to optimize the design of the database and Backup Recovery System to make backup and restore more efficient. But the question is, how can you do this on a SQL Server?

This article will not focus on the details of filegroups. However, as it is the core technology involved, it is necessary to briefly introduce filegroups in SQL Server in terms of their advantages, creation, and usage.

SQL Server supports the storage of table and index data in Non-Primary filegroups, which provides the following benefits when a database has multiple filegroups:

We can create a filegroup directly when we create a database. The code is as follows:

USE master

GO

EXEC sys.xp_create_subdir 'C:\SQLServer\Data\'

EXEC sys.xp_create_subdir 'C:\SQLServer\Logs\'

CREATE DATABASE [TestFG]

ON PRIMARY

( NAME = N'TestFG', FILENAME = N'C:\SQLServer\Data\TestFG.mdf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FG2010]

( NAME = N'FG2010', FILENAME = N'C:\SQLServer\Data\FG2010.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FG2011]

( NAME = N'FG2011', FILENAME = N'C:\SQLServer\Data\FG2011.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FG2012]

( NAME = N'FG2012', FILENAME = N'C:\SQLServer\Data\FG2012.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB )

LOG ON

( NAME = N'TestFG_log', FILENAME = N'C:\SQLServer\Logs\TestFG_log.ldf' , SIZE = 5MB , FILEGROWTH = 50MB)

GONote:

To ensure the load balancing capability of the database filegroup I/O, keep consistent the initial size and increment parameters of all files to ensure the polling scheduling allocation algorithm works properly.

If the database already exists, we can also add filegroups. The code is as follows:

-- Add filegroup FG2013

USE master

GO

ALTER DATABASE [TestFG] ADD FILEGROUP [FG2013];

-- Add data file to FG2013

ALTER DATABASE [TestDb]

ADD FILE (NAME = FG2013, SIZE = 5MB , FILEGROWTH = 50MB ,FILENAME = N'C:\SQLServer\Data\FG2013.ndf')

TO FILEGROUP [FG2013]

GO

USE [TestFG]

GO

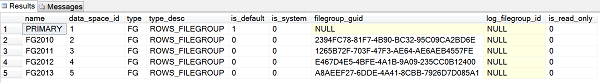

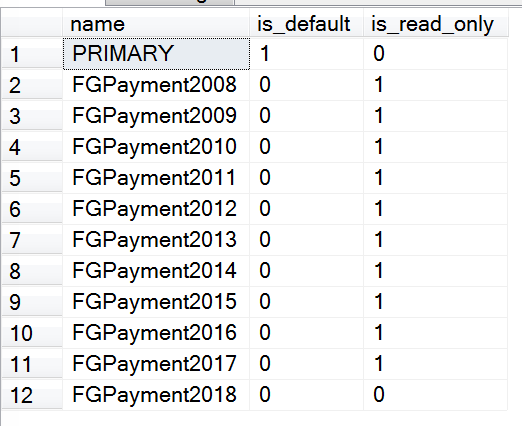

SELECT * FROM sys.filegroupsThe final filegroup information is shown as follows:

After filegroups are created, we can put the table and index into the corresponding filegroup. For example:

Put the clustered index into the PRIMARY filegroup; table and index data are placed in the FG2010 filegroup. The code is as follows:

USE [TestFG]

GO

CREATE TABLE [dbo].[ Orders_2010](

[OrderID] [int] IDENTITY(1,1) NOT NULL,

[OrderDate] [datetime] NULL,

CONSTRAINT [PK_Orders_2010] PRIMARY KEY CLUSTERED

(

[OrderID] ASC

) ON [PRIMARY]

) ON [FG2010]

GO

CREATE NONCLUSTERED INDEX IX_OrderDate

ON [dbo].[ Orders_2010](OrderDate)

ON [FG2010];Now that we have briefly introduced filegroups as described in the introduction, we are going to cover the use of SQL Server filegroups to implement the solution design for cold and hot data isolation backup.

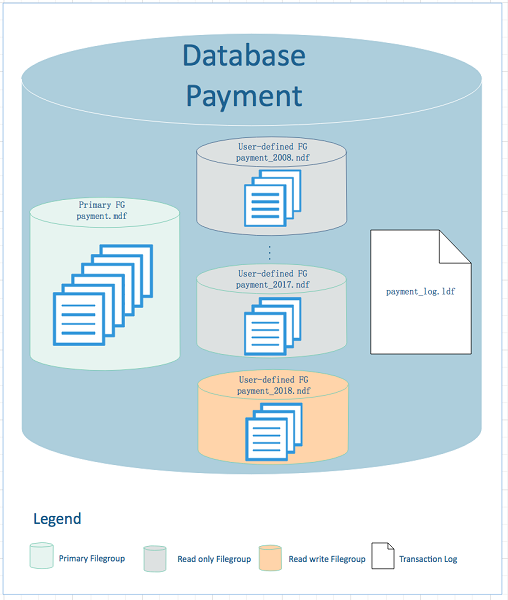

The payment database is too large (over 10 TB), so a single full backup takes more than 20 hours. If a full backup is performed normally, the backup file will be too large and the process will take too long, and even normal operations may be affected due to the I/O capacity consumption of the backup operation. If we think about it carefully, we find that, although the database is very large, the amount of data that is undergoing data change operations is not large compared with the entire database. This is because only the data in the table for the current year is constantly changing (hot data), and the data in tables for previous years are not modified (cold data). So, we design the database to put the data in tables for previous years into read-only filegroups and the data in the table for the current year into read-write filegroups. The backup system only needs to back up the Primary and the filegroup containing the table for the current year (but it still needs to perform a one-time full backup of the database the first time). This greatly reduces the I/O capacity consumed by the backup, implements the isolation backup operation for hot and cold data, distributes the I/O pressure of files, and ultimately achieves optimization of the database design and backup system.

According to the text analysis above, a beautiful design diagram and visual representation is shown as follows:

The design diagram is detailed below for a more intuitive and thorough understanding of the design solution.

The entire database contains 13 files, including:

After the solution design is completed, the next step is its collective implementation. The specific implementation includes:

While creating the database, we create the Primary filegroup and the filegroup for 2008 to 2017. Note that the initial size and increment of files in all filegroups must be the same. The code is as follows:

USE master

GO

EXEC sys.xp_create_subdir 'C:\DATA\Payment\Data\'

EXEC sys.xp_create_subdir 'C:\DATA\Payment\Log\'

CREATE DATABASE [Payment]

ON PRIMARY

( NAME = N'Payment', FILENAME = N'C:\DATA\Payment\Data\Payment.mdf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2008]

( NAME = N'FGPayment2008', FILENAME = N'C:\DATA\Payment\Data\Payment_2008.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2009]

( NAME = N'FGPayment2009', FILENAME = N'C:\DATA\Payment\Data\Payment_2009.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2010]

( NAME = N'FGPayment2010', FILENAME = N'C:\DATA\Payment\Data\Payment_2010.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2011]

( NAME = N'FGPayment2011', FILENAME = N'C:\DATA\Payment\Data\Payment_2011.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2012]

( NAME = N'FGPayment2012', FILENAME = N'C:\DATA\Payment\Data\Payment_2012.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2013]

( NAME = N'FGPayment2013', FILENAME = N'C:\DATA\Payment\Data\Payment_2013.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2014]

( NAME = N'FGPayment2014', FILENAME = N'C:\DATA\Payment\Data\Payment_2014.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2015]

( NAME = N'FGPayment2015', FILENAME = N'C:\DATA\Payment\Data\Payment_2015.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2016]

( NAME = N'FGPayment2016', FILENAME = N'C:\DATA\Payment\Data\Payment_2016.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB ),

FILEGROUP [FGPayment2017]

( NAME = N'FGPayment2017', FILENAME = N'C:\DATA\Payment\Data\Payment_2017.ndf' , SIZE = 5MB ,FILEGROWTH = 50MB )

LOG ON

( NAME = N'Payment_log', FILENAME = N'C:\DATA\Payment\Log\Payment_log.ldf' , SIZE = 5MB , FILEGROWTH = 50MB)

GOConsidering that we add new filegroups to the database every year, the filegroups for 2018 are created separately as follows:

-- Add filegroup FGPayment2018

USE master

GO

ALTER DATABASE [Payment] ADD FILEGROUP [FGPayment2018];

-- Add data file to FGPayment2018

ALTER DATABASE [Payment]

ADD FILE (NAME = FGPayment2018, SIZE = 5MB , FILEGROWTH = 50MB ,FILENAME = N'C:\DATA\Payment\Data\Payment_2018.ndf')

TO FILEGROUP [FGPayment2018]

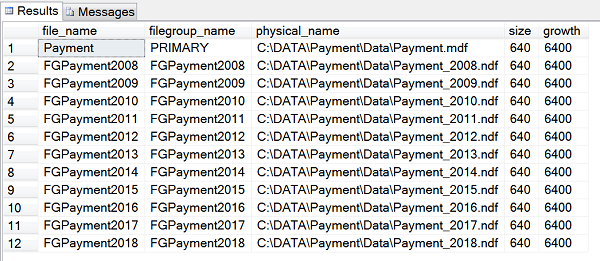

GOFinally, reconfirm the database filegroup information. The code is as follows:

USE [Payment]

GO

SELECT file_name = mf.name, filegroup_name = fg.name, mf.physical_name,mf.size,mf.growth

FROM sys.master_files AS mf

INNER JOIN sys.filegroups as fg

ON mf.data_space_id = fg.data_space_id

WHERE mf.database_id = db_id('Payment')

ORDER BY mf.type;The result is shown in the figure below:

After the database and corresponding filegroups are created, we create corresponding tables and insert some test data, as follows:

USE [Payment]

GO

CREATE TABLE [dbo].[ Payment_2008](

[Payment_ID] [bigint] IDENTITY(12008,100) NOT NULL,

[OrderID] [bigint] NOT NULL,

CONSTRAINT [PK_Payment_2008] PRIMARY KEY CLUSTERED

(

[Payment_ID] ASC

) ON [FGPayment2008]

) ON [FGPayment2008]

GO

CREATE NONCLUSTERED INDEX IX_OrderID

ON [dbo].[ Payment_2008]([OrderID])

ON [FGPayment2008];

CREATE TABLE [dbo].[ Payment_2009](

[Payment_ID] [bigint] IDENTITY(12009,100) NOT NULL,

[OrderID] [bigint] NOT NULL,

CONSTRAINT [PK_Payment_2009] PRIMARY KEY CLUSTERED

(

[Payment_ID] ASC

) ON [FGPayment2009]

) ON [FGPayment2009]

GO

CREATE NONCLUSTERED INDEX IX_OrderID

ON [dbo].[ Payment_2009]([OrderID])

ON [FGPayment2009];

-- The creation of tables for 2010 to 2017 is omitted here. Refer to the above table creation and index code to complete it yourself

CREATE TABLE [dbo].[ Payment_2018](

[Payment_ID] [bigint] IDENTITY(12018,100) NOT NULL,

[OrderID] [bigint] NOT NULL,

CONSTRAINT [PK_Payment_2018] PRIMARY KEY CLUSTERED

(

[Payment_ID] ASC

) ON [FGPayment2018]

) ON [FGPayment2018]

GO

CREATE NONCLUSTERED INDEX IX_OrderID

ON [dbo].[ Payment_2018]([OrderID])

ON [FGPayment2018];Two things need special attention here:

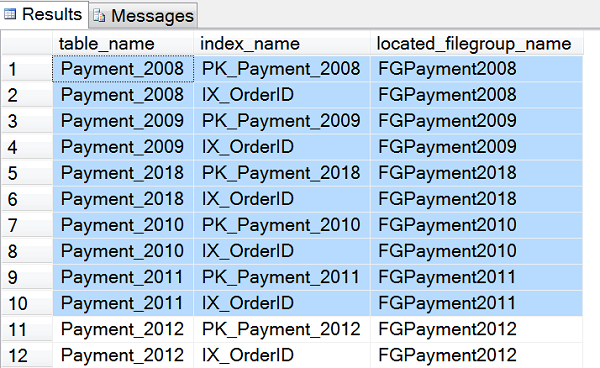

Next, we check the filegroup distribution on all the tables as follows:

USE [Payment]

GO

SELECT table_name = tb.[name], index_name = ix.[name], located_filegroup_name = fg.[name]

FROM sys.indexes ix

INNER JOIN sys.filegroups fg

ON ix.data_space_id = fg.data_space_id

INNER JOIN sys.tables tb

ON ix.[object_id] = tb.[object_id]

WHERE ix.data_space_id = fg.data_space_id

GOThe query results are partially truncated as follows, from which we can see that all tables and indexes are distributed to the corresponding filegroups as expected.

Finally, we enter some data in the corresponding tables for testing:

USE [Payment]

GO

SET NOCOUNT ON

INSERT INTO [Payment_2008] SELECT 2008;

INSERT INTO [Payment_2009] SELECT 2009;

-- The code for 2010¨C2017 has been omitted. Please complete it yourself

INSERT INTO [Payment_2018] SELECT 2018;After tables are created and the test data is initialized, we set the read and write properties of the filegroup. The code is as follows:

USE master

GO

ALTER DATABASE [Payment] MODIFY FILEGROUP [FGPayment2008] READ_ONLY;

ALTER DATABASE [Payment] MODIFY FILEGROUP [FGPayment2009] READ_ONLY;

-- The setting of the read-only property for the 2010¨C2017 filegroups is omitted here. Please complete it yourself

ALTER DATABASE [Payment] MODIFY FILEGROUP [FGPayment2018] READ_WRITE;Eventually, the read and write properties of the filegroups are as follows:

USE [Payment]

GO

SELECT name, is_default, is_read_only FROM sys.filegroups

GOThe screenshot is shown in the following figure:

After all the filegroups have been created successfully and the read and write properties have been configured, we perform a full backup, differential backup, and database-level log backup for the readable and writable filegroups in the database. To facilitate testing, we insert a piece of data between the two backups. Generally, the backup operation consists of the following:

-- Make a one-time full backup of payment database

USE [master];

GO

BACKUP DATABASE [Payment]

TO DISK = N'C:\DATA\Payment\BACKUP\Payment_20180316_full.bak'

WITH COMPRESSION, Stats=5

;

GO

-- For testing, init one record

USE [Payment];

GO

INSERT INTO [dbo].[ Payment_2018] SELECT 201801;

GO

-- Make a full backup for each writable filegoup (just backup FGPayment2018 as an example)

BACKUP DATABASE [Payment]

FILEGROUP = 'FGPayment2018'

TO DISK = 'C:\DATA\Payment\BACKUP\Payment_FGPayment2018_20180316_full.bak'

WITH COMPRESSION, Stats=5

;

GO

-- for testing, insert one record

INSERT INTO [dbo].[ Payment_2018] SELECT 201802;

GO

--Take a differential backup for each writable filegoup (just backup FGPayment2018 as an example)

BACKUP DATABASE [Payment]

FILEGROUP = N'FGPayment2018'

TO DISK = N'C:\DATA\Payment\BACKUP\Payment_FGPayment2018_20180316_diff.bak'

WITH DIFFERENTIAL, COMPRESSION, Stats=5

;

GO

-- for testing, insert one record

INSERT INTO [dbo].[ Payment_2018] SELECT 201803;

GO

-- Take a transaction log backup of database payment

BACKUP LOG [Payment]

TO DISK = 'C:\DATA\Payment\BACKUP\Payment_20180316_log.trn';

GOThe advantage of this backup is that we only need to perform full and differential backups of the readable and writable filegroups (FGPayment2018) (Primary contains system objects with little change. In the actual scenario, Primary filegroup also needs to be backed up), while the other 9 read-only filegroups do not need to be backed up because the data will no longer change. In this way, we have implemented the cold and hot data isolation backup solution.

The next problem is, how can we recover the database from the backup set in case of Payment data disaster, resulting in data loss? We can recover the backup set as follows:

-- We restore full backup

USE master

GO

RESTORE DATABASE [Payment_Dev]

FROM DISK=N'C:\DATA\Payment\BACKUP\Payment_20180316_full.bak' WITH

MOVE 'Payment' TO 'C:\DATA\Payment_Dev\Data\Payment_dev.mdf',

MOVE 'FGPayment2008' TO 'C:\DATA\Payment_Dev\Data\FGPayment2008_dev.ndf',

MOVE 'FGPayment2009' TO 'C:\DATA\Payment_Dev\Data\FGPayment2009_dev.ndf',

MOVE 'FGPayment2010' TO 'C:\DATA\Payment_Dev\Data\FGPayment2010_dev.ndf',

MOVE 'FGPayment2011' TO 'C:\DATA\Payment_Dev\Data\FGPayment2011_dev.ndf',

MOVE 'FGPayment2012' TO 'C:\DATA\Payment_Dev\Data\FGPayment2012_dev.ndf',

MOVE 'FGPayment2013' TO 'C:\DATA\Payment_Dev\Data\FGPayment2013_dev.ndf',

MOVE 'FGPayment2014' TO 'C:\DATA\Payment_Dev\Data\FGPayment2014_dev.ndf',

MOVE 'FGPayment2015' TO 'C:\DATA\Payment_Dev\Data\FGPayment2015_dev.ndf',

MOVE 'FGPayment2016' TO 'C:\DATA\Payment_Dev\Data\FGPayment2016_dev.ndf',

MOVE 'FGPayment2017' TO 'C:\DATA\Payment_Dev\Data\FGPayment2017_dev.ndf',

MOVE 'FGPayment2018' TO 'C:\DATA\Payment_Dev\Data\FGPayment2018_dev.ndf',

MOVE 'Payment_log' TO 'C:\DATA\Payment_Dev\Log\Payment_dev_log.ldf',

NORECOVERY,STATS=5;

GO

-- restore writable filegroup full backup

RESTORE DATABASE [Payment_Dev]

FILEGROUP = N'FGPayment2018'

FROM DISK = N'C:\DATA\Payment\BACKUP\Payment_FGPayment2018_20180316_full.bak'

WITH NORECOVERY,STATS=5;

GO

-- restore writable filegroup differential backup

RESTORE DATABASE [Payment_Dev]

FILEGROUP = N'FGPayment2018'

FROM DISK = N'C:\DATA\Payment\BACKUP\Payment_FGPayment2018_20180316_diff.bak'

WITH NORECOVERY,STATS=5;

GO

-- restore payment database transaction log backup

RESTORE LOG [Payment_Dev]

FROM DISK = N'C:\DATA\Payment\BACKUP\\Payment_20180316_log.trn'

WITH NORECOVERY;

GO

-- Take database online to check

RESTORE DATABASE [Payment_Dev] WITH RECOVERY;

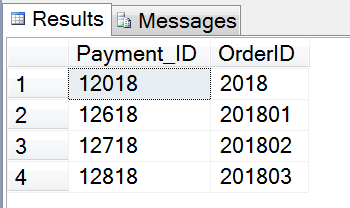

GOFinally, check the results of data restore. According to the test data we inserted, there should be four records.

USE [Payment_Dev]

GO

SELECT * FROM [dbo].[ Payment_2018] WITH(NOLOCK)According to the execution results, there are four result sets that meet our expectations. The screenshot is as follows:

This monthly report shows how to use SQL Server filegroup to implement and optimize cold and hot data isolation backup, which greatly improve the efficiency of database backup and restore and provides load balancing of I/O resources, improving and optimizing the performance of the entire database.

To learn more about Alibaba Cloud Database Backup product, visit https://www.alibabacloud.com/products/database-backup

SQL Server Best Practices: Database Recovery Models and Backups

SQL Server Best Practices: Migrating to ApsaraDB RDS for SQL Server Using SDK

11 posts | 0 followers

FollowAlibaba Clouder - July 22, 2020

Alibaba Clouder - August 6, 2020

Cherish Wang - February 20, 2019

Cherish Wang - February 20, 2019

ApsaraDB - December 30, 2025

Alibaba Clouder - August 24, 2017

11 posts | 0 followers

Follow Database Backup

Database Backup

A reliable, cost-efficient backup service for continuous data protection.

Learn More ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn MoreMore Posts by Cherish Wang