By Meng Borong and Wangde

Most developers are familiar with transaction isolation levels and know common terms related to this concept, such as dirty read, non-repeatable read, read committed, and repeatable read. However, developers, as technical personnel, must also have an in-depth understanding of transaction isolation levels. With only a basic knowledge of transaction isolation levels, database kernel developers may develop confusing isolation levels, and business developers may get unexpected results from databases.

To check whether you have an in-depth understanding of transaction isolation levels, ask yourself the following questions:

(1) What are transaction isolation levels? What problems can transaction isolation levels solve? Are transaction isolation levels clearly defined? Are the definitions accurate?

(2) How do the isolation levels of mainstream databases, such as Oracle and MySQL, behave, and how are they implemented? Is their behavior consistent with the "official" definitions?

If you can clearly answer these questions, then congratulations, you do have an in-depth understanding of transaction isolation levels. If not, fret not. After reading this article, you will have the answer to all of these questions.

Transaction isolation levels correspond to the "I" (isolation) in the atomicity, consistency, isolation, durability (ACID) properties of relational databases and ensure the safety of concurrent transactions that conflict. The American National Standards Institute (ANSI) SQL-92 standard defines the following three phenomena that concurrent transactions may cause:

If you think the preceding definitions are lengthy, take a look at the following formal descriptions:

A1 Dirty read: w1[x] ... r2[x] ... (a1 and c2 in any order)

A2 Fuzzy read: r1[x] ... w2[x] ... c2 ... r1[x] ... c1

A3 Phantom read: r1[P] ... w2[y in P] ... c2 ... r1[P] ... c1

w1[x] indicates that transaction 1 writes record x. r1[x] indicates that transaction 1 reads record x. c1 indicates that transaction 1 is committed. a1 indicates that transaction 1 is rolled back. r1[P] indicates that transaction 1 reads the records that satisfy predicate P. w1[y in P] indicates that transaction 1 writes a record y that satisfies predicate P.
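To make the notation concrete, here is a minimal Python sketch that parses a history written in this notation and checks it against the strict A1 pattern. The names `parse` and `has_a1_dirty_read` are hypothetical helpers introduced only for illustration; they are not part of any standard or library.

```python
import re

# One operation: kind (r, w, c, a), transaction number, optional item in brackets.
OP = re.compile(r"([rwca])(\d+)(?:\[(\w+)\])?")

def parse(history):
    """Turn 'w1[x] r2[x] a1 c2' into [('w', 1, 'x'), ('r', 2, 'x'), ('a', 1, None), ...]."""
    return [(m.group(1), int(m.group(2)), m.group(3)) for m in OP.finditer(history)]

def has_a1_dirty_read(history):
    """Strict A1: T1 writes x, T2 reads x before T1 ends, then T1 aborts and T2 commits."""
    ops = parse(history)
    for i, (kind, writer, item) in enumerate(ops):
        if kind != "w":
            continue
        for j in range(i + 1, len(ops)):
            kind2, reader, item2 = ops[j]
            if kind2 in ("c", "a") and reader == writer:
                break  # the writer has ended; later reads of the item are not dirty
            if kind2 == "r" and reader != writer and item2 == item:
                rest = ops[j + 1:]
                if ("a", writer, None) in rest and ("c", reader, None) in rest:
                    return True
    return False

dirty = has_a1_dirty_read("w1[x] r2[x] a1 c2")        # matches A1
not_dirty = has_a1_dirty_read("w1[x] r2[x] c1 c2")    # writer commits, so A1 does not match
```

Note that the second history is not flagged only because A1 requires the writer to roll back.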

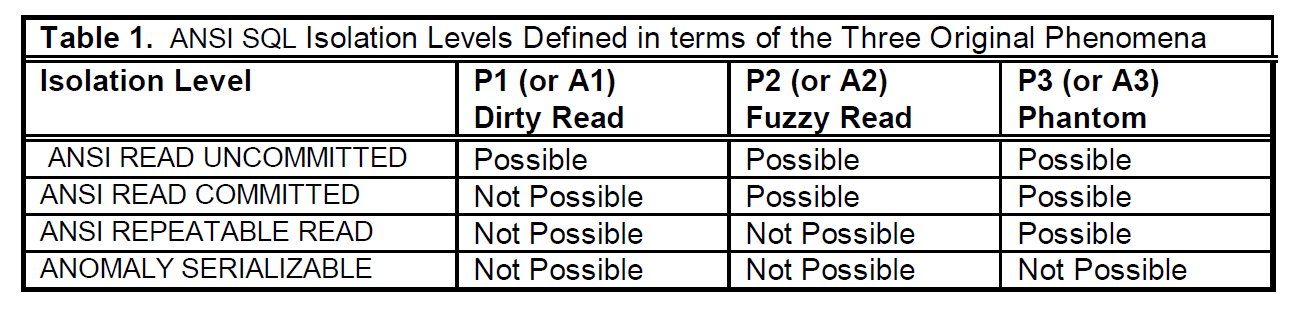

According to the preceding formal descriptions, ANSI defines four isolation levels to solve the three phenomena.

However, Jim Gray and his co-authors, in the famous paper "A Critique of ANSI SQL Isolation Levels" (Critique for short), argued that defining isolation levels according to the preceding phenomena is not rigorous, for the following reasons:

Reason 1: According to the phenomenon-based definition, the serializable level is met once P1, P2, and P3 are prohibited. However, the ANSI standard also explicitly describes the serializable level as "the effect of executing multiple concurrent transactions is equivalent to that of some serial execution". The two descriptions are contradictory: prohibiting P1, P2, and P3 does not guarantee that an execution is equivalent to a serial one. The Critique therefore calls the ANSI isolation level that merely prohibits P1, P2, and P3 "anomaly serializable".

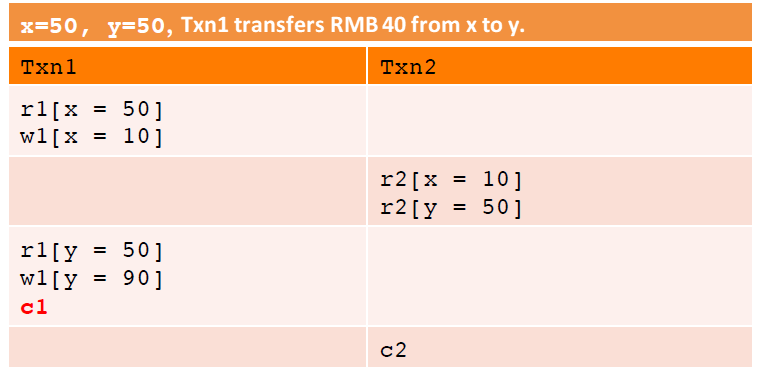

Reason 2: The definitions of the phenomena are inaccurate. The following figure shows an example that A1 does not cover but that still contains a dirty read (Txn2 reads x + y != 100). Similar examples exist for A2 and A3. Interested readers can try to construct them. I will not go into detail here.

The ANSI definitions are too strict, so they match too few histories. If the requirements for transaction commit, rollback, and data query scopes are removed and only the read and write sequences of concurrent transactions are retained, the looser and more accurate definitions of the phenomena are as follows:

P1 Dirty Read: w1[x]...r2[x]...(c1 or a1)

P2 Fuzzy Read: r1[x]...w2[x]...(c1 or a1)

P3 Phantom: r1[P]...w2[y in P]...(c1 or a1)

Reason 3: The three phenomena apply only to single-value (SV) systems and cannot define isolation for multi-version (MV) systems. The snapshot isolation (SI) implemented by many commercial databases does not exhibit P1, P2, or P3, yet it may violate constraints and is not serializable. In addition to P1, P2, and P3, other phenomena include:

P4 Lost update: r1[x]...w2[x]...w1[x]...c1

A5A Read skew: r1[x]… w2[x]... w2[y]… c2… r1[y]… (c1 or a1)

A5B Write skew: r1[x]… r2[y]… w1[y]… w2[x]… (c1 and c2 occur)

A5B2 Write skew 2: r1[P]... r2[P]… w1[y in P]... w2[x in P]...(c1 and c2 occur)

I will give an example for each of the preceding phenomena.

r1[x=50] r2[x=50] w2[x=60] c2 w1[x=70] c1

Lost update: Transactions 1 and 2 deposit RMB 20 and RMB 10, respectively, into account x, which initially holds RMB 50. Transaction 2 commits first and writes RMB 60 to the database. Transaction 1 then commits and overwrites RMB 60 with RMB 70, which it computed from the stale value of RMB 50. After both transactions are committed, the account should hold RMB 80, but the deposit made by transaction 2 is lost.

(x+y=100) r1[x=50] w2[x=10] w2[y=90] c2 r1[y=90] c1

Read skew: Accounts x and y each hold RMB 50, totaling RMB 100. After transaction 1 reads account x (RMB 50), transaction 2 transfers RMB 40 from account x to account y and commits. Transaction 1 then reads account y (RMB 90). From the viewpoint of transaction 1, x + y = 140, which is inconsistent.

(x+y>=60) r1[x=50] r2[y=50] w1[y=10] c1 w2[x=10] c2

Write skew: Accounts x and y each hold RMB 50, totaling RMB 100. There is a constraint that the sum of money in accounts x and y must be RMB 60 or more. Transaction 1 reads account x (RMB 50) and withdraws RMB 40 from account y. Transaction 2 reads account y (RMB 50) and withdraws RMB 40 from account x. Each transaction believes that the other account still holds RMB 50 and that the constraint is therefore not broken. After the two transactions are committed, x + y = 20, so the constraint is broken.

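(count(P)<=4): r1[count(P)=3], r2[count(P)=3], insert1[x in P], insert2[y in P], c1, c2

Write skew 2: This is the same as write skew, except that the condition covers a scope (predicate P) rather than individual records. Each transaction sees three records and inserts one more, so after both are committed, count(P) = 5 and the constraint is broken.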
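The arithmetic in the first three examples can be checked mechanically. The following is a minimal Python sketch, assuming a hypothetical helper `replay` that applies the writes of a history in order and records what each transaction read. It ignores commit and rollback markers, which is safe here only because every transaction in these histories commits.

```python
import re

# Matches reads and writes that carry a value, such as r1[x=50] or w2[x=60].
OP = re.compile(r"([rw])(\d+)\[(\w+)=(\d+)\]")

def replay(history, initial):
    """Apply writes in history order; return (final state, values read per transaction)."""
    state = dict(initial)
    reads = {}
    for kind, txn, item, value in OP.findall(history):
        if kind == "w":
            state[item] = int(value)
        else:
            reads.setdefault(int(txn), {})[item] = int(value)
    return state, reads

# Lost update: x ends at 70 rather than the intended 50 + 20 + 10 = 80.
state, _ = replay("r1[x=50] r2[x=50] w2[x=60] c2 w1[x=70] c1", {"x": 50})
lost_update_final = state["x"]

# Read skew: transaction 1 observes x + y = 140 although the real total is always 100.
state, reads = replay("r1[x=50] w2[x=10] w2[y=90] c2 r1[y=90] c1", {"x": 50, "y": 50})
read_skew_seen = sum(reads[1].values())
read_skew_actual = state["x"] + state["y"]

# Write skew: the final total is 20, which breaks the constraint x + y >= 60.
state, _ = replay("r1[x=50] r2[y=50] w1[y=10] c1 w2[x=10] c2", {"x": 50, "y": 50})
write_skew_total = state["x"] + state["y"]
```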

The previous section describes the three phenomena defined in ANSI and the transaction isolation levels defined based on the number of prohibited phenomena. There are no strict or rigorous "official" definitions. The behavior of the isolation levels of different mainstream databases varies, and some of it is confusing. In my opinion, we should not get caught up in accurately defining isolation levels. Instead, we should pay more attention to how the isolation levels of different databases behave and how they are implemented, and know how to use them correctly in production environments. In this section, I will use examples to describe the behavior of the isolation levels of mainstream databases and how they are implemented.

Before describing the implementation of lock-based isolation levels, I will first introduce some lock-related concepts.

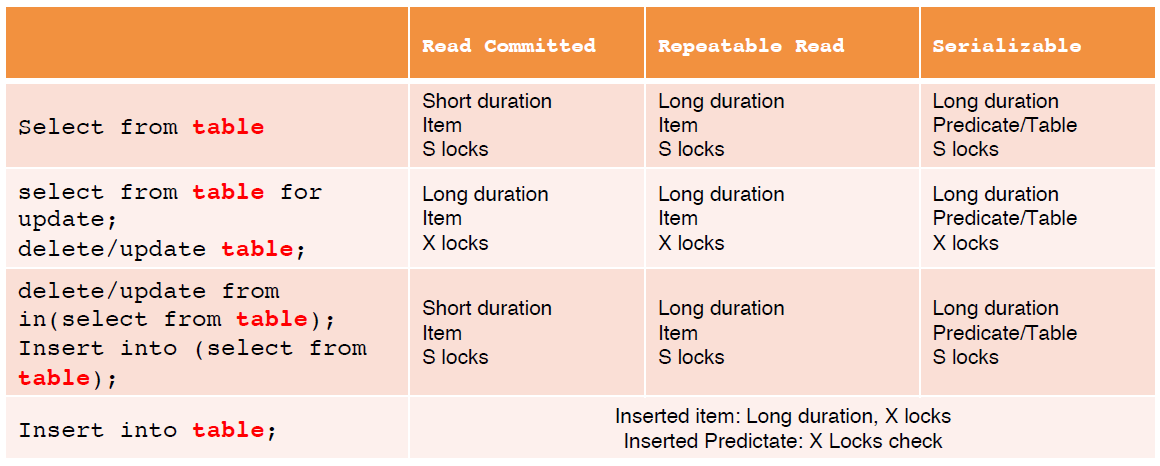

The preceding lock operations can be combined to implement different transaction isolation levels, as described in the following table.

S lock indicates a shared lock, and X lock indicates an exclusive lock.

Write operations always hold their X locks for a long duration, that is, until the transaction ends. If a write operation held its X lock only for a short duration, the written data could be overwritten by a write operation of another transaction after the lock is released and before the transaction is committed. This would result in dirty writes.

For read operations:

Short duration item S locks prohibit P1. If a read operation encounters a row that is being modified (the writing transaction holds an X lock on the row), the read operation is blocked at the S lock until the writing transaction is committed or rolled back.

Long duration item S locks prohibit P2. If a write operation encounters a read transaction (S lock), the write operation is blocked at the X lock until the read transaction is committed or rolled back.

Long duration predicate or table S locks prohibit P3. When a scope-based write operation encounters a scope-based read operation (predicate S lock), the write operation will be blocked until the read transaction is committed or rolled back.
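The blocking rules above can be sketched with a toy lock table. This is a simplified Python illustration under stated assumptions: `LockTable` is a hypothetical class, it handles item locks only (no predicate locks), and `acquire` returns `False` at the point where a real engine would make the caller wait.

```python
from collections import defaultdict

class LockTable:
    """Item locks only; acquire() returns False where a real engine would block."""
    def __init__(self):
        self.holders = defaultdict(dict)   # item -> {txn: "S" or "X"}

    def acquire(self, txn, item, mode):
        others = {t: m for t, m in self.holders[item].items() if t != txn}
        # S is compatible only with other S locks; X is compatible with nothing.
        if mode == "S":
            compatible = all(m == "S" for m in others.values())
        else:
            compatible = not others
        if compatible:
            held = self.holders[item].get(txn)
            self.holders[item][txn] = "X" if "X" in (held, mode) else "S"
        return compatible

    def release(self, txn, item):          # short duration: right after the operation
        self.holders[item].pop(txn, None)

    def release_all(self, txn):            # long duration: at commit or rollback
        for locks in self.holders.values():
            locks.pop(txn, None)

locks = LockTable()

# P1: T1 holds a long X lock on x, so T2's short S lock request has to wait.
assert locks.acquire(1, "x", "X")
p1_blocked = not locks.acquire(2, "x", "S")
locks.release_all(1)                       # T1 commits
assert locks.acquire(2, "x", "S")
locks.release(2, "x")                      # short duration S lock

# P2: T1 keeps a long S lock on y, so T2's write to y waits until T1 ends.
assert locks.acquire(1, "y", "S")
p2_blocked = not locks.acquire(2, "y", "X")
```

With only short duration S locks, the last `acquire` would succeed as soon as T1 finished its read, which is exactly the window in which P2 can occur.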

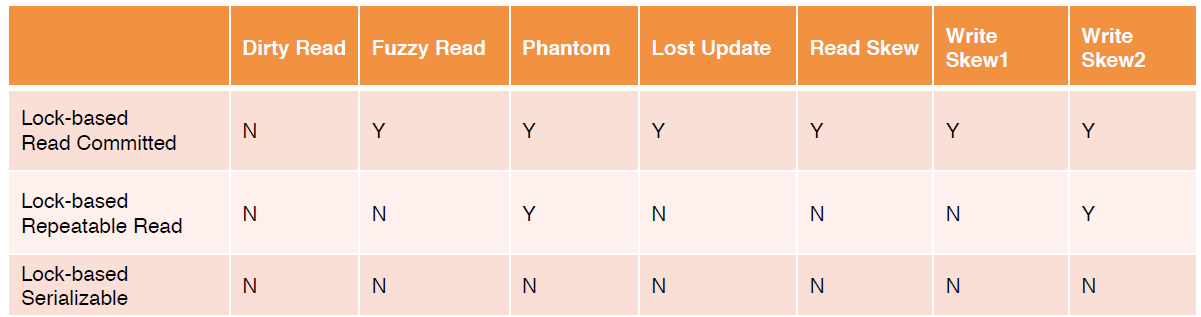

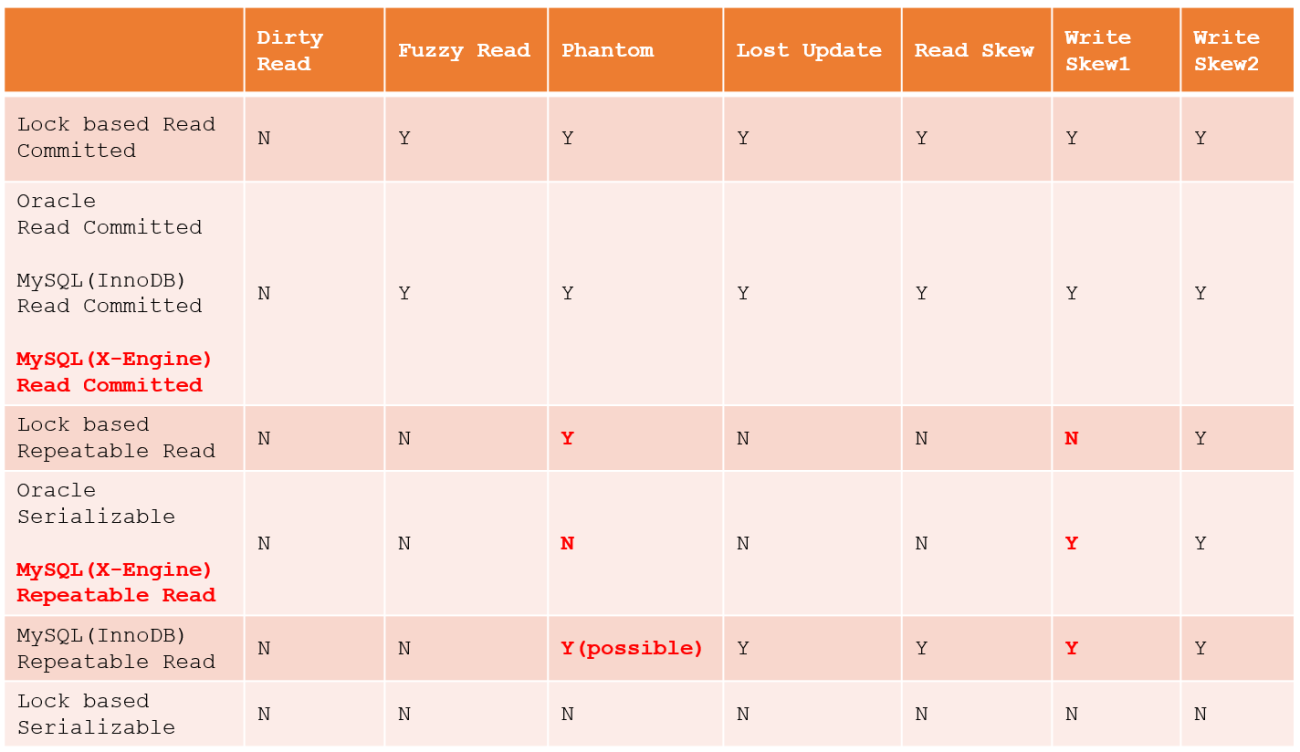

The following table describes the phenomena that can be prohibited by the three lock-based isolation levels.

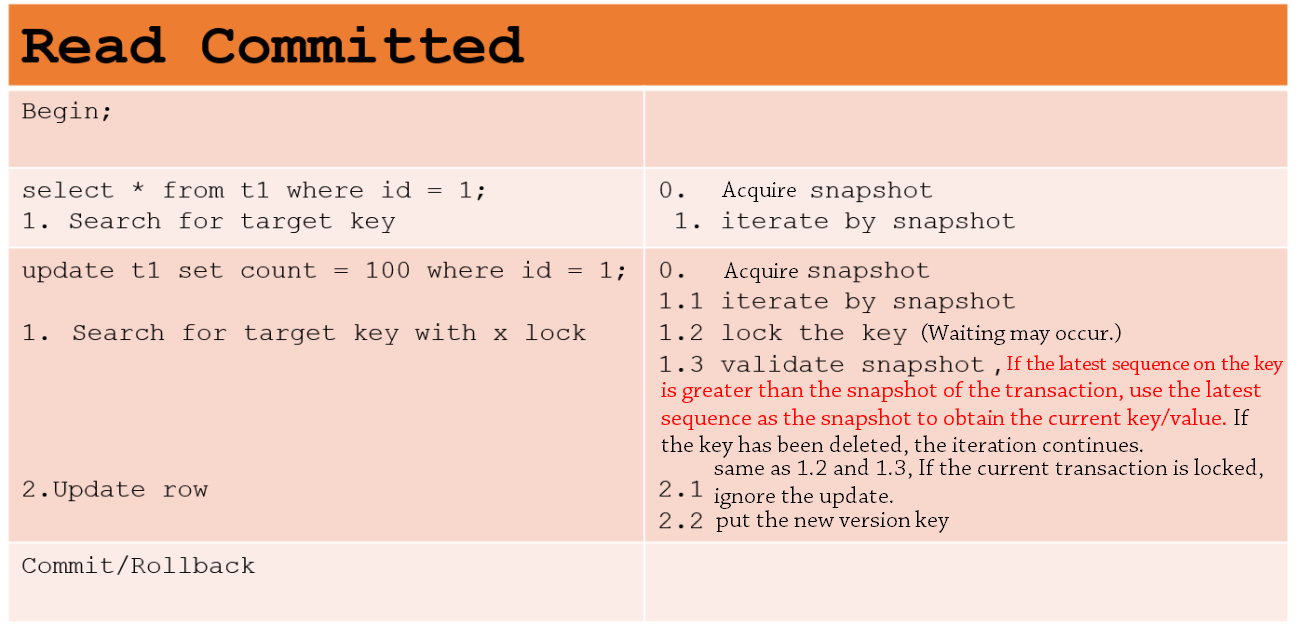

Due to performance and other considerations, databases rarely implement isolation levels based only on locks. With the MVCC + lock method, read requests do not have to be locked. This is the most popular implementation method.

According to the official description, Oracle databases support only the read committed and serializable isolation levels. However, the serializable level of Oracle databases is actually snapshot isolation (SI), implemented with the MVCC + Lock method.

To implement snapshot reads, Oracle internally maintains a global variable, the SCN (System Commit/Change Number), which increases whenever a transaction is committed. A read request takes its snapshot by obtaining the latest SCN. In Oracle databases, the MVCC implementation classifies blocks into two types: (1) Current blocks: the latest pages, consistent with persistent data. (2) Consistent read blocks: pages of a consistent version based on the snapshot SCN.
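The idea of reading "as of" a snapshot SCN can be illustrated with a small Python sketch. This is not how Oracle stores data (Oracle works at the block level, as described above); `VersionedStore` is a hypothetical per-key version list used only to show the visibility rule.

```python
class VersionedStore:
    """Each key keeps a list of (commit_scn, value) versions, oldest first."""
    def __init__(self):
        self.scn = 0          # global counter, increased on every commit
        self.versions = {}    # key -> [(commit_scn, value), ...]

    def commit(self, writes):
        """Commit a transaction's writes under a new SCN and return that SCN."""
        self.scn += 1
        for key, value in writes.items():
            self.versions.setdefault(key, []).append((self.scn, value))
        return self.scn

    def snapshot(self):
        """A reader's snapshot is simply the latest SCN at the time of the call."""
        return self.scn

    def read(self, key, snapshot_scn):
        """Consistent read: the newest version committed at or before the snapshot."""
        for commit_scn, value in reversed(self.versions.get(key, [])):
            if commit_scn <= snapshot_scn:
                return value
        return None

store = VersionedStore()
store.commit({"x": 50})                      # SCN 1
snap = store.snapshot()                      # the reader's snapshot is SCN 1
store.commit({"x": 60})                      # SCN 2, invisible to the snapshot
old = store.read("x", snap)                  # consistent read version
new = store.read("x", store.snapshot())      # current version
```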

The following two examples show what happens to a database with read and write statements at different isolation levels.

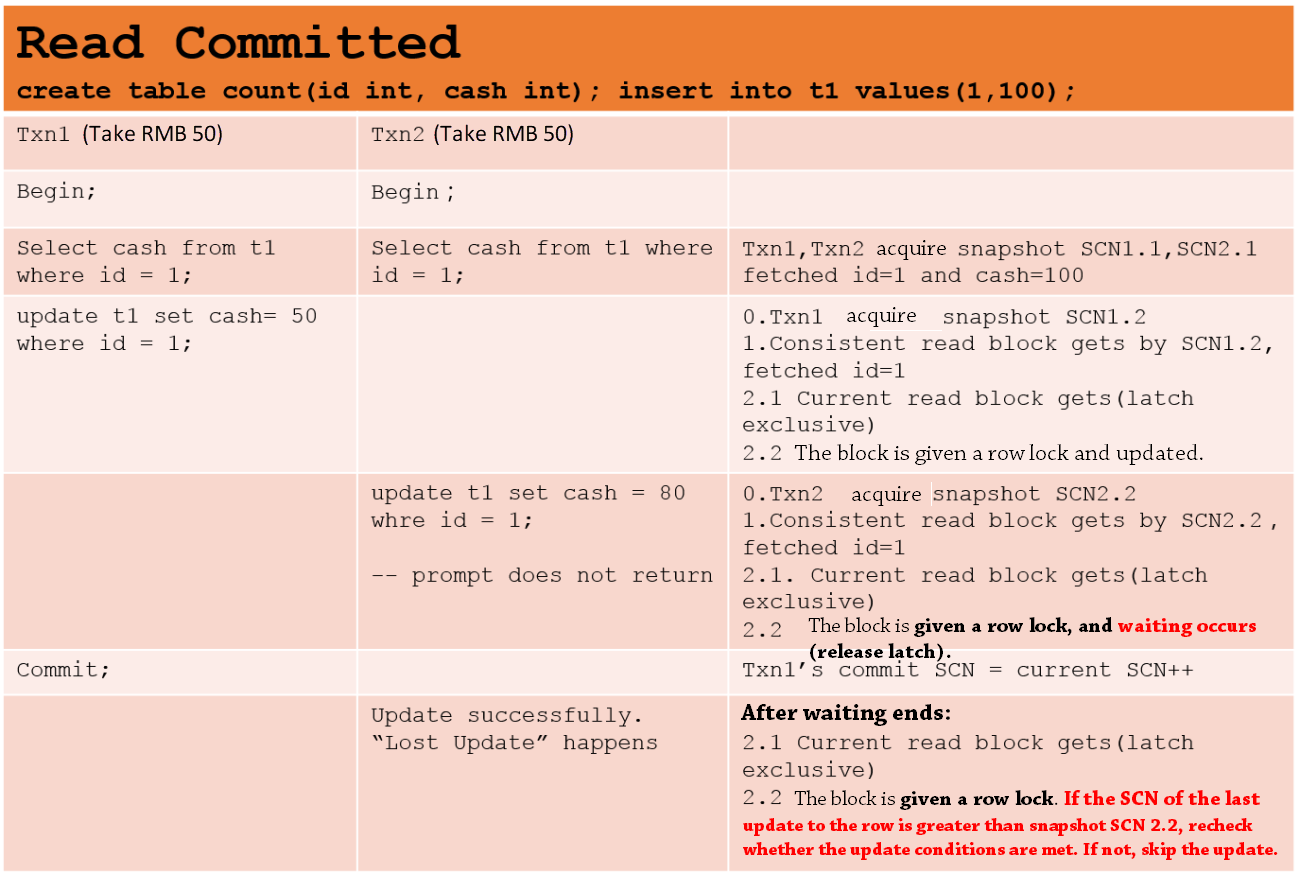

At the read committed isolation level of an Oracle database, each statement obtains the latest snapshot, and all read requests are snapshot reads. Write requests must acquire row locks. After acquiring the lock, a write operation does not fail merely because another transaction has updated the same row. It is skipped only if the row no longer meets the predicate conditions of the update. Therefore, lost updates may occur.

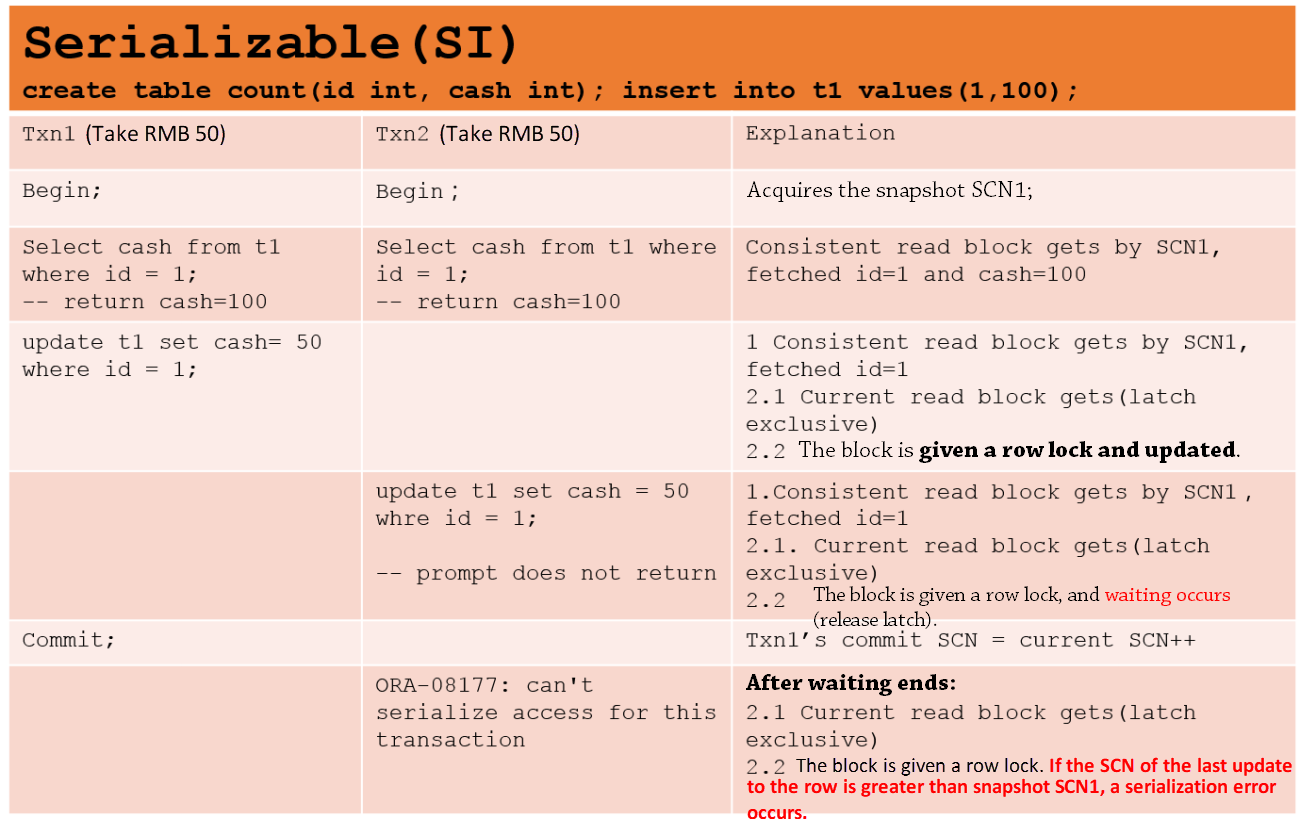

At the serializable isolation level of an Oracle database, the snapshot is obtained when the transaction starts. All read requests are snapshot reads, and write requests must acquire row locks. After a write operation acquires the lock, it first checks the target row. If the SCN of the transaction that most recently modified the row is greater than the snapshot SCN of the current transaction, the row has been modified by a change that the current transaction cannot see. In this case, an error is reported, which prevents lost updates. This way of handling write conflicts follows the "first committer wins" principle.
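The difference between the two levels comes down to one check at write time. The following Python sketch replays the lost update scenario with and without that check. `Table`, `WriteConflict`, and `run` are hypothetical names, row locking is assumed to have already succeeded, and each write is committed immediately for brevity.

```python
class WriteConflict(Exception):
    """Raised when a row changed after the writer's snapshot was taken."""

class Table:
    def __init__(self):
        self.scn = 0
        self.rows = {}   # key -> (value, SCN of the last commit that modified it)

    def write_and_commit(self, key, value, snapshot_scn=None):
        """Assumes the row lock is held. Pass snapshot_scn to enable the SI check."""
        if snapshot_scn is not None and key in self.rows:
            _, last_scn = self.rows[key]
            if last_scn > snapshot_scn:
                raise WriteConflict(f"{key} modified at SCN {last_scn}")
        self.scn += 1
        self.rows[key] = (value, self.scn)

def run(check_conflicts):
    table = Table()
    table.write_and_commit("x", 50)          # SCN 1
    snap1 = snap2 = table.scn                # both transactions start here and read x = 50
    # Transaction 2 deposits 10 and commits first.
    table.write_and_commit("x", 50 + 10, snap2 if check_conflicts else None)
    try:
        # Transaction 1 deposits 20 based on the value it read earlier.
        table.write_and_commit("x", 50 + 20, snap1 if check_conflicts else None)
    except WriteConflict:
        return "T1 aborted", table.rows["x"][0]
    return "T1 committed", table.rows["x"][0]

without_check = run(check_conflicts=False)   # lost update: x ends at 70
with_check = run(check_conflicts=True)       # T1 fails, x stays at 60
```

In the second run the application would typically retry transaction 1, which then reads 60 and writes 80.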

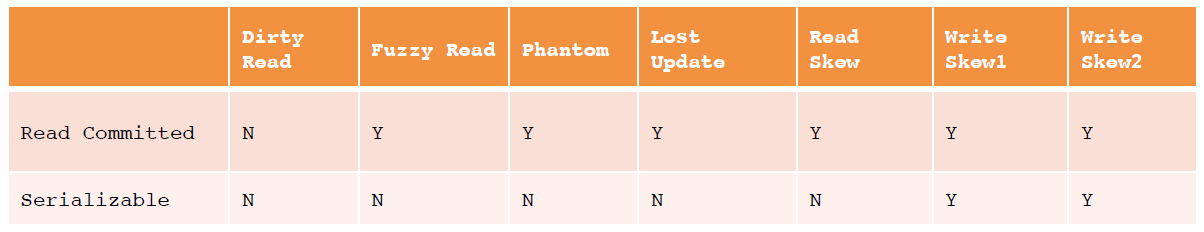

The following table describes the phenomena that the two Oracle isolation levels can avoid.

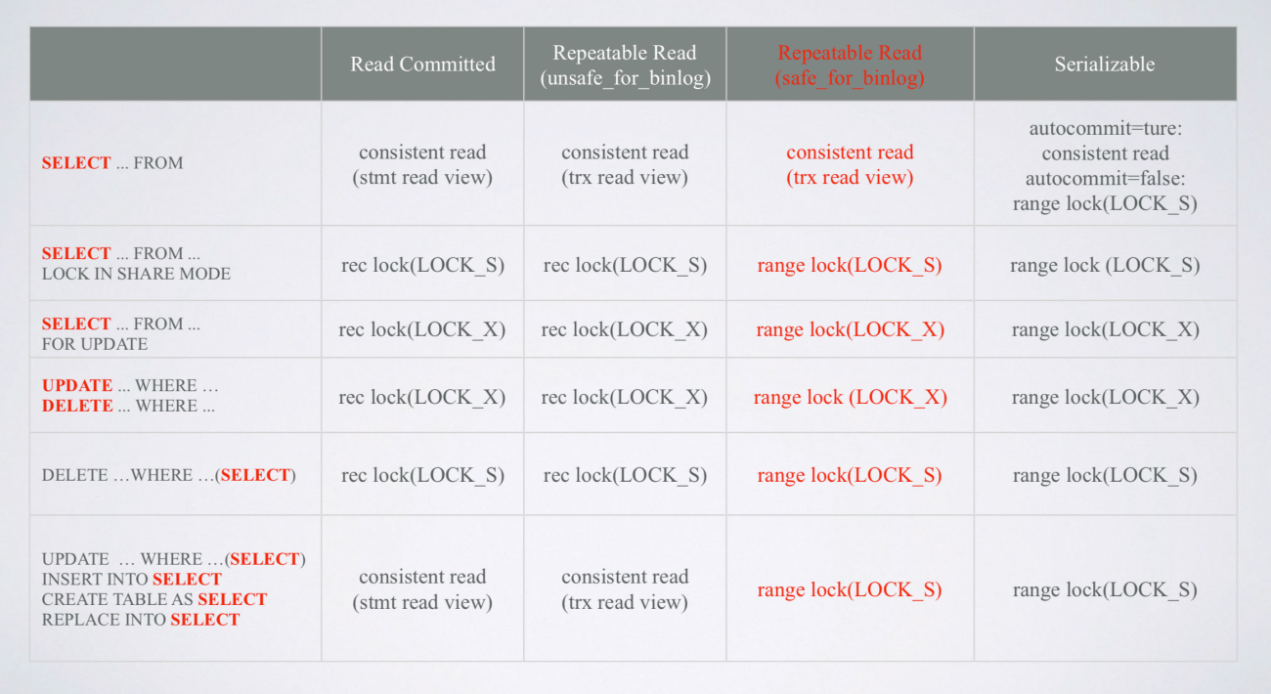

InnoDB also uses the MVCC + Lock method to implement isolation levels. All plain select statements are snapshot reads. Delete, update, select for update, and other statements are current reads with locks, as described in the following table. (Note: The table is based on the Percona 5.6 code implementation.)

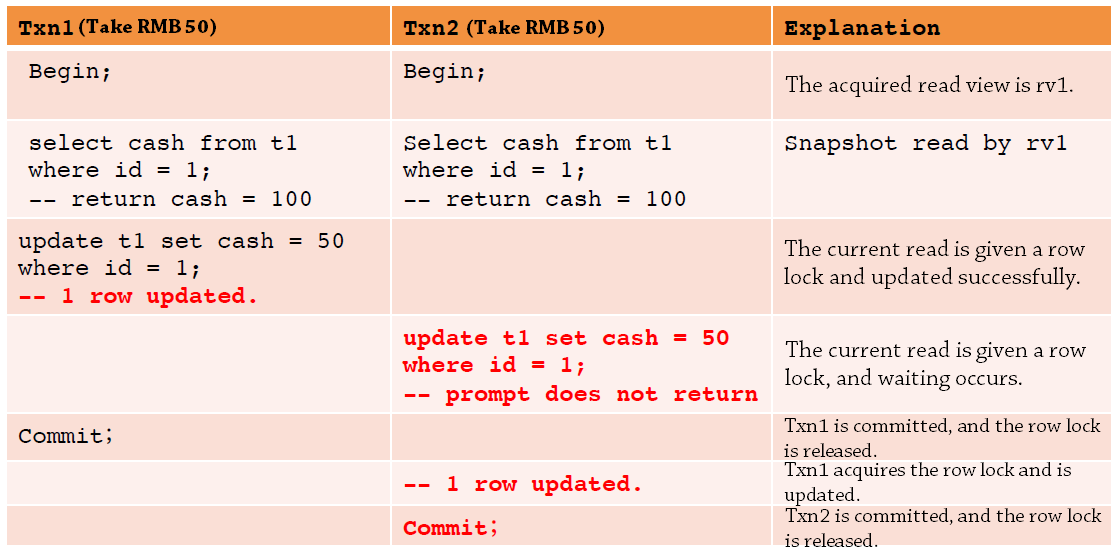

The behavior of the read committed isolation level in InnoDB is similar to that in Oracle. Compared with SI in Oracle, the repeatable read isolation level in InnoDB cannot prevent lost updates. The following figure shows an example. At the InnoDB repeatable read isolation level, when a transaction updates a row, InnoDB does not check whether the row has been modified by other transactions since the snapshot was taken. This avoids the rollback costs caused by SI update conflicts, but may result in lost updates.

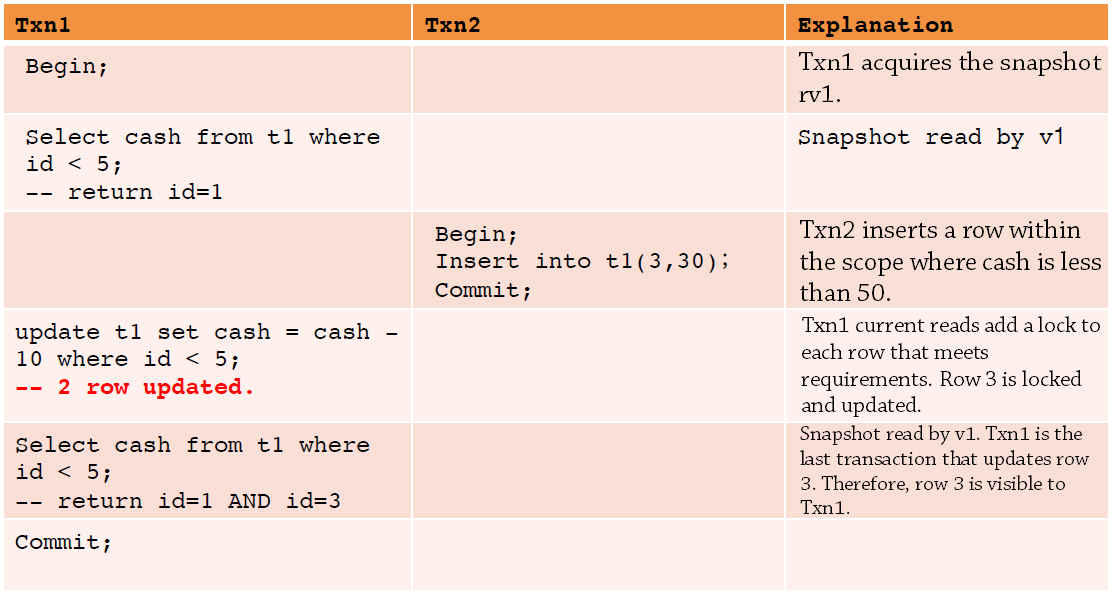
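The interplay between snapshot reads and current reads can be modeled in a few lines of Python. This is a deliberately tiny sketch and not InnoDB code: `Row` and `RepeatableReadTxn` are hypothetical, locks are omitted, each update is treated as committed immediately, and the snapshot is taken when the transaction object is created.

```python
class Row:
    def __init__(self, value):
        self.committed = value            # latest committed value (seen by current reads)

class RepeatableReadTxn:
    def __init__(self, row):
        self.row = row
        self.snapshot = row.committed     # snapshot used by every plain SELECT

    def select(self):
        return self.snapshot              # snapshot read, no lock

    def update_to(self, value):
        self.row.committed = value        # like UPDATE ... SET x = <literal>: no conflict check

    def update_add(self, delta):
        self.row.committed += delta       # like UPDATE ... SET x = x + delta: current read

# Lost update: T1 computes the new balance from its snapshot.
x = Row(50)
t1, t2 = RepeatableReadTxn(x), RepeatableReadTxn(x)
t2.update_add(10)                         # T2 deposits 10 and commits, x = 60
t1.update_to(t1.select() + 20)            # T1 writes 50 + 20
lost = x.committed

# No lost update: T1 lets the UPDATE itself read the current value.
y = Row(50)
t1, t2 = RepeatableReadTxn(y), RepeatableReadTxn(y)
t2.update_add(10)
t1.update_add(20)
kept = y.committed
```

The second variant avoids the lost update only because the read happens inside the locking statement. An application that must read first can use select for update, which is also a current read.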

Because update and similar operations are locking current reads, phantom reads may also occur, as described in the following table. However, if the update statement of Txn1 is replaced by a select statement, phantom reads are prevented because all select statements of a transaction use the same snapshot.

Although the InnoDB implementation of repeatable read does not avoid lost updates or phantom reads, it takes full advantage of lock-free MVCC snapshot reads. In addition, current reads avoid excessive rollback costs when SI update conflicts increase dramatically.

InnoDB also implements lock-based serializable to prohibit all abnormal phenomena. For more information, see section 2.1 "Lock-based Isolation Level Implementation."

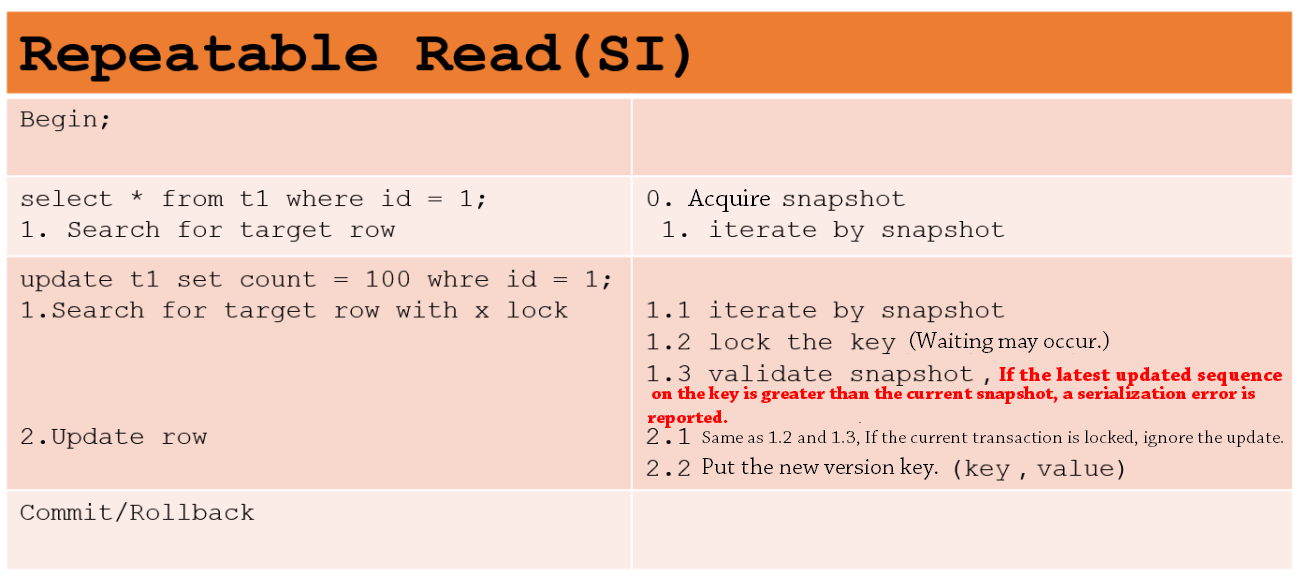

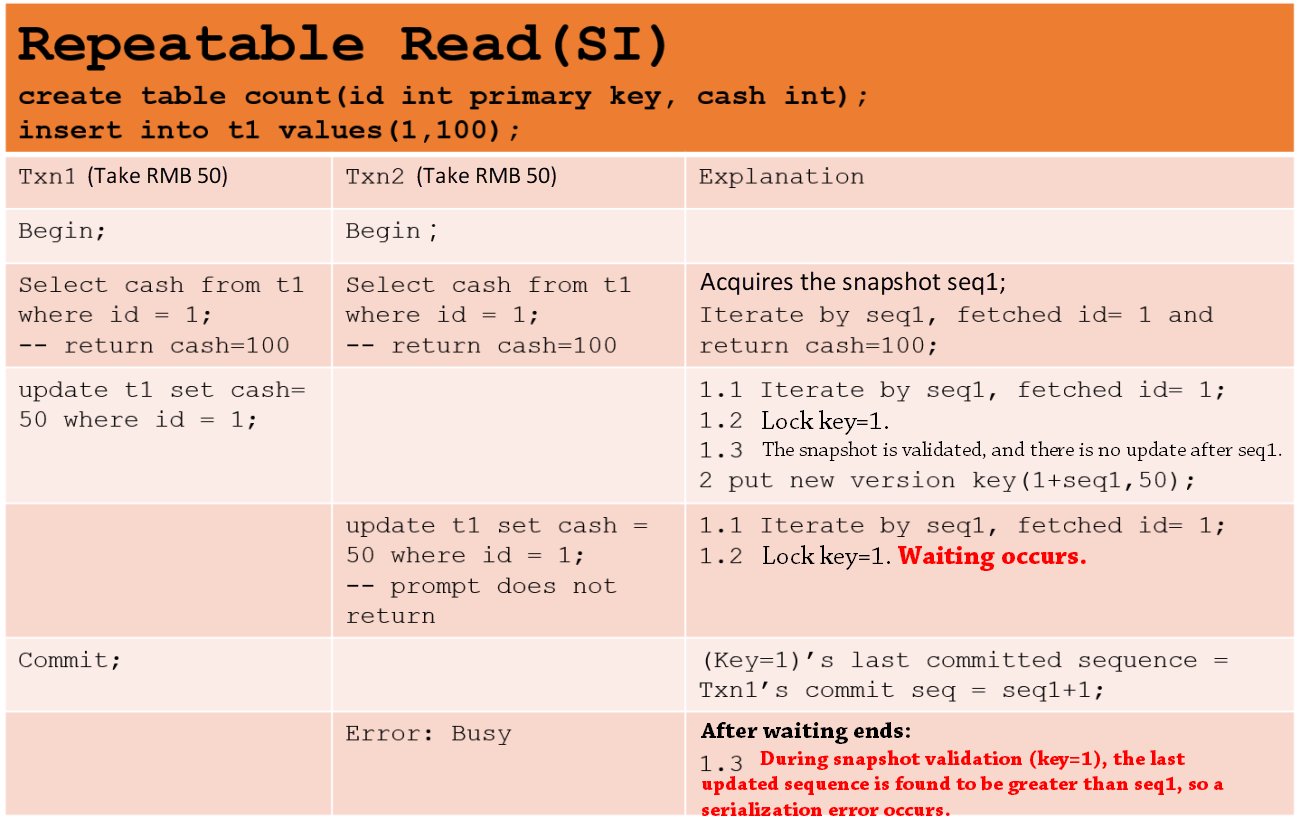

X-Engine isolation levels are also implemented based on the MVCC + Lock method and support read committed and SI. They behave in the same way as the read committed and serializable isolation levels in Oracle databases. X-Engine implements row-level MVCC. The key of each record carries a sequence number that represents its version. All read operations are snapshot reads (including locking reads), and the snapshot required by a read request is also a sequence number. Write conflicts are handled with two-phase locking and comply with the "first committer wins" principle.

The following two examples are used to describe the implementation principles.

Similar to Oracle databases, the X-Engine SI isolation level can avoid lost updates.

The preceding sections describe the behavior of the isolation levels of various databases. The preceding table compares their differences. MySQL isolation levels are unique, and its repeatable read isolation level can avoid certain phantom reads. Developers must note that isolation levels in different databases may share the same name but behave differently.
