By Huang Gui (Qushan)

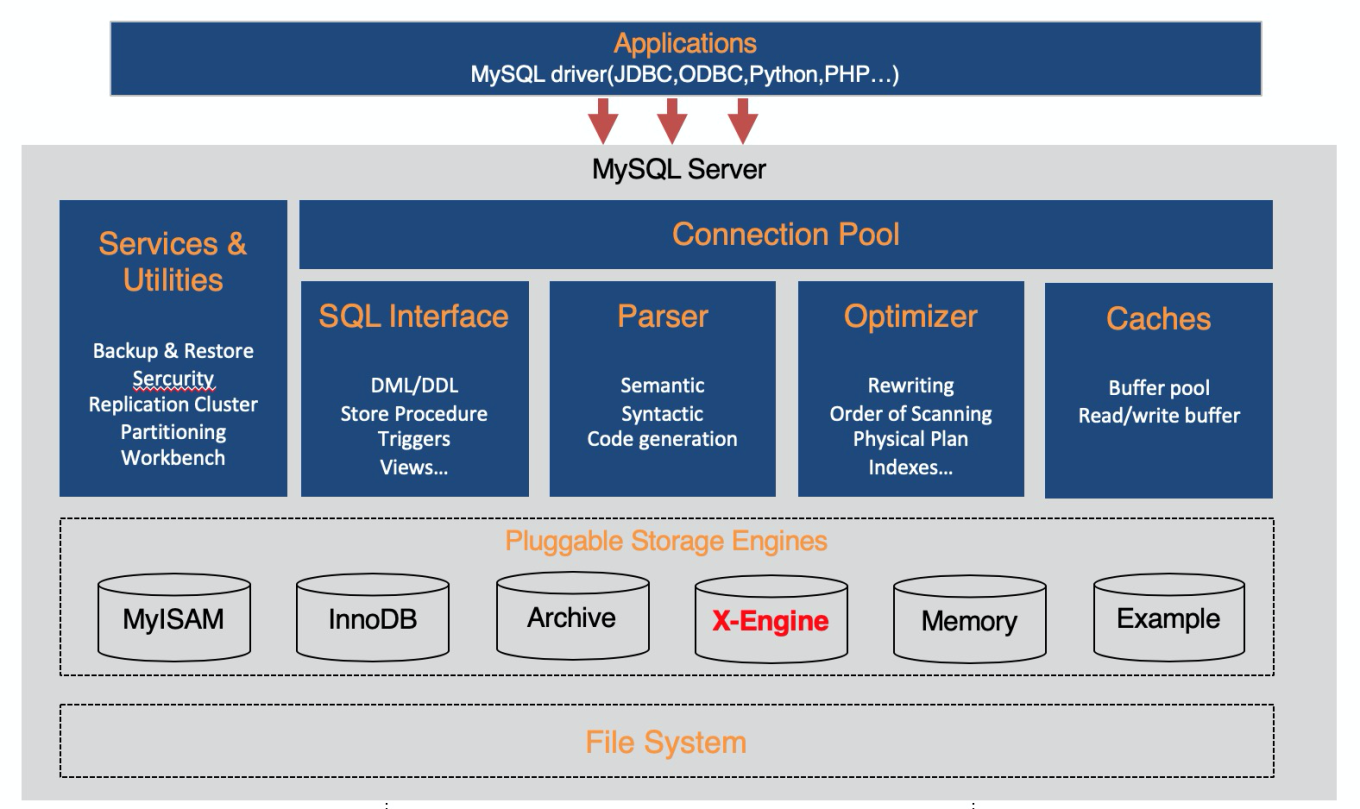

X-Engine is a proprietary database storage engine developed by Alibaba. It is compatible with MySQL and is typically used as a MySQL storage engine. X-Engine is now widely used in the internal business systems of Alibaba Group. To use it, either set the default storage engine to X-Engine, in which case all tables created afterward use X-Engine, or specify X-Engine as the storage engine when creating a table, in which case only that table uses X-Engine.

This raises the question: why should we use X-Engine?

X-Engine was born at Alibaba and continues to grow there. It was originally built to cope with the challenges faced by Alibaba's internal businesses. In 2010, Alibaba began deploying MySQL databases on a large scale. However, explosive growth in business volume imposed strict requirements on our databases. First, the databases needed extremely high concurrent transaction processing capability, especially to handle the sudden traffic surge during the Double 11 Shopping Festival. Second, the databases needed to support extremely large volumes of data, which consumed a large amount of storage resources.

Although both problems could be solved by a distributed solution that adds database nodes, adding servers alone is not an efficient approach. We preferred to use technical means to maximize the cost performance of single-node databases, significantly improving performance while consuming few additional resources.

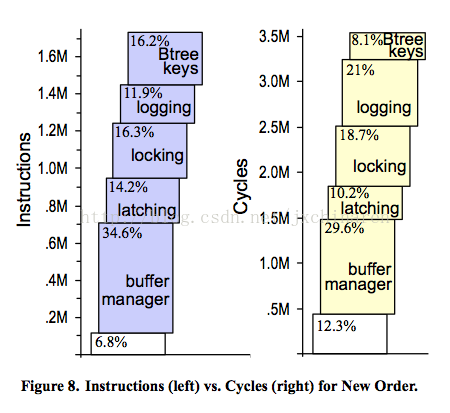

The performance of conventional database architecture has been studied carefully. Michael Stonebraker, a leader in the database field and a Turing Award winner, wrote a paper on this topic pointing out that conventional general-purpose relational databases spend less than 10% of their time actually processing data. The remaining 90% is spent on overhead such as waiting for locks, managing buffers, and synchronizing logs.

The root cause is that the hardware we depend on has changed significantly in recent years. Examples include multi-core and many-core CPUs; new processor architectures such as the cache-only memory architecture (COMA) and non-uniform memory access (NUMA) models; heterogeneous computing devices such as GPUs and field-programmable gate arrays (FPGAs); ever larger and more cost-effective memory; and increasingly powerful storage devices such as SSDs, 3D XPoint, and NVRAM.

However, the database software stack running on this hardware has changed little and is still built around slow disks: for example, fixed-size data pages indexed by B-trees, transaction processing and recovery based on ARIES (Algorithms for Recovery and Isolation Exploiting Semantics), and concurrency control based on independent lock managers.

All of this makes it difficult for existing system architectures to realize the full performance potential of the hardware. As a result, many CPU cycles are wasted on unproductive work such as waiting for locks. These problems are less obvious when processing small volumes of data, but as throughput and data volume grow, they become a performance bottleneck.

Therefore, we designed X-Engine with a brand-new architecture.

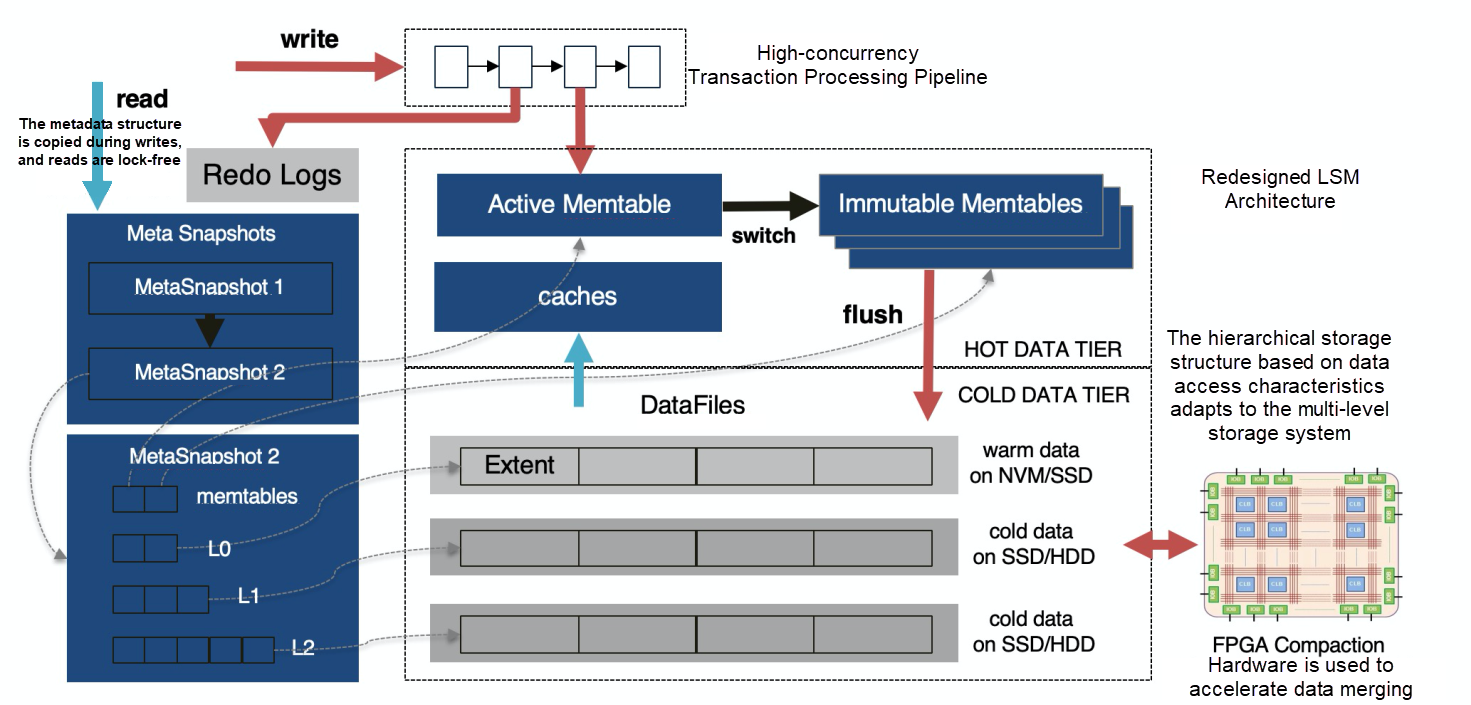

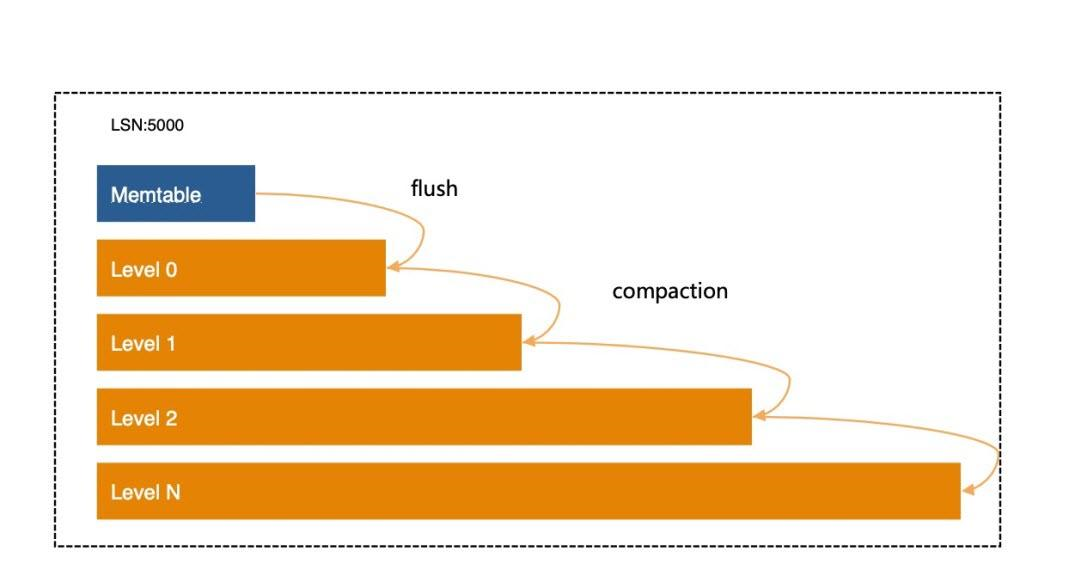

Thanks to MySQL's pluggable storage engine architecture, X-Engine integrates seamlessly with MySQL's features, which let us focus on optimizing the storage structure. X-Engine uses a hierarchical data storage architecture, as shown in the figure, designed to store large amounts of data and provide highly concurrent transaction processing while minimizing costs. We observed that in most scenarios with large data volumes, data access is unevenly distributed: hot data accounts for only a small proportion of all the data.

X-Engine divides data into multiple levels by access frequency (hot, warm, and cold data). Based on the access characteristics of each level, X-Engine designs a suitable storage structure and places the data on an appropriate storage device.
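To make the idea of tiering by access frequency concrete, here is a minimal Python sketch that classifies keys as hot, warm, or cold from access counts and maps each tier to a device. The thresholds, the tier-to-device mapping, and the function names are hypothetical; the article does not say how X-Engine measures data temperature.

```python
# Hypothetical mapping from temperature tier to storage device.
TIER_DEVICE = {"hot": "DRAM", "warm": "SSD", "cold": "HDD"}


def classify(access_count, hot_threshold=100, warm_threshold=10):
    """Return the tier for one key; the thresholds are illustrative only."""
    if access_count >= hot_threshold:
        return "hot"
    if access_count >= warm_threshold:
        return "warm"
    return "cold"


def placement(access_counts):
    """Map each key to the device its tier would live on."""
    return {key: TIER_DEVICE[classify(n)] for key, n in access_counts.items()}


# A skewed workload: a few keys receive most of the traffic.
accesses = {"order:1": 500, "order:2": 40, "order:3": 2, "order:4": 1}
devices = placement(accesses)
```

With a skewed workload like this one, only a small fraction of keys lands on the fastest device, which is exactly the property tiered storage exploits.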

X-Engine builds on the log-structured merge-tree (LSM-tree) architecture for hierarchical storage and improves on it. Simply put, X-Engine keeps hot data and data updates in memory and improves transaction processing performance with in-memory database techniques such as lock-free and append-only data structures.

We also designed a transaction processing pipeline that runs the stages of transaction processing in parallel, which greatly increases throughput. Meanwhile, cold and warm data are gradually evicted from memory and merged into persistent storage levels, which are kept on a hierarchy of storage devices such as NVM, SSDs, and HDDs.

We also made many improvements to compaction, which has a significant impact on performance. In particular, because data update hotspots are concentrated, we made the data storage granularity finer so that as much data as possible can be reused during merging. We also refined the LSM-tree hierarchy to reduce I/O and computing costs and to minimize space amplification during merging.

At the same time, we use more fine-grained access control and caching mechanisms to optimize the read performance.

X-Engine Architecture

We summarized the architecture and optimization techniques of X-Engine in a paper presented at SIGMOD 2019, one of the top conferences in the database field. This was also the first time that a company from mainland China published its technical achievements in OLTP database kernels at a top international conference.

X-Engine is based on the LSM-tree architecture. To avoid some inherent disadvantages of the LSM tree while leveraging its natural hierarchical structure, we thoroughly optimized the storage architecture by introducing the following technologies:

The following sections introduce each architectural optimization and its implementation details. Since X-Engine is based on the LSM architecture, let's first take a quick look at some features of this architecture.

In the LSM structure, the journey of a piece of data begins when it is written to the write-ahead log (WAL) and then inserted into the memtable, the first stop in its lifecycle. Later, a flush operation writes the data to a more durable medium, and compaction operations then either move the data to a lower level or discard it along the way, depending on when a newer version of it arrives.

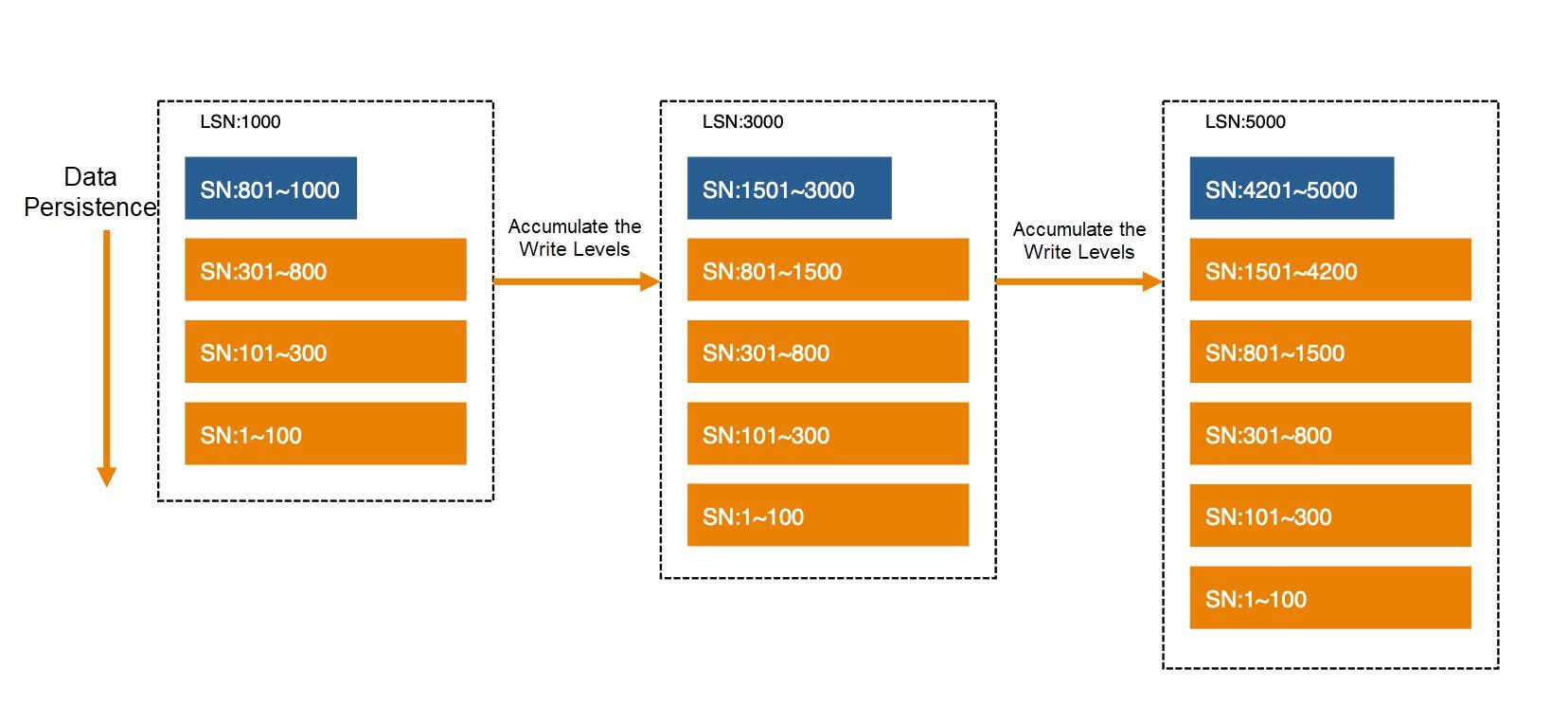

The essence of LSM is that all writes append data in memory instead of updating data in place. Whenever the written data accumulates to a certain size, it is frozen as a level and then flushed to persistent storage. All rows are sorted by key, whether they are in memory or in persistent storage. In memory, data is kept in a sorted in-memory structure such as a skip list or a B-tree; in persistent storage, it is kept in a read-only, fully sorted structure.
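The write path described above (log first, append to a memtable, freeze it when full, flush it as a sorted read-only run) can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions, not X-Engine code: the class name `TinyLSM` and the `memtable_limit` threshold are hypothetical, and the memtable is a plain dict that is sorted only at flush time.

```python
import bisect


class TinyLSM:
    """Toy LSM write path: WAL append, memtable, freeze, and flush."""

    def __init__(self, memtable_limit=3):
        self.memtable_limit = memtable_limit  # hypothetical freeze threshold
        self.wal = []          # append-only write-ahead log
        self.memtable = {}     # active memtable (a real one stays sorted, e.g. a skip list)
        self.immutables = []   # frozen memtables waiting to be flushed, oldest first
        self.levels = []       # persisted runs, newest first; each is a sorted list

    def put(self, key, value):
        self.wal.append((key, value))  # 1. log the write for crash recovery
        self.memtable[key] = value     # 2. then apply it to the memtable
        if len(self.memtable) >= self.memtable_limit:
            self.immutables.append(self.memtable)  # freeze: now read-only
            self.memtable = {}                     # fresh memtable takes new writes

    def flush(self):
        # Each frozen memtable becomes a read-only, fully sorted run.
        while self.immutables:
            frozen = self.immutables.pop(0)
            self.levels.insert(0, sorted(frozen.items()))

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for frozen in reversed(self.immutables):  # newest frozen memtable first
            if key in frozen:
                return frozen[key]
        for run in self.levels:                   # newest persisted run first
            keys = [k for k, _ in run]
            i = bisect.bisect_left(keys, key)
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return None


db = TinyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)   # memtable is full, so it is frozen
db.put("a", 3)   # the newer version of "a" goes to the new memtable
db.flush()
```

Note that the old version of `"a"` is not overwritten; it remains in the flushed run, and reads find the newer version first because they search from newest to oldest.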

To support transactions, and in particular to guarantee atomicity, consistency, and isolation (ACI), the storage system needs a notion of time from which it can build, for each transaction, an independent view that is unaffected by concurrent transactions. The storage engine orders transactions and assigns them globally, monotonically increasing transaction sequence numbers (SNs). The SN is stored in every record the transaction writes and is used to decide which records are visible to which transactions, which isolates transactions from each other.
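A minimal sketch of SN-based visibility follows, assuming the common multi-version rule that a reader holding snapshot SN `S` sees, for each key, the newest version whose SN is at most `S`. The class and method names are hypothetical and the linear scan is for clarity only; the article does not give X-Engine's exact visibility code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    key: str
    sn: int        # SN of the transaction that wrote this version
    value: object


class VersionStore:
    """Append-only multi-version records tagged with global SNs (sketch)."""

    def __init__(self):
        self.next_sn = 1
        self.versions = []  # never updated in place

    def commit(self, writes):
        sn = self.next_sn   # one global, monotonically increasing SN
        self.next_sn += 1
        for key, value in writes.items():
            self.versions.append(Version(key, sn, value))
        return sn

    def snapshot(self):
        # A new reader sees everything committed before it started.
        return self.next_sn - 1

    def read(self, key, snapshot_sn):
        # Visible versions have sn <= snapshot; the newest visible one wins.
        visible = [v for v in self.versions if v.key == key and v.sn <= snapshot_sn]
        return max(visible, key=lambda v: v.sn).value if visible else None


store = VersionStore()
store.commit({"x": "v1"})
old_snapshot = store.snapshot()
store.commit({"x": "v2"})
```

A reader that took `old_snapshot` keeps seeing `"v1"` even after `"v2"` commits, which is the isolation property the SN provides.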

If data is continuously written to the LSM structure and no other operations are performed, the structure eventually looks like the one shown in the following figure.

Note that the SN range of each level identifies the order in which the transaction data is written, and persisted data will no longer be modified. Each level of data is sorted by key, and the key ranges at different levels may overlap.

This structure is very write-friendly, because a write only needs to append data to the latest memtable. For crash recovery, it is enough to also record the data in the WAL (redo log). Because new data never overwrites old data, the appended records naturally form a multi-version structure keyed by SN.

As more persistent levels of data accumulate and are frozen, query performance suffers. Multiple versions of the same key, produced by different transaction commits, are spread across different levels, and so are records with different keys. As a result, read operations such as sequential scans must search all levels and merge what they find to obtain the final result.
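The merge a scan has to perform can be sketched with Python's `heapq.merge`, which lazily combines already sorted inputs. This is an illustrative assumption about how such a merging iterator works, not X-Engine's implementation: each level is a sorted list of `(key, value)` pairs ordered newest first, and deletions (tombstones) are ignored.

```python
import heapq


def merged_scan(levels):
    """Merge sorted levels into one sorted stream, keeping the newest version.

    `levels` is ordered newest first. Tagging each entry with its level index
    makes heapq.merge emit the newest level's version of a key first.
    """
    tagged = [[(key, age, value) for key, value in level]
              for age, level in enumerate(levels)]
    last_key = object()  # sentinel that never equals a real key
    for key, age, value in heapq.merge(*tagged):
        if key != last_key:  # first occurrence of a key is its newest version
            yield key, value
            last_key = key


levels = [
    [("b", "new")],                         # newest level
    [("a", 1), ("b", "old"), ("c", 3)],     # older level
]
result = list(merged_scan(levels))
```

The cost grows with the number of levels, because every level contributes an input stream to the merge; this is the overhead that compaction tries to keep in check.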

LSM introduces compaction to solve this problem. Compaction continuously merges data from adjacent levels and writes the result to the lower level. Merging means reading the data to be merged from two or more adjacent levels and sorting it by key. If a key has records with multiple SNs, the system keeps the latest version, discards the old versions that no active transaction can still see (that is, versions older than the newest one visible to the oldest active transaction), and writes the result to a new level. Clearly, this operation is resource-intensive.
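Below is a minimal Python sketch of that merge with version garbage collection. It assumes the common retention rule used by many MVCC LSM engines: keep every version newer than the oldest active snapshot, plus the single newest version at or below it. The function name and the tuple layout `(key, sn, value)` are hypothetical.

```python
from itertools import groupby


def compact(upper, lower, oldest_active_snapshot):
    """Merge two adjacent levels, dropping versions no reader can see.

    Each level is a list of (key, sn, value) tuples. Output is sorted by key,
    with newer versions (higher SN) first within a key.
    """
    merged = sorted(upper + lower, key=lambda r: (r[0], -r[1]))  # key asc, SN desc
    output = []
    for _, versions in groupby(merged, key=lambda r: r[0]):
        for key, sn, value in versions:
            output.append((key, sn, value))
            if sn <= oldest_active_snapshot:
                # This version is what the oldest reader sees; anything older
                # is invisible to every active transaction and can be dropped.
                break
    return output


upper = [("a", 9, "a9"), ("b", 7, "b7")]
lower = [("a", 5, "a5"), ("a", 2, "a2"), ("c", 1, "c1")]
kept = compact(upper, lower, oldest_active_snapshot=6)
```

Here `"a2"` is dropped because a reader at snapshot 6 already sees `"a5"`, while `"a9"` must stay for newer readers.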

Compaction in LSM has two objectives: discarding outdated versions with old SNs, and controlling the shape of the LSM hierarchy. Typically, the data volume of each level grows exponentially with the level number. This keeps the number of levels small and is intended to improve read performance.
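A quick calculation shows why exponential growth helps reads: the number of levels grows only logarithmically with the data size. The base size and fan-out below are hypothetical example values, not X-Engine settings.

```python
def level_capacities(base_bytes, fanout, num_levels):
    """Capacity of each level when every level is `fanout` times the previous one."""
    return [base_bytes * fanout ** i for i in range(num_levels)]


def levels_needed(total_bytes, base_bytes, fanout):
    """Smallest number of levels whose combined capacity holds `total_bytes`."""
    levels, capacity, level_size = 0, 0, base_bytes
    while capacity < total_bytes:
        capacity += level_size
        level_size *= fanout
        levels += 1
    return levels


MiB = 1024 ** 2
TiB = 1024 ** 4
# With a 256 MiB first level and a fan-out of 10, 1 TiB fits in a handful of levels.
n_levels = levels_needed(TiB, 256 * MiB, 10)
```

Under these assumptions a point read touches at most `n_levels` persistent levels even for a terabyte of data, whereas flushing runs without restructuring them would leave thousands of overlapping runs.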

Data access in virtually any storage system exhibits locality: a large proportion of access traffic is concentrated on a small portion of the data. This locality is the basic prerequisite for a cache to be effective.

In the LSM structure, if we keep frequently accessed data at the upper levels and limit the size of those levels, we can store them on high-speed devices such as NVM and DRAM, while storing less frequently accessed data at the lower levels on cheaper, slower devices. This is the basis of hot and cold data tiering in X-Engine.

To achieve this, we must select the right data to merge into lower levels. This is the first problem the compaction scheduling policy must solve. Following the logic of hot and cold tiering, cold data should be merged first because it is accessed less frequently.

There are many ways to identify cold data, and the right one depends on the workload. For many streaming workloads, such as transaction and logging systems, newly written data is more likely to be read, so hot and cold data can be distinguished by write time. However, the access patterns of many applications are not necessarily related to write time; in that case, hot and cold data must be identified by actual access frequency.

We can also prioritize merging the data that hurts read performance most, for example, by selecting data according to its update frequency. Querying records that have many versions wastes I/O and CPU resources, so such data should be merged first to reduce the number of versions per record. X-Engine combines several such policies into its own compaction scheduling mechanism.
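The three selection criteria discussed above (write time, access frequency, and version count) can be expressed as pluggable scoring functions. The following Python sketch is hypothetical: the `ExtentStats` fields, policy names, and scoring formulas are assumptions for illustration, not X-Engine's scheduler.

```python
from dataclasses import dataclass


@dataclass
class ExtentStats:
    extent_id: int
    last_write_time: float  # time of the newest write in the Extent
    reads_per_min: float    # observed access frequency
    version_count: int      # total record versions stored
    row_count: int          # distinct keys stored


def pick_for_compaction(extents, now, policy, limit=2):
    """Rank Extents for merging into a lower level; scores are illustrative."""
    def score(e):
        if policy == "write_time":     # streaming workloads: oldest writes are coldest
            return now - e.last_write_time
        if policy == "access_freq":    # least-read data is coldest
            return -e.reads_per_min
        if policy == "multi_version":  # many versions per key slow down reads
            return e.version_count / max(e.row_count, 1)
        raise ValueError(f"unknown policy: {policy}")

    ranked = sorted(extents, key=score, reverse=True)
    return [e.extent_id for e in ranked[:limit]]


extents = [
    ExtentStats(1, last_write_time=100.0, reads_per_min=50.0, version_count=10, row_count=10),
    ExtentStats(2, last_write_time=10.0, reads_per_min=5.0, version_count=40, row_count=10),
    ExtentStats(3, last_write_time=50.0, reads_per_min=0.5, version_count=12, row_count=10),
]
```

Different policies pick different candidates from the same Extents, which is why a real scheduler has to weigh them against the workload.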

So far, we have discussed the overall logical structure of LSM. To discuss how reads, writes, and compaction are performed, we need to understand the data structures used at each level, which differ across LSM variants.

In X-Engine, lock-free skip lists are used in the memtable, because they are simple and ensure high concurrent read and write performance. Of course, there are more efficient data structures, and you might use multiple indexing technologies at the same time. X-Engine has not been significantly optimized in this area, because the transaction processing logic is complex and writing data to the memtable has not yet become a bottleneck.
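For readers unfamiliar with skip lists, here is a single-threaded Python sketch of the structure. It deliberately omits all concurrency control, so it shows only the layered linked lists and probabilistic node heights, not the lock-free techniques X-Engine's memtable relies on. It also overwrites duplicate keys for simplicity; an LSM memtable would instead insert a new (key, SN) entry.

```python
import random


class SkipList:
    """Single-threaded skip list (sketch; not lock-free)."""

    MAX_LEVEL = 12

    class _Node:
        __slots__ = ("key", "value", "next")

        def __init__(self, key, value, height):
            self.key = key
            self.value = value
            self.next = [None] * height  # next[i] is the successor on level i

    def __init__(self, p=0.5, seed=None):
        self.p = p
        self.rng = random.Random(seed)
        self.head = self._Node(None, None, self.MAX_LEVEL)
        self.height = 1

    def _random_height(self):
        h = 1
        while h < self.MAX_LEVEL and self.rng.random() < self.p:
            h += 1
        return h

    def insert(self, key, value):
        update = [self.head] * self.MAX_LEVEL  # rightmost node before `key` per level
        node = self.head
        for level in reversed(range(self.height)):
            while node.next[level] is not None and node.next[level].key < key:
                node = node.next[level]
            update[level] = node
        candidate = node.next[0]
        if candidate is not None and candidate.key == key:
            candidate.value = value  # simplification: overwrite in place
            return
        h = self._random_height()
        self.height = max(self.height, h)
        new = self._Node(key, value, h)
        for level in range(h):  # splice the new node into each of its levels
            new.next[level] = update[level].next[level]
            update[level].next[level] = new

    def get(self, key):
        node = self.head
        for level in reversed(range(self.height)):
            while node.next[level] is not None and node.next[level].key < key:
                node = node.next[level]
        node = node.next[0]
        return node.value if node is not None and node.key == key else None

    def items(self):
        node = self.head.next[0]
        while node is not None:
            yield node.key, node.value
            node = node.next[0]


sl = SkipList(seed=42)
for k in [5, 1, 9, 3, 7]:
    sl.insert(k, str(k))
```

Searches descend from the sparse top level to the dense bottom level, giving expected logarithmic lookups while keeping keys sorted, which is what a memtable needs for ordered flushing.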

To understand how to organize the data at the persistence levels more efficiently, let's discuss the detailed data structure at each level.

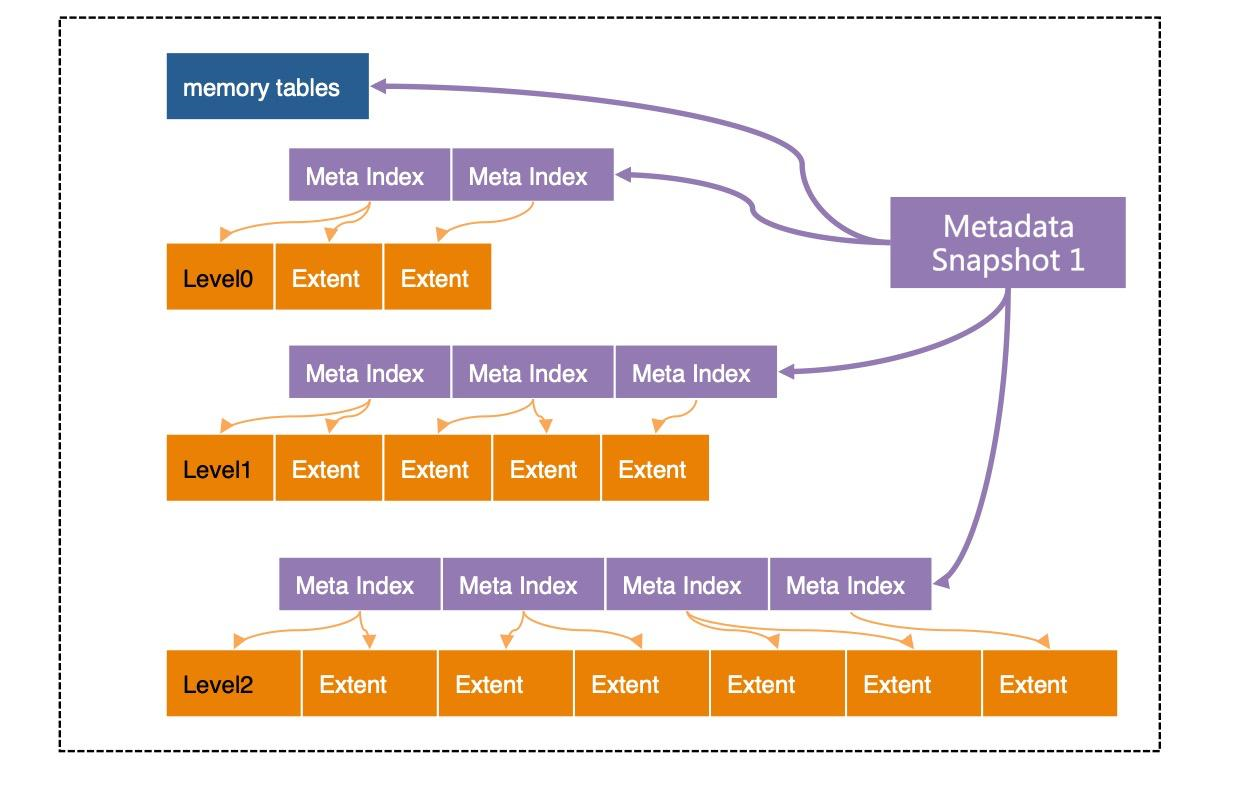

In X-Engine, each level is divided into fixed-size Extents. An Extent stores data with a contiguous key range at its level. To locate Extents quickly, a set of meta indexes is built over the Extents at each level. Together, these indexes and all memtables (both active and immutable) form a metadata tree whose root nodes are metadata snapshots. The metadata tree is structurally similar to, but not the same as, a B-tree.
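Because the Extents within one level cover disjoint, sorted key ranges, a per-level meta index can locate the Extent for a key with a binary search over the Extents' smallest keys. The Python sketch below assumes that layout; `Extent` and `LevelIndex` are hypothetical names, not X-Engine's types.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    """A read-only chunk holding one contiguous key range."""
    extent_id: int
    smallest: str
    largest: str
    rows: tuple = ()  # sorted (key, value) pairs; unused in this sketch


class LevelIndex:
    """Per-level meta index: Extents sorted by key range, found by binary search."""

    def __init__(self, extents):
        self.extents = sorted(extents, key=lambda e: e.smallest)
        self.starts = [e.smallest for e in self.extents]

    def find(self, key):
        # Rightmost Extent whose smallest key is <= key, if it also covers key.
        i = bisect.bisect_right(self.starts, key) - 1
        if i >= 0 and key <= self.extents[i].largest:
            return self.extents[i]
        return None


index = LevelIndex([Extent(2, "m", "r"), Extent(1, "a", "f"), Extent(3, "s", "z")])
```

A key that falls into a gap between Extents, such as `"g"` here, is known to be absent from the level without reading any data.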

It's critical to note that, except for the active memtable currently being written, all structures in X-Engine are read-only and cannot be modified. Given a point in time, for example, log sequence number (LSN) 1000, the structure referenced by metadata snapshot 1 in the preceding figure contains a snapshot of all the data logged as of LSN 1000. This is why the structure is called a snapshot.

Moreover, the metadata structure itself does not change once generated. All read operations start from this snapshot structure. This is the basis on which X-Engine implements snapshot isolation (SI). As mentioned earlier, when more data is written and accumulated, we must freeze and flush the memtable and perform compaction between levels. These operations modify the data storage structure of each level.

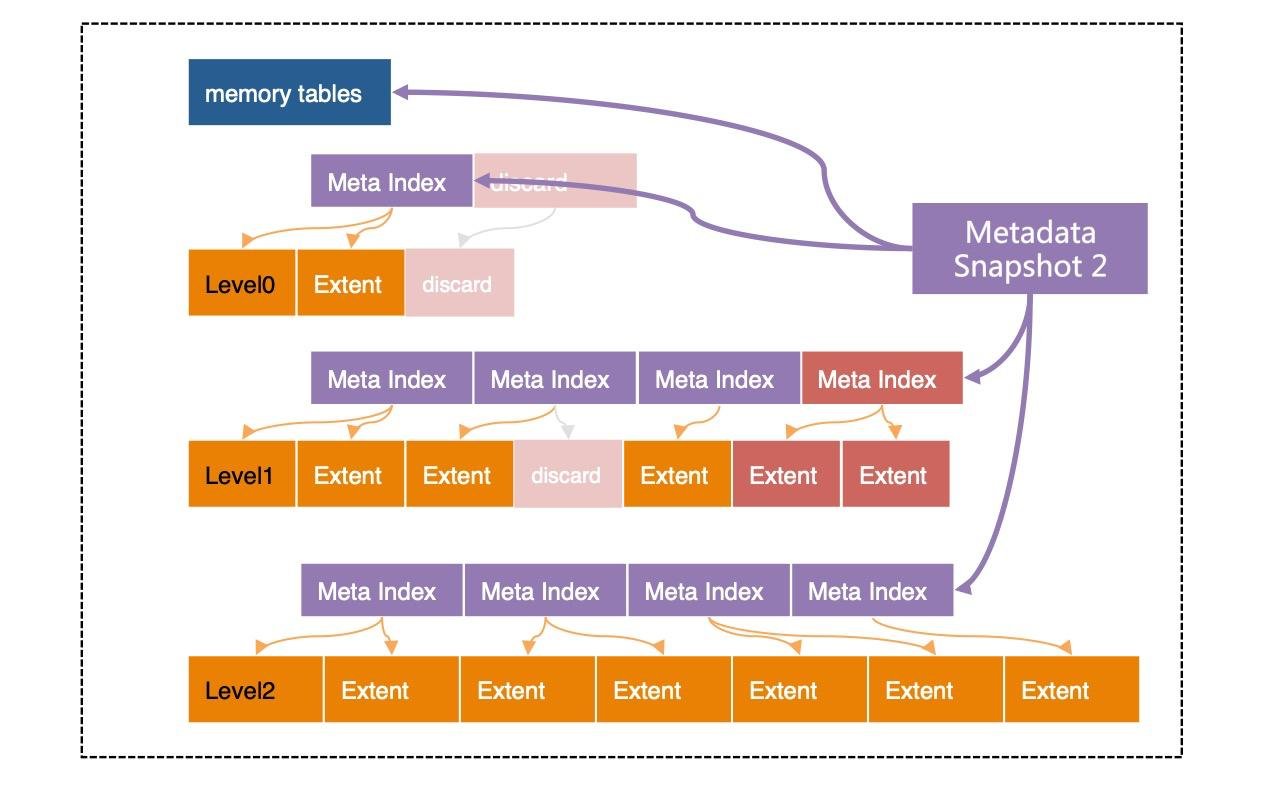

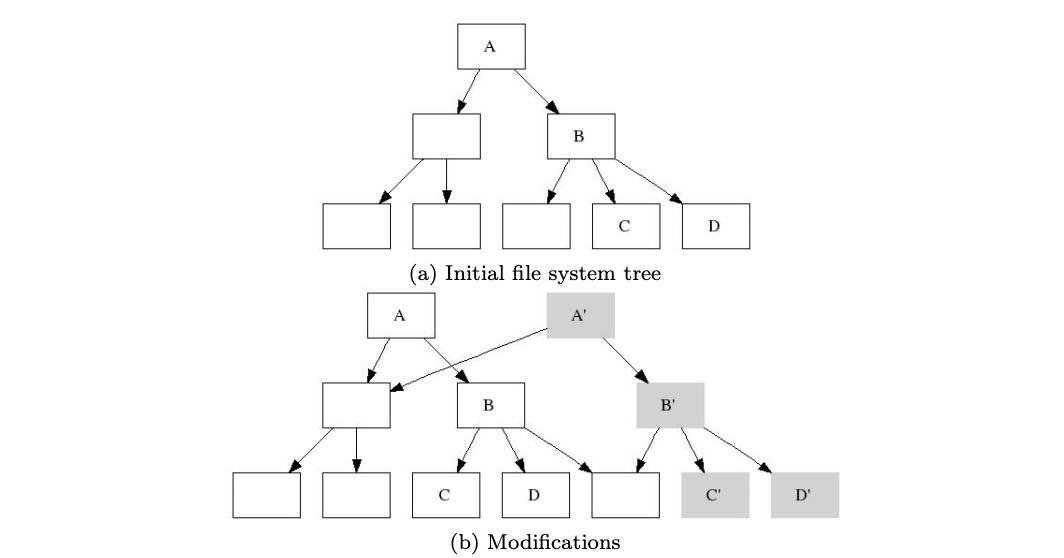

All these operations are implemented with copy-on-write. Specifically, the result of each modification (memtable switching, flushing, or compaction) is written to new Extents. Then, a new meta index structure is generated, and finally a new metadata snapshot. The following figure shows how the snapshot changes, using a compaction operation as an example.

Note that metadata snapshot 2 differs only slightly from metadata snapshot 1: only the leaf nodes and index nodes that changed are modified. This technique is quite similar to the one presented in the paper "B-trees, Shadowing, and Clones", which is helpful background for understanding this process.
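The copy-on-write snapshot switch can be sketched with immutable tuples: a change produces a new snapshot that rebuilds only the affected levels and shares every untouched Extent object with the old snapshot, which stays valid for readers already using it. The names `Snapshot` and `apply_changes` and the two-level example are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Extent:
    extent_id: int
    smallest: str
    largest: str


@dataclass(frozen=True)
class Snapshot:
    """Immutable metadata snapshot: one sorted tuple of Extents per level."""
    version: int
    levels: Tuple[Tuple[Extent, ...], ...]


def apply_changes(snap, changes):
    """Return a new snapshot; `changes` maps level -> (removed_ids, added_extents).

    Untouched levels and Extents are shared with `snap`, not copied.
    """
    new_levels = []
    for i, extents in enumerate(snap.levels):
        if i not in changes:
            new_levels.append(extents)  # shared as-is
            continue
        removed_ids, added = changes[i]
        kept = [e for e in extents if e.extent_id not in removed_ids]
        new_levels.append(tuple(sorted(kept + list(added), key=lambda e: e.smallest)))
    return Snapshot(snap.version + 1, tuple(new_levels))


e1, e2 = Extent(1, "a", "f"), Extent(2, "g", "m")                         # upper level
e3, e4, e5 = Extent(3, "a", "c"), Extent(4, "d", "k"), Extent(5, "n", "z")  # lower level
snap1 = Snapshot(1, ((e1, e2), (e3, e4, e5)))

# Compact e2 with the overlapping e4 into a new Extent e6 on the lower level.
e6 = Extent(6, "d", "m")
snap2 = apply_changes(snap1, {0: ({2}, ()), 1: ({4}, (e6,))})
```

Readers holding `snap1` continue to see `e2` and `e4`, while new readers start from `snap2`; no lock is needed to switch because neither snapshot is ever mutated.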

With its lightweight write path, LSM has significant advantages for write operations. However, transaction processing is not as simple as writing updated data to storage. Guaranteeing atomicity, consistency, isolation, and durability (ACID) requires a more complex process.

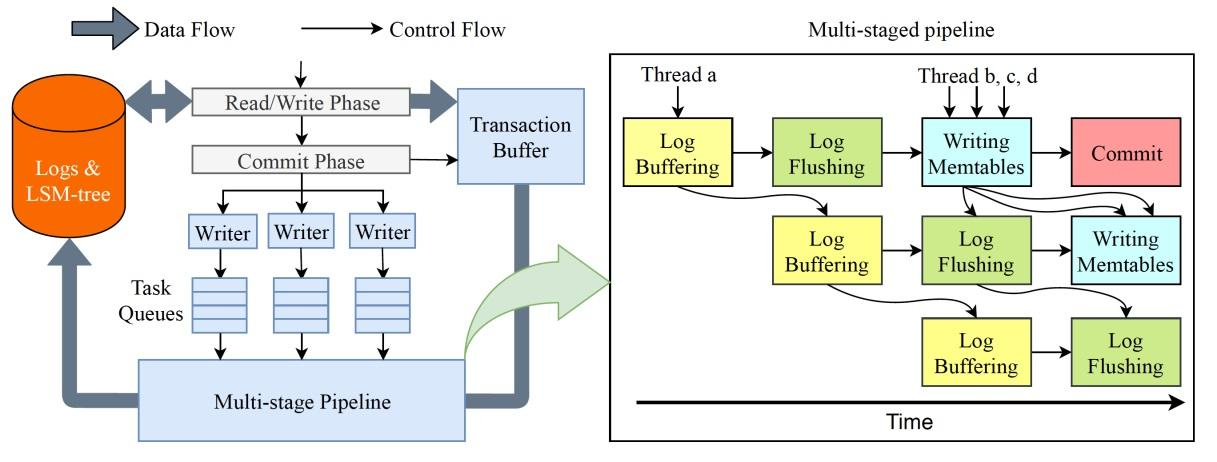

X-Engine divides transaction processing into two phases: the read/write phase and the commit phase. In the read/write phase, the engine checks the transaction for write-write and read-write conflicts and determines whether the transaction can proceed, must roll back, or must wait for a lock. If no conflict is detected, all modified data is written to the transaction buffer. The commit phase covers writing data to the WAL, writing data to memtables, committing the data, and returning results to the user. It involves both I/O operations (logging and returning messages) and CPU operations (copying logs and writing data to memtables).

To increase throughput, the system processes a large number of transactions concurrently. Because a single I/O operation is costly, most storage engines commit multiple transactions together, which is called "group commit", so that their I/O operations can be combined. However, group commit still involves a lot of waiting. For example, while logs are being written to disk, nothing happens except waiting for the data to be persisted.

To further increase throughput, X-Engine uses a pipeline that divides the commit phase into four independent, finer-grained stages: copying logs to the log buffer, flushing logs, writing data to memtables, and committing the data. When a transaction commit thread enters the pipeline, it can pick any stage to work on, so different threads process data at different stages concurrently. As long as the work in each stage is sized appropriately, all stages of the pipeline can be kept nearly fully loaded.
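The following Python sketch illustrates the scheduling idea: each of the four stages has its own queue and lock, and any foreground thread that finds an idle stage with pending work processes everything queued there as one batch, then hands the batch to the next stage. It is a simplified model under stated assumptions, not X-Engine's implementation: the class and method names are hypothetical, the "flush" only appends to a list, preferring later stages is an arbitrary choice, and Python threads illustrate the coordination, not real parallel speedup.

```python
import threading
from collections import deque

# The four commit stages described in the text, in pipeline order.
STAGES = ("copy_to_log_buffer", "flush_log", "write_memtable", "commit")


class CommitPipeline:
    """Commit pipeline driven by foreground threads (illustrative sketch)."""

    def __init__(self):
        self.queues = [deque() for _ in STAGES]
        self.locks = [threading.Lock() for _ in STAGES]
        self.durable_log = []   # stands in for the on-disk WAL
        self.flush_count = 0    # number of simulated log I/Os
        self.memtable = {}
        self.committed = []     # transaction IDs in commit order

    def submit(self, txn_id, writes):
        self.queues[0].append((txn_id, writes))

    def _run_stage(self, stage, batch):
        """Process one batch in one stage; return the items for the next stage."""
        if stage == 0:  # copy each transaction's log record (CPU work)
            return [(txn, w, f"{txn}:{sorted(w.items())}") for txn, w in batch]
        if stage == 1:  # flush the whole batch with one simulated I/O
            self.durable_log.extend(record for _, _, record in batch)
            self.flush_count += 1
        elif stage == 2:  # apply the writes to the memtable (CPU work)
            for _, w, _ in batch:
                self.memtable.update(w)
        else:  # stage 3: mark the transactions as committed
            self.committed.extend(txn for txn, _, _ in batch)
        return batch

    def try_one_stage(self):
        """Run one idle stage that has work; return False if there was none."""
        for stage in reversed(range(len(STAGES))):  # drain later stages first
            queue, lock = self.queues[stage], self.locks[stage]
            if not queue or not lock.acquire(blocking=False):
                continue  # nothing to do here, or another thread owns the stage
            try:
                batch = []
                while queue:
                    batch.append(queue.popleft())
                if not batch:
                    continue
                out = self._run_stage(stage, batch)
                if stage + 1 < len(STAGES):
                    # Still holding this stage's lock, so batches reach the
                    # next stage in the order they were produced.
                    self.queues[stage + 1].extend(out)
                return True
            finally:
                lock.release()
        return False


# Usage: enqueue 200 transactions, then let four foreground threads drive them.
pipeline = CommitPipeline()
for i in range(200):
    pipeline.submit(i, {f"k{i % 10}": i})


def worker():
    while len(pipeline.committed) < 200:
        pipeline.try_one_stage()


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Because each stage is serialized by its own lock and hands batches on in order, commit order matches submission order, while different threads can work on different stages at the same time. A thread that finds no available stage simply returns instead of blocking.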

In addition, this process uses foreground transaction processing threads rather than background threads. Each thread either picks a stage of the pipeline to work on or, if no stage is available, returns to receiving requests. Threads never wait or context-switch in this process, so the capacity of each thread is fully utilized.

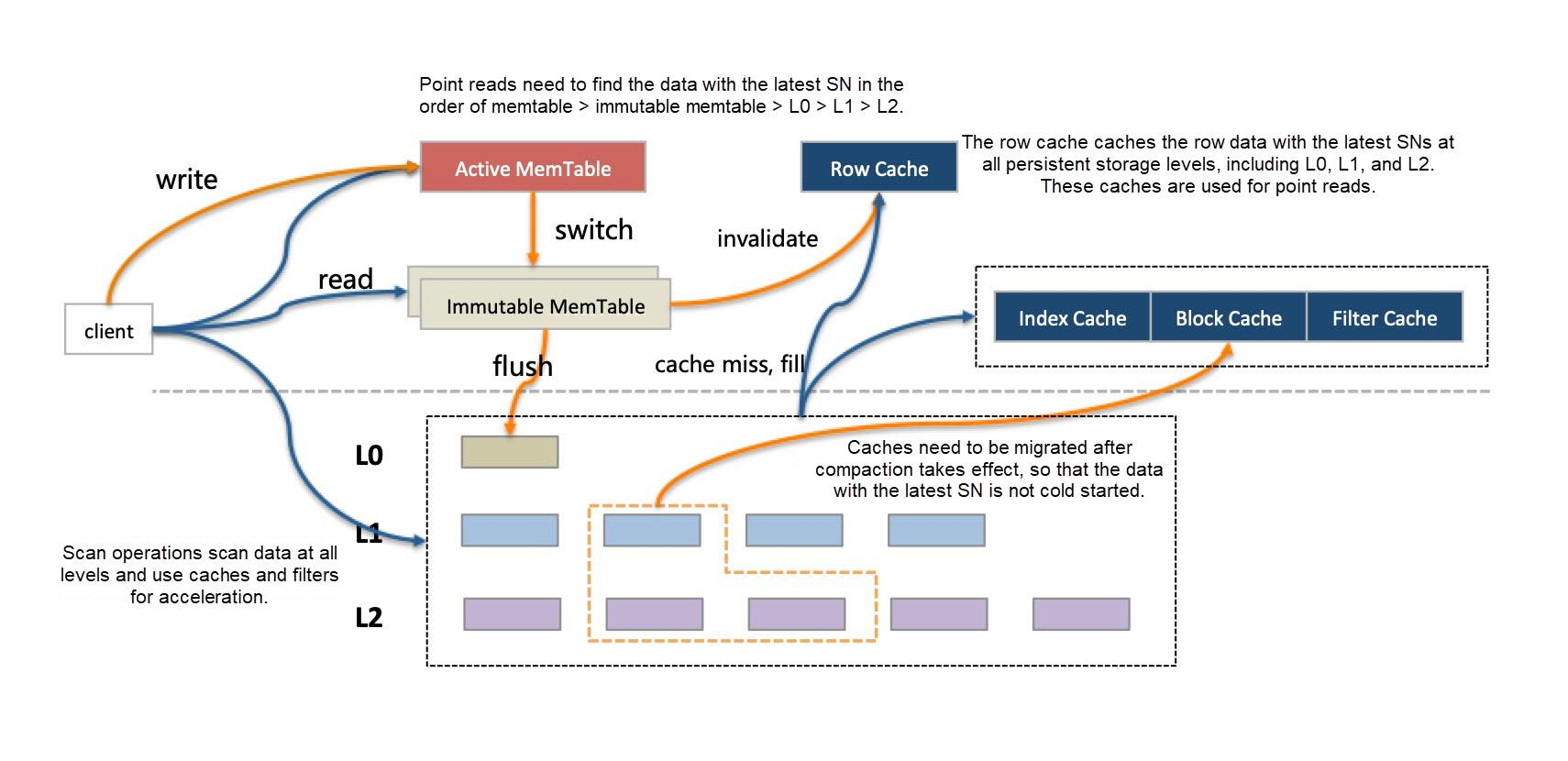

In LSM, when a record is updated, the version with the new SN is appended rather than overwriting the version with the old SN. Physically, versions with different SNs may reside at different levels. Therefore, a query must identify the appropriate version according to the visibility rules of the transaction isolation level. Generally, a query looks for the latest data starting from the highest level (the most recently written) down to the lowest level.

For single-record queries, the search ends as soon as the record is found. If the record is at a relatively high level, for example, in the memtable, it is returned quickly. If it is at a relatively low level, for example, a persistent level that must be read with random I/O, the system has to search downward level by level, which is very time-consuming. In this case, a Bloom filter can be used to skip some levels and speed up the query, although the search still involves more I/O operations.
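The following Python sketch shows how a per-level Bloom filter lets a point lookup skip levels that definitely do not contain the key. It is a generic textbook Bloom filter, not X-Engine's; the bit-array size, the number of hash functions, and the SHA-256-based hashing are arbitrary choices for illustration.

```python
import hashlib


class BloomFilter:
    """Bloom filter: never a false negative, occasionally a false positive."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for readability

    def _positions(self, key):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key):
        return all(self.bits[pos] for pos in self._positions(key))


def build_level(rows):
    bloom = BloomFilter()
    for key in rows:
        bloom.add(key)
    return rows, bloom


def point_lookup(key, memtable, levels):
    """Search newest to oldest; return (value, number_of_levels_actually_read)."""
    if key in memtable:
        return memtable[key], 0
    reads = 0
    for rows, bloom in levels:  # levels ordered newest first
        if not bloom.might_contain(key):
            continue            # definitely absent: skip this level's I/O
        reads += 1              # a real engine would read a block here
        if key in rows:
            return rows[key], reads
    return None, reads


levels = [build_level({"a": 1}), build_level({"b": 2}), build_level({"c": 3, "a": 0})]
value, reads = point_lookup("c", {}, levels)
```

A key found only in the oldest level usually costs a single real read, because the filters of the newer levels rule them out; false positives can occasionally add an extra read.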

X-Engine introduces a row cache for single-row queries, which caches data above all persistent levels. When a single-row query misses the memtable, it checks the row cache next. For each cached record, the row cache must hold the version with the latest SN across all persistent levels. That version can change: for example, every time a read-only memtable is flushed to a persistent level, the affected records in the row cache must be updated accordingly. This operation is subtle and requires careful design.
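Here is a minimal Python sketch of a row cache placed between the memtable and the persistent levels, using an LRU policy built on `collections.OrderedDict`. The LRU policy, the names, and the "refresh cached keys on flush" rule are assumptions chosen to illustrate the consistency issue the text describes; X-Engine's actual design is not specified here.

```python
from collections import OrderedDict


class RowCache:
    """LRU cache of the newest persisted version of each row (sketch)."""

    def __init__(self, capacity=1024):
        self.capacity = capacity
        self.entries = OrderedDict()

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used row

    def on_flush(self, flushed_rows):
        # Flushed rows become the newest persisted versions, so any cached
        # copies of those keys are stale and must be refreshed.
        for key, value in flushed_rows.items():
            if key in self.entries:
                self.entries[key] = value


def read_row(key, memtable, row_cache, read_persistent_levels):
    if key in memtable:
        return memtable[key]
    cached = row_cache.get(key)
    if cached is not None:
        return cached
    value = read_persistent_levels(key)  # slow path through the levels
    if value is not None:
        row_cache.put(key, value)
    return value


persisted = {"a": "a1", "b": "b1", "c": "c1"}  # stands in for the persistent levels
cache = RowCache(capacity=2)
first = read_row("a", {}, cache, persisted.get)
persisted["a"] = "a2"          # a flush writes a newer version of "a"
cache.on_flush({"a": "a2"})    # ...so the cached copy must be refreshed
second = read_row("a", {}, cache, persisted.get)
```

Without the `on_flush` step, the second read would return the stale `"a1"` from the cache even though a newer version has been persisted.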

Range scans are more difficult, because there is no way to determine in advance which levels contain data in a given key range; the data may be at every level. The final result can be returned only after all levels are scanned and their data is merged. X-Engine uses a series of methods to address this problem. For example, SuRF, which won the best paper award at SIGMOD 2018, provides a range filter that reduces the number of levels to scan. In addition, asynchronous I/O and prefetching significantly improve the performance of large range scans.

Cache design is the key to read performance. The row cache handles single-row queries, and the block cache handles requests that miss the row cache as well as scans. However, in LSM, compaction rewrites a large number of data blocks at once, which instantly invalidates much of the block cache and causes sharp performance jitter. X-Engine addresses this issue in a variety of ways:

X-Engine has many caches, and the memtable can be considered one of them. How to allocate limited memory among these caches to get the most value from it is still an open problem, and we are actively exploring it in X-Engine.

That said, LSM also benefits read operations. Apart from memtables, all structures are read-only, so the read path through them can be completely lock-free. Memtables can also be designed to support lock-free reads if needed.

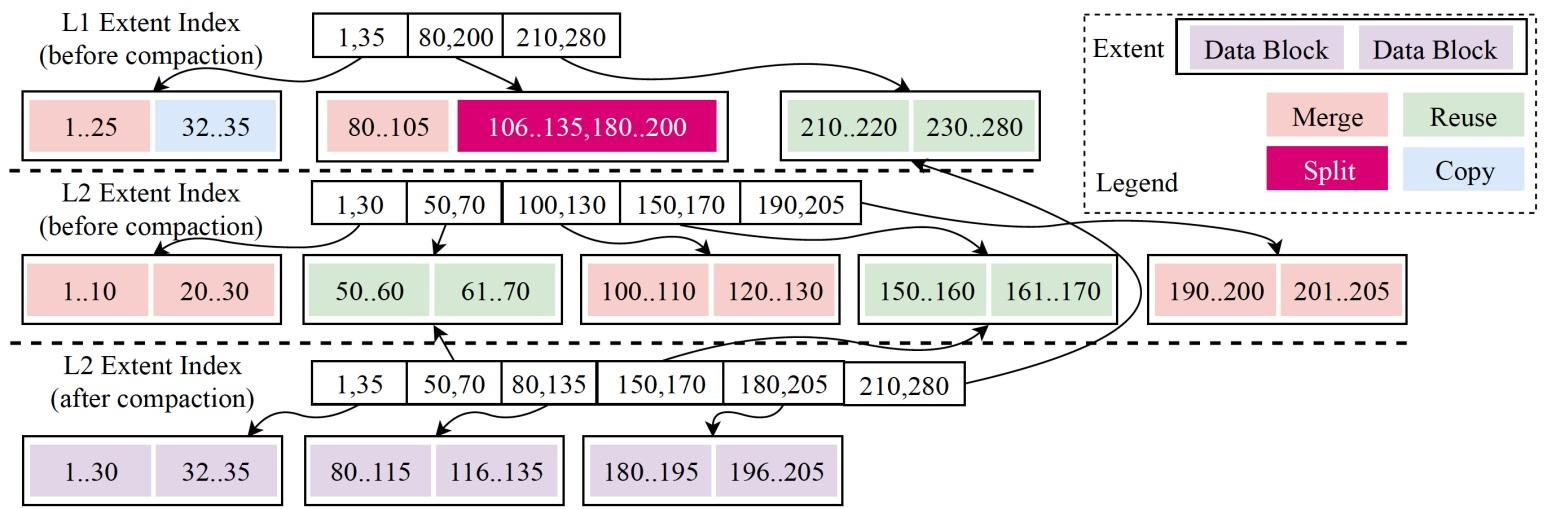

Compaction is relatively resource-intensive. The system must read data with overlapping key ranges from adjacent levels, merge it, and write the merged data to a new level. This is the price of the simple write path. X-Engine redesigned the storage structure to optimize this operation.

As mentioned previously, X-Engine divides each level of data into fixed-size Extents. An Extent is equivalent to a small but complete SSTable and stores data with a contiguous key range at its level. That key range is further divided into smaller contiguous segments called data blocks. Data blocks are equivalent to pages in conventional databases, except that they are read-only and of variable length.

Looking back at how metadata changes during merge operations, as described in the Data Structure section, the intent of the Extent design becomes clear from the difference between metadata snapshot 2 and metadata snapshot 1. Not every modification requires structural adjustment; some only need to rewrite a small portion of overlapping data and the corresponding meta index nodes.

The two metadata snapshots actually share many data structures. This is called data reuse, and the Extent size is the key factor that determines the reuse rate. Because an Extent is reused as a whole physical unit, it should be as small as possible so that less data overlaps between Extents. However, Extents cannot be too small either; otherwise, too many indexes are required and management costs become too high.

In X-Engine, compaction achieves a high data reuse rate. Suppose we want to merge the Extents with overlapping key ranges at Level 1 and Level 2. The merge algorithm scans the data row by row, and any physical structure, whether a data block or an Extent, that does not overlap with data at the other level can be reused. The difference is that reusing an Extent only requires modifying its meta index, whereas reusing a data block requires copying it (although copying still significantly reduces CPU usage).

The following figure shows a typical data reuse process in a compaction operation.

Note that data reuse is carried out through row-by-row iteration. However, such fine-grained reuse has a side effect: data fragmentation. A trade-off is therefore essential in practice.
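The Extent-level part of this reuse can be sketched in Python: lower-level Extents that overlap nothing in the upper level are kept as the very same objects, and only overlapping Extents are merged and rewritten. This is a simplified sketch under several assumptions. It shows only Extent reuse (not data-block reuse), it merges rows through a dict instead of a streaming row-by-row iterator, it always rewrites upper-level Extents even though non-overlapping ones could in principle be moved down as-is, and all names are hypothetical.

```python
import bisect
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Extent:
    smallest: int
    largest: int
    rows: tuple  # sorted (key, value) pairs; never modified after creation


def overlaps(a, b):
    return a.smallest <= b.largest and b.smallest <= a.largest


def compact_with_reuse(upper, lower, extent_rows=4):
    """Merge `upper` into `lower`; return (new_level, reused_extents).

    Reused Extents are the same objects as before, so only the meta index
    would need updating. Rows from `upper` (newer) win on key clashes.
    """
    reused, to_rewrite = [], []
    for e in lower:
        (to_rewrite if any(overlaps(e, u) for u in upper) else reused).append(e)

    merged = {}
    for e in to_rewrite + list(upper):  # upper last, so newer rows overwrite
        merged.update(e.rows)
    rows = sorted(merged.items())

    # Never let a rewritten Extent straddle a reused one: split the merged rows
    # into runs that fall between consecutive reused Extents.
    boundaries = sorted(e.smallest for e in reused)
    rewritten = []
    for _, run in groupby(rows, key=lambda kv: bisect.bisect_right(boundaries, kv[0])):
        run = list(run)
        for i in range(0, len(run), extent_rows):
            chunk = run[i:i + extent_rows]
            rewritten.append(Extent(chunk[0][0], chunk[-1][0], tuple(chunk)))

    new_level = sorted(reused + rewritten, key=lambda e: e.smallest)
    return new_level, reused


lower = [Extent(1, 3, ((1, "a"), (3, "c"))),
         Extent(5, 6, ((5, "e"), (6, "f"))),
         Extent(8, 9, ((8, "h"), (9, "i")))]
upper = [Extent(2, 3, ((2, "B"), (3, "C"))),
         Extent(8, 8, ((8, "H"),))]
new_level, reused = compact_with_reuse(upper, lower)
```

In this example the middle Extent `[5, 6]` is untouched by the upper level, so it passes through the compaction without being read or rewritten, and any cached blocks from it stay valid.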

Data reuse not only makes compaction itself cheaper, reducing I/O and CPU consumption, but also improves overall system performance in several ways. For example, because data does not need to be completely rewritten during compaction, write amplification is greatly reduced. Moreover, because most of the data remains unchanged, the data cache stays valid after updates, which reduces the read performance jitter caused by cache invalidation during merging.

In fact, optimizing compaction itself is only part of what X-Engine does. More importantly, X-Engine optimizes compaction scheduling policies, which define how Extents are selected, how large compaction tasks are, and how they are prioritized. All of these affect the performance of the entire system. Although no perfect policy exists, X-Engine has accumulated experience and defined many rules. Scheduling these policies properly is an important task for the future.

X-Engine aims to become a general-purpose storage engine for large data volumes in the MySQL ecosystem. We continue to optimize storage structures, compression algorithms, read and write performance, and stability to provide the best possible cost performance.

X-Engine offers some unique advantages. You can use it as an integrated database for both online and historical data, compressing tables fully without sacrificing read and write performance. In our tests, X-Engine's tpmC (transactions per minute) in a standard TPC-C benchmark is roughly the same as InnoDB's. If your application uses MySQL and has large write volumes, X-Engine can significantly reduce storage costs while meeting query requirements. It is well suited for logging, message archiving, and order storage.

X-Engine is more than a research system; it was designed to deliver value to users from the very beginning. It has been used in Alibaba Group for over two years and has completely replaced our original history databases for core transactions and DingTalk messages. In these applications, it has achieved the expected results: reducing the cost of the transaction history database by 33% compared with the original HBase databases, and reducing the cost of the DingTalk message history database by 60% compared with the original MySQL with InnoDB.

Currently, X-Engine is available only in RDS for MySQL 8.0. If you use RDS for MySQL 5.6 or 5.7, migrate your data to RDS for MySQL 8.0 first and then enable X-Engine. To use X-Engine, either set the default storage engine to X-Engine, or specify X-Engine as the storage engine when creating a table, which lets X-Engine coexist with other engines. You can also convert an existing table to X-Engine by running `alter table your_table engine = xengine`. Note that this operation locks the table and copies its data, so the time it takes depends on how much data the table holds.

For more information about operations, parameter configurations, and limits, refer to X-Engine Documentation.

As a storage engine for MySQL, X-Engine must keep improving its compatibility with MySQL. In the future, based on the most urgent needs, features such as foreign keys will be gradually enhanced, and more data structures and index types will be supported.

The core value of X-Engine lies in cost performance, and continuously improving performance at lower cost is our long-term fundamental goal. X-Engine keeps exploring new approaches to compaction scheduling, cache management and optimization, data compression, and transaction processing. X-Engine will not be limited to serving as a storage engine for single-node databases; in the future, it will serve as the core of Alibaba's proprietary distributed PolarDB to provide enterprise-level database services.
