In March 2020, OceanBase became commercially available on Alibaba Cloud, marking its official launch in the public cloud. At the same time, we released a set of related products: the cluster management product OceanBase Cloud Platform (OCP), the diagnostic product OceanBase Tuning Advisor (OTA), the migration service OceanBase Migration Service (OMS), and the developer center OceanBase Developer Center (ODC).

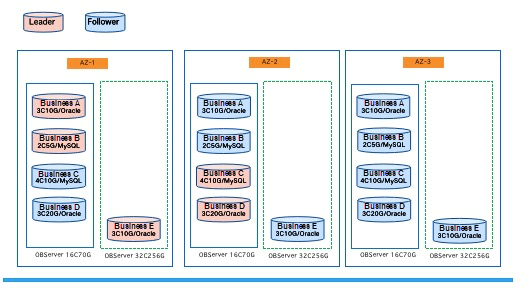

Ant Financial's ApsaraDB for OceanBase (OceanBase) is a fully native, distributed relational database product that is completely controllable at the code layer. The public cloud OceanBase product is deployed as three replicas across three zones. The Paxos protocol keeps data consistent among the replicas when a single node or a single zone fails. It also ensures business continuity with a recovery point objective (RPO) of 0 and a recovery time objective (RTO) of less than 30 seconds, achieving high availability at the data center level. In the future, Alibaba Cloud will introduce a new version with five data centers across three regions to provide cross-city failover. OceanBase also supports highly flexible resource management and multi-tenant deployment: within an OceanBase cluster, you can allocate instances as needed and scale resources in or out online.
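To make the replica arithmetic concrete, here is a minimal sketch of the majority rule that Paxos-style replication relies on. The function names are illustrative and are not part of any OceanBase API. The sketch only shows why three replicas tolerate one failure and five tolerate two.

```python
def majority(replicas: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many replicas can fail while a majority is still reachable."""
    return replicas - majority(replicas)


def is_committed(acks: int, replicas: int) -> bool:
    """A log entry counts as durable once a majority has acknowledged it."""
    return acks >= majority(replicas)


if __name__ == "__main__":
    for n in (3, 5):
        print(f"replicas={n} majority={majority(n)} "
              f"tolerated_failures={tolerated_failures(n)}")
```

With three replicas in three zones, losing one zone still leaves two acknowledgements, so committed data is not lost. This is consistent with the stated RPO of 0.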

In terms of security and availability, OceanBase is well suited to financial business scenarios. Due to regulatory requirements, some financial businesses (such as banking) cannot be deployed in the public cloud. However, this restriction does not affect other financial businesses, such as insurance and funds.

OceanBase differs from most distributed systems in that it does not have a separate master control server or process. A distributed system generally relies on a dedicated master control process for global management and load balancing. In OceanBase, this role is played by RootService, which is integrated into the ObServer. OceanBase dynamically selects one ObServer from all the working hosts to run RootService. If that ObServer fails, the system automatically elects another ObServer to run RootService. Though the implementation is complex, this design simplifies deployment and reduces the cost of use.

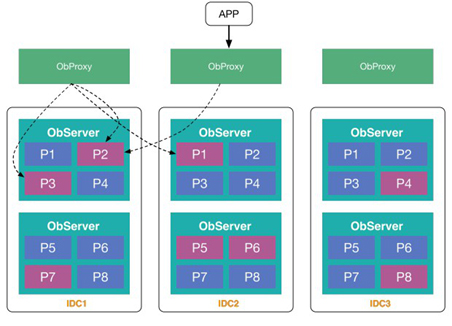

OceanBase scales out horizontally through its partitioning capability. OceanBase partitioning differs from traditional database partitioning: all the partitions of a traditional database must be deployed on the same server, whereas OceanBase partitions can be allocated to different servers, and each partition exists in three copies. From the perspective of the data model, OceanBase can be viewed as a traditional partitioned table implemented across multiple servers. It can integrate data generated by different users into a unified table. The system presents a single table to the user regardless of how the partitions are distributed, and the back-end implementation is completely transparent to the user. User requests enter OceanBase through OBProxy, an access proxy that forwards each request to the appropriate server based on the data in the request. The most prominent feature of OBProxy is its performance: it can process millions of data entries per second on commodity servers.
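The routing idea can be sketched in a few lines. This is a simplified illustration, not OBProxy's actual logic: the partition count, the server names, the hash function, and the placement map are all assumptions made for the example.

```python
import zlib

NUM_PARTITIONS = 8  # hypothetical partition count

# Hypothetical placement map: partition id -> server currently serving it.
PARTITION_LOCATION = {p: f"observer-{p % 3}" for p in range(NUM_PARTITIONS)}


def partition_of(key: str) -> int:
    """Hash the partitioning key to a partition id (stable across runs)."""
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def route(key: str) -> str:
    """Return the server a proxy would forward this request to."""
    return PARTITION_LOCATION[partition_of(key)]


if __name__ == "__main__":
    for user in ("user-1", "user-2", "user-3"):
        print(user, "-> partition", partition_of(user), "on", route(user))
```

The caller only names the table and the key. Which server holds the partition is resolved by the proxy, which is what keeps the distribution transparent to the user.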

As shown in the figure above, partitions are distributed across multiple ObServers. Distributed transactions are implemented internally with the two-phase commit protocol, but because the standard protocol performs poorly, OceanBase has made many internal optimizations. The first is the partition group: partitions of different tables that are often accessed together, or that have similar access patterns, are placed in the same partition group. The OceanBase backend schedules a partition group onto one server whenever possible to avoid distributed transactions altogether.

The second optimization is in the two-phase commit protocol itself. The protocol involves multiple servers and two roles: the coordinator and the participant. Each participant maintains the local status of its server, while the coordinator maintains the global status of the distributed transaction. A common practice is for the coordinator to write a log that persists the global status. OceanBase instead restores a distributed transaction after a fault by querying the status of all participants. This removes the resources spent on the coordinator log. Moreover, as soon as all participants have pre-committed successfully, the entire transaction is successful, and a response can be returned to the client without waiting for the coordinator to write a log.
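The following toy model illustrates the idea of two-phase commit without a coordinator log. It is a sketch under simplifying assumptions (in-memory participants, no network, no timeouts), and the class and function names are invented for illustration. They do not reflect OceanBase's internal code.

```python
from enum import Enum


class State(Enum):
    INIT = "init"
    PREPARED = "prepared"
    COMMITTED = "committed"
    ABORTED = "aborted"


class Participant:
    def __init__(self, name, can_prepare=True):
        self.name = name
        self.can_prepare = can_prepare
        self.state = State.INIT

    def prepare(self):
        """Phase 1 (pre-commit): persist local state and vote."""
        self.state = State.PREPARED if self.can_prepare else State.ABORTED
        return self.state is State.PREPARED

    def commit(self):
        self.state = State.COMMITTED

    def abort(self):
        self.state = State.ABORTED


def run_transaction(participants):
    """Return True as soon as every participant has prepared.

    At that point the outcome is decided, so the client can be answered
    without the coordinator writing its own log record.
    """
    votes = [p.prepare() for p in participants]
    if all(votes):
        return True
    for p in participants:
        p.abort()
    return False


def recover(participants):
    """Rebuild the global decision from participant states alone."""
    states = {p.state for p in participants}
    if State.ABORTED in states or State.INIT in states:
        decision = State.ABORTED
    else:
        decision = State.COMMITTED  # everyone is prepared or committed
    for p in participants:
        if decision is State.COMMITTED:
            p.commit()
        else:
            p.abort()
    return decision
```

If the coordinator crashes after `run_transaction` returns `True`, calling `recover` finds every participant in the prepared state and finishes the commit. This is why the coordinator's own log is not needed in this model.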

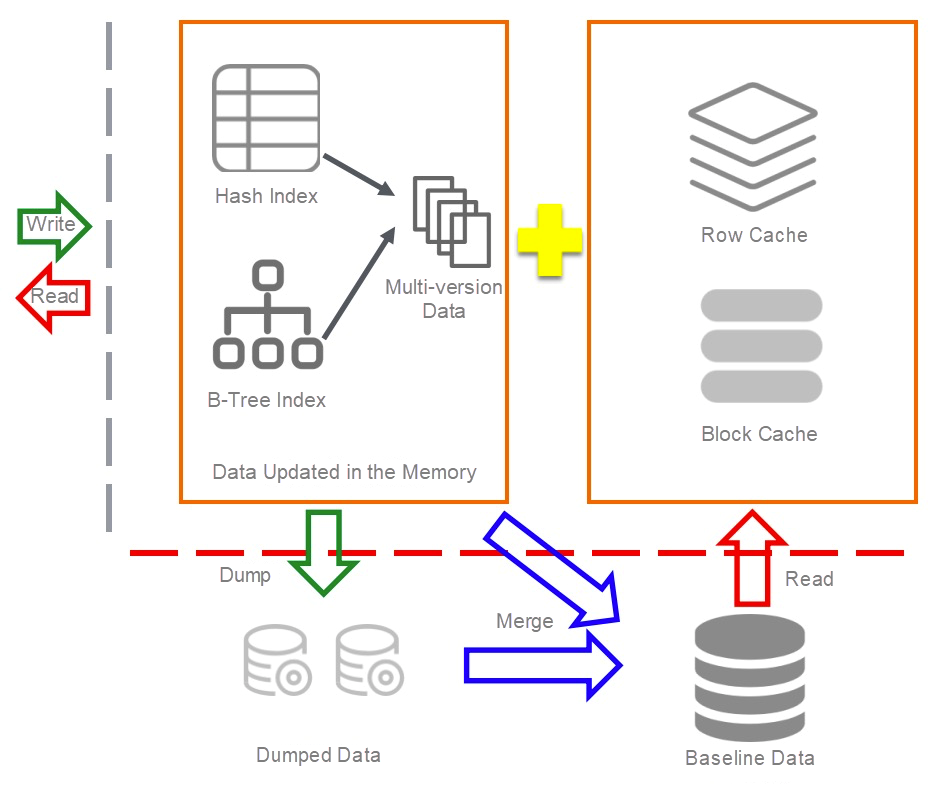

OceanBase uses a shared-nothing architecture. Each ObServer has a standalone storage engine and stores data locally. This meets the needs of data continuity services in disaster recovery scenarios. OceanBase adopts an LSM-tree architecture for its cache and data storage. Data is first written to the MemTable in memory, so the most frequently accessed and active data is served from memory. This significantly improves the efficiency of hot data access. When the amount of data written to the MemTable reaches a threshold, the data is merged and dumped to an SSTable on disk. In many LSM-tree-based storage systems, SSTables are divided into multiple levels to improve write performance. When the number or size of the SSTables at a certain level reaches a threshold, data is merged into the SSTables at the next level.
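The write path described above can be sketched as a tiny in-memory model. This is a generic LSM-tree illustration, not OceanBase's storage engine: the class name, the row-count threshold, and the single-step `compact` method are assumptions made to keep the example short.

```python
class TinyLSM:
    def __init__(self, memtable_limit=3):
        self.memtable_limit = memtable_limit  # flush threshold (row count)
        self.memtable = {}                    # hot, mutable data in memory
        self.sstables = []                    # newest first, each sorted and immutable

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        """Dump the MemTable to a new sorted SSTable."""
        if not self.memtable:
            return
        self.sstables.insert(0, sorted(self.memtable.items()))
        self.memtable = {}

    def get(self, key):
        """Check memory first, then SSTables from newest to oldest."""
        if key in self.memtable:
            return self.memtable[key]
        for table in self.sstables:
            for k, v in table:
                if k == key:
                    return v
        return None

    def compact(self):
        """Merge all SSTables into one, keeping the newest value per key."""
        merged = {}
        for table in reversed(self.sstables):  # oldest first, newer overwrite
            merged.update(table)
        self.sstables = [sorted(merged.items())] if merged else []
```

Writes only touch the in-memory dictionary, and disk structures are rewritten in bulk during `flush` and `compact`. A real engine would add a write-ahead log, binary search or indexes inside each SSTable, and multiple levels.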

OceanBase also provides several types of caches, comparable to the buffers in Oracle and MySQL. A block cache holds SSTable data, a row cache holds data rows, and there are also a log cache and a position cache. Caching baseline data in memory improves query performance. Each tenant has its own cache. You can configure upper and lower memory limits per tenant to achieve either tenant isolation or overselling with preemption, depending on the scenario.

To reduce storage costs, OceanBase uses multiple data compression algorithms, such as lz4 and zstd, and shrinks data at two levels. The first level is encoding, which uses dictionary encoding, run-length encoding (RLE), and other algorithms. The second level is general-purpose compression, which applies lz4 or another compression algorithm to the encoded data. Compared with traditional MySQL InnoDB compression, OceanBase with the zstd algorithm can store the same dataset in one-third of the space that MySQL needs, which greatly reduces storage costs. More importantly, compressing fixed-length pages, as traditional databases do, inevitably leaves storage holes that reduce compression efficiency. In storage systems with an LSM-tree architecture like OceanBase, compression has no impact on data write performance.
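The two levels can be illustrated on a single column of values. This sketch makes several assumptions: it uses `zlib` from the Python standard library as a stand-in for lz4 or zstd, it serializes the encoded column as JSON for simplicity, and all function names are invented for the example.

```python
import json
import zlib


def dictionary_encode(values):
    """Level 1a: replace each value with a small integer code."""
    dictionary = sorted(set(values))
    index = {v: i for i, v in enumerate(dictionary)}
    return dictionary, [index[v] for v in values]


def run_length_encode(codes):
    """Level 1b: collapse runs of equal codes into [code, run_length] pairs."""
    runs = []
    for code in codes:
        if runs and runs[-1][0] == code:
            runs[-1][1] += 1
        else:
            runs.append([code, 1])
    return runs


def compress_column(values):
    """Encode first, then apply a general-purpose compressor (level 2)."""
    dictionary, codes = dictionary_encode(values)
    payload = {"dict": dictionary, "runs": run_length_encode(codes)}
    return zlib.compress(json.dumps(payload).encode("utf-8"))


def decompress_column(blob):
    payload = json.loads(zlib.decompress(blob))
    values = []
    for code, length in payload["runs"]:
        values.extend([payload["dict"][code]] * length)
    return values


if __name__ == "__main__":
    column = ["CN"] * 500 + ["US"] * 300 + ["CN"] * 200
    blob = compress_column(column)
    raw_size = len(json.dumps(column).encode("utf-8"))
    print("raw bytes:", raw_size, "compressed bytes:", len(blob))
```

Encoding exploits knowledge of the column (few distinct values, long runs), while the general-purpose pass removes whatever redundancy remains in the encoded bytes.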

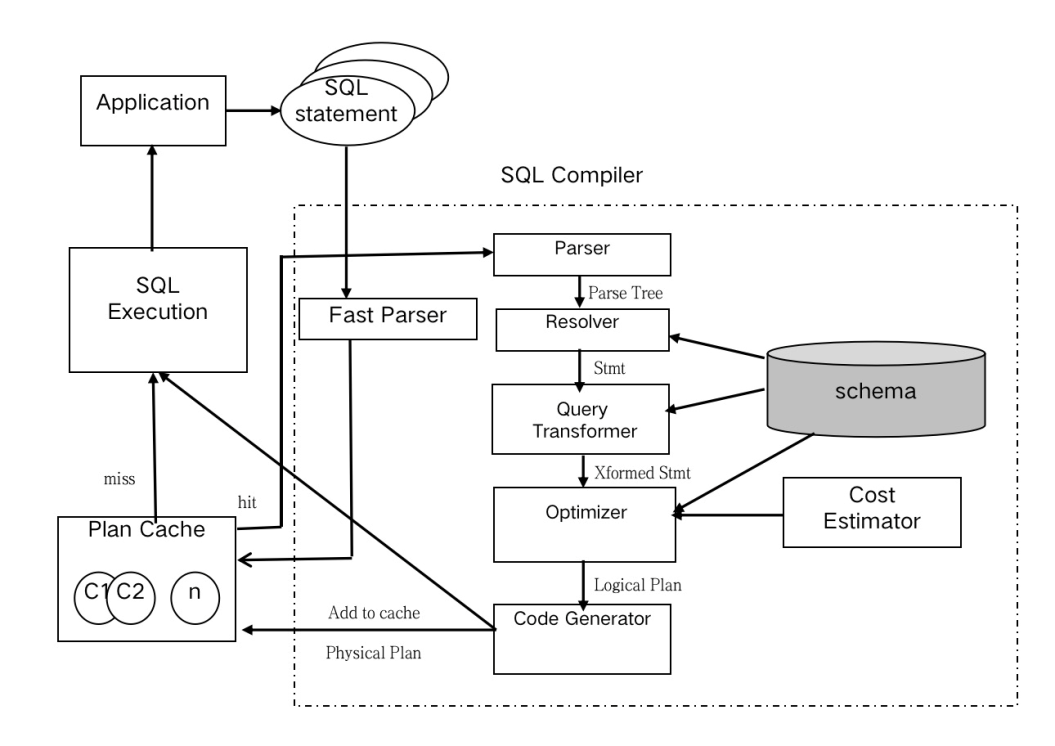

OceanBase tenants are compatible with Oracle and MySQL statements. Unlike traditional MySQL, OceanBase supports soft parsing in addition to hard parsing. The parser also supports SQL parameterization and variable binding. As shown in the following figure, the parser stores the parsed SQL templates and execution plans in the plan cache. Reusing the entries in the plan cache avoids the overhead of repeated hard parsing and improves SQL execution efficiency.
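A rough sketch of parameterization plus a plan cache is shown below. It is deliberately naive: literals are found with a regular expression rather than a real SQL parser, the "plan" is a placeholder string, and the `PlanCache` class is hypothetical rather than an OceanBase interface.

```python
import re

# Matches single-quoted strings or standalone integers (a simplification).
LITERAL = re.compile(r"'[^']*'|\b\d+\b")


def parameterize(sql):
    """Replace literals with '?' and return (template, extracted_params)."""
    params = []

    def replace(match):
        params.append(match.group(0))
        return "?"

    return LITERAL.sub(replace, sql), params


class PlanCache:
    def __init__(self):
        self.plans = {}       # SQL template -> cached plan
        self.hard_parses = 0  # how often a plan had to be built from scratch

    def get_plan(self, sql):
        template, params = parameterize(sql)
        if template not in self.plans:
            # Hard parse: in a real system this is full parsing and optimization.
            self.hard_parses += 1
            self.plans[template] = f"plan for: {template}"
        # Soft parse: reuse the cached plan with the new parameter values.
        return self.plans[template], params


if __name__ == "__main__":
    cache = PlanCache()
    cache.get_plan("SELECT * FROM orders WHERE id = 1")
    cache.get_plan("SELECT * FROM orders WHERE id = 2")
    print("hard parses:", cache.hard_parses)
```

Both statements map to the same template, so only the first one pays for a hard parse.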

Based on the LSM-tree storage architecture, OceanBase designs its own cost model, collects statistics, and uses a cost-based optimizer. This means that OceanBase can use statistical information to calculate the optimal access path for each SQL statement and create an optimal execution plan. OceanBase can also bind fixed execution plans online on demand, which is convenient in scenarios that require an emergency response or greater efficiency. In terms of executors, OceanBase supports the nested loop join, hash join, and merge join methods to improve join efficiency for large tables. It also supports concurrent execution and distributed SQL statements.

OceanBase is a distributed relational database that conforms to the principles of atomicity, consistency, isolation, and durability (ACID). In addition to traditional ACID, OceanBase emphasizes another "A", availability, to provide AACID. Multi-replica log replication based on the Paxos protocol enables business continuity without data loss when a single point of failure occurs. For consistency, OceanBase adopts multi-version concurrency control (MVCC). When a data block is updated, OceanBase creates a new version of the block that is tagged with the transaction ID. Until the transaction commits, only SQL statements within that transaction can access the new version; uncommitted data cannot be accessed by other sessions. For isolation, OceanBase supports two transaction isolation levels, read committed and serializable, which provides sound compatibility with Oracle. For durability, like most traditional databases, OceanBase uses write-ahead logging: when a transaction is committed, the redo log is written before the data is written. This prevents data inconsistency in the event of exceptions.
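The visibility rule for uncommitted data can be sketched with a toy versioned store. This is a generic illustration of MVCC with read-committed visibility, not OceanBase's implementation. The `MVCCStore` class and its methods are hypothetical.

```python
class MVCCStore:
    def __init__(self):
        self.committed = {}    # key -> list of (commit_version, value), ascending
        self.uncommitted = {}  # txn_id -> {key: value} private to that transaction
        self.version = 0       # global commit version counter

    def begin(self, txn_id):
        self.uncommitted[txn_id] = {}

    def write(self, txn_id, key, value):
        """Create a new version that is tagged with the writer's transaction."""
        self.uncommitted[txn_id][key] = value

    def read(self, key, txn_id=None):
        """A transaction sees its own writes; others see only committed data."""
        if txn_id is not None and key in self.uncommitted.get(txn_id, {}):
            return self.uncommitted[txn_id][key]
        versions = self.committed.get(key, [])
        return versions[-1][1] if versions else None

    def commit(self, txn_id):
        """Publish the transaction's versions under a new commit version."""
        self.version += 1
        for key, value in self.uncommitted.pop(txn_id).items():
            self.committed.setdefault(key, []).append((self.version, value))


if __name__ == "__main__":
    store = MVCCStore()
    store.begin(1)
    store.write(1, "balance", 100)
    print("other session sees:", store.read("balance"))
    print("writer sees:", store.read("balance", txn_id=1))
    store.commit(1)
    print("after commit:", store.read("balance"))
```

Because old committed versions are kept in the list, a reader never blocks on a writer. It simply reads the latest committed version.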

In terms of security, OceanBase implements a variety of protection measures to maximize data security. For example, individual tenants can enable a recycle bin. When the recycle bin is enabled, data is not deleted immediately by drop table or truncate operations but is moved to the recycle bin instead. You can run the flashback command to restore a table while it is still within its retention period in the recycle bin, which reduces the risk posed by accidental operations.

For data modification operations such as delete and update, OceanBase supports flashback queries to restore data to a specific point in time. This provides data recovery in the event of incorrect SQL execution during business and O&M processes. Oracle tenants can also perform as-of-timestamp or SCN queries.

In October 2019, OceanBase ranked first in the TPC-C performance benchmark, setting a world record of 60.88 million tpmC, twice that of the previous record holder, Oracle. In November of the same year, OceanBase handled a peak of 61 million requests per second for Alipay during the Double 11 Shopping Festival, once again breaking a world record. OceanBase has proven itself in extreme business scenarios, showing that a distributed database can rival a centralized database in performance, reliability, and availability.

Traditional commercial databases such as Oracle, SQL Server, and DB2 rely on high-end hardware (minicomputers, storage, and optical fiber networks). OceanBase only requires commodity PC servers with SSDs and a 10 GE network connection. It also provides a high storage compression ratio.

After OceanBase databases are migrated to Alibaba Cloud, the database is billed by specification and the migration service is billed by the hour. The other management platforms (such as OCP, ODC, and OTA) are free of charge. You can use OCP to manage clusters, tenants, and database users and to monitor tenant and node performance. ODC makes it easy to manage and maintain database objects, such as tables, views, functions, and stored procedures, and its SQLConsole makes it easy to perform database operations. OTA promptly detects problematic SQL statements in the business database, provides optimization suggestions, and binds execution plans. Together, these platforms simplify the O&M process.

In the future, OceanBase will provide more practical and efficient features to meet your needs. Meanwhile, peripheral ecosystem products will provide more comprehensive functionality.

Completely developed in-house, OceanBase is now available for external use. To try it out, visit: https://www.alibabacloud.com/products/oceanbase
