Conventional big data architectures are under growing pressure from the increasingly diverse analytic requirements of modern enterprises. With the development and popularization of cloud technologies, real-time data lakes in the cloud have become a popular solution and typically serve as an enterprise's big data platform. This article describes how you can build a real-time enterprise-level data lake in the cloud by using Alibaba Cloud's Data Lake Analytics (DLA) and Apache Hudi.

In the big data era, traditional data warehouses can no longer meet the requirements for storing data in diverse formats, such as structured, semi-structured, and unstructured data. They also fail to meet a range of other requirements posed by upper-layer applications, such as interactive analytics, streaming analytics, and machine learning. The "T+1" delay of data warehousing, in which data becomes available for analysis only the day after it is generated, leads to a significant delay in analytics and keeps companies from extracting value from their data in time. Meanwhile, with the evolution of cloud computing technologies and the affordability of cloud object storage services, more and more companies are building data lakes in the cloud. However, traditional data lakes do not support the Atomicity, Consistency, Isolation, Durability (ACID) properties of database transactions. As a result, their tables cannot handle data updates and deletions, which keeps data lakes from reaching their full potential. To help companies gain faster insight into their data while supporting ACID transactions, we need a real-time data lake as the big data processing architecture that meets the various analytic requirements of upper-layer applications.

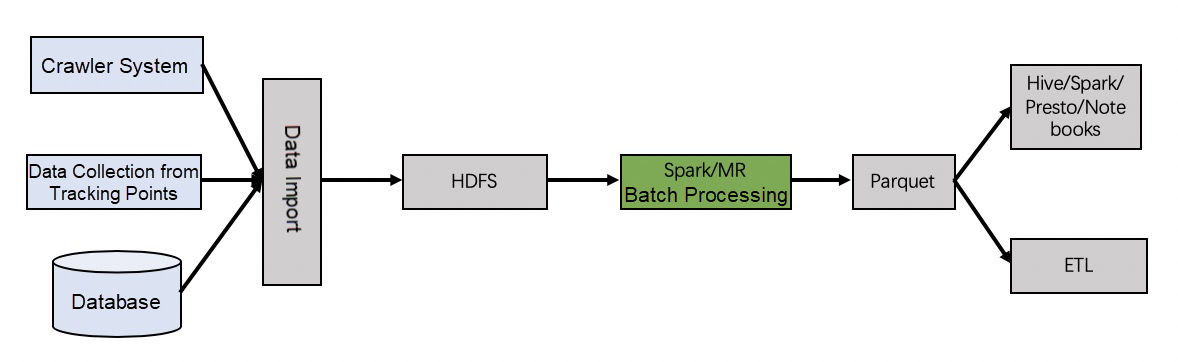

In the big data era, big data analytics platforms led by the Hadoop system have gradually shown their advantages as the surrounding ecosystem has matured. The Hadoop system provides an effective solution to the bottlenecks of traditional data warehouses.

Traditional batch processing has high latency. As the data size grows, the following problems emerge.

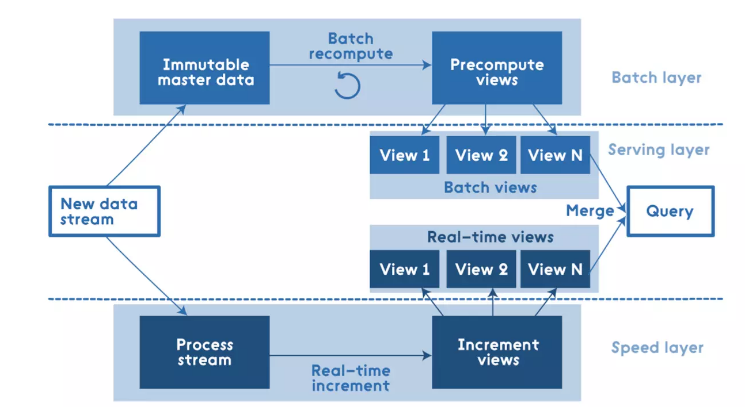

Batch processing delivers high data quality but at the cost of significant latency. The Lambda architecture, which is widely used in the industry, addresses this problem by aiming for both low latency and high stability.

In the Lambda architecture, a copy of the data is sent to two layers for separate processing: the speed layer, which uses stream processing to generate a real-time incremental view, and the batch layer, which uses batch processing to generate a stable and reliable historical view. When an upper-layer application queries the data, Lambda merges the incremental view and the historical view and returns a complete view, which keeps query latency low. However, this architecture requires you to maintain two data copies, store two sets of results, and run multiple processing frameworks, which increases system maintenance costs.
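The query-time merge described above can be illustrated with a minimal sketch. It assumes both views are simple per-key counters held in memory; the function name `merge_views` and the sample data are hypothetical and are not part of any Lambda framework.

```python
from typing import Dict


def merge_views(batch_view: Dict[str, int], realtime_view: Dict[str, int]) -> Dict[str, int]:
    """Return a complete view by adding real-time increments to the batch totals.

    Assumption: each view maps a key to a count, and the real-time view only
    covers events that arrived after the last batch run.
    """
    merged = dict(batch_view)  # copy so the historical view stays untouched
    for key, delta in realtime_view.items():
        merged[key] = merged.get(key, 0) + delta
    return merged


# Historical view produced by the batch layer (hypothetical page-view counts).
batch_view = {"page_a": 100, "page_b": 40}
# Incremental view produced by the speed layer since the last batch run.
realtime_view = {"page_b": 3, "page_c": 1}

complete_view = merge_views(batch_view, realtime_view)
print(complete_view)  # {'page_a': 100, 'page_b': 43, 'page_c': 1}
```

Even in this toy form, the two views must be produced and stored separately before they can be merged, which is the maintenance overhead mentioned above.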

So, can we build a scalable real-time data lake that delivers low data latency and lighter system O&M while avoiding the disadvantages of traditional HDFS solutions? The answer is yes. By using Alibaba Cloud Data Lake Analytics (DLA) and Apache Hudi, we can easily build a real-time data lake.

The DLA + Hudi solution enables you to easily build a real-time data lake for analytics in Alibaba Cloud Object Storage Service (OSS).

The following steps demonstrate a typical data process in a company.

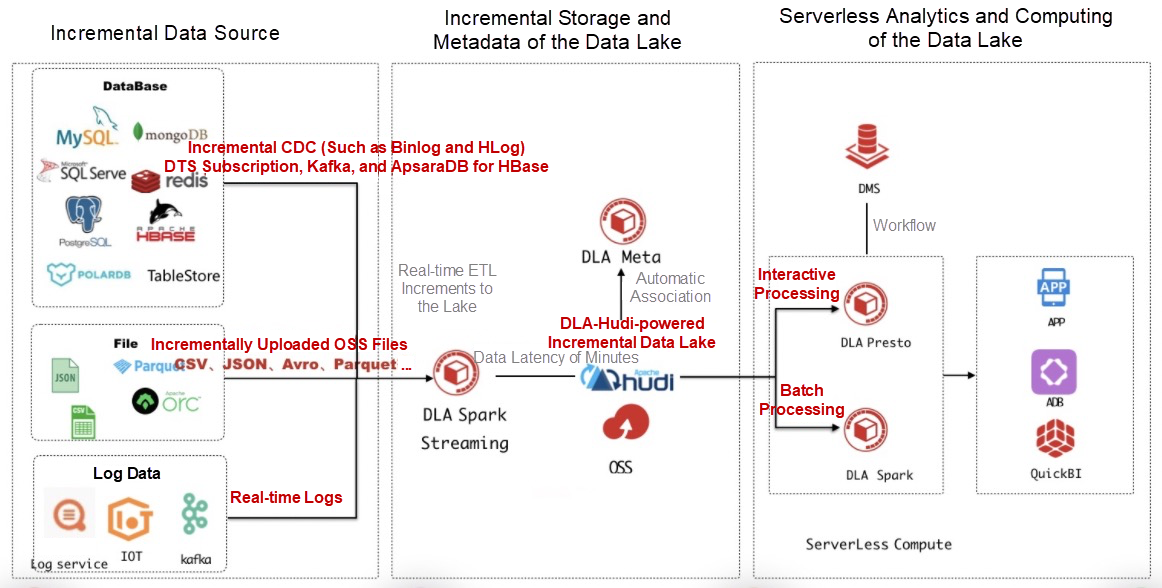

With Hudi integrated and DLA's built-in, out-of-the-box Spark capabilities, you can quickly build a Hudi data lake in DLA. The following figure shows the architecture.

Users consume input data by using DLA's Spark Streaming extension. The results are then written into OSS as Hudi increments, and the metadata is automatically synchronized to DLA Meta. This solution also supports user-created Spark clusters. In that scenario, you only need to write input data into OSS in the Hudi format and have the data automatically associated with DLA Meta. Then, you can use DLA SQL for online interactive analytics, or use DLA Spark for machine learning and offline analytics. Both approaches greatly lower the barrier to using DLA and reflect its openness. Real-time data lakes based on DLA and Hudi have the following advantages.

In the next section, we'll discuss the details of DLA and Apache Hudi.

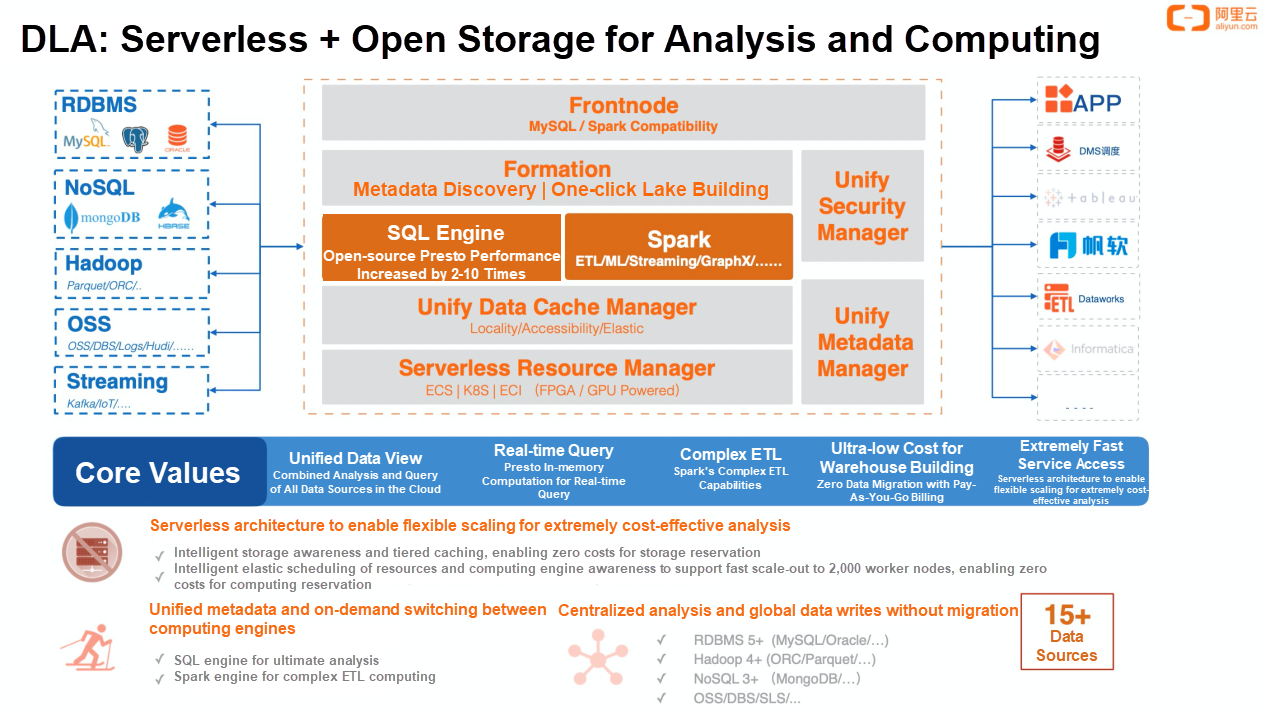

As a core database service independently developed by Alibaba Cloud, Data Lake Analytics (DLA) is a next-generation cloud-native analytics platform. DLA supports open computing, the MySQL protocol, and the Presto and Spark engines. It offers low cost and serverless operation with no management overhead, and provides unified metadata to present a unified data view. DLA serves thousands of Alibaba Cloud customers.

For more information, visit https://www.alibabacloud.com/product/data-lake-analytics

DLA's serverless capabilities free companies from high O&M costs and from the complexity of scaling in or out as data volumes peak and dip. The service is billed on a pay-as-you-go basis with no ownership overhead. DLA does not store user data separately. Instead, user data is stored in OSS in an open format, and you only need to associate the metadata with DLA Meta to analyze the data by using DLA SQL or to perform complex extract, transform, and load (ETL) operations by using DLA Spark.
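As a rough illustration of storing data in OSS in the Hudi format with Spark, the sketch below builds a set of Hudi write options and passes them to a Spark DataFrame writer. The option keys are standard Apache Hudi Spark datasource settings, but the table name, field names, and the `oss://` path are hypothetical, and the helper functions are assumptions made for this example rather than part of DLA. The sketch assumes a Spark session with the Hudi bundle available, and it does not cover synchronizing metadata to DLA Meta.

```python
from typing import Dict


def hudi_write_options(
    table_name: str,
    record_key: str,
    precombine_field: str,
    partition_field: str,
    operation: str = "upsert",
) -> Dict[str, str]:
    """Build a minimal set of Hudi Spark datasource write options."""
    return {
        "hoodie.table.name": table_name,
        # Column that uniquely identifies a record.
        "hoodie.datasource.write.recordkey.field": record_key,
        # Column used to pick the latest version when keys collide.
        "hoodie.datasource.write.precombine.field": precombine_field,
        # Column used to lay out partitions under the base path.
        "hoodie.datasource.write.partitionpath.field": partition_field,
        # Write operation, for example "upsert", "insert", or "delete".
        "hoodie.datasource.write.operation": operation,
    }


def write_to_lake(df, base_path: str, options: Dict[str, str]) -> None:
    """Append a Spark DataFrame to a Hudi table stored under base_path.

    Assumption: df is a pyspark.sql.DataFrame and the Hudi Spark bundle is on
    the classpath, so that format("hudi") resolves.
    """
    df.write.format("hudi").options(**options).mode("append").save(base_path)


# Hypothetical table and field names, for illustration only.
options = hudi_write_options("orders", "order_id", "updated_at", "dt")
# With a real Spark DataFrame named orders_df, the write would look like:
# write_to_lake(orders_df, "oss://example-bucket/hudi/orders", options)
```

Keeping the options in a plain dictionary makes it easy to reuse the same settings for batch and streaming writes.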

Apache Hudi is a processing framework for incremental data lakes and supports data insertion, update, and deletion. You can use it to manage ultra-large datasets on distributed file systems such as HDFS and on cloud storage such as OSS and S3. Apache Hudi has the following key features.

For more information, visit https://hudi.apache.org/
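To make the insert, update, and delete semantics mentioned above more concrete, here is a toy in-memory model. It is not Hudi's implementation: the `apply_changes` function, the `deleted` flag, and the `id` and `ts` field names are hypothetical, and the model only shows the general idea of merging changes by record key while preferring the newest version.

```python
from typing import Any, Dict, Iterable

Record = Dict[str, Any]


def apply_changes(
    table: Dict[Any, Record],
    changes: Iterable[Record],
    key: str = "id",
    ordering: str = "ts",
) -> Dict[Any, Record]:
    """Apply inserts, updates, and deletes to a keyed table and return a new table.

    A change replaces the stored record with the same key unless the change is
    older than what is stored. A change carrying deleted=True removes the key.
    """
    result = dict(table)
    for change in changes:
        record_key = change[key]
        current = result.get(record_key)
        if current is not None and change[ordering] < current[ordering]:
            continue  # stale change: keep the newer stored version
        if change.get("deleted", False):
            result.pop(record_key, None)  # delete
        else:
            # insert or update, dropping the helper flag from the stored record
            result[record_key] = {f: v for f, v in change.items() if f != "deleted"}
    return result


table = {
    1: {"id": 1, "name": "a", "ts": 1},
    2: {"id": 2, "name": "b", "ts": 1},
}
changes = [
    {"id": 1, "name": "a2", "ts": 2},      # update
    {"id": 2, "ts": 2, "deleted": True},   # delete
    {"id": 3, "name": "c", "ts": 2},       # insert
    {"id": 1, "name": "stale", "ts": 1},   # late, older update that is ignored
]
print(apply_changes(table, changes))
```

In this model, a traditional append-only data lake would correspond to only ever adding new records. Handling the update and delete cases is what the article refers to as support for data updates and deletions.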

For more information about how to build a real-time data lake demo by using DLA and Hudi, please refer to the following guide: Build a real-time data lake by using DLA and DTS to synchronize data from ApsaraDB RDS.

This article introduces the data lake concept and common big data solutions. It also describes the real-time data lake solution of Alibaba Cloud, which uses DLA and Hudi in combination to quickly build a quasi-real-time data lake for data analytics, and explains the advantages of the solution. In the last section, the article provides a simple demo to show how to integrate DLA and Hudi.
