By Zehuan Shi

A service should actively reject excess requests once its load exceeds its capacity, so that the service itself does not fail. The traditional way to achieve this is circuit breaking. Basic circuit breaking can be configured through a service mesh DestinationRule. However, this requires the user to provide a threshold that triggers circuit breaking, such as a specific number of pending requests, and the service mesh data plane rejects requests once traffic exceeds that threshold. This approach requires operations and maintenance (O&M) personnel to know or estimate the service's capacity in advance and configure the circuit breaker accordingly. In many cases, however, the capacity is difficult to estimate accurately, especially for O&M personnel who did not develop the service. Reaching a reasonable setting often takes several rounds of tuning based on how the service behaves in production. In addition, when developers upgrade or change a component, previously measured results may no longer be valid. Some well-known solutions to this problem exist at the language-framework level, such as Netflix's open-source concurrency-limits library.
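To make the limitation of a static threshold concrete, here is a minimal Python sketch, assuming a simplified model in which a pending-request counter is compared with a fixed `max_pending` value. The class and method names are hypothetical and are not Istio or Envoy APIs; in Istio the equivalent threshold would come from DestinationRule connection pool settings such as `http1MaxPendingRequests`.

```python
from dataclasses import dataclass


@dataclass
class StaticCircuitBreaker:
    """Hypothetical model of a static circuit breaker: requests are rejected
    once the number of pending requests reaches a fixed threshold."""

    max_pending: int  # must be estimated in advance by O&M personnel
    pending: int = 0

    def try_admit(self) -> bool:
        # Reject as soon as the fixed threshold is reached.
        if self.pending >= self.max_pending:
            return False
        self.pending += 1
        return True

    def complete(self) -> None:
        # A pending request finished, so its slot becomes free again.
        self.pending -= 1


breaker = StaticCircuitBreaker(max_pending=2)
results = [breaker.try_admit() for _ in range(3)]
print(results)  # [True, True, False]
```

Whatever value `max_pending` holds, it stays fixed: if the service becomes faster or slower after an upgrade, the threshold has to be re-estimated by hand, which is exactly the problem adaptive concurrency limits address.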

As a popular cloud-native project, Envoy provides adaptive concurrency limiting through the Adaptive-Concurrency Filter. At runtime, Envoy continuously samples the service's response time under the current concurrency limit. It also periodically lowers the concurrency limit to a small value and samples the ideal response time. By comparing the actual response time under the current limit with the ideal response time, Envoy can determine whether, and by how much, the current concurrency exceeds the service's capacity. Envoy then dynamically adjusts the concurrency limit to keep it as close as possible to what the service can handle, and rejects requests that exceed the limit by returning HTTP 503 with the error message "reached concurrency limit". This is how Envoy protects the service.
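A minimal Python sketch of the admission side described above, assuming a simple in-process model. The class and method names are hypothetical, and the median stands in for how sampleRTT is summarized (Envoy aggregates latency samples with a configurable percentile). Requests beyond the current limit are rejected with 503 and the message quoted in the text; `set_limit` is where a controller would apply each newly calculated limit, or temporarily lower it while re-measuring minRTT.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class AdaptiveLimiter:
    """Hypothetical admission gate whose limit is adjusted by a controller."""

    limit: int
    in_flight: int = 0
    latencies_ms: list[float] = field(default_factory=list)

    def on_request(self) -> tuple[int, str]:
        # Reject when the current concurrency limit is already reached.
        if self.in_flight >= self.limit:
            return 503, "reached concurrency limit"
        self.in_flight += 1
        return 200, "forwarded"

    def on_response(self, latency_ms: float) -> None:
        # Free the slot and record the latency sample for the current window.
        self.in_flight -= 1
        self.latencies_ms.append(latency_ms)

    def take_sample_rtt(self) -> float:
        # Summarize the window (median here) and start a new window.
        rtt = statistics.median(self.latencies_ms)
        self.latencies_ms.clear()
        return rtt

    def set_limit(self, new_limit: int) -> None:
        # A controller calls this with each newly calculated limit, or with a
        # small value while re-measuring minRTT.
        self.limit = new_limit


limiter = AdaptiveLimiter(limit=2)
codes = [limiter.on_request()[0] for _ in range(3)]
print(codes)  # [200, 200, 503]
limiter.on_response(10.0)
limiter.on_response(30.0)
sample = limiter.take_sample_rtt()
print(sample)  # 20.0
```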

When Envoy is used as a service mesh Sidecar or gateway, applications written in any language benefit from adaptive concurrency limits without any code changes. This greatly reduces the O&M burden.

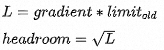

Next, let's explore the algorithm of the Adaptive-Concurrency Filter to understand its core mechanism for calculating concurrency limits. In order to dynamically calculate the concurrency value, we need to continuously measure the actual response time and the ideal response time and compare the two values. For simplicity, we refer to them as sampleRTT and minRTT respectively. The larger the difference between sampleRTT and minRTT, the more severe the service overload. We can use the ratio of the two values to quantitatively reflect this degree, which we call the gradient. A larger gradient indicates a more severe service overload. It's important to note that when the ratio of sampleRTT to minRTT is greater than 1, it means that the service exceeds the load. However, when it is less than 1, it does not necessarily mean that the service is idle. This is because minRTT is already the minimum response time. If the measured response time is smaller than the minimum response time, it can be attributed to normal network fluctuations rather than changes in the service load. (This assumption holds true unless the measured minRTT is unreliable. For example, if the concurrency value during minRTT sampling is configured to be too large and exceeds the service's load.) To tolerate network fluctuations within a certain range, we add a small threshold to sampleRTT when calculating the gradient. This threshold should be similar to the reasonable range of network latency fluctuations. This way, we can try our best to avoid situations where the ratio of sampleRTT to minRTT is less than 1, which we refer to as B. This leads us to the following formula:

With this formula, we can roughly determine the service overload situation. If the gradient ≈ 1, it indicates that the application has not reached its bottleneck yet. We can increase the load until the calculated gradient is greater than 1, and then reduce the load. By repeating this process, the pressure on the application will always be controlled near the bottleneck.

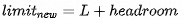

If the measured load indicates that the service still has room for improvement, we need to put pressure on the application, which means amplifying the concurrency limit to maximize its performance. The incremental value is called headroom. The following algorithm is used by Envoy to calculate the headroom. The limit(old) value represents the limit obtained in the last calculation.

Finally, L is added to headroom to obtain the new concurrency limit.
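The limit calculation can be sketched in Python as follows. This is a simplified model, not Envoy's source code: it assumes B is expressed as a fraction of minRTT (Envoy exposes a percentage `buffer` setting for this), and it clamps the gradient to [0.5, 2.0] and the result to a minimum and maximum limit, which we believe mirrors Envoy's implementation but should be verified against the version you run. The defaults `buffer_pct=0.25`, `min_limit=3`, and `max_limit=1000` are illustrative assumptions, and the measurement of sampleRTT and minRTT is left out.

```python
import math


def new_concurrency_limit(
    limit_old: float,
    min_rtt: float,
    sample_rtt: float,
    buffer_pct: float = 0.25,  # assumption: B = minRTT * buffer_pct
    min_limit: int = 3,        # illustrative lower bound
    max_limit: int = 1000,     # illustrative upper bound
) -> int:
    b = min_rtt * buffer_pct
    # gradient = (minRTT + B) / sampleRTT; below 1 means overload.
    gradient = (min_rtt + b) / sample_rtt
    # Clamp so a single window cannot change the limit too drastically.
    gradient = max(0.5, min(2.0, gradient))
    scaled = gradient * limit_old   # L = gradient * limit(old)
    headroom = math.sqrt(scaled)    # headroom = sqrt(L)
    new_limit = int(scaled + headroom)
    # Keep the result within the configured bounds.
    return max(min_limit, min(max_limit, new_limit))


print(new_concurrency_limit(100, 10.0, 10.0))  # 136
print(new_concurrency_limit(100, 10.0, 25.0))  # 57
```

With limit(old) = 100 and minRTT = 10 ms, a sampleRTT of 10 ms gives a gradient of 1.25 and a new limit of 136, while a sampleRTT of 25 ms gives a gradient of 0.5 and a new limit of 57. Because headroom is always added, the limit keeps growing while the gradient stays near or above 1 and shrinks only once the gradient falls sufficiently below 1.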

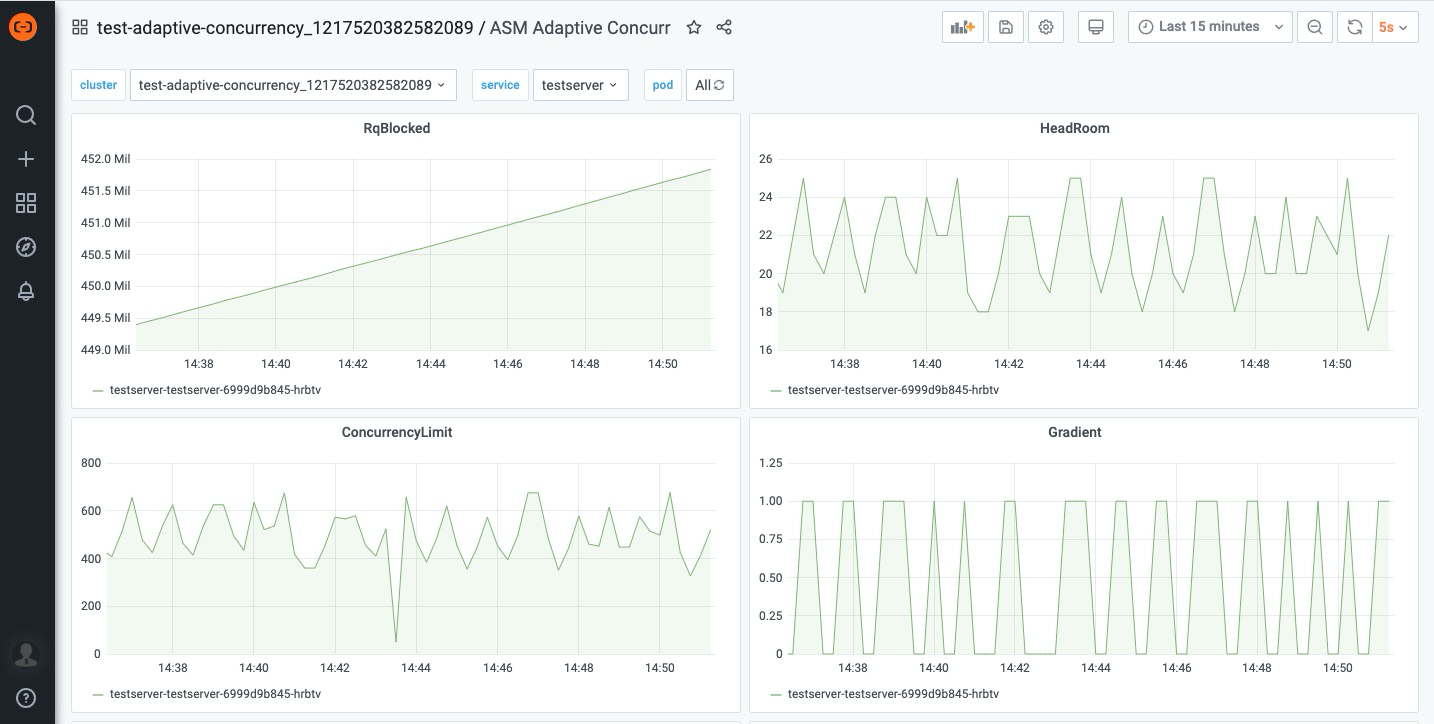

We tested a cluster with AdaptiveConcurrency enabled by applying load far beyond the application's capacity. On the dashboard, ConcurrencyLimit is held to a range of 400 to 600, indicating that the application's capacity lies roughly between 400 and 600 concurrent requests. The RqBlocked panel shows the number of rejected requests; its curve keeps growing, which matches our expectations.

This article briefly explains the principle of the Adaptive-Concurrency Filter in Envoy. Adaptive concurrency limiting greatly reduces the O&M burden and gives O&M teams more confidence, making it a good choice in many scenarios. However, Istio, the most common scenario in which Envoy is used, does not currently support configuring AdaptiveConcurrency directly, and configuring the filter through the Istio EnvoyFilter API can be relatively complex. To address this, Alibaba Cloud Service Mesh (ASM) provides the ASMAdaptiveConcurrency API, which lets users focus on the relevant business parameters and configure adaptive concurrency limits without learning the configuration rules of Envoy filters. Feel free to give it a try.
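For a sense of what has to be written at the Envoy level, the sketch below builds the HTTP filter entry for the Adaptive-Concurrency Filter as a Python dict and prints it as JSON. The field names follow the `envoy.extensions.filters.http.adaptive_concurrency.v3` API as we understand it and should be checked against the Envoy documentation for your version; the values are examples, not recommendations. In Istio, this entry would additionally have to be wrapped in an EnvoyFilter resource whose `configPatches` insert it into the HTTP filter chain, which is the complexity the ASMAdaptiveConcurrency API is meant to hide.

```python
import json

# Illustrative Envoy HTTP filter entry; values are examples only.
adaptive_concurrency_filter = {
    "name": "envoy.filters.http.adaptive_concurrency",
    "typed_config": {
        "@type": "type.googleapis.com/envoy.extensions.filters.http.adaptive_concurrency.v3.AdaptiveConcurrency",
        "gradient_controller_config": {
            # Percentile used to summarize latency samples into sampleRTT.
            "sample_aggregate_percentile": {"value": 90},
            # How often the concurrency limit is recalculated.
            "concurrency_limit_params": {"concurrency_update_interval": "0.1s"},
            # How often, and over how many requests, minRTT is re-measured.
            "min_rtt_calc_params": {
                "jitter": {"value": 10},
                "interval": "60s",
                "request_count": 50,
            },
        },
        # Runtime flag that can switch the filter on or off.
        "enabled": {"default_value": True, "runtime_key": "adaptive_concurrency.enabled"},
    },
}

print(json.dumps(adaptive_concurrency_filter, indent=2))
```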
