Abstract: How should we design the architecture of a big data platform? Are there any good use cases for this architecture? This article studies the case of OpSmart Technology to elaborate on the business and data architecture of Internet of Things for enterprises, as well as considerations during the technology selection process.

Based on the "Internet + big data + airport" model, OpSmart Technology provides on-the-go wireless network connectivity to 640 million users every year. As the business expanded, OpSmart Technology faced the challenge of rapidly growing data volumes. To cope with this, OpSmart Technology took the lead in 2016 by building an industry-leading big data platform on Alibaba Cloud products.

Below are some tips shared by OpSmart Technology's big data platform architect:

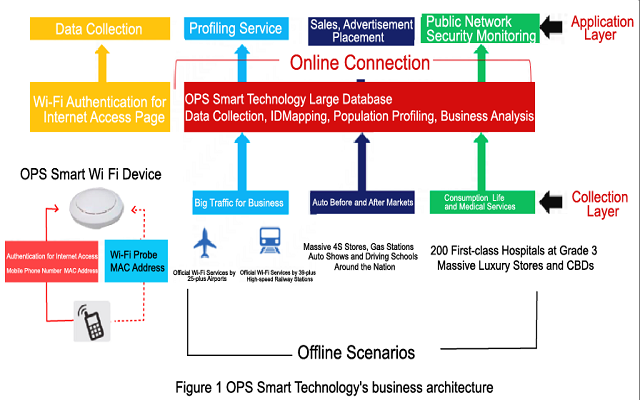

OpSmart Technology's business architecture is shown in the figure above. Our primary business model is to collect data through our own devices, explore value in the data, and then apply the data to our business.

On the data collection layer, we founded the first official Wi-Fi brand for airports in China, "Airport-Free-WiFi", which covers 25 hub airports and 39 hub high-speed rail stations nationwide and provides on-the-go wireless network services to 640 million people each year. We also run the nation's largest Wi-Fi network for driving schools, which is expected to cover 1,500-plus driving schools by the end of 2017. We are the Wi-Fi provider for China's four major auto shows (Beijing, Shanghai, Guangzhou, and Chengdu), serving more than 1.2 million people. In addition, we run the Wi-Fi network for 2,000-plus gas stations and 600-plus automobile 4S (sales, spare parts, service, survey) stores across the country.

On the data application layer, we connected online and offline behavioral data for user profiling to provide more efficient and precise advertisement targeting including SSP, DSP, DMP and RTB. We also worked with the Ministry of Public Security to eliminate public network security threats.

OpSmart Technology's big data and advertising platforms also offer technical capabilities for enterprises to help them establish their own big data platforms and improve their operation management efficiency with a wealth of quantitative data.

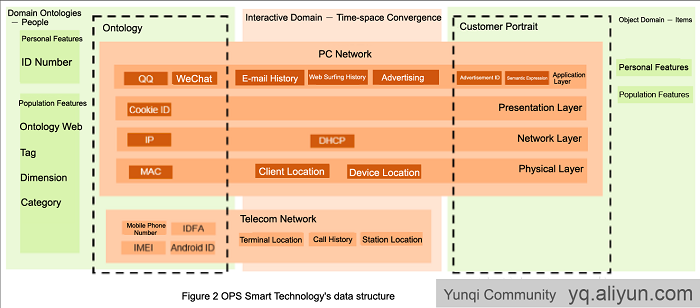

We abstracted our data architecture, which contains a number of themes as shown in the figure. The subject in the figure can be understood as users, and the object can be understood as things. The subject and object are connected through various forms. Such connections are established in time and space and are completed through computer and telecommunication networks. The subject has its own reflection in the connection network, which can be understood as a virtual identity (Avatars). The object also has its own reflection in the connection network, such as the Wikipedia description of a topic, or a commercialized product or service. These reflections are then packaged by advertisements as an advertising image. All these are object mirrors. The interaction between the subject and the object is actually the interaction between the subject image and the object image, and such interactions leave traces in both time and space.

The individual and group characteristics of the subject and object, as well as the subject-object relationships, all constitute big data. Through in-depth mining and learning, this information will give birth to powerful insights and have immeasurable value to businesses.

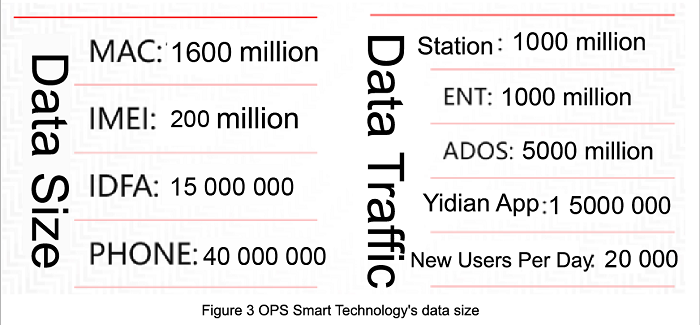

The data sizes of OpSmart Technology in the subject domain and the interaction domain are as follows:

Next let's move to our ideas about technology selection. I think that there is no best technical architecture, only the most appropriate architecture for our applications. Successful IT planning means starting from the business structure and providing the most appropriate technical architecture for each specific business scenario.

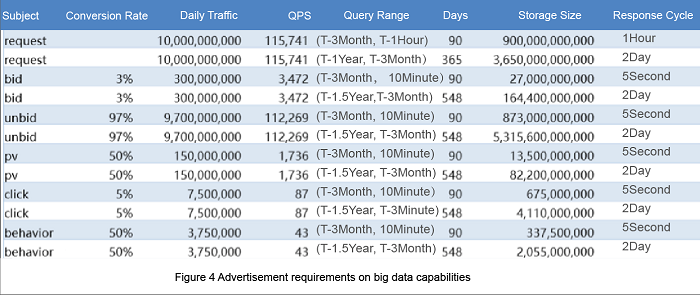

First, let's take a look at our functional requirements. Take our advertising business for example. Our goal is to handle 10 billion messages a day. The requirements for big data capabilities are as follows:

Let's assume that each record is 2 KB and that 70 PB of physical capacity is required to accommodate the data. Based on the query range requirements, we can then infer that the processing window is 24 hours for offline computing and 10 minutes for online computing.
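The sizing arithmetic can be sketched as follows. This is only a rough illustration: the message rate and record size come from the text, while the retention period and replication factor are placeholder assumptions that the text does not state, so the result is not meant to reproduce the 70 PB figure.

```python
# Rough capacity estimate for the advertising workload.
# From the article: 10 billion messages per day, 2 KB per record.
# ASSUMPTIONS (hypothetical, not from the article): retention_days and
# replication_factor passed to physical_capacity_bytes below.

MESSAGES_PER_DAY = 10_000_000_000   # from the article
RECORD_SIZE_BYTES = 2 * 1000        # 2 KB, using decimal units for simplicity

TB = 1000 ** 4
PB = 1000 ** 5


def daily_volume_bytes(messages_per_day: int, record_size_bytes: int) -> int:
    """Raw data produced per day, before any replication or compression."""
    return messages_per_day * record_size_bytes


def physical_capacity_bytes(daily_bytes: int, retention_days: int,
                            replication_factor: int) -> int:
    """Physical storage needed to keep `retention_days` of data,
    with each byte stored `replication_factor` times."""
    return daily_bytes * retention_days * replication_factor


daily = daily_volume_bytes(MESSAGES_PER_DAY, RECORD_SIZE_BYTES)
print(f"Daily raw volume: {daily / TB:.1f} TB")

# Placeholder inputs: one year of retention, three replicas.
example = physical_capacity_bytes(daily, retention_days=365, replication_factor=3)
print(f"Example physical capacity: {example / PB:.1f} PB")
```

Changing the two placeholder inputs shows how strongly the physical capacity depends on retention and redundancy choices, which is why those requirements need to be fixed before comparing storage engines.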

• We hope to outsource the infrastructure installation and O&M through the cloud platform.

• Big data technology is changing, and we hope component versions can be updated quickly.

• The external business environment is changing rapidly and it is hoped that computing resources can be dynamically increased or decreased to save costs.

• We hope to acquire professional security services at a lower cost.

• We anticipate more use of open-source components to facilitate overall output.

We finally settled on Alibaba Cloud, especially its E-MapReduce products, after comprehensive inspection of domestic cloud service providers. The cluster is ready shortly after purchase, and Hive, Spark, HBase, and other open-source big data components are available immediately.

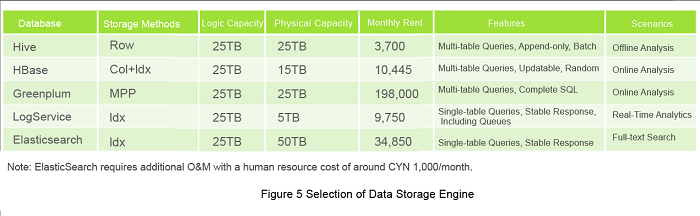

First, we had to select the data storage engine.

We evaluated the performance and price of each option, using 25 TB of stored data as the benchmark. As the figure shows, for offline analysis you can consider the Hive on OSS mode, which uses open-source components to store the data of the past year. For online analysis, using HBase to store the data of the last three months is highly cost-effective. This solution also supports joint queries across multiple tables, but SQL response times vary with query complexity. If you want a consistent response time, consider the index-based solution, that is, Log Service. Its shortcoming is the lack of joint queries across multiple tables. If you prefer open-source components, you can build ELK on ECS yourself.

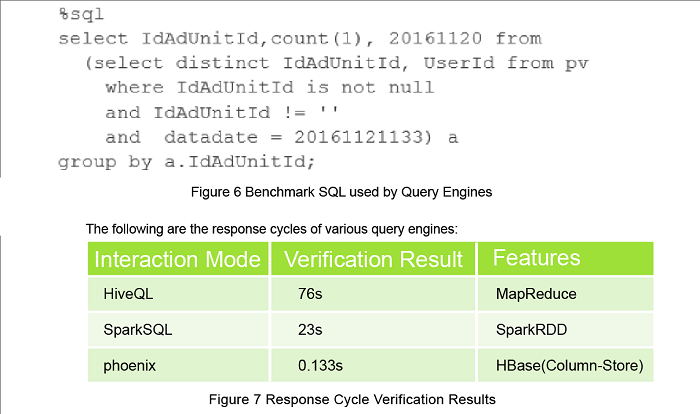

Next we chose the query engine. We used a benchmark SQL to facilitate horizontal comparisons of response time. The benchmark SQL statement is shown in the figure below:

Based on the findings, we concluded that using HBase-based Phoenix for interactive queries delivered a satisfactory response time.

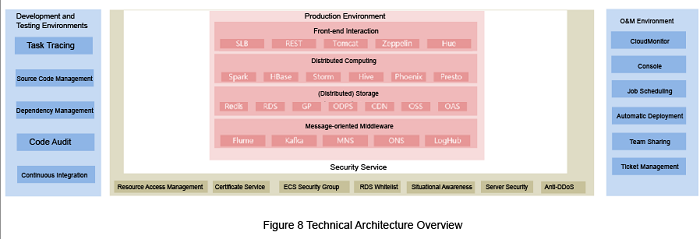

That's it for the technology selection part. Now let's look at the big data platform technical architecture.

The specific Alibaba Cloud products used in our architecture are summarized as follows:

E-MapReduce

Alibaba Cloud's E-MapReduce is the core product of our big data platform, which covers Hive, Spark, HBase, Storm, and other core open-source components in the big data field, as well as industry-leading query engines such as Phoenix and Presto. The Zeppelin, Hue, and other interactive components are also out-of-the-box software.

E-MapReduce releases new versions frequently, and its components are constantly updated. However, a purchased E-MapReduce cluster cannot be upgraded conveniently. To stay up to date, we chose a monthly subscription rather than an annual one. When the monthly resources expire, we purchase new resources at the newer version, and the old resources are destroyed automatically if not renewed. E-MapReduce supports adding nodes but does not allow removing them. With this rolling mode, we can also adjust the cluster size and configuration at any time.

The rolling mode above works for the computing cluster. But what about data storage? The machines used for E-MapReduce all have high configurations, and using them only to store data would be a waste. Data can be stored in OSS and loaded with Hive. However, to use HBase you still need to store data on E-MapReduce, and once the data is on E-MapReduce, the cluster cannot be destroyed at will. Therefore, we separated the data cluster from the computing cluster, so that the computing cluster can be destroyed and upgraded at any time while the data cluster provides stable service over the long term. The two clusters have different configurations: the computing cluster uses SSDs for faster processing, while the data cluster (HBase) uses ultra cloud disks for larger capacity.

Then in what scenarios is the pay-as-you-go option used? According to our calculation, if the computing duration is longer than seven days, it would be more cost-effective to purchase monthly subscription clusters directly. Pay-as-you-go clusters can be used for temporary bursts of computing tasks.
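The break-even reasoning can be illustrated with a small sketch. The prices below are hypothetical placeholders, chosen only so that the break-even point lands at seven days as stated above; they are not actual Alibaba Cloud prices.

```python
# Compare pay-as-you-go with a monthly subscription for one cluster.
# ASSUMPTION: both prices are invented for illustration only.

MONTHLY_PRICE = 700.0        # hypothetical price of one monthly subscription
PAYG_PRICE_PER_DAY = 100.0   # hypothetical pay-as-you-go price per day


def break_even_days(monthly_price: float, payg_price_per_day: float) -> float:
    """Days of pay-as-you-go usage that cost as much as one monthly subscription."""
    if payg_price_per_day <= 0:
        raise ValueError("payg_price_per_day must be positive")
    return monthly_price / payg_price_per_day


def cheaper_option(days_needed: float, monthly_price: float,
                   payg_price_per_day: float) -> str:
    """Return the cheaper billing mode for a job that runs `days_needed` days
    within one month. Ties go to the monthly subscription."""
    payg_cost = days_needed * payg_price_per_day
    return "pay-as-you-go" if payg_cost < monthly_price else "monthly subscription"


print(break_even_days(MONTHLY_PRICE, PAYG_PRICE_PER_DAY))
print(cheaper_option(3, MONTHLY_PRICE, PAYG_PRICE_PER_DAY))
print(cheaper_option(10, MONTHLY_PRICE, PAYG_PRICE_PER_DAY))
```

With real price lists substituted for the two constants, the same comparison indicates whether a temporary burst of computing is short enough to justify a pay-as-you-go cluster.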

Ticket management

The ticket service was the most compelling reason for us to choose Alibaba Cloud. Our O&M team often encounters complicated issues that need urgent solutions. Team members can simply open a ticket to ask Alibaba Cloud engineers for help. Working through an issue together also teaches us new things, and we have learned a lot from Alibaba Cloud engineers.

Software overview

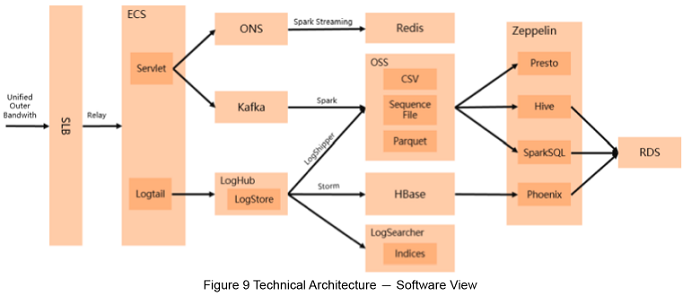

Based on the technical overview, the software design in our technical architecture is as follows:

Some of our implementations are summarized as follows:

We activated external network access for many ECS servers to facilitate management, but the actual usage rate is not high. The cost of external network bandwidth took up a large part of the ECS cost. Now we have disabled the external bandwidth for all ECS servers and route the traffic through the Server Load Balancer. The Server Load Balancer's external bandwidth is shared among all ECS servers. Requests to ports of all apps including the SSH ones are forwarded by the Server Load Balancer. The bandwidth of the Server Load Balancer is unlimited. The speed is faster and the cost is lower. We think using Server Load Balancer this way was clever.

Our business environment changes fast. Some machines that are useful today may become useless tomorrow. We adopted the monthly subscription + automatic renewal model to increase or decrease machines at any time to scale the configuration.

ONS is Alibaba Cloud's message service, known as MQ inside Alibaba. It responds quickly and has high throughput, so it suits highly real-time scenarios such as real-time bidding.

Log Service comprises the LogTail, LogStore, LogHub, LogShipper, and LogSearch services. Among them, LogShipper is very helpful: it automatically delivers collected logs to OSS so that you can load the data directly with Hive. However, this feature currently supports only the JSON and Parquet formats.

Although the official examples and the Alibaba Cloud documentation are based on Scala, we chose Java for Spark app development because it is more convenient for our development team. With Java 8, functional programming, especially lambda expressions, comes very close to Scala in terms of performance. At our suggestion, the new version of Alibaba Cloud's E-MapReduce now supports Java 8.

It is worth noting that it does not matter if your data is stored in MaxCompute. E-MapReduce provides the SparkSQL service to enable seamless access to data in MaxCompute. MaxCompute users can also join the Spark ecosystem.

E-MapReduce currently provides the Storm component. If you require this component, you have two options: consume data in the Log Service, or install Kafka on E-MapReduce following the pilot operations to support adding nodes.

Object Storage Service (OSS) is used for storage and achieves the separation of computing and storage by combining with E-MapReduce.

Zeppelin is really great. With it, business specialists can use HiveQL, SparkSQL, Phoenix, or Presto in a web form to run exploratory and interactive queries, without programming or SSH logon. In addition, the query history can be saved, and a simple bar chart or pie chart can be generated. Our DMP engineers no longer have to write code overnight just to implement a statistical query for a specific figure, and business specialists can get things done independently.

HBase is a NoSQL database, and structured queries are its weakness. We have many OLAP requirements that need interactive results. Our original practice was to create our own HBase secondary indexes and perform jump queries on non-primary-key fields. Later we found that Phoenix on E-MapReduce already provides this feature: the HBase index table generated by its index mechanism is exactly the index table we used to create manually. So we switched fully to Phoenix for interactive queries. On the old version of E-MapReduce, the default Phoenix query timeout was one minute, which was too short for us, and changing the parameter required restarting the service. At our suggestion, Phoenix in the new version of E-MapReduce now has a default timeout of half an hour.
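The idea behind a hand-built secondary index and a jump query can be sketched with a toy in-memory model. This is not HBase or Phoenix code: the class, the field names (`device_mac`, `airport`), and the sample rows are all hypothetical, and the dictionaries merely stand in for a data table and an index table.

```python
from collections import defaultdict


class IndexedTable:
    """Toy key-value table with a hand-maintained secondary index.

    `rows` plays the role of the data table (row key -> record) and
    `index` plays the role of the index table (field value -> row keys).
    """

    def __init__(self, indexed_field: str):
        self.indexed_field = indexed_field
        self.rows = {}
        self.index = defaultdict(set)

    def put(self, row_key: str, record: dict) -> None:
        # Keep the index consistent when an existing row is overwritten.
        old = self.rows.get(row_key)
        if old is not None:
            self.index[old[self.indexed_field]].discard(row_key)
        self.rows[row_key] = record
        self.index[record[self.indexed_field]].add(row_key)

    def get(self, row_key: str):
        """Primary-key lookup: the access path a key-value store is good at."""
        return self.rows.get(row_key)

    def find_by_field(self, value) -> list:
        """Jump query: look up row keys in the index, then fetch the rows."""
        row_keys = sorted(self.index.get(value, ()))
        return [self.rows[k] for k in row_keys]


# Hypothetical sample data for illustration only.
table = IndexedTable(indexed_field="airport")
table.put("u1", {"device_mac": "aa:01", "airport": "PEK"})
table.put("u2", {"device_mac": "aa:02", "airport": "SHA"})
table.put("u3", {"device_mac": "aa:03", "airport": "PEK"})

print(table.find_by_field("PEK"))
```

Without the index, finding rows by `airport` would require scanning every row. Maintaining the index on every write is the bookkeeping that an index mechanism such as the one in Phoenix takes over.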

Batch calculation: LogTail + LogHub + LogShipper + OSS + Hive + SparkSQL

Batch calculation focuses on data collection. LogTail configures the collection rules, LogShipper automatically delivers the data to the OSS, Hive directly loads the data to form a data warehouse, and SparkSQL enables direct query of data in Hive on the Zeppelin interface. The entire ETL process is very smooth, with almost no coding effort required.

Interactive calculation: LogTail + LogHub + Storm + HBase + Phoenix

For OLAP services with more stringent response time requirements, we can build an OLAP database centered on HBase. To shorten the time before data becomes available, we open a separate channel: we use LogTail to collect data, synchronize the data in LogHub to Storm, use Storm to convert the data and write it to HBase, and then use Phoenix for queries on the Zeppelin interface.

Real-time calculation: Servlet + ONS + Spark Streaming + Redis

For real-time bidding and other real-time computing businesses, we can take full advantage of ONS's ultra-fast response (within 1 ms) and high concurrency features, use Spark Streaming for computing and finally store the data in Redis.
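The three pipelines above can be summarized as a lookup keyed by how fresh the results must be. The component chains are taken from the text; the delay thresholds and function names are assumptions made for this sketch, not rules stated by the author.

```python
# Component chains as listed in the article.
PIPELINES = {
    "batch": ["LogTail", "LogHub", "LogShipper", "OSS", "Hive", "SparkSQL"],
    "interactive": ["LogTail", "LogHub", "Storm", "HBase", "Phoenix"],
    "real-time": ["Servlet", "ONS", "Spark Streaming", "Redis"],
}

# ASSUMPTION: hypothetical cut-offs for the acceptable delay, in seconds.
REAL_TIME_LIMIT_S = 1.0
INTERACTIVE_LIMIT_S = 600.0


def choose_pipeline(max_delay_seconds: float) -> str:
    """Pick a pipeline name from the longest delay the business can accept."""
    if max_delay_seconds <= 0:
        raise ValueError("max_delay_seconds must be positive")
    if max_delay_seconds < REAL_TIME_LIMIT_S:
        return "real-time"
    if max_delay_seconds <= INTERACTIVE_LIMIT_S:
        return "interactive"
    return "batch"


def describe(max_delay_seconds: float) -> str:
    """Return the pipeline name together with its component chain."""
    name = choose_pipeline(max_delay_seconds)
    return f"{name}: " + " + ".join(PIPELINES[name])


print(describe(0.05))     # a bidding-style request
print(describe(300))      # an interactive OLAP query
print(describe(86_400))   # a daily offline report
```

In practice the choice also depends on query shape and cost, so the thresholds are best read as a starting point for discussion.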

Spark 2.0 has been released, Hadoop 3.0 is in alpha, and HBase 2.0 is available as a SNAPSHOT build. Many features in these components are highly anticipated. We will pay close attention to new E-MapReduce releases from Alibaba Cloud, in the hope of trying out the new open-source components soon.

To learn more about Alibaba Cloud E-MapReduce, click: https://www.alibabacloud.com/product/e-mapreduce

Author: Ai Jia graduated from Tsinghua University, majoring in software engineering. Previously at Accenture and IBM, Ai is now a big data platform architect at OpSmart Technology.
