As a senior technical expert at Alibaba Group, I will share my thoughts on the past, present, and future of big data.

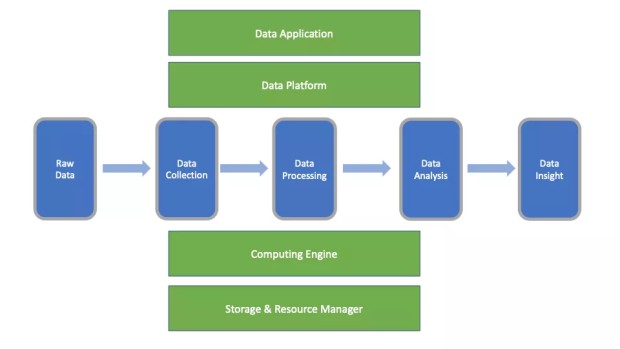

First, before we can get anywhere, we need to be clear about what exactly "big data" is. The concept of big data has been around for more than 10 years, but there has never been an accurate definition, and perhaps one is not required. Data engineers see big data from a technical and system perspective, whereas data analysts see big data from a product perspective. As far as I can see, big data cannot be summarized as a single technology or product; rather, it is a comprehensive, complex discipline built around data. I look at big data mainly from two aspects: the data pipeline (the horizontal axis in the following figure) and the technology stack (the vertical axis in the following figure).

Social media has always emphasized, even sensationalized, the "big" in big data. I don't think this really shows what big data actually is, and I don't really like the term "big data." I'd prefer to just say "data," because the essence of big data lies in the concept and application of data.

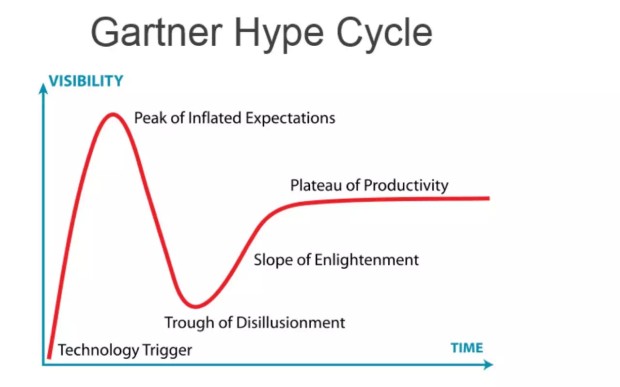

After figuring out what big data is, we have to look at where big data is on the maturity curve. Looking at the development of technologies in the past, each emerging technology has its own lifecycle that is typically characterized by the technology maturity curve seen below.

People tend to be optimistic about an emerging technology at its inception, holding high expectations that it will bring great changes to society. As a result, the technology gains popularity quickly and reaches a peak, at which point people begin to realize that it is not as revolutionary as originally envisioned, and their interest wanes. Opinion of the technology then falls into the trough of disillusionment. After a certain period of time, however, people start to understand how the technology can benefit them and begin to apply it productively, and the technology gains mainstream adoption.

After going through the peak of inflated expectations and the trough of disillusionment, big data is now at a stage of steady development. We can verify this from the curve for big data on Google Trends. Big data started to attract attention around 2009, reached its peak around 2015, and then slowly declined. Of course, this curve does not fit the preceding technology maturity curve perfectly, which likely has to do with what the data represents: search volume rather than maturity. One could suppose, for example, that a downward shift in the actual technology curve leads to an increase in searches for the technology on Google and other search engines.

This article mainly describes the architecture and features of Alibaba Cloud's general-purpose computing engine MaxCompute.

By Yongming Wu

On January 18, 2019, the Big Data Technology Session of Alibaba Cloud Yunqi Developer Salon, co-hosted by the Alibaba Cloud MaxCompute developer community and Alibaba Cloud Yunqi Community, was held at Beijing Union University. During this session, Wu Yongming, Senior Technical Expert at Alibaba, introduced MaxCompute, a serverless big data service with high availability, and explained the secrets behind its low computing cost.

This article is based on the video of the speech and the related presentation slides.

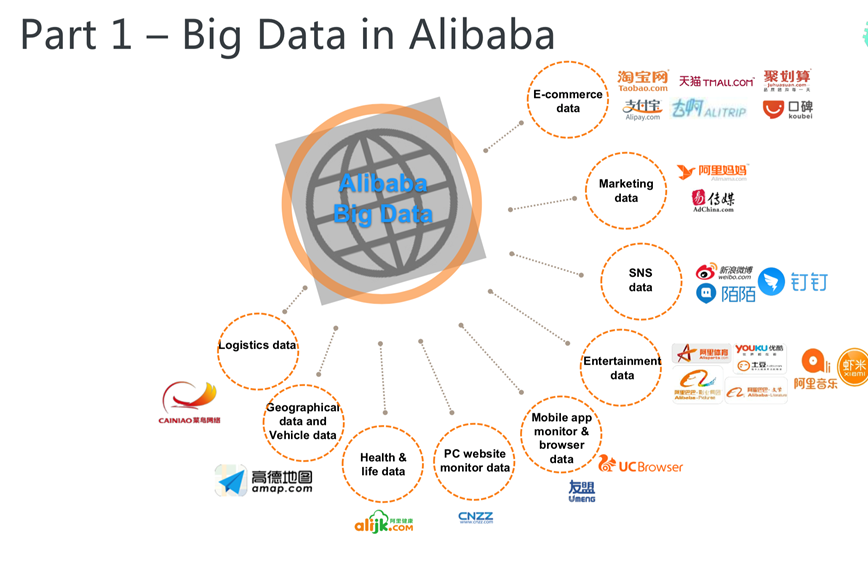

First, let's look at some background on big data technologies at Alibaba. As shown in the following figure, Alibaba began to build out its big data technologies very early, and it is safe to say that Alibaba Cloud was founded to help Alibaba solve technical problems related to big data. Currently, almost all Alibaba business units use big data technologies, and they are applied both widely and deeply. Additionally, the whole set of big data systems at Alibaba Group is integrated.

The Alibaba Cloud Computing Platform business unit is responsible for the integration of Alibaba big data systems and R&D related to storage and computing across the whole Alibaba Group. The following figure shows the structure of the Alibaba big data platform. The underlying layer is the unified storage platform, Apsara Distributed File System, which is responsible for storing big data. Storage is static, and computing is required to mine value from the data. Therefore, the Alibaba big data platform also provides a variety of computing resources, including CPU, GPU, FPGA, and ASIC. To make better use of these computing resources, we need unified resource abstraction and efficient management. The unified resource management system in Alibaba Cloud is called Job Scheduler. Based on this resource management and scheduling system, Alibaba Cloud has developed a variety of computing engines, such as the general-purpose computing engine MaxCompute, the stream computing engine Blink, the machine learning engine PAI, and the graph computing engine Flash. In addition to these computing engines, the big data platform also provides various development environments, on which many services are built.

How should we build the architecture of a big data platform? This article studies the case of OpSmart Technology to elaborate on the business and data architecture of the IoT for enterprises.

Abstract: How should we design the architecture of a big data platform? Are there any good use cases for this architecture? This article studies the case of OpSmart Technology to elaborate on the business and data architecture of Internet of Things for enterprises, as well as considerations during the technology selection process.


Based on the "Internet + big data + airport" model, OpSmart Technology provides on-the-go wireless network connectivity services to 640 million users every year. As the business expanded, OpSmart Technology faced the challenge of rapidly growing data volumes. To cope with this, in 2016 OpSmart Technology took the lead in building an industry-leading big data platform with Alibaba Cloud products.

Below are some tips shared by OpSmart Technology's big data platform architect:

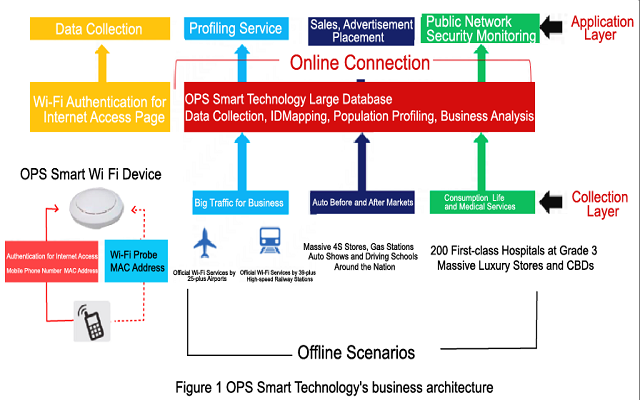

OpSmart Technology's business architecture is shown in the figure above. Our primary business model is to use our own devices to explore value in the data, and then apply the data to our business.

On the collection layer, we founded the first official Wi-Fi brand for airports in China, "Airport-Free-WiFi", covering 25 hub airports and 39 hub high-speed rail stations nationwide and providing wireless network services on-the-go to 640 million people each year. We also have the nation's largest Wi-Fi network for driving schools and our driving school Wi-Fi network is expected to cover 1,500-plus driving schools by the end of 2017. We are also the Wi-Fi provider of China's four major auto shows (Beijing, Shanghai, Guangzhou, and Chengdu) to serve more than 1.2 million people. In addition, we are also running the Wi-Fi network for 2,000-plus gas stations and 600-plus automobile 4S (sales, spare parts, service, survey) stores across the country.

On the data application layer, we connected online and offline behavioral data for user profiling to provide more efficient and precise advertisement targeting including SSP, DSP, DMP and RTB. We also worked with the Ministry of Public Security to eliminate public network security threats.

OpSmart Technology's big data and advertising platforms also offer technical capabilities for enterprises to help them establish their own big data platforms and improve their operation management efficiency with a wealth of quantitative data.

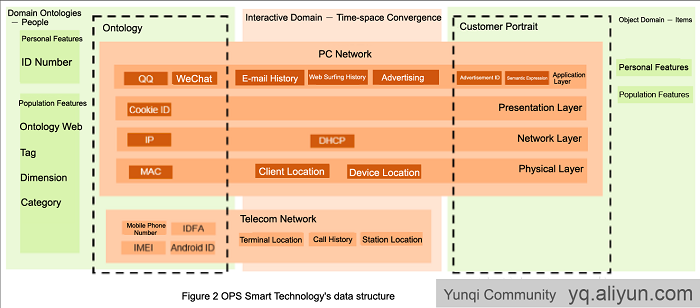

We abstracted our data architecture, which contains a number of themes as shown in the figure. The subject in the figure can be understood as users, and the object can be understood as things. The subject and object are connected through various forms. Such connections are established in time and space and are completed through computer and telecommunication networks. The subject has its own reflection in the connection network, which can be understood as a virtual identity (Avatars). The object also has its own reflection in the connection network, such as the Wikipedia description of a topic, or a commercialized product or service. These reflections are then packaged by advertisements as an advertising image. All these are object mirrors. The interaction between the subject and the object is actually the interaction between the subject image and the object image, and such interactions leave traces in both time and space.

The individual and group characteristics of the subject and object, as well as the subject-object relationships, all constitute big data. Through in-depth mining and learning, this information will give birth to powerful insights and have immeasurable value to businesses.

This blog series is aimed at showing you how to make effective use of Big Data and Business Intelligence to decipher insights quickly from raw enterprise data.

By Priyankaa Arunachalam, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

The volume of data generated every day is hard to pin down because it keeps increasing at a rapid rate. Although data is everywhere, the intelligence that we can glean from it matters more. These large volumes of data are what we call "Big Data". Organizations generate and gather huge volumes of data, believing that this data might help them advance their products and improve their services. For example, a shop may have its customer information, stock details, purchase history, and website visits.

Oftentimes, organizations store this data for regular business activities but fail to use it for further analytics and business relationships. Data that is left unanalyzed and unused is what we call "Dark Data".

"Big Data is indeed a buzzword, but it is one that is frankly under-hyped." - Ginni Rometty

The problem of untangling insights from data obtained from multiple sources has been around since the earliest software applications. The process is normally time consuming, and with data moving so fast, the results are often obsolete before they can inform any decision. The main aim of this blog series is to make effective use of big data and extend the use of business intelligence to decipher insights quickly and accurately from raw enterprise data on Alibaba Cloud.

In the simplest terms, when the data you have is too large to be stored and analyzed by traditional databases and processing tools, then it is "Big Data". If you have heard about the 3Vs of big data, then it is simple to understand the underlying definition of big data.

Every individual and organization has data in one form or another, which they have traditionally managed using spreadsheets, Word documents, and databases. With emerging technologies, the size and variety of data are increasing day by day, and it is no longer possible to analyze the data through traditional means.

The most important aspect of big data analytics is understanding your data. A good way to do this is to ask yourself these questions:

Before exploring Alibaba Cloud's E-MapReduce, this article aims to answer the questions listed above to help you get started with big data.

Data is typically generated when a user interacts with a physical device, software, or system. These interactions can be classified into three types:

For most enterprises, data can be categorized into the following types.

Wang Liang, CEO of YouYue Beijing Tech Inc., shares his experience of using an Alibaba Cloud Data Lake Analytics for big data analysis on blockchain logs.

By Wang Liang, CEO of YouYue Beijing Tech Inc.

I started my own company in the business of big data for promotion. A major difficulty that I had to conquer was processing offline data and cold backup data.

We needed to collect hundreds of millions of search results from Apple's App Store. To reduce costs, we kept only the past month of data in the online database and backed up historical data in Alibaba Cloud Object Storage Service (OSS). When we needed the historical data, we had to import it into the database or a Hadoop cluster. Companies with limited budgets use similar methods for data such as users' click logs. This method is inconvenient because it requires a large amount of ETL import and export work. As data operations grow more complex, frequent requirements such as historical data backtracking and analysis are consuming a considerable part of R&D engineers' time and energy.
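The retention policy described above can be sketched roughly as follows. This is a minimal illustration, not the author's actual pipeline: the function name `split_hot_cold`, the 30-day window, and the sample records are all assumptions made for the example, and the upload to OSS itself is left out.

```python
from datetime import date, timedelta

# Hypothetical retention window: the text says roughly one month stays online.
RETENTION_DAYS = 30


def split_hot_cold(records, today, retention_days=RETENTION_DAYS):
    """Split (record_date, payload) pairs into online ("hot") and archive ("cold") lists."""
    cutoff = today - timedelta(days=retention_days)
    hot, cold = [], []
    for record_date, payload in records:
        # Records on or after the cutoff stay in the online database;
        # older ones would be backed up to object storage.
        if record_date >= cutoff:
            hot.append((record_date, payload))
        else:
            cold.append((record_date, payload))
    return hot, cold


# Made-up sample records, for illustration only.
records = [
    (date(2018, 7, 20), "search result A"),
    (date(2018, 6, 1), "search result B"),
    (date(2018, 3, 15), "search result C"),
]
hot, cold = split_hot_cold(records, today=date(2018, 7, 25))
print(len(hot), "online;", len(cold), "archived")
```

Every query that reaches back past the cutoff has to pull the archived records into a database or cluster first, which is the ETL overhead the paragraph above complains about.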

Alibaba Cloud's Data Lake Analytics was like a ray of hope. It allows us to query and analyze data in OSS using simple, MySQL-compatible database methods. It simplifies big data analysis and delivers satisfying performance. To a certain extent, it can directly support some online services.

Here I'd like to share my experience, using an example of big data analysis for blockchain logs. I picked blockchain data because the data volume is huge, which can fully demonstrate the features of Data Lake Analytics. Another reason is that all the blockchain data is open.

The data set used in this article contains all the data of Ethereum as of June 2018. More than 80% of today's DApps are deployed on Ethereum, so analyzing and mining this data is of high value.

Ethereum data is organized as a chain of data blocks, and each block contains detailed transaction data. The transaction data is similar to common access logs and includes the From (source user address), To (destination user address), and Data (sent data) fields. For example, both ETH transfers between users and users' invocations of Ethereum programs (smart contracts) are completed through transactions. Our experimental data set contains about 5 million blocks and 170 million transaction records.
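To make the structure concrete, here is a minimal sketch of blocks and transactions as plain Python data classes, plus the kind of log-style aggregation the article has in mind. The class and field names are illustrative assumptions that mirror the From, To, and Data fields above. They are not the actual Ethereum schema, and the addresses are made up.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Transaction:
    sender: str      # the "From" field: source user address
    recipient: str   # the "To" field: destination user or contract address
    data: str = ""   # the "Data" field: payload sent with the transaction


@dataclass
class Block:
    number: int
    parent_number: int  # simplified link; real chains reference the parent by hash
    transactions: List[Transaction] = field(default_factory=list)


def count_by_recipient(blocks):
    """Count transactions per destination address across all blocks."""
    counts = Counter()
    for block in blocks:
        for tx in block.transactions:
            counts[tx.recipient] += 1
    return counts


# Made-up sample chain of two blocks.
chain = [
    Block(1, 0, [Transaction("0xAlice", "0xBob"),
                 Transaction("0xBob", "0xContract", data="call")]),
    Block(2, 1, [Transaction("0xAlice", "0xContract", data="call")]),
]
counts = count_by_recipient(chain)
print(counts.most_common(1))
```

Treating transactions as access-log rows in this way is what lets ordinary grouping and counting queries answer questions such as which contracts receive the most calls.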

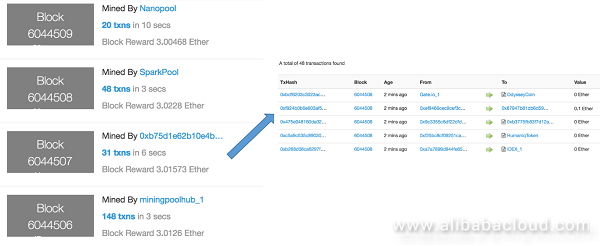

We can see the data structure of Ethereum in Ethscan.io, as shown in the following figure:

Figure 1. Data structure of Ethereum (blocks on the left and transaction data on the right)

By the time this article was completed (July 25, 2018), there were around 6 million blocks and over 200 million transaction records in Ethereum. We also extracted the Token application data from the transaction data because Token (also known as virtual currency) is still a main application. Based on the data, we can analyze token transactions that are worth thousands of billions of US dollars.

The process of obtaining Ethereum data includes three steps. The entire process is completed on an Alibaba Cloud ECS server running 64-bit CentOS 7. We recommend preparing two 200 GB disks, one for the original Ethereum data and the other for data exported from Ethereum, and ensuring that the server has at least 4 GB of memory.

The job market for architects, engineers, and analytics professionals with Big Data expertise continues to grow. The Academy's Big Data Career path focuses on the fundamental tools and techniques needed to pursue a career in Big Data. Work through our course material, learn different aspects of the Big Data field, and get certified as a Big Data Professional!

Alibaba Cloud's Big Data Certification Associate is designed for engineers who can use Alibaba Cloud Big Data products. It covers basic distributed system theory and Alibaba Cloud's core products like MaxCompute, DataWorks, E-MapReduce and ecosystem tools.

Learn how to utilize data to make better business decisions. Optimize Alibaba Cloud's big data products to get the most value out of your data.

This course is associated with Alibaba Cloud Big Data Quickstart Series: Data Integration. You must purchase the certification package before you are able to complete all lessons for a certificate.


This course briefly explains the basics of the Alibaba Cloud big data product system and several products used in big data applications, such as MaxCompute, DataWorks, RDS, DRDS, QuickBI, TS, Analytic DB, OSS, and Data Integration. Students can refer to the application scenarios explained, combine them with their own enterprise's business and requirements, and apply what they have learned in practice.

Quickly learn the basic concepts and architectures of MaxCompute and DataWorks, and how to perform data integration with multiple data sources and formats.

Big data instance families are designed to deliver cloud computing and storage for a large amount of data to support big data-oriented business needs. These instances are applicable to scenarios that require offline computing and storage of a large amount of data, such as Hadoop distributed computing, extensive log processing, and large-scale data warehousing. The instance families are suitable for businesses that use a distributed network and require high-performance storage systems.

These instance families are suitable for customers in Internet, finance, and other industries that need to compute, store, and analyze big data. Big data instance families use local storage to ensure a large amount of storage space and high storage performance.

Common features of big data instances:

When you use big data instances, take note of the following items:

MaxCompute, formerly known as the Open Data Processing Service (ODPS), is a big data computing service. It stores and computes structured data in batches, providing solutions for massive data warehouses as well as big data analysis and modeling.

With the data integration service, RDS data can be imported into MaxCompute to achieve large-scale data computing.

MaxCompute (previously known as ODPS) is a general purpose, fully managed, multi-tenancy data processing platform for large-scale data warehousing. MaxCompute supports various data importing solutions and distributed computing models, enabling users to effectively query massive datasets, reduce production costs, and ensure data security.

Alibaba Cloud Elasticsearch is based on the open-source Elasticsearch engine and provides commercial features. Designed for scenarios such as search and analytics, Alibaba Cloud Elasticsearch features enterprise-level access control, security monitoring, and automatic updates.
