This article is compiled from the presentation by JD search and recommendation algorithm engineers Zhang Ying and Liu Lu at Flink Forward Asia 2021. It covers:

- Background

- Current Situation of Machine Learning for JD Search and Recommendation

- Online Learning Based on Alink

- TensorFlow on Flink Application

- Planning

1. Background

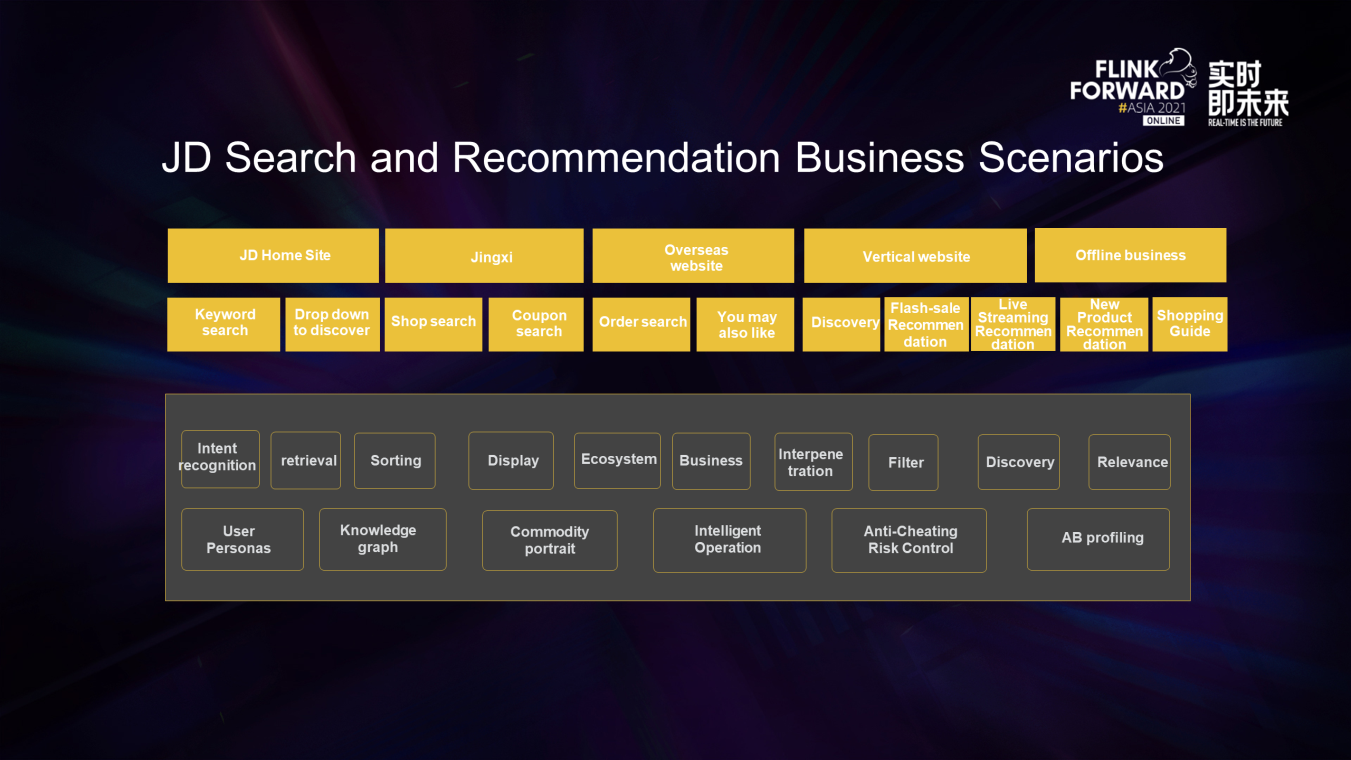

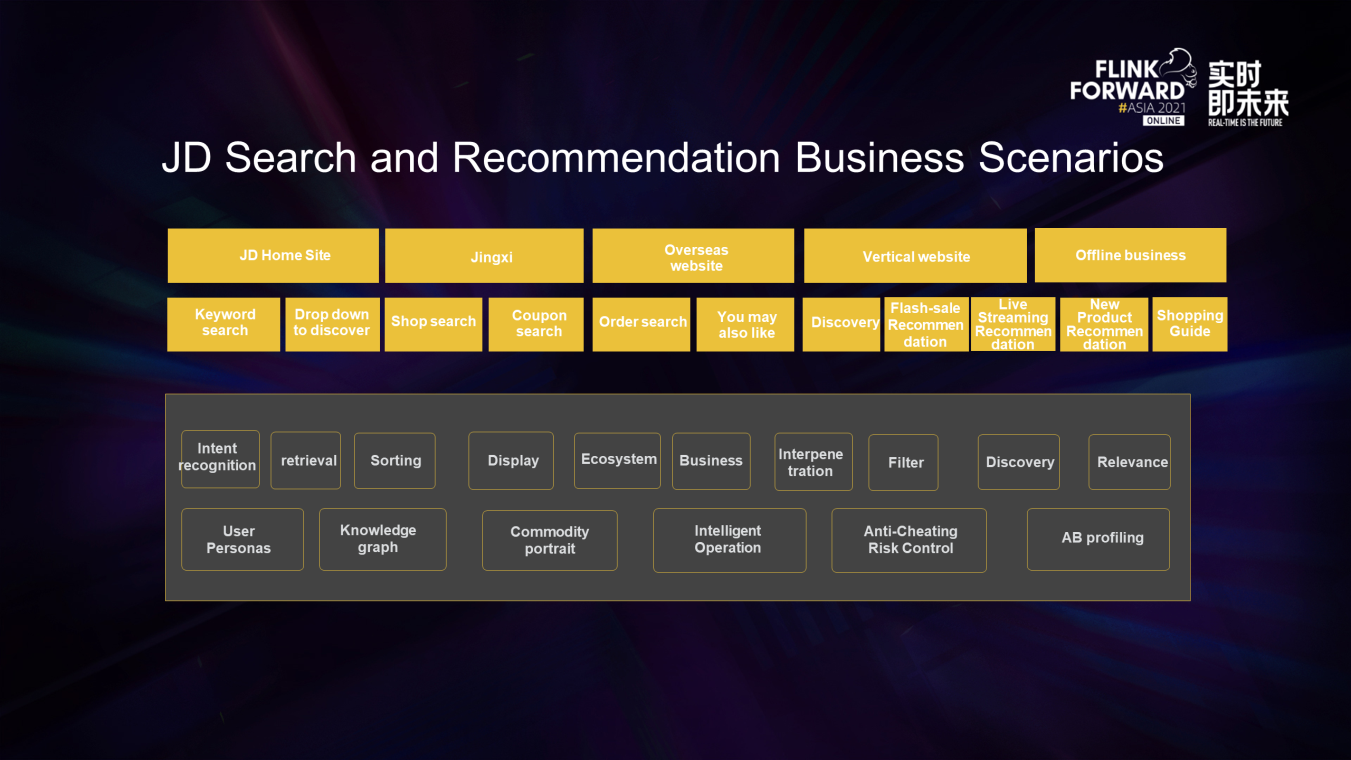

Search and recommendation are the two core entry points of Internet applications, and most traffic comes from these two scenarios. By business domain, JD Retail is divided into the main site, Jingxi, the overseas site, and other sites.

In the search business, each site has several sub-pages, including keyword search, discovery, shops, coupons, and orders. In the recommendation business, there are hundreds of recommendation scenarios, divided by application domain.

Each of these business scenarios contains more than a dozen strategy stages, all of which need to be supported by machine learning models. The large volume of product data and user behavior provides the features and samples for machine learning.

JD search and recommendation include the traditional intent recognition, retrieval, ranking, and relevance models. They also introduce models for decision-making in intelligent operations, intelligent risk control, and effect analysis, which helps maintain a healthy ecosystem of users, merchants, and the platform.

2. Current Situation of Machine Learning for JD Search and Recommendation

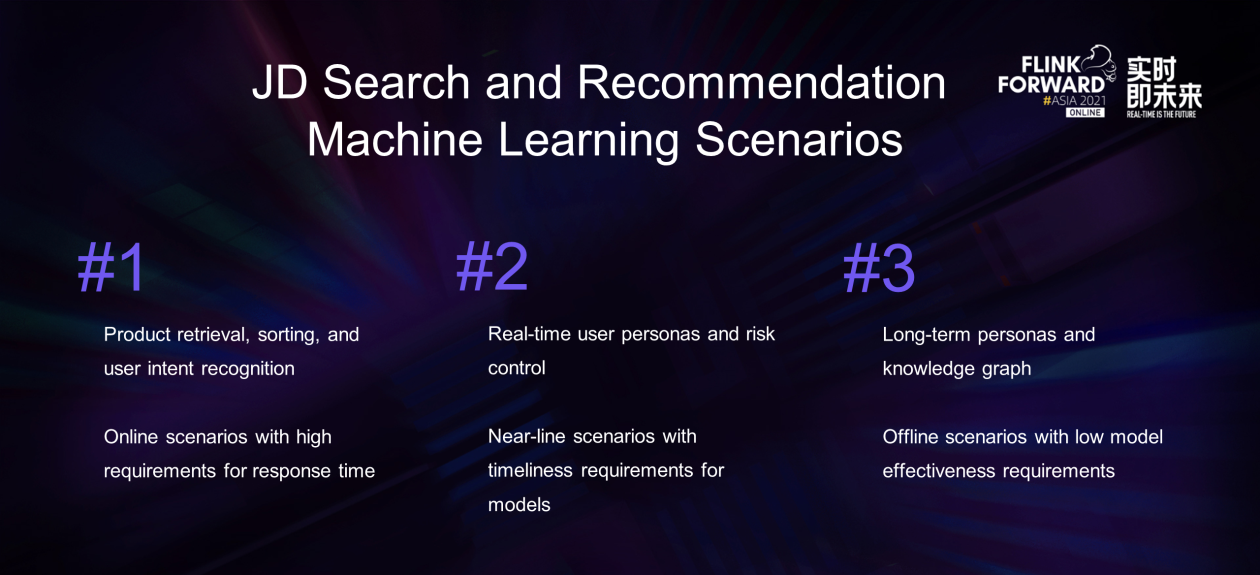

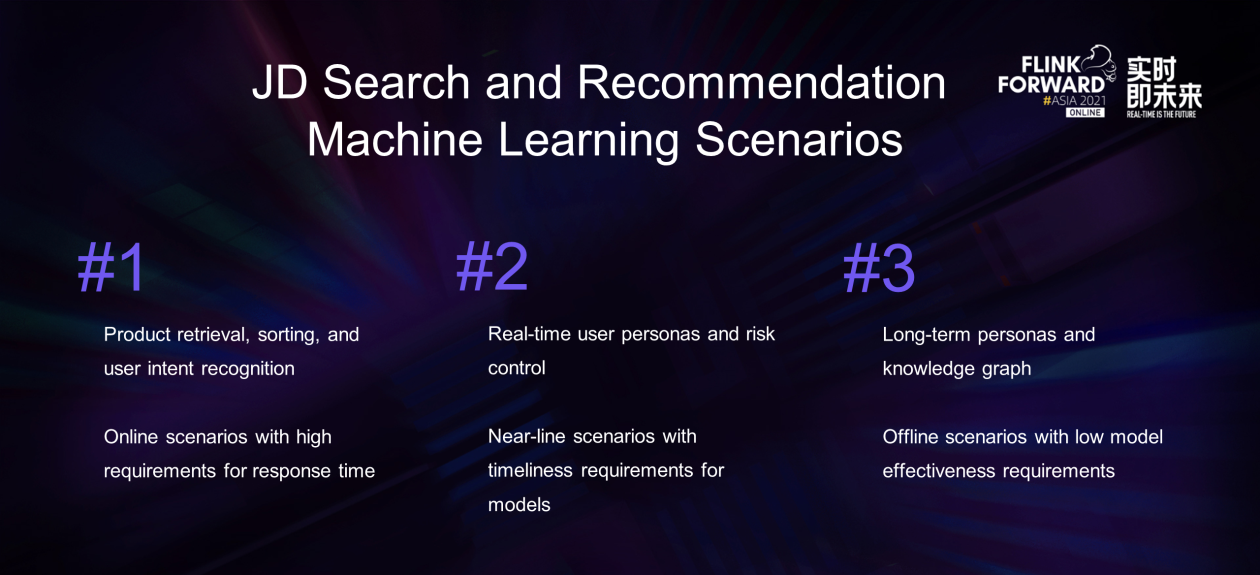

We divide these machine learning scenarios into three categories based on their service scenarios and timeliness requirements:

- The first category covers the retrieval, ranking, and intent recognition performed in real time when users request search or recommendation pages. These models require short response times at the service level, and their estimation services reside in the online system.

- The second one does not require a short service response time but has certain timeliness requirements for model training and estimation, such as real-time user portraits and anti-cheating models. We call it the near-line scenario.

- The third one is offline model scenarios, such as long-term portraits of products or users and knowledge graphs for various material tags. The training and prediction of these scenarios have low timeliness requirements and are all performed offline.

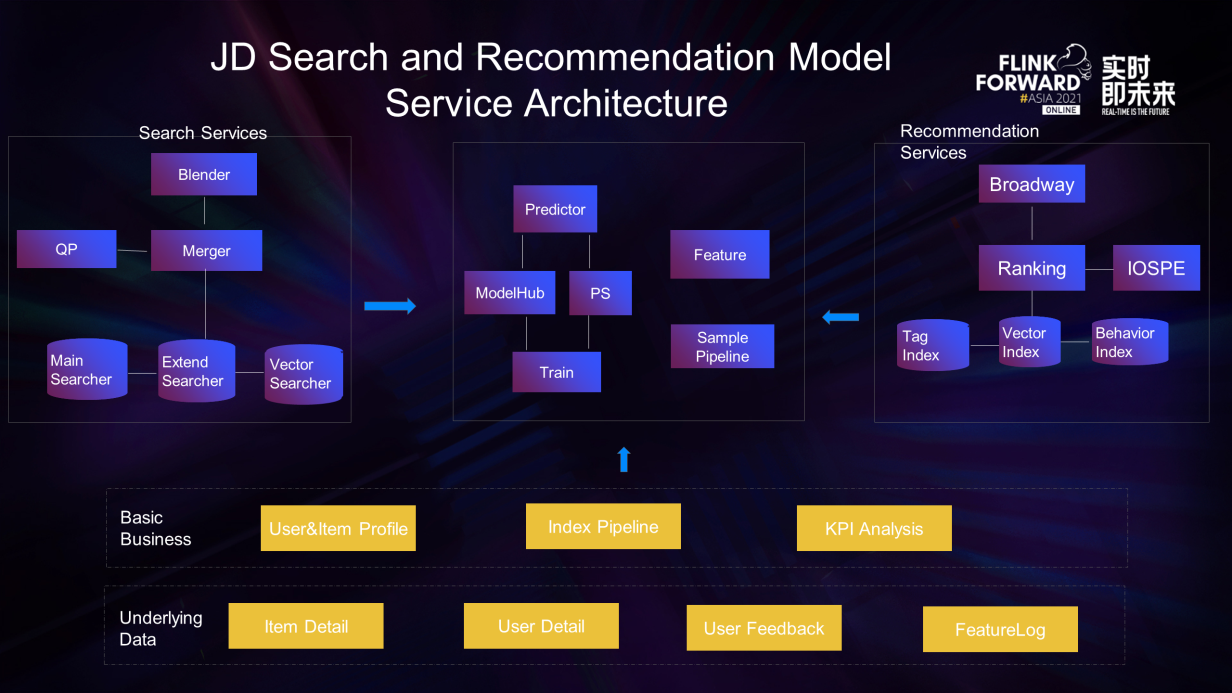

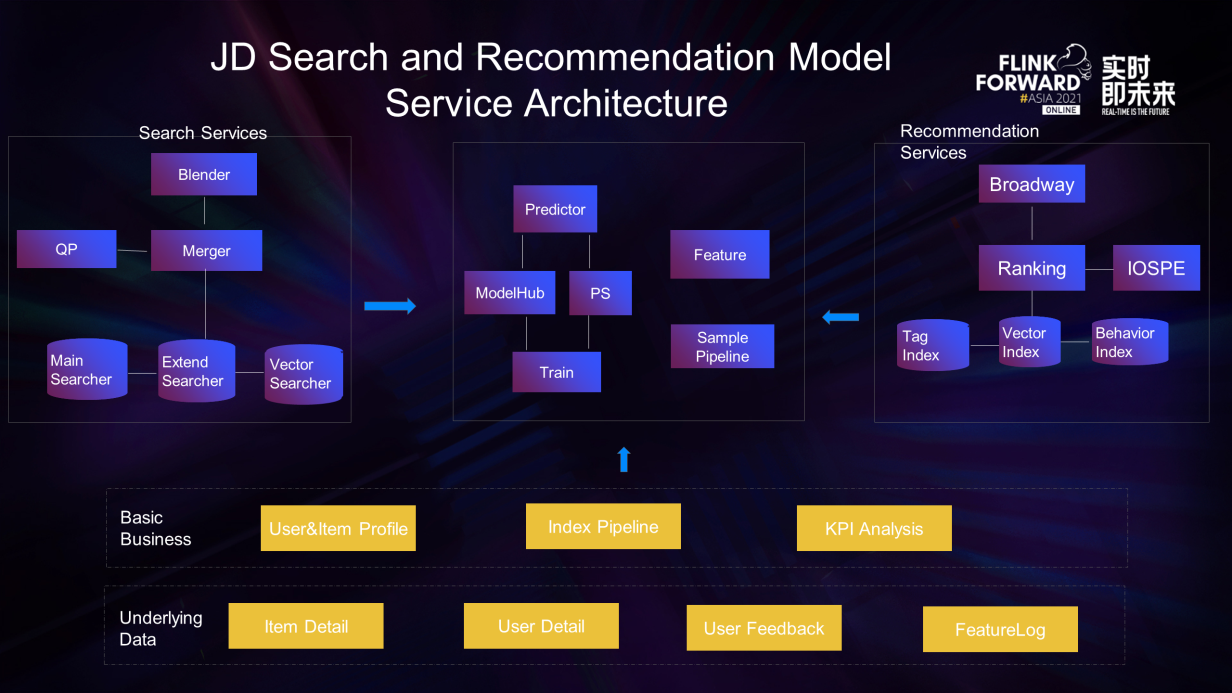

Let's look at the current major model service architecture:

Because the two businesses differ, JD's search system and recommendation system are built from different core pipeline modules.

A single user search request passes through services at every stage of the pipeline. First, the query processing (QP) service runs the intent-recognition model on the keywords. Then, the retrieval service issues concurrent requests that call the retrieval model, the relevance model, and the coarse-ranking model. After that, the ranking service merges the result sets and calls the fine-ranking model, the re-ranking model, and so on.

The business process for a recommendation request differs from that of a search request, but the overall flow is similar.
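To make the multi-stage flow concrete, here is a minimal Python sketch of one search request passing through query processing, concurrent retrieval with a relevance filter, coarse ranking, fine ranking, and re-ranking. All model functions, cut-off sizes, and the relevance threshold are hypothetical stand-ins, not JD's actual services.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

# Hypothetical model signatures; real services would call remote model servers.
IntentModel = Callable[[str], str]
RecallChannel = Callable[[str, str], List[str]]
Scorer = Callable[[str, str], float]


def search(
    query: str,
    intent_model: IntentModel,
    recall_channels: Sequence[RecallChannel],
    relevance: Scorer,
    coarse: Scorer,
    fine: Scorer,
    rerank: Callable[[List[str]], List[str]],
    coarse_k: int = 100,
    fine_k: int = 10,
    min_relevance: float = 0.5,
) -> List[str]:
    # 1. Query processing (QP): intent recognition on the keywords.
    intent = intent_model(query)
    # 2. Retrieval: fan out to all recall channels concurrently.
    with ThreadPoolExecutor() as pool:
        recalled = list(pool.map(lambda channel: channel(query, intent), recall_channels))
    # Merge channel results, dropping duplicates while keeping first-seen order.
    candidates = list(dict.fromkeys(item for items in recalled for item in items))
    # The relevance model filters out items that do not match the query.
    candidates = [item for item in candidates if relevance(query, item) >= min_relevance]
    # 3. Coarse ranking cheaply keeps a larger shortlist.
    shortlist = sorted(candidates, key=lambda item: coarse(query, item), reverse=True)[:coarse_k]
    # 4. Fine ranking picks the final top-k with a more expensive model.
    ranked = sorted(shortlist, key=lambda item: fine(query, item), reverse=True)[:fine_k]
    # 5. Re-ranking (for example, diversity or business rules) adjusts the final order.
    return rerank(ranked)
```

Each stage narrows the candidate set, so the more expensive models only see a small number of items.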

Underneath, these two major businesses share some basic offline and near-line models, such as user portraits, material tags, and various metric analyses.

The model service architecture they rely on consists of training and estimation, bridged by a model repository and a parameter service. For features, online scenarios require a feature server, while offline scenarios rely on data pipelines.

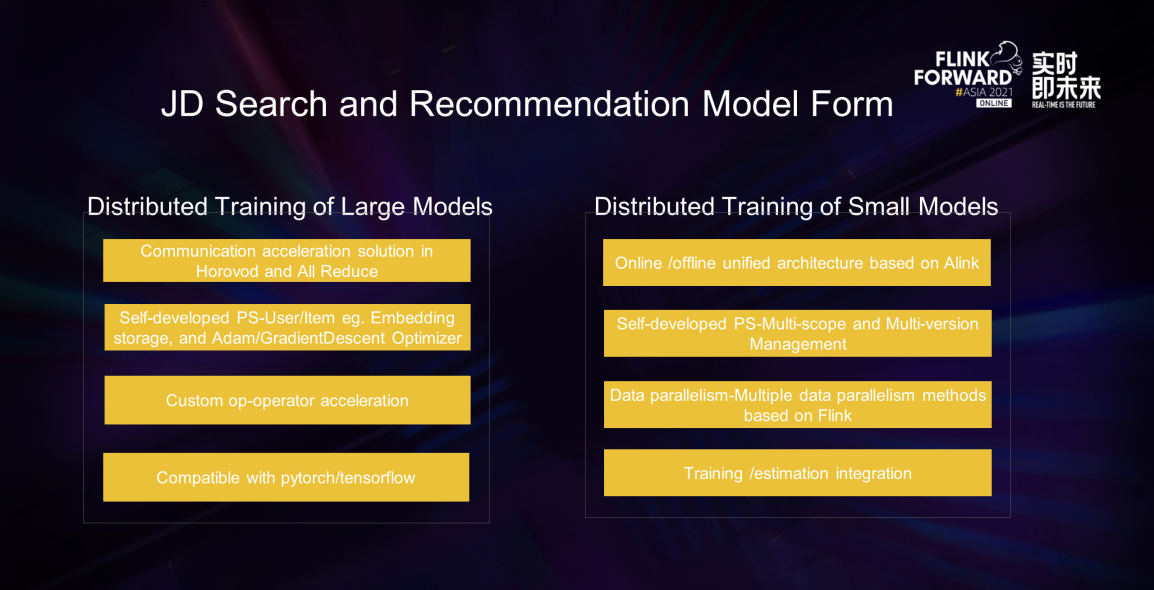

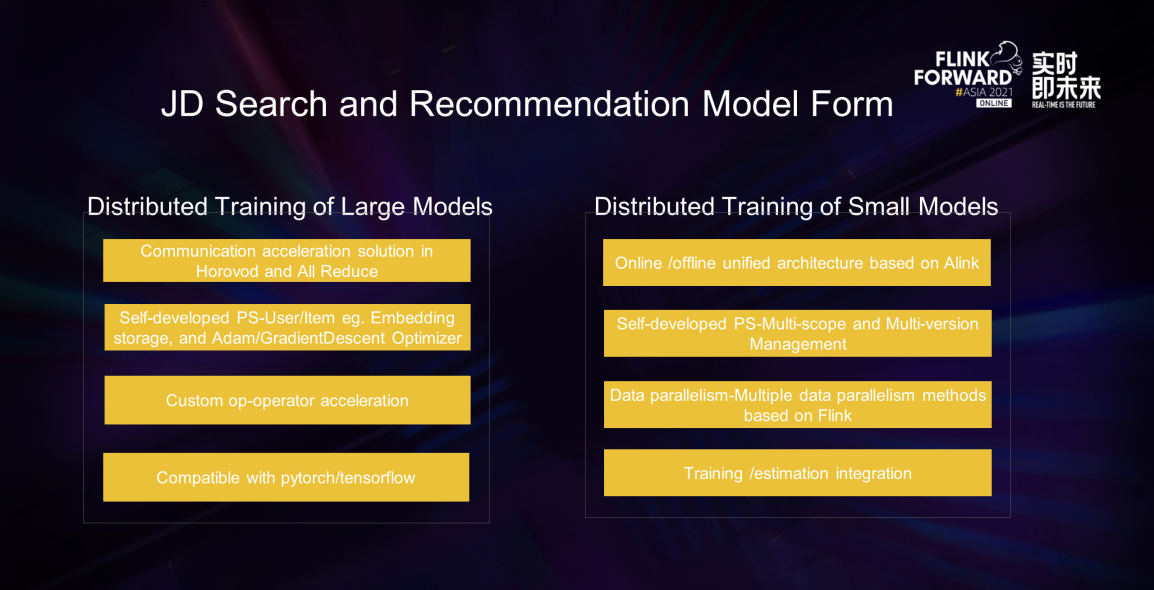

We can divide the existing models into two forms:

- In the left column, each individual model is large and complex. These models use data parallelism to train a shared set of parameters and use a self-developed parameter server to train ultra-large-scale sparse parameters. Their training and estimation architectures are separate.

- In the right column, each individual model is small and simple, but the models span a wide range of data volumes and business granularities. Data is partitioned by business granularity, and each partition is modeled separately. A stream processing framework drives the data flow, so training and estimation share an integrated architecture.

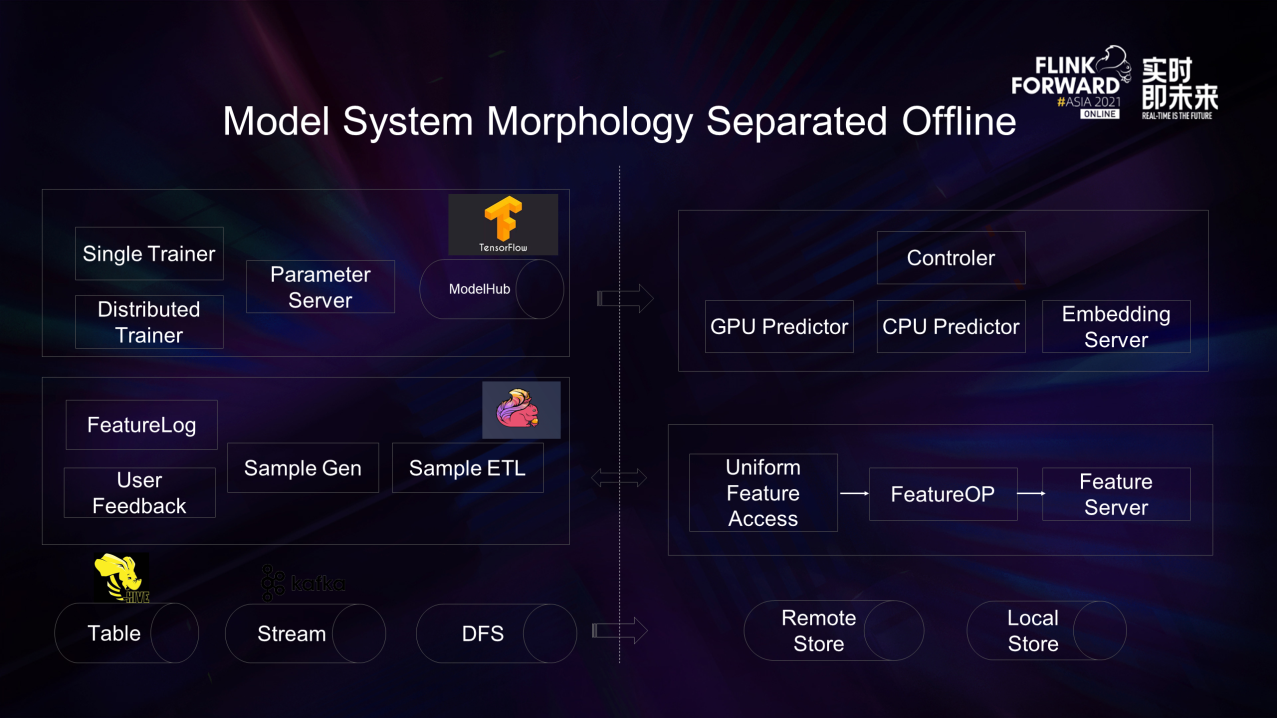

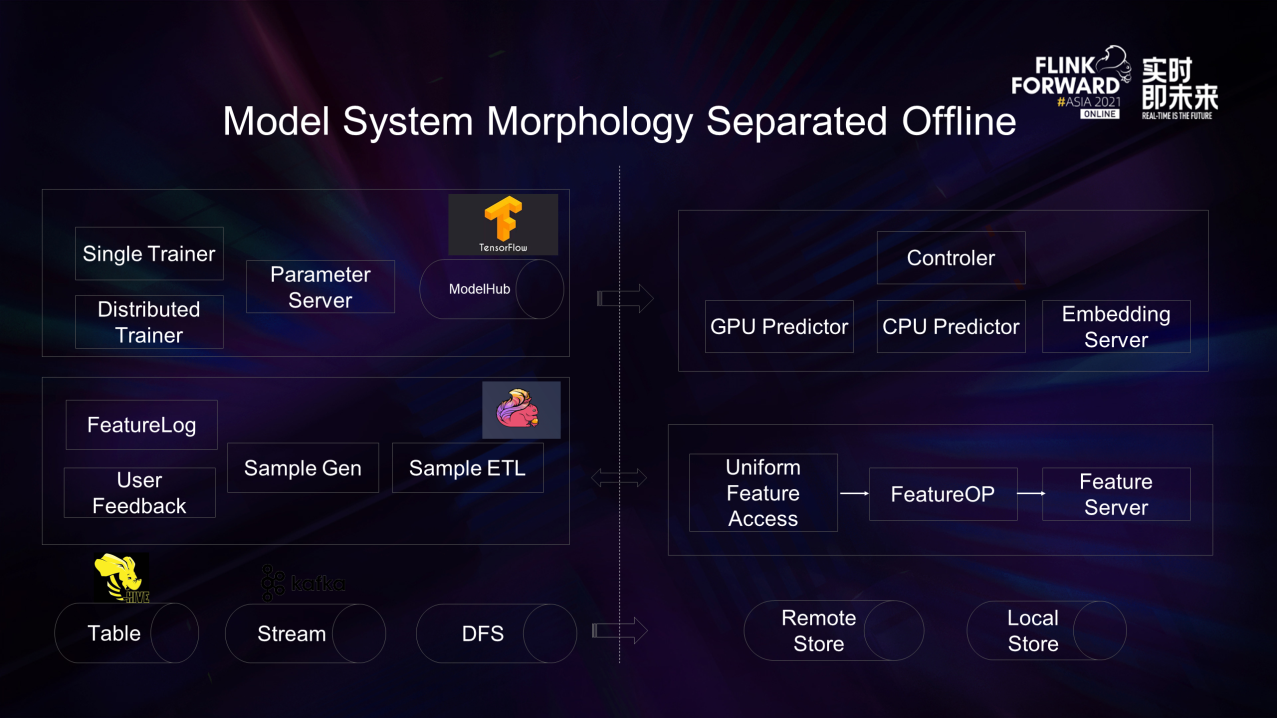

Because online serving and offline training have different architectures, most model systems take this form, with the online and offline parts separated. The training process is encapsulated on top of TensorFlow and PyTorch.

Sample production and preprocessing use a sample pipeline framework built on Flink. Many features come from the feature logs (Featurelog) of online services. Model training and sample production make up the offline part and rely on common basic components such as Hive, Kafka, and HDFS. Estimation runs on a self-developed estimation engine that performs inference on CPUs or GPUs, and large-scale sparse vectors are served by independent parameter servers. A self-developed feature service provides the input data for estimation. Because features come from different sources during estimation and training, a unified feature data acquisition interface and a corresponding feature extraction library are used.

Feature extraction and model estimation make up the online part, which is separated from the offline part.
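The idea of one feature acquisition interface plus one shared extraction library can be sketched as follows. This is an illustration under assumptions: the class names, the feature names (`user_ctr`, `price`), and the bucketing rule are all hypothetical. The point is that training (reading logged features) and estimation (querying the feature service) call the same `extract` function, so both sides produce identical inputs.

```python
from typing import Dict, Mapping, Protocol, Tuple


class FeatureSource(Protocol):
    """Unified feature acquisition interface shared by training and estimation."""

    def get(self, user_id: str, item_id: str) -> Mapping[str, float]: ...


class FeatureLogSource:
    """Offline side: raw features replayed from logged online requests (Featurelog)."""

    def __init__(self, log: Dict[Tuple[str, str], Mapping[str, float]]) -> None:
        self.log = log

    def get(self, user_id: str, item_id: str) -> Mapping[str, float]:
        return self.log[(user_id, item_id)]


class FeatureServiceSource:
    """Online side: stand-in for the feature service, keyed by user and by item."""

    def __init__(self, users: Dict[str, Dict[str, float]], items: Dict[str, Dict[str, float]]) -> None:
        self.users = users
        self.items = items

    def get(self, user_id: str, item_id: str) -> Mapping[str, float]:
        return {**self.users[user_id], **self.items[item_id]}


def extract(source: FeatureSource, user_id: str, item_id: str) -> Dict[str, float]:
    """Shared feature extraction library: the same transformation for both paths."""
    raw = source.get(user_id, item_id)
    return {
        "user_ctr": raw.get("user_ctr", 0.0),
        "price_bucket": float(int(raw.get("price", 0.0) // 100)),
    }
```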

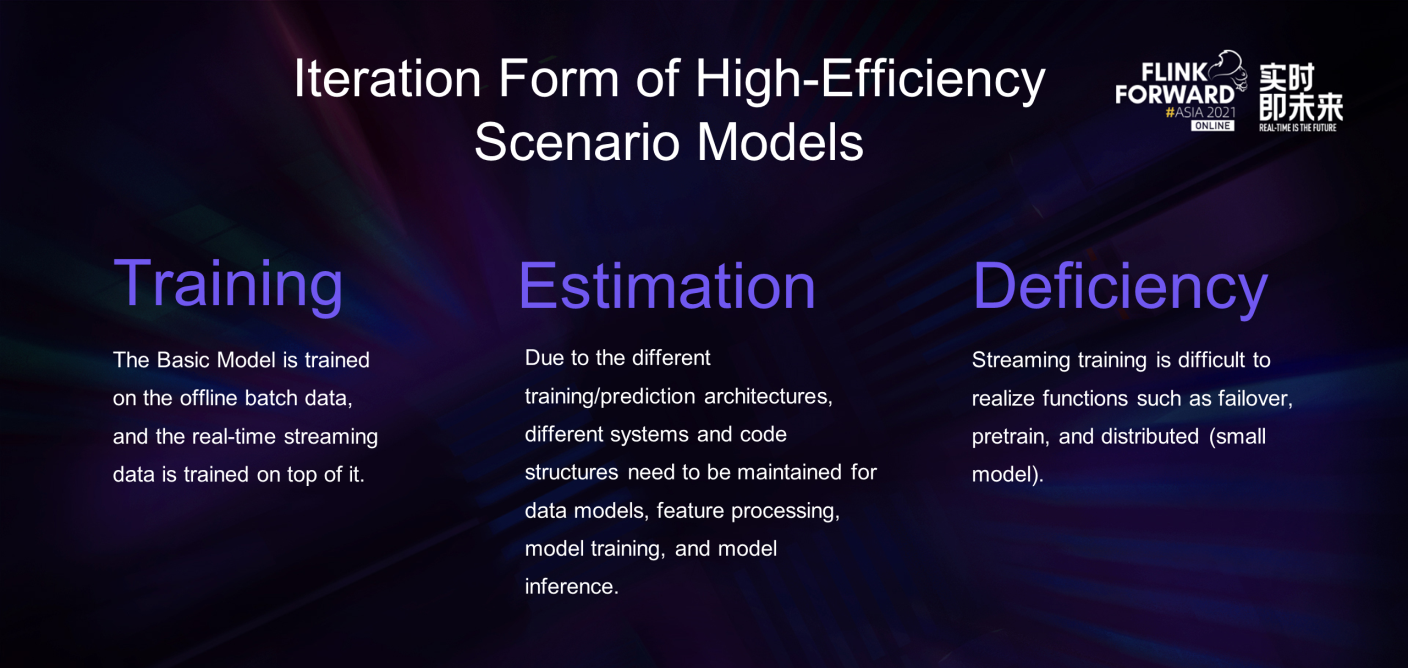

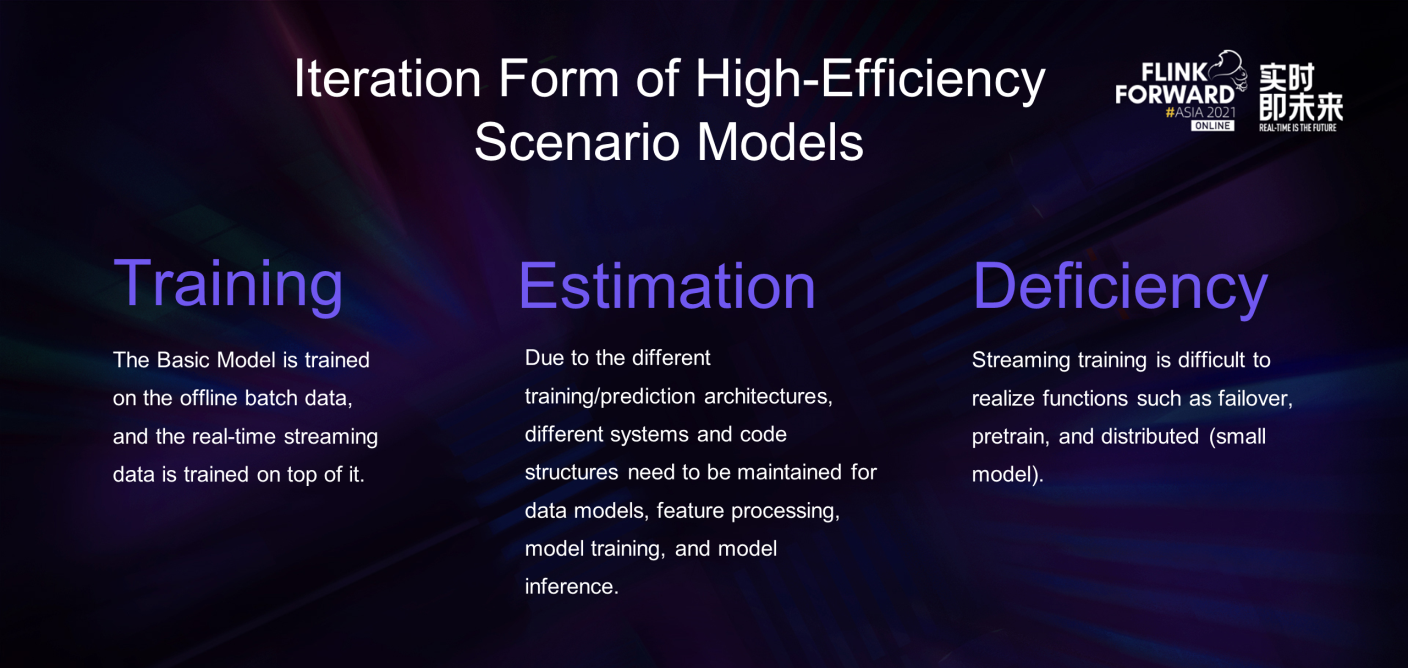

For model iteration, models with high timeliness requirements are first trained offline on accumulated historical batch data to obtain a base model. After deployment, they are trained and iterated on real-time data samples.

Since estimation and training run on two different architectures, continuous iteration requires the two architectures to interact, transfer data, and stay consistent.

Training and estimation must also be coordinated with the data state to support fault-tolerant handover and recovery. Integrating distributed data processing and the model's distribution pattern into one system that is easy to deploy and maintain is difficult. It is also difficult to unify how different models load and switch pre-trained parameters.

3. Online Learning Based on Alink

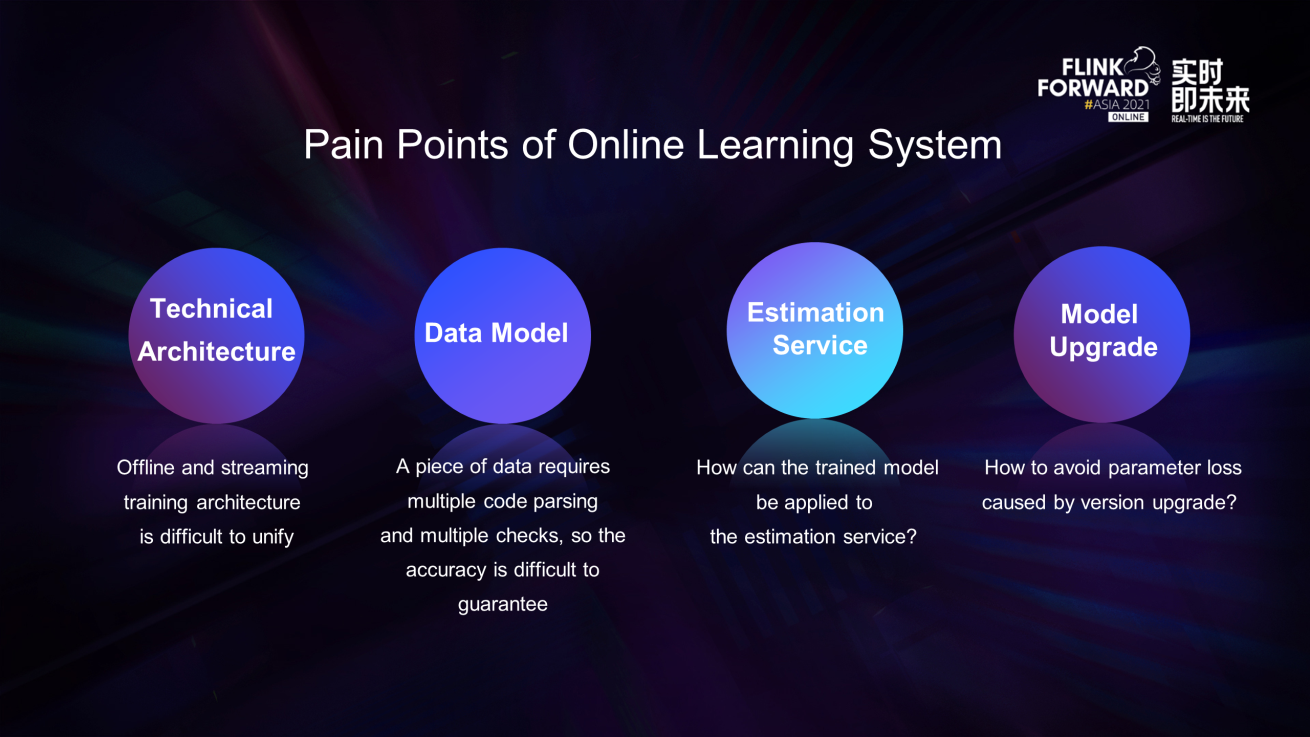

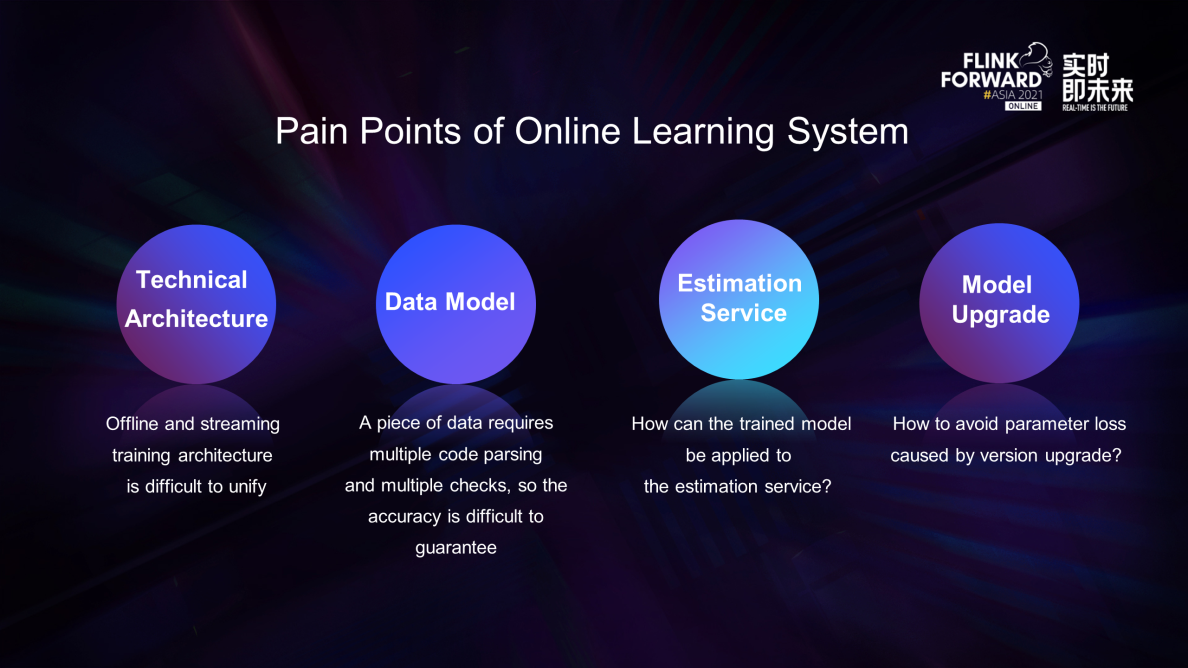

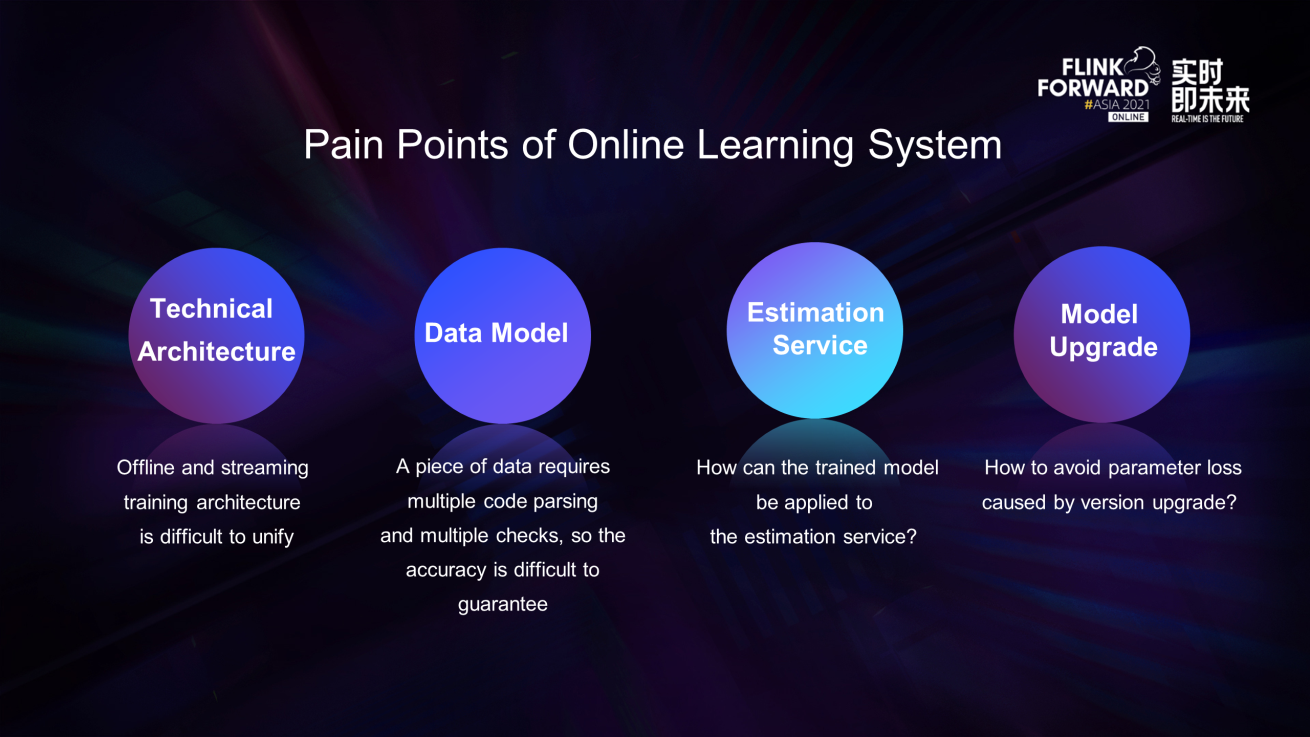

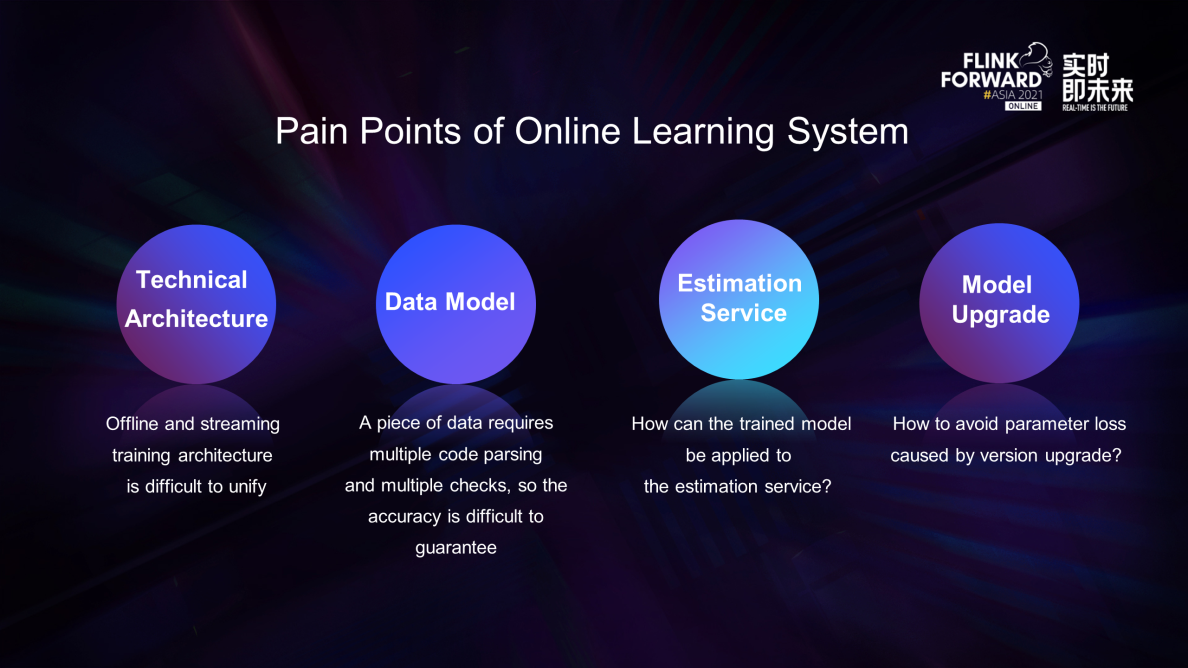

First, let's analyze the pain points of online learning systems:

- Training Architecture: It is difficult to unify the offline and streaming training architectures. In typical online learning, a base model is first trained on a large amount of offline data, and streaming training then continues from this model. However, in this pipeline, offline training and streaming training are two different systems with two different code bases. Offline training may be an ordinary batch task, while online training may be a continuous training task started on a single machine. Even if online training runs on Spark or Flink, its code may differ from the offline code.

- Data Model: Because the training architecture is hard to unify, business teams must maintain two sets of environments and code. Much common logic cannot be reused, which makes it difficult to ensure data quality and consistency. The underlying data models and parsing logic may also be inconsistent, which forces a lot of patch logic. Verifying data consistency then requires many comparisons (such as year-on-year, month-on-month, and secondary processing), which are inefficient and error-prone.

- Estimation Service: Traditional model estimation deploys a standalone model service, which the task calls over HTTP/RPC to obtain estimation results. This requires extra maintenance. In real-time or offline estimation scenarios, the RPC/HTTP server does not need to run all the time; it only needs to start and stop with the task. Serving offline-trained models online is also a challenge.

- Model Upgrade: Any kind of upgrade affects the model. Here, we mainly discuss how a model upgrade can cause model parameters to be lost.

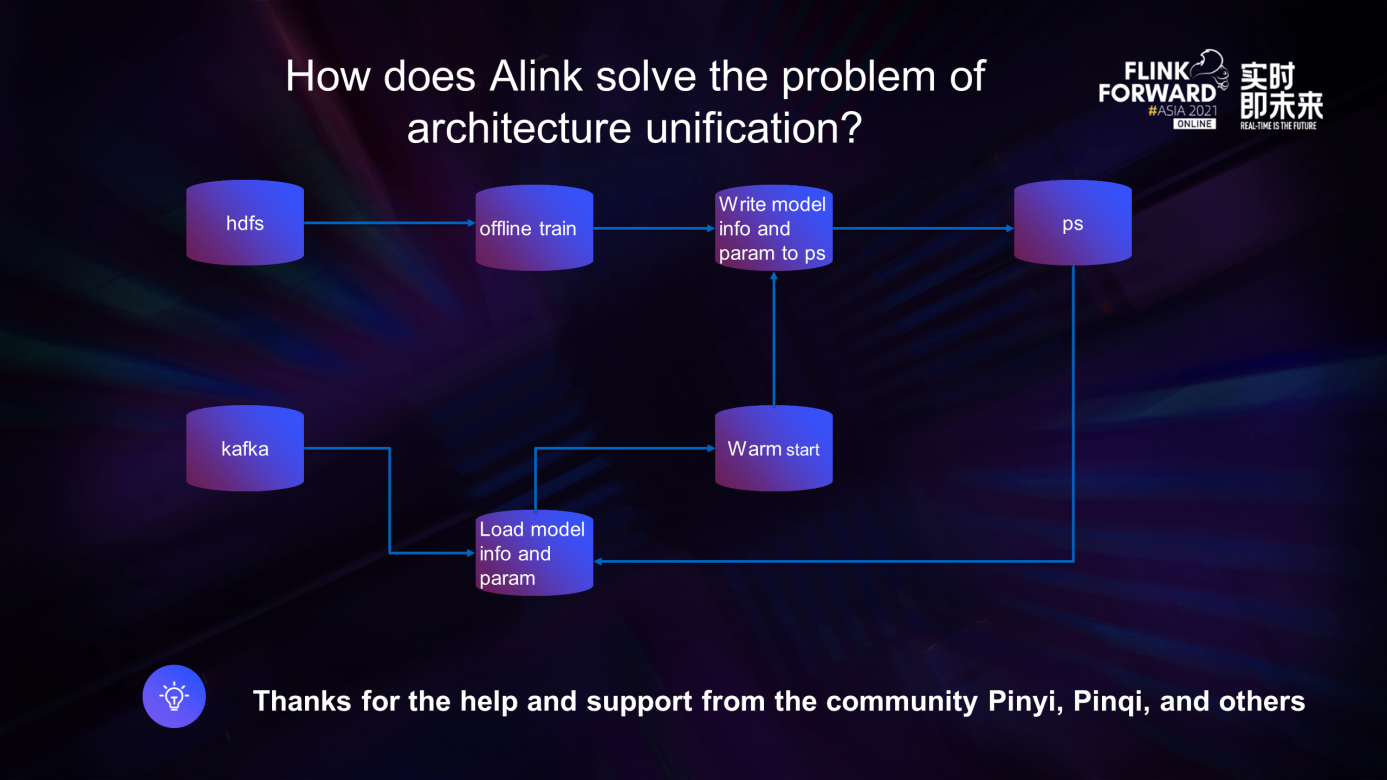

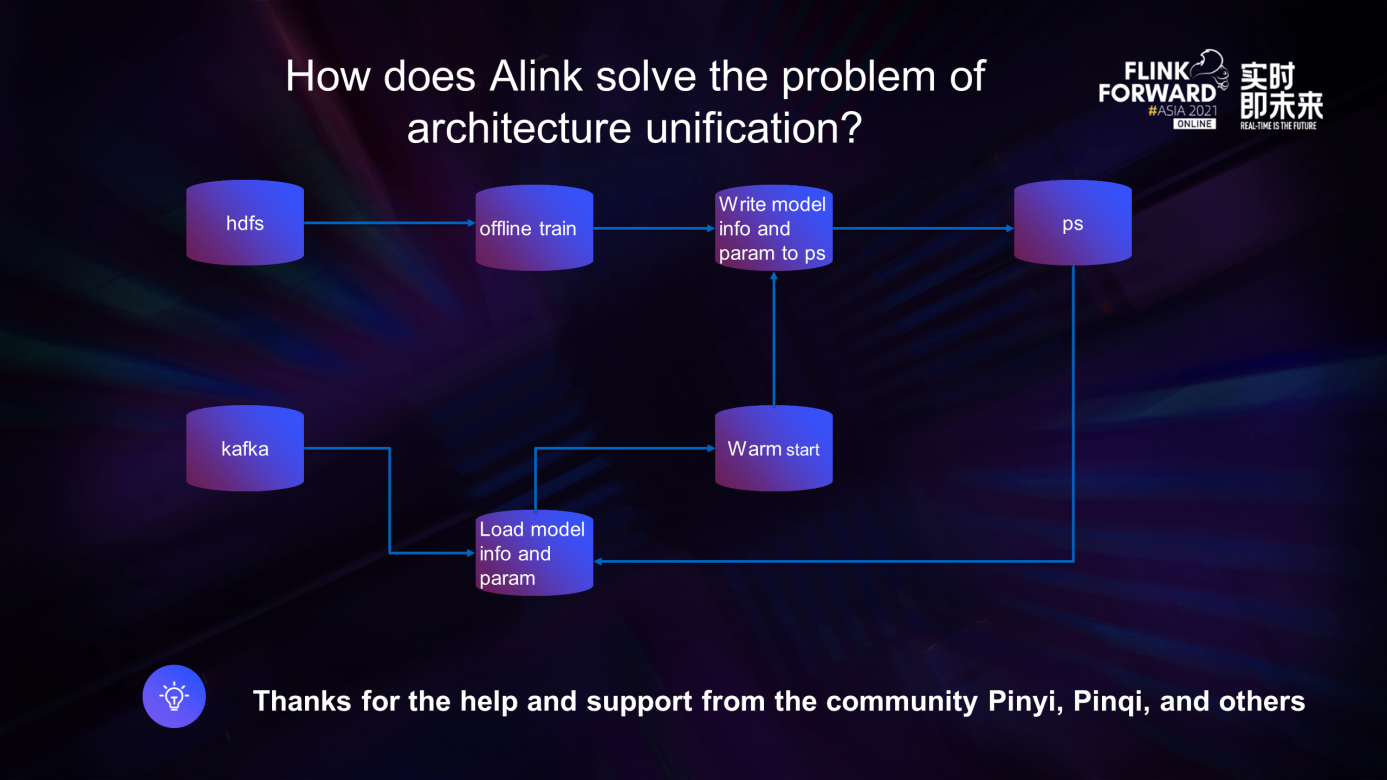

This is a simple online learning flowchart. Let me explain how it is implemented with Alink:

- Offline Training Task: This Alink task loads the training data from HDFS. After feature engineering, the model is trained offline, and the model information and model parameters are written to the parameter server. The task runs daily, for example on the last 28 days of data in each run.

- Real-Time Training Task: This Alink task reads sample data from Kafka. Once enough samples have accumulated (by hour, by minute, or by sample count), it performs mini-batch training. First, it pulls the model parameters and hyperparameters from the parameter server. If there is an estimation requirement, it makes predictions; otherwise, it trains the model and pushes the updated model data back to the parameter server.
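The two tasks can be sketched in plain Python. This is not Alink code: `ParameterServer` is a hypothetical in-memory stand-in for the real PS, logistic regression is only an example model, and only the count-based trigger is shown (hour- or minute-level triggers would flush the buffer on a timer instead).

```python
import math
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[Dict[str, float], int]  # (sparse features, 0/1 label)


class ParameterServer:
    """Hypothetical in-memory stand-in for the parameter server."""

    def __init__(self) -> None:
        self.weights: Dict[str, float] = {}
        self.hyper: Dict[str, float] = {}

    def pull(self, keys: Iterable[str]) -> Dict[str, float]:
        return {k: self.weights.get(k, 0.0) for k in keys}

    def push(self, updates: Dict[str, float]) -> None:
        self.weights.update(updates)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(ps: ParameterServer, feats: Dict[str, float]) -> float:
    w = ps.pull(feats)
    return sigmoid(sum(w[k] * v for k, v in feats.items()))


def train_batch(ps: ParameterServer, batch: List[Sample]) -> None:
    """Pull the weights this batch touches, run SGD for logistic regression, push back."""
    lr = ps.hyper.get("lr", 0.1)
    w = ps.pull({k for feats, _ in batch for k in feats})
    for feats, label in batch:
        p = sigmoid(sum(w[k] * v for k, v in feats.items()))
        for k, v in feats.items():
            w[k] -= lr * (p - label) * v
    ps.push(w)


def offline_train(ps: ParameterServer, history: List[Sample], epochs: int = 5, batch_size: int = 32) -> None:
    """Daily job: train the base model on accumulated history (e.g. the last 28 days)."""
    ps.hyper["lr"] = 0.1
    for _ in range(epochs):
        for start in range(0, len(history), batch_size):
            train_batch(ps, history[start:start + batch_size])


def realtime_train(ps: ParameterServer, stream: Iterable[Sample], trigger_count: int = 4) -> int:
    """Streaming job: buffer samples and train one mini-batch per count trigger."""
    buffer: List[Sample] = []
    batches = 0
    for sample in stream:
        buffer.append(sample)
        if len(buffer) >= trigger_count:
            train_batch(ps, buffer)
            buffer = []
            batches += 1
    return batches  # leftover samples wait for the next trigger
```

Because both tasks read and write the same PS, the streaming task starts from whatever the offline task last produced.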

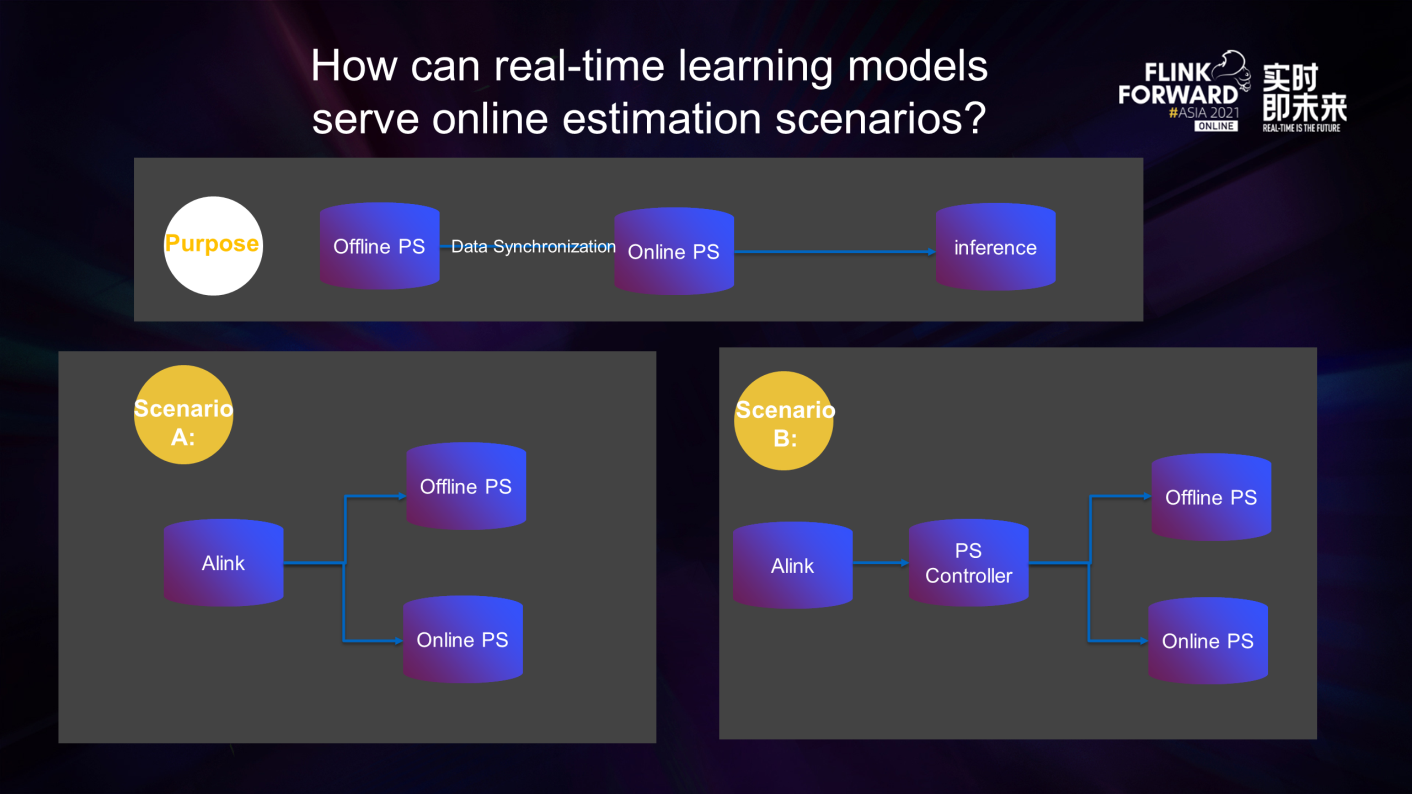

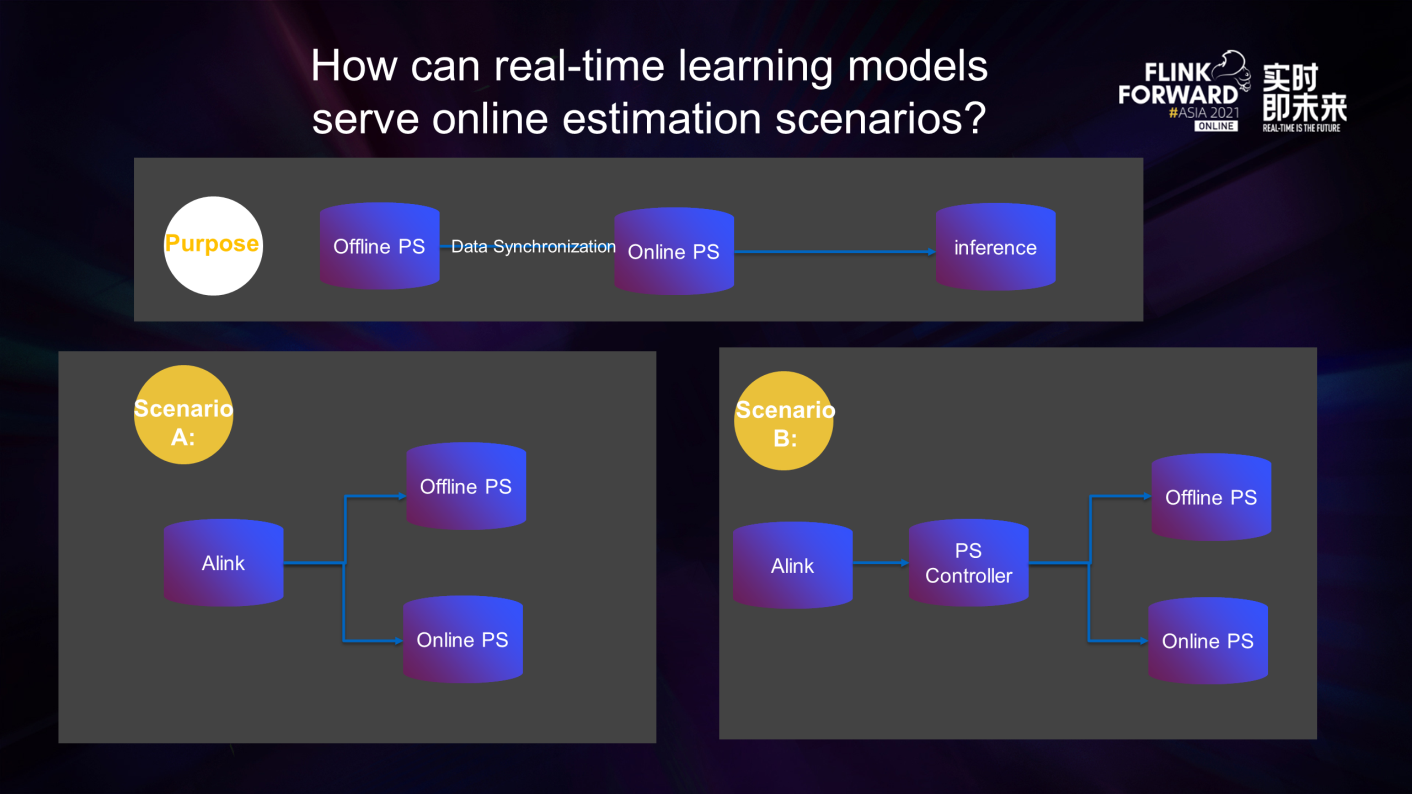

Next, let's look at how a model trained in real time serves online estimation:

- First, real-time training does not change the model structure; it only updates the model parameters.

- Second, the PS used for estimation must be separate from the PS used for training, so the problem becomes how to synchronize data between the training PS and the estimation PS.

There are two implementations in the industry:

- Scenario A: For some small models, the Alink training task pushes the trained parameters to both the offline and the online PS.

- Scenario B: Introduce a role similar to a PS controller, which is responsible for calculating parameters and pushing them to both the offline and the online PS.

Alternatively, the Alink training task can write to the training PS, with a PS-server-like role that synchronizes parameters: parameter updates are also written to a Kafka-like queue, and a separate estimation PS server consumes them from the queue. This keeps the training PS and the estimation PS in sync.

There are many options; choose the one that fits your scenario.
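The queue-based option can be sketched as follows, assuming an append-only list is an acceptable stand-in for a Kafka topic. `UpdateLog`, `TrainingPS`, and `EstimationPS` are hypothetical names. The estimation side tracks its own read offset, so it can fall behind, catch up, or resume after a restart without affecting training.

```python
from typing import Dict, List, Tuple


class UpdateLog:
    """Append-only log standing in for a Kafka topic (hypothetical)."""

    def __init__(self) -> None:
        self.records: List[Tuple[str, float]] = []

    def append(self, key: str, value: float) -> None:
        self.records.append((key, value))

    def read(self, offset: int, max_records: int) -> List[Tuple[str, float]]:
        return self.records[offset:offset + max_records]


class TrainingPS:
    """Training-side PS: every parameter write is also published to the log."""

    def __init__(self, log: UpdateLog) -> None:
        self.params: Dict[str, float] = {}
        self.log = log

    def push(self, key: str, value: float) -> None:
        self.params[key] = value
        self.log.append(key, value)


class EstimationPS:
    """Estimation-side PS: consumes the log from its own offset and applies updates in order."""

    def __init__(self, log: UpdateLog) -> None:
        self.params: Dict[str, float] = {}
        self.log = log
        self.offset = 0

    def poll(self, max_records: int = 1000) -> int:
        batch = self.log.read(self.offset, max_records)
        for key, value in batch:
            self.params[key] = value
        self.offset += len(batch)
        return len(batch)
```

Applying updates in log order means the estimation PS converges to the training PS once it has consumed the whole log.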

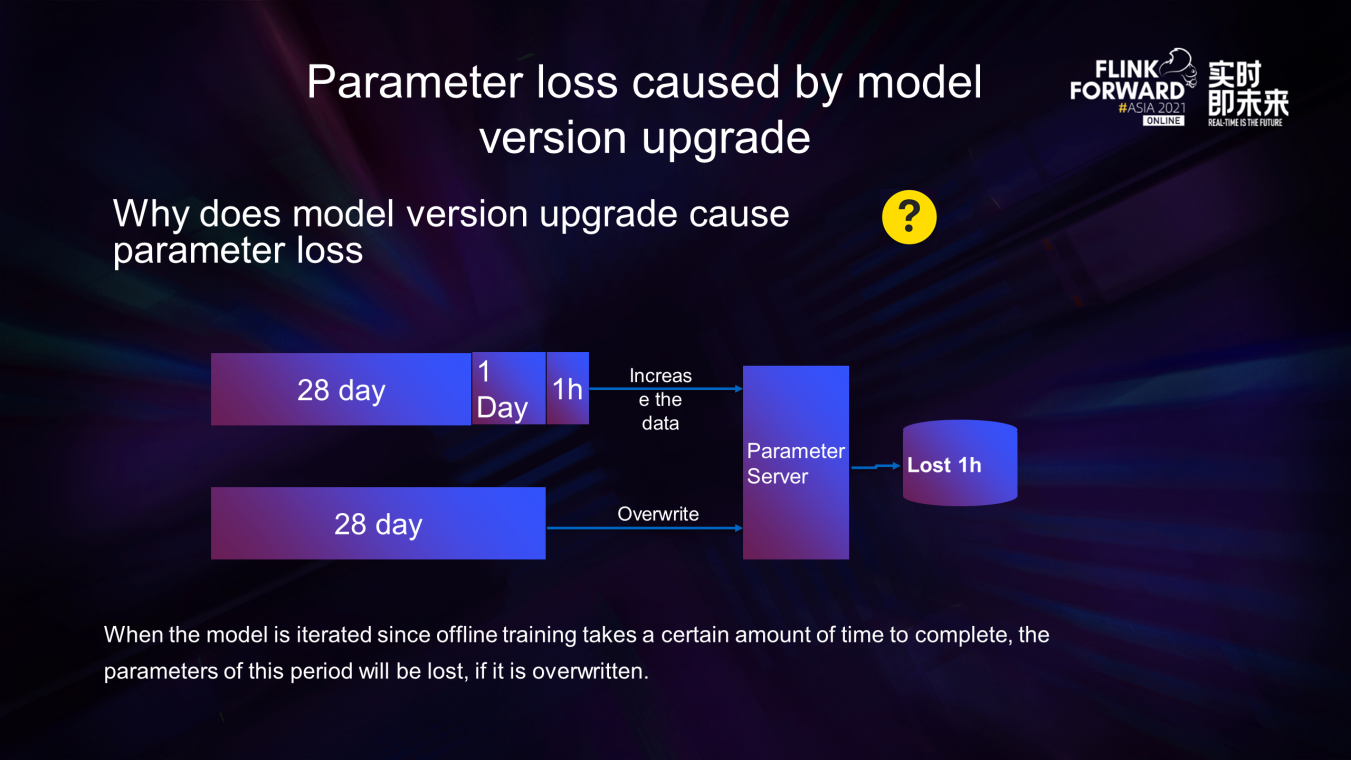

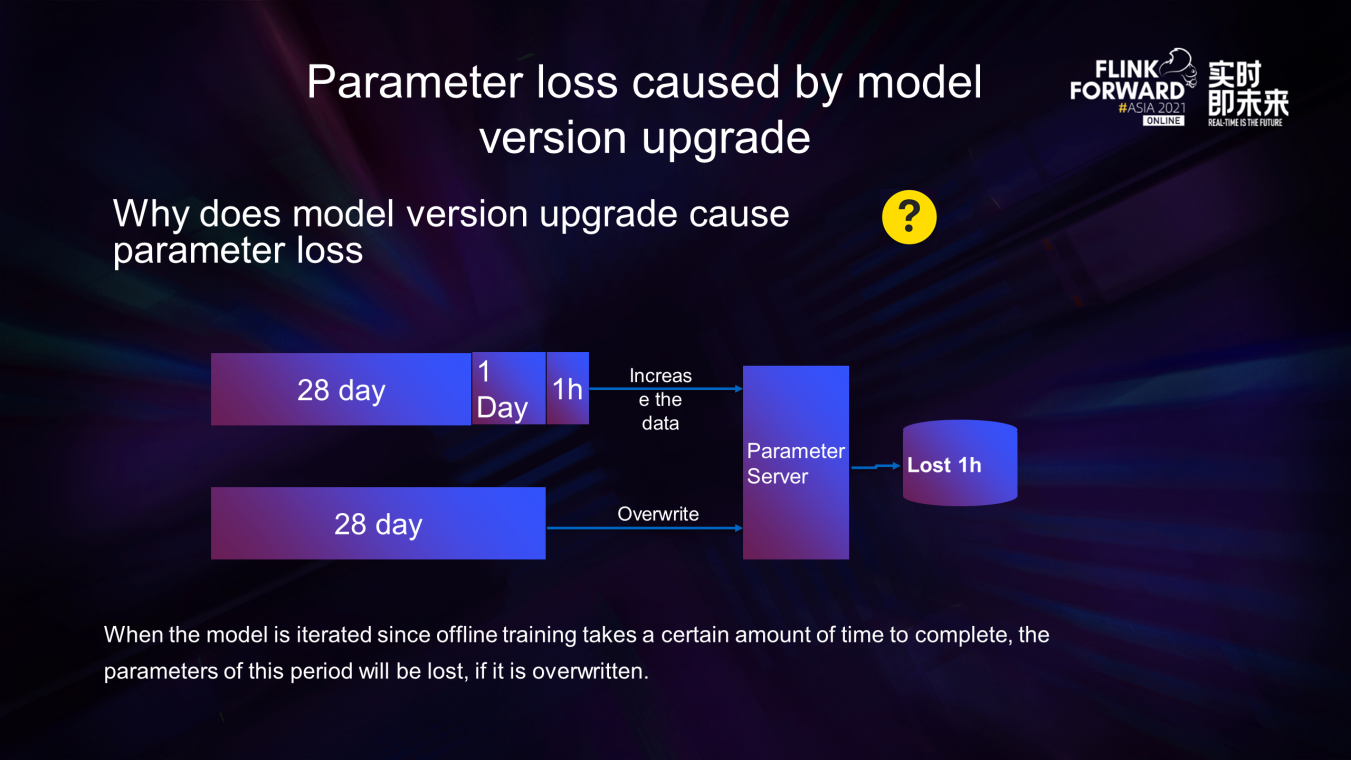

Let's take a look at the parameter loss caused by the model upgrade:

- Suppose a model is trained on the last 28 days of data in the early morning of the 1st, and its parameters are written to the parameter server when training completes. Streaming training then runs from the 1st to the 2nd, writing incremental updates to the parameter server until the early morning of the 2nd.

- In the early morning of the 2nd, the model is again trained on the last 28 days of data. If this training takes 1 hour and its result is then written to the PS, the updates learned during that hour are overwritten. For some time-insensitive models, this is acceptable; at least nothing breaks. However, it causes problems for time series models such as Prophet: because the model parameters miss 1 hour of data, model accuracy may degrade.

In summary, offline training takes time during each model iteration. If its output simply overwrites the PS, the parameters learned during that period are lost. Therefore, we must ensure that the parameters in the PS are continuous in time.
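A toy simulation makes the arithmetic of the 1-hour gap explicit. It is a sketch, not the production logic: the PS state is modeled as the set of data hours its parameters have absorbed (counting only hours since 00:00 on the 1st), and the `replay_window` flag is a hypothetical stand-in for any mechanism that re-applies the increments learned during retraining.

```python
from typing import Set, Tuple


def upgrade(now: int, retrain_hours: int, replay_window: bool) -> Tuple[Set[int], Set[int]]:
    """Toy model: the PS 'state' is the set of data hours its parameters have absorbed.

    `now` is the hour at which daily retraining starts (24 = 00:00 on the 2nd).
    Returns the PS state after the upgrade and the hours that were lost.
    """
    # Streaming training has absorbed every hour before `now`.
    live_ps: Set[int] = set(range(now))
    # Offline retraining starts at `now` and only sees data from before `now`.
    retrained: Set[int] = set(range(now))
    # While retraining runs, streaming training keeps absorbing new hours.
    window = set(range(now, now + retrain_hours))
    live_ps |= window
    expected = set(live_ps)
    # The finished offline model overwrites the PS...
    ps = set(retrained)
    if replay_window:
        # ...unless the increments from the retraining window are applied again afterwards.
        ps |= window
    return ps, expected - ps
```

With a 1-hour retrain starting at hour 24, the lost set is exactly `{24}`; a 3-hour retrain loses `{24, 25, 26}`, which is why longer offline jobs make the gap worse.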

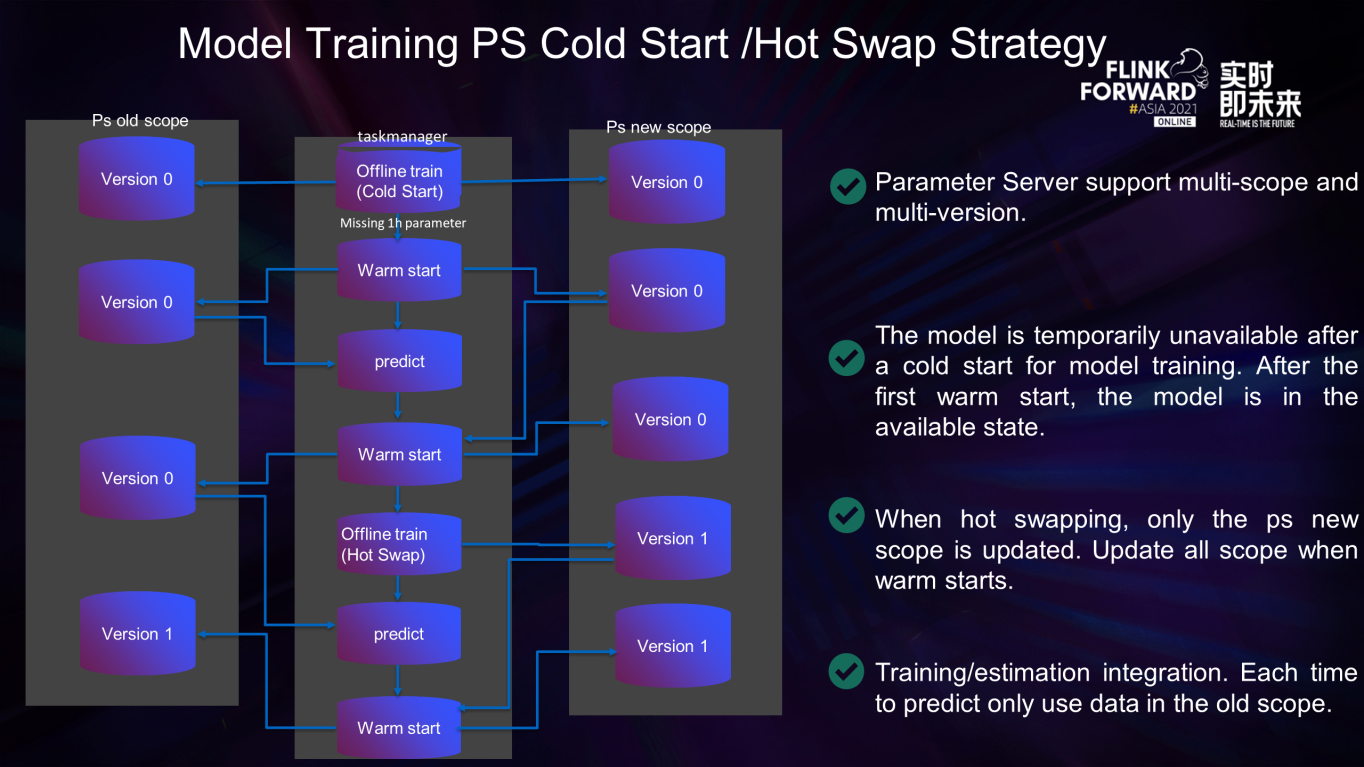

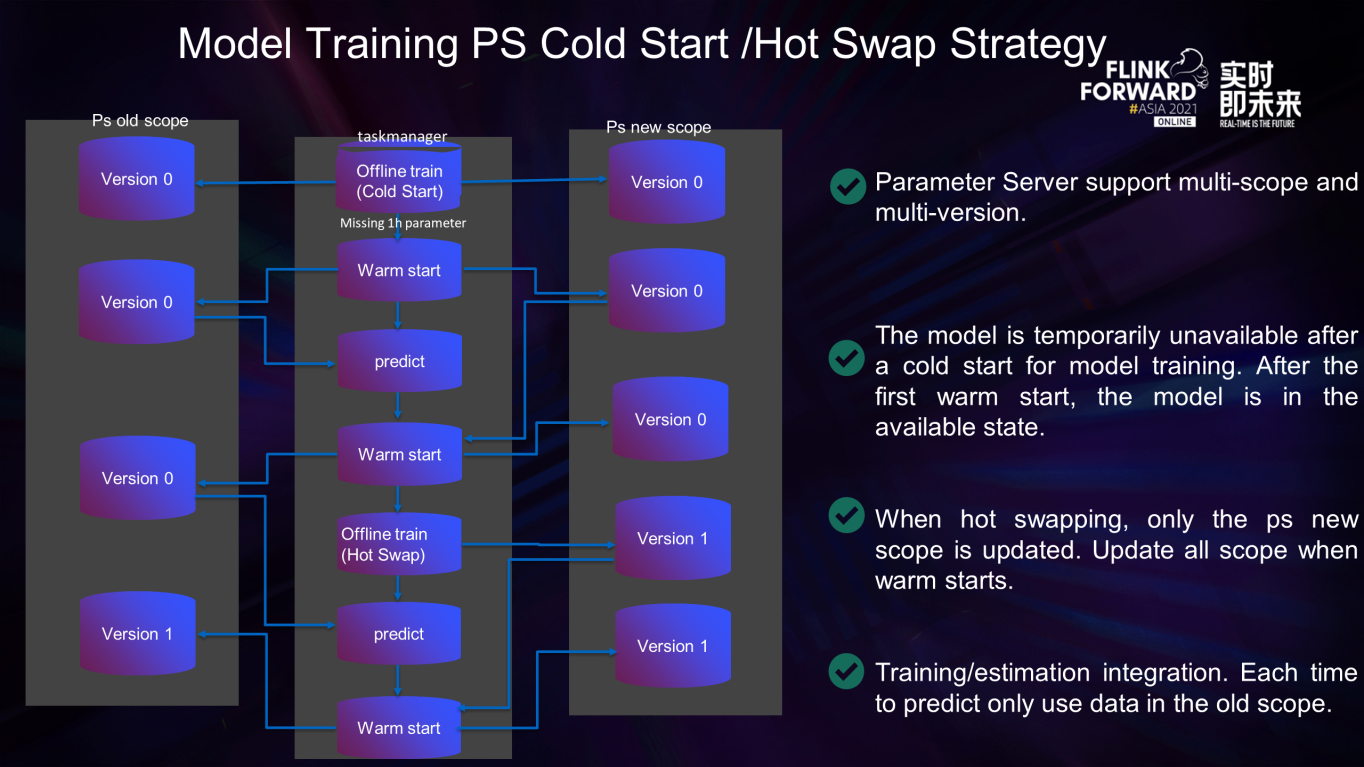

This figure shows the process of the cold start and hot swap of PS:

- After the cold start of model training, the model is temporarily unavailable due to parameter loss until the first warm start.

- The parameter server supports multiple scopes and multiple versions. A hot swap updates only the new PS scope, while a warm start updates all scopes.

- The model pulls data from the old scope for prediction and from the new scope for warm start.

The following is a detailed explanation of the entire link process:

- During a cold start, the offline task takes some time to train the model, so the parameters in the PS lack the data from that period. A warm start fills in the missing parameters and writes them into both the old and the new PS scopes.

- After that, normal prediction and warm start proceed. Prediction pulls only from the old PS scope, because the data in the new scope is overwritten during a hot swap and would be missing parameters; a PS with missing parameters cannot be used for prediction.

- In the early morning of the next day, a hot swap is performed, and only the new PS scope is updated.

- Prediction then continues to pull from the old scope, while warm start pulls from the new scope.
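The scope rules above can be sketched with a tiny two-scope store. This is a sketch under stated assumptions: `ScopedPS` and its helper functions are hypothetical, parameters are plain floats, and warm-start increments are modeled as additive deltas learned from the streaming data.

```python
from typing import Dict

Params = Dict[str, float]


class ScopedPS:
    """Hypothetical PS with two scopes; each write replaces the scope and bumps its version."""

    def __init__(self) -> None:
        self.scopes: Dict[str, Params] = {"old": {}, "new": {}}
        self.versions: Dict[str, int] = {"old": 0, "new": 0}

    def write(self, scope: str, params: Params) -> None:
        self.scopes[scope] = dict(params)
        self.versions[scope] += 1

    def read(self, scope: str) -> Params:
        return dict(self.scopes[scope])


def warm_start(ps: ScopedPS, increments: Params) -> None:
    """Pull from the new scope, apply the missing increments, and write every scope."""
    params = ps.read("new")
    for key, delta in increments.items():
        params[key] = params.get(key, 0.0) + delta
    for scope in ("old", "new"):
        ps.write(scope, params)


def cold_start(ps: ScopedPS, offline_params: Params, missed: Params) -> None:
    """Write the offline result, then warm-start to fill in the training-window gap."""
    ps.write("new", offline_params)
    warm_start(ps, missed)


def hot_swap(ps: ScopedPS, retrained: Params) -> None:
    """The daily retraining result goes to the new scope only; prediction is untouched."""
    ps.write("new", retrained)


def params_for_prediction(ps: ScopedPS) -> Params:
    return ps.read("old")
```

After a hot swap, prediction keeps reading the old scope until the next warm start has filled in the gap and written both scopes.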

Next, let me introduce the pain points of streaming training:

- No failover support: Online training can be interrupted for many reasons (such as network jitter), so an appropriate failover strategy is important. We introduced Flink's failover strategy into our self-developed model training operator to support model failover.

- Appropriate Pretrain Strategy: A model's embedding layer does not need to be pulled from the PS on every training step. The industry commonly builds something like a local PS to store these sparse vectors locally, and this local PS could also be brought into Flink. However, in some scenarios, Flink's state backends can replace the local PS: by integrating the parameter server with Flink state, part of the PS's hot data is loaded into state during initialization or failover to pretrain the model.

- Distribution is hard: Some architectures support distributed execution, but some algorithms do not (such as Facebook's open-source Prophet). Without distribution, processing a large amount of data can take a long time. Alink supports distribution. As an upper-layer algorithm library built on Flink, it has all of Flink's distributed capabilities: it supports all of the Flink master's scheduling policies and even various fine-grained data distribution policies.
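A minimal sketch of the preload idea follows, assuming a dictionary can stand in for Flink state. A real job would keep this data in keyed or operator state managed by a state backend; `RemotePS` and `StateBackedEmbeddings` are hypothetical names, and how hot keys are chosen is left open here.

```python
from typing import Dict, Iterable, List


class RemotePS:
    """Hypothetical remote parameter server; counts pulls so the savings are visible."""

    def __init__(self, table: Dict[str, List[float]]) -> None:
        self.table = table
        self.pulls = 0

    def pull(self, key: str) -> List[float]:
        self.pulls += 1
        return list(self.table[key])


class StateBackedEmbeddings:
    """Stand-in for Flink state holding hot embeddings in place of a separate local PS."""

    def __init__(self, ps: RemotePS) -> None:
        self.ps = ps
        self.state: Dict[str, List[float]] = {}

    def preload(self, hot_keys: Iterable[str]) -> None:
        """Called on initialization or after failover to pretrain from hot PS data."""
        for key in hot_keys:
            self.state[key] = self.ps.pull(key)

    def lookup(self, key: str) -> List[float]:
        """Serve from state; only cold keys go to the remote PS."""
        if key not in self.state:
            self.state[key] = self.ps.pull(key)
        return self.state[key]
```

Repeated lookups of a preloaded key never reach the remote PS, which is the access pressure this strategy aims to remove.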

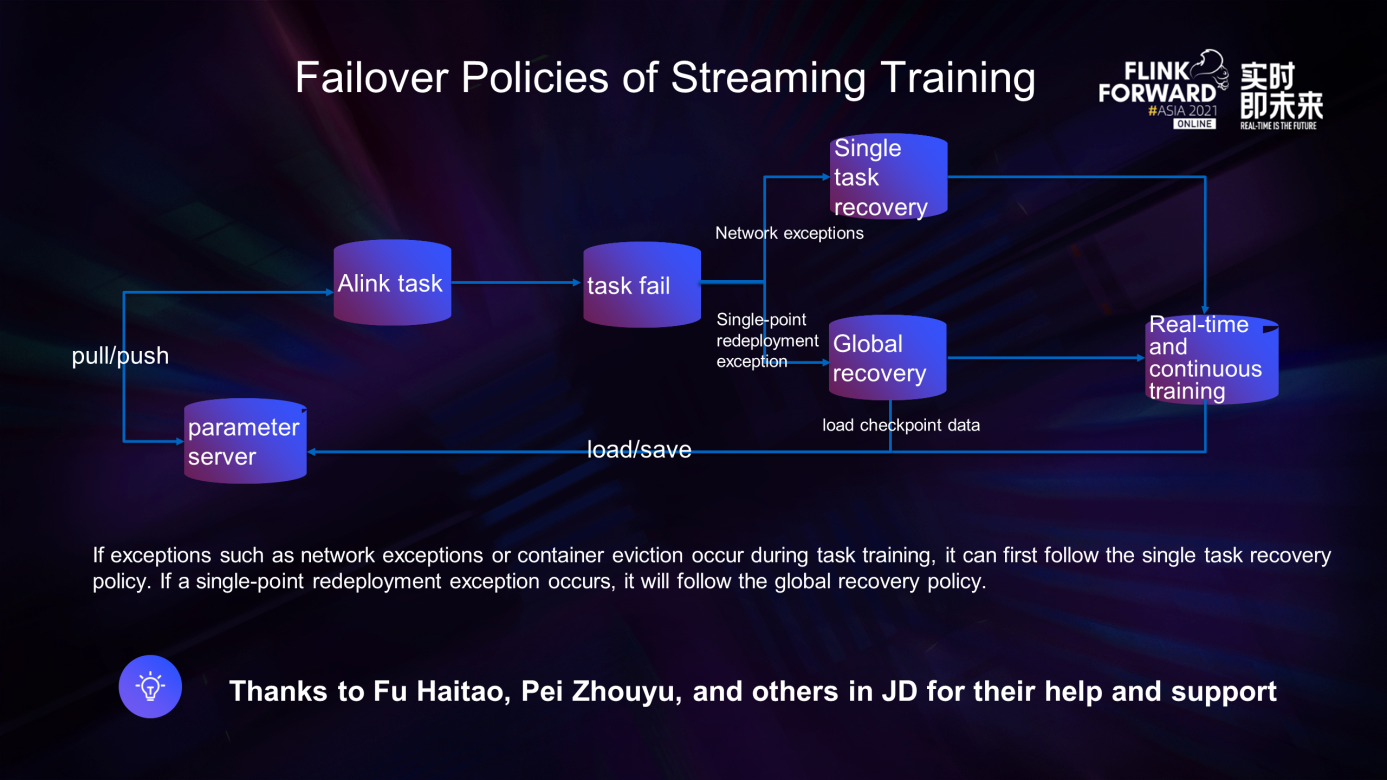

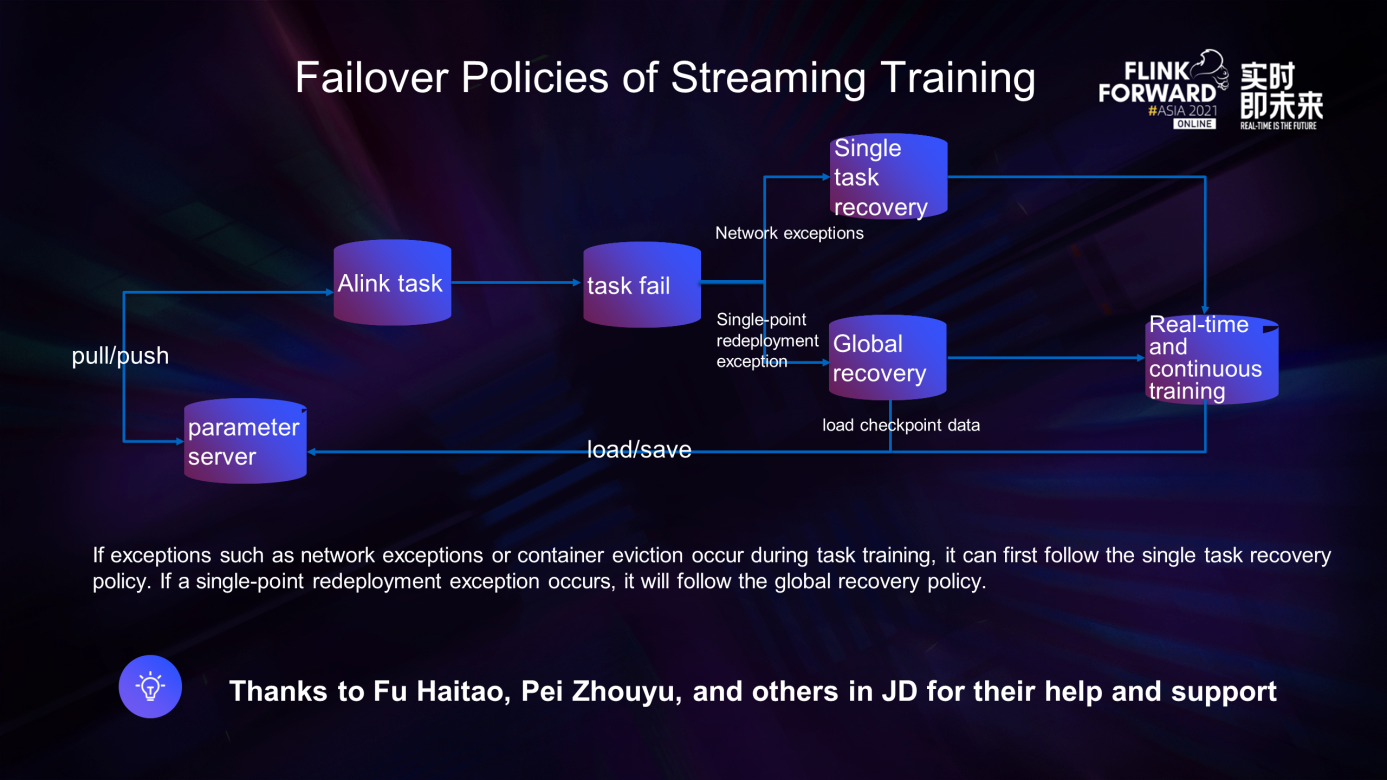

The failover policy for streaming training:

During online distributed training, a machine often becomes abnormal for some reason (such as a network problem). Recovery falls into two cases:

1. Data loss is allowed.

Typical training tasks can tolerate a small amount of data loss, so we are willing to sacrifice some data in exchange for continuous training of the overall task. Introducing a local recovery strategy improves task continuity and prevents a single point of failure caused by external factors from forcing the whole task to recover.

2. Data loss is not allowed.

Here, we discuss the at-least-once case (exactly-once requires the PS to support transactions). If the business has strict data requirements, we can adopt the global failover strategy. Global failover is also used when single-point redeployment fails. In this service, we adopt the local recovery strategy first to keep training continuous.
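The decision rule in the two cases above can be summarized as a small function. It is a sketch, not Flink or Alink API: `restart_task` and `restart_job` are hypothetical callbacks, and a `RuntimeError` stands in for a single-point redeployment exception.

```python
from enum import Enum
from typing import Callable


class Recovery(Enum):
    SINGLE_TASK = "restart only the failed TaskManager"
    GLOBAL = "fail over the whole job from the last checkpoint"


def recover(
    data_loss_allowed: bool,
    restart_task: Callable[[], None],
    restart_job: Callable[[], None],
) -> Recovery:
    """Prefer local recovery when data loss is tolerable; otherwise, or when it fails, go global."""
    if data_loss_allowed:
        try:
            restart_task()
            return Recovery.SINGLE_TASK
        except RuntimeError:
            pass  # single-point redeployment failed: escalate to global failover
    restart_job()
    return Recovery.GLOBAL
```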

The following describes the restart strategy of the training task in detail:

- Global Recovery: This is the failover concept commonly used in Flink.

- Single Task Recovery: Suppose a TaskManager hits a heartbeat timeout because of a network exception. Normally, the Flink job fails over and recovers from the last checkpoint to ensure data consistency. However, if losing a small amount of data is acceptable and the task should keep producing output, you can enable local recovery. In that case, the task only restarts the TaskManager, which keeps training continuous.

- Single-Point Redeployment Exception: If an exception occurs while a failed task is being recovered at a single point, single-point recovery fails and the redeployment exception cannot be resolved locally. The only option is to fail over the whole job, which can be configured to recover from the checkpoint or not.

- Here, I will focus on recovering from a checkpoint during task failover. When the task fails, it saves a snapshot of the current PS state along with the data in the Flink state backend. When the task recovers, the load method is executed to restore the PS. On closer inspection, this causes some parameters to be trained repeatedly, because the checkpoint (cp) time does not match the time the PS snapshot is saved. Keep this in mind.
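A toy count shows where the repeated training comes from. This is an illustration, not the recovery code: sample ids are integers, the PS snapshot is assumed to be taken at failure time (after the last checkpoint), and `trained_counts` is a hypothetical helper that tallies how often each sample is trained.

```python
from collections import Counter
from typing import Sequence


def trained_counts(stream: Sequence[int], checkpoint_offset: int, ps_save_offset: int) -> Counter:
    """Count how often each sample id is trained across one failure and recovery.

    Assumes ps_save_offset >= checkpoint_offset: the PS snapshot is taken at failure
    time, after the last checkpoint.
    """
    counts: Counter = Counter()
    # Before the failure, every sample up to the PS save point is trained once,
    # and its effect is captured in the PS snapshot.
    for sample in stream[:ps_save_offset]:
        counts[sample] += 1
    # On recovery, the PS is loaded from that snapshot, but the source rewinds to
    # the last checkpoint and replays from there.
    for sample in stream[checkpoint_offset:]:
        counts[sample] += 1
    return counts
```

With a checkpoint at offset 5 and the PS saved at offset 8, samples 5, 6, and 7 are trained twice; when the two points coincide, every sample is trained exactly once.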

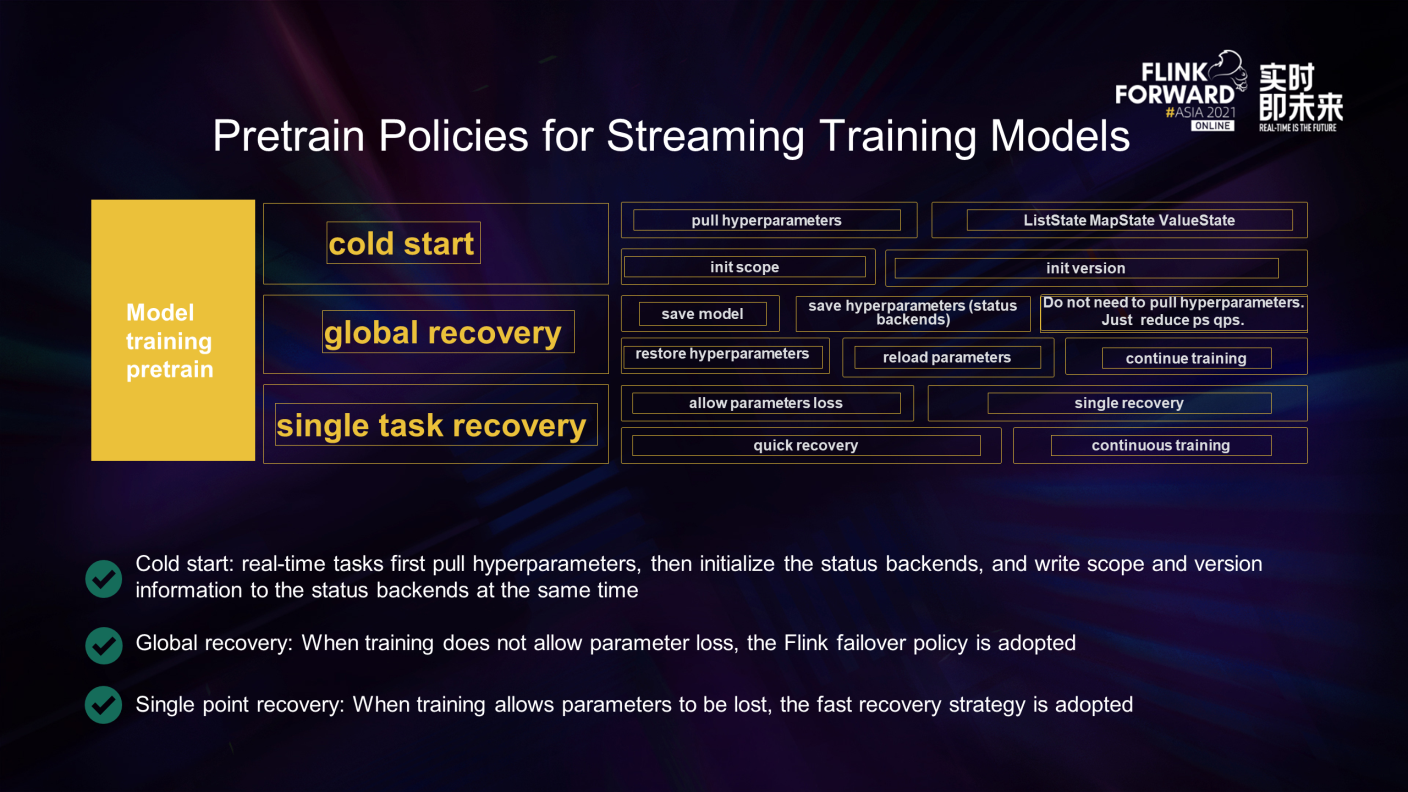

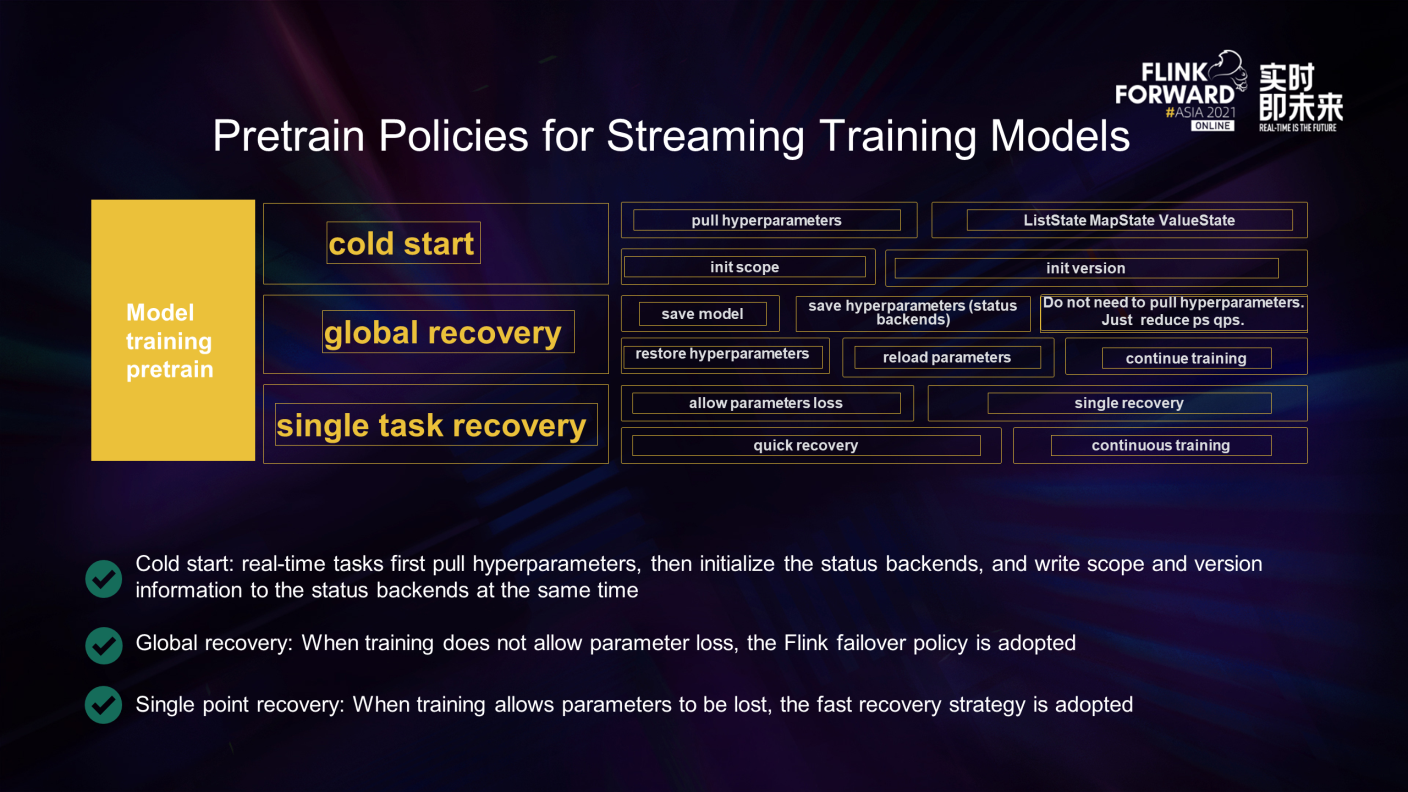

The pretrain policies for Alink-based streaming training fall into three modes: cold start, global recovery, and single-point recovery:

- During a cold start, the task first pulls the model parameters and hyperparameters from the PS. It then initializes state such as ListState, MapState, and ValueState, and initializes the PS scope and version information at the same time.

- Global recovery is Flink's default failover strategy. In this mode, the task first saves the model, which means serializing the model information in the PS to disk, and then saves the data in the Flink task's state backend. On initialization, it does not pull hyperparameters and other information from the PS; instead, it recovers the hyperparameters from the state backend and reloads the model parameters to continue training.

- Single Task Recovery: This mode tolerates the loss of a small amount of data to keep the task producing output. In this mode, the task only restarts the TaskManager (TM), which keeps training as stable and continuous as possible.
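The three modes can be sketched as one initialization function. Everything here is hypothetical: the dictionaries stand in for the PS and the on-disk model snapshot, `TaskState` stands in for Flink state, and the single-task branch's behavior (keeping restored state and re-pulling parameters from the still-running PS) is our assumption, since the talk only says that the TM is restarted.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Dict, Optional, Tuple


class Mode(Enum):
    COLD_START = "cold_start"
    GLOBAL_RECOVERY = "global_recovery"
    SINGLE_TASK_RECOVERY = "single_task_recovery"


@dataclass
class TaskState:
    """Stand-in for Flink state (ListState, MapState, ValueState, ...)."""
    hyper: Dict[str, float] = field(default_factory=dict)
    scope: str = ""
    version: int = 0


def pretrain(
    mode: Mode,
    ps: Dict[str, Any],
    disk_snapshot: Dict[str, Any],
    restored: Optional[TaskState],
) -> Tuple[Dict[str, float], TaskState]:
    """Return (model parameters, task state) to start training from."""
    if mode is Mode.COLD_START:
        # Pull parameters and hyperparameters from the PS, then initialize state,
        # including the PS scope and version information.
        state = TaskState(hyper=dict(ps["hyper"]), scope=ps["scope"], version=ps["version"])
        return dict(ps["params"]), state
    if restored is None:
        raise ValueError("recovery modes need restored task state")
    if mode is Mode.GLOBAL_RECOVERY:
        # Hyperparameters come from the state backend; parameters are reloaded
        # from the model that was serialized to disk before the failover.
        return dict(disk_snapshot["params"]), restored
    # SINGLE_TASK_RECOVERY (assumption): the restarted TM keeps its restored
    # state and re-pulls the current parameters from the still-running PS.
    return dict(ps["params"]), restored
```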

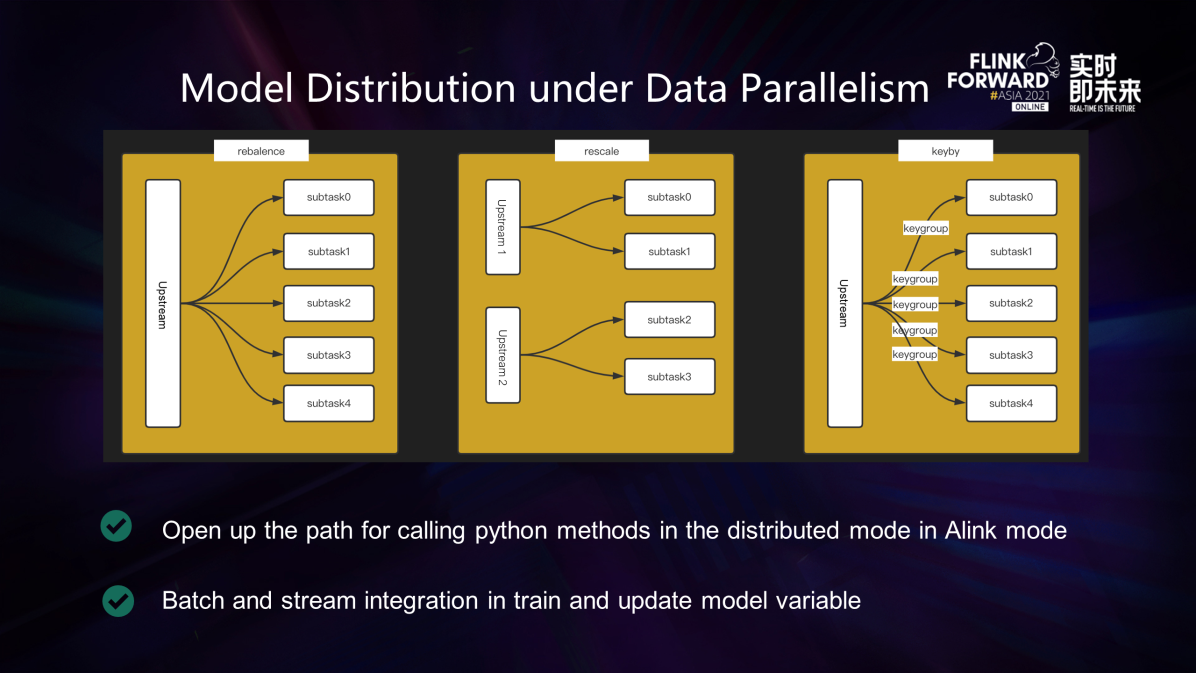

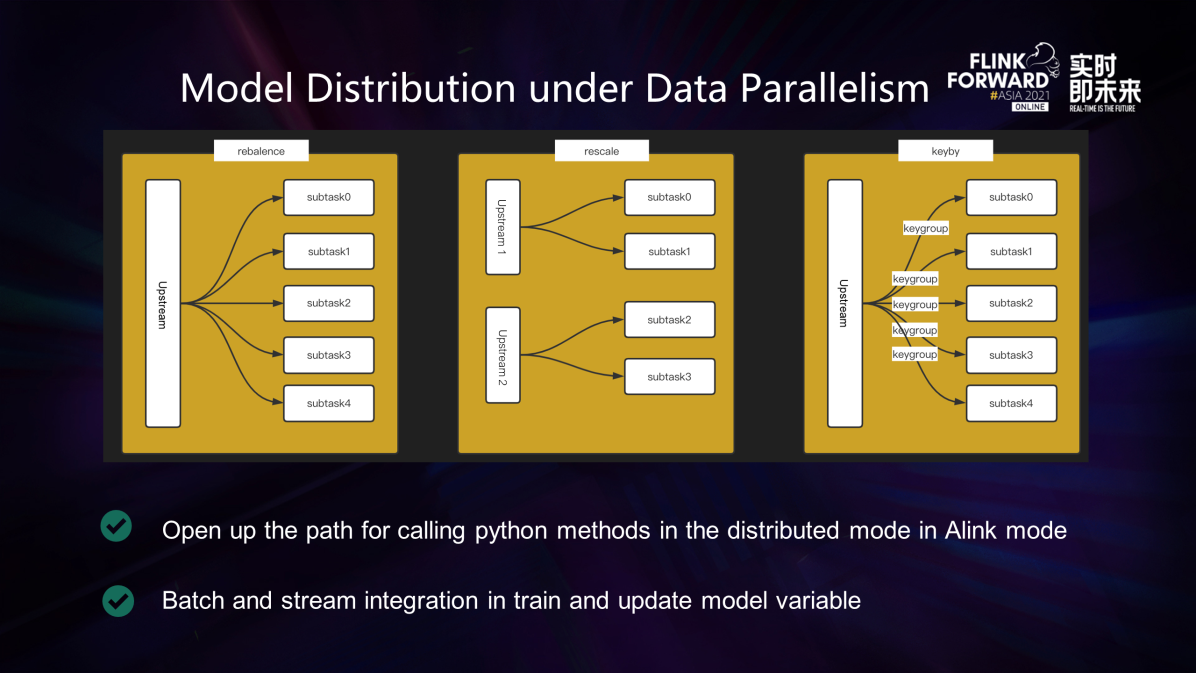

- Data parallelism is the most basic and important component of the currently popular 3D and 5D parallelism architectures.

- Flink's basic data distribution strategies include rebalance, rescale, hash, and broadcast, and users can implement their own StreamPartitioner to control data distribution freely. This allows flexible data parallelism (such as load balancing) that solves the problem of model parameter updates waiting on each other because of data skew.

- In this mode, we enabled distributed calls to Python methods in Alink, which maximizes the efficiency of data parallelism.

- Data parallelism is independent of whether the data is a stream or a batch. We integrate Alink's mapper component to unify batch and streaming training and the updating of model variables.
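The effect of these distribution strategies on skewed data can be sketched with plain Python functions that imitate their semantics. This is not Flink's API (in a real job, this logic would live in a custom partitioner), rescale is omitted, the round-robin here starts at channel 0 for simplicity, and `least_loaded` with its per-record `cost` function is a hypothetical example of a load-balancing strategy.

```python
import zlib
from itertools import count
from typing import Callable, Iterable, List

# A partitioner maps a record key to the list of downstream channels that receive it.
Partitioner = Callable[[str], List[int]]


def rebalance(n: int) -> Partitioner:
    """Round-robin over all channels, ignoring the key."""
    counter = count()
    return lambda key: [next(counter) % n]


def hash_partition(n: int) -> Partitioner:
    """Same key, same channel (crc32 is deterministic across processes)."""
    return lambda key: [zlib.crc32(key.encode()) % n]


def broadcast(n: int) -> Partitioner:
    """Every channel receives every record."""
    return lambda key: list(range(n))


def least_loaded(n: int, cost: Callable[[str], float]) -> Partitioner:
    """Custom load-balancing partitioner: send each record to the least-loaded channel."""
    load = [0.0] * n

    def pick(key: str) -> List[int]:
        target = min(range(n), key=load.__getitem__)
        load[target] += cost(key)
        return [target]

    return pick


def channel_loads(keys: Iterable[str], partitioner: Partitioner, n: int, cost: Callable[[str], float]) -> List[float]:
    """Total work each downstream channel receives."""
    loads = [0.0] * n
    for key in keys:
        for channel in partitioner(key):
            loads[channel] += cost(key)
    return loads
```

With alternating heavy (cost 3) and light (cost 1) records on two channels, round-robin sends all heavy records to one channel (150 vs. 50), while the greedy least-loaded strategy keeps the gap within one record's cost.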

4. TensorFlow on Flink Application

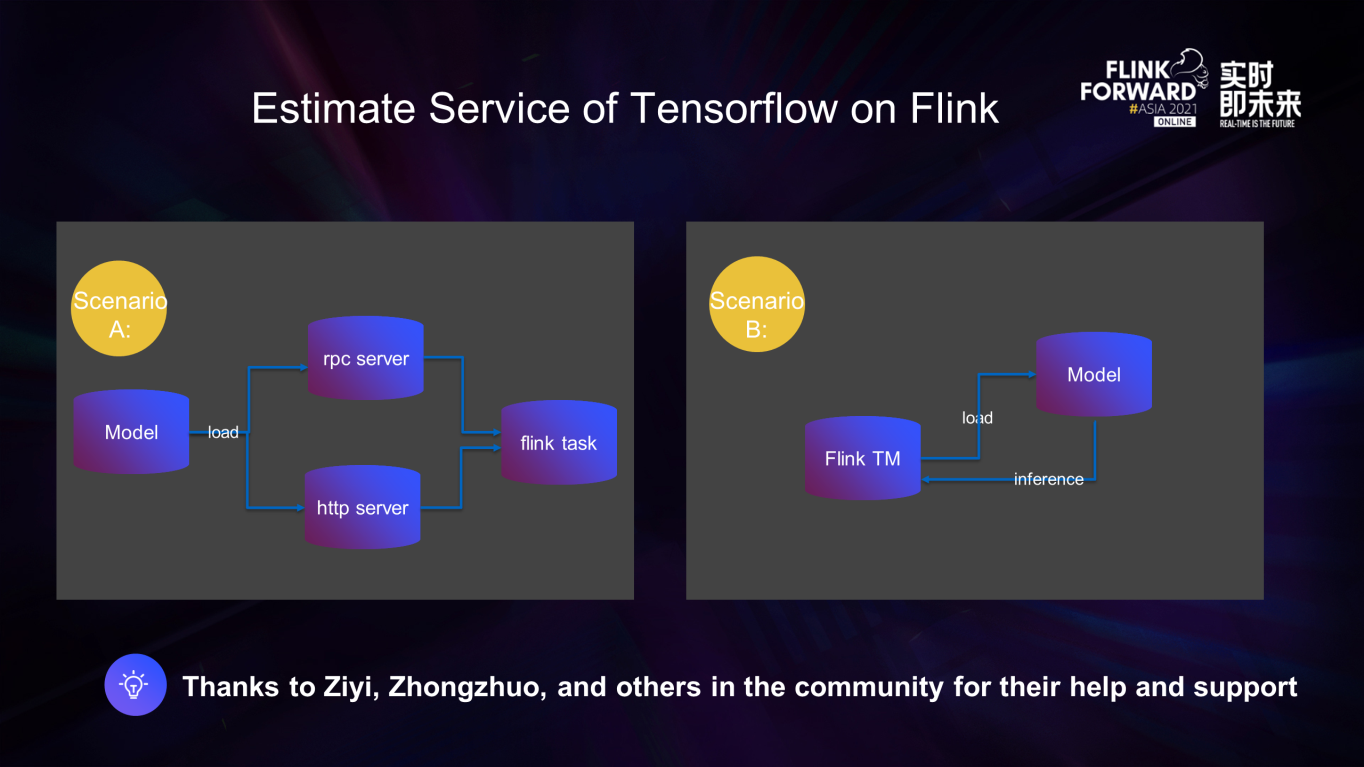

Let me introduce the differences between the TensorFlow on Flink estimation service and traditional online estimation pipelines:

- Unlike online estimation, real-time/offline estimation does not require the service to run all the time, so loading the model into the TM saves maintenance labor and resource costs.

- In the traditional setup, differing architectures across the pipeline force teams to maintain separate systems and code structures for data modeling, data processing, model training, and model inference.

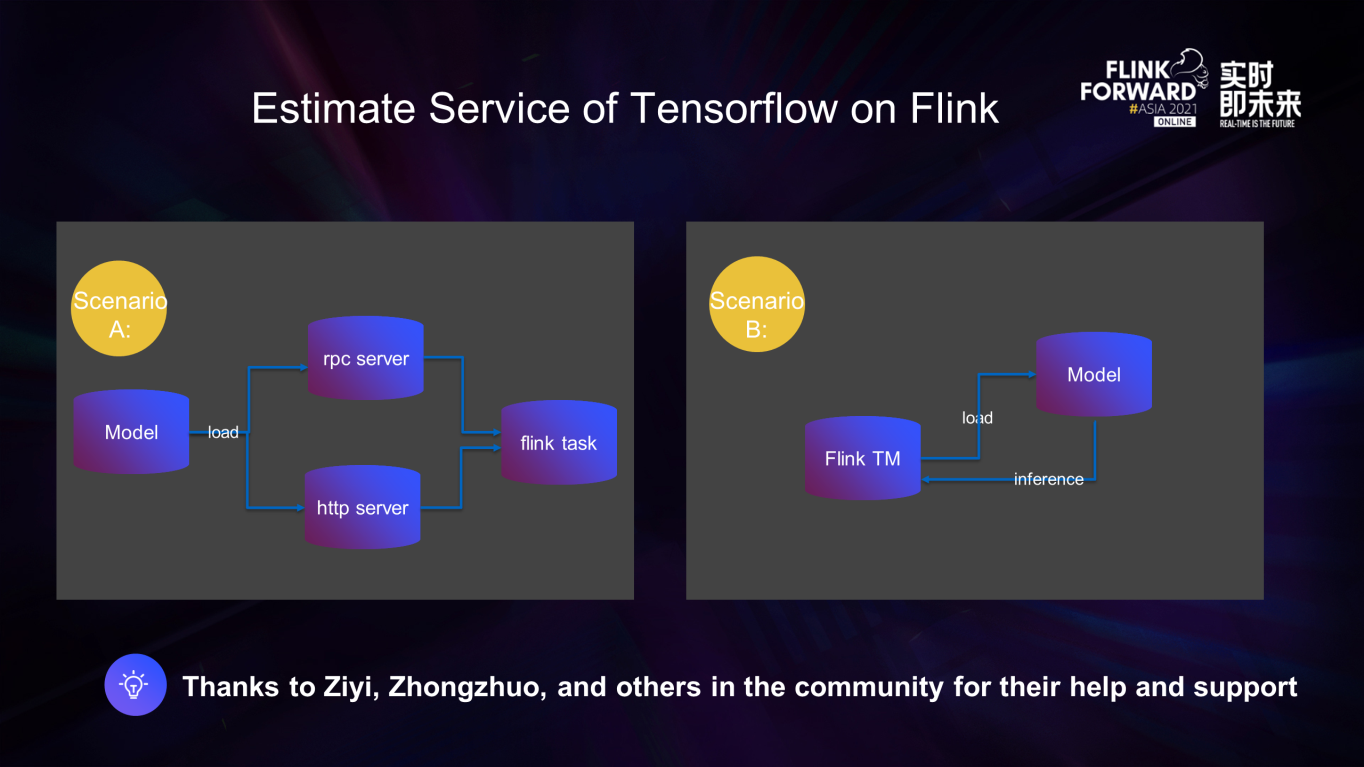

The TensorFlow on Flink estimation service can currently be implemented in two ways:

- Scenario A: Deploy an RPC or HTTP server, and have Flink call it as an RPC or HTTP client.

- Scenario B: Load the TensorFlow model into the Flink TM and call it directly.

Scenario A has the following disadvantages:

- An RPC or HTTP server requires extra maintenance manpower.

- Unlike online estimation, real-time/offline estimation does not need the RPC or HTTP server to run all the time; it only needs to start and stop with the task. Adopting this architecture therefore adds maintenance costs.

- It does not solve the architecture unification problem. The RPC or HTTP server and the real-time/offline data processing are not in the same system, which brings back the architectural inconsistency emphasized earlier.
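Scenario B can be sketched with the open/map/close lifecycle of Flink's rich functions. This is a plain-Python imitation, not Flink or TensorFlow code: `load_model` is a hypothetical loader that returns a toy linear scorer where a real job would load a TensorFlow SavedModel, and `models/ctr` is a made-up path.

```python
from typing import Callable, Dict, List, Optional

Features = Dict[str, float]
Model = Callable[[Features], float]


def load_model(path: str) -> Model:
    """Hypothetical loader; a real job would load a TensorFlow SavedModel from `path`."""
    weights = {"x1": 0.5, "x2": -0.25}
    return lambda feats: sum(weights.get(k, 0.0) * v for k, v in feats.items())


class InTaskPredictor:
    """Mirrors the open/map/close lifecycle of a Flink rich function."""

    def __init__(self, model_path: str) -> None:
        self.model_path = model_path
        self.model: Optional[Model] = None
        self.loads = 0

    def open(self) -> None:
        # Load once per task instance, not once per record.
        self.model = load_model(self.model_path)
        self.loads += 1

    def map(self, feats: Features) -> float:
        if self.model is None:
            raise RuntimeError("open() must be called before map()")
        return self.model(feats)  # in-process call: no RPC/HTTP hop

    def close(self) -> None:
        # The model lives and dies with the task; there is no server to keep running.
        self.model = None


def run_task(records: List[Features], model_path: str = "models/ctr") -> List[float]:
    predictor = InTaskPredictor(model_path)
    predictor.open()
    try:
        return [predictor.map(r) for r in records]
    finally:
        predictor.close()
```

The model's lifetime is tied to the task, which is exactly the property the Scenario A server cannot offer without extra maintenance.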

5. Planning

- Use Flink SQL to train models with unified batch and stream processing, making model training more convenient.

- Extend the TensorFlow Inference on Flink implementation to support large models with PS-based dynamic embedding storage. Search, recommendation, and similar business scenarios have a large number of ID features, which are usually represented as embeddings. These embeddings can grow under certain circumstances and consume most of the TaskManager's memory. Moreover, native TensorFlow variables are inconvenient here: their dimensions must be specified in advance, and they do not support dynamic expansion. Therefore, we plan to replace the embedded parameter server with our self-developed PS to support distributed serving at the scale of hundreds of billions.

- Dynamically load embeddings from the PS into TaskManager state to reduce access pressure on the PS. Flink's keyBy operation hashes each key to a fixed subtask, so the embeddings for those keys can be cached in that subtask's state, further reducing access pressure on the PS.
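The last two plans can be sketched together. Everything here is hypothetical: `DynamicEmbeddingTable` stands in for the self-developed PS, crc32 modulo the parallelism stands in for Flink's key-group assignment, and plain dictionaries stand in for TaskManager state. The table grows one row per new ID instead of requiring a fixed shape up front, and routing by key means each embedding is fetched by only one subtask.

```python
import random
import zlib
from typing import Dict, List, Sequence


class DynamicEmbeddingTable:
    """Hypothetical PS-side table: a row is created on first access, so unlike a
    fixed-shape TensorFlow variable, no vocabulary size is declared up front."""

    def __init__(self, dim: int, seed: int = 0, scale: float = 0.01) -> None:
        self.dim, self.seed, self.scale = dim, seed, scale
        self.rows: Dict[str, List[float]] = {}
        self.lookups = 0  # remote accesses, to measure PS pressure

    def lookup(self, feature_id: str) -> List[float]:
        self.lookups += 1
        row = self.rows.get(feature_id)
        if row is None:
            # Deterministic per-id initialization so every replica agrees.
            rng = random.Random(self.seed * 1_000_003 + zlib.crc32(feature_id.encode()))
            row = [rng.uniform(-self.scale, self.scale) for _ in range(self.dim)]
            self.rows[feature_id] = row
        return row


def subtask_for(key: str, parallelism: int) -> int:
    """Stand-in for keyBy routing: the same key always lands on the same subtask."""
    return zlib.crc32(key.encode()) % parallelism


def serve(keys: Sequence[str], ps: DynamicEmbeddingTable, parallelism: int, key_by: bool) -> None:
    """Look up embeddings through per-subtask state caches in front of the PS."""
    caches: List[Dict[str, List[float]]] = [{} for _ in range(parallelism)]
    for i, key in enumerate(keys):
        sub = subtask_for(key, parallelism) if key_by else i % parallelism
        if key not in caches[sub]:
            caches[sub][key] = ps.lookup(key)
```

For 24 records over 3 distinct keys and 4 subtasks, key-based routing costs 3 PS lookups, while round-robin routing makes every subtask fetch every key (12 lookups).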

6. Acknowledgment

I want to thank all colleagues on the Flink Optimization Team of the JD Data and Intelligence Department for their help and support. Thanks to all our colleagues in the Alink community for their help and support. Thanks to all our colleagues on the Flink Ecosystem Technical Team of the Alibaba Cloud Computing Platform Division for their help and support.
