By Zhiwen

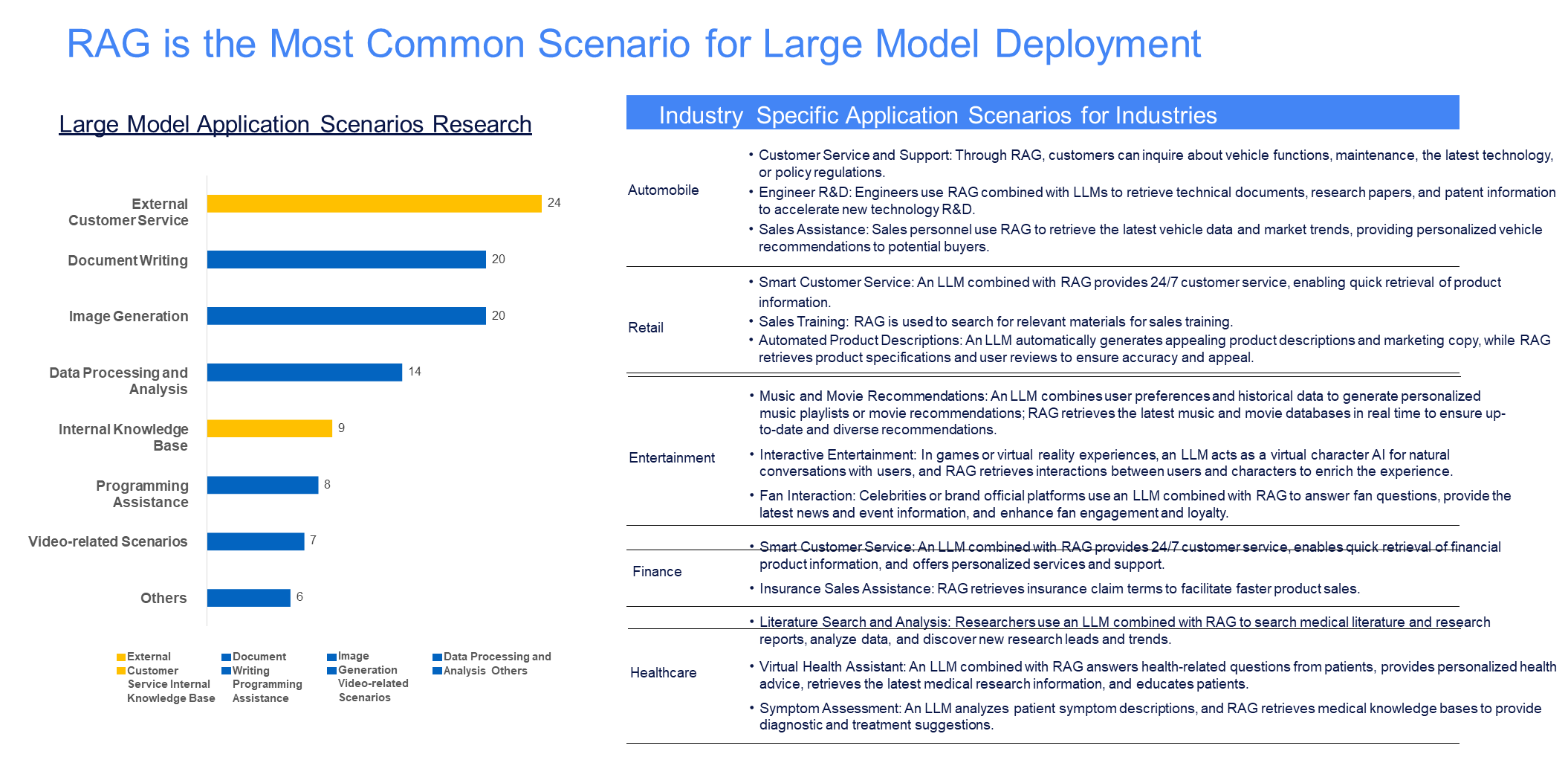

Putting large models into production raises several problems, mainly in the following areas:

• Lack of vertical domain knowledge: Although large models compress a large amount of human knowledge, they have clear limitations in vertical scenarios, which requires specialized services to solve specific problems.

• Hallucinations and high entry barrier: There are issues with hallucinations and compliance in the use of large models, making them difficult to implement effectively. In addition, there is insufficient supporting work and a lack of ready-made solutions for managing unstructured text, testing, operations, and management.

• Redundant development efforts: Business teams work in isolation and cannot accumulate shared assets, so the same low-level work is repeated, resulting in low ROI and inefficiency for companies.

From an application perspective, there is a need for a technical solution that can effectively address the shortcomings of large models in the vertical domain, so as to lower the entry barrier, increase efficiency, and leverage economies of scale.

Currently, RAG is a relatively effective solution to address the aforementioned issues (platform capabilities, out-of-the-box vertical private-domain data).

Getting the basic features of RAG working isn't too challenging, but truly optimizing RAG performance requires substantial effort. Here, based on my personal understanding, I summarize some common RAG optimization methods as a reference for future RAG implementations.

• First, I introduce a few papers on RAG optimization.

• Then, I describe some common engineering practices for RAG.

I hope this provides some useful insights. Going forward, I will focus more on RAG and look forward to engaging in more discussions with you all.

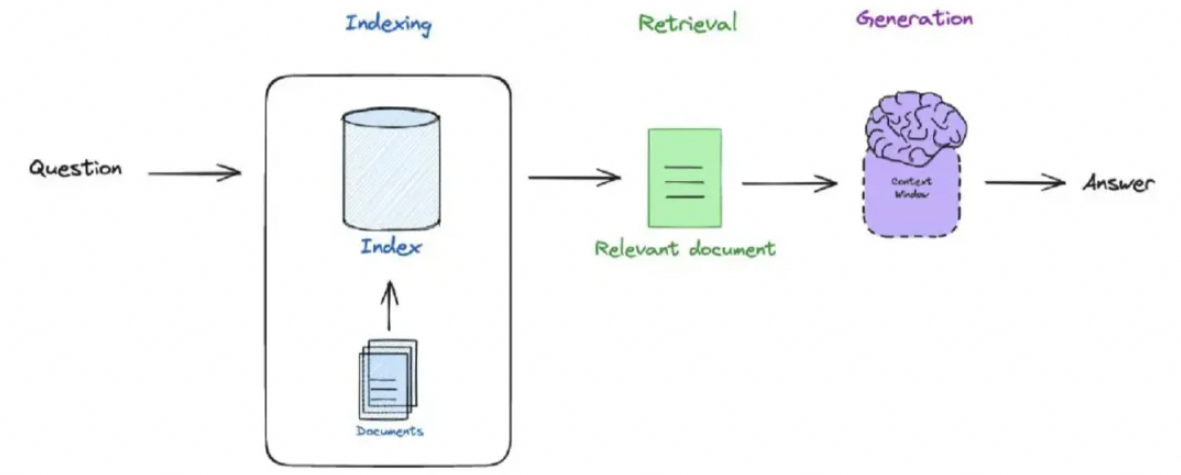

RAG stands for Retrieval-Augmented Generation, which combines retrieval systems and generative models to enhance the accuracy and relevance of language generation.

The strength of RAG lies in its ability to incorporate external knowledge during response generation, making the content more accurate and informative. This is particularly effective for handling questions that require specialized knowledge or extensive background information. With the development of large language models (LLMs), RAG technology is also evolving to accommodate longer contexts and more complex queries.

• Currently, most companies prefer RAG for information retrieval because retrieving from a vector database costs less than feeding long texts into the model's context.

• When applying RAG, some companies fine-tune embedding models to improve retrieval; others opt for RAG methods that do not rely on a vector database, such as knowledge graphs or Elasticsearch (ES).

• Most third-party individual and enterprise developers use pre-built RAG frameworks such as LlamaIndex and LangChain, or the built-in RAG tools available in LLMOps platforms.

Paper address: https://arxiv.org/pdf/2401.18059

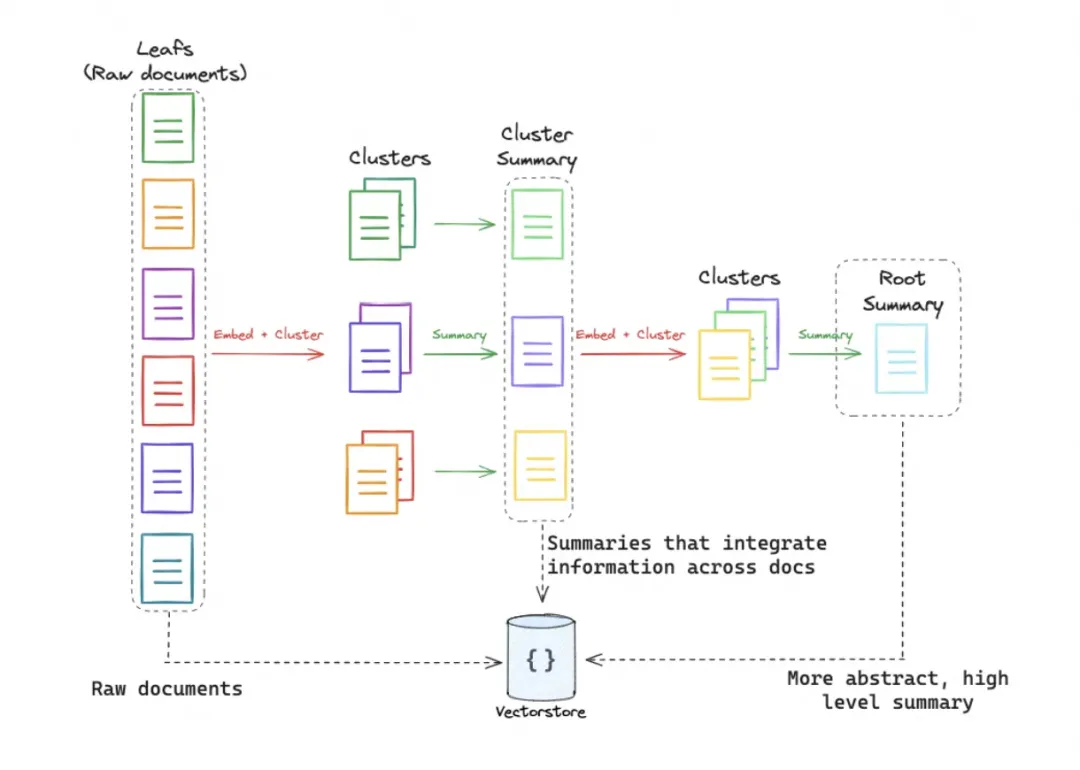

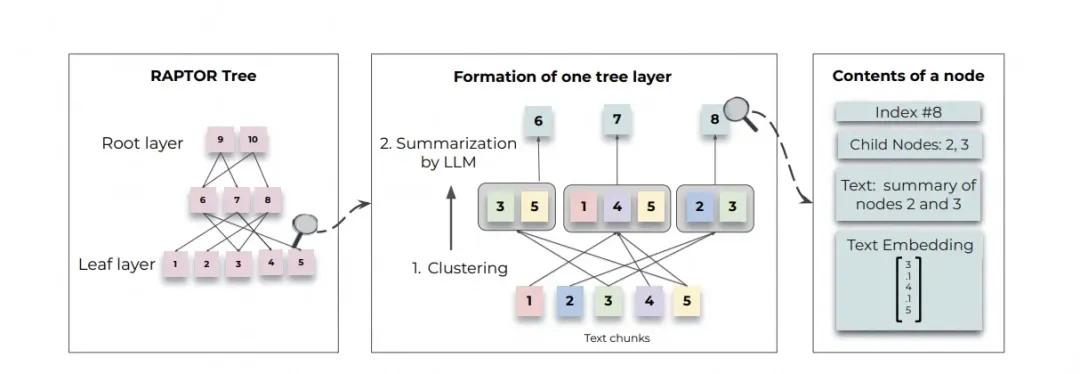

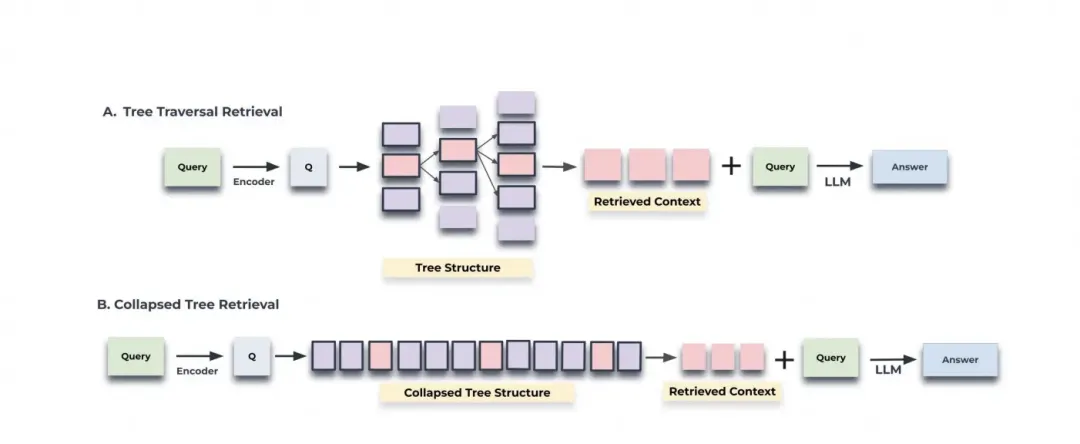

Traditional RAG methods typically retrieve only short, contiguous text segments, limiting the comprehensive understanding of the overall document context. RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) builds a bottom-up tree structure by recursively embedding, clustering, and summarizing text segments, from which it retrieves information at inference time, integrating information from long documents at different levels of abstraction.

1. Tree Structure Construction:

o Text Chunking: The retrieval corpus is first divided into short, contiguous text segments.

o Embedding and Clustering: These text segments are embedded using SBERT (Sentence-BERT, a BERT-based encoder), and then clustered using Gaussian Mixture Models (GMM).

o Summary Generation: Language models are used to generate summaries for the clustered text segments. These summary texts are re-embedded and then further clustered and summarized until no further clustering is possible, ultimately constructing a multi-level tree structure.

2. Query Methods:

o Tree Traversal: Starting from the root level of the tree, select the top-k nodes with the highest cosine similarity to the query vector at each layer, then descend into their children, until reaching the leaf nodes. Concatenate all selected node texts to form the retrieval context.

o Flattened Traversal: Flatten the entire tree structure into a single layer and compare all nodes simultaneously, selecting those with the highest cosine similarity to the query vector until reaching a predefined maximum number of tokens.

3. Experimental Results:

RAPTOR significantly outperforms traditional retrieval-augmented methods on multiple tasks, especially in question-answering tasks involving complex multi-step reasoning. When combined with GPT-4, RAPTOR improves the best reported result on the QuALITY benchmark by 20% in absolute accuracy.

• Code: The source code for RAPTOR will be open-sourced on GitHub.

• Datasets: Q&A datasets such as NarrativeQA, QASPER, and QuALITY are used in the experiment.

Reference video: https://www.youtube.com/watch?v=jbGchdTL7d0
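As a rough illustration of the flattened traversal query method, the sketch below ranks every node of an already-built tree by cosine similarity and keeps the best ones until a token budget is reached. The `Node` class, the whitespace-based token count, and the toy two-dimensional embeddings are assumptions made for this example; the tree construction itself (embedding, clustering, summarizing) is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    text: str
    embedding: list   # precomputed vector for a leaf chunk or a summary node
    level: int        # 0 = leaf chunk, higher = summary node


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def flattened_retrieve(nodes, query_vec, max_tokens):
    """Compare all nodes at once; keep the most similar ones within the budget."""
    ranked = sorted(nodes, key=lambda n: cosine(n.embedding, query_vec), reverse=True)
    picked, used = [], 0
    for node in ranked:
        cost = len(node.text.split())   # crude token count (assumption)
        if used + cost > max_tokens:
            break
        picked.append(node)
        used += cost
    return picked


nodes = [
    Node("alpha beta gamma", [1.0, 0.0], 0),
    Node("delta epsilon", [0.0, 1.0], 0),
    Node("summary of alpha and delta", [0.7, 0.7], 1),
]
context = flattened_retrieve(nodes, query_vec=[1.0, 0.1], max_tokens=8)
```

Because leaf chunks and summaries compete in the same ranking, the retrieved context can mix detail and high-level abstraction depending on what the query needs.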

Paper address: https://arxiv.org/pdf/2310.11511

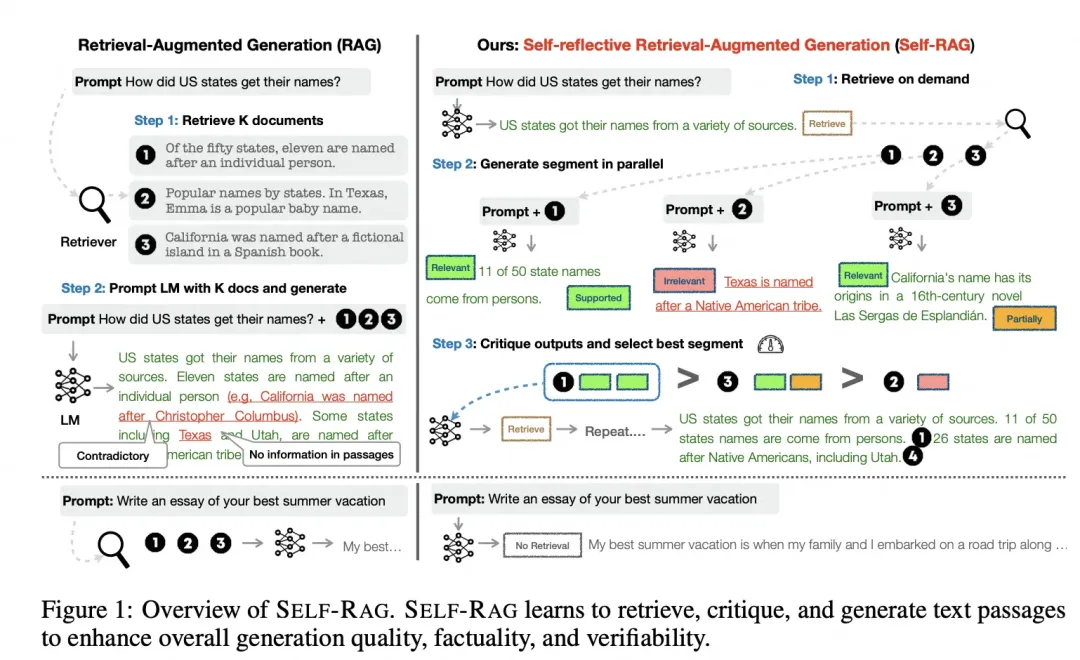

SELF-RAG (Self-Reflective Retrieval-Augmented Generation) is a new framework designed to improve the quality and factual accuracy of generated text while preserving the diversity of the language model.

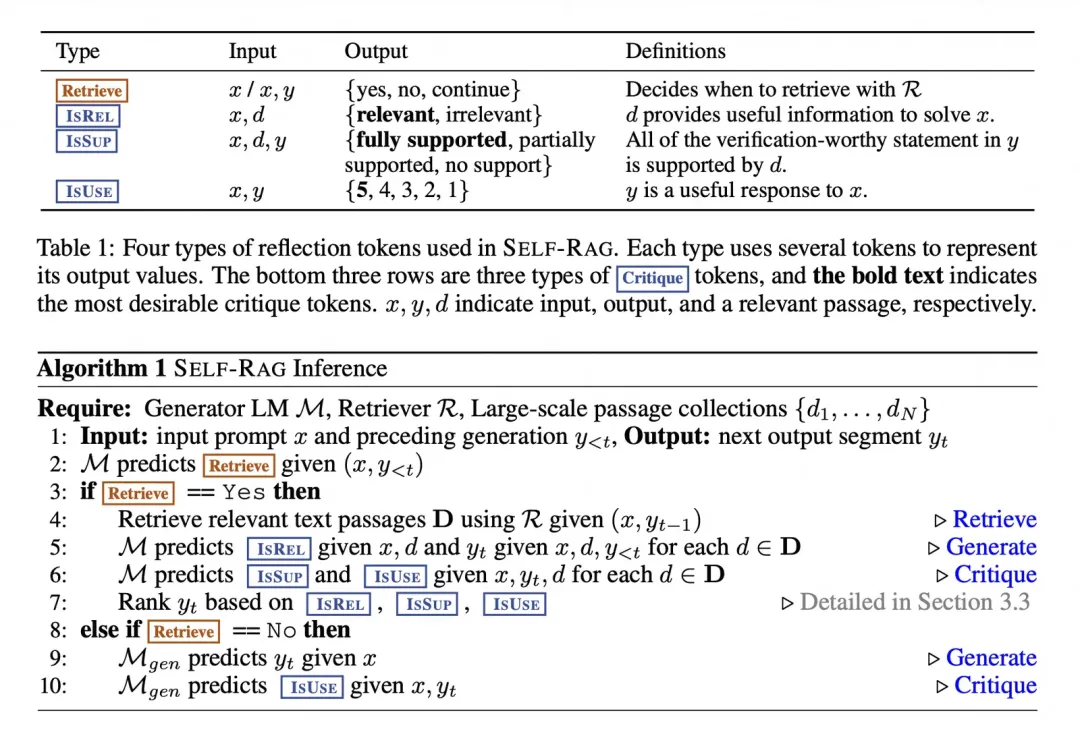

Main Processes:

Explanation:

• Generator Language Model M

• Retriever R

• Passage Collections {d1,d2,...,dN}

Input:

Receives an input prompt (x) and the previously generated text (y<t), where (y<t) denotes the text the model has generated so far for the current query.

Retrieval Prediction:

The model (M) predicts whether retrieval is needed based on (x,y<t).

Search Judgment:

If (Retrieve) == Yes:

1. Retrieve Relevant Text Passages: Use (R) to retrieve relevant text passages (D) based on (x, y<t).

2. Relevance Prediction and Generation: For each passage (d) in (D), the model (M) predicts relevance (ISREL) based on (x, d) and generates a candidate next segment (y_t) based on (x, d, y<t).

3. Supportiveness and Usefulness Prediction: The model (M) predicts supportiveness (ISSUP) and usefulness (ISUSE) for each passage (d) based on (x, y_t, d).

4. Ranking: Rank the candidate segments (y_t) based on (ISREL), (ISSUP), and (ISUSE).

If (Retrieve) == No:

1. Generate the Next Segment: The model (M) generates (y_t) based on (x).

2. Usefulness Prediction: The model (M) predicts usefulness (ISUSE) based on (x, y_t).

• Traditional RAG: Retrieves a fixed number of documents and then combines these documents to generate answers. This can easily introduce irrelevant or false information.

• SELF-RAG: Through a self-reflective mechanism, it retrieves relevant documents as needed, evaluates the quality of each generated passage, and selects the best passage to generate the final response, improving the accuracy and reliability of the generation.
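The control flow above can be sketched in a few lines. This is only an illustration: `predict_retrieve`, `retrieve`, `generate`, and `critique` are hypothetical callables standing in for the model M and the retriever R, and the unweighted sum of the three critique scores is an assumption made for simplicity, not the scoring used in the paper.

```python
def self_rag_step(x, y_prev, predict_retrieve, retrieve, generate, critique):
    """Produce the next segment y_t for prompt x and prior output y_prev.

    All four callables are hypothetical stand-ins for M and R.
    """
    # Retrieval prediction: decide whether external passages are needed.
    if not predict_retrieve(x, y_prev):
        return generate(x, y_prev, None)

    candidates = []
    for d in retrieve(x, y_prev):
        y_t = generate(x, y_prev, d)      # one candidate segment per passage
        scores = critique(x, y_t, d)      # dict with ISREL, ISSUP, ISUSE
        total = scores["ISREL"] + scores["ISSUP"] + scores["ISUSE"]  # assumed: plain sum
        candidates.append((total, y_t))

    if not candidates:                    # nothing retrieved: fall back to plain generation
        return generate(x, y_prev, None)
    # Ranking: keep the candidate with the best combined critique score.
    return max(candidates, key=lambda c: c[0])[1]


# Toy stand-ins so the sketch runs end to end.
passages = ["Paris is the capital of France.", "Bananas are yellow."]
result = self_rag_step(
    x="What is the capital of France?",
    y_prev="",
    predict_retrieve=lambda x, y: True,
    retrieve=lambda x, y: passages,
    generate=lambda x, y, d: f"Answer based on: {d}",
    critique=lambda x, y_t, d: {
        "ISREL": 1.0 if "France" in d else 0.0,
        "ISSUP": 0.5,
        "ISUSE": 0.5,
    },
)
```

In a real system the critique scores would come from the model's own reflection tokens rather than from a keyword check.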

Paper address: https://arxiv.org/pdf/2401.15884

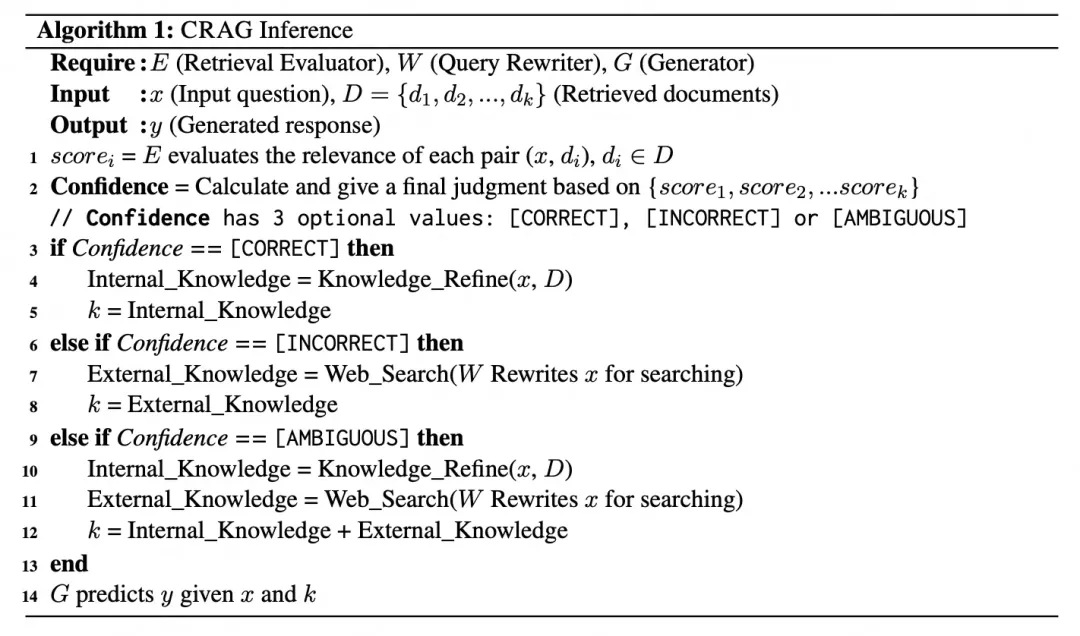

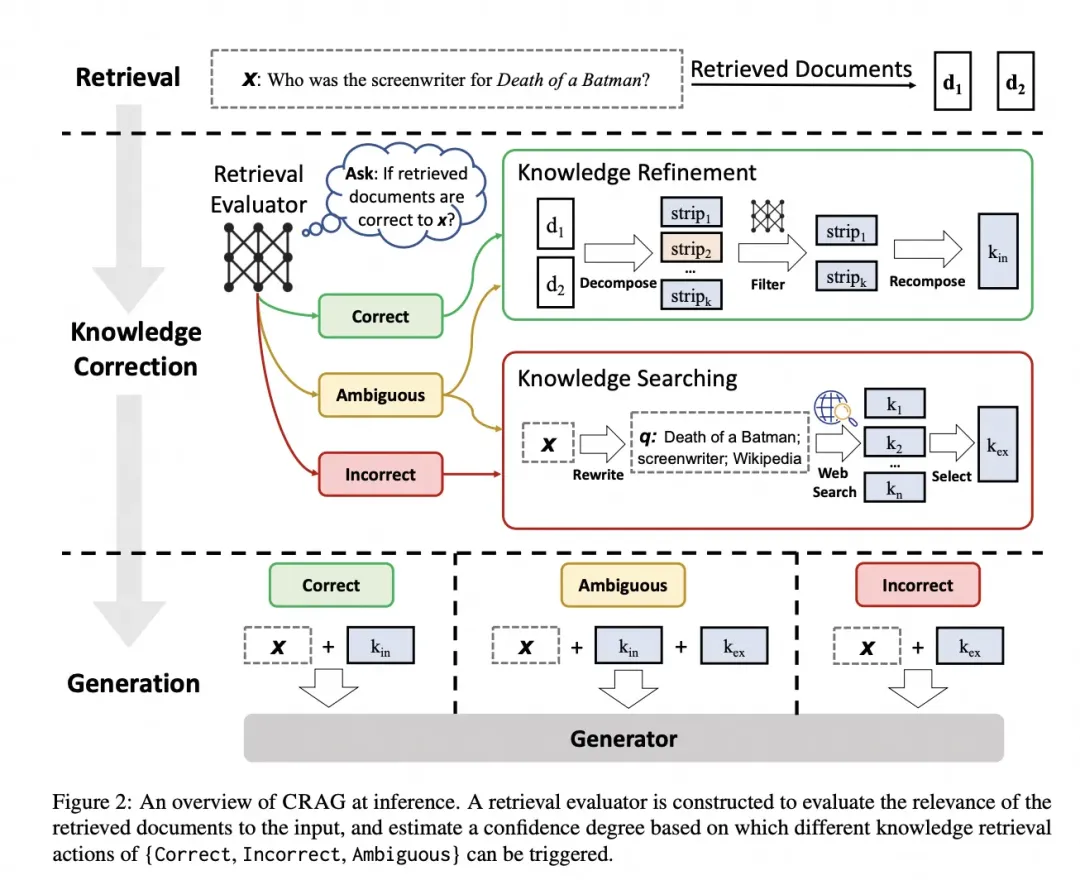

Corrective Retrieval-Augmented Generation (CRAG) is designed to solve the problem of hallucinations that may appear in the generation process of existing large language models (LLMs).

Core Idea:

• Background: LLMs inevitably hallucinate during generation because the parametric knowledge stored in the model alone cannot guarantee the accuracy of the generated text. While Retrieval-Augmented Generation (RAG) is an effective supplement for LLMs, it relies heavily on the relevance and accuracy of the retrieved documents.

• Solution: To tackle this, the CRAG framework is proposed to enhance the robustness of the generation process. Specifically, a lightweight retrieval evaluator is designed to assess the overall quality of the retrieved documents and trigger different knowledge retrieval operations based on the evaluation results.

Methods:

Explanation:

1. Input and Output:

• x is the input question.

• D is the collection of retrieved documents.

• y is the generated response.

2. Evaluation Steps:

• score_i is the relevance score for each question-document pair, calculated by the retrieval evaluator E.

• Confidence is the final confidence judgment calculated based on all relevance scores.

3. Action Triggering:

• If the confidence level is CORRECT, extract internal knowledge and refine it.

• If the confidence level is INCORRECT, perform a web search to obtain external knowledge.

• If the confidence level is AMBIGUOUS, combine both internal and external knowledge.

4. Generation Steps:

• The generator G generates the response y based on the input question x and the processed knowledge k.
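The evaluation and action-triggering steps can be sketched as follows. The two thresholds (`upper`, `lower`) and their values are hypothetical; the rule assumed here is that one sufficiently relevant document is enough for CORRECT, that INCORRECT requires every document to score low, and that everything else is AMBIGUOUS.

```python
def crag_action(scores, upper=0.6, lower=0.2):
    """Map per-document relevance scores to a confidence label.

    The threshold values are illustrative assumptions.
    """
    if any(s > upper for s in scores):
        return "CORRECT"
    if all(s < lower for s in scores):   # also true when nothing was retrieved
        return "INCORRECT"
    return "AMBIGUOUS"


def select_knowledge(action, internal, external):
    """Pick the knowledge k handed to the generator G."""
    if action == "CORRECT":
        return internal                  # refined retrieved documents only
    if action == "INCORRECT":
        return external                  # web search results only
    return internal + external           # AMBIGUOUS: combine both


action = crag_action([0.4, 0.1])
knowledge = select_knowledge(action, ["internal strip"], ["web strip"])
```

An empty score list falls through to INCORRECT, which matches the intuition that a failed retrieval should trigger a web search.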

Key Components:

1. Retrieval Evaluator:

• Based on the confidence score, different knowledge retrieval operations are triggered: Correct, Incorrect, and Ambiguous.

2. Knowledge Reorganization Algorithm:

• For highly relevant retrieval results, a method of decomposing and recombining knowledge is designed. First, each retrieved document is decomposed into fine-grained knowledge segments by heuristic rules.

• Then, a retrieval evaluator is used to calculate a relevance score for each knowledge segment. Based on these scores, irrelevant knowledge segments are filtered out, leaving only relevant ones, which are then reordered.

3. Web Search:

• Since retrieval from static and limited corpora can only return suboptimal documents, large-scale web search is introduced as a supplement to expand and enrich the retrieval results.

• When all retrieved documents are considered incorrect, web search is introduced as a supplementary knowledge source. Web search expands and enriches the initially acquired documents.

• Input queries are rewritten into keyword-based queries and a series of URLs are generated using a search API such as Google Search API. The content of the web pages linked by these URLs is transcribed, and the same knowledge reorganization method used for internal knowledge is applied to extract relevant web knowledge.
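The decompose, filter, and recombine idea behind the knowledge reorganization algorithm can be illustrated with a small sketch. Here the heuristic rule is "one knowledge segment per sentence", and `overlap_score` is a hypothetical word-overlap stand-in for the trained retrieval evaluator; the threshold value is also an assumption.

```python
import re


def split_into_segments(document):
    """Heuristic decomposition: one knowledge segment per sentence."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]


def overlap_score(query, segment):
    """Hypothetical evaluator: fraction of query words that appear in the segment."""
    q = set(re.findall(r"\w+", query.lower()))
    s = set(re.findall(r"\w+", segment.lower()))
    return len(q & s) / len(q) if q else 0.0


def refine(query, documents, threshold=0.3):
    """Score every segment, drop the irrelevant ones, and reorder the rest by score."""
    segments = [s for doc in documents for s in split_into_segments(doc)]
    scored = [(overlap_score(query, s), s) for s in segments]
    kept = [pair for pair in scored if pair[0] >= threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return " ".join(s for _, s in kept)


docs = ["Paris is the capital of France. It rains often.", "France borders Spain."]
refined = refine("capital of France", docs)
```

The same function could be applied to transcribed web pages, since the text above notes that internal and web knowledge go through the same reorganization method.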

Experimental Results

Experiments on four datasets (PopQA, Biography, PubHealth, and Arc-Challenge) demonstrate that CRAG significantly improves the performance of RAG methods, validating its generality and adaptability in both short and long-text generation tasks.

Paper address: https://arxiv.org/pdf/2312.06648

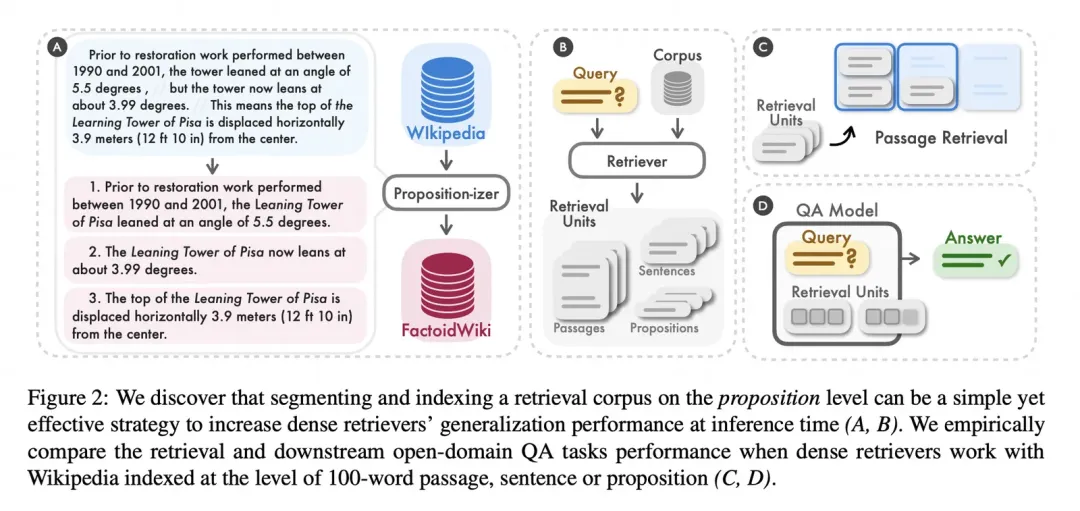

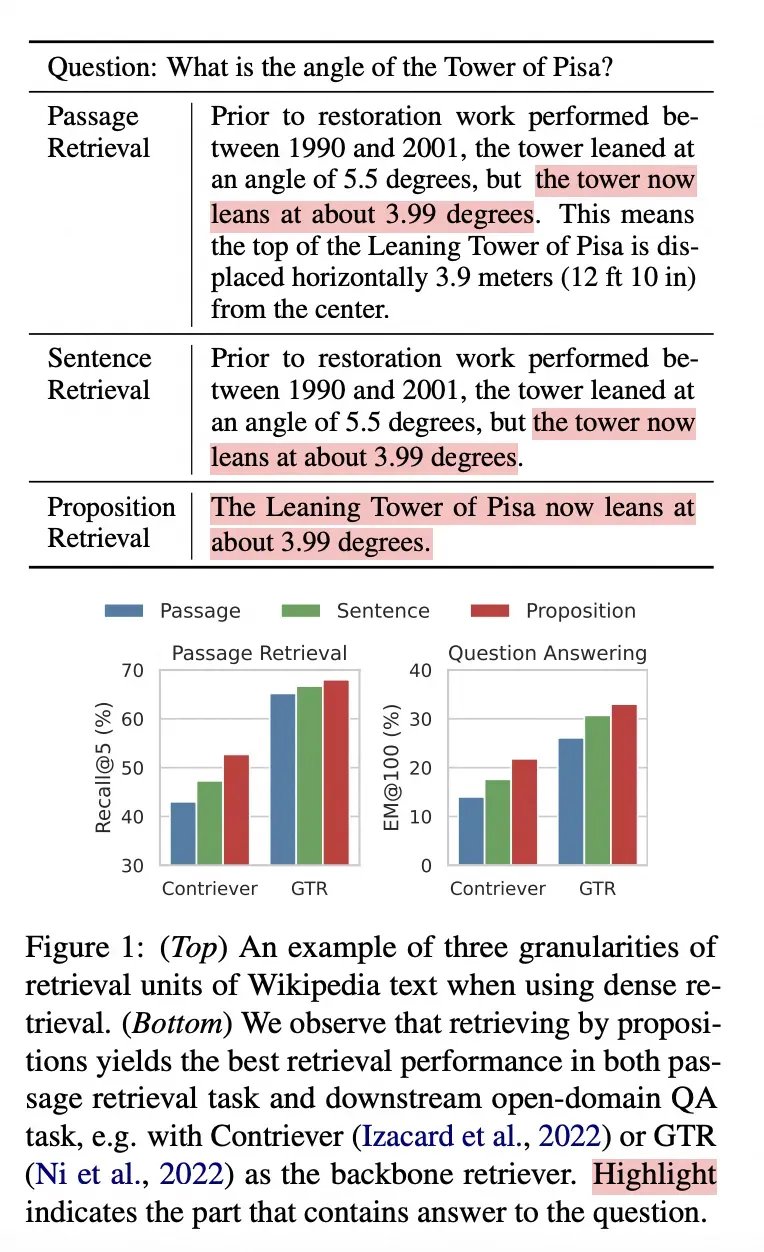

To explore how much the choice of retrieval unit granularity (document, paragraph, or sentence) matters in dense retrieval tasks, the authors propose a new retrieval unit named "proposition". Experiments show that proposition-based retrieval outperforms traditional paragraph or sentence retrieval in dense retrieval and in downstream tasks such as open-domain question answering.

Proposition Definition: A proposition is an atomic expression of meaning in the text. Each proposition contains one independent fact, presented in a concise and self-contained natural language form. It is defined by three properties:

a. Independent meaning: Each proposition should correspond to an independent meaning in the text, and the combination of all propositions should represent the semantics of the entire text.

b. Minimization: The proposition should be the smallest unit, that is, it cannot be further divided into smaller propositions.

c. Contextual self-containment: Propositions should be contextualized and self-contained. That is, each proposition should include all the context needed to understand its meaning on its own.

Methods:

Steps:

• Segment Paragraphs into Propositions (Part A)

• Create FactoidWiki (Part B) (A proposition processor segments paragraphs into independent propositions, creating a new retrieval corpus called FactoidWiki.)

• Compare Retrieval Units of Different Granularity (Part C)

• Used in Open-Domain Question Answering Tasks (Part D)

Empirical comparisons were conducted for retrieval units of different granularities (paragraphs, sentences, propositions).

• Recall@5: The proportion of questions for which the correct answer is found among the top 5 retrieval results.

• EM@100: The exact-match rate of the answers when only the first 100 words of the retrieved text are passed to the reader model.

• The figure demonstrates the advantage of proposition retrieval in improving the precision of dense retrieval and the accuracy of question-answering tasks. By directly extracting relevant information, proposition retrieval can answer questions more effectively and provide more accurate retrieval results.

• Decomposing paragraphs and sentences into smaller, contextually self-contained propositions produces more independent units, each of which must be stored in the index, so storage requirements increase.
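For readers who want to reproduce the Recall@5 metric on their own data, a minimal sketch follows. It assumes a retrieval counts as a hit when the answer string appears in one of the top-k retrieved texts (case-insensitive substring match), which is a simplification.

```python
def recall_at_k(retrieved_per_question, answers, k=5):
    """Fraction of questions whose answer string appears in the top-k retrieved texts."""
    if not answers:
        return 0.0
    hits = 0
    for passages, answer in zip(retrieved_per_question, answers):
        if any(answer.lower() in p.lower() for p in passages[:k]):
            hits += 1
    return hits / len(answers)


retrieved = [
    ["Paris is in France.", "Berlin is in Germany."],   # results for question 1
    ["Rome is in Italy.", "Madrid is in Spain."],       # results for question 2
]
score = recall_at_k(retrieved, answers=["paris", "lisbon"], k=5)
```

The same function works for any retrieval unit (paragraph, sentence, or proposition), which makes it easy to compare granularities on one test set.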

Prompt for decomposing a paragraph into propositions:

Decompose the "Content" into clear and simple propositions, ensuring they are interpretable out of context.

1. Split compound sentences into simple sentences. Maintain the original phrasing from the input whenever possible.

2. For any named entity that is accompanied by additional descriptive information, separate this information into its own distinct proposition.

3. Decontextualize the proposition by adding necessary modifiers to nouns or entire sentences and replacing pronouns (e.g., "it", "he", "she", "they", "this", "that") with the full name of the entities they refer to.

4. Present the results as a list of strings, formatted in JSON.

**Input:**

Title: Ēostre. Section: Theories and interpretations, Connection to Easter Hares.

Content:

The earliest evidence for the Easter Hare (Osterhase) was recorded in south-west Germany in 1678 by the professor of medicine Georg Franck von Franckenau, but it remained unknown in other parts of Germany until the 18th century. Scholar Richard Sermon writes that "hares were frequently seen in gardens in spring, and thus may have served as a convenient explanation for the origin of the colored eggs hidden there for children. Alternatively, there is a European tradition that hares laid eggs, since a hare’s scratch or form and a lapwing’s nest look very similar, and both occur on grassland and are first seen in the spring. In the nineteenth century the influence of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular throughout Europe. German immigrants then exported the custom to Britain and America where it evolved into the Easter Bunny."

**Output:**

[

"The earliest evidence for the Easter Hare was recorded in south-west Germany in 1678 by Georg Franck von Franckenau.",

"Georg Franck von Franckenau was a professor of medicine.",

"The evidence for the Easter Hare remained unknown in other parts of Germany until the 18th century.",

"Richard Sermon was a scholar.",

"Richard Sermon writes a hypothesis about the possible explanation for the connection between hares and the tradition during Easter.",

"Hares were frequently seen in gardens in spring.",

"Hares may have served as a convenient explanation for the origin of the colored eggs hidden in gardens for children.",

"There is a European tradition that hares laid eggs.",

"A hare’s scratch or form and a lapwing’s nest look very similar.",

"Both hares and lapwing’s nests occur on grassland and are first seen in the spring.",

"In the nineteenth century the influence of Easter cards, toys, and books was to make the Easter Hare/Rabbit popular throughout Europe.",

"German immigrants exported the custom of the Easter Hare/Rabbit to Britain and America.",

"The custom of the Easter Hare/Rabbit evolved into the Easter Bunny in Britain and America."

]

---

**Input:**

<a new passage>

**Output:**

Video address: https://www.youtube.com/watch?v=8OJC21T2SL4&t=1933s

Main Points:

• Text slicing is a critical step in optimizing the performance of language models.

• Slicing strategies should be customized based on the nature of the data type and the language model task.

• Traditional physical location-based slicing methods such as character-level slicing and recursive character slicing are simple but may not effectively organize semantically related information.

• Semantic slicing and generative slicing are more advanced methods that improve slicing accuracy by analyzing the semantic content of the text.

• Using multi-vector indexing can provide richer text representations, leading to more relevant information during retrieval.

• Tools and techniques should be selected based on a deep understanding of the data and the specific requirements of the final task.

Five Levels of Text Slicing:

1. Character-Level Slicing: Simply slice text by a fixed number of characters.

2. Recursive Character Slicing: Slice text in a recursive manner based on its physical structure, such as line breaks and paragraphs.

3. Document-Specific Slicing: Use specific delimiters to slice according to different types of documents such as Markdown, Python, JavaScript, and PDFs.

4. Semantic Slicing: Utilize the embedding vectors of a language model to analyze the meaning and context of the text to determine slicing points.

5. Generative Slicing: Create an Agent that decides how to slice the text to more intelligently organize information.
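The first two levels are simple enough to sketch directly. The functions below are an illustrative stdlib-only version, not the implementation of any particular framework; the separator order (`"\n\n"`, `"\n"`, `" "`) and the `overlap` parameter are assumptions.

```python
def split_by_chars(text, chunk_size, overlap=0):
    """Level 1: fixed-size character slices, optionally overlapping."""
    step = chunk_size - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than chunk_size")
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


def split_recursive(text, chunk_size, separators=("\n\n", "\n", " ")):
    """Level 2: split on the coarsest separator first, recurse on oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators:
        return split_by_chars(text, chunk_size)   # last resort: hard cut
    head, rest = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(head):
        candidate = piece if not current else current + head + piece
        if len(candidate) <= chunk_size:
            current = candidate                   # keep merging small pieces
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            chunks.extend(split_recursive(piece, chunk_size, rest))
    if current:
        chunks.append(current)
    return chunks


fixed = split_by_chars("abcdefghij", chunk_size=4, overlap=1)
recursive = split_recursive("para one\n\npara two is longer", chunk_size=12)
```

The recursive version keeps paragraphs and words intact where it can, which is why it usually yields more coherent chunks than a fixed character count.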

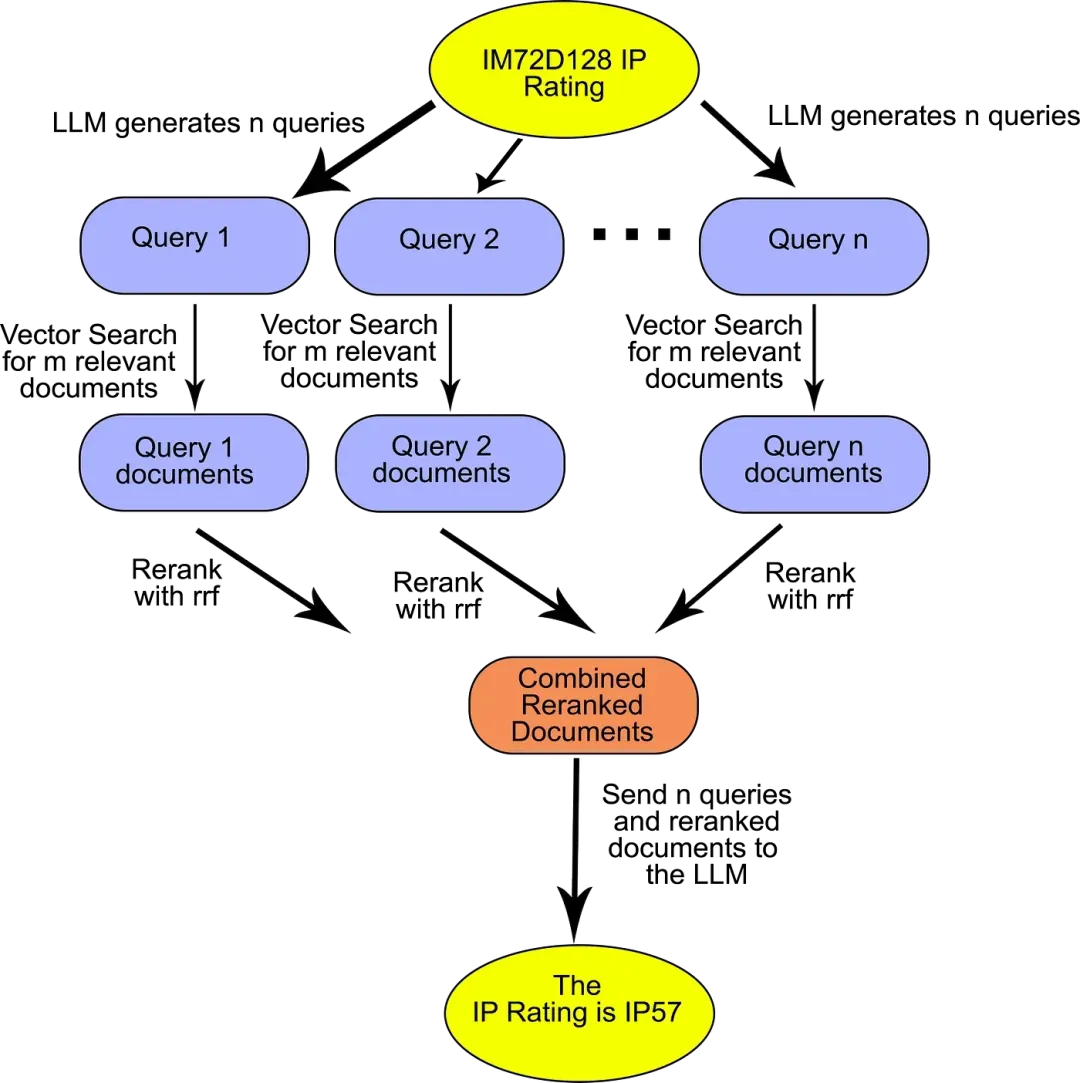

Reference article: https://raghunaathan.medium.com/query-translation-for-rag-retrieval-augmented-generation-applications-46d74bff8f07

1. Input question: The user submits the original question.

2. Generate subqueries: The input question is decomposed into multiple subqueries (Query 1, Query 2, Query n).

3. Document retrieval: Each subquery retrieves the relevant documents and obtains the corresponding retrieval results (Query 1 documents, Query 2 documents, Query n documents).

4. Combine and rerank documents: All the retrieved documents from subqueries are combined and reranked (Combined ReRanked Documents).

5. Output answer: The reranked documents are passed to the large model, which generates the final answer.

Prompt:

You are a helpful assistant that generates multiple sub-questions related to an input question.

The goal is to break down the input into a set of sub-problems / sub-questions that can be answered in isolation.

Generate multiple search queries related to: {question}

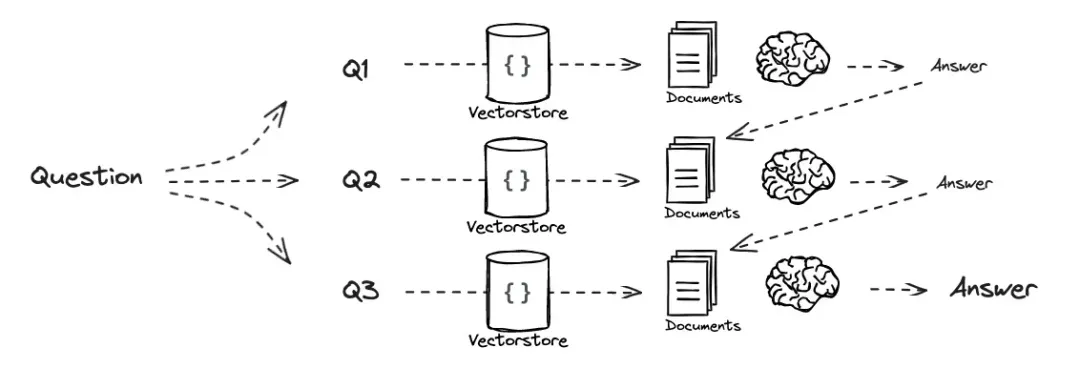

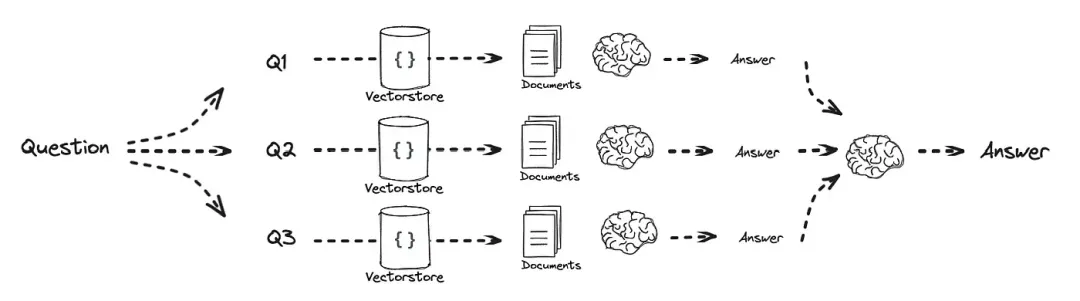

Output (3 queries):

The above process involves decomposing the input question into multiple subqueries, then combining and reranking the documents recalled by these subqueries before passing them to a large model for answering. The subsequent strategy iterates on the questions generated by the subqueries to derive new answers.

Other Strategies for Query Decomposition:

1. Recursive Answering: Each sub-question is passed to the model together with the previous Q&A pairs and the context retrieved for the current sub-question. Earlier findings are carried forward into each new answer, which is effective for very complex queries.

2. Parallel Answering: The user prompt is decomposed into detailed parts, with the difference being that we attempt to solve them in parallel. Each question is answered separately, and then combined to provide more detailed context, so as to answer the user's query. This is an effective approach for most scenarios.
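The difference between the two strategies is mostly about what each sub-question is allowed to see. In the sketch below, `retrieve` and `answer` are hypothetical callables standing in for the retriever and the large model; only the control flow is the point.

```python
from concurrent.futures import ThreadPoolExecutor


def answer_recursively(sub_questions, retrieve, answer):
    """Answer sub-questions in order; each call sees the earlier Q&A pairs."""
    history = []
    for q in sub_questions:
        context = retrieve(q)
        a = answer(q, context, history)   # earlier Q&A pairs are part of the input
        history.append((q, a))
    return history


def answer_in_parallel(sub_questions, retrieve, answer):
    """Answer each sub-question independently, then collect the results in order."""
    def solve(q):
        return q, answer(q, retrieve(q), [])   # no shared history
    with ThreadPoolExecutor() as pool:
        return list(pool.map(solve, sub_questions))


# Toy stand-ins: the "answer" just reports how much history it was given.
retrieve = lambda q: f"docs for {q}"
answer = lambda q, context, history: f"{q} -> {len(history)} prior"

sequential = answer_recursively(["a", "b"], retrieve, answer)
parallel = answer_in_parallel(["a", "b"], retrieve, answer)
```

In both cases the collected Q&A pairs would then be passed to the model as context for the user's original question.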

Others:

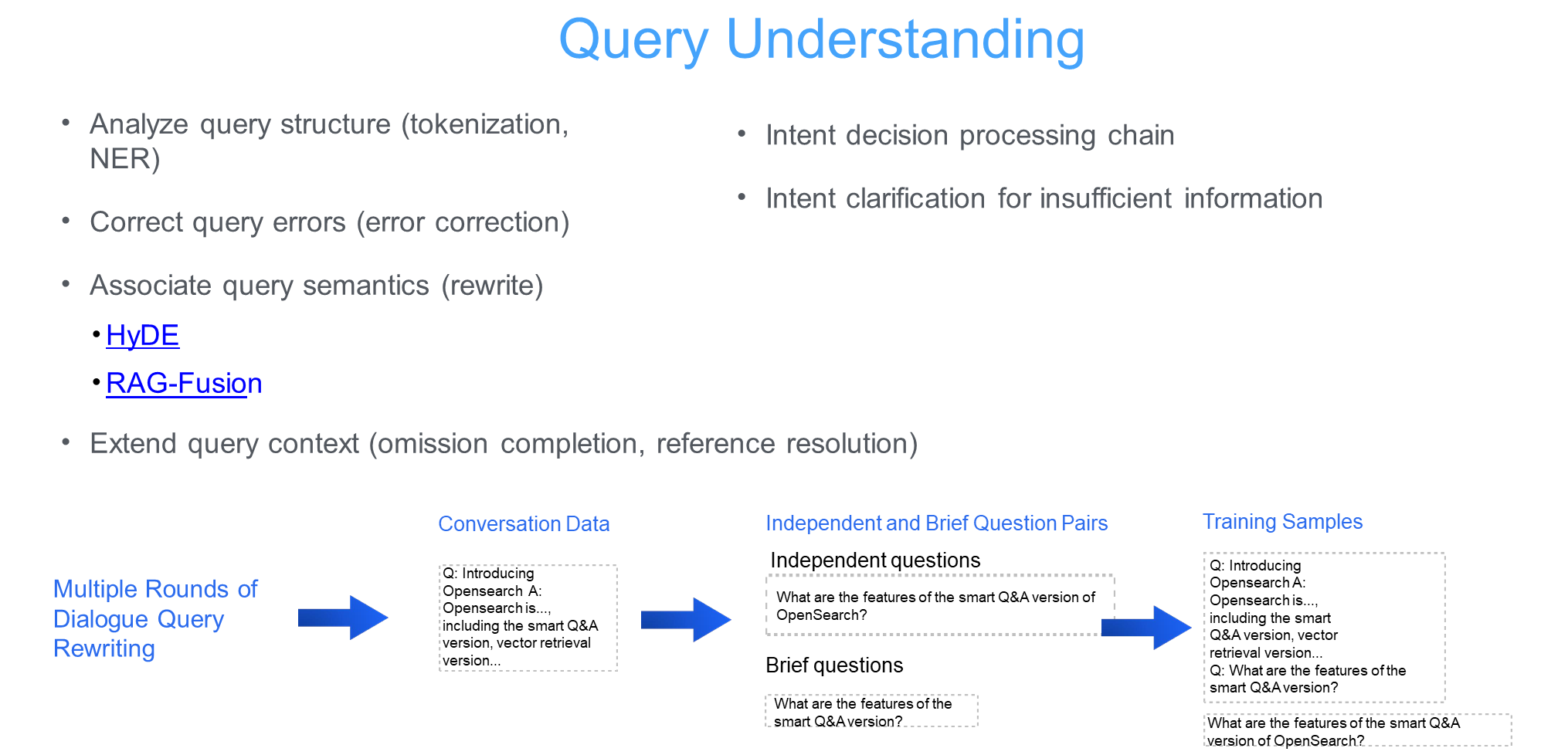

1. Analyze Query Structures (tokenization, NER)

o Tokenization: A query is decomposed into independent words or phrases for further processing.

o NER (Named Entity Recognition): Named entities in a query such as person names, locations, and organizations are identified to help understand the specific content of the query.

2. Correct Query Errors (Error Correction)

o Spelling or grammatical errors in queries are automatically corrected to improve retrieval accuracy.

3. Associate Query Semantics (Rewriting)

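o The query is rewritten semantically to make it more expressive and more effective for retrieval.

o HyDE (Hypothetical Document Embeddings): The large model first generates a hypothetical answer document for the query, and the embedding of that document is used for retrieval in place of the raw query.

o RAG-Fusion: The large model generates several variants of the query, each variant is retrieved separately, and the ranked result lists are fused into a single ranking.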

4. Expand Query Context (Omission Completion and Reference Resolution)

o Expand the query context by filling in omitted content or resolving referential relationships, making the query more complete and explicit.
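RAG-Fusion is commonly described as merging the result lists of several rewritten queries with reciprocal rank fusion (RRF). The sketch below shows that fusion step only; the document IDs are made up, and `k=60` is the conventional smoothing constant rather than a tuned value.

```python
def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in.
    """
    scores = {}
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results for three rewrites of the same user question.
fused = reciprocal_rank_fusion([
    ["a", "b", "c"],
    ["b", "a", "d"],
    ["b", "c"],
])
```

Because RRF uses only ranks, it can merge lists whose raw scores are not comparable with each other.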

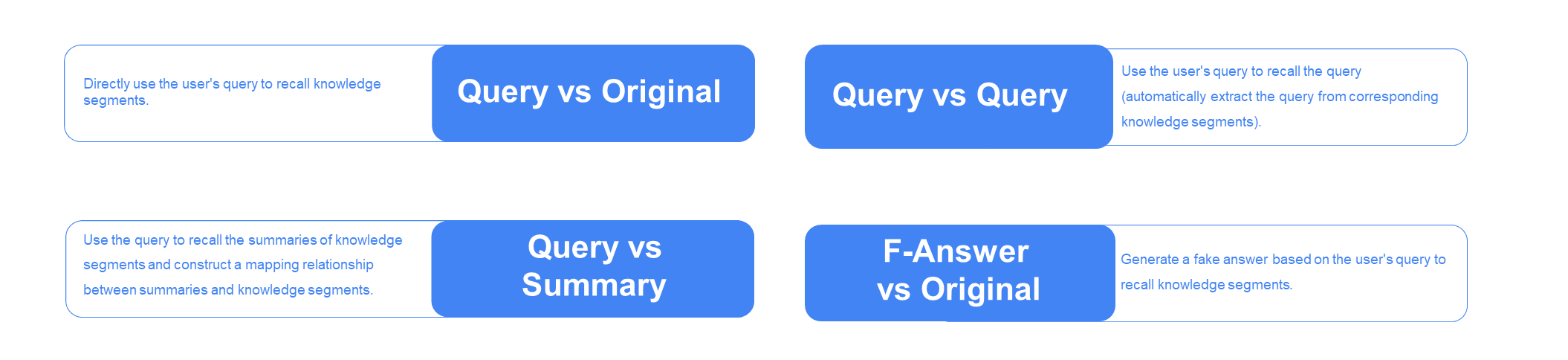

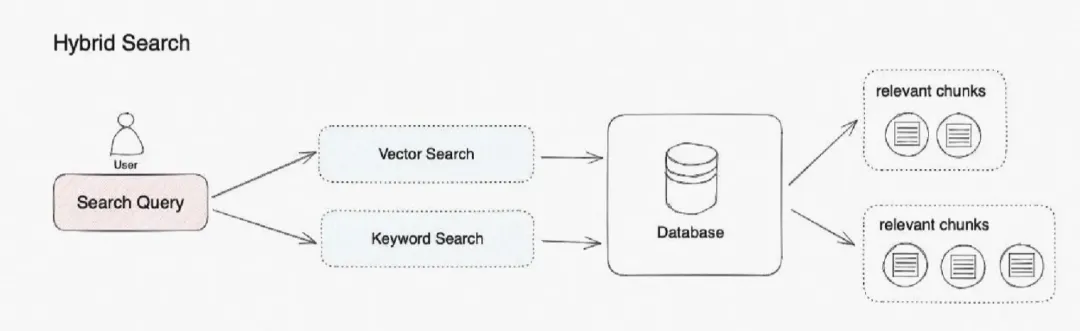

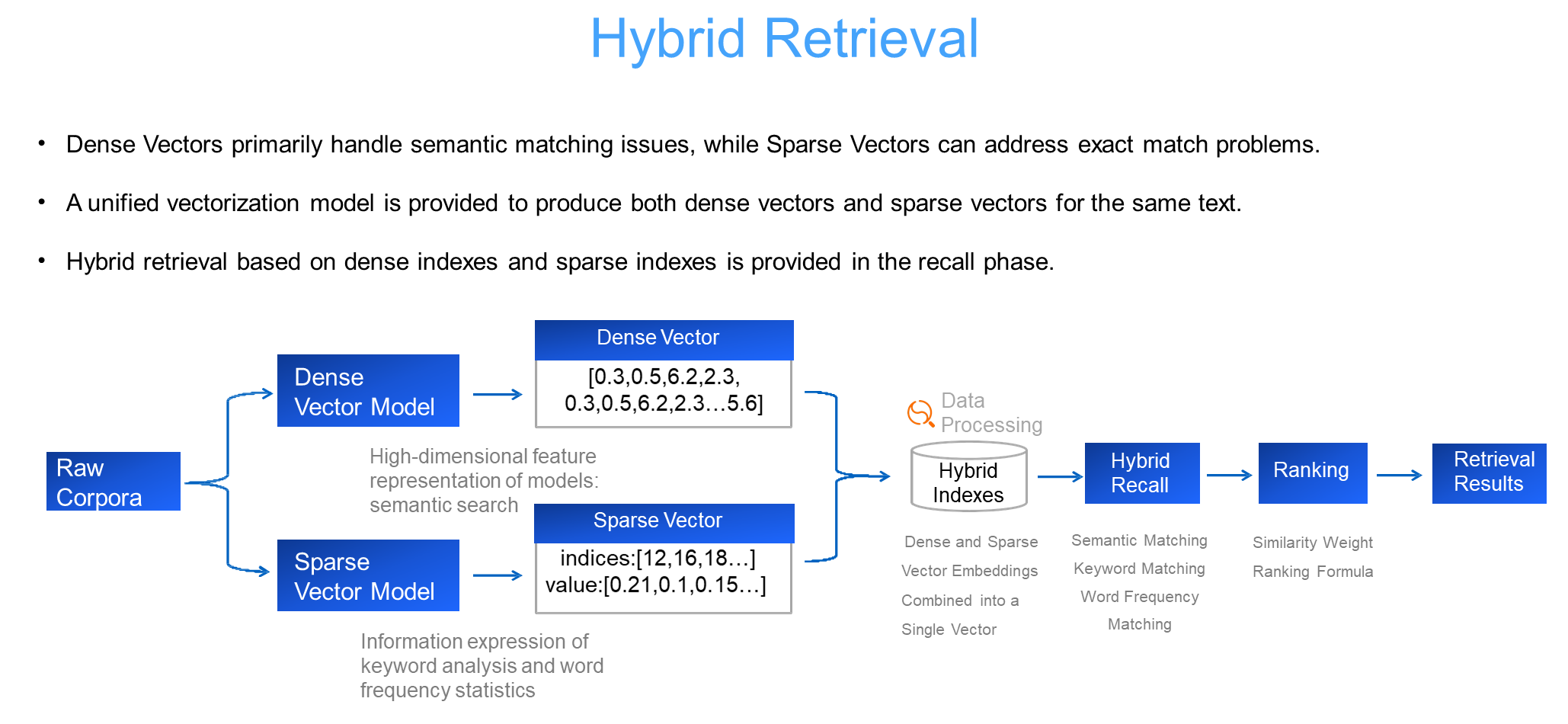

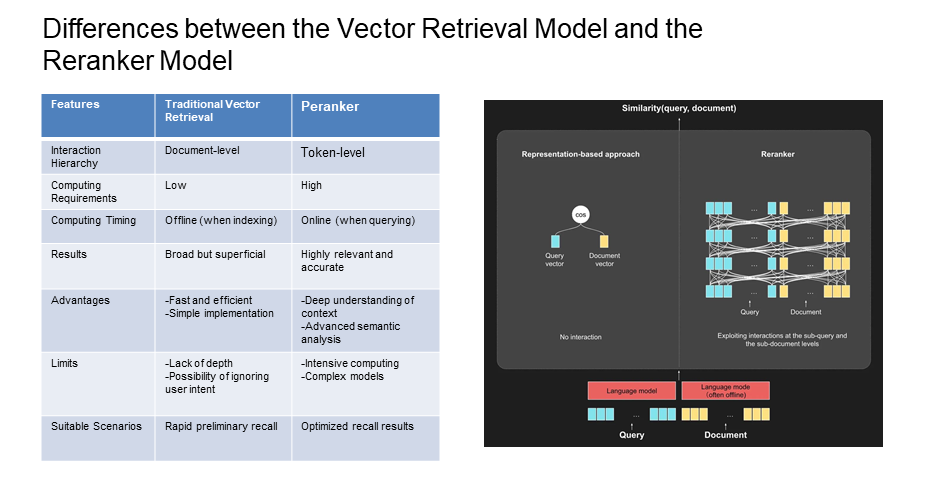

Hybrid retrieval is a strategy that combines sparse retrieval and dense retrieval, aiming to leverage the strengths of both approaches to improve retrieval effectiveness and efficiency.
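One simple way to combine the two signals is a weighted sum of normalized scores, sketched below. The score dictionaries, the min-max normalization, and the weight `alpha` are all assumptions for illustration; the sparse scores could come from a keyword method such as BM25 and the dense scores from embedding similarity.

```python
def min_max(scores):
    """Rescale scores to [0, 1] so sparse and dense values are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(sparse, dense, alpha=0.5):
    """alpha weights the dense score; (1 - alpha) weights the sparse score."""
    s, d = min_max(sparse), min_max(dense)
    fused = {
        doc: alpha * d.get(doc, 0.0) + (1 - alpha) * s.get(doc, 0.0)
        for doc in set(s) | set(d)
    }
    return sorted(fused.items(), key=lambda pair: pair[1], reverse=True)


sparse_scores = {"a": 10.0, "b": 2.0, "c": 6.0}   # hypothetical keyword scores
dense_scores = {"a": 0.2, "b": 0.9, "d": 0.55}    # hypothetical cosine similarities
ranking = hybrid_rank(sparse_scores, dense_scores, alpha=0.7)
```

A document missing from one retriever simply contributes zero for that side, so exact keyword matches and semantic matches can both surface.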

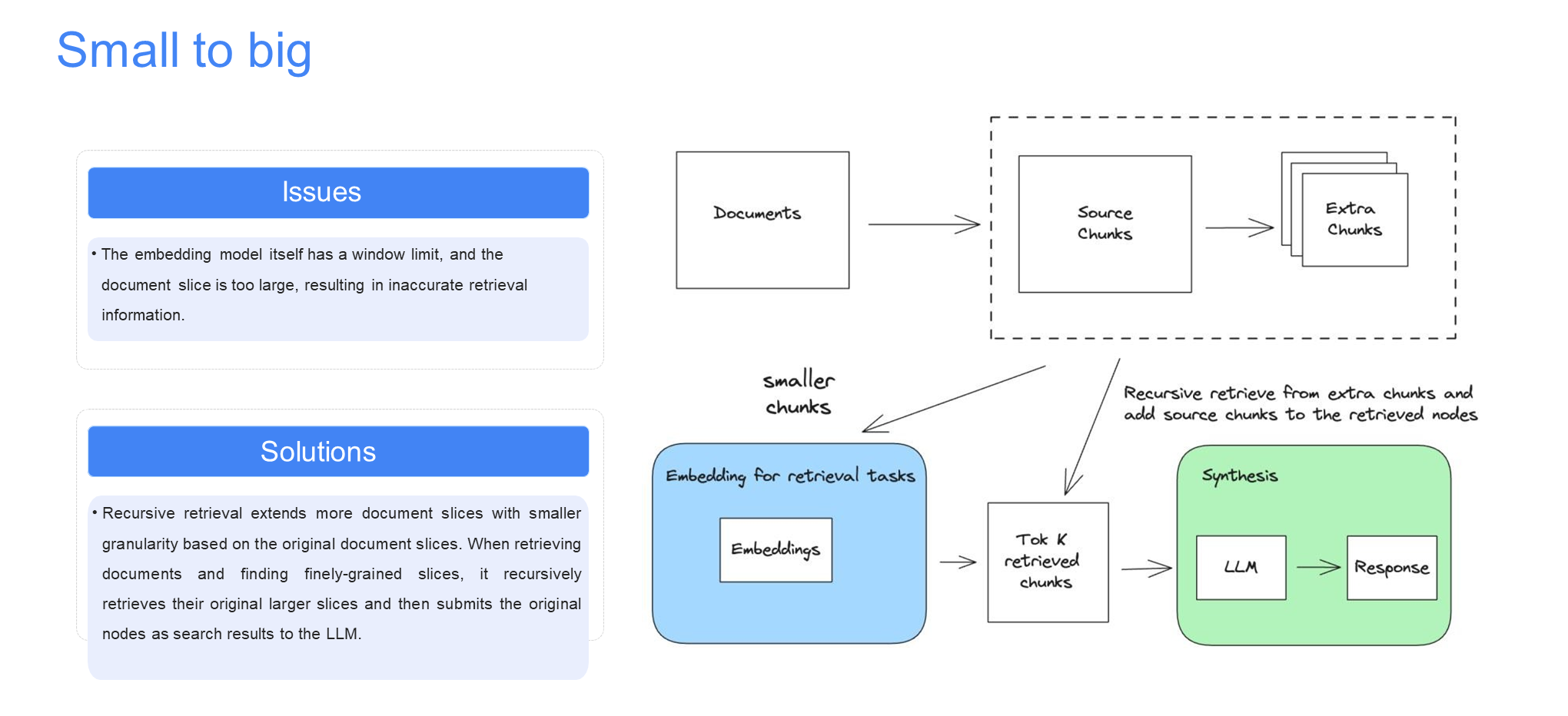

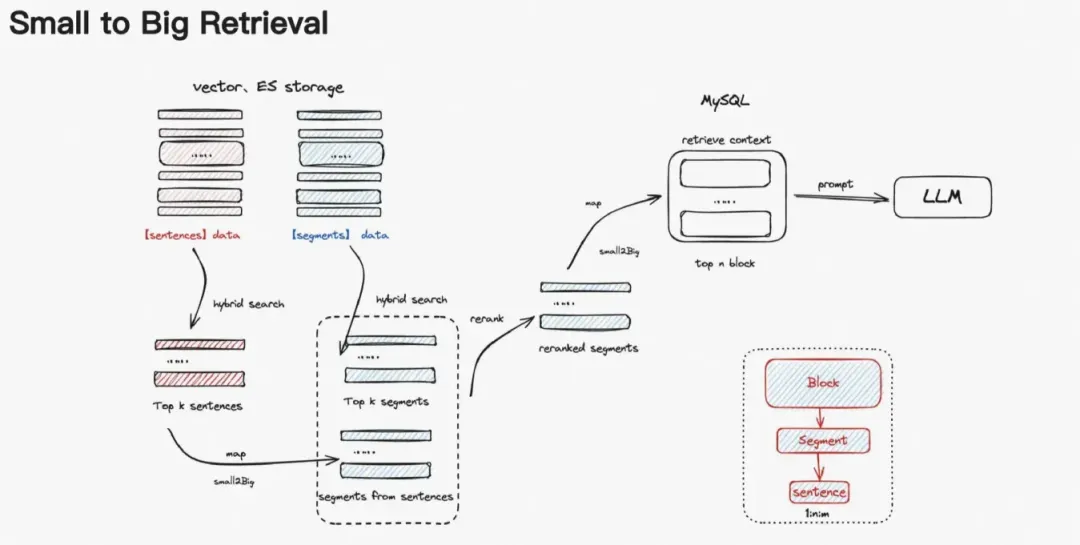

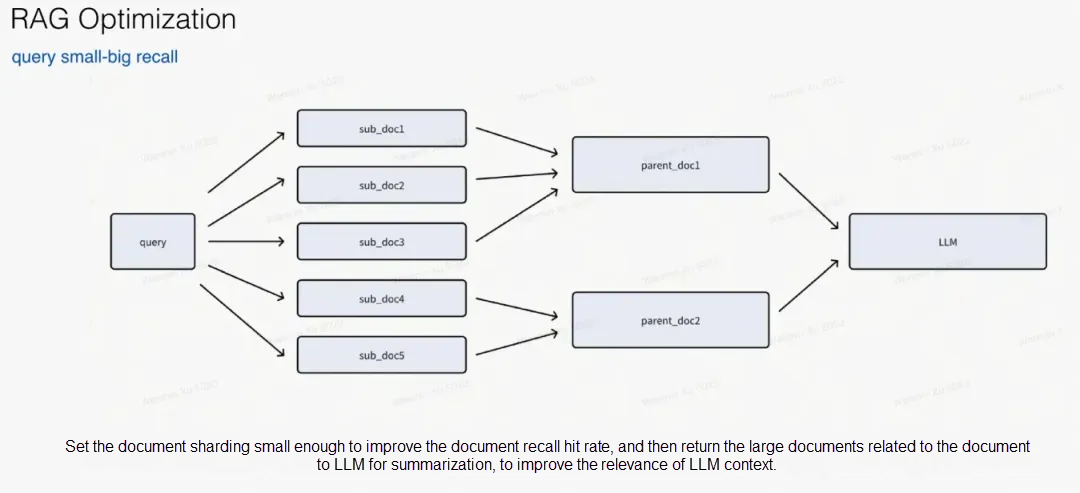

The Small to Big retrieval strategy is a progressive multi-granularity retrieval method. The basic idea is to start with small textual units such as sentences or paragraphs and gradually expand the retrieval scope to larger textual units such as documents or document collections until sufficient relevant information is obtained.

This strategy could be combined with Dense X Retrieval.

The advantages of the Small to Big retrieval strategy are:

1. Improved retrieval efficiency: By starting with smaller textual units, you can quickly pinpoint the approximate location of relevant information, avoiding time-consuming full-text searches across large volumes of irrelevant text.

2. Improved retrieval accuracy: Gradually expanding the search scope allows for maintaining relevance while capturing more comprehensive and contextual information, reducing the problem of semantic fragmentation leading to inaccurate retrievals.

3. Reduced irrelevant information: Through progressive retrieval from small to large, a significant amount of irrelevant text can be effectively filtered out, minimizing the impact of unrelated information on subsequent generation tasks.
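A common way to realize this idea is to index small units for matching and return their larger parent units as context. The sketch below assumes precomputed sentence embeddings and a `parents` mapping from a hypothetical parent ID to the full paragraph text.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def small_to_big(query_vec, sentences, parents, top_k=2):
    """Match on small units (sentences), return the bigger parent texts.

    sentences: list of (sentence_vector, parent_id) pairs
    parents:   dict mapping parent_id to the full paragraph text
    """
    ranked = sorted(sentences, key=lambda s: cosine(s[0], query_vec), reverse=True)
    seen, result = set(), []
    for _, parent_id in ranked[:top_k]:
        if parent_id not in seen:        # several sentences may share one parent
            seen.add(parent_id)
            result.append(parents[parent_id])
    return result


parents = {"p1": "Paragraph one full text.", "p2": "Paragraph two full text."}
sentences = [([1.0, 0.0], "p1"), ([0.9, 0.1], "p1"), ([0.0, 1.0], "p2")]
context = small_to_big([1.0, 0.0], sentences, parents, top_k=2)
```

Matching on sentences keeps the similarity signal sharp, while returning the parent paragraph gives the model enough surrounding context to answer.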

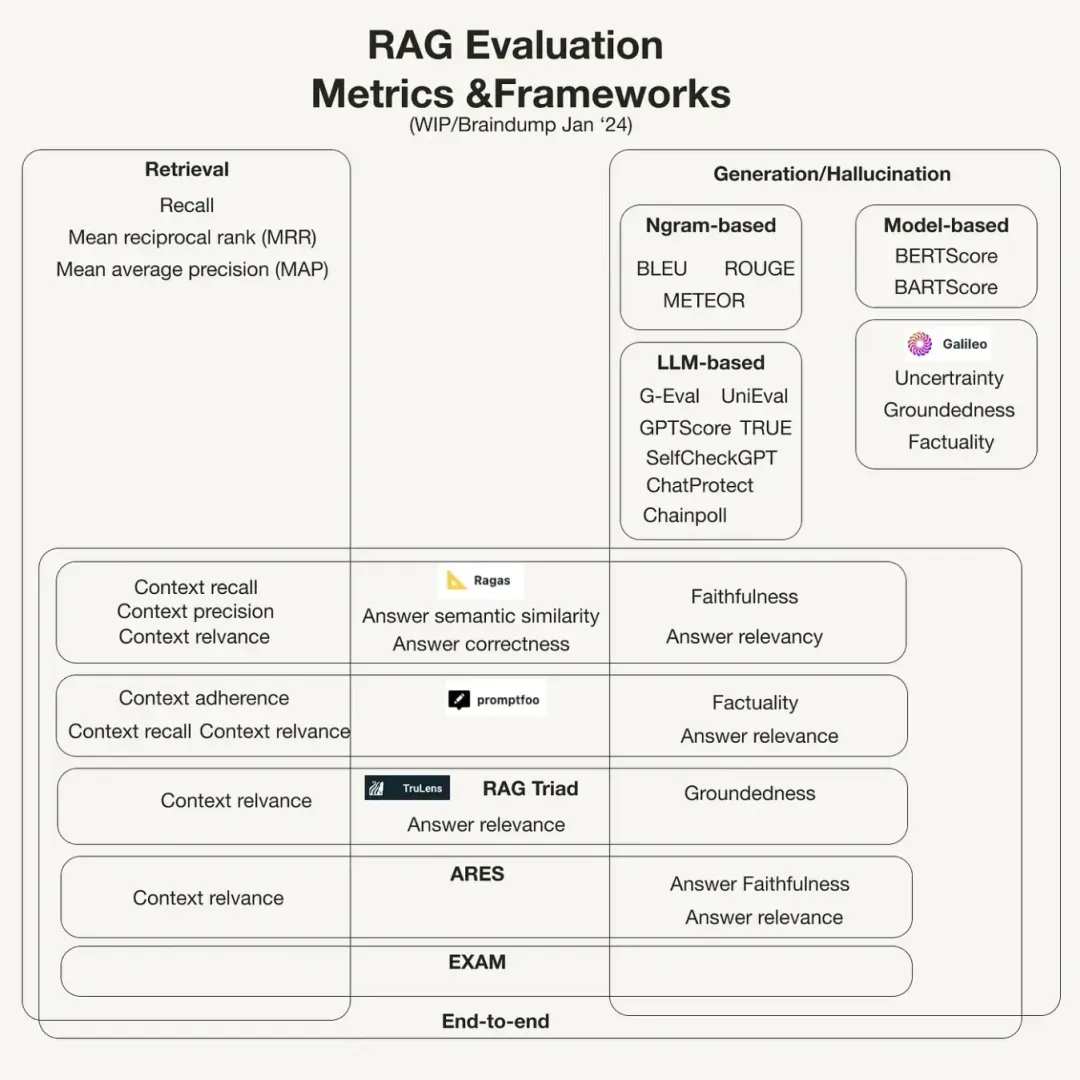

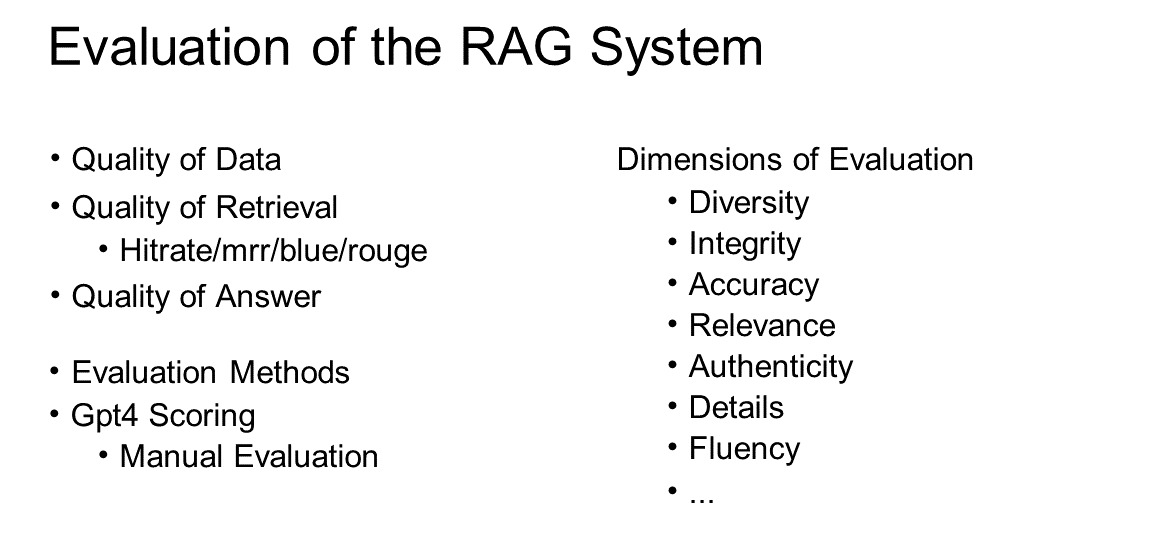

The RAG Evaluation System is an indispensable part of the model development, optimization, and application process. Through a unified evaluation standard, it enables fair comparisons between different RAG models or optimization methods, helping to identify best practices.

In the practical application of RAG systems, collaborative efforts from engineering, algorithms, and other areas are needed. While there are many theoretical methods, extensive experimental comparisons are also necessary during implementation to continuously validate and optimize the system. Also, many detailed issues may arise, such as loading and processing heterogeneous data sources, presenting knowledge in various forms such as text, images, and tables together to improve user experience, and setting up an automated evaluation mechanism. Of course, ongoing model iteration and support for training both large and small models are also important considerations.

• RAPTOR: https://arxiv.org/pdf/2401.18059

• Self-RAG: https://arxiv.org/pdf/2310.11511

• CRAG: https://arxiv.org/pdf/2401.15884

• Dense X Retrieval: https://arxiv.org/pdf/2312.06648

• The 5 Levels Of Text Splitting For Retrieval: https://www.youtube.com/watch?v=8OJC21T2SL4&t=1933s

• RAG for long context LLMs: https://www.youtube.com/watch?v=SsHUNfhF32s

• LlamaIndex Building Production-Grade RAG: https://docs.llamaindex.ai/en/stable/optimizing/production_rag/

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
