Photo credit: Shutterstock

Alibaba Cloud said on Friday that it’s releasing two open-source large vision language models that understand images and text.

Qwen-VL, a pre-trained large vision language model and its conversationally finetuned version Qwen-VL-Chat, are available for download on Alibaba Cloud’s AI model community ModelScope and the collaborative AI platform Hugging Face.

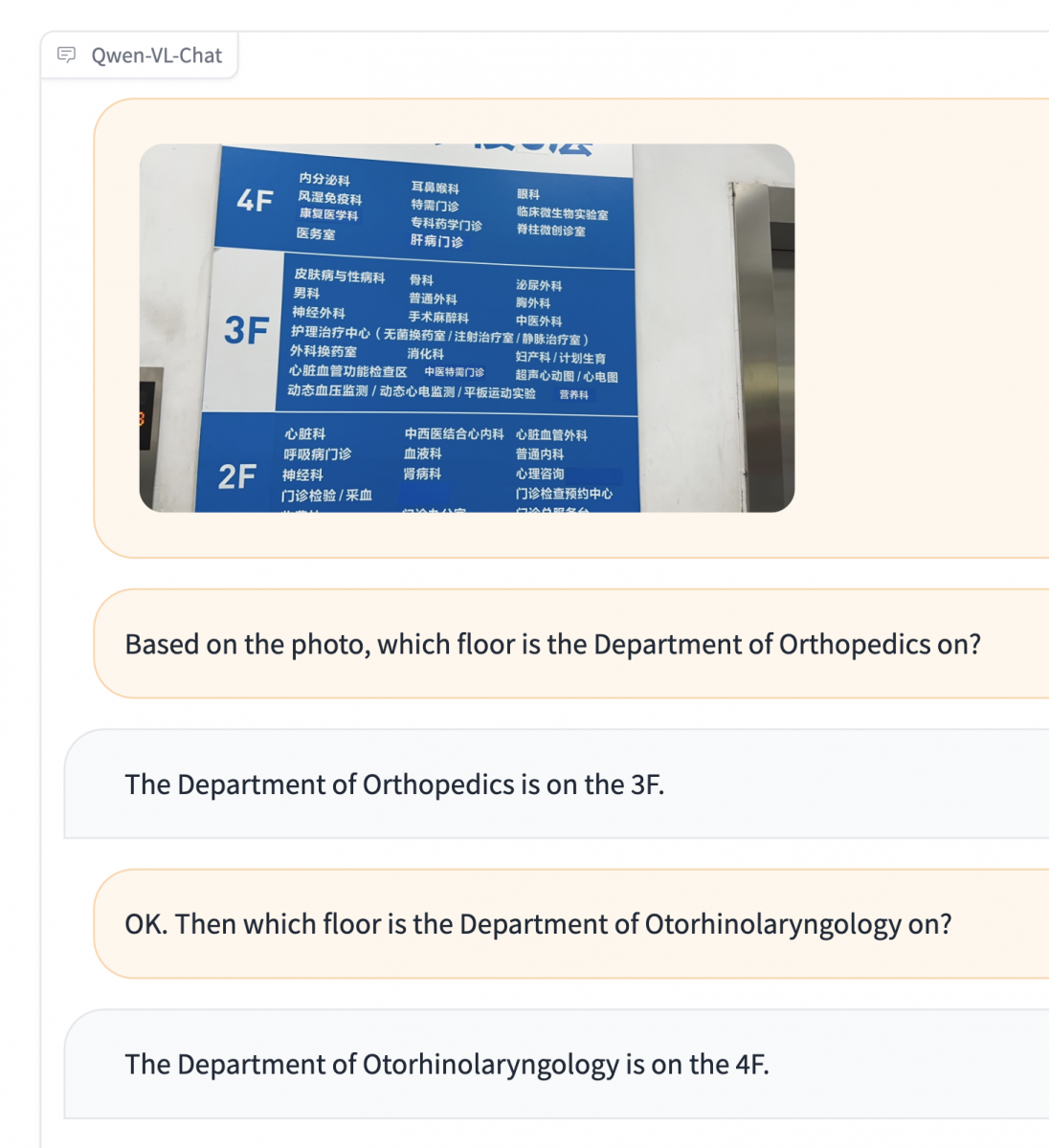

The two models can understand both image and text input in English and Chinese. They can perform visual tasks, such as answering open-ended questions based on multiple images and generating image captions. Qwen-VL-Chat can perform more sophisticated tasks, such as doing mathematical calculations and creating a story based on multiple images.

The two models are trained based on the 7-billion-parameter version of its large language model Qwen-7B that it open-sourced earlier this month. Alibaba Cloud said that compared with other open-source large vision language models, Qwen-VL can comprehend images in higher resolution, leading to better image recognition and understanding performance.

The release underscores the efforts of the cloud computing company in developing advanced multi-modal capabilities for its large language models, capable of processing data types including images and audios along with text. The incorporation of other sensory input into large language models opens up possibilities for new applications for researchers and commercial organizations.

The two models promise to transform how users interact with visual content. For example, researchers and commercial organizations can explore practical uses, such as leveraging the models to generate photo captions for news outlets or assisting non-Chinese speakers that can’t read street signs in Chinese.

The Qwen-VL-Chat model can support multiple rounds of Q&A. Photo credit: Alibaba Cloud

With the capabilities of visual question answering, they also hold the potential to make shopping more accessible to blind and partially sighted users, an endeavor that Alibaba Group has undertaken.

Alibaba’s online marketplace Taobao added Optical Character Recognition technology to its pages to help the visually impaired read text, such as product specifications and descriptions on images. The newly launched large vision language models can simplify the process by making it possible for visually impaired people to get the answer that they need from the image based on multi-round conversation.

Alibaba Cloud said its pre-trained 7-billion-parameter large language model Qwen-7B, and its conversationally finetuned version, Qwen-7B-Chat have garnered over 400,000 downloads since their launch in a month. It has previously made the two models available to help developers, researchers and commercial organizations to build their generative AI models more cost-effectively.

This article was originally published on Alizila, written by Ivy Yu.

Indonesia Hackathon 2023: Igniting Innovation with Generative AI

Large Language Models (LLMs): The Driving Force Behind AI's Language Processing Abilities

1,353 posts | 478 followers

FollowAlibaba Cloud Native Community - April 18, 2025

Alibaba Cloud Community - January 2, 2024

Alibaba Cloud Community - January 30, 2026

Alibaba Cloud Community - August 15, 2025

Alibaba Developer - March 8, 2021

Alibaba Cloud Community - August 12, 2024

1,353 posts | 478 followers

Follow Alibaba Cloud for Generative AI

Alibaba Cloud for Generative AI

Accelerate innovation with generative AI to create new business success

Learn More Tongyi Qianwen (Qwen)

Tongyi Qianwen (Qwen)

Top-performance foundation models from Alibaba Cloud

Learn More AI Acceleration Solution

AI Acceleration Solution

Accelerate AI-driven business and AI model training and inference with Alibaba Cloud GPU technology

Learn More AgentBay

AgentBay

Multimodal cloud-based operating environment and expert agent platform, supporting automation and remote control across browsers, desktops, mobile devices, and code.

Learn MoreMore Posts by Alibaba Cloud Community