By Zongzhi Chen

Currently, after PolarDB physical replication to the read-only (RO) node runs into flushing constraints, the apply LSN can stay stuck for 120 seconds without advancing. Even if the load decreases, the RO node cannot recover. Why is this?

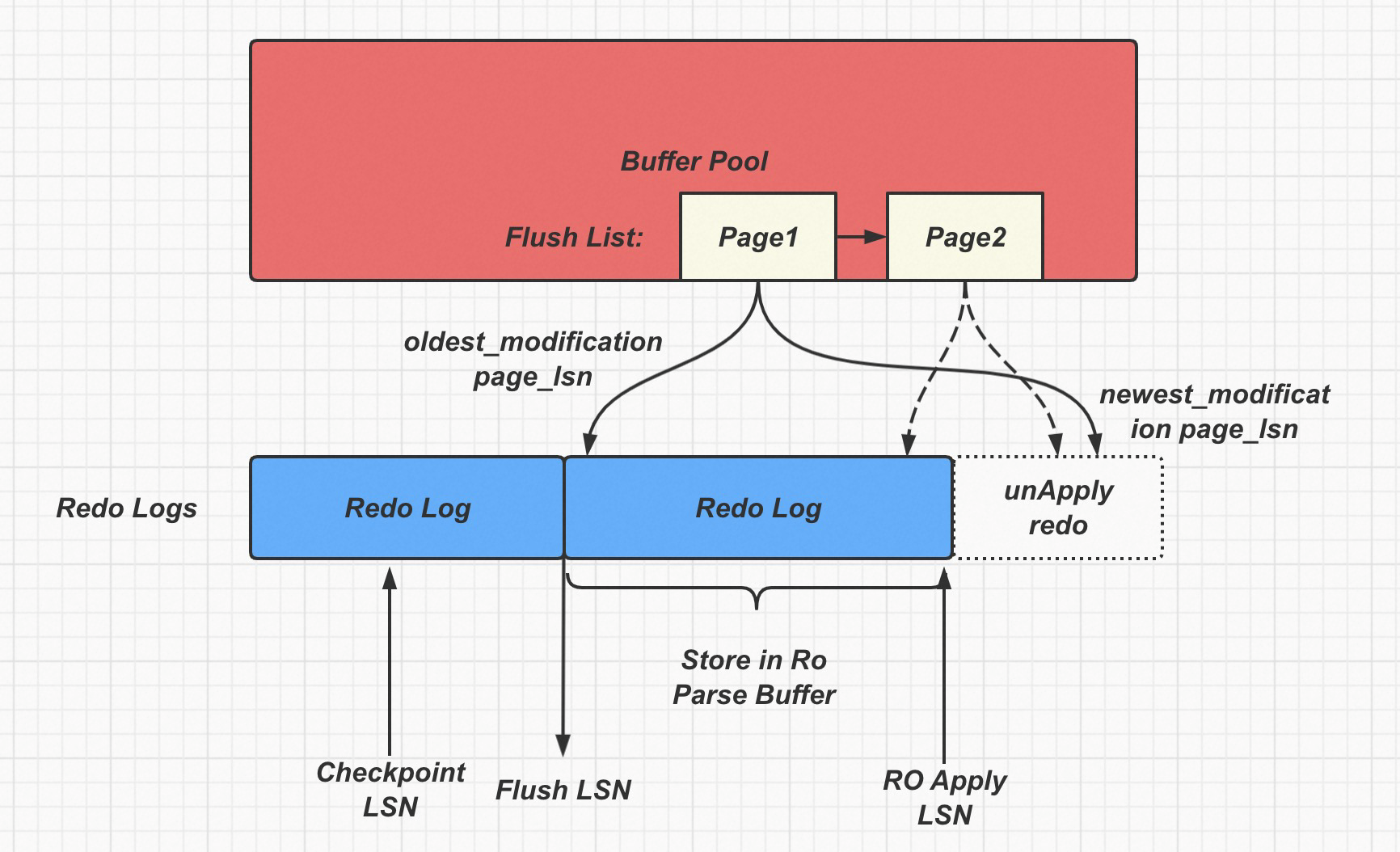

Consider the extreme scenario depicted in the figure. Page 1 is the oldest page on the read-write (RW) node, that is, the page at the oldest position on the flush list ordered by oldest_modification_lsn. If its page LSN is already greater than the apply LSN on the RO node, the page cannot be flushed, because physical replication requires the page's redo to be parsed into the RO parse buffer before the page can be flushed. Pages like Page 2 cannot be flushed either, even though the difference between their newest_modification and oldest_modification is small, because the parse buffer is full.
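The flush rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Page` class, `can_flush`, `split_flush_list`, and all LSN values are hypothetical and not taken from the PolarDB source, and treating an LSN equal to the apply LSN as flushable is a guess.

```python
from dataclasses import dataclass


@dataclass
class Page:
    name: str
    oldest_modification: int  # LSN of the first unflushed change (flush list order)
    newest_modification: int  # LSN of the latest change, i.e. the page LSN


def can_flush(page: Page, ro_apply_lsn: int) -> bool:
    # Assumed rule: the RW node may write a page only once the RO node
    # has applied redo up to that page's latest change.
    return page.newest_modification <= ro_apply_lsn


def split_flush_list(pages, ro_apply_lsn):
    # Walk the flush list from oldest to newest and separate the pages that
    # can be written now from the ones held back by the RO apply LSN.
    ordered = sorted(pages, key=lambda p: p.oldest_modification)
    flushable = [p.name for p in ordered if can_flush(p, ro_apply_lsn)]
    blocked = [p.name for p in ordered if not can_flush(p, ro_apply_lsn)]
    return flushable, blocked


# Hypothetical LSNs: Page 1 and Page 2 are ahead of the RO apply LSN.
pages = [
    Page("page1", oldest_modification=100, newest_modification=1500),
    Page("page2", oldest_modification=200, newest_modification=1200),
    Page("page3", oldest_modification=300, newest_modification=900),
]
flushable, blocked = split_flush_list(pages, ro_apply_lsn=1100)
print("flushable:", flushable)
print("blocked:", blocked)
```

With these made-up numbers only `page3` is eligible, while the two oldest pages stay at the head of the flush list.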

However, at this point the apply LSN on the RO node cannot advance because the parse buffer is full. To advance, the RO node must wait for the RW node to flush the old pages so that the old parse buffer chunks can be released. But since the oldest page on the RW node cannot be flushed, those parse buffer chunks have no chance of being released.

This creates a deadlock loop where the RO node cannot recover even if the write pressure stops.

Therefore, once the changes to the oldest page on the RW node outrun the parse buffer, meaning that the page LSN of the oldest page is greater than the apply LSN on the RO node, a deadlock forms that cannot be broken later on.
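To make the loop concrete, here is a toy simulation of the two sides waiting on each other. It is a sketch under simplifying assumptions, not PolarDB code: there is a single dirty page at the head of the flush list, writes have stopped at `redo_end_lsn`, and the function name and all numbers are hypothetical.

```python
def simulate(hot_page_newest_lsn, flush_lsn, apply_lsn,
             parse_buffer_capacity, redo_end_lsn, rounds=10):
    """Toy model with a single dirty page at the head of the RW flush list."""
    page_dirty = True
    for _ in range(rounds):
        # RO side: the parse buffer holds redo between flush_lsn and apply_lsn,
        # so apply_lsn cannot run more than one buffer ahead of flush_lsn.
        apply_limit = flush_lsn + parse_buffer_capacity
        apply_lsn = max(apply_lsn, min(redo_end_lsn, apply_limit))

        # RW side: the oldest page can be written only after the RO node
        # has applied its newest change.
        if page_dirty and hot_page_newest_lsn <= apply_lsn:
            page_dirty = False
            flush_lsn = redo_end_lsn  # nothing dirty remains in this toy model
    return apply_lsn, flush_lsn


# Page LSN (1500) is beyond what the full parse buffer lets the RO node apply.
stuck = simulate(hot_page_newest_lsn=1500, flush_lsn=100, apply_lsn=1100,
                 parse_buffer_capacity=1000, redo_end_lsn=2000)
# Page LSN (1000) is already applied, so the page is flushed and both advance.
recovered = simulate(hot_page_newest_lsn=1000, flush_lsn=100, apply_lsn=1100,
                     parse_buffer_capacity=1000, redo_end_lsn=2000)
print("stuck:", stuck)
print("recovered:", recovered)
```

In the first call neither LSN moves no matter how many rounds are run, even though no new redo is generated. In the second call the page is flushed in the first round and the RO node catches up to the end of the redo log.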

Why is copy page ineffective here?

Currently, the copy page mechanism is triggered when flushing dirty pages. In the figure shown, even after the page is copied, the newest_modification page_lsn of the copy is still greater than the RO apply_lsn, so the copy cannot be flushed either. Therefore, the copy page mechanism becomes ineffective.

The solution is to force the flushing of pages when it is identified that the newest_modification page_lsn may exceed a certain size. Delaying the flushing process may lead to complications later on.

How does enabling multiple versions of LogIndex help avoid this problem?

As shown in the previous figure, while Page 1 and Page 2 cannot be flushed due to the constraint imposed by the full parse buffer, other pages can be flushed if their newest_modification page_lsn is less than the RO apply_lsn. Therefore, there aren't many dirty pages in the RW node buffer pool.

Enabling LogIndex allows the RO node to freely discard its parse buffer, preventing crashes.

However, there is still a problem. Even with LogIndex enabled, if Page 1 continues to undergo modifications and its newest_modification page_lsn keeps updating, the page still cannot be flushed. This makes it hard to push the RW checkpoint forward. Nevertheless, LogIndex allows other pages to be flushed, which mitigates the buildup of dirty pages on the RW node. How can we solve the problem of flushing Page 1, then?

We can resolve this issue by using the copy page mechanism.

If the RW node enables copy page functionality, although Page 1 in the figure above may not be immediately flushed after being copied, the RO apply_lsn can freely progress due to LogIndex. As the RO apply_lsn advances, this copied page can be flushed after a certain period, effectively avoiding the problem.
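The interplay of LogIndex and copy page can be illustrated with a small model. It assumes that LogIndex lets the RO apply_lsn advance by a fixed step every round and that the hot page is modified every round; the function name, the step sizes, and the LSN values are hypothetical.

```python
def rounds_until_flush(page_lsn, ro_apply_lsn, apply_step, write_step,
                       use_copy_page, max_rounds=100):
    """Return the round in which the hot page can be written, or None."""
    # With copy page, the LSN that the RO node must reach is frozen at copy time.
    target_lsn = page_lsn
    for round_no in range(1, max_rounds + 1):
        ro_apply_lsn += apply_step  # LogIndex assumed: apply is never blocked
        page_lsn += write_step      # the hot page keeps being modified
        if not use_copy_page:
            target_lsn = page_lsn   # the live page's LSN keeps moving ahead
        if target_lsn <= ro_apply_lsn:
            return round_no
    return None


without_copy = rounds_until_flush(page_lsn=1500, ro_apply_lsn=1100,
                                  apply_step=100, write_step=100,
                                  use_copy_page=False)
with_copy = rounds_until_flush(page_lsn=1500, ro_apply_lsn=1100,
                               apply_step=100, write_step=100,
                               use_copy_page=True)
print("without copy page:", without_copy)
print("with copy page:", with_copy)
```

Without the copy, the live page stays ahead of the apply LSN in every round and is never written in this model. With the copy, the frozen LSN is reached after a few rounds, which matches the "after a certain period" behavior described above.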

Therefore, in the current version, the best practice to address these issues is to use LogIndex and copy page mechanisms, which solve almost all the problems.

In addition, two scenarios of flushing constraints have been verified:

In the case of a large-volume write scenario, even if flushing constraints occur, they can be recovered later. It is only in the hot page scenario where recovery becomes impossible. It is worth noting that hot pages are not necessarily user-modified pages, but rather other pages on the Btree, such as the root page, which are difficult to identify.

Furthermore, it has been confirmed that an increase in write delay for pages and redo logs will not necessarily cause flushing constraint problems, but hot page scenarios can lead to such issues.

As shown in the preceding figure: RO parse buffer = RO apply_lsn - RW flush_lsn

Here, apply_lsn represents the speed at which the RO node reads and applies redo logs, while flush_lsn represents the speed at which the RW node flushes pages.

According to the formula, since I/O delay affects both redo and page, the RO parse buffer does not grow rapidly.

According to the formula, if the speed of redo log pushing is accelerated while the speed of page flushing is slowed down, flushing constraints are more likely to occur. In other words, if the redo I/O speed remains constant while the page I/O speed decreases, the RO parse buffer is more likely to become full, leading to a deadlock caused by hot pages.
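A quick back-of-the-envelope calculation shows why the two cases differ. The sketch below treats apply_lsn and flush_lsn as advancing at constant rates from the same starting LSN, which is a simplification, and the rates, the capacity, and the function name are made-up values for illustration.

```python
def seconds_until_full(apply_rate, flush_rate, capacity):
    # Parse buffer usage is apply_lsn - flush_lsn, so it grows at the
    # difference between the two rates (LSN units per second).
    growth = apply_rate - flush_rate
    if growth <= 0:
        return None  # flushing keeps up, so the buffer never fills
    return capacity / growth


capacity = 1000
baseline = seconds_until_full(apply_rate=100, flush_rate=90, capacity=capacity)
# I/O delay slows redo and page flushing by the same factor.
both_slower = seconds_until_full(apply_rate=50, flush_rate=45, capacity=capacity)
# Only page flushing slows down while redo keeps its speed.
pages_slower = seconds_until_full(apply_rate=100, flush_rate=45, capacity=capacity)
print(baseline, both_slower, pages_slower)
```

When both rates are halved, the gap between them is halved as well, so the buffer takes longer to fill (200 seconds instead of 100 here). When only page flushing is halved, the gap widens and the buffer fills in about 18 seconds.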

If there are no hot pages, as the parse buffer continues to progress, an automatic crash will not occur. Instead, the RW node encounters flushing constraints, resulting in a large number of dirty pages in the buffer pool and eventually exhausting all available pages. Consequently, a crash occurs in the RW node.

How do multiple versions or Aurora solve this problem?

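The analysis above reveals two interdependent constraints:

Constraint 1: The RW node cannot flush a dirty page whose newest_modification page_lsn is greater than the RO apply_lsn.

Constraint 2: The RO node cannot release an old parse buffer chunk until the RW node has flushed the corresponding old pages.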

Multiple versions and Aurora resolve Constraint 2, allowing the RO node to release the old parse buffer freely. This eliminates the issue of a full parse buffer. Therefore, if the RO node accesses pages that have not been flushed on the RW node but the RO node has already released the parse buffer, the desired version will be generated through LogIndex and the pages stored on disk.

However, Constraint 1 still needs to be addressed. The flushing of dirty pages on the RW node is limited by the RO node. In RW flushing, if the newest_modification page_lsn is greater than the RO apply_lsn, this page cannot be applied in Aurora. However, Aurora has the capability to remove this page from the buffer pool, whereas we cannot. This leads to a significant number of dirty pages remaining in the buffer pool. Eventually, there are too many dirty pages, resulting in the inability to find a free page. In the multiple-version engine, it is also crucial to support the release of pages from the buffer pool if the newest_modification page_lsn is greater than the RO apply_lsn.
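The effect of Constraint 1 on the buffer pool can be sketched as a search for a reusable page. This is a simplified model, not engine code: `find_reusable_page`, the `can_drop_unflushed` flag, and the page list are hypothetical, and the flag merely stands for the ability, described above, to remove a page from the buffer pool without flushing it.

```python
def find_reusable_page(pages, ro_apply_lsn, can_drop_unflushed):
    """pages: list of (name, newest_modification), with None for a clean page."""
    for name, newest_modification in pages:
        if newest_modification is None:
            return name  # clean page, can be reused directly
        if newest_modification <= ro_apply_lsn:
            return name  # dirty, but may be flushed and then reused
        if can_drop_unflushed:
            return name  # dropped from the pool without being flushed
    return None  # every page is dirty and held back by the RO apply_lsn


# Hypothetical pool in which every page is ahead of the RO apply_lsn.
pool = [("a", 1200), ("b", 1300), ("c", 1400)]
print(find_reusable_page(pool, ro_apply_lsn=1100, can_drop_unflushed=False))
print(find_reusable_page(pool, ro_apply_lsn=1100, can_drop_unflushed=True))
```

With the flag off, the search returns `None`, which corresponds to the "inability to find a free page" case. With the flag on, the first page can be released even though it has not been flushed.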
