By Kang Wang

Buffer Pool is a vital component of InnoDB and one of the most critical elements for database users. The basic features of Buffer Pool are not complicated, and the design and implementation are clear. However, as an industrial-level database product with a history of decades, it inevitably incorporates more and more features and optimizations in the details, which makes it a bit redundant and obscure.

This article focuses on the features of the Buffer Pool and introduces its interfaces, memory organization, page fetching, and flushing, interspersed with discussions of key optimization strategies. Additionally, a separate section is devoted to the more intricate aspect of concurrency control, particularly the design and implementation of mutexes.

In addition, although many features such as Change Buffer, page compaction, and Double Write Buffer are interspersed in the implementation of Buffer Pool, they do not belong to the core logic of Buffer Pool. Therefore, this article does not include these features. The relevant code in this article is based on MySQL 8.0.

Data in traditional databases is stored entirely on disk, but computation can only occur in memory. Therefore, a good mechanism is needed to coordinate data exchange between memory and disk. This is the purpose of the Buffer Pool. For the same reason, the Buffer Pool usually manages memory in fixed-length pages so that data can easily be swapped in from and out to disk.

In addition, there is a huge gap between disk and memory access performance. How to minimize disk I/O has become the core design goal of Buffer Pool. As introduced in the article Past and Present of the Recovery Mechanism of Database Failure, mainstream databases use REDO LOG plus UNDO LOG instead of limiting the order of flushing to ensure the ACID (atomicity, consistency, isolation, and durability) of the database. This approach also ensures that the Buffer Pool can focus more on implementing efficient cache policies.

As a whole, the Buffer Pool provides a very simple interface for external users, which is called the FIX-UNFIX interface. The reason why FIX and UNFIX are required is that the Buffer Pool does not know how long it takes for the upper layer to use the page. During this process, it is necessary to ensure that the page is correctly maintained in the Buffer Pool.

• The upper-layer caller first obtains the page number to be accessed through the index.

• The caller then passes this page number to the FIX interface of the Buffer Pool to obtain the page and access or modify it. A fixed page will not be swapped out of the Buffer Pool.

• Finally, the caller releases the fixed state of the page through the UNFIX interface.
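The three steps above can be illustrated with a minimal sketch. `ToyBufferPool`, `Page`, and `try_evict` are hypothetical names invented for this illustration and are not InnoDB code; the only point is that a page with a non-zero fix count is refused for eviction.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

// Hypothetical, simplified page descriptor; not InnoDB's buf_block_t.
struct Page {
  uint32_t page_no = 0;
  uint32_t fix_count = 0;  // > 0 means some caller is still using the page
};

// Toy buffer pool exposing only the FIX-UNFIX protocol.
class ToyBufferPool {
 public:
  // FIX: return the page and pin it so that it cannot be evicted.
  Page *fix(uint32_t page_no) {
    Page &p = pages_[page_no];  // "loads" the page on first access
    p.page_no = page_no;
    ++p.fix_count;
    return &p;
  }

  // UNFIX: the caller is done; at zero the page becomes evictable again.
  void unfix(Page *p) {
    assert(p->fix_count > 0);
    --p->fix_count;
  }

  // Eviction is refused while any caller still holds a FIX on the page.
  bool try_evict(uint32_t page_no) {
    auto it = pages_.find(page_no);
    if (it == pages_.end() || it->second.fix_count > 0) return false;
    pages_.erase(it);
    return true;
  }

 private:
  std::unordered_map<uint32_t, Page> pages_;
};
```

A caller would pair every `fix` with exactly one `unfix`; between the two calls, `try_evict` on that page returns false.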

The string of concurrent calls to the FIX-UNFIX interface of the Buffer Pool by different transactions and different threads is called the page reference string. This string itself is independent of the Buffer Pool but only depends on the load type, load concurrency, upper-layer index implementation, and data model on the database. The Buffer Pool design of the generic database is aimed at achieving the goal of minimizing disk I/O and efficient access with most page reference strings as much as possible.

To achieve this goal, a lot of work has been done within the Buffer Pool, and the replacement algorithm is the most important part. Since the memory capacity is usually much smaller than the disk capacity, the replacement algorithm needs to kick out the existing memory page and replace it with a new referenced page when the memory capacity reaches the upper limit. A good replacement algorithm can minimize the occurrence of Buffer Miss at a given Buffer Size. Ideally, we replace the farthest page in the future reference string each time, which is also the idea of the OPT algorithm, but it is impractical to obtain the future page string, so the OPT algorithm is just an ideal model as an optimal boundary for judging the replacement algorithm. In contrast, the random algorithm which is the worst boundary is based on completely random replacement.

In most cases, page references differ in popularity, which makes it possible for the replacement algorithm to predict the future string from the historical string. There are usually two indicators to refer to: the access distance of a page and the number of times it has been referenced.

Both the FIFO (First In First Out) algorithm, which only considers the access distance, and the LFU (Least Frequently Used) algorithm, which only considers the number of references, are flawed for particular strings. A good practical replacement algorithm should consider both indicators. Such algorithms include the LRU (Least Recently Used) algorithm and the Clock algorithm. This article then introduces in detail the implementation of the LRU replacement algorithm in InnoDB, in addition to how to implement efficient page lookup, memory management, flushing strategy, and concurrent access to pages.
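To make the comparison concrete, the following sketch counts buffer misses for FIFO, LRU, and OPT on one page reference string. It is a textbook-style simulation, not InnoDB code; the function names and the linear searches are choices made here for brevity.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// FIFO: evict the page that entered the buffer first.
std::size_t fifo_misses(const std::vector<int> &refs, std::size_t frames) {
  assert(frames > 0);
  std::vector<int> buf;  // front = oldest arrival
  std::size_t misses = 0;
  for (int page : refs) {
    if (std::find(buf.begin(), buf.end(), page) != buf.end()) continue;
    ++misses;
    if (buf.size() == frames) buf.erase(buf.begin());
    buf.push_back(page);
  }
  return misses;
}

// LRU: evict the page whose last reference is the oldest.
std::size_t lru_misses(const std::vector<int> &refs, std::size_t frames) {
  assert(frames > 0);
  std::vector<int> buf;  // front = least recently used, back = most recent
  std::size_t misses = 0;
  for (int page : refs) {
    auto it = std::find(buf.begin(), buf.end(), page);
    if (it != buf.end()) {
      buf.erase(it);  // hit: re-appended below as most recent
    } else {
      ++misses;
      if (buf.size() == frames) buf.erase(buf.begin());
    }
    buf.push_back(page);
  }
  return misses;
}

// OPT: evict the page whose next reference lies farthest in the future.
std::size_t opt_misses(const std::vector<int> &refs, std::size_t frames) {
  assert(frames > 0);
  std::vector<int> buf;
  std::size_t misses = 0;
  for (std::size_t i = 0; i < refs.size(); ++i) {
    if (std::find(buf.begin(), buf.end(), refs[i]) != buf.end()) continue;
    ++misses;
    if (buf.size() < frames) {
      buf.push_back(refs[i]);
      continue;
    }
    std::size_t victim = 0, farthest = 0;
    for (std::size_t b = 0; b < buf.size(); ++b) {
      std::size_t next = refs.size();  // "never referenced again"
      for (std::size_t j = i + 1; j < refs.size(); ++j) {
        if (refs[j] == buf[b]) {
          next = j;
          break;
        }
      }
      if (next > farthest) {
        farthest = next;
        victim = b;
      }
    }
    buf[victim] = refs[i];
  }
  return misses;
}
```

On the reference string 7 0 1 2 0 3 0 4 2 3 0 3 2 1 2 0 1 7 0 1 with three frames, these functions report 15 misses for FIFO, 12 for LRU, and 9 for OPT, which shows OPT acting as the lower bound that practical algorithms are measured against.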

First, let's look at how the features of Buffer Pool are used in InnoDB.

In the two articles B+ Tree Database Locking History and B+ Tree Database Fault Recovery Overview, it is pointed out that in order to achieve higher transaction concurrency, B+ tree databases distinguish logical content and physical content in concurrency control and fault recovery. Physical content refers to access to pages, while a logical transaction can initiate and submit multiple system transactions at different times. System transactions will be submitted in a short time without rollback. Usually, only a few pages are involved, such as parent and child nodes, data nodes, and undo nodes that are split or merged. System transactions use Redo + No Steal to ensure the Crash Safe of multiple pages. Latch, which is lighter than Lock, is used to ensure secure concurrent access between different system transactions.

In short, system transactions need to obtain several different pages in sequence, add a latch to the obtained pages, use or modify pages, and write redo logs to ensure the atomicity of multiple page accesses. In InnoDB, this system transaction is the MTR (Mini-Transaction). Buffer Pool provides the interface for obtaining the corresponding page through Page No. Therefore, it can be said that in InnoDB MTR is mainly used for Buffer Pool.

The following is the code for the upper layer to obtain the required page through Buffer Pool. buf_page_get_gen interface corresponds to the FIX interface mentioned above:

```cpp
buf_block_t* block = buf_page_get_gen(page_id, page_size, rw_latch, guess, buf_mode, mtr);
```

The buf_block_t is the memory management structure corresponding to the page, and the complete page content can be accessed through the block->frame pointer. The first parameter page_id specifies the required page number, and this page_id is usually obtained through the upper B Tree retrieval. The third parameter rw_latch specifies the read-write Latch mode to be added to the page. The last parameter MTR is the Mini-Transaction mentioned above. When the same MTR accesses multiple pages, this MTR structure is passed on each call to buf_page_get_gen.

In buf_page_get_gen, the required page needs to be obtained first. This process will be described in detail later. After that, two things are done: the FIX state of the page is marked (page->buf_fix_count) to prevent it from being swapped out, and the lock corresponding to the rw_latch mode (block->lock) is added to the page.

```cpp
...
/* 1. Mark the FIX state of the page to prevent it from being swapped out.
   Here, a counter buf_fix_count on the page structure is used. */
buf_block_fix(fix_block);
...
/* 2. Add a latch corresponding to the rw_latch mode, that is, the lock on the block, to the page */
mtr_memo_type_t fix_type;
switch (rw_latch) {
  ...
  case RW_S_LATCH:
    rw_lock_s_lock_inline(&fix_block->lock, 0, file, line);
    fix_type = MTR_MEMO_PAGE_S_FIX;
    break;
  ...
}
/* Finally, the block pointer and the locking mode are also recorded in the MTR structure to facilitate the release when MTR is committed */
mtr_memo_push(mtr, fix_block, fix_type);
...
```

The MTR structure contains one or more pages that already hold the lock. When the MTR is committed, the UNFIX and the lock release are performed together:

```cpp
static void memo_slot_release(mtr_memo_slot_t *slot) {
  switch (slot->type) {
    buf_block_t *block;

    case MTR_MEMO_BUF_FIX:
    case MTR_MEMO_PAGE_S_FIX:
    case MTR_MEMO_PAGE_SX_FIX:
    case MTR_MEMO_PAGE_X_FIX:
      block = reinterpret_cast<buf_block_t *>(slot->object);
      /* 1. UNFIX the page, that is, buf_fix_count-- */
      buf_block_unfix(block);
      /* 2. Release the lock of the page, block->lock */
      buf_page_release_latch(block, slot->type);
      break;
    ...
  }
  ...
}
```

This section has introduced how InnoDB uses the interface provided by Buffer Pool to access pages. Before specifically describing how to maintain pages to support efficient search and flushing, let's first understand the organizational structure of Buffer Pool as a whole.

To reduce concurrent access conflicts, InnoDB divides the Buffer Pool into innodb_buffer_pool_instances Buffer Pool instances. There is no lock conflict between instances, and each page belongs to one of the instances. In terms of structure, each instance is equivalent. Therefore, the following content is described from the perspective of one instance.

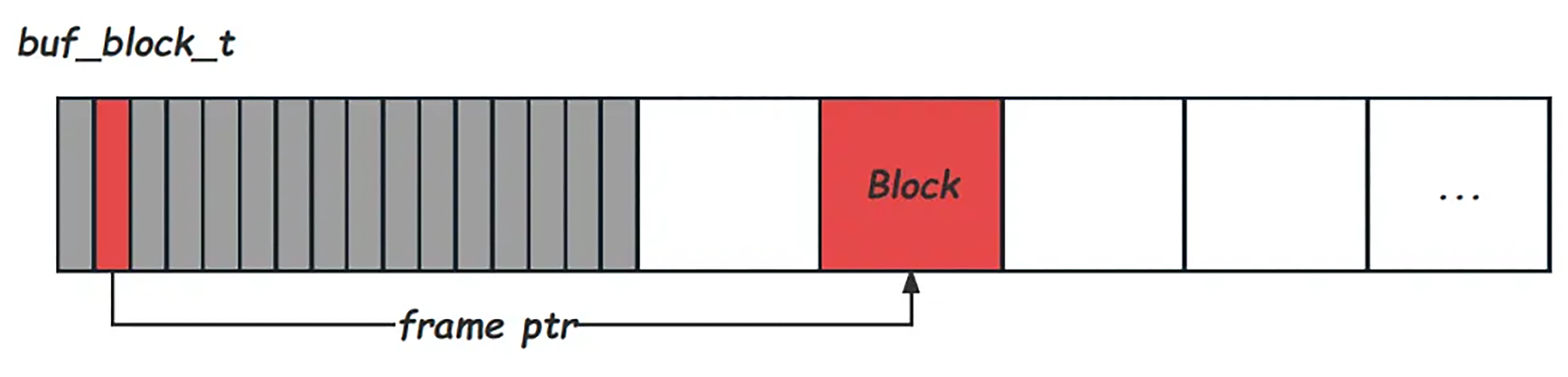

Buffer Pool divides the allocated memory into blocks with equal size and allocates a memory management structure buf_block_t for each block to maintain block-related state information, locking information, and memory data structure pointers. Blocks are the carrier of pages in memory. In many scenarios, a block is a page. According to the code, buf_block_t starts with buf_page_t which maintains page information (including page_id, modified lsn information oldest_modification, and newest_modification) so that they can be directly and explicitly converted:

```cpp
struct buf_block_t {
  buf_page_t page; /*!< page information; this must
                   be the first field, so that
                   buf_pool->page_hash can point
                   to buf_page_t or buf_block_t */
  ...
};
```

A single buf_block_t requires several hundred bytes of storage. Taking a 100 GB Buffer Pool and a 16 KB page size as an example, there will be about 6.5 million blocks, so the memory used by this many buf_block_t structures is considerable. To facilitate the allocation and management of this part of memory, InnoDB stores the buf_block_t array together with the block array in the same allocation. This is also the reason why the actual memory usage of the Buffer Pool is slightly larger than the configured innodb_buffer_pool_size. Later, to facilitate online resizing, the Buffer Pool divided the memory into 128 MB chunks by default starting from version 5.7. Each chunk has the following memory structure:

At startup, the buf_chunk_init function allocates all the memory required by Buffer Pool through mmap, so InnoDB does not really take up such a large amount of physical memory at startup, but it will increase with the allocation of pages. In addition, since the memory address of each block is required to be aligned according to the page size and buf_block_t does not necessarily have a divisor relationship with the page size, there may be some memory fragments that will not be used before the page array.
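The arithmetic behind these statements can be sketched as follows. The descriptor size of 400 bytes is an assumption standing in for "several hundred bytes", and `frame_count` and `blocks_per_chunk` are hypothetical helpers, not InnoDB functions. The sketch further assumes that the chunk starts on a page-size boundary and that the descriptor array precedes the frame array.

```cpp
#include <cassert>
#include <cstdint>

const uint64_t kKiB = 1024;
const uint64_t kMiB = 1024 * kKiB;
const uint64_t kGiB = 1024 * kMiB;

// Number of page frames needed to hold pool_bytes of page data.
uint64_t frame_count(uint64_t pool_bytes, uint64_t page_size) {
  assert(page_size > 0);
  return pool_bytes / page_size;
}

// How many (descriptor, frame) pairs fit into one chunk when the descriptor
// array comes first and the frame array must start on a page-size boundary.
uint64_t blocks_per_chunk(uint64_t chunk_bytes, uint64_t page_size,
                          uint64_t desc_size) {
  assert(page_size > 0 && desc_size > 0);
  uint64_t n = chunk_bytes / page_size;  // upper bound: no descriptors at all
  while (n > 0) {
    uint64_t desc_bytes = n * desc_size;
    // Round the end of the descriptor array up to the next page boundary;
    // the gap between the two is the unused fragment mentioned in the text.
    uint64_t frames_start =
        (desc_bytes + page_size - 1) / page_size * page_size;
    if (frames_start + n * page_size <= chunk_bytes) break;
    --n;
  }
  return n;
}
```

With these assumed numbers, `frame_count(100 * kGiB, 16 * kKiB)` gives 6,553,600 frames, and `blocks_per_chunk(128 * kMiB, 16 * kKiB, 400)` gives 7,996 blocks instead of the 8,192 frames a chunk could hold without descriptors.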

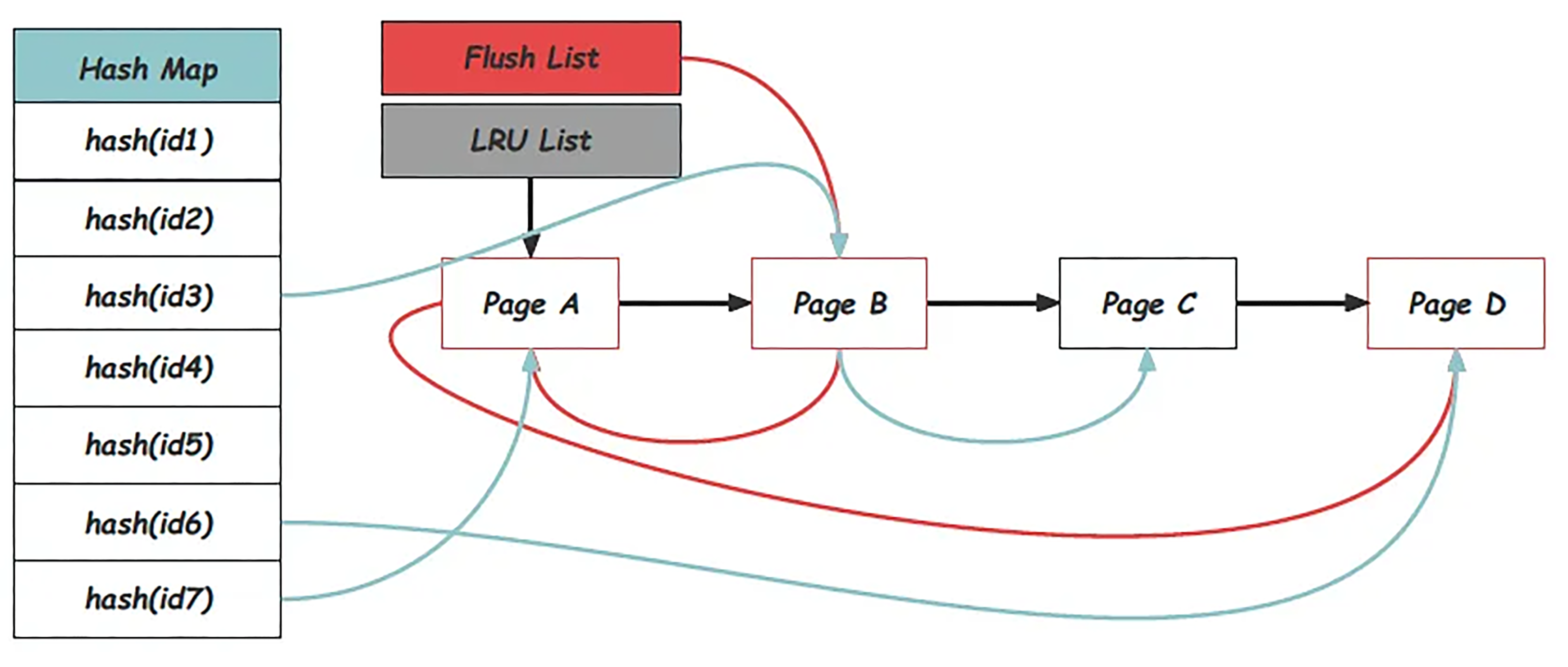

From the usage point of view, calling the interface buf_page_get_gen with a specified page_id is a centralized and frequent operation. InnoDB uses a Hash Map from page_id to blocks to support efficient queries. All pages in the Buffer Pool can be found in the Hash Map. This Hash Map resolves collisions by chaining and is accessed through the page_hash pointer in buf_pool_t.
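A chained hash table of this kind can be sketched as below. `PageId`, `PageDesc`, and `PageHash` are hypothetical stand-ins, and the hash function is purely illustrative; InnoDB's own types and hash function differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a page identifier.
struct PageId {
  uint32_t space;    // tablespace id
  uint32_t page_no;  // page number inside the tablespace
  bool operator==(const PageId &o) const {
    return space == o.space && page_no == o.page_no;
  }
};

// Hypothetical page descriptor that carries its own chain pointer.
struct PageDesc {
  PageId id;
  PageDesc *hash_next = nullptr;  // next descriptor in the same bucket
};

// Hash table whose buckets are singly linked chains of descriptors.
class PageHash {
 public:
  explicit PageHash(std::size_t n_buckets) : buckets_(n_buckets, nullptr) {
    assert(n_buckets > 0);
  }

  void insert(PageDesc *page) {
    PageDesc *&head = buckets_[bucket_of(page->id)];
    page->hash_next = head;  // push onto the front of the chain
    head = page;
  }

  PageDesc *find(const PageId &id) const {
    for (PageDesc *p = buckets_[bucket_of(id)]; p != nullptr;
         p = p->hash_next) {
      if (p->id == id) return p;
    }
    return nullptr;  // not cached: the caller must read the page from disk
  }

  bool remove(const PageId &id) {
    PageDesc **link = &buckets_[bucket_of(id)];
    for (; *link != nullptr; link = &(*link)->hash_next) {
      if ((*link)->id == id) {
        *link = (*link)->hash_next;  // unlink from the chain
        return true;
      }
    }
    return false;
  }

 private:
  std::size_t bucket_of(const PageId &id) const {
    // Illustrative fold of (space, page_no); not InnoDB's hash function.
    uint64_t key = (static_cast<uint64_t>(id.space) << 32) | id.page_no;
    return static_cast<std::size_t>(key % buckets_.size());
  }

  std::vector<PageDesc *> buckets_;
};
```

Keeping the chain pointer inside the descriptor means that inserting or removing a page allocates no extra memory, which matters for a structure touched on every page request.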

In addition, the Buffer Pool also maintains several linked lists in memory to manage blocks, of which the LRU List plays the role of the stack in the LRU replacement algorithm. Blocks are moved to the head of the LRU List when accessed, while pages that have not been accessed for a long time are gradually pushed toward the tail of the LRU List until they are swapped out.

The Free List maintains unused blocks. At any given time, every block is on either the LRU List or the Free List. Modified pages are called dirty pages in InnoDB, and dirty pages need to be flushed when appropriate. To determine the checkpoint position, InnoDB needs the smallest modification position among the dirty pages that have not yet been flushed. Therefore, a dirty page sequence ordered by oldest_modification is required. This is the purpose of the Flush List. Dirty pages must be blocks in use, so they must also be on the LRU List. The entire memory structure is shown in the following figure:

As the centralized external interface of the Buffer Pool, buf_page_get_gen first uses the given page ID to look up the corresponding page in the Hash Map. The simplest case is that the page is already in the Buffer Pool, so it can be marked with FIX, locked, and returned directly. A well-configured Buffer Pool can serve most page requests this way. The Buffer Pool section in the output of show engine innodb status contains specific hit rate statistics. If a page is not in the Buffer Pool, a free memory block must be found, its memory structure initialized, and the corresponding page loaded from disk.

The logic for obtaining a free block is implemented in the function buf_LRU_get_free_block.

All free blocks are maintained in the Free List, which can be directly picked out by buf_LRU_get_free_only for use. However, more commonly, the Free List has no blocks at all, and all blocks have already been on the LRU List. At this point, the LRU replacement algorithm is needed to kick out an existing page and allocate its block to the new page for use. The buf_LRU_scan_and_free_block will traverse the innodb_lru_scan_depth pages forward from the tail of the LRU. The selected page must meet three conditions: it is not a dirty page, not fixed by the upper layer, and not in the I/O process. If no page meets the conditions, the second round of traversal will cover the entire LRU.

In extreme conditions, you still cannot get a page that can be swapped out here, probably because there are too many dirty pages. At this time, you need to directly flush a page that is not fixed and has no I/O through buf_flush_single_page_from_LRU, and then turn it into a page that can be swapped out as mentioned above. The selected page that can be swapped out will be deleted from the LRU List and page Hash through buf_LRU_free_page and then added to the Free List for the page accessed this time.
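The search order described above (Free List first, then a bounded scan from the LRU tail, then a full scan, and finally a single-page flush) can be sketched as follows. `ToyPool`, `Block`, and their members are hypothetical and heavily simplified; the real functions also deal with latching, the page hash, and I/O.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <list>

// Hypothetical, simplified block state; field names are illustrative.
struct Block {
  int page_no = -1;
  bool dirty = false;
  bool io_fixed = false;
  unsigned fix_count = 0;
};

struct ToyPool {
  std::list<Block *> free_list;
  std::list<Block *> lru;   // front = head (hot), back = tail (cold)
  std::size_t flushed = 0;  // number of synchronous single-page flushes

  static bool replaceable(const Block *b) {
    return !b->dirty && b->fix_count == 0 && !b->io_fixed;
  }

  // Scan up to `depth` blocks from the LRU tail and free the first block
  // that is clean, unfixed, and not under I/O.
  bool scan_and_free(std::size_t depth) {
    std::size_t seen = 0;
    for (auto it = lru.rbegin(); it != lru.rend() && seen < depth;
         ++it, ++seen) {
      if (replaceable(*it)) {
        Block *b = *it;
        lru.erase(std::next(it).base());
        b->page_no = -1;
        free_list.push_back(b);
        return true;
      }
    }
    return false;
  }

  // Last resort: synchronously flush one unfixed dirty block near the tail.
  bool flush_single_page() {
    for (auto it = lru.rbegin(); it != lru.rend(); ++it) {
      Block *b = *it;
      if (b->dirty && b->fix_count == 0 && !b->io_fixed) {
        b->dirty = false;  // stands in for the actual disk write
        ++flushed;
        return true;
      }
    }
    return false;
  }

  Block *get_free_block(std::size_t scan_depth) {
    if (free_list.empty()) {
      // 1) bounded scan from the tail, 2) full scan, 3) flush one dirty page.
      bool freed = scan_and_free(scan_depth) || scan_and_free(lru.size());
      if (!freed && flush_single_page()) freed = scan_and_free(lru.size());
      if (!freed) return nullptr;  // every block is fixed or under I/O
    }
    assert(!free_list.empty());
    Block *b = free_list.front();
    free_list.pop_front();
    return b;
  }
};
```

In this sketch a caller that receives `nullptr` would have to wait and retry; how InnoDB handles that situation is outside the scope of the illustration.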

The obtained free block is first initialized by buf_page_init, in which the buf_block_t field, including the buf_page_t field, is initialized and populated, then added to the Hash Map, and added to the LRU List by buf_LRU_add_block. Finally, the frame field of buf_block_t is populated with page data through disk I/O. During I/O reading, the I/O FIX state of the page is marked to prevent the page from being swapped out by other threads buf_page_get_gen, and the lock of buf_block_t is held to prevent the page content from being accessed by other threads.

To make better use of the sequential read performance of the disk, InnoDB also supports two read-ahead methods. Every time a page is read successfully, InnoDB judges whether to load the surrounding pages into the Buffer Pool as well. Random read-ahead checks whether a large number of pages in the same extent have been accessed recently and can be configured through innodb_random_read_ahead, while sequential read-ahead checks whether a large number of pages are being accessed sequentially and can be configured through innodb_read_ahead_threshold.

The strict LRU replacement algorithm moves a page to the head of the LRU List each time it is accessed, which increases the popularity of recently accessed pages and makes them less likely to be swapped out. However, there is a problem with such an implementation. A scan operation in the database may access a large number of pages, possibly more than the memory capacity, and these pages may never be used again after the scan is completed. In this process, the entire LRU List is replaced, resulting in a low Buffer Pool hit rate for a period of time after the scan. This is of course what we do not want to see.

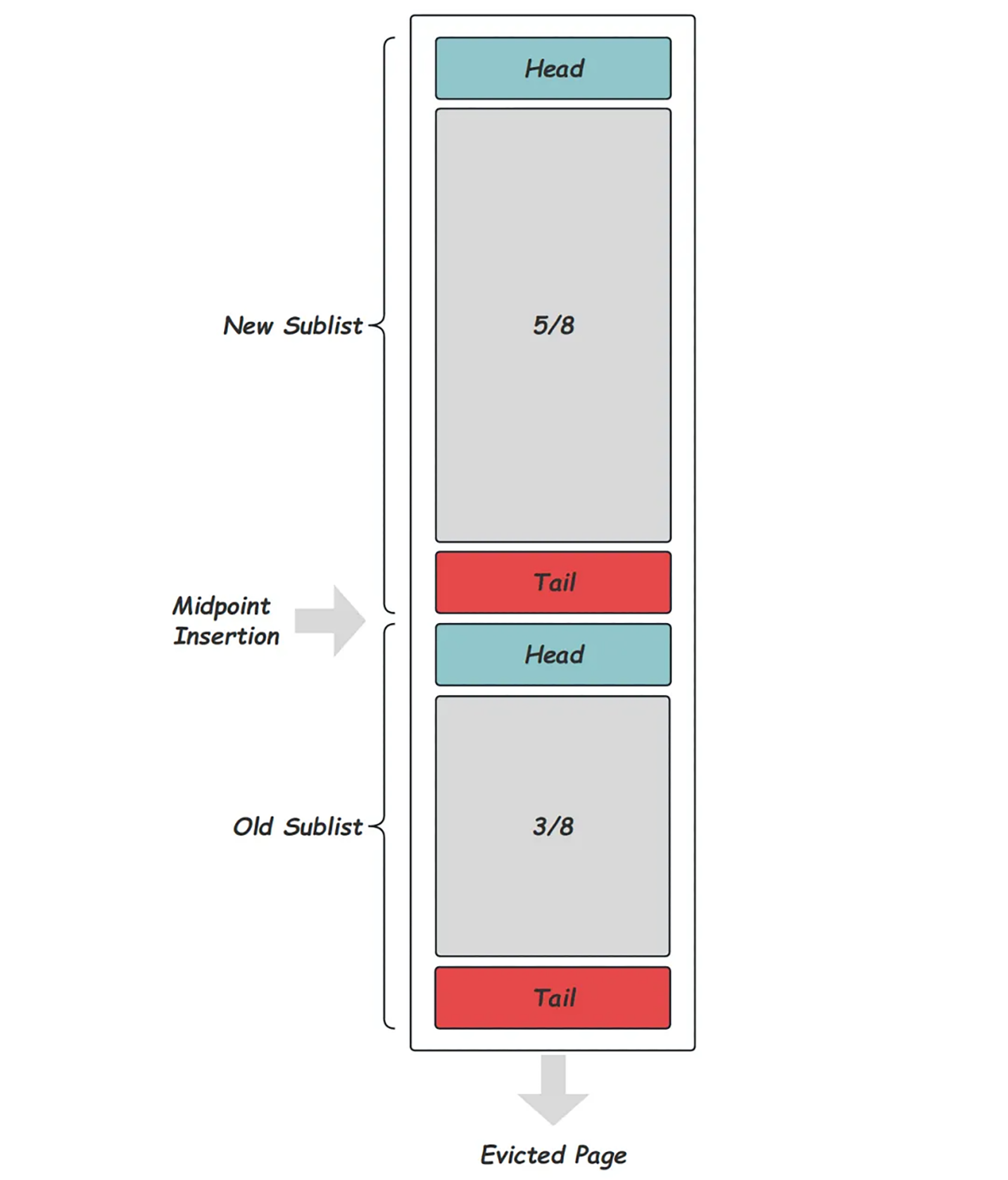

InnoDB splits the LRU List into two segments. The following figure shows the LRU implementation. A midpoint is used to split the LRU List into two segments: New Sublist and Old Sublist. Each time a page needs to be swapped out, the page will be selected from the tail of the list.

When the length of the LRU List exceeds BUF_LRU_OLD_MIN_LEN(512), the new insertion will start to maintain the midpoint position. The implementation is a pointer called LRU_old which points to the position of the LRU List about 3/8 from the tail. After that, the new buf_LRU_add_block will insert the page into the position of LRU_old instead of the header of the LRU list. Every time a page is inserted or deleted, it is necessary to try to adjust the LRU_old position through buf_LRU_old_adjust_len and keep the LRU_old pointer at the 3/8 position as much as possible. The reason why it is said to be as much as possible is that InnoDB sets a BUF_LRU_OLD_TOLERANCE(20) tolerance interval to avoid frequent adjustment of LRU_old.
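The effect of midpoint insertion can be illustrated with a toy model. The class below is a sketch under simplifying assumptions: the LRU List is modeled as two `std::list` objects whose boundary plays the role of LRU_old, the minimum length and the tolerance interval are omitted, and `make_young` is a plain move to the head. None of the names come from InnoDB.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <list>

// Toy midpoint-insertion LRU. The conceptual LRU List is `young_` followed
// by `old_`; the boundary between the two plays the role of LRU_old.
class MidpointLru {
 public:
  explicit MidpointLru(std::size_t capacity) : capacity_(capacity) {
    assert(capacity > 0);
  }

  // Insert a newly read page at the midpoint (head of the old sublist).
  // Returns the evicted page number, or -1 if nothing was evicted.
  int insert(int page) {
    int victim = -1;
    if (size() == capacity_) victim = evict_tail();
    old_.push_front(page);
    adjust();
    return victim;
  }

  // Move a page to the head of the whole list (head of the young sublist).
  void make_young(int page) {
    young_.remove(page);
    old_.remove(page);
    young_.push_front(page);
    adjust();
  }

  bool contains(int page) const {
    return std::find(young_.begin(), young_.end(), page) != young_.end() ||
           std::find(old_.begin(), old_.end(), page) != old_.end();
  }
  std::size_t size() const { return young_.size() + old_.size(); }
  std::size_t old_size() const { return old_.size(); }

 private:
  int evict_tail() {
    std::list<int> &tail = old_.empty() ? young_ : old_;
    int victim = tail.back();
    tail.pop_back();
    return victim;
  }

  // Keep the old sublist at about 3/8 of the total length by moving the
  // boundary one block at a time (no tolerance interval in this sketch).
  void adjust() {
    const std::size_t target = size() * 3 / 8;
    while (old_.size() > target) {  // boundary moves toward the tail
      young_.push_back(old_.front());
      old_.pop_front();
    }
    while (old_.size() < target && !young_.empty()) {  // toward the head
      old_.push_front(young_.back());
      young_.pop_back();
    }
  }

  std::size_t capacity_;
  std::list<int> young_, old_;
};
```

With a capacity of 8, inserting pages 1 to 8 leaves three pages in the old sublist. A subsequent scan that inserts 20 more pages only cycles through those three old slots, so the five pages in the young sublist survive the scan.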

So, when will the page be inserted into the Header? Every time a page is obtained through the buf_page_get_gen, whether it is a direct hit or a disk swap-in, it will judge whether to move the page to the Header position of the LRU List through the buf_page_make_young_if_needed. There are two situations to choose to move:

In particular, a page in the Old Sublist is moved to the head only if the time since its first access exceeds the time configured by the innodb_old_blocks_time parameter. As a result, no matter how big the scan is, only about 3/8 of the LRU List will be polluted at most, thus avoiding the drop in Buffer Pool efficiency mentioned before.

The modified page in the Buffer Pool is called a dirty page. The dirty page eventually needs to be written back to the disk. This is the flush process of the Buffer Pool. In addition to the LRU List, dirty pages are also inserted into the Flush List. Pages on the Flush List are roughly ordered by oldest_modification. However, due to concurrency, disorder within a small range (the capacity of log_sys->recent_closed) is accepted. Of course, this needs to be taken into account when confirming the checkpoint position.

First, let's look at the generation process of dirty pages. When the DB needs to modify a page, it will specify the latch mode of RW_X_LATCH when buf_page_get_gen obtains the page to add X Lock to the obtained page. After modifying the content of the page, it will write the corresponding Redo Log into the exclusive MTR buffer. When MTR is committed, it will copy the log to the global Log Buffer, add the page to the Flush List of the Buffer Pool through the buf_flush_note_modification function, and update the oldest_modification and newest_modification of the page with the start_lsn and end_lsn of MTR.
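The bookkeeping at MTR commit can be sketched as follows. The sketch assumes that MTRs commit strictly in LSN order (so the small-range disorder mentioned earlier does not occur) and that oldest_modification is set only when a clean page becomes dirty. `DirtyPage`, `FlushList`, and `checkpoint_limit` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <list>

using lsn_t = uint64_t;

// Hypothetical page descriptor keeping only the two LSN fields from the text.
struct DirtyPage {
  int page_no = 0;
  lsn_t oldest_modification = 0;  // 0 means "clean"
  lsn_t newest_modification = 0;
};

struct FlushList {
  std::list<DirtyPage *> pages;  // front = most recently dirtied, back = oldest

  // Called when an MTR that modified `page` commits with start_lsn/end_lsn.
  void note_modification(DirtyPage *page, lsn_t start_lsn, lsn_t end_lsn) {
    assert(start_lsn <= end_lsn);
    if (page->oldest_modification == 0) {
      // First modification since the last flush: the page becomes dirty.
      page->oldest_modification = start_lsn;
      pages.push_front(page);
    }
    page->newest_modification = end_lsn;
  }

  // After the page has been written back, it is clean again.
  void flushed(DirtyPage *page) {
    pages.remove(page);
    page->oldest_modification = 0;
  }

  // The checkpoint cannot advance past the oldest unflushed modification.
  lsn_t checkpoint_limit(lsn_t current_lsn) const {
    return pages.empty() ? current_lsn : pages.back()->oldest_modification;
  }
};
```

Because a page keeps its original oldest_modification when it is modified again, a frequently updated page can hold the checkpoint back until it is flushed, which is why the tail of the Flush List matters for redo space.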

Dirty pages eventually need to be written back to the disk, and the timing of this write-back is actually determined by the fault recovery policy of the database. InnoDB uses the Redo + Undo policy described in Past and Present of the Recovery Mechanism of Database Failure to completely separate the flushing from the commit time of transactions, making the Buffer Pool flushing policy more flexible. In theory, assuming that the Buffer Pool is large enough, it must be the most efficient to cache pages in the Buffer Pool all the time and write pages after all modifications are completed, because this minimizes disk I/O that is slower than memory access. However, this is obviously unrealistic. There are two main influencing factors. These two factors also determine the timing of InnoDB Buffer Pool flushing:

1. Total Number of Dirty Pages:

Since the capacity of the Buffer Pool is usually much smaller than the total amount of disk data, the old page needs to be swapped out through LRU when the memory is insufficient. As mentioned earlier, dirty pages cannot be swapped out directly.

The factor of the total number of dirty pages tends to preferentially flush pages near the LRU tail.

2. Total Number of Active Redo:

It is the total amount of redo after the checkpoint LSN. As introduced in In-depth Analysis of REDO LOG in InnoDB, the redo log in InnoDB is reused cyclically across the number of log files configured by innodb_log_files_in_group. A lagging checkpoint leads to too much active redo and therefore insufficient remaining redo space, and the position of the oldest dirty page is the most direct factor that limits the advance of the checkpoint.

The total amount of active redo tends to preferentially flush pages with the smallest oldest_modification, that is, the tail position of the Flush List.

According to these two factors, InnoDB's Buffer Pool provides three modes of flush. Single Flush deals with the extreme case that the total amount of dirty pages is too large, which is triggered by the user thread when it cannot find a clean page that can be swapped out at all, and it flushes one page synchronously at a time. Sync Flush deals with the extreme case that the total amount of active redo is too large, which is triggered when the available redo space is seriously insufficient or a checkpoint is required. Sync Flush will flush all pages whose oldest_modification is smaller than the LSN as much as possible, so a large number of pages may be involved and it will seriously affect user requests. Therefore, ideally, both of these two modes should be avoided as much as possible. More often, you should rely on the Batch Flush that has been running in the background.

Batch Flush consists of a page coordinator thread and a group of page cleaner threads. The specific number is bound to the number of instances in the Buffer Pool. All threads share a page_cleaner_t structure for statistics and state management.

In most cases, the page coordinator is periodically awakened. It calculates the number of pages that need to be flushed in each round with page_cleaner_flush_pages_recommendation, sends this requirement to all page cleaner threads, and waits for all page cleaners to complete the flushing. The page coordinator also takes on a flushing task itself. When page_cleaner_flush_pages_recommendation determines the number of pages to flush, it comprehensively considers the current total amount of dirty pages, the total amount of active redo, and the I/O capacity of the disk. The disk I/O capacity can be specified by the parameters innodb_io_capacity and innodb_io_capacity_max. The following is the simplified calculation formula:

```cpp
n_pages = (innodb_io_capacity * (ut_max(pct_for_dirty, pct_for_lsn)) / 100
           + avg_page_rate
           + pages_for_lsn
          ) / 3;

/* The upper limit is limited by the parameter innodb_io_capacity_max */
if (n_pages > srv_max_io_capacity) {
  n_pages = srv_max_io_capacity;
}
```

1. Total Static Dirty Pages (pct_for_dirty):

It is a flushing ratio calculated based on the total number of dirty pages.

If the percentage of dirty pages is lower than innodb_max_dirty_pages_pct_lwm, flushing is not required. If it is higher than innodb_max_dirty_pages_pct_lwm, dirty pages are flushed in proportion to the ratio of the dirty page percentage to innodb_max_dirty_pages_pct; that is, once the dirty page percentage exceeds innodb_max_dirty_pages_pct, pct_for_dirty becomes 100%.

In other words, the pct_for_dirty is a value that linearly increases by the dirty page rate from 0 to 100 between pct_lwm and pct.

2. Static Active Redo (pct_for_lsn):

It is a flushing ratio calculated based on the current active redo.

If the amount of active redo exceeds the value log_sys->max_modified_age_async close to the full redo space, or the user configures innodb_adaptive_flushing, the current active redo water level is used to calculate a pct_for_lsn. The implementation here is not a purely linear relationship, but the pct_for_lsn growth rate is also accelerating with the increase of active redo.

3. Dynamic Dirty Page Changes (avg_page_rate):

Because n_pages is evaluated periodically, it is obviously not enough to only consider the static water level. Here, the dirty page growth rate in this period is also calculated as a factor.

4. Dynamic Active Redo Changes (pages_for_lsn):

Similarly, the growth rate of redo in this period will also be considered here. The calculation method here is to project the LSN after the growth of Redo in unit time onto the oldest_modification of the page in BP, and the number of pages covered is the pages_for_lsn value.
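Putting the four factors together, the formula can be turned into a small worked example. The sketch follows the description in this article (pct_for_dirty rising linearly from 0 to 100 between the low water mark and the maximum) rather than the MySQL source, takes pct_for_lsn, avg_page_rate, and pages_for_lsn as given inputs, and uses hypothetical names throughout.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Inputs to one round of the recommendation; all names are illustrative.
struct FlushInputs {
  double dirty_pct;          // current percentage of dirty pages
  double dirty_pct_lwm;      // innodb_max_dirty_pages_pct_lwm
  double dirty_pct_max;      // innodb_max_dirty_pages_pct
  uint64_t pct_for_lsn;      // ratio derived from active redo (taken as given)
  uint64_t avg_page_rate;    // dynamic dirty page factor (taken as given)
  uint64_t pages_for_lsn;    // dynamic active redo factor (taken as given)
  uint64_t io_capacity;      // innodb_io_capacity
  uint64_t io_capacity_max;  // innodb_io_capacity_max
};

// 0 below the low water mark, 100 above the maximum, linear in between.
uint64_t pct_for_dirty(const FlushInputs &in) {
  if (in.dirty_pct <= in.dirty_pct_lwm) return 0;
  if (in.dirty_pct >= in.dirty_pct_max) return 100;
  double ratio = (in.dirty_pct - in.dirty_pct_lwm) /
                 (in.dirty_pct_max - in.dirty_pct_lwm);
  return static_cast<uint64_t>(ratio * 100.0);
}

// Average of the static and the two dynamic factors, capped by the maximum.
uint64_t recommend_n_pages(const FlushInputs &in) {
  assert(in.io_capacity <= in.io_capacity_max);
  uint64_t pct = std::max(pct_for_dirty(in), in.pct_for_lsn);
  uint64_t n_pages =
      (in.io_capacity * pct / 100 + in.avg_page_rate + in.pages_for_lsn) / 3;
  return std::min(n_pages, in.io_capacity_max);
}
```

For example, with 50% dirty pages, a low water mark of 10, a maximum of 90, pct_for_lsn of 20, avg_page_rate of 400, pages_for_lsn of 100, and innodb_io_capacity of 200, pct_for_dirty is 50 and the recommendation is (100 + 400 + 100) / 3 = 200 pages.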

The number of n_pages calculated by the above process will be divided equally among multiple page cleaners, and then they will be awakened. Each page cleaner will be responsible for its own independent Buffer Pool instance, so there is no conflict between them. After each page cleaner is awakened, it will flush dirty pages from the LRU List and Flush List successively, and the next round of flushing will be initiated only after one round of flushing is completed.

The reason for flushing from the LRU List is to keep enough free pages, so it will only be initiated when the pages on the Free List are less than innodb_lru_scan_depth. If it is not a dirty page, it can be directly deleted from LRU with buf_LRU_free_page. Otherwise, it is necessary to call the buf_flush_page_and_try_neighbors to flush the dirty page first. As can be seen from the function name, when flushing each page, it will try to flush other dirty pages around it. This is mainly to make use of the sequential write performance of the disk, and you can configure it through innodb_flush_neighbors to enable or disable it. If the pages on the LRU List are not enough to flush, you need to traverse the Flush List and call buf_flush_page_and_try_neighbors to flush.

All these flushing methods eventually enter buf_flush_write_block_low to write to disk. Except for single flush, all flush operations are performed asynchronously. After the I/O is completed, buf_page_io_complete is called back in the I/O thread to perform finishing work, including clearing I/O FIX states, releasing page locks, and deleting from the Flush List and LRU List.

There may be a large number of threads competing for access to the Buffer Pool at the same time in InnoDB, including all user threads and background threads that obtain pages through the buf_page_get_gen, the flush thread mentioned above, and the I/O thread.

As the data center of the entire database, the ability of Buffer Pool to support concurrent access directly affects the performance of the database. It can also be seen from the code that there are a large number of lock-related logic. As an industrial-level database implementation, these logics have been optimized in a large number of details, which increases the complexity of the code to a certain extent. The idea of lock optimization is to reduce lock granularity, reduce lock time, and eliminate lock requests. This section introduces the design and implementation of locks in the Buffer Pool.

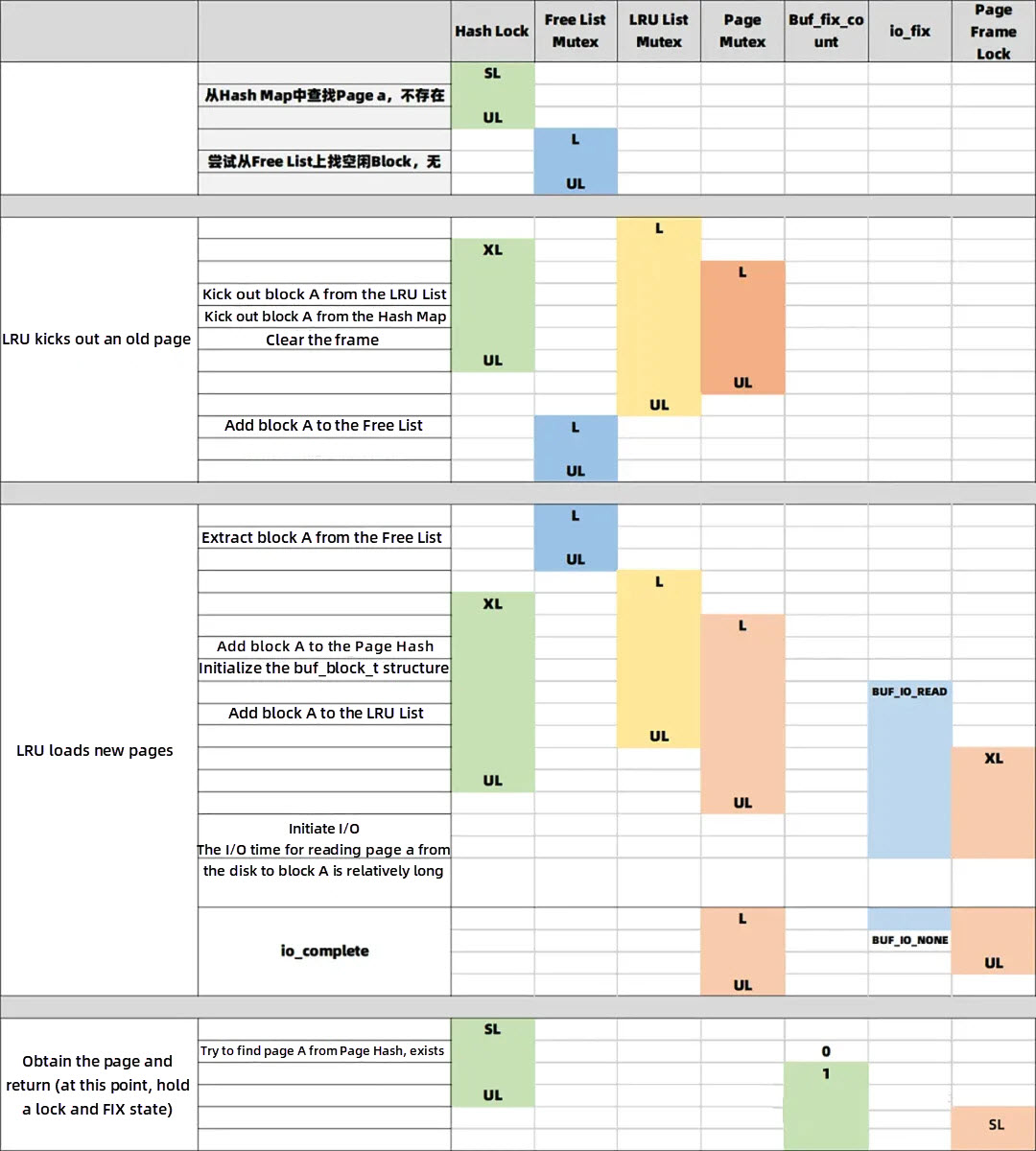

According to the level of lock protection objects, the locks involved in the Buffer Pool are divided into: Hash Map Lock to protect hash tables, List Mutex to protect list structures, Block Mutex to protect structures in buf_block_t, and Page Frame Lock to protect real page content.

The first step of all buf_page_get_gen requests is to judge whether Block exists in the Buffer Pool through Hash Map. It is conceivable that the competition here is extremely strong. InnoDB adopts the method of partition lock. The number of partitions can be configured through innodb_page_hash_locks(16). Each partition will maintain an independent read-write lock. Each request is mapped to a partition by page_id and then requests the read-write lock of this partition. In this way, only requests that map to the same partition will conflict.
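The partition lock idea can be sketched with the C++17 standard library. `PartitionedPageHash` is a hypothetical class: it uses `std::shared_mutex` in place of InnoDB's own read-write lock, a fixed count of 16 partitions, and a modulo mapping chosen only for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <mutex>
#include <shared_mutex>
#include <unordered_map>

// Toy page hash split into partitions, each guarded by its own rw-lock.
class PartitionedPageHash {
 public:
  static constexpr std::size_t kPartitions = 16;

  static std::size_t partition_of(uint64_t page_id) {
    return page_id % kPartitions;  // illustrative mapping only
  }

  void insert(uint64_t page_id, int block) {
    assert(block >= 0);  // -1 is reserved for "not found"
    Partition &p = parts_[partition_of(page_id)];
    std::unique_lock<std::shared_mutex> guard(p.lock);  // exclusive
    p.map[page_id] = block;
  }

  // Returns the block index, or -1 if the page is not cached.
  int find(uint64_t page_id) const {
    const Partition &p = parts_[partition_of(page_id)];
    std::shared_lock<std::shared_mutex> guard(p.lock);  // shared
    auto it = p.map.find(page_id);
    return it == p.map.end() ? -1 : it->second;
  }

 private:
  struct Partition {
    mutable std::shared_mutex lock;
    std::unordered_map<uint64_t, int> map;
  };
  std::array<Partition, kPartitions> parts_;
};
```

Lookups of pages that fall into different partitions never touch the same lock, and lookups within one partition can still proceed concurrently because they only take the shared mode.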

As mentioned above, blocks in the Buffer Pool are organized in lists. The most basic ones are the LRU List of all blocks in use, the Free List of free blocks, and the Flush List of dirty pages. Each of these lists has its own independent Mutex. To read or modify a list, you must hold the Mutex of that list. The purpose of these locks is to protect the data structure of the corresponding list itself, so their scope is kept as small as possible and covers only access and modification of the list data structure.

Each Page's control structure buf_block_t has a block->mutex to protect some state information of this block, such as io_fix, buf_fix_count, and access time. Compared with Hash Map and List Mutex, the lock granularity of Block Mutex is much smaller. It is cost-effective to use Block Mutex to avoid holding the lock of the upper container for a longer period of time.

In addition, information such as io_fix and buf_fix_count can also significantly reduce the competition for page locks. For example, when the Buffer Pool needs to kick out an old page from LRU, it needs to make sure that the page is not being used and is not doing I/O operations. This is a very common behavior, but it does not care about the content of the page itself. At this point, holding the Block Mutex briefly and checking the io_fix state and the buf_fix_count count is obviously lighter than contending for the page frame lock.

In addition to Block Mutex, buf_block_t also has a read-write lock structure block->lock. This read-write lock protects the real page content, that is, block->frame. This lock is the latch that protects pages mentioned in the article B+ Tree Database Locking History. This lock may need to be acquired during traversal and modification of B+ Trees. In addition, the I/O process involving pages also needs to hold this lock. The I/O reading process needs to hold the X Lock because it directly modifies the content of the memory frame, while the I/O writing process holds the SX Lock to avoid other write I/O operations occurring at the same time.

When multiple of these locks need to be acquired at the same time, in order to avoid deadlocks between different threads, InnoDB stipulates a strict locking order, that is, Latch Order. As shown below, all locks must be acquired from bottom to top in this order. This order is consistent with the use of most scenes, but there are exceptions. For example, when selecting pages from the Flush List to flush, because the Flush List Mutex level is relatively low, you can see the situation of releasing Flush List Mutex and then obtaining Block Mutex.

```cpp
enum latch_level_t {
  ...
  SYNC_BUF_FLUSH_LIST, /* Flush List Mutex */
  SYNC_BUF_FREE_LIST,  /* Free List Mutex */
  SYNC_BUF_BLOCK,      /* Block Mutex */
  SYNC_BUF_PAGE_HASH,  /* Hash Map Lock */
  SYNC_BUF_LRU_LIST,   /* LRU List Mutex */
  ...
};
```

To better understand the locking process of the Buffer Pool, imagine such a scenario: a user read request needs to obtain page a through buf_page_get_gen. First, we search the Hash Map and find that it is not in memory, and then check the Free List and find that there is no free page. We have to kick out an old page from LRU's Tail, add block A to the Free List, and then read page a into block A from the disk. Finally, we get page a and hold its lock and FIX state. The locking process is shown in the following table:

As can be clearly seen in this table:

This article focuses on the core features of the Buffer Pool in InnoDB. First, it introduces its background from a macro perspective, including design objectives, interfaces, problems encountered, and the choice of replacement algorithms. Then, from the user's point of view, it introduces the centralized interface and call mode exposed by the Buffer Pool as a whole. After that, it describes the detailed process of obtaining pages inside the Buffer Pool and the implementation of the LRU replacement algorithm. Next, it introduces the trigger factors and process of dirty page flushing. Finally, it organizes how the Buffer Pool safely achieves high concurrency and high performance.
