Download the "Cloud Knowledge Discovery on KDD Papers" whitepaper to explore 12 KDD papers and knowledge discovery from 12 Alibaba experts.

By Xichuan Niu, Bofang Li, Chenliang Li, Rong Xiao, Haochuan Sun, Hongbo Deng, Zhenzhong Chen

Shop search is an important service provided by Taobao, China's largest e-commerce platform. Taobao currently has nearly 10 million shops, several million of which were active in the past 7 days. The shop search scenario registers tens of millions of unique visitors (UVs) per day and a gross merchandise volume (GMV) in the hundreds of millions.

You can click the tab shown in the following figure to use the shop search feature of Taobao on its PC client or mobile client.

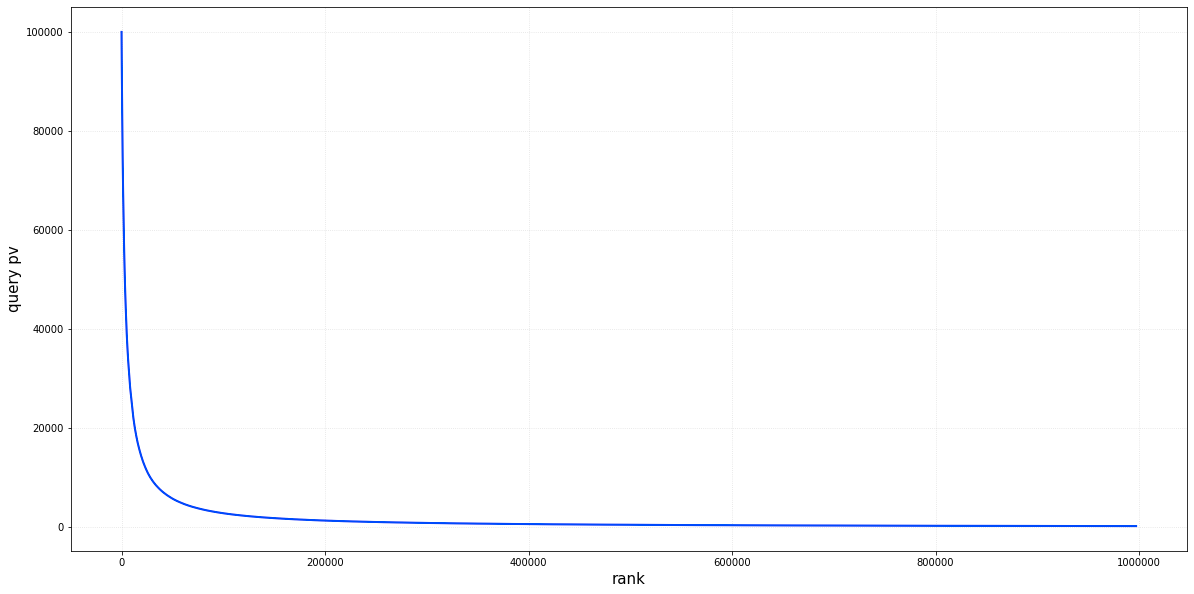

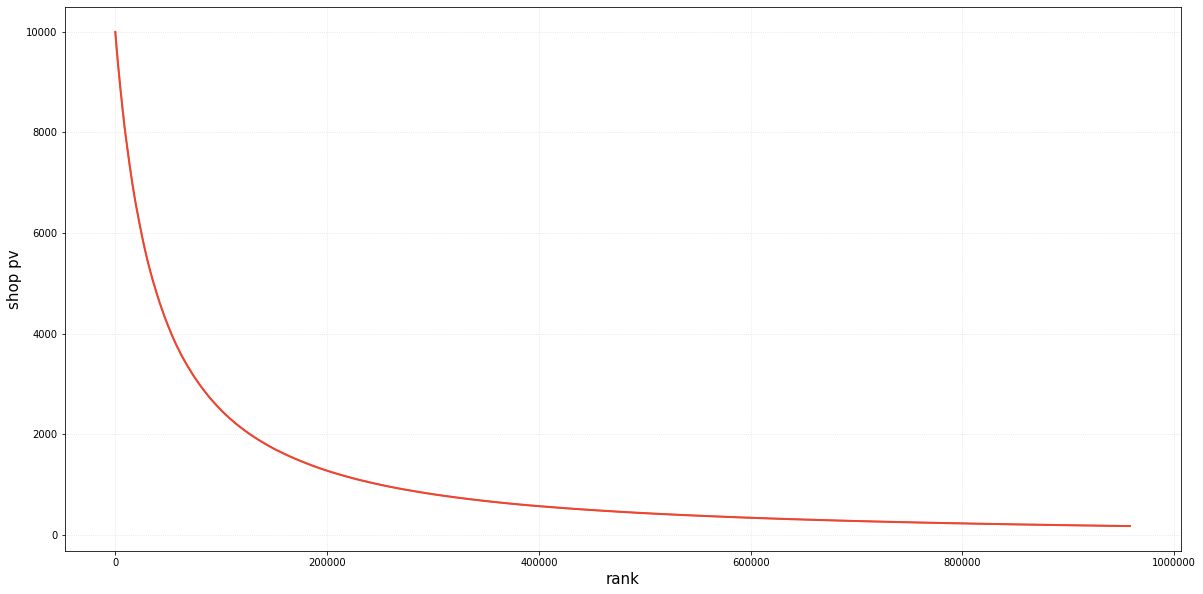

On the Taobao platform, the vast majority of exposure goes to queries, items, and shops that are frequently searched for, which leaves a long tail of rarely searched ones. The following figure shows the relationship between the search frequency of queries and shops and their rank. The y-axis shows the number of daily query page views (QPVs) or shop page views (SPVs), and the x-axis shows the rank of the queries or shops.

We build a dual heterogeneous graph attention network (DHGAN) to improve search results for long-tail queries and for long-tail shops that are highly relevant to a query. DHGAN attentively aggregates the heterogeneous and homogeneous neighbors of queries and shops to enhance their vector representations, which helps relieve the long-tail problem.

Why does heterogeneous information improve long-tail performance? We observed the following examples during training and prediction.

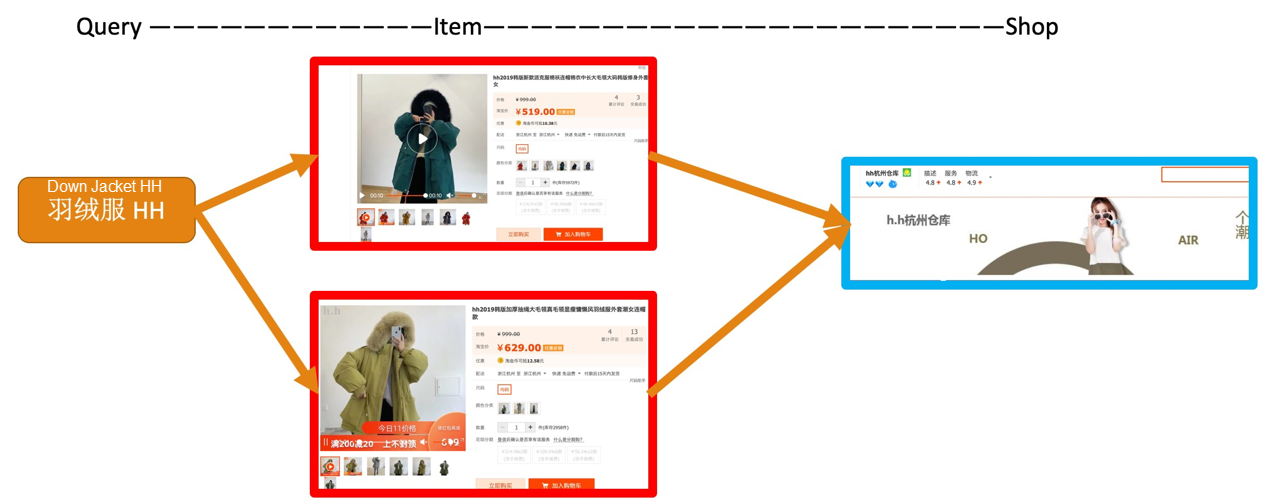

In the preceding figure, the query and the target shop that users searched for never co-occur in the training data, and they are not semantically related. However, they can be associated through query-item and item-shop embeddings.

This is a more complex example. The query and target shop in the preceding figure do not co-occur in the training data, but they can be associated by using heterogeneous information.

In the preceding examples, the neural collaborative filtering (NCF) baseline alone fails to make the correct prediction, whereas the DHGAN model described next succeeds.

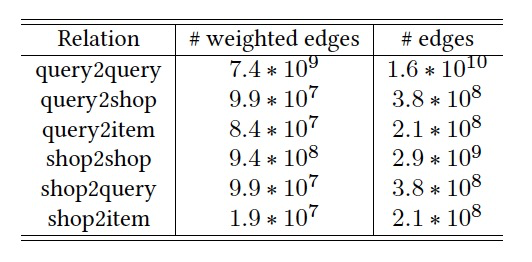

We connect the query, shop, and item nodes from shop search scenarios with heterogeneous edges.

For example, the query2query relation connects a query to other queries associated with the same shop, and the shop2item relation connects a shop to an item in that shop. The following figure shows the detailed edge statistics: the edges column shows the total number of heterogeneous edges, and the weighted edges column shows the number of heterogeneous edges that remain after duplicate edges are aggregated.
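The difference between the edges and weighted edges columns can be illustrated with a minimal sketch. It assumes a hypothetical log format of `(source_type, source_id, target_type, target_id)` records and assumes that deduplication keeps the number of duplicates as the edge weight; the article does not specify the exact aggregation scheme, and `build_weighted_edges` is an illustrative name only.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical raw log record: (source_type, source_id, target_type, target_id).
# Each record stands for one observed interaction, such as a shop click after a query.
LogRecord = Tuple[str, str, str, str]
EdgeKey = Tuple[str, str, str]  # (relation, source_id, target_id)


def build_weighted_edges(records: Iterable[LogRecord]) -> Dict[EdgeKey, int]:
    """Merge duplicate edges and keep the duplicate count as the edge weight."""
    weights: Counter = Counter()
    for src_type, src_id, dst_type, dst_id in records:
        relation = f"{src_type}2{dst_type}"  # e.g. "query2shop", "shop2item"
        weights[(relation, src_id, dst_id)] += 1
    return dict(weights)


raw_logs = [
    ("query", "running shoes", "shop", "shop_A"),
    ("query", "running shoes", "shop", "shop_A"),  # duplicate interaction
    ("query", "running shoes", "item", "item_42"),
    ("shop", "shop_A", "item", "item_42"),
]
weighted_edges = build_weighted_edges(raw_logs)
print(f"{len(raw_logs)} edges -> {len(weighted_edges)} weighted edges")
```

Here the four raw edges collapse into three weighted edges, and the repeated query2shop edge gets weight 2.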

Heterogeneous graph: A graph structure with multiple types of nodes and multiple types of edge relations. Its counterpart is the homogeneous graph, which contains only one type of node and one type of edge.

Graph neural network (GNN): A category of algorithms that apply neural networks to irregular graph structures. GNNs are primarily used to learn a semantic vector for each graph node and then apply the learned node representations to downstream tasks, such as node classification and link prediction.

Attention mechanism: A mechanism that imitates human attention and is widely used in natural language processing and computer vision. Its core goal is to select, from a large amount of information, the information that is most critical to the current task.

We propose DHGAN to solve the long-tail problem. The model first builds a heterogeneous graph from the logs of user behavior in shop search and item search, and then mines the homogeneous and heterogeneous neighbors of queries and shops in this graph. It uses these neighbors to enhance the vector representations of queries and shops, and then transfers knowledge and data from item search, using item titles to bridge the text semantic gap between user queries and shop names. Finally, the model introduces user features to personalize the recall results.

The overall framework of the model is a two-tower architecture, as shown in the following figure.

The inputs on the left side are the user and the query, including the query's homogeneous and heterogeneous neighbors and the titles of the items sold under the query in item search. The DHGAN and transfer knowledge from product search (TKPS) modules aggregate this data into one vector. (For more information, see the next section.) The inputs on the right side are shops. As with the query, the DHGAN and TKPS modules aggregate the shop information into one vector. Finally, the inner product of the two vectors at the top of the two towers measures the relevance between the query and the shop, and serves as the basis for scoring recalled shops.
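The scoring step at the top of the two towers can be sketched as follows. This is a simplified illustration, assuming the towers have already produced fixed-length vectors (the toy values below are made up) and scoring every shop with a brute-force loop; the article does not describe how recall is served in production, and `recall_shops` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def inner_product(a: Vector, b: Vector) -> float:
    """Relevance score between the query-tower and shop-tower outputs."""
    return sum(x * y for x, y in zip(a, b))


def recall_shops(query_vec: Vector, shop_vecs: Dict[str, Vector],
                 top_k: int) -> List[Tuple[str, float]]:
    """Score every shop against the query and return the top_k highest scores."""
    scored = [(shop, inner_product(query_vec, vec)) for shop, vec in shop_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy tower outputs; in the model these would come from the DHGAN and TKPS modules.
query_vec = [0.2, 0.9, 0.1]
shop_vecs = {
    "shop_A": [0.1, 0.8, 0.0],
    "shop_B": [0.9, 0.1, 0.3],
    "shop_C": [0.3, 0.6, 0.5],
}
for shop, score in recall_shops(query_vec, shop_vecs, top_k=2):
    print(shop, round(score, 2))
```

Because the score is a plain inner product, the two towers never need to see each other's inputs, so shop vectors can be computed independently of any particular query.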

The DHGAN module uses the heterogeneous and homogeneous neighbors of queries and shops to enhance their vector representations, as shown in the preceding figure.

Take a user query as an example. We first mine its homogeneous neighbors (query neighbors) and heterogeneous neighbors (shop neighbors). Then, we apply two layers of Attention Net over the two types of neighbors to fuse their information. The first layer fuses the homogeneous and the heterogeneous information separately: the homogeneous information is fused directly by the Attention Net, while the heterogeneous information is first processed by the Heterogeneous Neighbor Transformation Matrix (HNTM), which maps the query into the vector space of the shop. The second layer fuses the homogeneous and heterogeneous results, so the final representation is enhanced by both types of neighbors. The Attention Net layers applied to shops have exactly the same structure as those applied to queries, but use different network parameters.
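The two-layer fusion for a query can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each Attention Net is replaced by parameter-free dot-product attention (softmax over key-candidate dot products), the HNTM is a plain matrix applied to the query as the text describes, and the identity matrix below is a placeholder for a learned one. `attend` and `dhgan_query_repr` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: Matrix, v: Vector) -> Vector:
    # Multiply a (rows x cols) matrix by a vector of length cols.
    return [dot(row, v) for row in m]


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(key: Vector, candidates: List[Vector]) -> Vector:
    """Dot-product attention: softmax(key . c) weights, then a weighted sum of candidates."""
    weights = softmax([dot(key, c) for c in candidates])
    dim = len(candidates[0])
    return [sum(w * c[d] for w, c in zip(weights, candidates)) for d in range(dim)]


def dhgan_query_repr(query: Vector, query_neighbors: List[Vector],
                     shop_neighbors: List[Vector], hntm: Matrix) -> Vector:
    # Layer 1, homogeneous branch: attend over query neighbors directly.
    homo = attend(query, query_neighbors)
    # Layer 1, heterogeneous branch: HNTM maps the query into shop space
    # before it attends over the shop neighbors.
    hete = attend(matvec(hntm, query), shop_neighbors)
    # Layer 2: fuse the homogeneous and heterogeneous summaries.
    return attend(query, [homo, hete])


query = [1.0, 0.0]
query_neighbors = [[0.9, 0.1], [0.0, 1.0]]
shop_neighbors = [[0.5, 0.5], [1.0, 0.0]]
hntm = [[1.0, 0.0], [0.0, 1.0]]  # identity placeholder; learned in a real model
print(dhgan_query_repr(query, query_neighbors, shop_neighbors, hntm))
```

The shop side would reuse the same functions with its own, separately learned parameters, matching the point that the query and shop Attention Nets share structure but not weights.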

As shown in the preceding figure, we again take a user query as an example and first transfer knowledge from item search. Specifically, we take the titles of the item neighbors of the same query in item search, and then vectorize both the target query text and these item titles, obtaining each vector representation with a pooling operation. Finally, we aggregate the item title vectors, concatenate the result with the query vector, and apply a transformation to obtain an enhanced text representation of the query. For a shop, we similarly use the text of the items in the shop to enhance the text representation of the shop.
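The text-enhancement steps (pool, aggregate, concatenate, transform) can be sketched as below. Several pieces are assumptions made for illustration: whitespace tokenization and a toy embedding table stand in for the real text encoder, mean pooling is used both for pooling tokens and for aggregating titles, and the "transformation" is taken to be a single linear layer. `tkps_query_repr` is a hypothetical name.

```python
from typing import Dict, List

Vector = List[float]


def mean_pool(vectors: List[Vector], dim: int) -> Vector:
    """Average a list of vectors; an empty list pools to the zero vector."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def embed_text(text: str, table: Dict[str, Vector], dim: int) -> Vector:
    # Whitespace tokenization and a lookup table stand in for the real text encoder.
    return mean_pool([table[tok] for tok in text.split() if tok in table], dim)


def tkps_query_repr(query: str, item_titles: List[str],
                    table: Dict[str, Vector], weight: List[Vector]) -> Vector:
    dim = len(next(iter(table.values())))
    query_vec = embed_text(query, table, dim)
    # Aggregate the item-neighbor titles into one vector.
    titles_vec = mean_pool([embed_text(t, table, dim) for t in item_titles], dim)
    concat = query_vec + titles_vec  # list concatenation: length 2 * dim
    # Linear transform back to dim: weight has dim rows of length 2 * dim.
    return [sum(w * x for w, x in zip(row, concat)) for row in weight]


table = {"red": [1.0, 0.0], "dress": [0.0, 1.0], "silk": [1.0, 1.0]}
weight = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
print(tkps_query_repr("red dress", ["silk dress", "red silk dress"], table, weight))
```

Concatenation keeps the query's own text signal separate from the borrowed item-title signal, and the transform then decides how much of each to use.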

The base loss of our model is the standard cross-entropy loss, defined as follows.

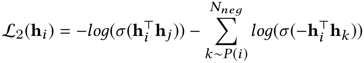
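Because the formula itself is only available as an image, the following is a sketch under an assumption: that the cross entropy is the pointwise binary form applied to the query-shop inner product as a logit, with label 1 for positive (clicked) shops and 0 for negative samples. It uses the numerically stable "with logits" formulation rather than computing the sigmoid directly.

```python
import math
from typing import List


def bce_with_logits(scores: List[float], labels: List[int]) -> float:
    """Mean binary cross entropy, where each score is an un-normalized logit.

    Uses the stable form max(s, 0) - s * y + log(1 + exp(-|s|)),
    which equals -[y * log(sigmoid(s)) + (1 - y) * log(1 - sigmoid(s))].
    """
    total = 0.0
    for s, y in zip(scores, labels):
        total += max(s, 0.0) - s * y + math.log1p(math.exp(-abs(s)))
    return total / len(scores)


# Scores would be query-shop inner products; label 1 = positive shop, 0 = negative sample.
print(bce_with_logits([2.0, -1.0, 0.0], [1, 0, 1]))
```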

Intuitively, the neighbor nodes of a query or shop should have representations similar to that query or shop. Therefore, in addition to the cross-entropy loss, we define a neighbor proximity loss, as shown in the following formula.

Here, h_i is the representation of the current node, h_j is the representation of a neighbor of that node and serves as a positive sample, and h_k is the representation of a randomly sampled node and serves as a negative sample.
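The formula is not reproduced in this text, so the sketch below assumes the common negative-sampling form −log σ(h_i·h_j) − log σ(−h_i·h_k), which matches the roles of h_i, h_j, and h_k described above. Treat it as an illustration of that assumed form, not as the paper's exact definition.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)) for both signs of x.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def neighbor_proximity_loss(h_i: Vector, h_j: Vector, h_k: Vector) -> float:
    """-log sigmoid(h_i . h_j) - log sigmoid(-h_i . h_k): pull the neighbor h_j
    toward h_i and push the random node h_k away (assumed form)."""
    return -log_sigmoid(dot(h_i, h_j)) - log_sigmoid(-dot(h_i, h_k))


close = neighbor_proximity_loss([3.0, 0.0], [3.0, 0.0], [-3.0, 0.0])
far = neighbor_proximity_loss([3.0, 0.0], [-3.0, 0.0], [3.0, 0.0])
print(close < far)
```

The loss is small when the neighbor aligns with h_i and the random node points away, and large in the reverse case, which is the "neighbors should be similar" intuition expressed as a training signal.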

The final loss of the model combines the two losses with a regularization term. The weight of the neighbor proximity loss and the regularization penalty are controlled by the α and λ parameters, respectively.

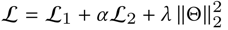
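A minimal sketch of the combination, assuming the weighted-sum form L = L_CE + α·L_NP + λ·‖Θ‖² with an L2 penalty over all parameters (the exact formula is in the image above and may differ). The default α = 0.001 is the value the article reports for the final model; λ has no reported value, so it is left as a required argument.

```python
from typing import List


def total_loss(ce_loss: float, np_loss: float, params: List[List[float]],
               lam: float, alpha: float = 0.001) -> float:
    """Cross entropy + alpha * neighbor proximity + lam * squared L2 norm of params."""
    l2 = sum(w * w for tensor in params for w in tensor)
    return ce_loss + alpha * np_loss + lam * l2


print(total_loss(0.5, 2.0, [[1.0, 2.0]], lam=0.01))
```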

To demonstrate DHGAN's advantage on long-tail queries and shops, we constructed two special datasets in addition to the full test set: a hard dataset and a long-tail dataset. The hard dataset contains query-shop pairs that do not appear in the training data, and the long-tail dataset contains queries and shops that appear only once in the training data. The following figure shows the detailed dataset statistics. The interactions column gives the number of positive records in the training and test data, and the queries, shops, items, and users columns give the number of respective records in the training and test data. Note that item data was not used during testing to avoid feature leakage.

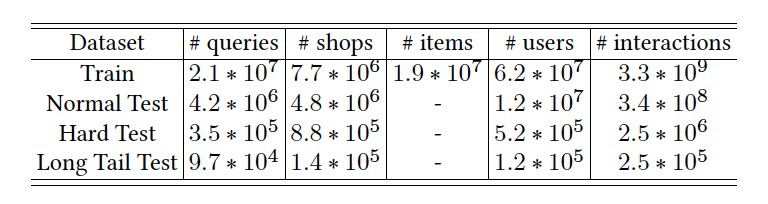
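The construction of the two evaluation subsets can be sketched as follows. The long-tail rule is an assumption: the article says the set contains queries and shops that appear only once in training, and this sketch keeps a test pair if its query or its shop has exactly one training occurrence. `build_eval_sets` is a hypothetical name.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]  # (query, shop)


def build_eval_sets(train_pairs: List[Pair],
                    test_pairs: List[Pair]) -> Tuple[List[Pair], List[Pair]]:
    seen = set(train_pairs)
    query_freq = Counter(q for q, _ in train_pairs)
    shop_freq = Counter(s for _, s in train_pairs)
    # Hard set: test pairs never observed together in training.
    hard = [p for p in test_pairs if p not in seen]
    # Long-tail set (assumed rule): the query or the shop appears exactly once in training.
    long_tail = [(q, s) for q, s in test_pairs
                 if query_freq[q] == 1 or shop_freq[s] == 1]
    return hard, long_tail


train = [("q1", "s1"), ("q1", "s2"), ("q2", "s1")]
test = [("q1", "s1"), ("q2", "s2"), ("q3", "s1")]
hard, long_tail = build_eval_sets(train, test)
print(hard, long_tail)
```

A pair can land in both subsets, as ("q2", "s2") does here, so the two sets are not meant to be disjoint.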

We compared DHGAN against 11 baselines and tested versions of DHGAN with and without personalized information (the latter denoted DHGAN_{NP}). The final results are as follows.

DHGAN improves all metrics by 1% on the full test set, and by 4% to 8% on the hard and long-tail datasets that the optimization targets.

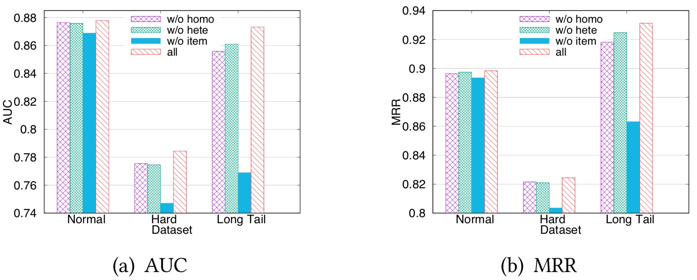

In the following figure, w/o homo means removing the query2query and shop2shop data, w/o hete means removing the query2shop and shop2query data, and w/o item means removing the query2item and shop2item data. As the figure shows, each type of neighbor information improves model performance, and the heterogeneous item information yields the largest improvement.

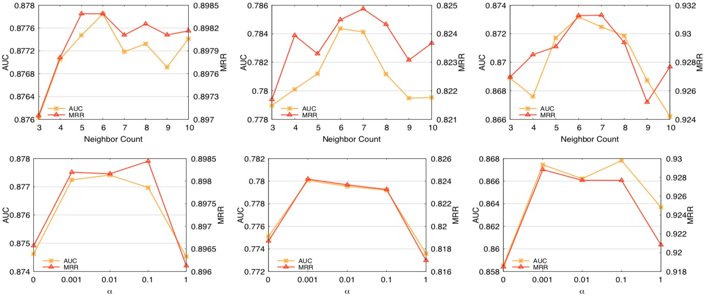

We also analyzed the neighbor count and the loss weight α. As the following figure shows, both parameters must be tuned to achieve the best performance. In the final model, the neighbor count is set to 6 and α is set to 0.001.

Taobao shop search recall is challenged by the long-tail problem and by the semantic gap between queries and shop names. To address these issues, DHGAN uses heterogeneous information to supplement the semantics of queries and shops in shop search recall scenarios, achieving better performance. Currently, the user side of the model contains only profile information. In the future, we will introduce heterogeneous information such as user-item and user-shop relations to further improve its performance.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
