Download the "Cloud Knowledge Discovery on KDD Papers" whitepaper to explore 12 KDD papers and knowledge discovery from 12 Alibaba experts.

By Di Yin, Tan Jiwei (Zuoshu), Zhang Zhe (Fanxuan), Deng Hongbo (Fengzi), Huang Shujian, and Chen Jiajun

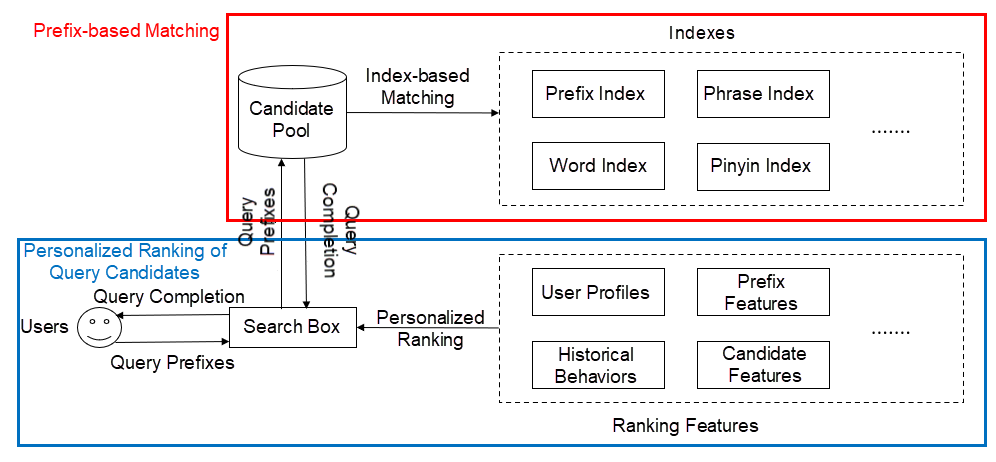

Query Auto-Completion (QAC) is one of the most significant features of modern search engines. The following figure shows how it works. For example, when a user enters a query prefix such as "blue" in the search box, the QAC system automatically recommends a ranked list of completed queries, such as "Bluetooth headset", from which the user can choose one to continue searching. This feature improves users' search efficiency.

The performance requirements for QAC include the following two aspects:

Like most search and recommendation tasks, QAC generally adopts a two-stage, pipeline-style architecture: matching and ranking. In the matching stage, a candidate pool is built from popular queries mined from historical search logs, and query indexes are created along multiple dimensions, such as prefixes, pinyin, and individual words segmented from a phrase. These indexes are then used to perform multi-feature matching, and the results are merged into an initial candidate set. In the ranking stage, a personalized learning-to-rank model scores and ranks the candidates in that set. Finally, the top-ranked candidates are presented to the user, in order, as the recommended query completions.
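The two-stage flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Taobao's implementation: the toy candidate pool, the `build_indexes`, `match`, and `rank` helper names, and the popularity-plus-history scoring function are all hypothetical, and only prefix and word indexes are shown (pinyin indexing is omitted).

```python
from collections import defaultdict

def build_indexes(query_counts, max_prefix_len=10):
    """Build a prefix index and a word index over the mined candidate pool."""
    prefix_index, word_index = defaultdict(set), defaultdict(set)
    for query in query_counts:
        for i in range(1, min(len(query), max_prefix_len) + 1):
            prefix_index[query[:i]].add(query)
        for word in query.split():
            word_index[word].add(query)
    return prefix_index, word_index

def match(prefix, prefix_index, word_index):
    """Matching stage: merge hits from every index into one initial candidate set."""
    return set(prefix_index.get(prefix, ())) | set(word_index.get(prefix, ()))

def rank(candidates, score_fn, top_k=3):
    """Ranking stage: score every candidate and keep the top_k (ties broken alphabetically)."""
    return sorted(candidates, key=lambda q: (-score_fn(q), q))[:top_k]

# Hypothetical candidate pool: query -> historical search count.
query_counts = {"bluetooth headset": 120, "blue dress": 80, "blue jeans": 50, "red dress": 70}
prefix_index, word_index = build_indexes(query_counts)
user_history_words = {"headset", "earbuds"}  # hypothetical personalization signal

def toy_score(query):
    # Stand-in for a learned learning-to-rank model: popularity plus a bonus
    # for each word the query shares with the user's recent behaviors.
    return query_counts[query] + 100 * len(set(query.split()) & user_history_words)

top = rank(match("blue", prefix_index, word_index), toy_score)
print(top)  # ['bluetooth headset', 'blue dress', 'blue jeans']
```

The word index is what lets a prefix match a query in which it appears as a non-initial word: `match("dress", prefix_index, word_index)` returns both "blue dress" and "red dress", even though neither starts with "dress".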

With the development of search and recommendation technologies, QAC has gone through many iterations. However, most existing QAC systems still have the following two major problems, which seriously affect QAC performance and user experience.

To solve the two problems, we proposed a multi-view multi-task attentive (M2A) approach to QAC, which considers user behaviors in multiple views. This approach comprises two parts:

To improve the prediction accuracy of the generation and ranking models, we need to answer a key question when designing them: how can we better model and exploit users' historical sequential behaviors across multiple views?

In search engines, user behavior typically means issuing a query or browsing content. To extract more comprehensive historical behavior information, we introduced two distinct views of historical sequential behaviors: searched queries and browsed items. These sequential behaviors have the following characteristics:

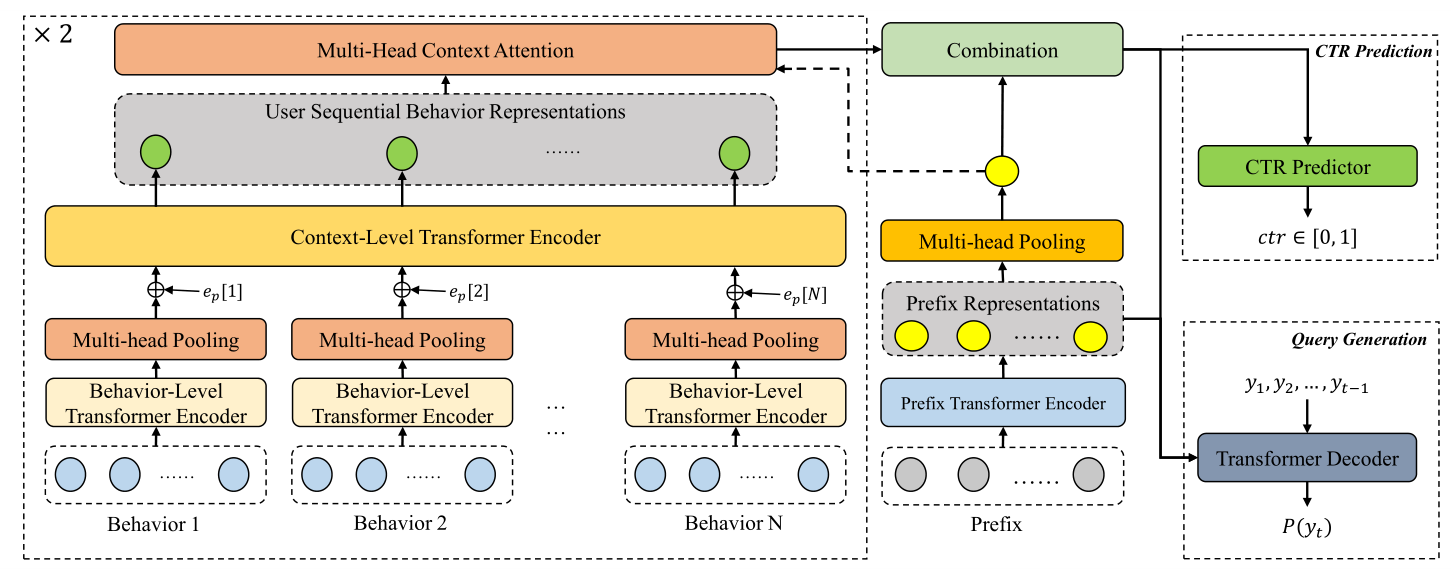

Given the preceding characteristics, we introduced a self-attention-based Transformer encoder into multi-view modeling and proposed a new hierarchical encoder model for sequential behaviors. As shown in the left block of the following figure, this encoder model consists of a Behavior-Level Transformer Encoder and a Context-Level Transformer Encoder.

To model each behavior, most recommender systems rely entirely on representations learned from user interactions and ignore the content of the behavior itself, which leads to poor representations of long-tail behaviors. To solve this problem, we proposed using a Transformer encoder to encode the word-level and phrase-level information within each behavior. This effectively improves the representations of long-tail behaviors and also generalizes to new behaviors that have never been seen.

On top of this, we introduced a multi-head pooling mechanism to obtain a higher-level representation of each behavior, and fed these behavior-level representations into the Context-Level Transformer Encoder. Its self-attention mechanism integrates contextual information across behaviors so that their semantics are understood more accurately, and it also explicitly models the dependencies between different behaviors.
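The hierarchical encoder described above can be sketched in pure Python. This is a simplified illustration under loud assumptions: a single-head self-attention layer with a residual connection stands in for each Transformer encoder (no feed-forward sublayer, layer normalization, or positional encoding), the weights are random rather than trained, and the class and function names, the hidden size `DIM`, and the toy vocabulary are hypothetical. It shows each behavior being encoded from its words, reduced to one vector by multi-head pooling, and then contextualized by a second self-attention layer across behaviors.

```python
import math
import random

DIM = 8  # hypothetical hidden size

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(m, v):
    """Multiply matrix m (nested lists, rows x cols) by vector v (length cols)."""
    return [dot(row, v) for row in m]

def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs, wq, wk, wv):
    """Single-head scaled dot-product self-attention plus a residual connection
    (a simplified stand-in for a Transformer encoder layer)."""
    qs = [matvec(wq, x) for x in xs]
    ks = [matvec(wk, x) for x in xs]
    vs = [matvec(wv, x) for x in xs]
    out = []
    for q, x in zip(qs, xs):
        weights = softmax([dot(q, k) / math.sqrt(len(q)) for k in ks])
        mixed = [sum(w * v[d] for w, v in zip(weights, vs)) for d in range(len(x))]
        out.append([a + b for a, b in zip(x, mixed)])
    return out

def multi_head_pool(xs, score_vectors, value_projs):
    """Multi-head pooling: each head scores the tokens with its own vector, takes an
    attention-weighted sum of projected tokens, and the head outputs are concatenated."""
    pooled = []
    for score_vec, wv in zip(score_vectors, value_projs):
        alphas = softmax([dot(score_vec, x) for x in xs])
        values = [matvec(wv, x) for x in xs]
        pooled.extend(sum(a * v[d] for a, v in zip(alphas, values)) for d in range(len(wv)))
    return pooled

class HierarchicalEncoder:
    def __init__(self, vocab, heads=2, seed=0):
        rng = random.Random(seed)
        def mat(rows, cols):
            return [[rng.gauss(0, 0.3) for _ in range(cols)] for _ in range(rows)]
        # Randomly initialized parameters stand in for trained weights.
        self.emb = {w: [rng.gauss(0, 1) for _ in range(DIM)] for w in vocab}
        self.word_layer = [mat(DIM, DIM) for _ in range(3)]     # behavior-level encoder
        self.context_layer = [mat(DIM, DIM) for _ in range(3)]  # context-level encoder
        self.score_vectors = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(heads)]
        self.value_projs = [mat(DIM // heads, DIM) for _ in range(heads)]

    def encode_behavior(self, text):
        """Encode one query or item title from its words (assumes at least one known word)."""
        words = [self.emb[w] for w in text.split() if w in self.emb]
        return multi_head_pool(self_attention(words, *self.word_layer),
                               self.score_vectors, self.value_projs)

    def encode_sequence(self, behaviors):
        """Encode each behavior, then let the behaviors attend to each other."""
        return self_attention([self.encode_behavior(b) for b in behaviors], *self.context_layer)

enc = HierarchicalEncoder(["bluetooth", "headset", "wireless", "earbuds", "blue", "dress"])
context = enc.encode_sequence(["bluetooth headset", "wireless earbuds", "blue dress"])
print(len(context), len(context[0]))  # 3 8
new_vec = enc.encode_behavior("wireless headset")  # never seen as a whole behavior
```

Because each behavior is built from its words, a behavior never seen as a whole, such as "wireless headset" here, still receives a representation as long as its words are in the vocabulary.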

The hierarchical Transformer encoder yields more accurate and informative representations of sequential behaviors. However, not all historical behaviors are strongly relevant to the user's current search intention. To reduce redundant information during prediction, we introduced a multi-head attention module that uses the query prefix representation to attend over the historical sequential behaviors. The module then weights and aggregates the most relevant information and fuses it with the query prefix. The fused result serves as the basis for subsequent model prediction.
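The prefix-guided filtering step can be sketched as multi-head attention in which the prefix representation supplies the attention query and the encoded behaviors supply the keys and values. This is an assumption about the exact formulation, which the article does not spell out. The function name, the fixed projection matrices (each head simply reads half of the dimensions, where a trained model would learn its projections), and the concatenation-based fusion are all hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(m, v):
    return [dot(row, v) for row in m]

def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def prefix_attention(prefix_vec, history_vecs, heads):
    """The prefix attends over encoded behaviors, one (wq, wk, wv) triple per head.
    Returns the fused vector and each head's attention weights."""
    attended, head_weights = [], []
    for wq, wk, wv in heads:
        q = matvec(wq, prefix_vec)
        weights = softmax([dot(q, matvec(wk, h)) / math.sqrt(len(q)) for h in history_vecs])
        values = [matvec(wv, h) for h in history_vecs]
        attended.extend(sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(wv)))
        head_weights.append(weights)
    # Hypothetical fusion step: concatenate the prefix with the attended history summary.
    return prefix_vec + attended, head_weights

# Fixed projections for readability: head 1 reads dims 0-1, head 2 reads dims 2-3.
FIRST_HALF = [[1, 0, 0, 0], [0, 1, 0, 0]]
SECOND_HALF = [[0, 0, 1, 0], [0, 0, 0, 1]]
heads = [(FIRST_HALF,) * 3, (SECOND_HALF,) * 3]

prefix = [1.0, 0.0, 0.0, 0.0]
history = [[4.0, 0.0, 0.0, 0.0],   # behavior aligned with the prefix
           [0.0, 0.0, 4.0, 0.0]]   # unrelated behavior
fused, weights = prefix_attention(prefix, history, heads)
```

In the example, the first head puts most of its weight (about 0.94) on the behavior aligned with the prefix, while the second head, which sees no prefix signal in its dimensions, spreads its weight evenly.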

After obtaining this prediction basis, we need to decide how to predict the user's complete query. Two approaches are currently used for QAC. The first treats the QAC module as a small search engine and recommends results through retrieval and ranking. The second treats QAC as a text generation task and uses a neural network model to generate a complete query automatically. Both rely on users' historical sequential behaviors for decision-making; they differ in their training objective functions and in the form of their training data.

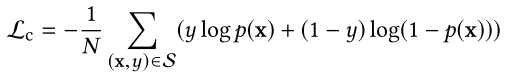

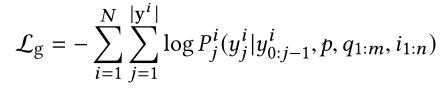

1. Ranking is often treated as a click-through rate (CTR) prediction task, so a CTR model can be trained with a pointwise objective function such as Lc. The generation model, by contrast, is trained to generate every word of the query correctly, which gives a more fine-grained objective function such as Lg.

2. In terms of training data, the ranking model can only be trained on log data that contains users' click behaviors, whereas the generation model can also construct training data by randomly splitting complete queries that users actively entered.
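The two training signals in the list above can be sketched as follows. The article gives Lc and Lg only in its figures, so the forms below are assumptions: pointwise binary cross-entropy is a common choice for a CTR objective, and token-level negative log-likelihood is a common choice for a generation objective. `random_split` is a hypothetical helper showing how one entered query can be cut at a random position into a prefix (the model input) and the full query (the target).

```python
import math
import random

def random_split(query, rng):
    """Hypothetical helper: cut an entered query at a random character position.
    The prefix becomes the model input and the full query the generation target."""
    cut = rng.randint(1, len(query) - 1)  # keep at least one character on each side
    return query[:cut], query

def ctr_loss(p_click, clicked, eps=1e-12):
    """Assumed form of Lc: pointwise binary cross-entropy for one candidate."""
    p = min(max(p_click, eps), 1.0 - eps)  # clip to avoid log(0)
    return -math.log(p) if clicked else -math.log(1.0 - p)

def generation_loss(target_token_probs):
    """Assumed form of Lg: negative log-likelihood summed over decoding steps.
    target_token_probs[t] is the probability the model gave the correct t-th token."""
    return -sum(math.log(p) for p in target_token_probs)

rng = random.Random(7)
prefix, target = random_split("bluetooth headset", rng)
print(prefix, "->", target)
print(round(ctr_loss(0.8, clicked=True), 4))   # 0.2231
print(round(generation_loss([0.5, 0.5]), 4))   # 1.3863
```

Because every entered query can be split this way, the generation task gets training examples even when the user never clicked a suggestion, which is the data advantage described in item 2.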

Therefore, we proposed multi-task learning for ranking and generation, with both tasks sharing the hierarchical encoder. By optimizing multiple objectives and training on more data, the shared encoder learns better representations.
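Hard parameter sharing between the two tasks can be illustrated with a toy model. Everything in this sketch is hypothetical: an averaging `shared_encoder` stands in for the hierarchical encoder, the generation head predicts only a single next word, and the weight `lam` on Lg is an assumed hyperparameter. The point is that click-log examples and generation examples both pass through the same encoder, so both losses depend on the same parameters (here, the embedding table `emb`).

```python
import math

def shared_encoder(prefix, history, emb):
    """Hypothetical shared encoder: average the embeddings of the prefix and history words."""
    words = prefix.split() + [w for behavior in history for w in behavior.split()]
    vecs = [emb[w] for w in words if w in emb]
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def ctr_head(h, candidate_vec):
    """Ranking head: probability that the candidate is clicked."""
    z = sum(a * b for a, b in zip(h, candidate_vec))
    return 1.0 / (1.0 + math.exp(-z))

def generation_head(h, emb):
    """Generation head: next-word distribution over a toy vocabulary (the embedding table)."""
    logits = {w: sum(a * b for a, b in zip(h, v)) for w, v in emb.items()}
    top = max(logits.values())
    exps = {w: math.exp(l - top) for w, l in logits.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def multitask_loss(click_examples, gen_examples, emb, lam=1.0):
    """Lc on click-log examples plus lam * Lg on generation examples."""
    lc = 0.0
    for prefix, history, candidate_vec, clicked in click_examples:
        p = ctr_head(shared_encoder(prefix, history, emb), candidate_vec)
        lc -= math.log(p) if clicked else math.log(1.0 - p)
    lg = 0.0
    for prefix, history, next_word in gen_examples:
        probs = generation_head(shared_encoder(prefix, history, emb), emb)
        lg -= math.log(probs[next_word])
    return lc + lam * lg

emb = {"blue": [1.0, 0.0], "headset": [0.0, 1.0], "dress": [1.0, 1.0]}
click_examples = [("blue", ["headset"], [0.5, 0.5], True)]   # from click logs
gen_examples = [("blue", ["headset"], "dress")]             # e.g. from split entered queries
lc_only = multitask_loss(click_examples, gen_examples, emb, lam=0.0)
total = multitask_loss(click_examples, gen_examples, emb, lam=1.0)
print(lc_only, total)
```

Setting `lam=0.0` recovers a ranking-only model; any positive value lets generation data shape the shared representation as well.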

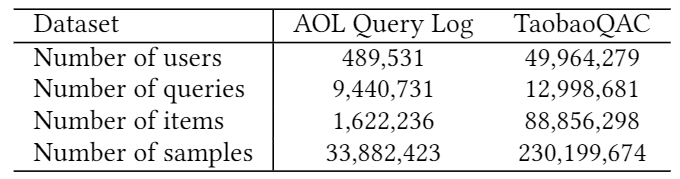

To validate the effect of our solution, we built a large-scale query log dataset named TaobaoQAC. It contains more than 200 million query logs collected from the Taobao Mobile search engine between September 1, 2019 and September 10, 2019. Unlike the existing AOL log datasets, TaobaoQAC contains not only the complete queries that users entered but also their click behaviors from prefix input to query completion, which makes it better suited to QAC research than the AOL datasets. The following table shows the detailed comparison.

We used logs from the first seven days as the training set, logs from the eighth day as the validation set, and logs from the last two days as the test set. The evaluation covers two settings: seen queries, where the complete query is contained in the query candidates, and unseen queries, where it is not. For evaluation metrics, we used Bilingual Evaluation Understudy (BLEU), a metric commonly used in machine translation, to measure the similarity between the queries produced by the generation models and the complete queries that users clicked or entered. We also used Mean Reciprocal Rank (MRR) and Unbiased MRR (UMRR) to evaluate the ranking performance of different models. The query generation model can also act as a ranking model: its decoder scores each candidate by multiplying the decoding probabilities at all steps.
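MRR and the generation-based ranking score can be computed as shown below. This is a minimal sketch with hypothetical helper names and toy per-step probabilities. The score sums log-probabilities, which ranks candidates exactly as multiplying the raw probabilities would while avoiding numerical underflow. UMRR is not shown because the article does not define it, and BLEU is omitted.

```python
import math

def reciprocal_rank(ranked, target):
    """1 / (position of target in ranked), or 0 if the target is missing."""
    for position, query in enumerate(ranked, start=1):
        if query == target:
            return 1.0 / position
    return 0.0

def mean_reciprocal_rank(cases):
    """cases: list of (ranked_list, target_query) pairs."""
    return sum(reciprocal_rank(r, t) for r, t in cases) / len(cases)

def sequence_log_score(step_probs):
    """Log of the product of per-step decoding probabilities (a sum of logs avoids underflow)."""
    return sum(math.log(p) for p in step_probs)

def rank_by_generation_score(step_probs_by_candidate):
    """Use the generation model as a ranker: sort candidates by their sequence score."""
    return sorted(step_probs_by_candidate,
                  key=lambda q: sequence_log_score(step_probs_by_candidate[q]),
                  reverse=True)

# Toy per-step probabilities a decoder might assign to each candidate (hypothetical).
step_probs = {"bluetooth headset": [0.6, 0.5], "blue dress": [0.3, 0.9], "blue jeans": [0.3, 0.4]}
ranked = rank_by_generation_score(step_probs)
cases = [(ranked, "blue dress"), (ranked, "bluetooth headset"), (ranked, "red dress")]
print(ranked)                       # ['bluetooth headset', 'blue dress', 'blue jeans']
print(mean_reciprocal_rank(cases))  # 0.5
```

One design caveat: a raw product of step probabilities tends to favor shorter completions, because every extra step multiplies in a factor no greater than 1. Length normalization is a common adjustment; the article does not say whether M2A applies it.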

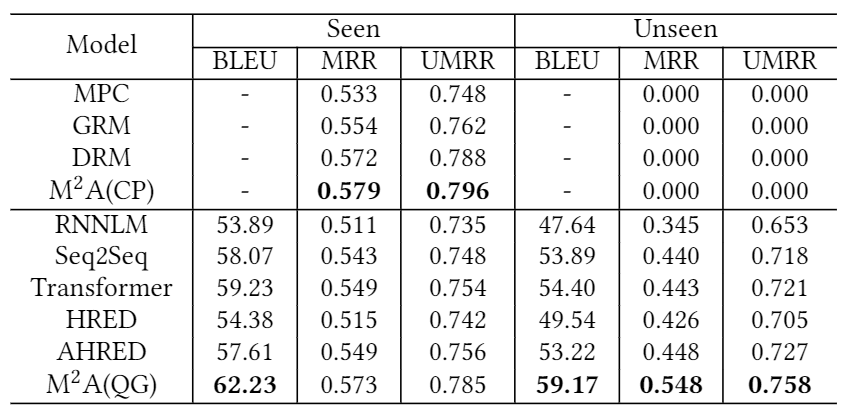

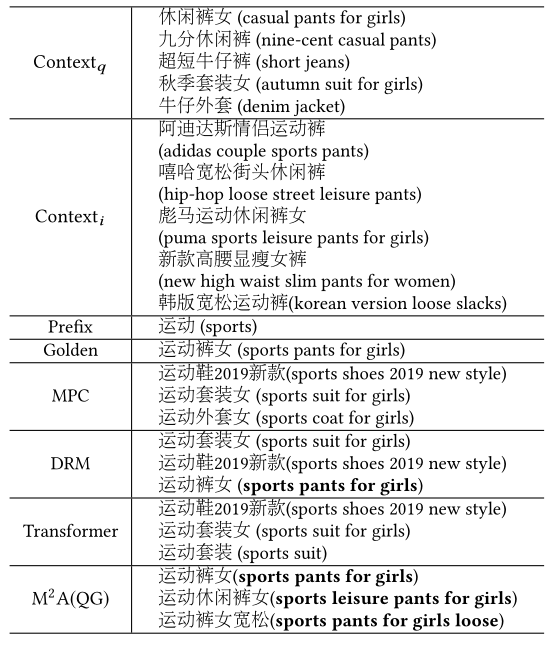

The baselines include ranking models, namely the statistics-based Most Popular Completion (MPC), the GBDT-based Ranking Model (GRM), and the Deep Learning-based Ranking Model (DRM), as well as generation models, namely the Recurrent Neural Network-based Language Model (RNNLM), the standard sequence-to-sequence model (Seq2Seq), Transformer, the Hierarchical Recurrent Encoder-Decoder (HRED), and Attentive HRED (AHRED). The experimental results show that the ranking module (CP) and the generation module (QG) of our M2A framework achieved the best ranking and generation performance, respectively. Most baselines cannot produce results for unseen queries and therefore score zero on the corresponding metrics, whereas our QG model achieved the best results among all generation models.
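The simplest baseline, MPC, can be sketched in a few lines. This is a minimal illustration assuming exact string-prefix matching over a toy log, with a hypothetical function name. It also shows why retrieval-only baselines return nothing for unseen queries: a completion that never appears in the log can never be recommended.

```python
from collections import Counter

def most_popular_completion(prefix, query_log, k=3):
    """MPC sketch: rank logged queries that start with the prefix by their frequency."""
    counts = Counter(q for q in query_log if q.startswith(prefix))
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))  # frequency, then alphabetical
    return [query for query, _ in ranked[:k]]

query_log = ["bluetooth headset", "blue dress", "bluetooth headset", "blue jeans",
             "blue dress", "bluetooth headset", "red dress"]
print(most_popular_completion("blue", query_log))
# ['bluetooth headset', 'blue dress', 'blue jeans']
print(most_popular_completion("bluetooth speaker", query_log))  # []
```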

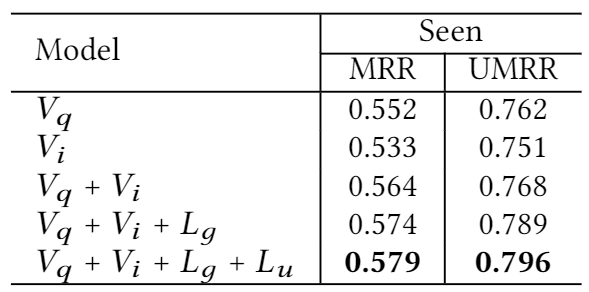

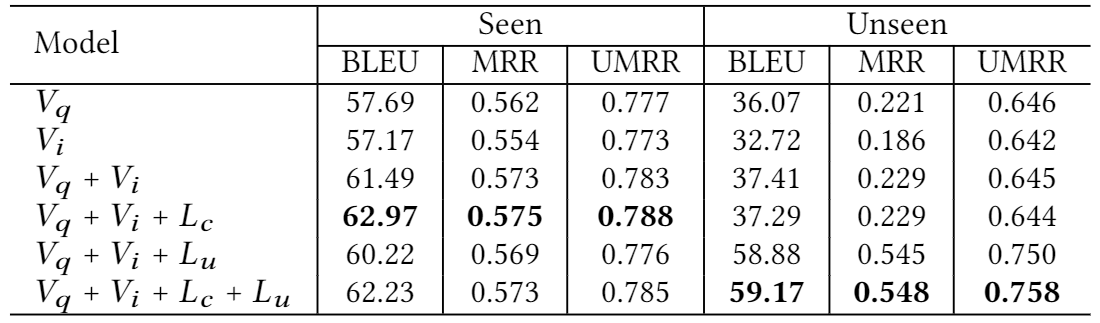

Because the proposed framework involves multiple views and multiple tasks, we conducted ablation experiments. In the following two tables, Vq denotes the view of searched queries, Vi the view of browsed items, Lg the query generation task on clicked queries, and Lu the query generation task on entered queries. Starting from a single-view CTR prediction model, we added the corresponding modules one at a time.

The following table shows the ablation results for the CTR prediction model, which indicate that each view and each task brings a clear gain.

The following table shows the ablation results for the query generation model. When logs of queries actively entered by users are added as training data, the model's performance decreases slightly on seen queries but increases substantially on unseen queries, yielding a clear overall gain.

We also examined individual recommendation cases and found that most ranking-based and generation-based methods tend to place high-frequency queries at the top of the recommended list. However, these high-frequency queries are often irrelevant to the user's current search intention, which weakens the personalization of the ranking results. Our solution mitigates this problem: its top-ranked candidates are the ones most relevant to the user's current search intention.

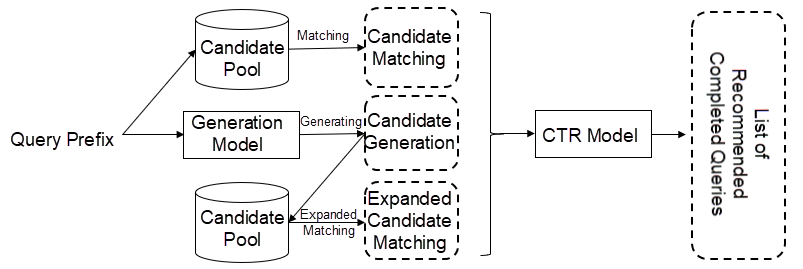

To validate whether our solution benefits the online service, we conducted A/B testing in Taobao's main search engine. Because the beam search-based generation model is too slow when it generates multiple candidates online, we mined, in advance, long-tail queries from the past week that had few or no results, generated query candidates for them offline, and built indexes for these candidates. Online, we combined the offline-generated candidates, the queries obtained through expanded matching, and the existing query candidates into a complete candidate set, and then used the framework's CTR prediction model to rank the candidates.
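The offline/online split described above can be sketched as follows. Everything here is hypothetical: `TOY_MODEL` is a hand-written next-word table standing in for the trained generation model, the indexes are plain dictionaries, and the `ctr_scores` values stand in for the CTR prediction model. Beam search runs only offline for long-tail prefixes; the online path merely merges precomputed and existing candidates and ranks them.

```python
import math

END = "</s>"  # end-of-query token

# Hypothetical next-word table standing in for the trained generation model.
TOY_MODEL = {
    ("wireless",): {"earbuds": 0.6, "charger": 0.4},
    ("wireless", "earbuds"): {END: 0.7, "case": 0.3},
    ("wireless", "charger"): {END: 1.0},
    ("wireless", "earbuds", "case"): {END: 1.0},
}

def toy_next(tokens):
    return TOY_MODEL.get(tuple(tokens), {END: 1.0})

def beam_search(next_token_probs, start, beam_width=2, max_len=5):
    """Keep the beam_width best partial queries per step; return completed queries by log-probability."""
    beams, finished = [(0.0, list(start))], []
    for _ in range(max_len):
        expanded = []
        for logp, tokens in beams:
            for tok, p in next_token_probs(tokens).items():
                item = (logp + math.log(p), tokens + [tok])
                if tok == END:
                    finished.append(item)
                else:
                    expanded.append(item)
        beams = sorted(expanded, key=lambda b: b[0], reverse=True)[:beam_width]
        if not beams:
            break
    finished.extend(beams)  # partial queries still open at max_len are kept as candidates
    finished.sort(key=lambda b: b[0], reverse=True)
    return [(" ".join(t for t in tokens if t != END), logp) for logp, tokens in finished]

def build_offline_index(long_tail_prefixes, next_token_probs, beam_width=2):
    """Offline: generate candidates for long-tail prefixes ahead of time."""
    return {p: [q for q, _ in beam_search(next_token_probs, p.split(), beam_width)]
            for p in long_tail_prefixes}

def online_complete(prefix, offline_index, existing_index, ctr_score, k=3):
    """Online: merge precomputed and existing candidates, then rank with the CTR model."""
    candidates = set(offline_index.get(prefix, [])) | set(existing_index.get(prefix, []))
    return sorted(candidates, key=lambda q: (-ctr_score(q), q))[:k]

offline_index = build_offline_index(["wireless"], toy_next)
existing_index = {"wireless": ["wireless mouse"]}
ctr_scores = {"wireless earbuds": 0.30, "wireless mouse": 0.20,
              "wireless charger": 0.10, "wireless earbuds case": 0.05}
print(online_complete("wireless", offline_index, existing_index,
                      lambda q: ctr_scores.get(q, 0.0)))
# ['wireless earbuds', 'wireless mouse', 'wireless charger']
```

Moving beam search offline keeps the online path to a dictionary lookup and a sort, which is why this split can meet a strict response-time budget.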

This approach keeps the online response time within requirements and achieves better overall performance by combining the generation model's ability to produce new candidates with the CTR model's personalized ranking. During nearly two months of A/B testing, our solution raised the pageviews (PV), unique visitors (UV), and CTR of Taobao Mobile's QAC service by 3.84%, 1.6%, and 4.12%, respectively, and increased the service response ratio by 11.06%, bringing clear business gains.

In this article, we proposed the M2A framework for QAC. The framework models and exploits the rich personalized information in multiple views of users' sequential behaviors, so the QAC model can predict users' current search intentions more accurately. It learns candidate ranking and query generation jointly through multi-task learning, training the QAC model with multiple objectives and more training data so that the tasks complement each other. The framework achieved good results in both offline and online experiments and brought significant benefits to the QAC service of Taobao's search engine. We plan to open source the TaobaoQAC dataset to help developers explore personalized QAC further. For more information about the dataset, click this link.

In the future, we will consider modeling longer user behavior sequences to further improve prediction accuracy. We will also develop more effective query generation models or approaches so that query candidates can be generated online in real time.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
