11.11 Big Sale for Cloud. Get unbeatable offers with up to 90% off on cloud servers and up to $300 rebate for all products! Click here to learn more.

Not too long ago, the paper "Adversarial Learning on Heterogeneous Information Networks" by Hu Binbin, an algorithm engineer with Ant Financial, was selected by the Knowledge Discovery and Data Mining (KDD) 2019 conference. KDD is the world's top conference for discovering knowledge and mining data. In this article, we're going to take a deep dive into the topics covered in this paper. If interested, you can download and read the original here.

Network representation learning (NRL) is one means for representing network data in low-dimensional space, which has received wide application in the analysis of heterogeneous information networks. Although existing representation learning methods for heterogeneous information networks do offer performance improvements, they also suffer from several major limitations. The biggest catch is that the negative sampling method, which is often used to select random nodes from networks, is done without first learning the underlying distribution, which leads to a less than robust representation.

Inspired by Generative Adversarial Networks (GANs), we developed HeGAN (standing for Heterogenous Generative Adversarial Networks), which is a new framework that can be used for the representation learning of heterogeneous information networks. HeGAN trains a discriminator and generator simultaneously in a maximin game. Compared with previous representation learning methods for heterogeneous information networks, our generator can learn node distribution to generate better, more accurate negative samples. Also, compared with the GANs of homogeneous networks, our discriminator and generator are relation-aware, meaning that they are capable of capturing rich semantic information on heterogeneous networks. In addition, our generalized generator to improve the sampling efficiency. The generalized generator can sample the "potential" nodes in continuous distribution and is not limited to nodes in the original network as are previous methods. Finally, we conducted many experiments on four datasets. The experiment results on all datasets and tasks showed that our method was superior to previous representation learning methods.

Network structures are widely used in real applications from social and biological networks, and from transportation to telecommunication systems. Therefore, network analysis is increasingly important for solving key issues such as supporting personalized user recommendations on social networks and simplifying gene recognition on biological networks. These issues arise when node clustering, node classification, and link prediction need to be performed for network data. Therefore, these issues can be completely resolved by effective network representation. In recent years, network representation learning has become a promising method for the learning representation of unsupervised nodes. Network representation learning is intended to project network nodes to low-dimensional space, while also maintaining the structural characteristics of the original network.

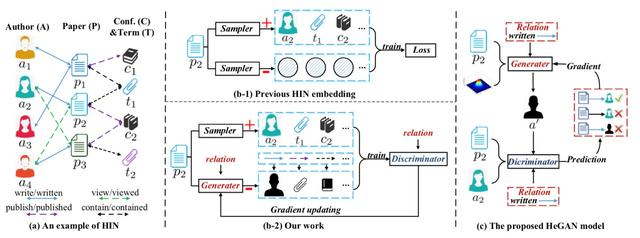

Above are Figure (a), Figure (b-2), Figure (b-2), and Figure (c)

Heterogeneous information networks. Although the earlier network representation learning was in many ways successful, it was only applied to homogeneous networks that comprise nodes and edges of the same type. However, in actual use cases, nodes comprise several different entities that are interconnected through various kinds of relationships. A network which is composed of these nodes is a heterogeneous network, as shown in Figure (a). The heterogeneous information network consists of multiple node types including an author node and paper node. These nodes are interconnected through various relationships, such as the write-and-writing relationship between the author and paper, and the publish-and-published relationship between the paper and conference.

Heterogeneous information networks often have rich and complex semantics due to their heterogeneity. Therefore, many researchers began to study representation learning on heterogeneous information networks. Among them, the most noteworthy research items are metapath2vec and HIN2vec. As shown in Figure (b-1), the existing representation learning method for heterogeneous information networks is as follows: two samplers select "context nodes" as positive samples such as author a2 and negative samples like those indicated by shadow circles from a given "central node" such as paper p2 on the network. Each node can serve as the center or context, which is similar to a skip-gram model. Then, a Loss function is trained based on these samples to optimize the node representation. These methods can improve the performance to some extent, but also have severe limitations. First, these methods usually use negative sampling to select random nodes from the existing network as negative samples. Therefore, these negative samples are at random and are limited to the original network. Second, these methods focus on capturing rich semantic information from the heterogeneous information networks but ignore the node distribution at the underlying layer. Therefore, these methods are not sufficiently robust for real networks that contain loose and noisy data. Third, many existing representation learning methods for heterogeneous information networks use metadata paths to match the required semantics, which usually requires expertise. However, expertise involves some level of subjectivity and is difficult for this type of system to obtain.

Adversarial learning. GANs have been developed to learn the potential representations that are robust in various applications. GANs rely on adversarial learning, in which a discriminator and generator compete against each other. The discriminator and generator need to train better discrimination models and also learn the data distribution at the underlying layer. The generator makes models more robust for loose or noisy data [13,24], and also provides better samples to reduce callout requirements. Thanks to these advantages, GAN-based network representation learning has been used for some research studies. However, these research studies were only performed on homogeneous networks, without considering the heterogeneity of nodes and relationships. Therefore, they did not perform well on heterogeneous information networks with rich semantics.

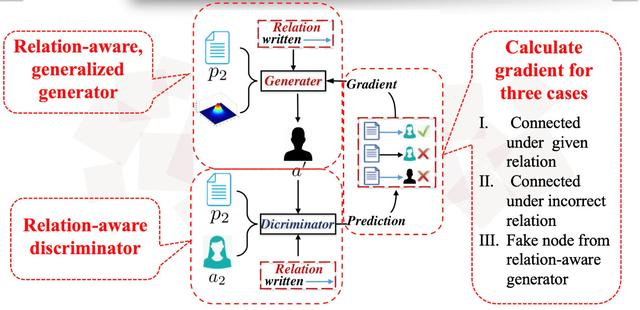

HeGAN and its contributions. To conquer existing limitations, we proposed HeGAN, which was a new framework that could represent heterogeneous information networks based on GAN. Specifically, we proposed a new discriminator and generator, as shown in Figure (b-2). Our discriminator and generator are aware of relationships to distinguish nodes interconnected by different relationships. For any relationship, a discriminator can determine whether a node pair is true or false, and a generator can generate a false node pair that can simulate a true node pair. A node pair is considered to be a positive sample pair only if the node pair is positive for the network topology and the node pair is formed in a correct relationship. We also designed a generalized generator that can directly extract potential nodes from continuous distribution. Therefore, softmax computing is not required, and false samples may not be existing nodes. The Adversarial Learning on Heterogeneous Information Networks page made the following contributions:

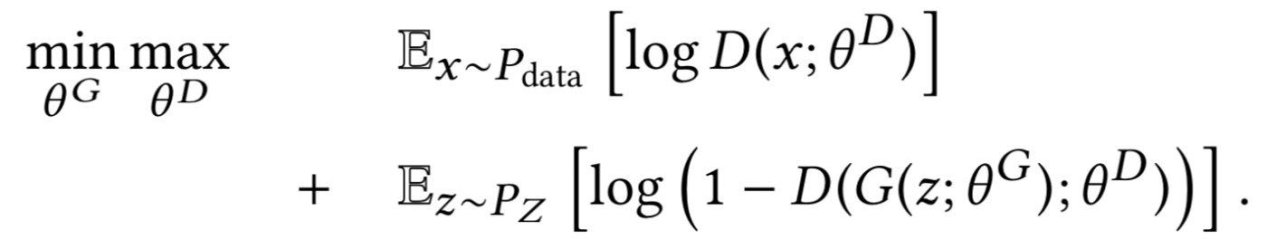

GANs. Inspired by GANs, we consider GANs as a maximin game between players, which are generator G and discriminator D. The optimization method can be expressed by the following formula:

Overall framework of HeGAN. As shown in Figure (c), our framework consists of two modules that compete against each other, which are the discriminator and the generator. When a node is given, the generator attempts to generate a false sample that is associated with the given node and provides the false sample for the discriminator. The discriminator attempts to improve the parameters of the false sample to separate the false sample from the true sample that is connected to the given node. During this repeated process, the trained discriminator forces the generator to generate better false samples and also enhances its own discrimination capability. Therefore, both the generator and discriminator are enhanced in these iterations.

In existing research studies, GANs were only used to determine whether the structural connection between a node and a given node was true or false, but different semantics on heterogeneous information networks were ignored. For example, paper p2 is given, nodes a2 and a4 are considered true, and nodes a1 and a3 are considered false based on the network topology shown in Figure (a). However, a2 and a4 connect to p2 for different reasons: a2 writes data to p2, and a4 reads data from p2. Therefore, valuable semantics on the heterogeneous information network are ignored. a2 and a4 assume different semantic roles, but they cannot be discriminated. To implement representation learning with semantics retained, HeGAN introduced a discriminator and generator that was relation-aware to distinguish different semantics. On the preceding heterogeneous information network, node p2 and a relationship such as write and write are given. Our discriminator can distinguish a2 from a4, and the generator will attempt to generate a false sample that is more similar to a2, but not a4.

In addition, many previous research studies were not effective or efficient in generating false samples. They often use the softmax function in a certain way to generate false samples of all nodes on the original network. Previous research studies are limited to existing nodes on a network, but the most representative false sample may not be among the existing nodes that can be observed. Therefore, existing research studies are not effective. For example, when node p2 is given, existing research studies can only select samples such as a1 and a3 from space V, which is the collection of all nodes on the network. However, a1 or a3 is not fully similar to the true node a2. To generate better samples, we introduced a generalized generator, which can generate false samples such as a' that may not belong to V. a' could be the average of a1 and a3, and this average is more similar to the true sample a2. The softmax function has a high computing overhead, and approximate methods such as negative sampling and graphic softmax must be used. Therefore, the computing efficiency is low. However, our generator can directly sample false nodes from continuous space without using softmax. The following figure shows our framework.

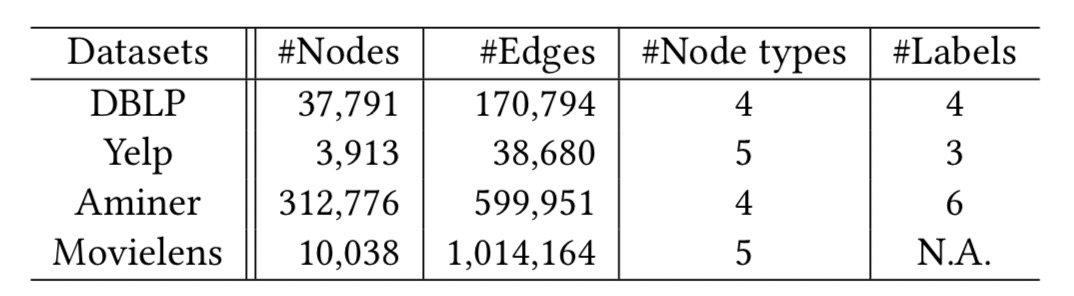

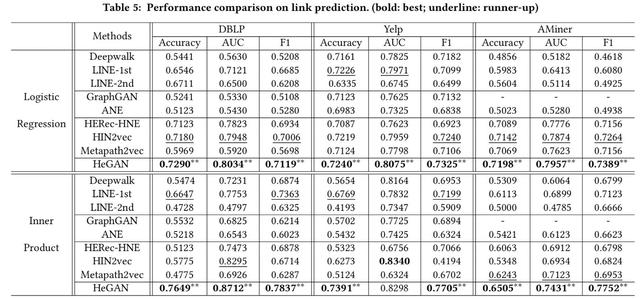

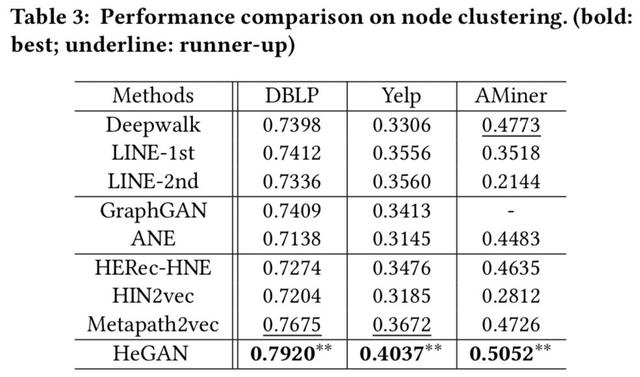

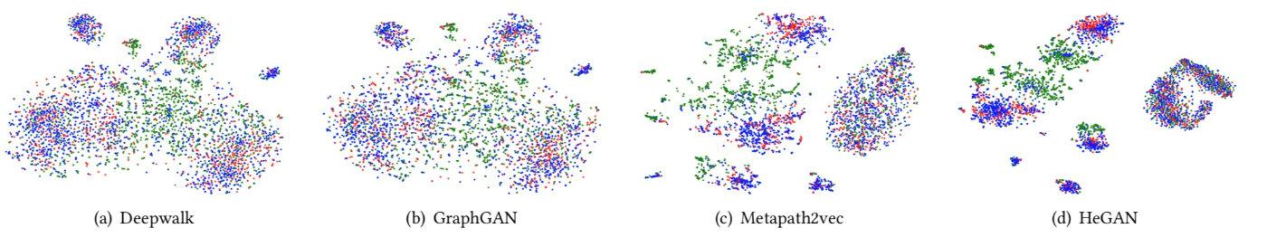

We conducted experiments on the DBLP, Yelp, Aminer, and Movielens datasets, and verified the effectiveness on the node clustering, node classification, link prediction, and recommendation tasks. The following table describes the four datasets.

Below are the experiment results on the node classification, link prediction, node clustering, and recommendation tasks.

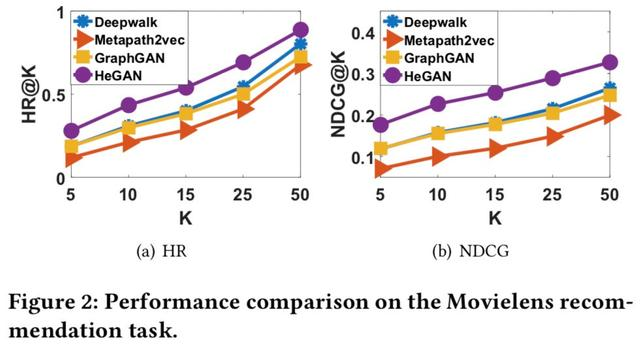

Below is a visualization of the space of the Yelp dataset represented by the nodes.

As shown in the figure, the boundaries of HeGAN is clearer and the cluster is denser.

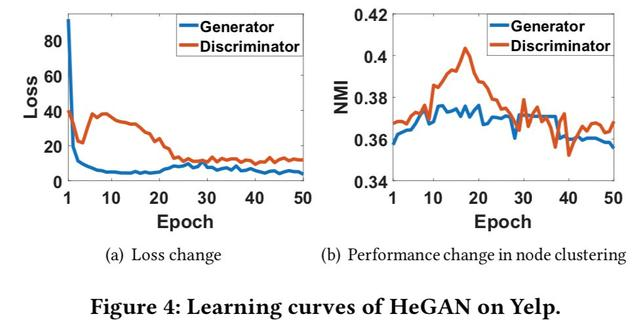

We provided the learning curves of the HeGAN generator and discriminator on the Yelp dataset, and analyzed the loss change and performance change in node clustering. After the initial loss fluctuation, the generator and discriminator started their maximin game, and their losses gradually decreased. After adversarial training for about 20 epochs, their losses stabilized with little fluctuation, and the winner achieved a better performance. Note that after adversarial training for more epochs, the node clustering performance decreases due to overfitting.

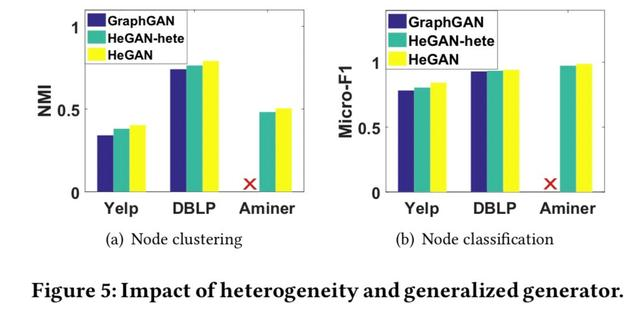

Then, we verified the heterogeneous information and the effectiveness of the generalized generator on the node clustering and node classification tasks. From that, we drew the following conclusions: 1. On heterogeneous information networks, nodes and relationships of different types must be distinguished. 2. Our generalized generator can generate more representative samples.

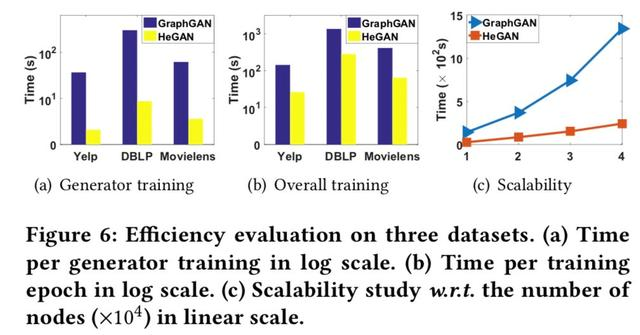

Finally, the efficiency of HeGAN is shown below.

As shown in the above figure, the training time of HeGAN is linear with the number of nodes, and the time performance of HeGAN is much better than that of GraphGAN based on softmax.

The technologies covered in this article include heterogeneous information networks and adversarial learning. A real network often contains nodes or relationships of multiple types, and networks with increasingly complex relationships will boom in the future. Therefore, research in this area focuses on how to better use and represent these complex networks to produce more value. In addition, existing networks often have a lot of noise or are weak at noise immunity. This encourages us to discover more robust representations of networks.

Alipay Unveils AI-Powered Recycling Bin That Sorts Your Waste for You

12 posts | 2 followers

FollowAlibaba Clouder - October 15, 2020

Alibaba Clouder - August 10, 2018

Alibaba Clouder - October 15, 2020

Alibaba Clouder - October 15, 2020

Alibaba Clouder - October 15, 2020

Alibaba Clouder - August 10, 2018

12 posts | 2 followers

Follow Black Friday Cloud Services Sale

Black Friday Cloud Services Sale

Get started on cloud with $1. Start your cloud innovation journey here and now.

Learn More Platform For AI

Platform For AI

A platform that provides enterprise-level data modeling services based on machine learning algorithms to quickly meet your needs for data-driven operations.

Learn More Edge Network Acceleration

Edge Network Acceleration

Establish high-speed dedicated networks for enterprises quickly

Learn MoreMore Posts by Alipay Technology

Start building with 50+ products and up to 12 months usage for Elastic Compute Service

Get Started for Free Get Started for Free