By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

This course delves into the architecture of RocketMQ 5.0. As we learned in the previous course, RocketMQ 5.0 can support a wide range of business scenarios, including business messages, stream processing, Internet of Things, event-driven scenarios, and more. Before exploring specific business scenarios, let's first understand RocketMQ's cloud-native architecture from a technical perspective and see how it supports diversified scenarios based on this unified architecture.

First, we will explore the core concepts and architecture of RocketMQ 5.0. Then, we will learn about the control links, data links, and how the client and server interact with each other from a cluster perspective. Finally, we will return to the most critical module, the storage system, to learn how RocketMQ implements data storage, high data availability, and how to leverage cloud-native storage to further enhance competitiveness.

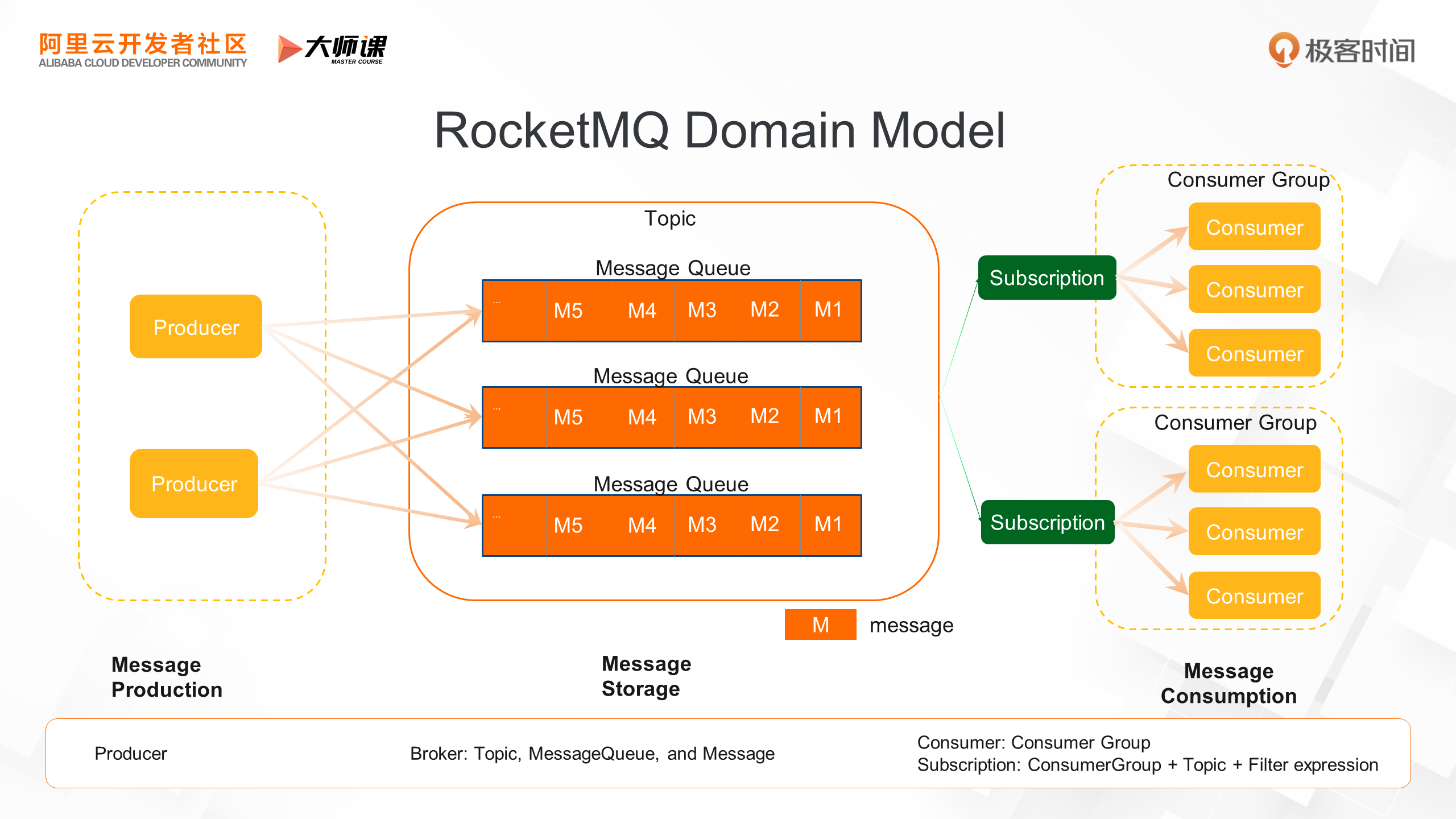

Before diving into RocketMQ's architecture, let's take a look at the key concepts and domain models of RocketMQ from the user perspective. The following figure shows the sequence of message forwarding.

On the far left is the message producer, which typically corresponds to the upstream application of a business system. It sends a message to the Broker after a business action is triggered. The Broker is the core of the message system's data link. It receives and stores messages and maintains both message status and consumer status. Multiple Brokers form a message service cluster that serves one or more topics.

As mentioned earlier, producers produce messages and send them to the Broker. Each message contains a message ID, a topic, a body, attributes, business keys, and so on. Each message belongs to a topic, and all messages in a topic share the same business semantics.

For example, at Alibaba, the topic for transaction messages is called Trade, and the topic for shopping cart messages is called Cart. The producer application sends each message to the corresponding topic. A topic also contains message queues (MessageQueue), which are used for load balancing and data sharding of the message service. Each topic contains one or more message queues distributed across different Brokers.

Once the producer has sent a message and the Broker has stored it, the next step is for the consumer to consume it. Consumers typically correspond to downstream applications of the business system. All instances of the same consumer application cluster share the same consumer group. A consumer establishes a subscription relationship with a topic, which is a triple of consumer group, topic, and filter expression. Messages that match the subscription relationship are consumed by the corresponding consumer cluster.
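The domain model described above can be condensed into a few lines of Python. This is only an illustrative sketch: the class names, field names, and the tag-based filter are assumptions chosen to mirror the concepts in the text, not the RocketMQ SDK API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """A message with the fields listed in the text (names are hypothetical)."""
    message_id: str
    topic: str
    body: bytes
    tag: str = ""      # simplified stand-in for a message attribute
    keys: tuple = ()   # business keys


@dataclass(frozen=True)
class Subscription:
    """Subscription relationship: (consumer group, topic, filter expression)."""
    consumer_group: str
    topic: str
    filter_expression: str = "*"   # assumption: "*" matches all, "a||b" matches tag a or b

    def matches(self, message: Message) -> bool:
        # A message is delivered to the group only if topic and filter both match.
        if message.topic != self.topic:
            return False
        if self.filter_expression == "*":
            return True
        wanted = {t.strip() for t in self.filter_expression.split("||")}
        return message.tag in wanted
```

With this sketch, `Subscription("billing", "Trade", "paid||refunded")` would match a Trade message tagged `paid` but not a Cart message or a Trade message tagged `created`.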

Next, let's dive deeper into RocketMQ from a technical implementation perspective.

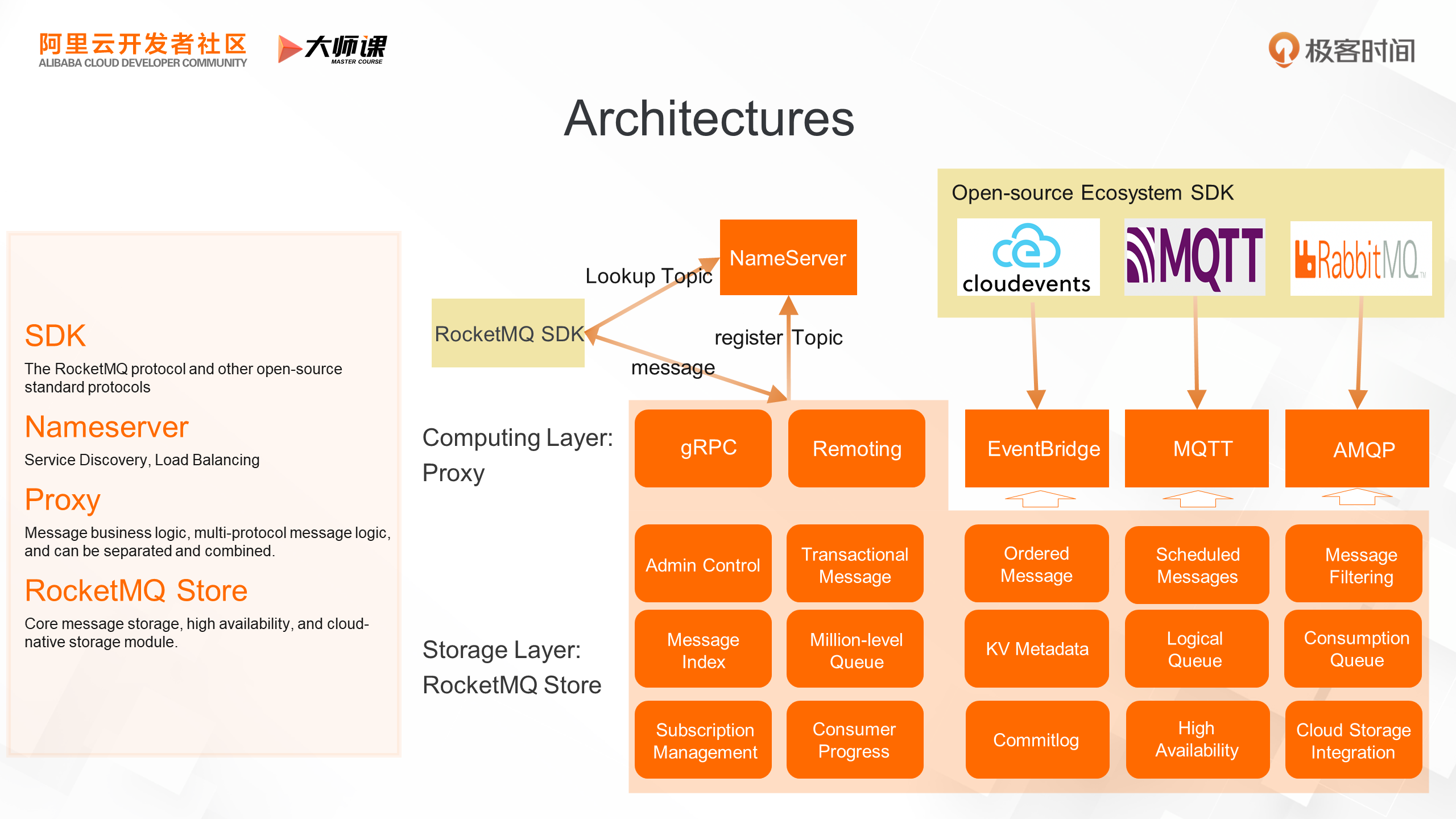

This is an overview of the RocketMQ 5.0 architecture. It can be divided into the SDK, NameServer, Proxy, and Store layers.

Let's first look at the SDK layer, which includes the RocketMQ SDK. Users work with this SDK in terms of the RocketMQ domain model. In addition to the RocketMQ SDK, the layer also includes industry-standard SDKs for specific sub-domains. For event-driven scenarios, RocketMQ 5.0 supports the CloudEvents SDK. For IoT scenarios, RocketMQ supports SDKs for the MQTT protocol. To make it easier to migrate more traditional applications to RocketMQ, we also support the AMQP protocol and will open-source it to the community version in the future.

Another component is NameServer, which is responsible for service discovery and load balancing. Through NameServer, the client obtains the data shards and service addresses of a topic and then connects to the message servers to send and receive messages.

The message service consists of the computing layer, Proxy, and the storage layer, RocketMQ Store. RocketMQ 5.0 separates storage from computing, emphasizing the separation of modules and responsibilities. Proxy and RocketMQ Store can be deployed together or separately, depending on the business scenario. The computing layer Proxy mainly carries the upper-layer business logic of messages, especially multi-scenario and multi-protocol support, such as the domain model implementations and protocol conversion for CloudEvents, MQTT, and AMQP. For different business loads, Proxy can also be deployed separately and scaled independently. For example, in IoT scenarios, an independently deployed Proxy layer can auto-scale with the large number of IoT device connections, decoupled from the scaling of storage traffic. The RocketMQ Store layer is responsible for core message storage, including the Commitlog-based storage engine, search indexes, multi-replica technology, and cloud storage integration. All state of the message system is pushed down to RocketMQ Store, while all other components are stateless.

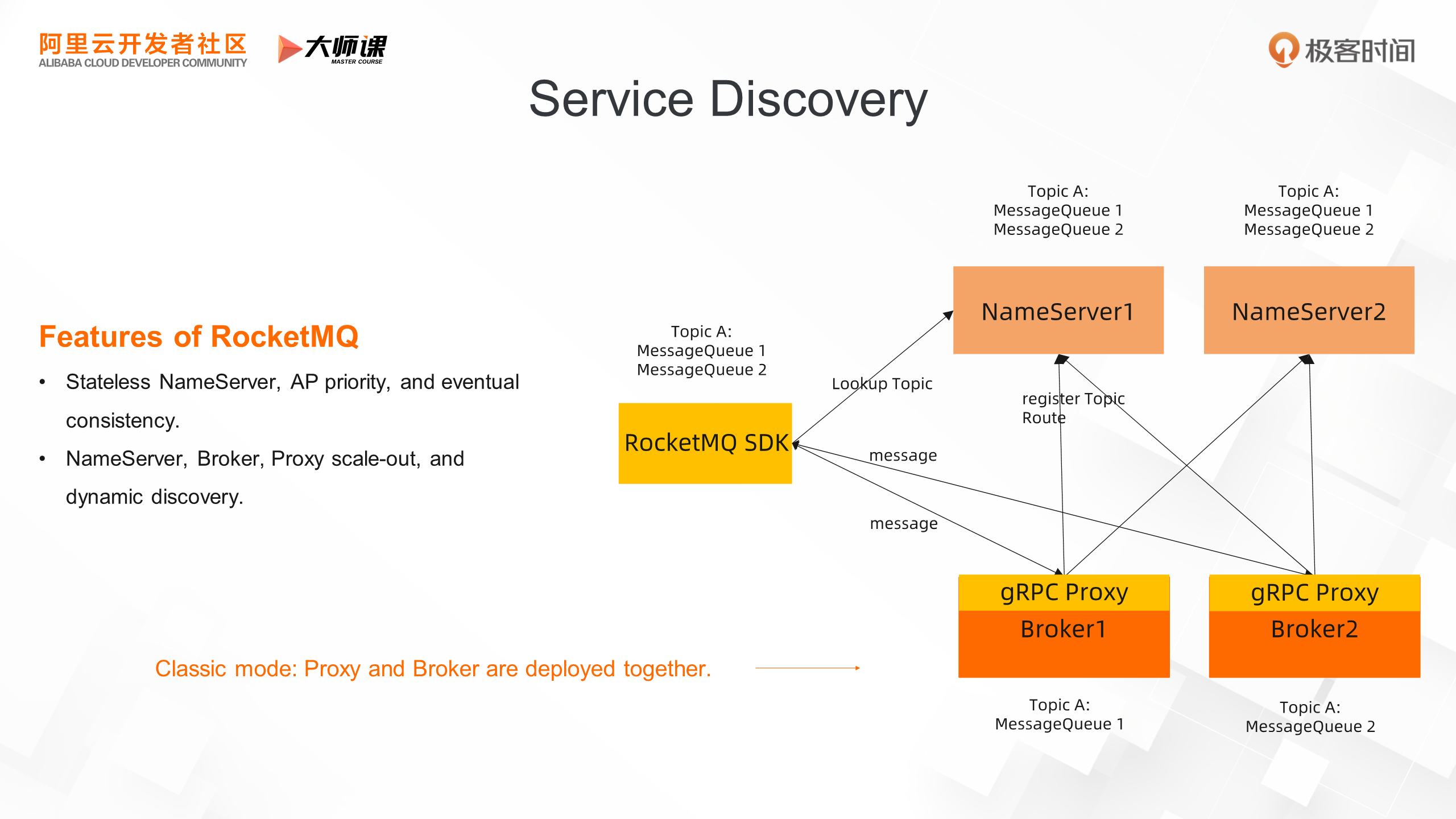

In the second part, let's delve into the service discovery of RocketMQ in detail. The service discovery of RocketMQ is implemented using NameServer (NS).

Let's explore the service discovery mechanism through the following figure, which shows the combined deployment mode of Proxy and Broker, the most common deployment mode of RocketMQ. As mentioned earlier, each Broker cluster is responsible for serving certain topics. Each Broker registers the topics it serves with the NameServer cluster. It communicates with every NameServer and periodically renews its lease with NS through a heartbeat mechanism. The registration data contains the topics and their shards (MessageQueue).

In this example, Broker1 and Broker2 each host a shard of Topic A. Each NS machine maintains a global view showing that Topic A has two shards, one on Broker1 and one on Broker2. Before sending messages to Topic A, the RocketMQ SDK accesses a randomly chosen NameServer machine to discover the shards of Topic A and the Broker that hosts each shard. It then establishes long-lived connections with these Brokers before sending and receiving messages. Most projects use strongly consistent distributed coordination components such as ZooKeeper or etcd as their registration center. RocketMQ takes a different approach. From a CAP perspective, its registration center adopts an AP mode, with stateless NameServer nodes and a shared-nothing architecture that provides higher availability.
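The registration and lookup flow above can be sketched as follows. This is a toy in-memory model, not NameServer's real protocol: the class, the function names, and the addresses are hypothetical, and lease expiry on missed heartbeats is left out.

```python
import random


class NameServer:
    """One registry node that keeps its own in-memory route table."""

    def __init__(self):
        self.routes = {}  # topic -> {broker_name: (address, queue_count)}

    def register(self, broker_name, address, topics):
        # Called by a Broker at startup and again on every heartbeat.
        for topic, queue_count in topics.items():
            self.routes.setdefault(topic, {})[broker_name] = (address, queue_count)

    def lookup(self, topic):
        # One (broker_name, address, queue_id) entry per shard of the topic.
        return [
            (name, address, queue_id)
            for name, (address, count) in sorted(self.routes.get(topic, {}).items())
            for queue_id in range(count)
        ]


def register_with_all(name_servers, broker_name, address, topics):
    # Brokers report to every NameServer; NameServers never sync with each other.
    for ns in name_servers:
        ns.register(broker_name, address, topics)


def discover(name_servers, topic):
    # The client asks any one NameServer, chosen at random.
    return random.choice(name_servers).lookup(topic)
```

Because every Broker reports to every node, any single NameServer can answer a lookup on its own, which is what makes the AP, shared-nothing design possible.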

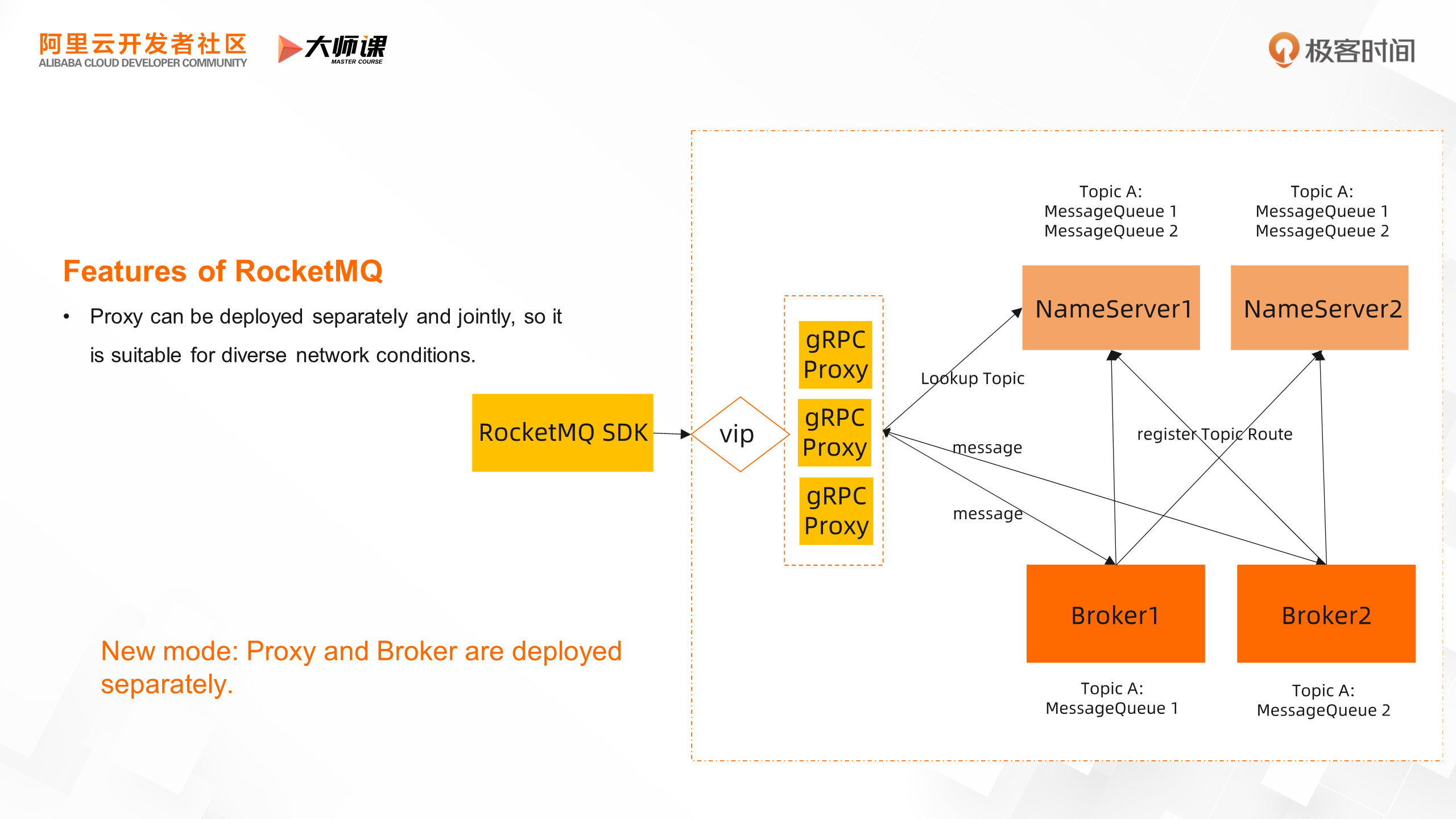

Now let's look at the following figure. RocketMQ's storage-compute separation allows for both separated and combined deployment modes. Here, the separated mode is used, in which the RocketMQ SDK directly accesses the stateless Proxy cluster. This mode can handle more complex network environments and supports access over multiple network types, such as the public network, with better security control.

In the entire service discovery mechanism, NameServer and Proxy are stateless, so nodes can be added or removed at any time. Adding or removing stateful Broker nodes relies on the NS registration mechanism, so clients can detect the change and discover the nodes dynamically in real time. During scale-in, RocketMQ can also control the read/write permissions that a Broker exposes through service discovery. The node being removed is first closed for writes but stays readable. After all of its unread messages have been consumed, it can be taken offline smoothly and without message loss.

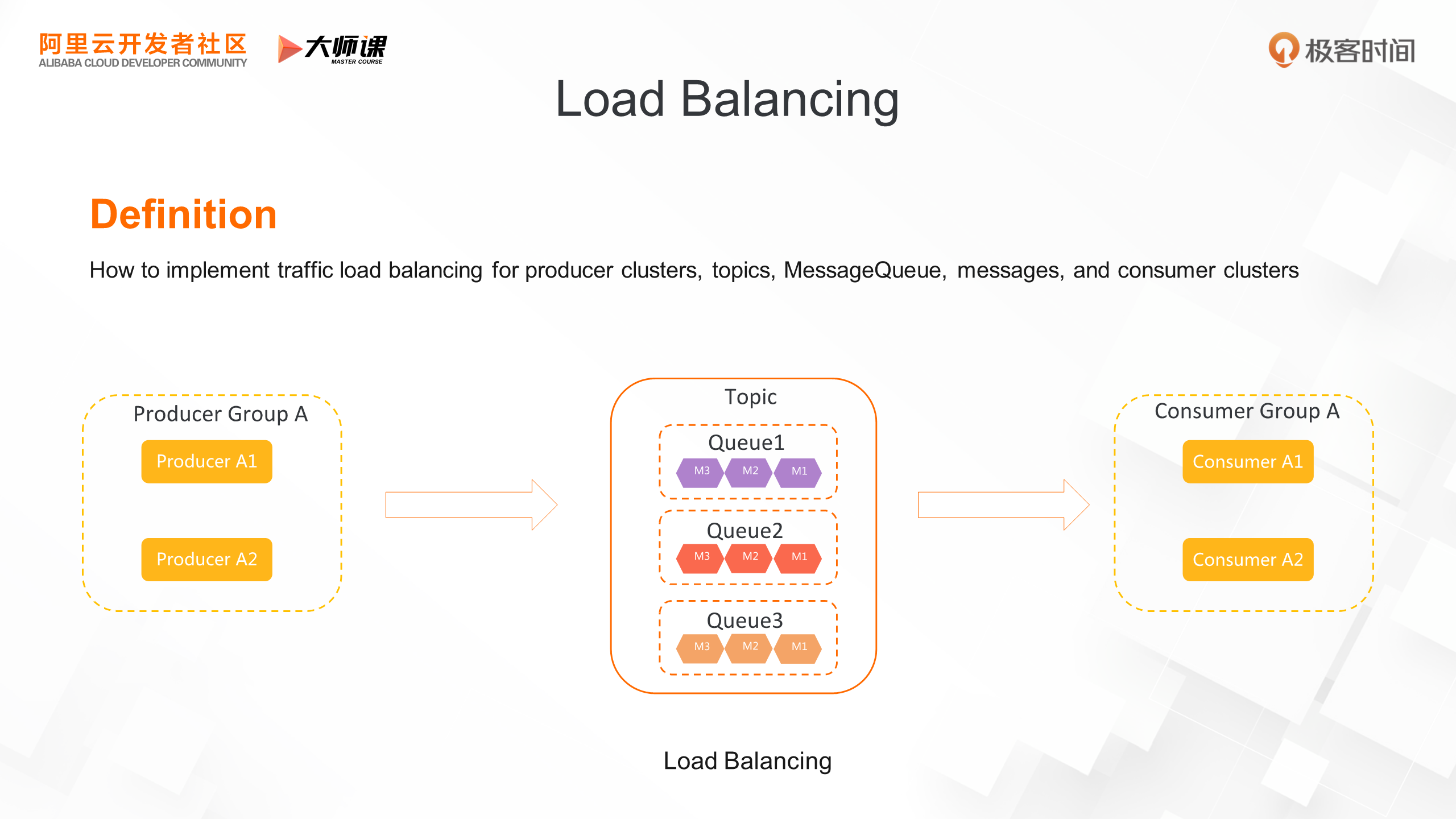

We have just learned how the SDK uses NameServer to discover the message queues of a topic and the addresses of the Brokers that host them. Based on this service discovery metadata, let's take a closer look at how message traffic is load balanced across the producer, RocketMQ Broker, and consumer clusters.

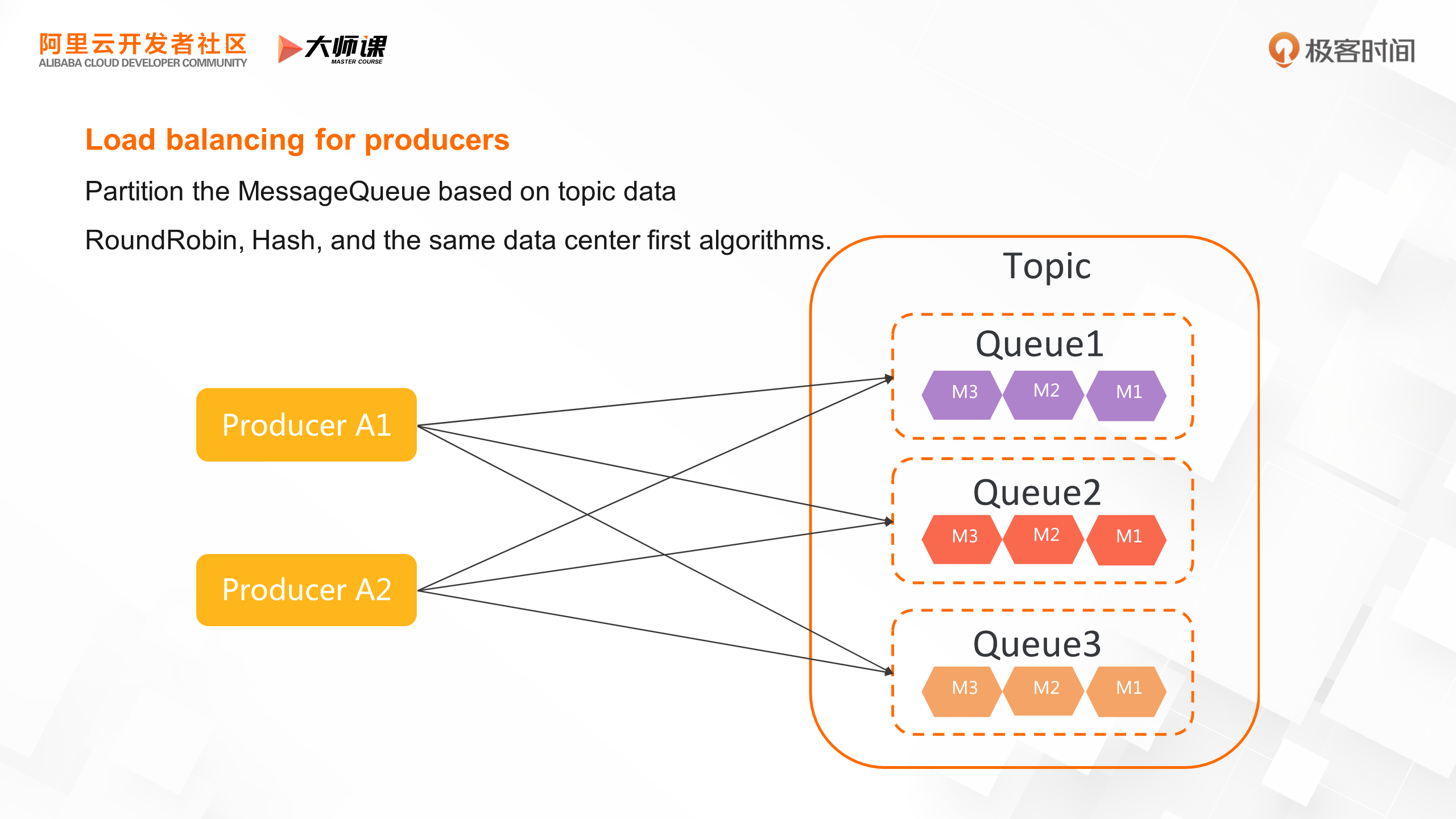

First, let's look at load balancing on the production link. Through the service discovery mechanism, the producer knows the data shards of the topic and the corresponding Broker addresses. Its load balancing mechanism is relatively simple. By default, it sends messages to the queues of a topic in round-robin order, which keeps the traffic across the Broker cluster balanced. For ordered messages, each message is sent to a queue chosen by hashing its business primary key. As a result, if a business primary key is hot, the Broker cluster may also develop hot spots. In addition, based on this metadata, more load balancing algorithms can be added to meet business needs. One example is a same-data-center-first algorithm, which can reduce latency and improve performance in multi-data-center deployments.
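The two producer-side strategies can be sketched as a small queue selector. The class and method names are hypothetical, and CRC32 is used here only as a convenient stable hash; the text does not say which hash function RocketMQ uses.

```python
import itertools
import zlib


class QueueSelector:
    """Chooses a target queue for each outgoing message."""

    def __init__(self, queues):
        self.queues = list(queues)
        self._counter = itertools.count()

    def select_round_robin(self):
        # Default strategy: rotate through all queues of the topic.
        return self.queues[next(self._counter) % len(self.queues)]

    def select_by_key(self, sharding_key: str):
        # Ordered messages: the same business key always maps to the same queue.
        # crc32 is stable across processes, unlike Python's built-in hash().
        digest = zlib.crc32(sharding_key.encode("utf-8"))
        return self.queues[digest % len(self.queues)]
```

The key-based path also shows where hot spots come from: every message for a hot key lands on one queue, and therefore on one Broker.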

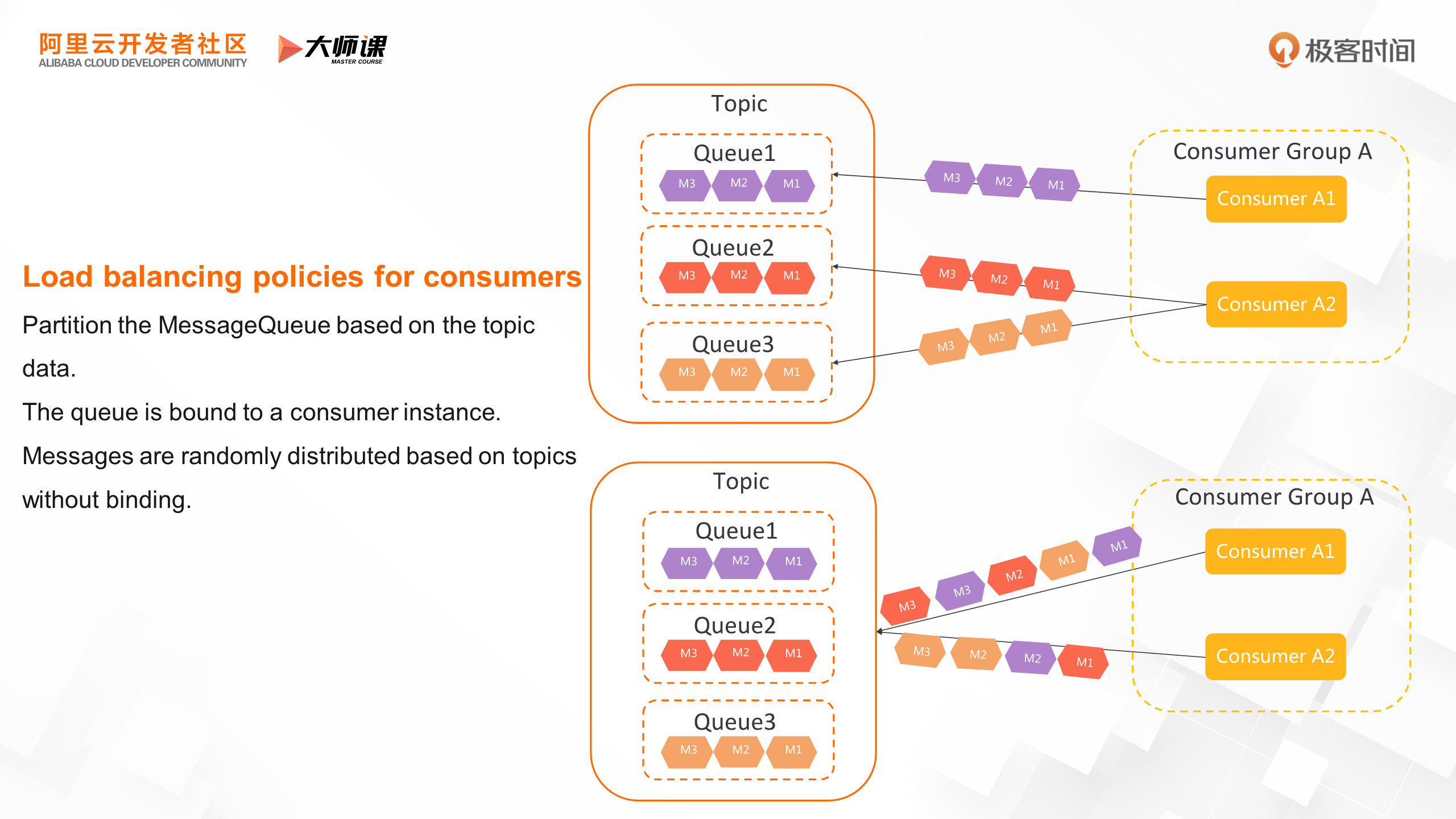

Next, let's look at load balancing for consumers, which is more complex than for producers. There are two approaches. The classic one is queue-level load balancing. Consumers know the total number of queues in a topic and the number of instances in their consumer group. Then, in a way similar to consistent hashing, every consumer instance runs the same allocation algorithm to bind itself to specific queues, and it consumes messages only from the queues bound to it. The messages in each queue are consumed by only one consumer instance.

The biggest disadvantage of this approach is that the load can be unbalanced: consumer instances must be bound to queues, and the binding introduces transient state. With three queues and two consumer instances, one consumer must consume two-thirds of the data. With four consumers, the fourth sits idle. Therefore, RocketMQ 5.0 introduces a message-level load balancing mechanism. Messages are distributed randomly across the consumer cluster without binding consumers to queues, which keeps the load on the consumer cluster balanced. More importantly, this approach fits the serverless trend better. The number of Broker machines, the number of queues in a topic, and the number of consumer instances are completely decoupled and can be scaled independently.
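The difference between the two approaches can be made concrete with a short sketch. Both functions are illustrative assumptions: `allocate_queues` is one possible averaging algorithm, and `dispatch_messages` uses round robin instead of random distribution so that the result is deterministic.

```python
def allocate_queues(queues, consumers):
    """Queue-level balancing: every instance runs the same deterministic
    algorithm over sorted inputs and receives a contiguous slice of queues."""
    queues, consumers = sorted(queues), sorted(consumers)
    base, extra = divmod(len(queues), len(consumers))
    allocation, start = {}, 0
    for i, consumer in enumerate(consumers):
        size = base + (1 if i < extra else 0)
        allocation[consumer] = queues[start:start + size]
        start += size
    return allocation


def dispatch_messages(message_count, consumers):
    """Message-level balancing: any message may go to any instance,
    so the number of queues no longer limits how evenly load is spread."""
    load = {consumer: 0 for consumer in consumers}
    for i in range(message_count):
        load[consumers[i % len(consumers)]] += 1
    return load
```

With three queues, `allocate_queues` gives two consumers a 2:1 split and leaves the fourth of four consumers with no queue at all, while `dispatch_messages` spreads twelve messages evenly over four consumers.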

Through the architecture overview and service discovery mechanism, we have gained a relatively global understanding of RocketMQ. Next, we will delve into the storage system of RocketMQ, which plays a crucial role in its performance, cost, and availability.

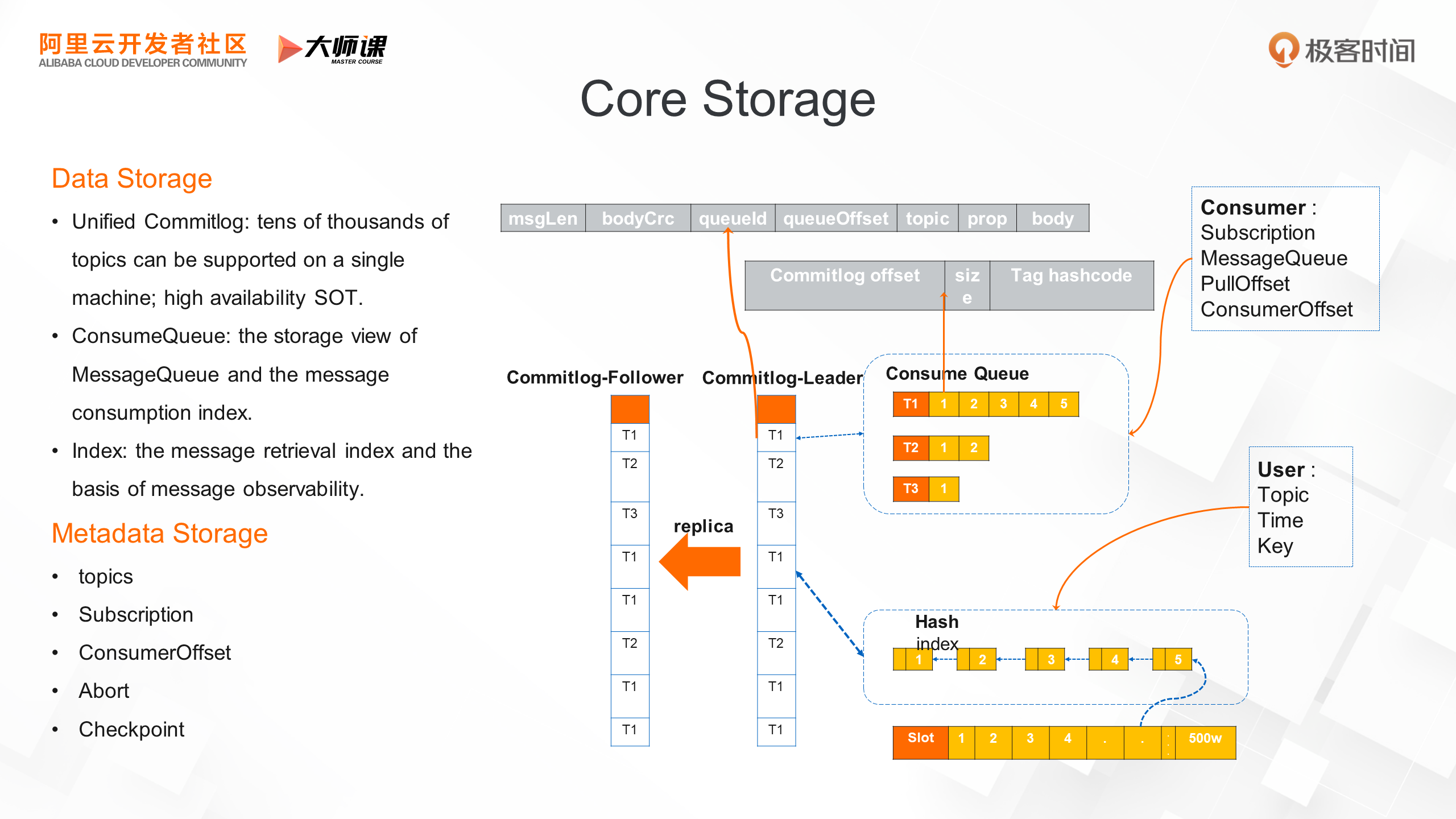

Let's take a closer look at the storage core of RocketMQ. It consists of Commitlog, ConsumeQueue, and Index files. A message is first written to the Commitlog, then flushed to disk and replicated to the slave node for persistence. The Commitlog is the source of truth for stored messages, and a complete set of message indexes can be rebuilt from it. Unlike Kafka, RocketMQ writes the data of all topics into the same Commitlog files, which maximizes sequential I/O and allows a single RocketMQ instance to support tens of thousands of topics.

After a message is written to the Commitlog, RocketMQ asynchronously builds several indexes. The first is the ConsumeQueue index, which corresponds to MessageQueue. With this index, a message can be located precisely by topic, queue ID, and offset. The message backtracking feature is also built on this index. Another important index is the hash index, which is the foundation of message observability. It is a persistent hash table that supports lookups by the business primary key of a message, enabling message tracing.

In addition to the messages themselves, the Broker also stores message metadata. This includes the topic file, which records the topics that the Broker serves along with attributes of each topic such as the number of queues, read/write permissions, and ordering. There are also the Subscription and ConsumerOffset files, which maintain the subscription relationships of each topic and the consumption progress of each consumer. The Abort and Checkpoint files are used to recover files after a restart, ensuring data integrity.
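The relationship between the shared log and its two indexes can be sketched with an in-memory toy. `MiniStore` and its methods are hypothetical names. Real files are memory-mapped and persistent, and index building is asynchronous, whereas this sketch builds the indexes inline for brevity.

```python
from collections import defaultdict


class MiniStore:
    """One append-only log shared by all topics, plus two derived indexes."""

    def __init__(self):
        self.commitlog = bytearray()
        self.consume_queues = defaultdict(list)  # (topic, queue_id) -> [(offset, size)]
        self.key_index = defaultdict(list)       # (topic, key) -> [(offset, size)]

    def append(self, topic, queue_id, key, body: bytes):
        # Sequential write: every topic appends to the same log.
        entry = (len(self.commitlog), len(body))
        self.commitlog += body
        # Index entries only point into the log; they never copy the body.
        queue = self.consume_queues[(topic, queue_id)]
        queue.append(entry)
        self.key_index[(topic, key)].append(entry)
        return len(queue) - 1  # logical offset within the queue

    def _read(self, entry):
        offset, size = entry
        return bytes(self.commitlog[offset:offset + size])

    def get(self, topic, queue_id, queue_offset):
        # Locate a message by topic, queue ID, and offset.
        return self._read(self.consume_queues[(topic, queue_id)][queue_offset])

    def query_by_key(self, topic, key):
        # Look up messages by business key, as used for message tracing.
        return [self._read(e) for e in self.key_index.get((topic, key), [])]
```

Because both indexes hold only offsets and sizes, they could be rebuilt at any time by rescanning the log, which is why the log alone is the source of truth.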

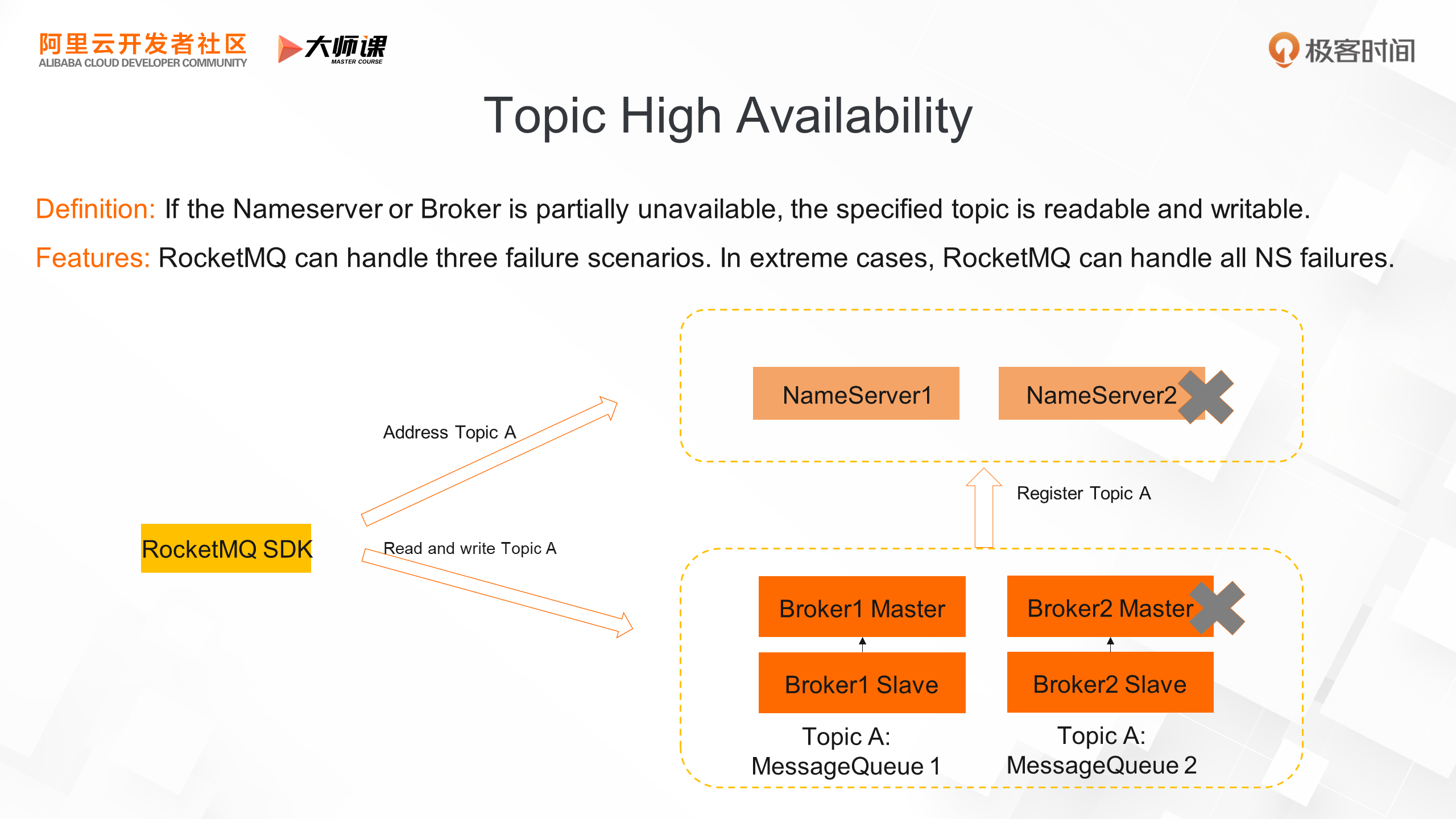

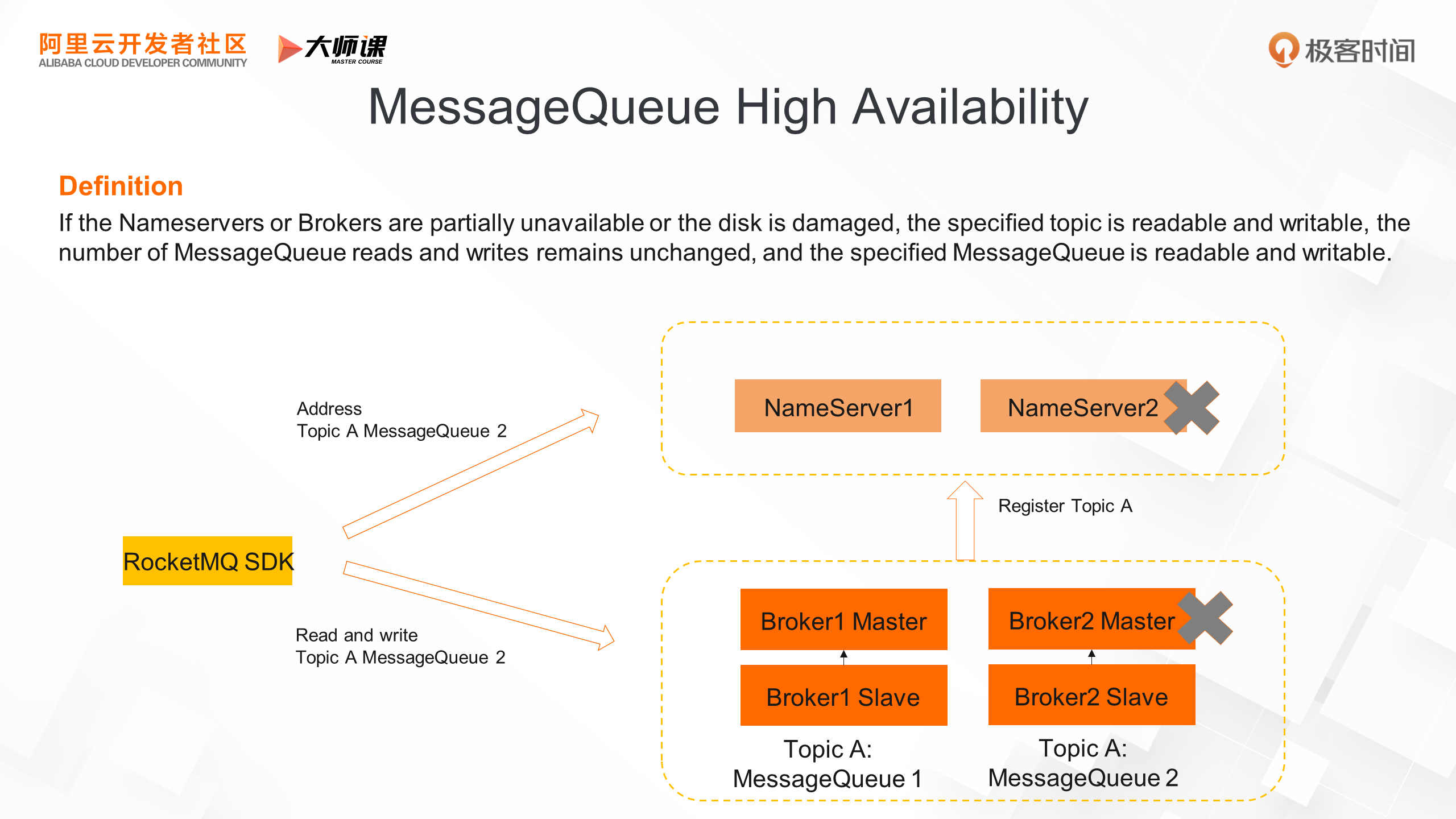

So far, we have looked at the storage engine of RocketMQ from the perspective of a single machine, including the Commitlog and the indexes. Now let's step back and look at the high availability of RocketMQ from the perspective of the cluster. First, a definition: RocketMQ is highly available if a given topic remains readable and writable when some NameServer and Broker nodes in the cluster are unavailable.

RocketMQ can handle three types of failure scenarios.

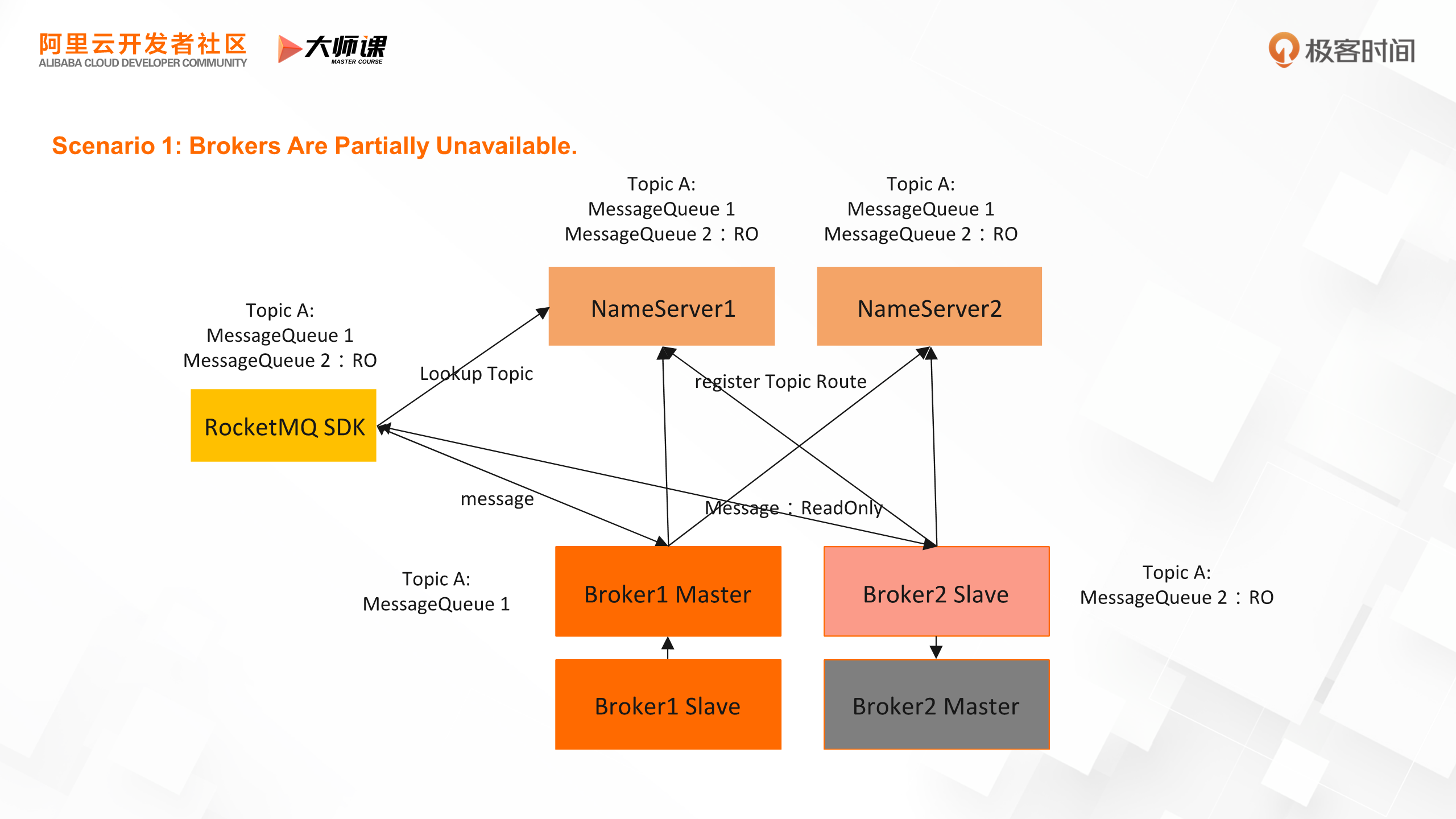

In the first case, nodes in a primary-secondary Broker pair become unavailable. In the diagram, the primary of Broker2 is down while its secondary is still available. Topic A is still readable and writable, including shard 1 and shard 2, and the unread messages of Topic A in shard 2 can still be consumed. To sum up, as long as at least one node is alive in any Broker group of the cluster, the read/write availability of topics is not affected. If both the primary and the secondary of a group are down, reading and writing new data on the topic is still not affected. However, the unread messages on that group are delayed and cannot be consumed until one of its Brokers is started again.

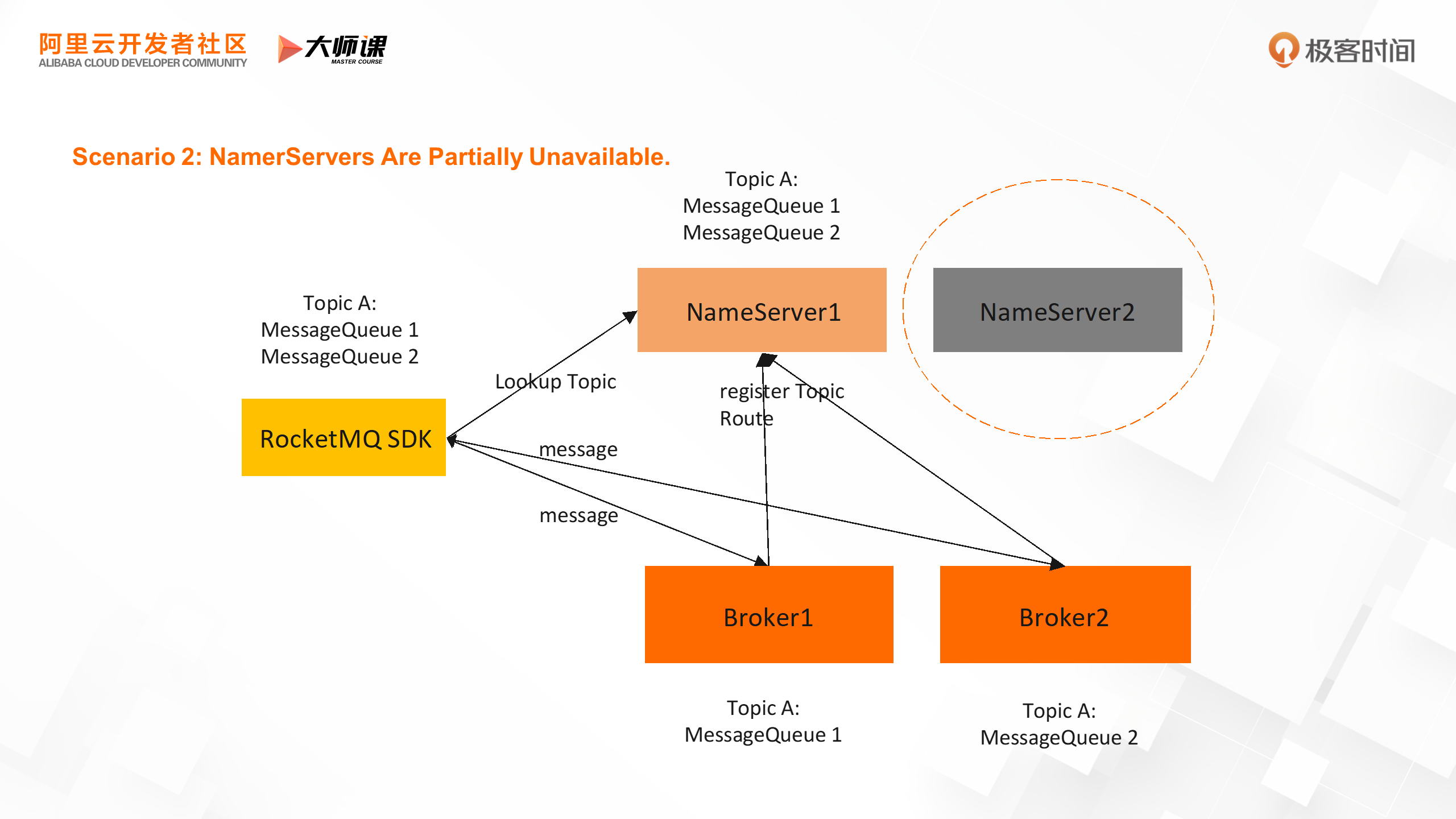

Next, consider failures in the NameServer cluster. NameServer uses a shared-nothing architecture in which every node is stateless and operates in AP mode, so it does not rely on a majority (quorum) algorithm. Therefore, as long as one NameServer is alive, the entire service discovery mechanism works normally, and the read/write availability of topics is not affected.

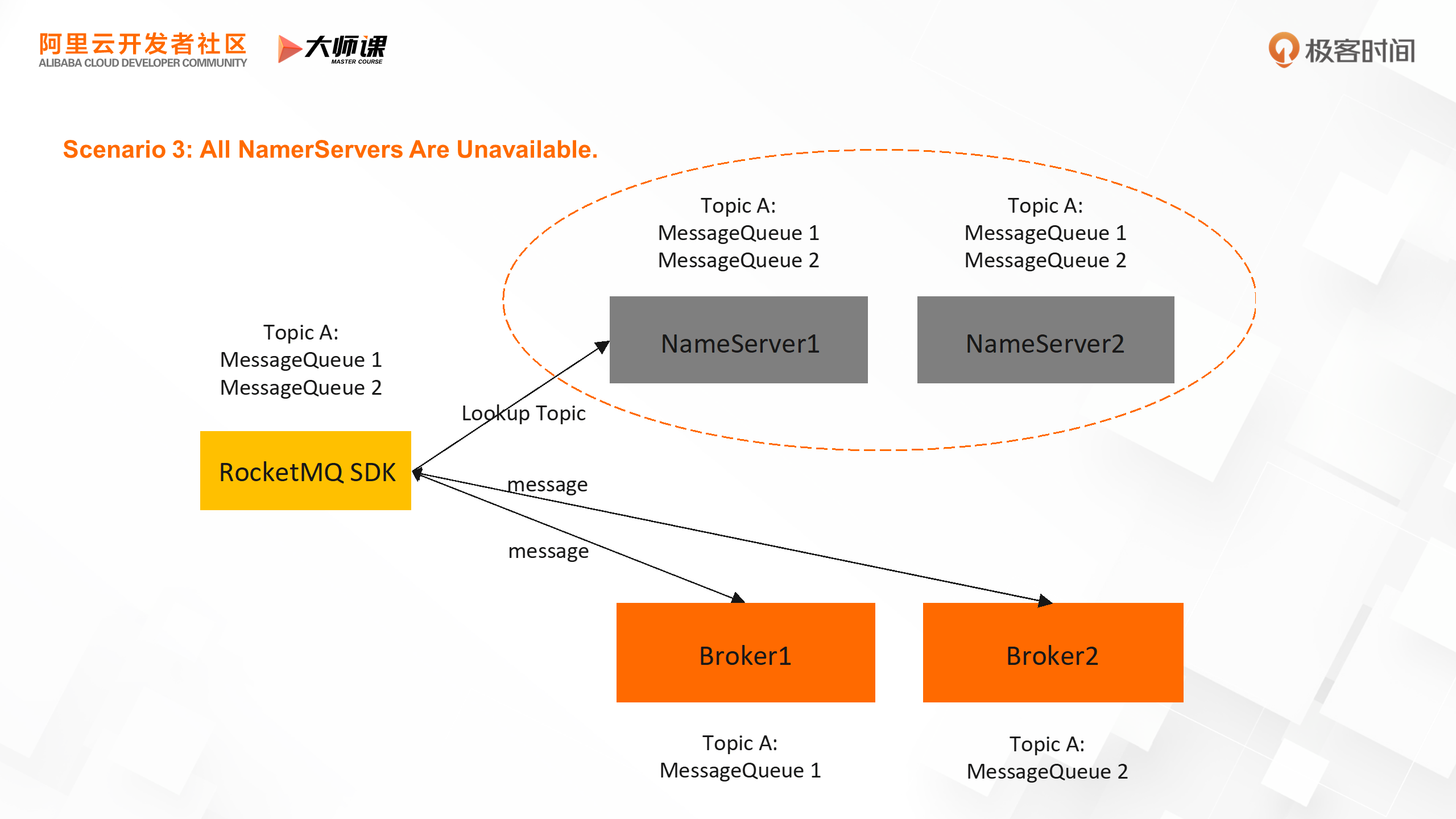

In an even more extreme case, the entire NS cluster is unavailable. Because the RocketMQ SDK caches service discovery metadata, it can continue to send and receive messages based on its current topic metadata as long as the SDK is not restarted.

From this implementation of topic high availability, we can see that although topics remain readable and writable, the number of read/write queues of a topic can change. Such changes may affect certain data integration workloads. For example, when binary logs are synchronized between heterogeneous databases, the binary logs of the same record may be written to different queues. In this case, they may be replayed out of order, resulting in dirty data. Therefore, the current high availability needs to be strengthened so that when some nodes are unavailable, not only does the topic remain readable and writable, but the number of its read/write queues also stays unchanged and each specific queue remains readable and writable.
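The client-side behavior described above amounts to "refresh when possible, otherwise fall back to the last known route". A minimal sketch follows, assuming a hypothetical `fetch_route` callable that raises `ConnectionError` when no NameServer can be reached. The real SDK refreshes routes periodically in the background rather than on every call.

```python
class RouteCache:
    """Keeps the last successfully fetched route for each topic."""

    def __init__(self, fetch_route):
        self._fetch_route = fetch_route  # callable(topic) -> route data
        self._cache = {}

    def get(self, topic):
        try:
            # Normal path: refresh the cached route from a NameServer.
            self._cache[topic] = self._fetch_route(topic)
        except ConnectionError:
            # NameServer outage: keep using the cached route if there is one.
            if topic not in self._cache:
                raise
        return self._cache[topic]
```

The cache lives in process memory, which is why the guarantee holds only as long as the SDK is not restarted: a fresh process has nothing to fall back on.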

As shown in the following figure, when the NameServer or Broker is unavailable at any single point, Topic A still maintains two queues, and each queue has read/write capabilities.

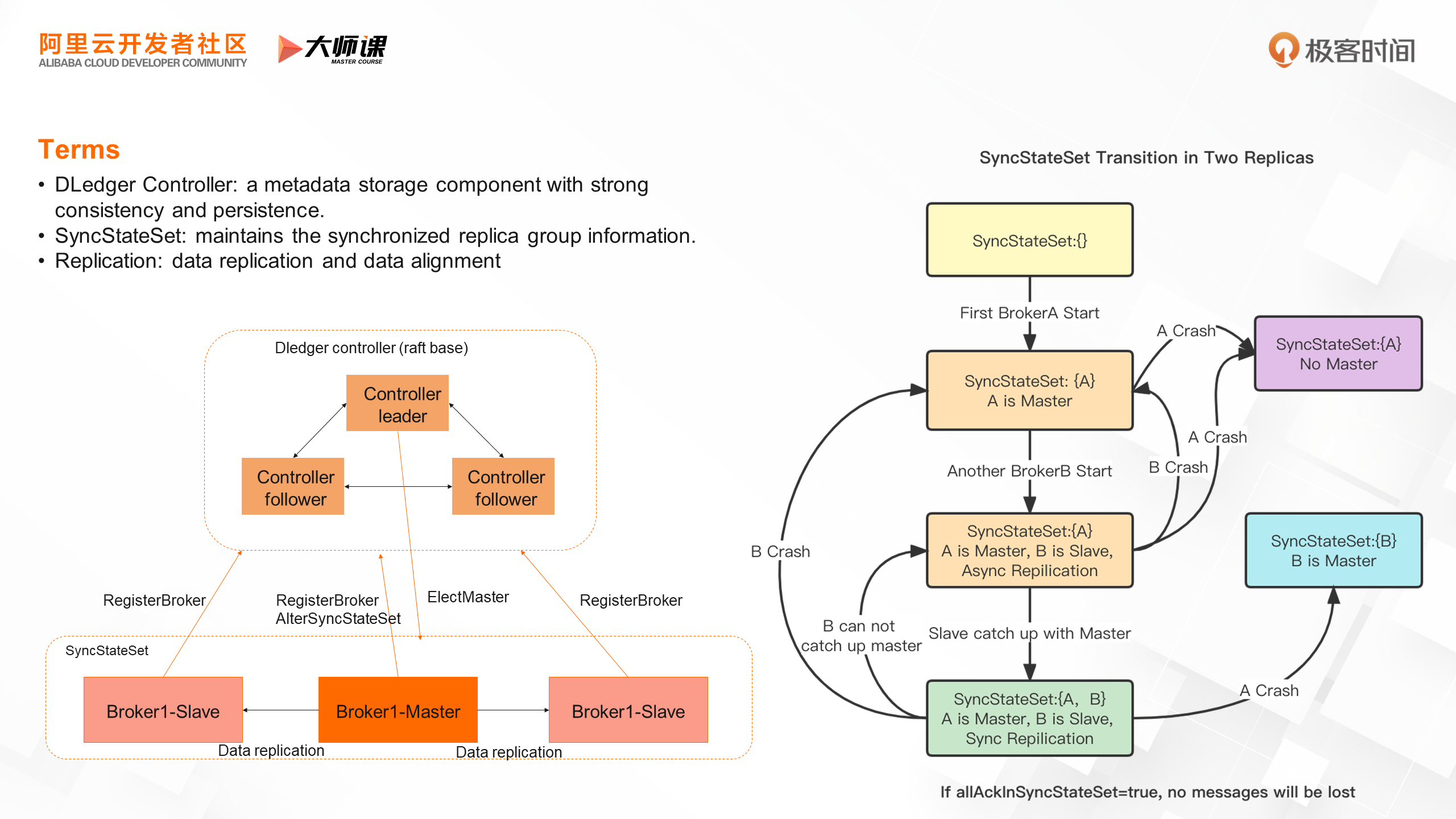

To address the high availability of MessageQueue, RocketMQ 5.0 introduced a new high-availability mechanism. Let's first understand the core concepts:

• DLedger Controller is a strongly consistent metadata component based on the Raft protocol. It executes master election commands and maintains state machine information.

• SyncStateSet, as shown in the figure, is the set of replicas in a replica group that are in a synchronized state. All nodes in this set have complete data. When the master node goes down, a new master node is selected from this set.

• Replication handles data replication, data verification, and truncation alignment between different replicas.
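The role of the in-sync replica set during failover can be sketched as follows. This is a deliberately simplified model with hypothetical names: a replica counts as in sync only when its offset equals the master's, and the first eligible replica by name wins. The text does not describe the actual membership or election rules.

```python
class ReplicaGroup:
    """Tracks which replicas are fully caught up with the master."""

    def __init__(self, master, replicas):
        self.master = master
        self.offsets = {replica: 0 for replica in replicas}
        self.sync_state_set = set(replicas)

    def report_offset(self, replica, offset):
        # Recompute the in-sync set whenever any replica reports progress.
        self.offsets[replica] = offset
        master_offset = self.offsets[self.master]
        self.sync_state_set = {
            name for name, value in self.offsets.items() if value == master_offset
        }

    def fail_over(self, alive):
        # Only replicas that are both alive and in sync are eligible,
        # so the new master never misses acknowledged data.
        candidates = sorted(self.sync_state_set & set(alive))
        if not candidates:
            return None
        self.master = candidates[0]
        return self.master
```

A lagging replica is never promoted in this model, even if it is the only node left alive, because promoting it would silently drop messages.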

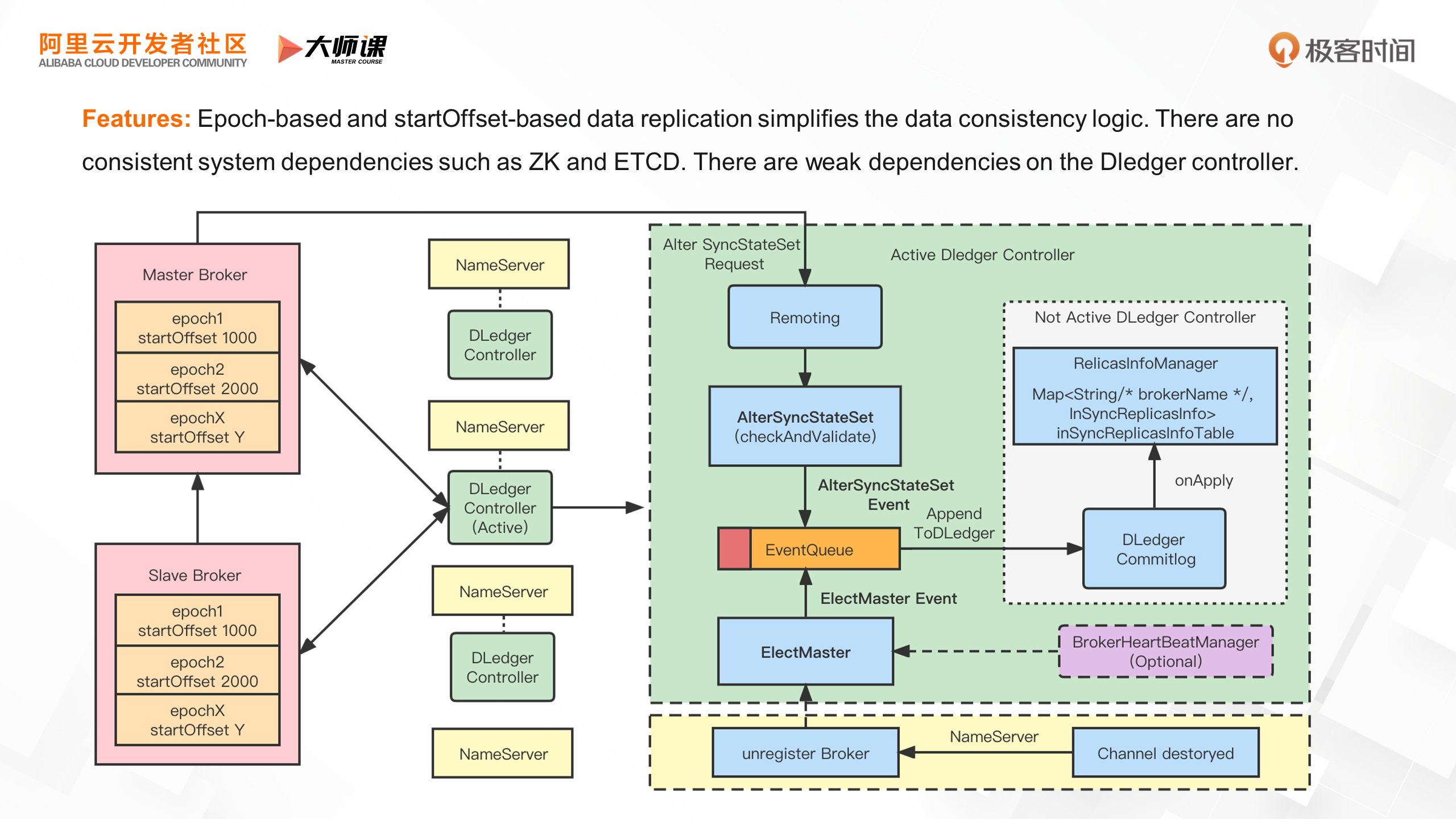

The following figure shows a panoramic view of the RocketMQ 5.0 HA architecture. This high availability architecture has multiple advantages.

First, it introduces an epoch and a start offset in the message store. Based on these two values, data verification, truncation, and alignment can be completed, which simplifies the data consistency logic when a replica group is being built.
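To see how two such values per master term can drive truncation and alignment, consider the following sketch. It assumes each replica keeps a list of `(epoch, start_offset)` pairs, one per master term it has seen. The function names and the exact comparison rule are illustrative assumptions, not the documented algorithm.

```python
def epoch_spans(epochs, log_end):
    """Turn [(epoch, start_offset), ...] into {epoch: (start, end)}.
    An epoch ends where the next one starts, or at the end of the log."""
    spans = {}
    for i, (epoch, start) in enumerate(epochs):
        end = epochs[i + 1][1] if i + 1 < len(epochs) else log_end
        spans[epoch] = (start, end)
    return spans


def truncation_offset(master_epochs, master_end, replica_epochs, replica_end):
    """Return the offset up to which both logs are known to agree."""
    master = epoch_spans(master_epochs, master_end)
    replica = epoch_spans(replica_epochs, replica_end)
    # Walk shared epochs from newest to oldest and stop at the first one
    # that started at the same offset on both sides.
    for epoch in sorted(set(master) & set(replica), reverse=True):
        if master[epoch][0] == replica[epoch][0]:
            return min(master[epoch][1], replica[epoch][1])
    return 0  # nothing in common: resynchronize from the beginning
```

For example, suppose a former master wrote up to offset 200 during epoch 2, but only the first 180 bytes were replicated before a new master took over in epoch 3. When the old node rejoins, this rule returns 180, so it discards its unreplicated tail and then copies everything after 180 from the new master.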

Second, with the DLedger Controller, there is no need to introduce an external distributed consistency system such as ZooKeeper or etcd. The DLedger Controller can be deployed together with NameServer to simplify O&M and save machine resources.

Third, RocketMQ depends only weakly on the DLedger Controller. Even if the DLedger Controller is unavailable, only master election is affected. Normal message sending and receiving are not affected.

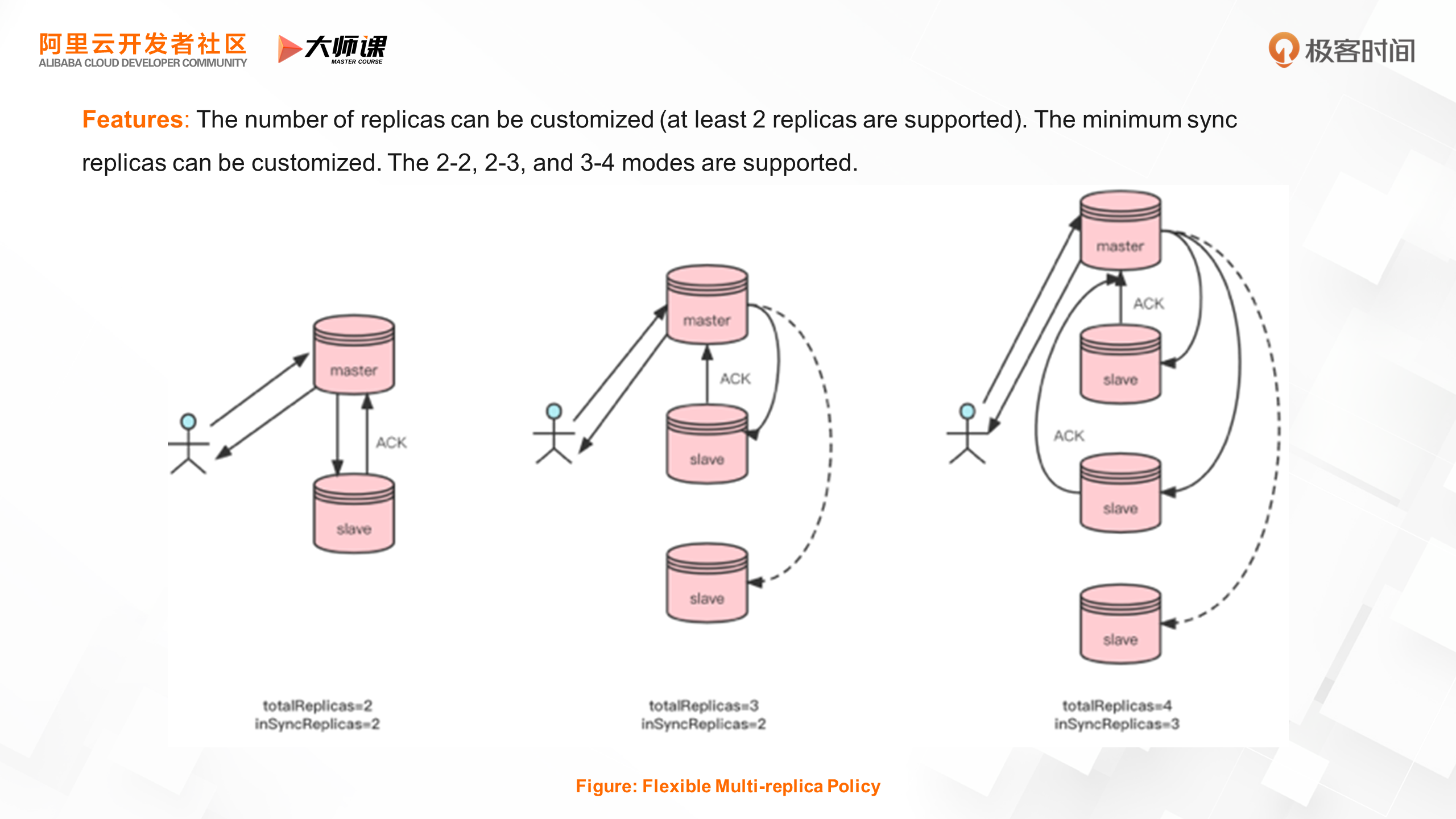

Fourth, you can trade off data reliability, performance, and cost based on business needs. For example, the number of replicas can be 2, 3, 4, or 5, and replicas can be replicated synchronously or asynchronously. The 2-2 mode means two replicas with synchronous replication to both. The 2-3 mode means three replicas, where replication is complete once a majority of two replicas has the data. You can also deploy one of the replicas in a remote data center and replicate to it asynchronously for disaster recovery.
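The mode notation can be read as "required acknowledgements, then total replicas". The sketch below encodes that reading. The class and its methods are hypothetical and only illustrate the trade-off; they are not a RocketMQ configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationMode:
    required_acks: int  # replicas that must hold a write before it completes
    replicas: int       # total replicas in the group

    @classmethod
    def parse(cls, text):
        # "2-3" -> 2 acknowledgements out of 3 replicas (assumed reading).
        acks, replicas = (int(part) for part in text.split("-"))
        if not 1 <= acks <= replicas:
            raise ValueError(f"invalid replication mode: {text}")
        return cls(acks, replicas)

    def is_committed(self, acks_received):
        return acks_received >= self.required_acks

    def tolerated_failures(self):
        # Replicas that may be down while writes can still complete.
        return self.replicas - self.required_acks
```

Under this reading, 2-2 tolerates no replica failure on the write path but needs only two machines, while 2-3 keeps accepting writes with one replica down at the cost of a third copy.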

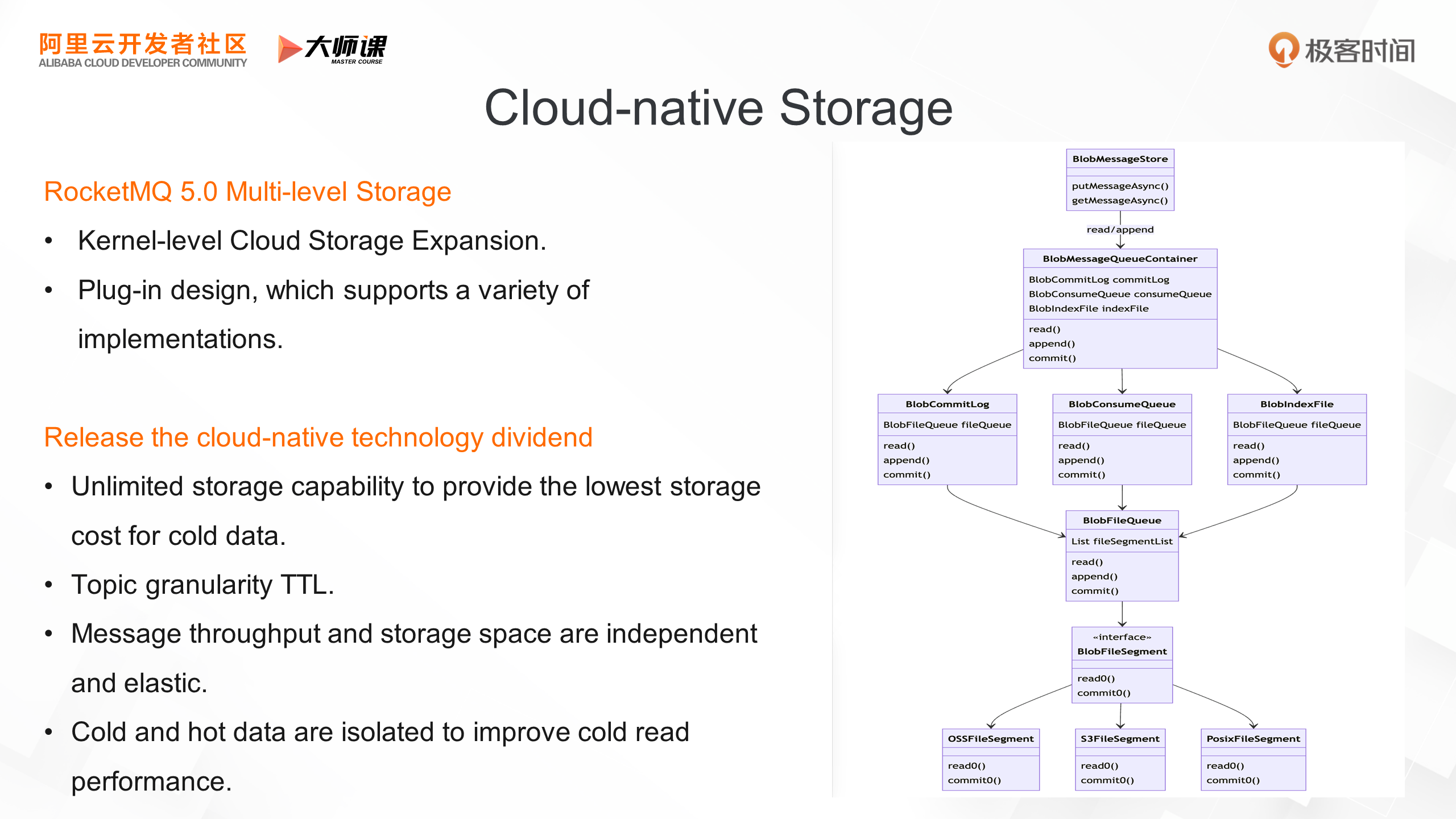

The storage systems described so far are all implementations of RocketMQ on local file systems. In the cloud-native era, however, when RocketMQ is deployed in a cloud environment, it can use cloud-native infrastructure such as cloud storage to further enhance its storage capabilities. RocketMQ 5.0 provides multi-level storage, which is a kernel-level storage extension. We extend the Commitlog, ConsumeQueue, and IndexFile with counterparts for object storage. The design is pluggable, so multi-level storage can be implemented on different backends. On Alibaba Cloud, we implement it on Object Storage Service (OSS).

By introducing cloud-native storage, RocketMQ gains several benefits:

• Unlimited storage capacity. Message storage is no longer limited by local disk space. Messages that used to be retained for a few days can now be retained for several months or even a year. Object storage is also one of the lowest-cost storage systems in the industry, which makes it ideal for cold data.

• Per-topic TTL. The TTL of all topics used to be tied to the shared Commitlog, so the retention time was the same for every topic. Now, each topic stores its Commitlog data in separate objects and can have its own TTL.

• Further storage-compute separation. Within the storage system, the elasticity of storage throughput is separated from the elasticity of storage space.

• Hot and cold data isolation. The read links of hot and cold data are separated, which greatly improves cold read performance without affecting online business.
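Once each topic's data lives in its own objects, per-topic retention reduces to a simple check per object. The sketch below assumes a hypothetical `SegmentObject` record and a cleanup function; it does not touch any real object storage API.

```python
from dataclasses import dataclass

DAY = 24 * 60 * 60  # seconds


@dataclass(frozen=True)
class SegmentObject:
    """One uploaded log segment that belongs to a single topic."""
    topic: str
    name: str
    last_write_ts: float  # seconds since epoch


def select_expired(segments, ttl_by_topic, default_ttl, now):
    """Return the names of segments whose topic-specific TTL has passed."""
    expired = []
    for segment in segments:
        ttl = ttl_by_topic.get(segment.topic, default_ttl)
        if now - segment.last_write_ts > ttl:
            expired.append(segment.name)
    return expired
```

With a shared local Commitlog, this per-topic decision is not possible, because deleting one file would remove data for every topic stored in it.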

In this course, we explored the overall architecture of RocketMQ and how it supports diverse business scenarios based on its storage-compute separation architecture.

Next, we delved into RocketMQ's service discovery and the interaction links of the entire system from a cluster perspective. RocketMQ's service discovery favors availability (AP), and its components can be deployed separately or together to handle more complex network scenarios. Any component can scale out to cope with the explosive growth of internet business traffic.

Finally, we explained RocketMQ's storage design, including its standalone storage engine, how it supports tens of thousands of topics, how high availability is implemented, and how it extends to cloud storage at the kernel level.

In the next course, we will enter the specific business field of RocketMQ and learn about the 5.0 version from the user and business perspectives.
