EMR Serverless Spark supports interactive development through Notebooks. This topic walks you through creating and running a Notebook.

Prerequisites

An Alibaba Cloud account is available. For details, see Account registration.

Role authorization is complete. For details, see Alibaba Cloud account role authorization.

A workspace and a Notebook session instance have been created. For details, see Create a workspace and Manage Notebook sessions.

Procedure

Step 1: Prepare the test file

To help you get familiar with Notebook tasks quickly, this quick start provides a test file that you can download and use in the following steps.

Click employee.csv to download the test file.

The employee.csv file contains a list of employees with their names, departments, and salaries.
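The exact header of employee.csv is not listed here. The Step 3 code reads the file with `header` set to true and refers to the `department` and `salary` columns. If you want a local file with the same layout to experiment with, a minimal sketch follows. The `name` column, the file name `employee_sample.csv`, and every row are hypothetical placeholders. They are not the contents of the downloadable file.

```python
import csv
from pathlib import Path

# Hypothetical rows for local experiments; NOT the contents of the downloadable employee.csv.
# Only "department" and "salary" are column names taken from the Step 3 code; "name" is assumed.
SAMPLE_ROWS = [
    {"name": "Alice", "department": "Engineering", "salary": 8000},
    {"name": "Bob", "department": "Engineering", "salary": 7000},
    {"name": "Carol", "department": "Sales", "salary": 6000},
]


def write_sample_employee_csv(path="employee_sample.csv", rows=SAMPLE_ROWS):
    """Write a comma-delimited CSV with a header row and return its path."""
    path = Path(path)
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["name", "department", "salary"])
        writer.writeheader()  # first line: name,department,salary
        writer.writerows(rows)
    return path
```

Calling `write_sample_employee_csv()` creates `employee_sample.csv` in the current directory. If you use it in place of the downloaded file, upload it the same way as in Step 2.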

Step 2: Upload the test file

Upload the data file (employee.csv) to Alibaba Cloud Object Storage Service (OSS) in the OSS console. For details, see Upload objects.
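The PySpark code in Step 3 reads the file through a path of the form `oss://<bucket>/<object key>`. As an alternative to the console, the sketch below uploads the file with the Alibaba Cloud OSS Python SDK (`oss2`) and returns the path to paste into the Notebook. It assumes `oss2` is installed and that your credentials are in the environment variables `OSS_ACCESS_KEY_ID` and `OSS_ACCESS_KEY_SECRET`; those variable names, the bucket, the key, and the endpoint are all placeholders you choose.

```python
import os


def build_oss_uri(bucket: str, key: str) -> str:
    """Return the oss://bucket/key path used in spark.read...csv()."""
    return f"oss://{bucket}/{key.lstrip('/')}"


def upload_to_oss(local_file: str, bucket_name: str, key: str, endpoint: str) -> str:
    """Upload local_file with the oss2 SDK and return its oss:// path.

    Assumes oss2 is installed and credentials are in the (assumed)
    environment variables OSS_ACCESS_KEY_ID and OSS_ACCESS_KEY_SECRET.
    """
    import oss2  # imported lazily so build_oss_uri works without the SDK

    auth = oss2.Auth(os.environ["OSS_ACCESS_KEY_ID"], os.environ["OSS_ACCESS_KEY_SECRET"])
    bucket = oss2.Bucket(auth, endpoint, bucket_name)
    bucket.put_object_from_file(key, local_file)
    return build_oss_uri(bucket_name, key)
```

For example, `upload_to_oss("employee.csv", "my-bucket", "data/employee.csv", "https://oss-cn-hangzhou.aliyuncs.com")` would return `oss://my-bucket/data/employee.csv`. The bucket name and endpoint here are hypothetical, and the endpoint must match the region of your bucket.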

Step 3: Develop and run the Notebook

On the EMR Serverless Spark page, click Data Development in the left-side navigation pane.

Create a Notebook.

On the Development Catalog tab, click the create icon. In the dialog box that appears, enter a name, select Notebook as the type, and click OK.

In the upper-right corner, select a Notebook session instance that has been created and started.

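You can also select Create Notebook Session from the drop-down list to create a new Notebook session instance. For more information about Notebook sessions, see Manage Notebook sessions.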

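Note: Multiple Notebooks can share the same Notebook session instance. You can access and work with the same session resources from several Notebooks at once, without creating a separate session instance for each Notebook.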

Process and visualize data.

Run a PySpark job

Copy the following code into a Python cell of the new Notebook.

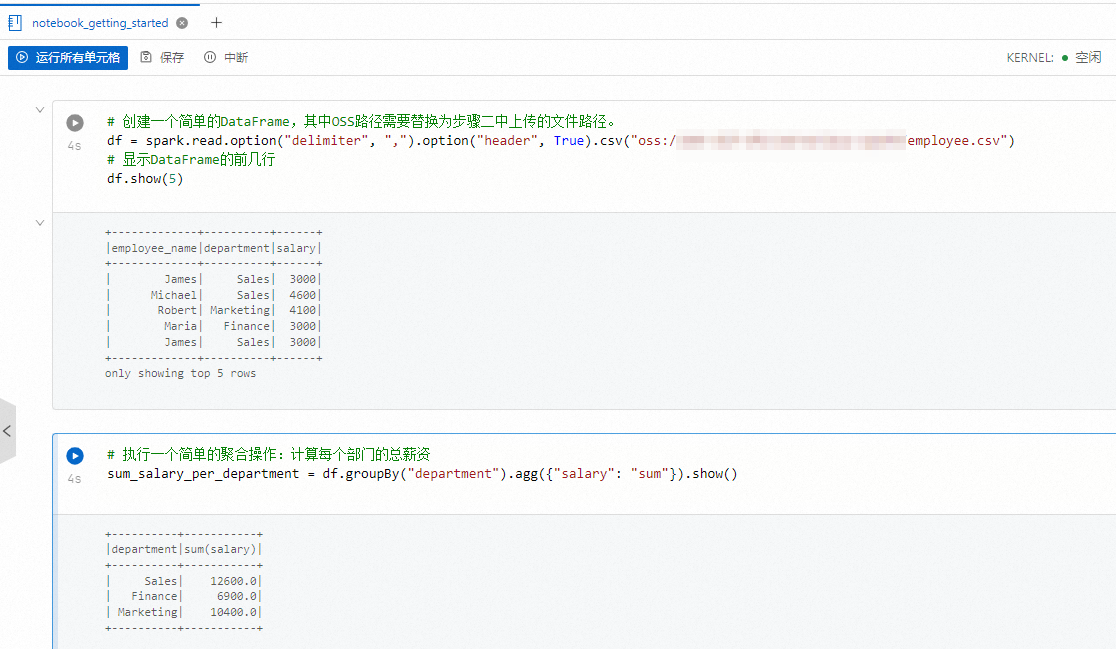

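    # spark (the SparkSession) is predefined in the Notebook session.
    # Create a DataFrame from the CSV file. Replace the OSS path with the path of the file uploaded in Step 2.
    # inferSchema parses the salary column as a number instead of a string.
    df = spark.read.option("delimiter", ",").option("header", True).option("inferSchema", True).csv("oss://path/to/file")
    # Show the first few rows of the DataFrame
    df.show(5)
    # Run a simple aggregation: compute the total salary for each department.
    # show() prints the result and returns None, so its return value is not assigned to a variable.
    df.groupBy("department").agg({"salary": "sum"}).show()

Click Run All Cells to run the Notebook.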

You can also split the code across separate cells and click the run icon in front of a cell to run only that cell.

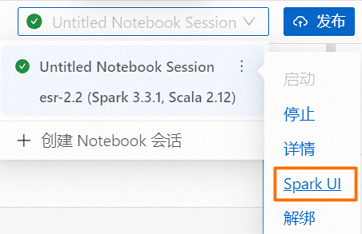
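`groupBy("department").agg({"salary": "sum"})` groups rows by department and adds up the salary values in each group. Spark names the result column `sum(salary)`. To see what that computes without a Spark session, the sketch below gives a plain-Python equivalent over a CSV with the same header. It is an illustration only. The function name is hypothetical, and the column names are assumed from the Step 3 code.

```python
import csv


def total_salary_per_department(csv_path):
    """Plain-Python equivalent of df.groupBy("department").agg({"salary": "sum"})."""
    totals = {}
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f, delimiter=","):
            dept = row["department"]
            salary = (row["salary"] or "").strip()
            if not salary:
                # Spark's sum skips nulls; a group with no non-null salary ends up as None (null).
                totals.setdefault(dept, None)
                continue
            totals[dept] = (totals.get(dept) or 0.0) + float(salary)
    return totals
```

Comparing its output with the table that `show()` prints is a quick sanity check on small files. For real data, keep the aggregation in Spark.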

(Optional) View the Spark UI.

In the session drop-down list, hover over the icon of the Notebook session instance used by the current task, and then click Spark UI. This opens the Spark Jobs page, which shows information about your Spark jobs.

Visualize data with third-party libraries

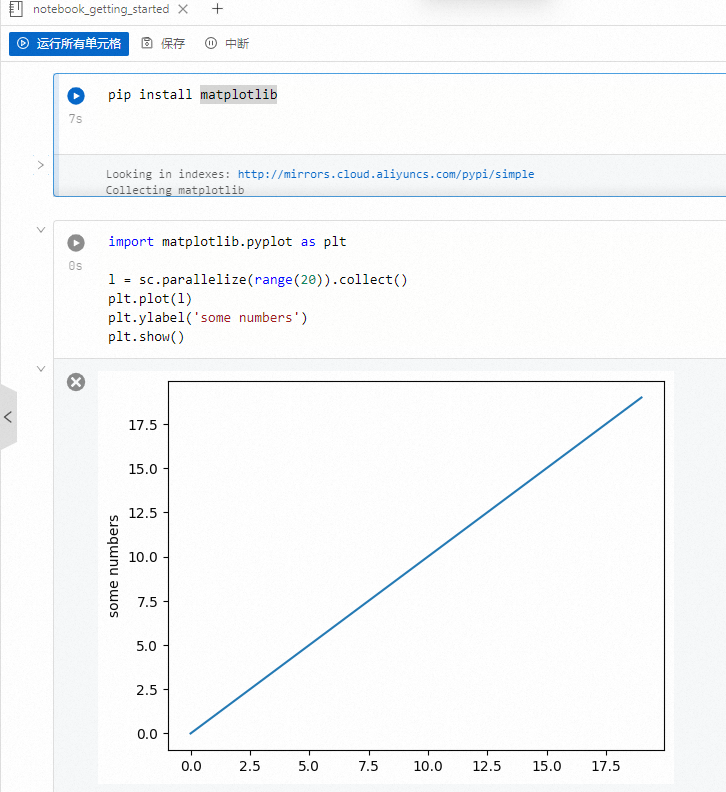

Note: Notebook sessions come with matplotlib, numpy, and pandas preinstalled. To use other third-party libraries, see Use third-party Python libraries in a Notebook.
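Before you write plotting code, you can confirm in a running session which of these libraries are importable. The sketch below does this with the standard `importlib` module. It only checks that top-level module names can be found in the session's Python environment. Installing other libraries still follows the linked topic. The function name is hypothetical.

```python
import importlib.util


def check_libraries(names=("matplotlib", "numpy", "pandas")):
    """Map each top-level module name to True if it can be found in this Python environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


print(check_libraries())
```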

Use the matplotlib library to visualize data.

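    # sc (the SparkContext) is predefined in the Notebook session, like spark.
    import matplotlib.pyplot as plt
    # Distribute the numbers 0-19 as an RDD, then collect them back to the driver as a list
    values = sc.parallelize(range(20)).collect()
    plt.plot(values)
    plt.ylabel('some numbers')
    plt.show()

Click Run All Cells to run the Notebook.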

You can also split the code across separate cells and click the run icon in front of a cell to run only that cell.
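The example above plots synthetic numbers. To chart the employee data itself, one option is a bar chart of the per-department totals from the PySpark step. The sketch below takes a plain `{department: total}` mapping so that it does not depend on Spark. The function name and labels are hypothetical, and every total is assumed to be non-null.

```python
import matplotlib.pyplot as plt


def plot_department_totals(totals):
    """Draw a bar chart from a {department: total_salary} mapping and return (fig, ax)."""
    departments = sorted(totals)
    fig, ax = plt.subplots()
    # String x values are drawn as categorical bars, one per department.
    ax.bar(departments, [totals[d] for d in departments])
    ax.set_xlabel("department")
    ax.set_ylabel("total salary")
    ax.set_title("Total salary per department")
    return fig, ax
```

In the Notebook, you could build the mapping from the DataFrame in the PySpark step with `totals = {r["department"]: r["sum(salary)"] for r in df.groupBy("department").agg({"salary": "sum"}).collect()}`, then call `plot_department_totals(totals)` followed by `plt.show()`. Collecting to the driver is fine here because the aggregated result has only one row per department.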

Step 4: Publish the Notebook

After the run completes, click Publish in the upper-right corner.

In the Publish dialog box, enter the release information and click OK to save the Notebook as a version.