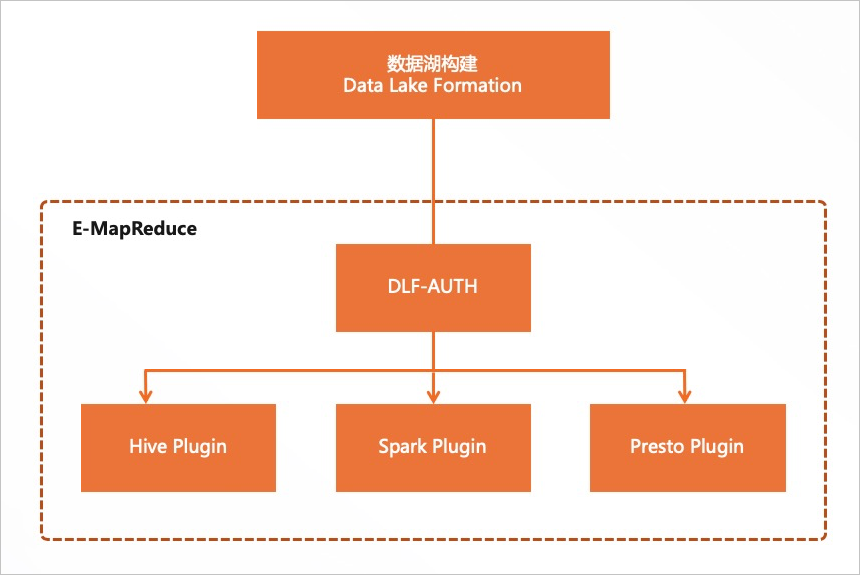

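The DLF-Auth component is provided by Data Lake Formation (DLF). It enables the DLF data permission feature, which provides fine-grained access control over databases, tables, columns, and functions, so that data permissions on the data lake are managed in one place. This topic describes how to enable DLF-Auth.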

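Background information

DLF is a fully managed service that helps you quickly build a data lake in the cloud. It provides unified permission management and metadata management for the data lake. For details, see the Data Lake Formation product overview.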

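Prerequisites

An E-MapReduce (EMR) cluster has been created with the OpenLDAP service selected. For details, see Create a cluster.

On the Software Configuration page of the cluster creation wizard, the metadata setting is left at the default, DLF Unified Metadata.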

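Limits

DLF permissions can be managed only for RAM users, so you must add users through the User Management feature in the EMR console.

For the regions in which DLF data permission management is available, see Supported regions and endpoints.

After DLF-Auth is enabled for Hive or Spark, Ranger can no longer be enabled or disabled for Hive or Spark. Likewise, after Ranger is enabled for Hive or Spark, DLF-Auth can no longer be enabled or disabled for Hive or Spark.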

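The following table lists the EMR versions and compute engines that DLF-Auth supports.

| EMR major version | Version | Hive | Spark | Presto | Impala |
| --- | --- | --- | --- | --- | --- |
| EMR 3.x | EMR-3.39.0 and earlier | Not supported | Not supported | Not supported | Not supported |
| EMR 3.x | EMR-3.40.0 | Supported | Supported | Supported | Not supported |
| EMR 3.x | EMR-3.41.0 to EMR-3.43.1 | Supported | Supported | Not supported | Not supported |
| EMR 3.x | EMR-3.44.0 and later | Supported | Supported | Supported | Supported |
| EMR 5.x | EMR-5.5.0 and earlier | Not supported | Not supported | Not supported | Not supported |
| EMR 5.x | EMR-5.6.0 | Supported | Supported | Supported | Not supported |
| EMR 5.x | EMR-5.7.0 to EMR-5.9.1 | Supported | Supported | Not supported | Not supported |
| EMR 5.x | EMR-5.10.0 and later | Supported | Supported | Supported | Supported |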
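The version and engine matrix above can be encoded as a small lookup, for example to check a cluster before trying to enable DLF-Auth. The following is a minimal Python sketch, not part of any EMR or DLF SDK: the function names `parse_emr_version` and `dlf_auth_supported` are hypothetical, and versions that fall between two listed rows are assumed to behave like the row that precedes them.

```python
import re

# Support matrix transcribed from the table above. Each entry is
# (first version of the row, engines supported from that version onward),
# listed in ascending order per major version.
_MATRIX = {
    3: [
        ((3, 40, 0), {"hive", "spark", "presto"}),
        ((3, 41, 0), {"hive", "spark"}),
        ((3, 44, 0), {"hive", "spark", "presto", "impala"}),
    ],
    5: [
        ((5, 6, 0), {"hive", "spark", "presto"}),
        ((5, 7, 0), {"hive", "spark"}),
        ((5, 10, 0), {"hive", "spark", "presto", "impala"}),
    ],
}


def parse_emr_version(version):
    """Turn 'EMR-3.44.0' or '3.44.0' into the tuple (3, 44, 0)."""
    match = re.fullmatch(r"(?:EMR-)?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized EMR version: {version!r}")
    return tuple(int(part) for part in match.groups())


def dlf_auth_supported(version, engine):
    """Return True if the table lists the engine as supported for the version."""
    parsed = parse_emr_version(version)
    supported = set()  # versions older than the first row support nothing
    for first_version, engines in _MATRIX.get(parsed[0], []):
        if parsed >= first_version:
            supported = engines  # the last matching row wins
    return engine.lower() in supported
```

For example, `dlf_auth_supported("EMR-3.42.0", "Presto")` evaluates to `False`, which matches the EMR-3.41.0 to EMR-3.43.1 row.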

Procedure

By following the steps in this topic, you enable DLF-Auth and obtain fully managed, unified permission management on the data lake.

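Step 1: Enable Hive permission control

Go to the DLF-Auth page.

Log on to EMR on ECS.

In the top menu bar, select the region and resource group as needed.

On the EMR on ECS page, click Services in the Actions column of the target cluster.

On the Services page, click Status in the DLF-Auth service section.

Enable Hive permission control.

On the DLF-Auth service page, turn on the enableHive switch.

In the dialog box that appears, click OK.

Restart HiveServer.

On the Services page, select the Hive service.

On the Hive service page, click Restart in the Actions column of HiveServer.

In the dialog box that appears, enter an execution reason and click OK.

In the confirmation dialog box, click OK.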

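Step 2: Add a RAM user

You can add users through the User Management feature, as follows.

Go to the User Management page.

Log on to EMR on ECS.

In the top menu bar, select the region and resource group as needed.

On the EMR on ECS page, click Services in the Actions column of the target cluster.

Click the User Management tab at the top.

On the User Management page, click Add User.

In the Add User dialog box, select an existing RAM user from the Username drop-down list as the name of the EMR user, then enter the password and confirm it.

Click OK.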

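Step 3: Verify permissions

If the RAM user already has the AliyunDLFDssFullAccess policy, or has been granted AdministratorAccess, the user can access all fine-grained DLF resources and no data authorization is required.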
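The rule in the note above amounts to a simple membership check. The sketch below is illustrative only: the helper name `needs_data_grant` is hypothetical, and it assumes you have already obtained the list of policy names attached to the RAM user by some other means.

```python
# Policy names taken from the note above. Either one gives the RAM user
# access to all fine-grained DLF resources.
FULL_ACCESS_POLICIES = frozenset({"AliyunDLFDssFullAccess", "AdministratorAccess"})


def needs_data_grant(attached_policies):
    """Return True if the user still needs explicit DLF data authorization.

    attached_policies is any iterable of policy names attached to the RAM user.
    """
    return FULL_ACCESS_POLICIES.isdisjoint(attached_policies)
```

A user for whom this returns `False` can skip the authorization part of this step.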

授權前驗證目前使用者許可權。

使用SSH方式登入到叢集,詳情請參見登入叢集。

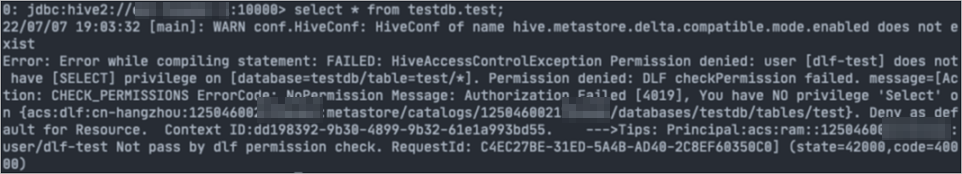

執行以下命令訪問HiveServer2。

beeline -u jdbc:hive2://master-1-1:10000 -n <user> -p <password>說明<user>和<password>為步驟二:添加RAM使用者中您設定的使用者名稱和密碼。

查看已有資料表資訊。

例如,執行以下命令,查看test表資訊。

testdb.test請根據您實際資訊修改。select * from testdb.test;因為目前使用者沒有許可權,會報沒有許可權而查詢失敗的錯。

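Grant permissions to the RAM user.

Log on to the Data Lake Formation console.

In the left-side navigation pane, open the Data Permissions page.

On the Data Permissions page, click Add Permission.

On the Add Permission page, configure the following parameters.

| Section | Parameter | Description |
| --- | --- | --- |
| Principal | Principal Type | Defaults to RAM user. |
| Principal | Principal | From the drop-down list, select the user that you added in Step 2: Add a RAM user. |
| Resources | Authorization Method | Defaults to resource authorization. |
| Resources | Resource Type | Select a type as needed. This example uses metadata table. |
| Permissions | Data Permission | This example uses Select. |
| Permissions | Grantable Permission | |

Click OK.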

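Verify the current user's permissions after granting access.

Repeat the check from the first part of this step (verifying permissions before granting access) and query the table again. Because access has now been granted, the query returns the table's data.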
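The before-and-after check in this step can also be scripted instead of typed into an interactive beeline session. The following Python sketch is one way to do that under stated assumptions: it must run on a cluster node where `beeline` is on the PATH, it uses beeline's `-e` option to pass a single statement, and it assumes that beeline exits with a non-zero code when the statement fails. The helper names `build_beeline_command` and `can_query` are hypothetical. The host `master-1-1`, port `10000`, and table `testdb.test` come from the example above.

```python
import subprocess


def build_beeline_command(user, password, query, host="master-1-1", port=10000):
    """Build the beeline argument list for running one statement on HiveServer2."""
    url = f"jdbc:hive2://{host}:{port}"
    return ["beeline", "-u", url, "-n", user, "-p", password, "-e", query]


def can_query(user, password, table="testdb.test"):
    """Return True if the user can select from the table.

    Assumption: beeline returns a non-zero exit code when the query is
    rejected, for example because of missing permissions.
    """
    command = build_beeline_command(user, password, f"select * from {table};")
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0
```

Under these assumptions, `can_query(user, password)` would be expected to return `False` before the grant and `True` after it. Passing the password as a command-line argument exposes it to other users on the same host, so treat this as a test aid only.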

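(Optional) Step 4: Enable Hive LDAP authentication

If DLF-Auth is enabled, we recommend that you also enable Hive LDAP authentication, so that every user who connects to Hive must pass LDAP authentication before running scripts.

Go to the Services page.

Log on to EMR on ECS.

In the top menu bar, select the region and resource group as needed.

On the EMR on ECS page, click Services in the Actions column of the target cluster.

Enable LDAP authentication.

On the Services page, click Status in the Hive service section.

Turn on the enableLDAP switch. The location of the switch depends on the EMR version.

EMR-5.11.1 and later, EMR-3.45.1 and later

In the Service Overview section, turn on the enableLDAP switch.

In the dialog box that appears, click OK.

EMR-5.11.0 and earlier, EMR-3.45.0 and earlier

In the Components section, select enableLDAP from the menu in the Actions column of HiveServer.

In the dialog box that appears, enter an execution reason and click OK.

In the confirmation dialog box, click OK.

Restart HiveServer.

In the Components section, click Restart in the Actions column of HiveServer.

In the dialog box that appears, enter an execution reason and click OK.

In the confirmation dialog box, click OK.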
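Where the enableLDAP switch is found depends on the EMR version, as described in this step. The sketch below expresses that rule in Python, for example for a runbook that prints the right instructions for a given cluster. The function names and the returned labels are hypothetical, and only the 3.x and 5.x version lines mentioned in this topic are covered.

```python
import re

# First version in each major line where the enableLDAP switch appears in the
# Service Overview section (thresholds taken from the step above).
_SERVICE_OVERVIEW_FROM = {3: (3, 45, 1), 5: (5, 11, 1)}


def parse_emr_version(version):
    """Turn 'EMR-5.11.1' or '5.11.1' into the tuple (5, 11, 1)."""
    match = re.fullmatch(r"(?:EMR-)?(\d+)\.(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized EMR version: {version!r}")
    return tuple(int(part) for part in match.groups())


def enable_ldap_location(version):
    """Return where to find the enableLDAP switch for the given EMR version."""
    parsed = parse_emr_version(version)
    threshold = _SERVICE_OVERVIEW_FROM.get(parsed[0])
    if threshold is None:
        raise ValueError("only EMR 3.x and 5.x are covered by this sketch")
    if parsed >= threshold:
        return "service_overview"    # switch in the Service Overview section
    return "hiveserver_actions"      # Actions column of HiveServer in Components
```

Whichever location applies, HiveServer must still be restarted afterwards for the setting to take effect, as the step describes.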
