Alibaba Cloud Elasticsearch is compatible with open source Elasticsearch features such as Security, Machine Learning, Graph, and Application Performance Management (APM). It also provides enterprise-grade features such as access control, security monitoring and alerting, and automatic report generation. You can use Alibaba Cloud Elasticsearch to search and analyze data. This topic describes how to use Data Transmission Service (DTS) to synchronize data from a PolarDB for MySQL cluster to an Elasticsearch cluster.

Prerequisites

An Elasticsearch cluster of version 5.5, 5.6, 6.3, 6.7, or 7.x is created. For more information, see "Create an Elasticsearch cluster."

Binary logging is enabled for the PolarDB for MySQL cluster. For more information, see "Enable binary logging."

Precautions

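During initial full data synchronization, DTS uses read and write resources of the source and destination databases, which may increase their load. If a database has poor performance or low specifications, or if the data volume is large, the database service may become unavailable. For example, DTS occupies a large amount of read and write resources if many slow SQL queries are running on the source database, if tables have no primary keys, or if deadlocks occur in the destination database. Before you synchronize data, evaluate the impact of data synchronization on the performance of the source and destination databases. We recommend that you synchronize data during off-peak hours, for example, when the CPU utilization of both the source and destination databases is below 30%.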
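The 30% guideline can be turned into a simple check when you choose a start time. The following Python sketch is illustrative only: it assumes you have already exported the average CPU utilization per hour of the day (in percent) for the source cluster and the destination cluster, for example from your monitoring data. The function name `offpeak_hours` and the sample values are hypothetical.

```python
from typing import Dict, List


def offpeak_hours(source_cpu: Dict[int, float],
                  target_cpu: Dict[int, float],
                  threshold: float = 30.0) -> List[int]:
    """Return the hours (0-23) in which BOTH clusters stay below `threshold` percent CPU.

    Each map goes from an hour of the day to an average CPU utilization in percent.
    Hours missing from either map are skipped because their load is unknown.
    """
    common_hours = source_cpu.keys() & target_cpu.keys()
    return sorted(
        hour for hour in common_hours
        if source_cpu[hour] < threshold and target_cpu[hour] < threshold
    )


if __name__ == "__main__":
    # Hypothetical hourly averages; replace them with your own monitoring data.
    source = {1: 12.0, 2: 18.5, 10: 65.0, 14: 42.0, 23: 25.0}
    target = {1: 20.0, 2: 35.0, 10: 55.0, 14: 28.0, 23: 22.0}
    print(offpeak_hours(source, target))  # [1, 23]
```

Requiring both clusters to be under the threshold reflects the recommendation above, because the full synchronization loads the source and the destination at the same time.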

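DTS does not synchronize DDL operations. If you perform a DDL operation on a table in the source database during data synchronization, you must remove the table from the objects to be synchronized, delete the index of the table from the Elasticsearch cluster, and then add the table back to the objects to be synchronized. For more information, see "Remove an object from a data synchronization task" and "Add an object to a data synchronization task."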

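To add a column to a table that is being synchronized, perform the following steps: modify the mapping of the table in the Elasticsearch cluster, perform the DDL operation on the PolarDB for MySQL cluster, and then pause and restart the data synchronization task.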
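Because the mapping must be changed in Elasticsearch before the DDL operation runs on PolarDB, you may want to prepare the mapping request in advance. The following Python sketch only builds a request for the Elasticsearch put mapping API and does not send it. The helper name, the `customer` index, the `email` column, and the `keyword` type are hypothetical; choose the field type that matches your new column.

```python
import json
from typing import Optional, Tuple


def build_add_field_request(index: str, field: str, es_type: str,
                            doc_type: Optional[str] = None) -> Tuple[str, str, str]:
    """Build (method, path, body) that adds one field to an existing index mapping.

    Elasticsearch 7.x uses the typeless endpoint PUT /<index>/_mapping.
    On 5.x and 6.x clusters, pass the type name configured for the table so
    that the typed endpoint PUT /<index>/_mapping/<type> is used instead.
    """
    if doc_type is None:
        path = f"/{index}/_mapping"
    else:
        path = f"/{index}/_mapping/{doc_type}"
    body = {"properties": {field: {"type": es_type}}}
    return "PUT", path, json.dumps(body)


if __name__ == "__main__":
    # Hypothetical: add an `email` column to the `customer` index on a 7.x cluster.
    print(build_add_field_request("customer", "email", "keyword"))
```

You can send the resulting request with any HTTP client that can authenticate to your cluster, and then run the DDL operation on PolarDB and pause and restart the task as described above.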

SQL operations that can be synchronized

INSERT, DELETE, and UPDATE
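On the Elasticsearch side, these three operations roughly correspond to document actions in the bulk API. The following Python sketch is a conceptual model, not a description of how DTS is implemented: it assumes that an UPDATE replaces the whole document and that the document `_id` has already been derived from the row (see the `_id` value setting later in this topic). The function name `to_bulk_lines` is hypothetical. It uses the 7.x bulk format; on 5.x and 6.x clusters the action metadata also needs a `_type`.

```python
import json
from typing import Any, Dict, List, Optional


def to_bulk_lines(op: str, index: str, doc_id: str,
                  row: Optional[Dict[str, Any]] = None) -> List[str]:
    """Translate one row change into Elasticsearch _bulk NDJSON lines.

    INSERT and UPDATE both become an `index` action, which creates the
    document or replaces it if the _id already exists. DELETE becomes a
    `delete` action, which has no source line.
    """
    op = op.upper()
    if op in ("INSERT", "UPDATE"):
        if row is None:
            raise ValueError(f"{op} needs the row values")
        return [json.dumps({"index": {"_index": index, "_id": doc_id}}),
                json.dumps(row)]
    if op == "DELETE":
        return [json.dumps({"delete": {"_index": index, "_id": doc_id}})]
    raise ValueError(f"unsupported operation: {op}")


if __name__ == "__main__":
    lines = to_bulk_lines("UPDATE", "customer", "1", {"id": 1, "name": "Alice"})
    lines += to_bulk_lines("DELETE", "customer", "2")
    body = "\n".join(lines) + "\n"  # the _bulk API requires a trailing newline
    print(body)
```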

Data type mappings

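The data types of PolarDB for MySQL and Elasticsearch do not have a one-to-one correspondence. During initial schema synchronization, DTS converts the data types of the PolarDB for MySQL cluster into Elasticsearch data types. For more information, see "Data type mappings for schema synchronization."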

Procedure

Purchase a data synchronization instance. For more information, see "Purchase a DTS instance."

Note: On the buy page, set the source instance to PolarDB, the destination instance to Elasticsearch, and the synchronization topology to one-way synchronization.

Log on to the DTS console.

Note: If you are redirected to the Data Management (DMS) console, click the corresponding icon to go to the previous version of the DTS console.

In the left-side navigation pane, click [Data Synchronization].

At the top of the [Synchronization Tasks] page, select the region in which the destination instance resides.

Find the data synchronization instance and click [Configure Task] in the [Actions] column.

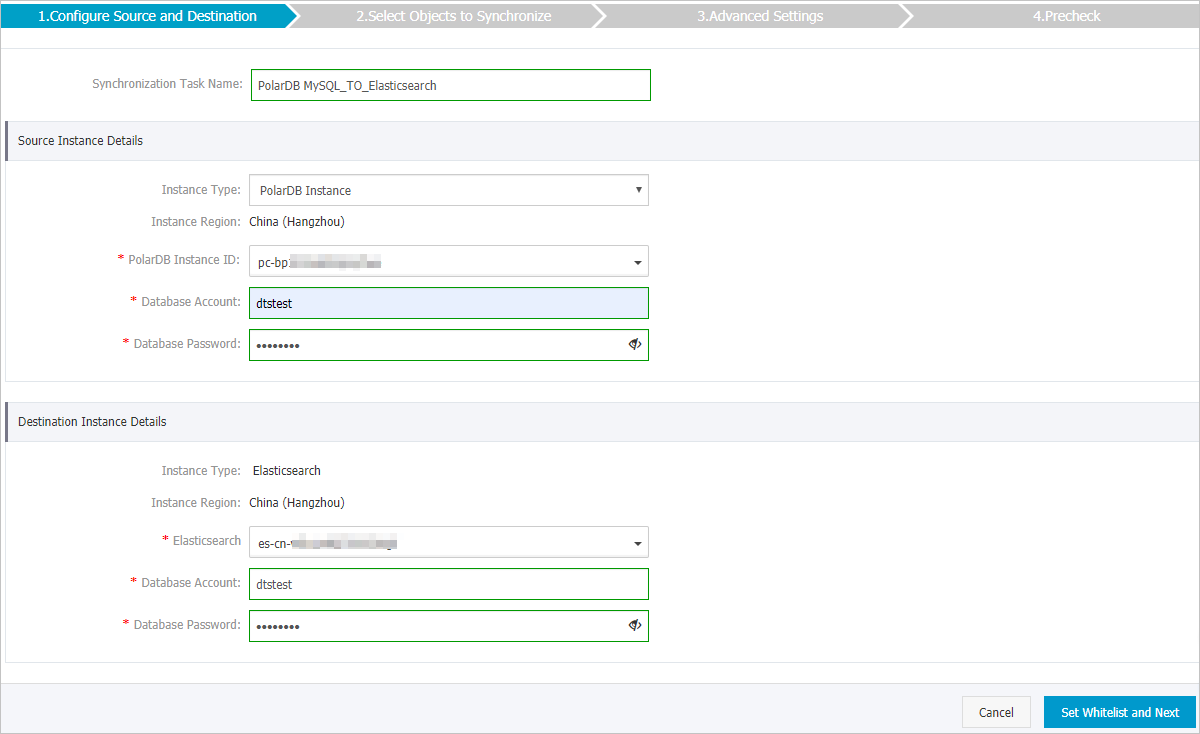

Configure the source and destination instances.

Synchronization task name: The task name that DTS generates automatically. We recommend that you specify a descriptive name so that the task is easy to identify. The task name does not need to be unique.

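Source instance details

Instance type: This parameter is set to PolarDB Instance and cannot be changed.

Instance region: The source region that you selected on the buy page. This parameter cannot be changed.

PolarDB instance ID: The ID of the source PolarDB for MySQL cluster.

Database account: The database account of the PolarDB for MySQL cluster.

Note: The account must have read permissions on the source database.

Database password: The password of the database account.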

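Destination instance details

Instance type: This parameter is set to Elasticsearch and cannot be changed.

Instance region: The destination region that you selected on the buy page. This parameter cannot be changed.

Elasticsearch: The ID of the destination Elasticsearch cluster.

Database account: The account that is used to connect to the Elasticsearch cluster. The default account is elastic.

Database password: The password of the database account.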

Click [Set Whitelist and Next] in the lower-right corner of the page.

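If the source or destination database is an Alibaba Cloud database instance, such as an ApsaraDB RDS for MySQL or ApsaraDB for MongoDB instance, DTS automatically adds the CIDR blocks of DTS servers to the IP address whitelist of the instance. If the source or destination database is a self-managed database hosted on an Elastic Compute Service (ECS) instance, DTS automatically adds the CIDR blocks of DTS servers to the security group rules of the ECS instance, and you must make sure that the ECS instance can access the database. If the self-managed database is hosted on multiple ECS instances, you must manually add the CIDR blocks of DTS servers to the security group rules of each ECS instance. If the source or destination database is a self-managed database that is deployed in a data center or provided by a third-party cloud service provider, you must manually add the CIDR blocks of DTS servers to the IP address whitelist of the database so that DTS can access it. For more information, see "Add the CIDR blocks of DTS servers."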

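Warning: Adding the CIDR blocks of DTS servers to the whitelist of a database or instance, or to ECS security group rules, either automatically or manually, may introduce security risks. Therefore, before you use DTS to synchronize data, you must understand and acknowledge the potential risks and take preventive measures, for example, connecting through VPN Gateway or Smart Access Gateway.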

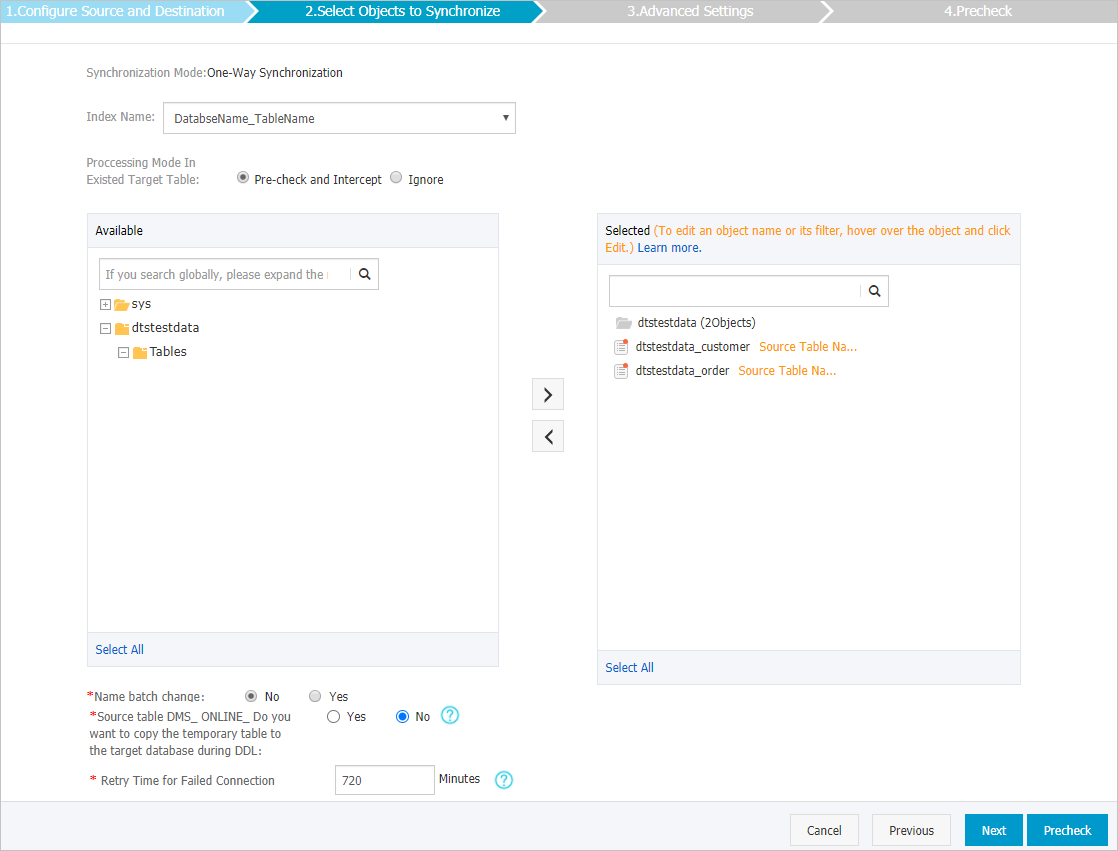

Configure the index name, the processing mode for identical index names, and the objects to be synchronized.

Index name

Table name: If you select Table Name, the index that is created in the Elasticsearch cluster has the same name as the table. In this example, the index name is customer.

DatabaseName_TableName: If you select DatabaseName_TableName, the index that is created in the Elasticsearch cluster is named <database name>_<table name>. In this example, the index name is dtstestdata_customer.

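Processing mode for existing destination tables

Precheck and Intercept: DTS checks whether the destination cluster contains indexes that have the same names as the source tables. If no such index exists, the precheck passes. Otherwise, an error is returned during the precheck and the data synchronization task cannot be started.

Note: If an index in the destination cluster has the same name as a source table and cannot be deleted or renamed, you can use the object name mapping feature. For more information, see "Rename an object to be synchronized."

Ignore: DTS skips the precheck for indexes in the destination cluster that have the same names as the source tables.

Warning: If you select Ignore, data inconsistency may occur and your business may be exposed to potential risks.

If the source database and the destination cluster use the same mappings and a record in the destination cluster has the same primary key as a record in the source database, the record remains unchanged during initial data synchronization but is overwritten during incremental data synchronization.

If the source database and the destination cluster use different mappings, initial data synchronization may fail. In this case, only some columns are synchronized or the data synchronization task fails.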

Objects to be synchronized

Select one or more objects from the [Available] section and click the icon to add them to the [Selected] section. You can select tables or databases as the objects to be synchronized.

Rename databases and tables

You can use the object name mapping feature to rename the objects that are synchronized to the destination instance. For more information, see "Object name mapping."

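Replicate temporary tables when DMS performs DDL operations

If you use DMS to perform online DDL operations on the source database, you can specify whether to synchronize the temporary tables that the online DDL operations generate.

Yes: DTS synchronizes the data of the temporary tables generated by online DDL operations.

Note: If online DDL operations generate a large amount of data, the data synchronization task may be delayed.

No: DTS does not synchronize the data of the temporary tables generated by online DDL operations. Only the original DDL data of the source database is synchronized.

Note: If you select No, the tables in the destination database may be locked.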

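Retry time for failed connections

By default, if DTS fails to connect to the source or destination database, DTS retries within the next 720 minutes (12 hours). You can specify a different retry time as needed. If DTS reconnects to the source and destination databases within the specified time, DTS resumes the data synchronization task. Otherwise, the task fails.

Note: You are charged for the DTS instance while DTS retries connections. We recommend that you specify the retry time based on your business needs. You can also release the DTS instance as soon as possible after the source and destination instances are released.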
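The two index name options and the Precheck and Intercept behavior can be modeled in a few lines of Python to spot collisions before you start the task. This is a hypothetical planning aid, not DTS code. It assumes that the precheck compares the derived index names against existing indexes, and it ignores object name mapping. The database `dtstestdata` and the table `customer` come from the examples above; the `orders` table and the `logs` index are made up.

```python
from typing import Iterable, List, Set, Tuple


def index_name(database: str, table: str, mode: str) -> str:
    """Derive the destination index name for one source table.

    Mode "TableName" keeps the table name as is; mode
    "DatabaseName_TableName" prefixes it with the database name.
    """
    if mode == "TableName":
        return table
    if mode == "DatabaseName_TableName":
        return f"{database}_{table}"
    raise ValueError(f"unknown index name mode: {mode}")


def conflicting_indexes(tables: Iterable[Tuple[str, str]],
                        existing: Set[str], mode: str) -> List[str]:
    """Return derived index names that already exist in the destination cluster.

    Assumption: the precheck compares these derived names. Under
    "Precheck and Intercept", a non-empty result would block the task.
    """
    derived = {index_name(db, table, mode) for db, table in tables}
    return sorted(derived & existing)


if __name__ == "__main__":
    tables = [("dtstestdata", "customer"), ("dtstestdata", "orders")]
    existing = {"customer", "logs"}  # hypothetical indexes already in the cluster
    print(index_name("dtstestdata", "customer", "DatabaseName_TableName"))  # dtstestdata_customer
    print(conflicting_indexes(tables, existing, "TableName"))               # ['customer']
    print(conflicting_indexes(tables, existing, "DatabaseName_TableName"))  # []
```

In this example, switching to DatabaseName_TableName avoids the collision without having to delete or rename the existing index.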

In the [Selected] section, move the pointer over a table and click [Edit]. In the [Edit Table] dialog box, configure the parameters of the table in the Elasticsearch cluster, such as the index name and type name.

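Index name and type name

For more information, see "Conventions."

Warning: Index names and type names can contain only underscores (_) as special characters.

To synchronize multiple source tables that have the same schema to the same destination object, you must repeat this step to set the same index name and type name for each of these tables. Otherwise, the data synchronization task fails or data is lost.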

Filter

The SQL condition that you specify to filter data. Only data records that meet the condition are synchronized to the destination cluster. For more information, see "Specify filter conditions."

IsPartition

Specifies whether to configure partitions. If you select [Yes], you must also specify the partition key column and the number of partitions.

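Settings_routing

Specifies whether to store documents on specific shards of the destination Elasticsearch cluster. For more information, see "_routing."

If you select [Yes], you can specify custom columns for routing.

If you select [No], the _id value is used for routing.

Note: If the destination Elasticsearch cluster runs version 7.4, you must select [No].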

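_id value

Primary key column: Multiple primary key columns are merged into one composite primary key.

Business key: If you select a business key, you must also specify the business key column.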

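Add parameters

Click [Add param] to add a row. In each row, specify a column parameter and a parameter value. For more information, see "Mapping parameters" in the Elasticsearch documentation.

Note: DTS supports only the parameters that are displayed in the column parameter drop-down list.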
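Before running the precheck, you might validate the Edit Table settings locally. The following Python sketch is hypothetical: it checks that index and type names use only letters, digits, and underscores, and, as an assumption derived from the same-schema requirement above, that source tables sent to the same index share one schema and one type name. The `schema_signature` string and the sample table names are made up. Elasticsearch itself also requires index names to be lowercase, which this sketch does not check.

```python
import re
from typing import Dict, List, Tuple

# Letters, digits, and underscores only: "_" is the sole allowed special character.
_NAME_RE = re.compile(r"[A-Za-z0-9_]+")


def is_valid_name(name: str) -> bool:
    """True if `name` is non-empty and uses only letters, digits, and underscores."""
    return _NAME_RE.fullmatch(name) is not None


def check_table_settings(settings: Dict[str, Tuple[str, str, str]]) -> List[str]:
    """Check hypothetical Edit Table settings.

    `settings` maps a source table name to (schema_signature, index_name, type_name).
    The schema signature is any string you derive from the column list.
    Returns a list of problems; an empty list means nothing was flagged.
    """
    problems: List[str] = []
    by_index: Dict[str, List[Tuple[str, str]]] = {}
    for table, (schema, index, doc_type) in sorted(settings.items()):
        for label, value in (("index name", index), ("type name", doc_type)):
            if not is_valid_name(value):
                problems.append(f"{table}: invalid {label} {value!r}")
        by_index.setdefault(index, []).append((schema, doc_type))
    # Tables that share an index are expected to share the schema and the type name.
    for index, members in sorted(by_index.items()):
        if len({schema for schema, _ in members}) > 1:
            problems.append(f"index {index!r}: source tables have different schemas")
        if len({doc_type for _, doc_type in members}) > 1:
            problems.append(f"index {index!r}: source tables use different type names")
    return problems


if __name__ == "__main__":
    settings = {
        "customer_01": ("id,name,email", "customer", "customer"),
        "customer_02": ("id,name,email", "customer", "cust"),
        "orders": ("id,amount", "order-index", "orders"),
    }
    for problem in check_table_settings(settings):
        print(problem)
```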

Click [Precheck] in the lower-right corner of the page.

Note: Before a data synchronization task starts, DTS performs a precheck. You can start the data synchronization task only after it passes the precheck.

If the task fails the precheck, click the icon next to each failed item to view the details. Troubleshoot the issues based on the details and then run the precheck again.

If you do not need to troubleshoot the issues, ignore the failed items and run the precheck again.

When the [Precheck Passed] message is displayed, close the [Precheck] dialog box. The data synchronization task then starts.

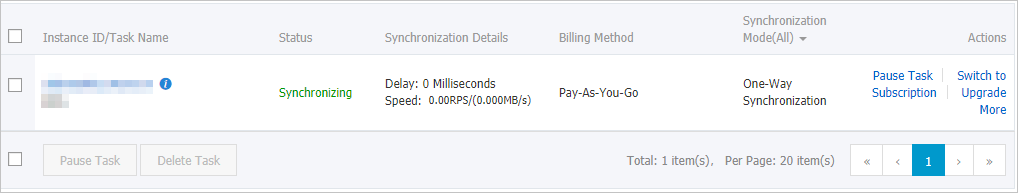

Wait until initial synchronization is complete and the data synchronization task enters the Synchronizing state.

You can view the status of the data synchronization task on the [Synchronization Tasks] page.

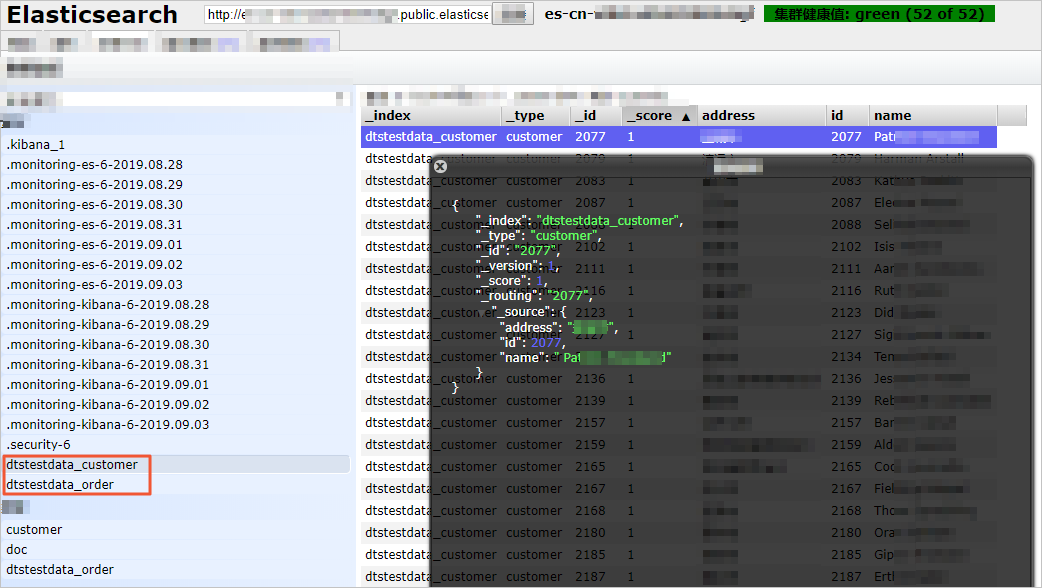

Check the indexes and data

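When the data synchronization task is in the Synchronizing state, connect to the Elasticsearch cluster by using a tool such as the Elasticsearch-Head plug-in or Cerebro, and check whether the indexes are created and the data is synchronized as expected. For more information, see "Access an Elasticsearch cluster by using Cerebro."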

If the indexes are not created or the data is not synchronized as expected, delete the indexes and data, and then reconfigure the data synchronization task.
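A quick complementary check is to compare row counts in PolarDB with the document counts that the Elasticsearch count API (`GET /<index>/_count`) returns. The following Python sketch assumes one source table per index, no filter conditions, and that incremental changes have settled; otherwise the counts can legitimately differ. The helper names, endpoint, credentials, and sample numbers are placeholders.

```python
import base64
import json
import urllib.request
from typing import Dict


def es_count(endpoint: str, index: str, user: str, password: str) -> int:
    """Return the document count of `index` by calling GET /<index>/_count with basic auth."""
    request = urllib.request.Request(f"{endpoint.rstrip('/')}/{index}/_count")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["count"]


def compare_counts(source_counts: Dict[str, int],
                   es_counts: Dict[str, int]) -> Dict[str, int]:
    """Return index -> (source rows minus documents) for every index that does not match."""
    return {
        index: rows - es_counts.get(index, 0)
        for index, rows in source_counts.items()
        if rows != es_counts.get(index, 0)
    }


if __name__ == "__main__":
    # Source counts would come from SELECT COUNT(*) on PolarDB; these numbers are made up.
    source = {"customer": 1000}
    # es = {"customer": es_count("http://<your-es-endpoint>:9200", "customer", "elastic", "<password>")}
    es = {"customer": 998}
    print(compare_counts(source, es))  # {'customer': 2}
```

A non-empty result points to the indexes worth inspecting in detail before deciding whether to delete them and reconfigure the task.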