This topic walks you through a complete data transformation process, including the feature and its related operations. Website access logs are used as the example.

Preparations

Create a project named web-project. For more information, see Manage a project.

Create a Logstore named website_log in the web-project project. The Logstore is used as the source Logstore. For more information, see Manage a Logstore.

Collect website access logs and store them in the website_log Logstore. For more information, see Data collection overview.

Create the destination Logstore website_fail in the web-project project.

If you use a Resource Access Management (RAM) user, make sure that the RAM user has management permissions on data transformation jobs. For more information, see Grant a RAM user the permissions to manage a data transformation job.

Configure indexes for the source and destination Logstores. For more information, see Create indexes.

Data transformation does not require indexes. However, if you do not configure indexes, you cannot perform query or analysis operations.

Background information

The access logs of a website are stored in the website_log Logstore. To improve user experience, you need to analyze access errors. To do this, select the access logs whose status code is 4XX, mask the personal information of users (in this example, by removing the fields that contain it), and write the resulting logs to the destination Logstore website_fail for analysis. Sample log:

body_bytes_sent: 1061

http_user_agent: Mozilla/5.0 (Windows; U; Windows NT 5.1; ru-RU) AppleWebKit/533.18.1 (KHTML, like Gecko) Version/5.0.2 Safari/533.18.5

remote_addr: 192.0.2.2

remote_user: vd_yw

request_method: GET

request_uri: /request/path-1/file-5

status: 400

time_local: 10/Jun/2021:19:10:59

error: Invalid time range

Step 1: Create a data transformation job

Log on to the Simple Log Service console.

Go to the data transformation page.

In the Projects section, click the project that you want to manage.

On the tab, click the Logstore that you want to manage.

On the query and analysis page, click Data Transformation.

In the upper-right corner of the page, specify a time range for the log data that you want to transform.

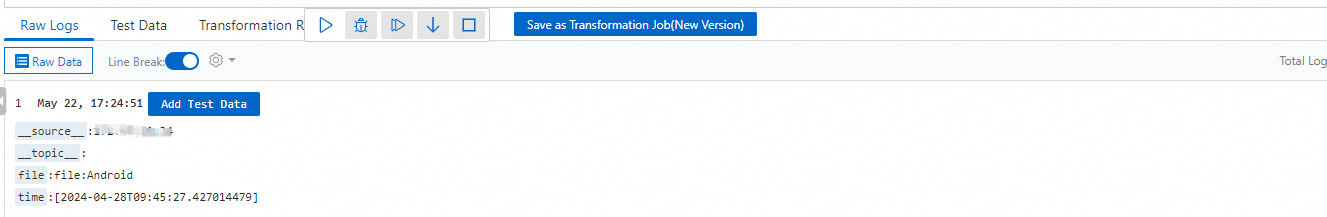

After you select a time range, verify that logs appear on the Raw Logs tab.

In the edit box, enter the following Service Processing Language (SPL) rule:

* | extend status=cast(status as BIGINT) | where status>=400 AND status<500 | project-away remote_addr, remote_user

Debug the SPL rule.

Select test data from the Raw Logs tab or manually enter the logs to test.

Click ▷ to start debugging.

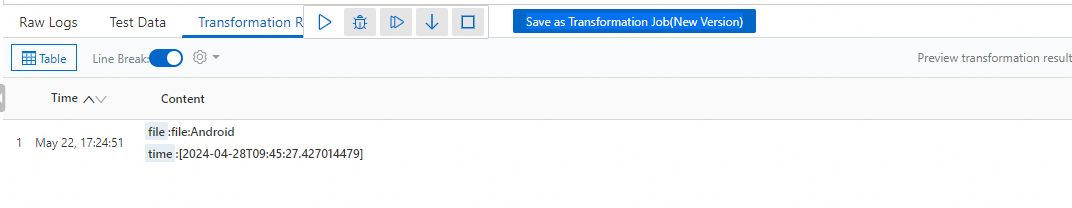

Preview the results.
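To reason about what the preview should show, the intent of the rule can be approximated locally. The following is a minimal Python sketch, not the Simple Log Service engine: it mirrors the goal stated in the background information (keep logs whose status code is 4XX and drop remote_addr and remote_user). The function name `transform` and the handling of logs without a numeric status are assumptions made for illustration.

```python
# Local approximation of the transformation goal; this is NOT the SPL engine.
# Fields that carry personal information and are dropped from the output.
SENSITIVE_FIELDS = ("remote_addr", "remote_user")


def transform(log):
    """Return the transformed log, or None if the log is filtered out."""
    try:
        # Corresponds to casting the status field to an integer.
        status = int(log["status"])
    except (KeyError, ValueError):
        # Assumption: a log without a numeric status is not kept.
        return None
    # Keep only 4XX status codes.
    if not (400 <= status < 500):
        return None
    # Drop the sensitive fields and keep everything else.
    out = {k: v for k, v in log.items() if k not in SENSITIVE_FIELDS}
    out["status"] = status
    return out


# The sample log from the background information.
sample = {
    "body_bytes_sent": "1061",
    "http_user_agent": "Mozilla/5.0 (Windows; U; Windows NT 5.1; ru-RU) "
                       "AppleWebKit/533.18.1 (KHTML, like Gecko) "
                       "Version/5.0.2 Safari/533.18.5",
    "remote_addr": "192.0.2.2",
    "remote_user": "vd_yw",
    "request_method": "GET",
    "request_uri": "/request/path-1/file-5",
    "status": "400",
    "time_local": "10/Jun/2021:19:10:59",
    "error": "Invalid time range",
}

result = transform(sample)
print(result)
```

For the sample log, the result keeps the request fields and the status but no longer contains remote_addr or remote_user. A log with status 200 would be filtered out.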

Create a data transformation job.

Click Save as Transformation Job (New Version).

In the Create Data Transformation Job (New Version) panel, configure the following parameters and click OK.

Parameter

Description

Job Name

The name of the data transformation job.

Display Name

The display name of the job.

Job Description

The description of the job.

Authorization Method

The method that is used to authorize the data transformation job to read data from the source Logstore. Options:

Default Role: allows the data transformation job to use the Alibaba Cloud system role AliyunLogETLRole to read data from the source Logstore. You must authorize the system role AliyunLogETLRole. Then, configure other parameters as prompted to complete the authorization. For more information, see Access data by using a default role.

Important: If you use a RAM user, make sure that the authorization is complete within your Alibaba Cloud account.

If the authorization is complete within your Alibaba Cloud account, you can skip this operation.

Custom Role: authorizes the data transformation job to assume a custom role to read data from the source Logstore.

You must grant the custom role the permissions to read data from the source Logstore. Then, enter the Alibaba Cloud Resource Name (ARN) of the custom role in the Role ARN field. For more information, see Access data by using a custom role.

AccessKey Pair: authorizes the data transformation job to use the AccessKey pair of the Alibaba Cloud account or RAM user to read data from the source Logstore.

Alibaba Cloud account: The AccessKey pair of an Alibaba Cloud account has the permissions to read data from the source Logstore. You can enter the AccessKey pair of your Alibaba Cloud account in the AccessKey ID and AccessKey Secret fields. For more information about how to obtain an AccessKey pair, see AccessKey pair.

RAM user: You must grant the RAM user the permissions to read data from the source Logstore. Then, you can enter the AccessKey ID and AccessKey secret of the RAM user in the AccessKey ID and AccessKey Secret fields. For more information, see Access data by using AccessKey pairs.

Storage Destination

Destination Name

The name of the storage destination. In the Storage Destination area, you must configure parameters including Destination Project and Target Store.

Destination Region

The region of the project to which the destination Logstore belongs.

Important: Data transformation (new version) supports data transmission only in the same region.

Destination Project

The name of the project to which the destination Logstore belongs.

Target Store

The name of the destination Logstore, which stores transformed data.

Authorization Method

The method that is used to authorize the data transformation job to write transformed data to the destination Logstore. Options:

Default Role: allows the data transformation job to assume the Alibaba Cloud system role AliyunLogETLRole to write data transformation results to the destination Logstore. You must authorize the system role AliyunLogETLRole. Then, configure other parameters as prompted to complete the authorization. For more information, see Access data by using a default role.

Important: If you use a RAM user, make sure that the authorization is complete within your Alibaba Cloud account.

If the authorization is complete within your Alibaba Cloud account, you can skip this operation.

Custom Role: authorizes the data transformation job to assume a custom role to write transformed data to the destination Logstore. You must grant the custom role the permissions to write data to the destination Logstore. Then, enter the Alibaba Cloud Resource Name (ARN) of the custom role in the Role ARN field. For more information, see Access data by using a custom role.

AccessKey Pair: allows the data transformation job to use the AccessKey pair of an Alibaba Cloud account or a RAM user to write data transformation results to the destination Logstore.

Alibaba Cloud account: The AccessKey pair of an Alibaba Cloud account has the permissions to write data to the destination Logstore. You can enter the AccessKey pair of your Alibaba Cloud account in the AccessKey ID and AccessKey Secret fields. For more information about how to obtain an AccessKey pair, see AccessKey pair.

RAM user: You must grant the RAM user the permissions to write data to the destination Logstore. Then, you can enter the AccessKey ID and AccessKey secret of the RAM user in the AccessKey ID and AccessKey Secret fields. For more information, see Access data by using AccessKey pairs.

Write to Result Set

The result set of the SPL rule to be written to the destination Logstore.

__UNNAMED__, which represents all unnamed result sets, is supported.

Time Range for Data Transformation

Time Range for Data Transformation (Data Receiving Time)

The time range of the data that is transformed.

All: The job transforms data in the source Logstore from the first log until the job is manually stopped.

From Specific Time: The job transforms data in the source Logstore from the log that is received at the specified start time until the job is manually stopped.

Specific Time Range: The job transforms data in the source Logstore from the log that is received at the specified start time to the log that is received at the specified end time.

Advanced Options

Advanced Parameter Settings

You may need to specify passwords such as database passwords in transformation statements. Simple Log Service allows you to add a key-value pair to store the passwords. You can specify res_local("key") in your statement to reference the passwords.

You can click the + icon to add more key-value pairs. For example, you can add config.vpc.vpc_id.test1:vpc-uf6mskb0b****n9yj to indicate the ID of the virtual private cloud (VPC) to which an ApsaraDB RDS instance belongs.

Go to the destination Logstore website_fail and perform query and analysis operations. For more information, see Query and analyze logs.
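As a rough illustration of the Time Range for Data Transformation options configured in the job panel, the following Python sketch decides whether a log falls inside a job's range based on the time the log was received. The function name `in_job_range` is hypothetical, and treating the end time as exclusive is an assumption; the boundary behavior of the service itself is not stated in this topic.

```python
from datetime import datetime


def in_job_range(receive_time, mode, start=None, end=None):
    """Return True if a log received at receive_time is covered by the job.

    mode is one of the option names from the job panel. The half-open
    interval [start, end) for "Specific Time Range" is an assumption.
    """
    if mode == "All":
        # Every log is covered, starting from the first log.
        return True
    if mode == "From Specific Time":
        # Covered from the start time until the job is manually stopped.
        return receive_time >= start
    if mode == "Specific Time Range":
        # Covered only between the start time and the end time.
        return start <= receive_time < end
    raise ValueError(f"unknown mode: {mode}")


received = datetime(2021, 6, 10, 19, 10, 59)
start = datetime(2021, 6, 10, 0, 0, 0)
end = datetime(2021, 6, 10, 12, 0, 0)

print(in_job_range(received, "All"))
print(in_job_range(received, "From Specific Time", start=start))
print(in_job_range(received, "Specific Time Range", start=start, end=end))
```

With these example values, the log is covered by All and From Specific Time, but not by Specific Time Range, because it was received after the end time.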

Step 2: Observe the data transformation job

In the left-side navigation pane, choose .

In the Data Transformation job list, click the target job.

On the Data Transformation Overview (new version) page, view the details and status of the data transformation job. You can modify, start, stop, or delete the job, and observe its running status and metrics. For more information, see Monitor data transformation jobs (new version).